How LLMs are and are not like the brain

Hi from Buttondown! At the bottom of this newsletter is a bit of administrivia about the new platform.

Beneath all the conversations about whether large language models constitute "Artificial Intelligence" or "Artificial General Intelligence", there's a substrate of assumptions about the degree to which these systems are or are not akin to the human brain. Boosters tend to argue that in terms of computational scale and complexity these models match the important characteristics of the brain as an information processing system. Skeptics will often refer to LLMs as "fancy autocorrect" and dismiss the idea that there is anything meaningfully brain-like (or capable of manifesting "intelligence", however defined) about these systems. The answer is somewhere in the middle.

If you look at our current understanding of neuroscience, there are good reasons to compare the function of LLMs to parts of our brains. There are real similarities. But the differences usefully illustrate what these models lack. The parts of human cognition that are missing in these models explain much of what is puzzling in their behavior. The path from where we are now to an LLM that truly produces speech like a human is a long one with much unknown and undone. This is made clear by looking at how the brain does it, and which parts of that system LLMs do not include.

The parts of the brain that most resemble LLMs are called "association cortices". These are large cortical regions, with billions of neurons or more, that are capable of encoding huge amounts of information about the relations of things in the world. The most prominent ones in the human brain, much larger than they are in any other animal, are in the prefrontal cortex and the parietal cortex. From a neural architecture perspective, they are central to the differences between the brains of humans and other animals. They are essential for the cognitive tasks we think of as being human-specific, like complex theory of mind reasoning, reflective self-consciousness, and syntactically rich language.

The reason we know that these regions are essential to human-specific cognitive tasks is that they grew massively larger over the stretch of evolutionary time after humans and apes diverged. They are relatively evolutionarily "late" parts of the brain. Other parts of our brain, like the hippocampus or the amygdala, haven't changed as much; they're more similar in humans and other animals. The speed with which these late regions emerged in human ancestors (relative speed; we're talking about evolutionary time) constrains the ways they could have evolved. I had this explained to me by the neuroscientist Randy Buckner during my PhD coursework. Changes that happen rapidly in evolutionary time have to be changes that are simple to implement genetically. Evolving a whole new type of brain region, a new functional area, is very evolutionarily "expensive". But adding a bunch more neurons is evolutionarily relatively "cheap": the genetic code that guides the brain's development controls a process of doubling and re-doubling, and to double the number of neurons in a given brain region simply requires changing that code so that the doubling stops one iteration later than it otherwise would. That is a relatively simple mutation, more likely to occur by random chance in a (again, relatively) short period of time. The human prefrontal and parietal cortices doubled and redoubled and redoubled again, over millennia, until both regions were far larger in humans than they are in any other ape species.

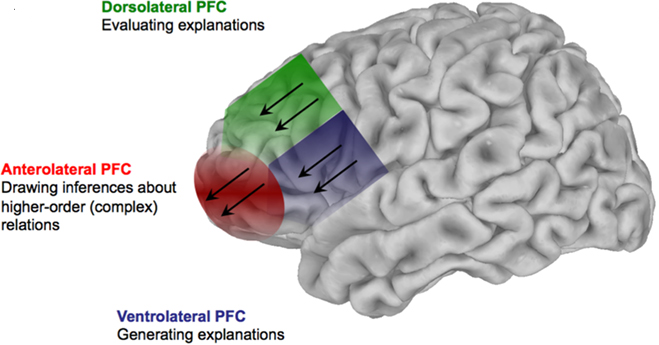
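
The arithmetic behind the "stop one iteration later" argument is easy to check. Below is a toy model, assuming perfectly symmetric doubling from a fixed pool of progenitor cells (real cortical development is much messier); the function name `neurons_after` and the choice of 30 rounds are illustrative, not biological measurements.

```python
def neurons_after(rounds: int, progenitors: int = 1) -> int:
    """Cells produced when every round of division doubles the population.

    Toy model only: assumes symmetric doubling from a fixed progenitor pool.
    """
    return progenitors * 2 ** rounds


baseline = neurons_after(30)

# Delaying the stop signal by a single round doubles the region...
print(neurons_after(31) / baseline)  # 2.0

# ...and three extra rounds make it eight times larger.
print(neurons_after(33) / baseline)  # 8.0
```

A one-round change in the stopping rule is a small edit to the "program", yet its effect on size is multiplicative, which is the asymmetry the argument relies on.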

We know, because these regions developed so late, and only in humans, that they must be essential for whatever it is our brains can do that other animals' brains can't. We also know, because of the way these regions developed, that they can't be that specialized: there was no time for different kinds of neural organization to evolve, so these regions have to be existing regions, only bigger. So what do these regions do? Well, they encode relations. Approximately (really, really, really approximately), the prefrontal cortex gets used to encode information about how the definitions of things in the world, the whats and whys, relate to each other, and the parietal cortex gets used to encode information about how the positions and amounts of things in the world, the wheres and how-manys, relate to each other. These regions get recruited dynamically to help contextualize and manage how we think and act about the relationships between things.

It'll probably help to get a little more specific. The dorsolateral prefrontal cortex (DLPFC) is a subregion of the prefrontal cortex. fMRI studies show that it gets engaged for a variety of tasks: theory of mind reasoning, executive function, speech monitoring. I'll focus on speech monitoring in particular. That is what it's called when our brains keep track of what we're saying. It's a sort of oversight function that makes sure the words coming out of our mouths connect together properly.

What association cortices are good at is keeping track of connections and co-occurrences. In speech, you want to make sure that each word follows sensibly from the one before. You want to make sure that each word not only makes sense in the context of what came before, but also that the speech you're producing forms a coherent whole. When you start speaking and when you stop, your words should fit together, in some more or less abstract sense. The way that association cortices manage this is by encoding an enormous amount of information about which words (concepts, ideas, images, impressions) usually go together: which are near each other, in some sense, and which are part of a valid path from one location, one possible beginning of a speech, to another, a possible conclusion.
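
One crude way to picture "valid paths" is to record, for each word, which words have been seen following it, and then ask whether a new utterance only ever steps along observed links. The sketch below assumes a tiny made-up corpus and hypothetical helper names (`build_associations`, `is_valid_path`). It checks only adjacent word pairs, which is far weaker than whole-utterance coherence, but it shows how a sequence never seen before can still be assembled entirely from familiar associations.

```python
from collections import defaultdict


def build_associations(sentences):
    """Map each word to the set of words observed immediately after it."""
    follows = defaultdict(set)
    for sentence in sentences:
        # <start> and <end> mark where an utterance may begin and finish.
        words = ["<start>"] + sentence.lower().split() + ["<end>"]
        for current, nxt in zip(words, words[1:]):
            follows[current].add(nxt)
    return follows


def is_valid_path(follows, utterance):
    """True if every adjacent pair in the utterance (including the start and
    end markers) was observed somewhere in the corpus."""
    words = ["<start>"] + utterance.lower().split() + ["<end>"]
    return all(nxt in follows.get(current, set())
               for current, nxt in zip(words, words[1:]))


corpus = ["the dog chased the cat", "the cat saw a bird", "a bird sang"]
assoc = build_associations(corpus)

print(is_valid_path(assoc, "the dog chased the cat saw a bird"))  # True
print(is_valid_path(assoc, "the bird chased the dog"))  # False
```

The first utterance is accepted even though it never appears in the corpus and is not good English, which is exactly the gap that monitoring the coherence of the whole utterance is meant to close.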

To a first approximation this is precisely what the bulk of an LLM does. The “transformer” network in an LLM is (again, very approximately) a massive association cortex trained on an unfathomably huge amount of data. It has encoded with a great deal of fidelity what the universe of human language (or art) says about which words (or parts of images) go together, and what sets of words comprise a valid path from beginning to end of a thought, and how those paths branch and diverge. The mechanism by which these networks learn does not map particularly well to how humans come to encode information about the world in their large association cortices, but the overall function is similar enough that the comparison makes sense.
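
To make "which words go together" concrete, here is a bigram model, about the simplest possible next-token predictor: it turns counts of which word followed which into probabilities. This is a sketch on a made-up three-sentence corpus with hypothetical function names. A transformer conditions on the whole preceding context through learned representations rather than on raw counts of the previous word, so the only thing carried over is the idea of a learned distribution over next tokens.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<start>"] + sentence.lower().split() + ["<end>"]
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    return counts


def next_token_distribution(counts, word):
    """Convert follow-counts for `word` into next-token probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # never seen this word: no associations to draw on
    return {tok: n / total for tok, n in followers.items()}


corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram(corpus)

print(next_token_distribution(model, "the"))  # cat about 0.67, dog about 0.33
print(next_token_distribution(model, "cat"))  # {'sat': 0.5, 'ran': 0.5}
```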

There is a second part of speech production. You also want to make sure, as you are producing a coherent whole, that the coherent whole is achieving its purpose. Talking is a social behavior; when we say things we're trying to influence something about another person. We want to influence the way they interact with the world.

The real advance of modern LLMs over earlier text completion systems is that they do a fairly good job of emulating this second component of language (or image!) production. In contrast to earlier autocomplete systems, LLMs are capable of maintaining a train of thought, producing long strings of text that are not merely plausible on a word or sentence level, but which are structured towards a specific communicative goal.

In an LLM, the monitoring aimed at achieving a goal comes from an algorithmic module that does "reinforcement learning"; what this module is able to do is predict how each additional word will contribute to the eventual achievement of a goal. At each step (each time the system chooses the next "token", more or less a word, to append to its output), the LLM is choosing from an enormous set of possible answers. These answers are weighted (via the LLM's training) in terms of how likely they are as the next step. What the reinforcement learning module does is manipulate these weights slightly, so that the next token is weighted BOTH on how likely it is to appear in the training set AND on how much it contributes to the overall goal of the act of language production.
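
A toy version of the weighting described above might look like the following. It assumes we already have next-token probabilities from the "association" part and a per-token goal score (both dictionaries here are invented), and tilts the distribution toward goal-serving tokens in proportion to `exp(beta * score)`. This illustrates the idea only; in deployed systems the reinforcement learning adjustment is typically folded into the model's parameters during fine-tuning rather than computed by a separate formula at each step.

```python
import math


def reweight(lm_probs, goal_scores, beta=1.0):
    """Tilt next-token probabilities toward tokens that serve a goal.

    lm_probs:    token -> probability from the "association" part (assumed given)
    goal_scores: token -> estimated contribution to the goal (hypothetical)
    beta:        strength of the goal's influence; 0 means no influence

    Returns a distribution proportional to p(token) * exp(beta * score).
    """
    unnormalized = {
        tok: p * math.exp(beta * goal_scores.get(tok, 0.0))
        for tok, p in lm_probs.items()
    }
    total = sum(unnormalized.values())
    return {tok: w / total for tok, w in unnormalized.items()}


# Invented numbers: "maybe" is the least likely continuation on its own,
# but it is the one the (made-up) goal scorer favors.
lm_probs = {"sure": 0.5, "no": 0.3, "maybe": 0.2}
goal_scores = {"sure": 0.0, "no": 0.0, "maybe": 2.0}

print(reweight(lm_probs, goal_scores))
```

With these made-up numbers, "maybe" goes from the least likely token to the most likely one, while `beta=0` leaves the original distribution unchanged.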

Something like this happens in the brain. Some of the most compelling research in the history of computational neuroscience showed that regions like the striatum and substantia nigra encode a system of reward prediction that is used to guide cognition. When the large association cortices are recruited their action is shaped and modulated by a goal encoded by these other, evolutionarily older brain regions.
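
The reward prediction research mentioned above is usually modeled with temporal-difference (TD) learning, in which a "reward prediction error" drives learning: positive when an outcome is better than expected, near zero once it is fully predicted. A minimal TD(0) sketch follows; the state names, learning rate `alpha`, and discount `gamma` are arbitrary choices for illustration, and this is a textbook algorithm, not a model of the striatum or substantia nigra.

```python
def td_update(values, state, next_state, reward, alpha=0.1, gamma=0.9):
    """Apply one TD(0) update to `values` and return the prediction error.

    delta = reward + gamma * V(next_state) - V(state)
    Positive delta: better than predicted. Negative: worse than predicted.
    """
    delta = reward + gamma * values.get(next_state, 0.0) - values.get(state, 0.0)
    values[state] = values.get(state, 0.0) + alpha * delta
    return delta


# A cue that is always followed by a reward of 1. The terminal state
# "reward_delivered" is never updated, so its value stays 0.
values = {}
errors = [td_update(values, "cue", "reward_delivered", reward=1.0) for _ in range(50)]

print(errors[0], errors[-1])  # first error is 1.0; the last is close to 0
```

Over repeated pairings, the error at the moment of reward shrinks as the cue's value is learned, qualitatively like the reported decline in dopamine responses to fully predicted rewards.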

Where LLMs diverge dramatically from the brain is in the complexity and basis of the goal towards which the reinforcement learning points the association cortices. The goals that LLMs are able to attempt to attain are vastly less complex than the internal goal states carried in a human brain.

The reason for this divergence is partly a technical one: reinforcement learning algorithms require a great deal of data to train, and the amount of data necessary to see good performance out of them scales dramatically as the goal they are aiming for (it's called a "state vector") gets more complex. If you can limit your reinforcement learning's state vector to a few dimensions, or better yet a single dimension (good or bad, win or lose, acceptable or unacceptable, valuable or valueless), then successful training of a model is much more achievable.
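
A rough counting argument shows why extra dimensions hurt. Assume, purely for illustration, that each dimension of the goal is discretized into 10 levels; the number of distinct goal configurations then grows as 10 to the power of the number of dimensions, so covering each configuration even once takes exponentially more labeled examples. This is not a formal sample-complexity bound for any particular algorithm, just the arithmetic of the combinations.

```python
def distinct_goal_states(levels_per_dim: int, dims: int) -> int:
    """Count the distinct goal configurations when each of `dims`
    dimensions is discretized into `levels_per_dim` values."""
    return levels_per_dim ** dims


for dims in (1, 2, 5, 10):
    print(dims, distinct_goal_states(10, dims))
# 1 10
# 2 100
# 5 100000
# 10 10000000000
```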

This technical constraint interacts with another constraint: the kinds of data necessary to train the reinforcement learning components of an LLM are much harder to collect than the data used to train the pick-a-promising-next-token part. The association cortex is trained on massive, pre-existing corpora of data. The state vectors that label training data for the reinforcement learning module have to be measured, or created. The foundational work in deep reinforcement learning, done by the Google subsidiary DeepMind, explored the problem space of games in large part because games (chess, Go, video games) could be simulated internally to the training system, with each simulation generating a training sample with both associations (this move follows that one) and a state vector (did player one win or lose?) appropriate for training reinforcement learning systems. When the problem space considered by the reinforcement learning system is instead something as unstructured and open-ended as human communication, the problem of annotating training samples with a state vector gets much, much harder, requiring data to be collected from users who are interacting with the system. The primary data collection goal of OpenAI's various public betas is very likely to accumulate signals that a given output is good or bad, from the perspective of the user, so that information can be used as a state vector for training their reinforcement learning systems.
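
The appeal of games is that every simulated game labels itself. Below is a minimal sketch using tic-tac-toe with random moves (a stand-in chosen for brevity, not what DeepMind trained on, and with no learning involved); each sample pairs the move sequence, the associations, with the final outcome, the label. The names and the +1/0/-1 outcome encoding from X's perspective are assumptions of this sketch.

```python
import random

# The eight ways to get three in a row on a 3x3 board indexed 0..8.
WIN_LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
             (0, 3, 6), (1, 4, 7), (2, 5, 8),
             (0, 4, 8), (2, 4, 6)]


def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in WIN_LINES:
        if board[a] is not None and board[a] == board[b] == board[c]:
            return board[a]
    return None


def simulate_game(rng):
    """Play one game of tic-tac-toe with uniformly random legal moves.

    Returns (moves, outcome): the move sequence supplies the associations
    ("this move follows that one"), the outcome supplies the label
    (+1 if X wins, -1 if O wins, 0 for a draw).
    """
    board = [None] * 9
    moves = []
    player = "X"
    while winner(board) is None and None in board:
        square = rng.choice([i for i, s in enumerate(board) if s is None])
        board[square] = player
        moves.append((player, square))
        player = "O" if player == "X" else "X"
    result = winner(board)
    outcome = 0 if result is None else (1 if result == "X" else -1)
    return moves, outcome


rng = random.Random(0)  # fixed seed so the dataset is reproducible
dataset = [simulate_game(rng) for _ in range(1000)]
print(dataset[0])
```

A thousand labeled games cost nothing but compute. There is no comparable simulator for whether a reply in an open-ended conversation achieved its purpose, which is why that label has to come from people.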

In the brain, by contrast, the goal toward which the association cortex keeps our speech aimed is distilled from the phenomenally complex neural network known as, essentially, the rest of the brain and nervous system. When the dorsolateral prefrontal cortex is monitoring and constraining speech to make sure that it is plausibly coherent and connected, the superimposed interpersonal and social goals of speech come from a complex stew of brain chemicals (modulatory neurotransmitters like serotonin, norepinephrine, and dopamine) and unconscious neural activity that together make up how you feel about that person, how you feel about the interaction, your general state of mind, your behavioral goals, your intuition for their behavioral goals, and on and on. To a first approximation, the goal (the state vector, in reinforcement learning terms) is the output of the entire informational pathway that runs from your connections with the world (fingers, toes, eyes, ears) and with your own body through to your cortex. Your enteric nervous system alone, which passes back information about the state of your digestive system, consists of several hundred million neurons. This pathway is the first half of a grand loop of embodied perception and action. In this grand loop, the association cortices, as important and essentially human as they are, are merely a single step.

The question of whether machine learning can become "AI" (however you try to define it) is not necessarily predicated on whether these systems contain something precisely analogous to the whole human neural system of taking feedback about the world and our bodies and condensing it to a state vector of valence and desire. But the fundamental emptiness that is disconcertingly present when interacting with an LLM, the sense of vapid agreeableness and lack of inner life that accompanies the undeniably fluid and impressive speech these systems produce, is absolutely a product of the impoverishment of that little state vector. Getting from where we are now to a system with intelligence reflective of rich inner life and structured, motivated reasoning will, if neuroscience is any guide, be at least as steep a hill to climb as the one we have scaled thus far, and possibly much, much steeper.

New year, new platform, with any luck new and improved newsletter.

Apperceptive on Buttondown should be no different for you as a reader from Apperceptive on Substack, but I did want to acknowledge the move and talk about some minor changes to the newsletter.

I'll still be writing about the same things (self-driving cars, LLMs, how they relate to the brain and why they're hard, startups, transportation systems and societal change), although I am going to try to stick to a more regular weekly schedule. So in that sense, I intend for the newsletter to improve.

I'll also be rolling out a paid tier relatively soon. Buttondown, unlike Substack, is not free for me to use. Or rather, with Buttondown I pay in dollars rather than something more amorphous and unpleasant. I'm still thinking about how to make this worthwhile. It will probably be a combination of occasional supplementary posts that are too revealing or gossipy about the internal nature of the AI/self-driving businesses to be posted publicly and the promise of a free copy of any book that should eventually result from this larger project I've embarked on, but I'm not sure yet. The newsletter as such will, with any luck, continue and in fact improve for unpaid subscribers, but if people would like to show appreciation financially I won't say no and will do my best to provide extra value.