Last week's dispatches from the Ministry of Intrigue

Hello, faithful reader.

We published the following fresh dispatches last week:

TIL - Adding a content stats page to Hugo

June 20, 2024, 8:59 p.m.

One thing I used to have on the Django-based version of this blog was a stats page that provided an overview of how much content I had created. When I transitioned to static site generation, this became more unwieldy. I never could figure out how to do it in Octopress/Jekyll, and while I figured out how to do it in Pelican, it slowed the build down too much.

But Hugo does build radically faster than any of the above SSGs, and since I’m fresh off of figuring out my blogroll, I wondered if I could manage to do the whole thing with a custom layout.

Implementation

Because I’m not a fool, I tried this out by starting a new branch.

git switch -c stats

I created a new file at layouts/_default/stats.html. I wasn’t looking for anything fancy, so I used my theme’s simple.html layout as a base, with a few adjustments.1

Note: if code examples appear unclear, you may prefer to read on the web.

{{ define "main" }}

<article class="max-w-full h-entry">

<header>

{{ if .Params.showBreadcrumbs | default (.Site.Params.article.showBreadcrumbs | default false) }}

{{ partial "breadcrumbs.html" . }}

{{ end }}

<h1 class="mt-0 text-4xl font-extrabold text-neutral-900 dark:text-neutral p-name">

{{ .Title | emojify }}

</h1>

</header>

<section class="max-w-prose mt-6 prose dark:prose-invert e-content">

{{ .Content | emojify }}

{{ partial "stats_detail.html" . }}

</section>

<footer class="pt-8">

{{ partial "sharing-links.html" . }}

</footer>

</article>

{{ end }}

Then I created the partial that does all the calculation at layouts/partials/stats_detail.html.

{{- $totalPages := .Site.RegularPages.Len }}

{{- $totalWords := 0 }}

{{- $totalReadTime := 0 }}

{{- range .Site.RegularPages.ByPublishDate }}

{{- $totalWords = (add $totalWords .WordCount) }}

{{- $totalReadTime = (add $totalReadTime .ReadingTime) }}

{{- end }}

<dl>

<dt>Total Pages</dt>

<dd>{{ $totalPages }}</dd>

<dt>Total Words</dt>

<dd>{{ $totalWords | lang.FormatNumber 0 }}</dd>

<dt>Total Reading Time</dt>

<dd>{{ math.Floor (math.Div $totalReadTime 60) }} hours, {{ math.Mod $totalReadTime 60 }} minutes</dd>

</dl>
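For anyone who wants to poke at the accumulation pattern outside of Hugo, here is a minimal sketch using Go's standard text/template package, which Hugo's templates build on. The page struct, the sample numbers, and the add, div and mod helpers are hypothetical stand-ins that only approximate Hugo's add, math.Div and math.Mod; this is not how Hugo itself is implemented.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// page is a hypothetical stand-in for a Hugo page; only the two fields
// the stats partial reads are modelled here.
type page struct {
	WordCount   int
	ReadingTime int // minutes
}

// statsTmpl mirrors the shape of the partial: initialise the totals,
// reassign them inside range, then split minutes into hours and minutes.
const statsTmpl = `
{{- $totalWords := 0 -}}
{{- $totalReadTime := 0 -}}
{{- range .Pages -}}
  {{- $totalWords = add $totalWords .WordCount -}}
  {{- $totalReadTime = add $totalReadTime .ReadingTime -}}
{{- end -}}
pages={{ len .Pages }} words={{ $totalWords }} time={{ div $totalReadTime 60 }}h{{ mod $totalReadTime 60 }}m`

// renderStats executes the template against the given pages.
func renderStats(pages []page) (string, error) {
	funcs := template.FuncMap{
		// Plain-Go approximations of the Hugo functions (assumed names).
		"add": func(a, b int) int { return a + b },
		"div": func(a, b int) int { return a / b },
		"mod": func(a, b int) int { return a % b },
	}
	t, err := template.New("stats").Funcs(funcs).Parse(statsTmpl)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	err = t.Execute(&sb, struct{ Pages []page }{pages})
	return sb.String(), err
}

func main() {
	// Made-up sample data: three pages with word counts and reading times.
	out, err := renderStats([]page{{500, 3}, {1200, 6}, {14000, 70}})
	if err != nil {
		panic(err)
	}
	fmt.Println(out)
}
```

The detail that matters is the difference between := (declare) and = (reassign): the totals are declared once outside the loop and reassigned inside it, so the values survive after range ends.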

Then I added a content page to host it at content/stats/index.md.

---

Title: "Site Statistics"

showDate: false

showPagination: false

showRelatedContent: false

kind: section

layout: stats

---

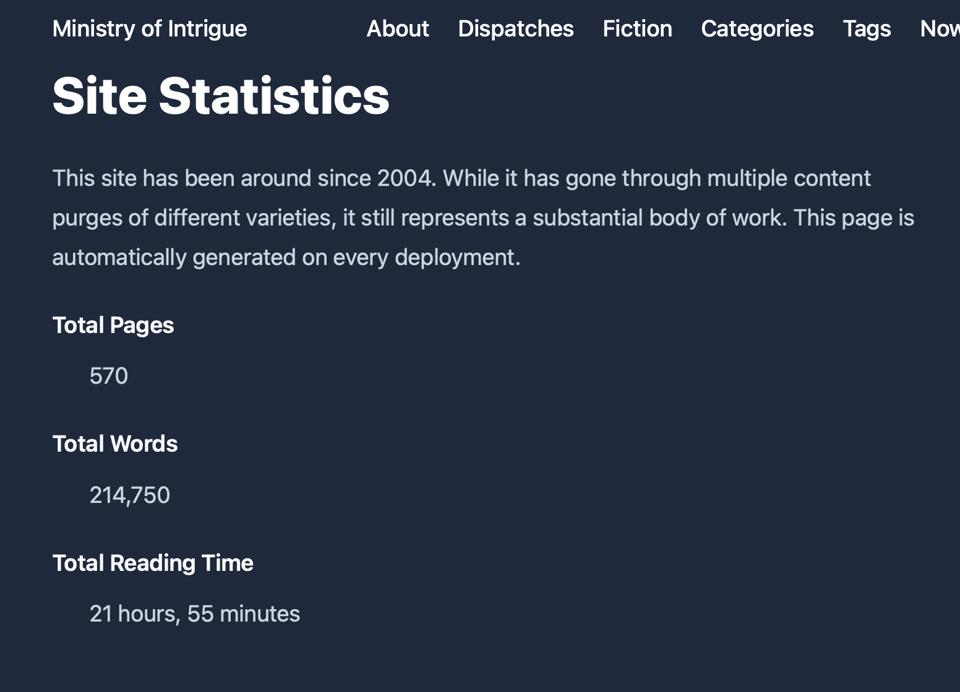

This site has been around since 2004. While it has gone through

multiple content purges of different varieties, it still

represents a substantial body of work. This page is automatically

generated on every deployment.

OK, let’s see if it all blows up! I started up the server with hugo server -DF.

Victory!

Performance Impact

Hugo’s speed is one of the reasons I love using it. As I mentioned above, I was concerned that this would negatively impact build performance, which would not fly with me long-term. Now that I had the implementation in place, it was time to evaluate that impact.

In the tests below, I switched between the main and stats branch to handle enabling/disabling the stats page.

A normal build in main without the stats page:

hugo

Start building sites …

hugo v0.127.0+extended darwin/arm64 BuildDate=2024-06-05T10:27:59Z VendorInfo=brew

| EN

-------------------+-------

Pages | 1329

Paginator pages | 111

Non-page files | 90

Static files | 18

Processed images | 683

Aliases | 364

Cleaned | 0

Total in 1055 ms

A build in stats with the stats page:

hugo

Start building sites …

hugo v0.127.0+extended darwin/arm64 BuildDate=2024-06-05T10:27:59Z VendorInfo=brew

| EN

-------------------+-------

Pages | 1331

Paginator pages | 111

Non-page files | 90

Static files | 18

Processed images | 683

Aliases | 364

Cleaned | 0

Total in 1027 ms

That’s confusing. Why is it faster? I assumed that since the rest of the content hasn’t changed, the cache is playing a big role here. Let’s try both builds again without the cache to see what the real performance impact might be.

Normal build in main:

hugo --ignoreCache

Start building sites …

hugo v0.127.0+extended darwin/arm64 BuildDate=2024-06-05T10:27:59Z VendorInfo=brew

| EN

-------------------+-------

Pages | 1329

Paginator pages | 111

Non-page files | 90

Static files | 18

Processed images | 683

Aliases | 364

Cleaned | 0

Total in 27854 ms

I had forgotten what a difference the cache makes! Even without the cache, it’s still way faster than any of the SSGs I’ve used in the past.

Now, to do the build on the stats branch:

hugo --ignoreCache

Start building sites …

hugo v0.127.0+extended darwin/arm64 BuildDate=2024-06-05T10:27:59Z VendorInfo=brew

| EN

-------------------+-------

Pages | 1331

Paginator pages | 111

Non-page files | 90

Static files | 18

Processed images | 683

Aliases | 364

Cleaned | 0

Total in 28124 ms

Only 270 ms longer! I can live with that. Admittedly, as my content grows this could become more of an issue but it may take years before I have to worry about that again. Add in the fact that it’s highly unlikely that I’m going to be doing a build without the cache, and this becomes a total non-issue.
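To put the four timings side by side, here is a small Go sketch that computes the absolute and relative difference between the two branches. The overhead function and the struct field names are made up for illustration; the millisecond values are copied from the build output above. Each figure is a single run, so small differences (such as the cached stats build coming out slightly faster) may just be noise.

```go
package main

import "fmt"

// overhead returns the absolute difference in milliseconds and the relative
// change in percent when going from a baseline build time to a new one.
func overhead(baselineMs, withStatsMs int) (deltaMs int, percent float64) {
	deltaMs = withStatsMs - baselineMs
	percent = float64(deltaMs) / float64(baselineMs) * 100
	return deltaMs, percent
}

func main() {
	// Build times taken from the "Total in ... ms" lines of each run.
	runs := []struct {
		label   string
		mainMs  int
		statsMs int
	}{
		{"cached", 1055, 1027},
		{"--ignoreCache", 27854, 28124},
	}
	for _, r := range runs {
		d, p := overhead(r.mainMs, r.statsMs)
		fmt.Printf("%-14s %+5d ms (%+.2f%%)\n", r.label, d, p)
	}
}
```

For the uncached builds this works out to 270 ms on top of 27854 ms, which is a bit under 1% of the total build time.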

Cleaning Up

All that was left to do at that point was to merge the changes back into main and deploy the new version!

git switch main

git merge stats # Merge in all the changes.

git branch -d stats # Delete the branch since it is no longer needed.

git push # Push to repo where GitHub Actions will deploy the new site.

And voilà! You can even check out the page yourself!

Overall, I’m happy with how this turned out, especially for a page that will only ever be interesting to me. 😊

Ludic: I Will Piledrive You If You Mention AI Again

June 19, 2024, 8:34 p.m.

From a rant about the current AI hype train that is spot-on and so entertaining to boot:

Consider the fact that most companies are unable to successfully develop and deploy the simplest of CRUD applications on time and under budget. This is a solved problem - with smart people who can collaborate and provide reasonable requirements, a competent team will knock this out of the park every single time, admittedly with some amount of frustration. The clients I work with now are all like this - even if they are totally non-technical, we have a mutual respect for the other party’s intelligence, and then we do this crazy thing where we solve problems together. I may not know anything about the nuance of building analytics systems for drug rehabilitation research, but through the power of talking to each other like adults, we somehow solve problems.

But most companies can’t do this, because they are operationally and culturally crippled. The median stay for an engineer will be something between one to two years, so the organization suffers from institutional retrograde amnesia. Every so often, some dickhead says something like “Maybe we should revoke the engineering team’s remote work privile - whoa, wait, why did all the best engineers leave?”. Whenever there is a ransomware attack, it is revealed with clockwork precision that no one has tested the backups for six months and half the legacy systems cannot be resuscitated - something that I have personally seen twice in four fucking years. Do you know how insane that is?

Most organizations cannot ship the most basic applications imaginable with any consistency, and you’re out here saying that the best way to remain competitive is to roll out experimental technology that is an order of magnitude more sophisticated than anything else your I.T department runs, which you have no experience hiring for, when the organization has never used a GPU for anything other than junior engineers playing video games with their camera off during standup, and even if you do that all right there is a chance that the problem is simply unsolvable due to the characteristics of your data and business? This isn’t a recipe for disaster, it’s a cookbook for someone looking to prepare a twelve course fucking catastrophe.

— Ludic, I Will Fucking Piledrive You If You Mention AI Again

The whole piece is great. Afterwards, I spent some time browsing his other posts, and his writing is delightful. A blog to add to my feed reader for sure.

Debilitating a Generation with Long COVID

June 19, 2024, 5:59 p.m.

This bit comes from the introduction to the second part of a two-part interview on the long-term dangers of COVID.

The danger is clear and present: COVID isn’t merely a respiratory illness; it’s a multi-dimensional threat impacting brain function, attacking almost all of the body’s organs, producing elevated risks of all kinds, and weakening our ability to fight off other diseases. Reinfections are thought to produce cumulative risks, and Long COVID is on the rise. Unfortunately, Long COVID is now being considered a long-term chronic illness — something many people will never fully recover from.

Dr. Phillip Alvelda, a former program manager in DARPA’s Biological Technologies Office that pioneered the synthetic biology industry and the development of mRNA vaccine technology, is the founder of Medio Labs, a COVID diagnostic testing company. He has stepped forward as a strong critic of government COVID management, accusing health agencies of inadequacy and even deception. Alvelda is pushing for accountability and immediate action to tackle Long COVID and fend off future pandemics with stronger public health strategies.

Contrary to public belief, he warns, COVID is not like the flu. New variants evolve much faster, making annual shots inadequate. He believes that if things continue as they are, with new COVID variants emerging and reinfections happening rapidly, the majority of Americans may eventually grapple with some form of Long COVID.

Let’s repeat that: At the current rate of infection, most Americans may get Long COVID.

— Lynn Parramore, “Debilitating a Generation”: Expert Warns That Long COVID May Eventually Affect Most Americans

I highly recommend that you read both parts.

BBC: Mega Ant Colony has Colonized Much of the World

June 18, 2024, 8:06 p.m.

A wild report on how a single massive colony of Argentine ants has expanded its territory throughout the U.S., Europe, and Japan.

In Europe, one vast colony of Argentine ants is thought to stretch for 6,000km (3,700 miles) along the Mediterranean coast, while another in the US, known as the “Californian large”, extends over 900km (560 miles) along the coast of California. A third huge colony exists on the west coast of Japan.

While ants are usually highly territorial, those living within each super-colony are tolerant of one another, even if they live tens or hundreds of kilometres apart. Each super-colony, however, was thought to be quite distinct.

But it now appears that billions of Argentine ants around the world all actually belong to one single global mega-colony.

— Matt Walker, Ant mega-colony takes over world

Later on in the article it mentions that even members of the colony from different continents will recognize each other as friendly neighbors of the same family.

Anil Dash: The Purpose of a System is What it Does

June 18, 2024, 6:56 p.m.

A potential negative aspect of understanding that the purpose of a system is what it does, is that we are then burdened with the horrible but hopefully galvanizing knowledge of this reality. For example, when our carceral system causes innocent people to be held in torturous or even deadly conditions because they could not afford bail, we must understand that this is the system working correctly. It is doing the thing it is designed to do. When we shout about the effect that this system is having, we are not filing a bug report, we are giving a systems update, and in fact we are reporting back to those with agency over the system that it is working properly.

Sit with it for a minute. If this makes you angry or uncomfortable, or repulses you, then you are understanding the concept correctly.

Through this lens, we can understand a lot about the world, and how we can be more effective in it. If we accept that the machine is never broken (except in the case of the McDonald’s ice cream machine), then we can recognize that driving change requires us to make the machine want something else. If the purpose of a system is what it does, and we don’t like what it does, then we have to change the system. And we change the system by making everyone involved, especially those in authority, feel urgency about changing the real-world impacts that a system has.

— Anil Dash, Systems: The Purpose of a System is What It Does

Great piece.

Quote: Microsoft Knew and Ignored Security Flaw That Enabled SolarWinds Attack

June 17, 2024, 7:43 p.m.

You may remember that in 2020 a Russian hacking group carried out the SolarWinds cyber attack on U.S. government systems such as those of the National Nuclear Security Administration. With the help of whistleblower Andrew Harris, ProPublica reports that Microsoft knew about the security flaw exploited in the SolarWinds attack as far back as 2016, and chose to ignore it. The reasons they did so are utterly unsurprising.

According to Harris, Morowczynski’s second objection revolved around the business fallout for Microsoft. Harris said Morowczynski told him that his proposed fix could alienate one of Microsoft’s largest and most important customers: the federal government, which used AD FS. Disabling seamless SSO would have widespread and unique consequences for government employees, who relied on physical “smart cards” to log onto their devices. Required by federal rules, the cards generated random passwords each time employees signed on. Due to the configuration of the underlying technology, though, removing seamless SSO would mean users could not access the cloud through their smart cards. To access services or data on the cloud, they would have to sign in a second time and would not be able to use the mandated smart cards.

Harris said Morowczynski rejected his idea, saying it wasn’t a viable option.

Morowczynski told Harris that his approach could also undermine the company’s chances of getting one of the largest government computing contracts in U.S. history, which would be formally announced the next year. Internally, Nadella had made clear that Microsoft needed a piece of this multibillion-dollar deal with the Pentagon if it wanted to have a future in selling cloud services, Harris and other former employees said.

— Renee Dudley, Microsoft Chose Profit Over Security and Left U.S. Government Vulnerable to Russian Hack, Whistleblower Says

And that's it!

1. I’m leaving out a lot of my trial and error while trying to remember whether the syntax I needed was part of the core Go template syntax vs Hugo’s superset of available functions.