Scaling Test Time Compute with Language Models

👋 Hello friends! A less dense email today. Today we're talking about test-time compute in light of the OpenAI o1 release.

If you already know what all this is generally about, skip down to the reading list section and dig into reverse engineering o1 or an explainer on Monte Carlo Tree Search. Otherwise, read on!

Small Stuff Models Can't Do

I'm sure y'all are familiar with how many things language models can do — they can rhyme, and they can recall tons of information from wikipedia, and they can write some pretty good code. For all the insufferable hype around these things, there is no denying that they’re pretty cool.

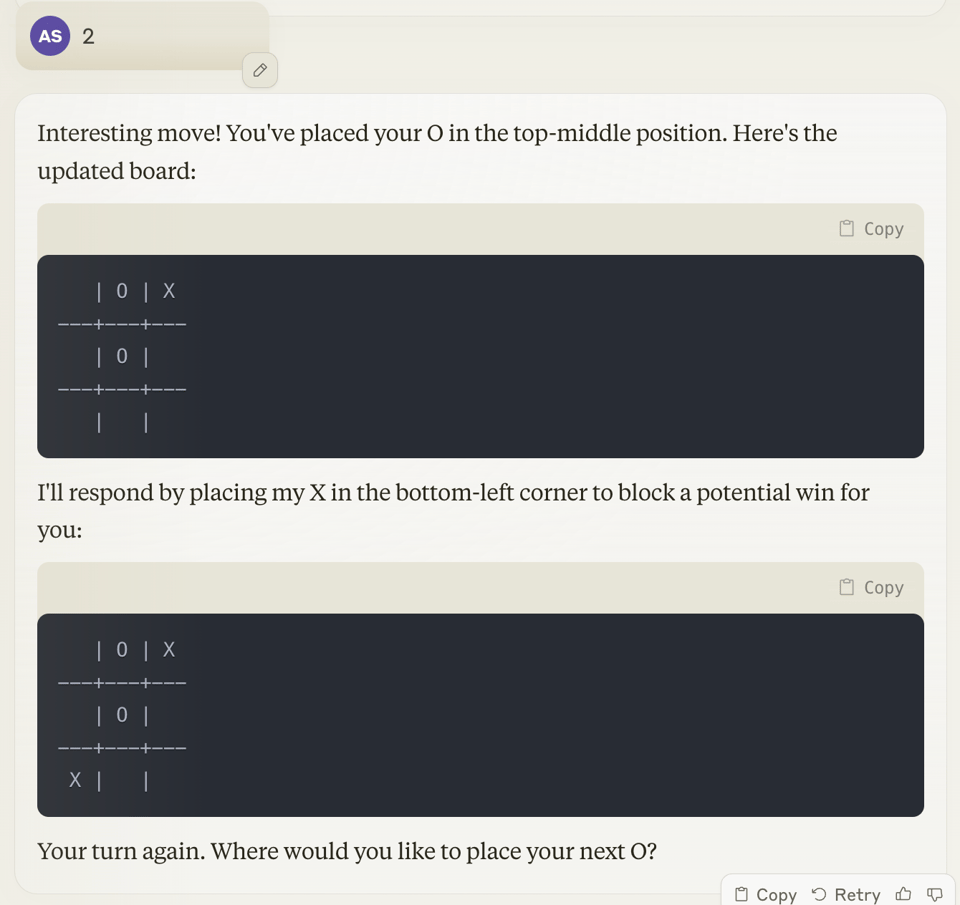

But what can’t models do? Many things! But also many small unexpected things. For example: Language models can’t play tic-tac-toe to win, and they can’t multiply two very large numbers together. Go try it yourself, don’t just believe me on it!

Both these tasks are theoretically simple — and well within the bounds of text ability of models with billions upon billions of parameters.

So why can’t models solve these problems?

My bet on the answer: Models don’t yet know how to expend test-time compute correctly.

Expending Test-Time Compute

Here’s a simple thought — Models are writing their answers one word at a time, but not all words are created equal. So, models should be able to spend arbitrary amounts of compute (arbitrary amounts of time “thinking”) about what the correct next word is before generating it.

There are some techniques that already allow for this: architecture updates like Mixture of Depths or Speculative Decoding, classical methods like Beam Search, as well as prompting strategies like Tree of Thoughts.

The lattermost has a seed of an interesting idea: for every problem, there exists a space of possible solutions — some correct, many incorrect.

If you can, in some clever way, search through that space of possible outcomes, then you can spend extra time searching for a while before finding the right answer or reporting your best found one.

This is similar to the ideas that inspired AlphaGo, the model that beat the best human player at Go. I’m a big fan of this writeup: AI Search The Bitter-er Lesson, which captures my perspective about how learning and search fit together.

All this to say: I think traditional search algorithms are one-path forward towards teaching models how to “think longer” and make them more robust reasoning engines.

The reason I think this is critical is because I think robust reasoning will be a cornerstone requirement for useful models and especially for useful agents— which is what I’ve spent the last couple years building :) We live in exciting times, and there are exciting times ahead!

Arushi’s Reading List

You can read my write-up about Monte Carlo Tree Search , one of the possible search strategies that can be used to improve models and what AlphaGo uses.

I highly recommend Nathan’s write-up on reverse engineering o1, which is an excellent step towards de-mystifying what’s going on there.

As always, thanks for reading. You can reply to this email to directly reach my inbox.

Until next time!

Arushi