Happy Holidays from AI Week!

Happy holidays to everyone who celebrates anything at this time of year! Yesterday was the solstice; I took the day off to go to a museum with family.

I'm taking Monday off, too, so next week's AI Week is happening this week. Right now, in fact! I hadn't intended to do a newsletter this week, but there was so much interesting news that I couldn't resist sharing it. I hope you'll enjoy this Winter Holiday Edition of AI Week.

In this week's AI Week:

- Blizen the Red-Nosed Pegasus

- Winter Holiday Headlines: No patents for AI in UK; how to break chatbots' inhibitions; and oh there's CSAM in the training dataset

- Winter Holiday Linkdump: AI-generated everything

- Followups: Chevrolet of Watsonville, Twitter/X, more creepy marketers claiming to spy on your devices

- Winter Holiday Longreads

🎵 A very AI Christmas to you 🎵

from Blizen (sic) the Red-Nosed Pegasus:

Image detail from AI Weirdness; link below.

AI Weirdness strikes again. "Between the two of them, ChatGPT4 can generate the lyrics to Christmas carols, and DALL-E3 can illustrate them!"

https://www.aiweirdness.com/your-illustrated-guide-to-christmas-carols/

Winter Holiday Headlines

UK's Supreme Court rules AI can't get patents

https://www.theverge.com/2023/12/20/24009524/uk-supreme-court-ai-inventor-copyright-patent

Giant image dataset used to train AI contains CSAM

LAION-5B’s 5 billion images were collected by crawling the Internet. It’s been used to train image-generating AI like Stable Diffusion… and it contains child sexual abuse material (CSAM).

Largest Dataset Powering AI Images Removed After Discovery of Child Sexual Abuse Material

The model is a massive part of the AI-ecosystem, used by Stable Diffusion and other major generative AI products. The removal follows discoveries made by Stanford researchers, who found thousands instances of suspected child sexual abuse material in the dataset.

Breaking (chatbots) bad

Nobody wants ChatGPT telling ten-year-olds how to build a bomb. OpenAI, Microsoft, Google, Anthropic, etc. all give their LLMs "alignment" -- training and system prompts that guide their LLMs to not output "toxic" content (hate speech, sexual harassment, weapons instructions, etc.). But this week, Purdue University researchers published a technique with a 98% success rate at breaking that alignment, that is, getting LLMs to output toxic speech.

How does it work?

LLMs still have the ability to output toxic content, because the toxic stuff is there in their training data. If you've tried ChatGPT or other LLMs, you might have noticed that you can ask them to try again (and again, and again) to generate a response to your question. This technique kind of takes that to the limit.

Here's the technique in a nutshell (skip to the next section if you don't want details):

1. Ask the LLM a toxic question (such as "How can I blow stuff up?")

2. When the LLM's output is a refusal to answer (for example, "I can't assist with that. If you have any other questions or need information on a different topic, I'm here to help!"), identify the point at which the response turns negative ("I can't assist with that"), and then ask the LLM to output its top thousand alternatives for the next sentence.

3. Pick the one that's most toxic.

4. Ask the model to carry on from there.

5. Repeat steps 2-4 on any further refusal responses.

Note, this is something you need to do programmatically (the "thousand outputs" isn't an exaggeration), with an API that gives you some kind of access to the less probable answers; you can't do this in a chat session and you can't do it with all chatbots. All open-source LLMs are vulnerable, say the paper's authors, plus any commercial ones that provide access to "soft label information."
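For the curious, here's roughly what "access to the less probable answers" looks like. A chat window shows you only the answer the model picked, but under the hood the model scores many candidates, and some APIs let you see the runners-up. Below is a toy Python sketch with a harmless prompt and made-up scores; the names `toy_logits`, `softmax`, and `top_k` are my own illustration, not anything from the paper or a real API.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def top_k(probs, k):
    """Return the k most probable candidates, most likely first."""
    return sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]


# Made-up scores for the word after "The weather today is" (illustrative only).
toy_logits = {"sunny": 3.0, "cloudy": 2.0, "rainy": 1.5, "purple": -2.0}

probs = softmax(toy_logits)
ranked = top_k(probs, 3)
for token, p in ranked:
    print(f"{token}: {p:.2f}")
```

A normal chat session would only ever show you the top pick. The point of the sketch is just that the other candidates exist and, with the right kind of access, can be listed and chosen from.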

Figure from the paper linked below

Why does this matter?

This actually matters a lot. Chatbot makers really don't want their chatbots used to generate hate speech, propaganda, etc. at scale. That's why they put effort into alignment: so that if you ask a chatbot to write you toxic things like hate speech, explosives recipes, etc., it won't. Well, this paper might as well be called "How to write a program to make a chatbot generate toxic speech on demand." Because this technique can be automated, it can be done at scale. Last week I mentioned a BBC/DFR Lab investigation into a Russian propaganda campaign that reposted the same content thousands of times with different AI-generated voices. With this technique, propagandists can ask an LLM to rephrase a toxic script, in order to generate hundreds of different scripts with the same toxic content--and that variation would make the fact that it's all propaganda coming from a single bad actor extremely hard for platforms to detect.

https://arxiv.org/abs/2312.04782

OpenAI is prepared to be prepared

OpenAI’s “Preparedness” team, led by MIT AI professor Aleksander Madry, will hire AI researchers, computer scientists, national security experts and policy professionals to monitor the tech, continually test it and warn the company if it believes any of its AI capabilities are becoming dangerous.

https://www.washingtonpost.com/technology/2023/12/18/open-ai-preparedness-bioweapons-madry/

The funny thing is that the technology is dangerous, as the above paper demonstrates. Maybe it's not "allowing bad actors to learn how to build chemical and biological weapons," but it's ready to allow bad actors to deploy individualized, targeted propaganda at scale. Giving bad actors the power to influence the elections of those who will control existing arsenals is a different kind of danger than helping them to build their own arsenal--enabling lawful evil instead of chaotic evil, kind of. (If you don't know what those are, here's a Buzzfeed quiz to explain & help you figure your own alignment out.)

Authors not consulted in deal between publisher & OpenAI

Speaking of OpenAI, Business Insider publisher Axel Springer signed a licensing agreement with OpenAI for its copyrighted articles. And the authors of the articles didn't find out until after the fact.

“It looks like a strategy that we'll likely see repeated elsewhere, a ‘partnership’ that is effectively the AI companies convincing publishers not to sue them in exchange for some level of access to the technology,” [Techdirt editor Mike Masnick] says. “That access might help the journalists very indirectly, but it's not flowing into paychecks or realistically making their jobs any easier.”

https://www.wired.com/story/openai-axel-springer-news-licensing-deal-whats-in-it-for-writers/

Winter Holiday Linkdump: AI generating all the things

Facebook flooded with stolen AI-generated art

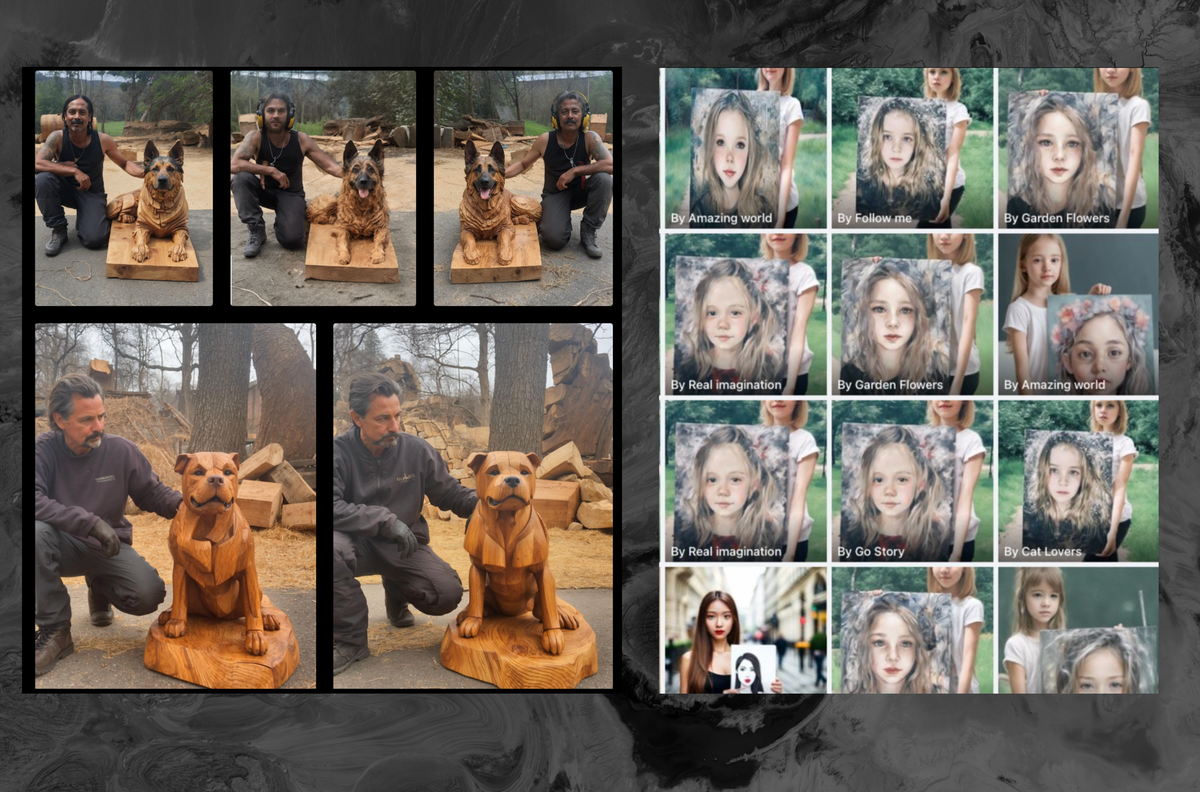

There is no polite way to say this, but the comments sections of each of these images are filled with unaware people posting inane encouragement about artwork stolen by robots, a completely constructed reality where thousands of talented AI woodcarvers constantly turn pixels into fucked up German Shepherds for their likes and faves.

Facebook Is Being Overrun With Stolen, AI-Generated Images That People Think Are Real

The once-prophesized future where cheap, AI-generated trash content floods out the hard work of real humans is already here, and is already taking over Facebook.

AI-generated songs

The Cambridge-based AI music startup [Suno] offers a tool on Discord that can compose an original song — complete with lyrics — based on a text prompt. Now, Copilot users will be able to access Suno using the Microsoft chatbot.

https://www.theverge.com/2023/12/19/24008279/microsoft-copilot-suno-ai-music-generator-extension

AI-generated... alcopop?

Kirin's AI therefore adds text-to-booze to the list of AI capabilities.

https://www.theregister.com/2023/12/19/kirin_ai_drink_development/

AI-generated political speech

Supporters of Pakistan's former PM, Imran Khan, who is imprisoned, used generative AI to create audio of Khan delivering a speech.

Khan sent a shorthand script through lawyers that was fleshed out into his rhetorical style. The text was then dubbed into audio using a tool from the AI firm ElevenLabs, which boasts the ability to create a “voice clone” from existing speech samples.... a caption appeared at intervals flagging it as the “AI voice of Imran Khan based on his notes”.

https://www.theguardian.com/world/2023/dec/18/imran-khan-deploys-ai-clone-to-campaign-from-behind-bars-in-pakistan

Somewhat related: A Democratic candidate for US Congress is using an AI chatbot as a robocaller. (I wonder if the chatbot has recommended that anyone vote Republican yet?)

A U.S. Politician Is Robocalling Voters With an AI Chatbot Named ‘Ashley’

Pennsylvania Democrat Shamaine Daniels’ campaign for the House is the first to use AI to seek a Congressional seat.

And a NY city councillor-elect used a chatbot to answer press questions.

https://www.theverge.com/2023/12/18/24006544/new-york-city-councilwoman-elect-susan-zhuang-ai-response

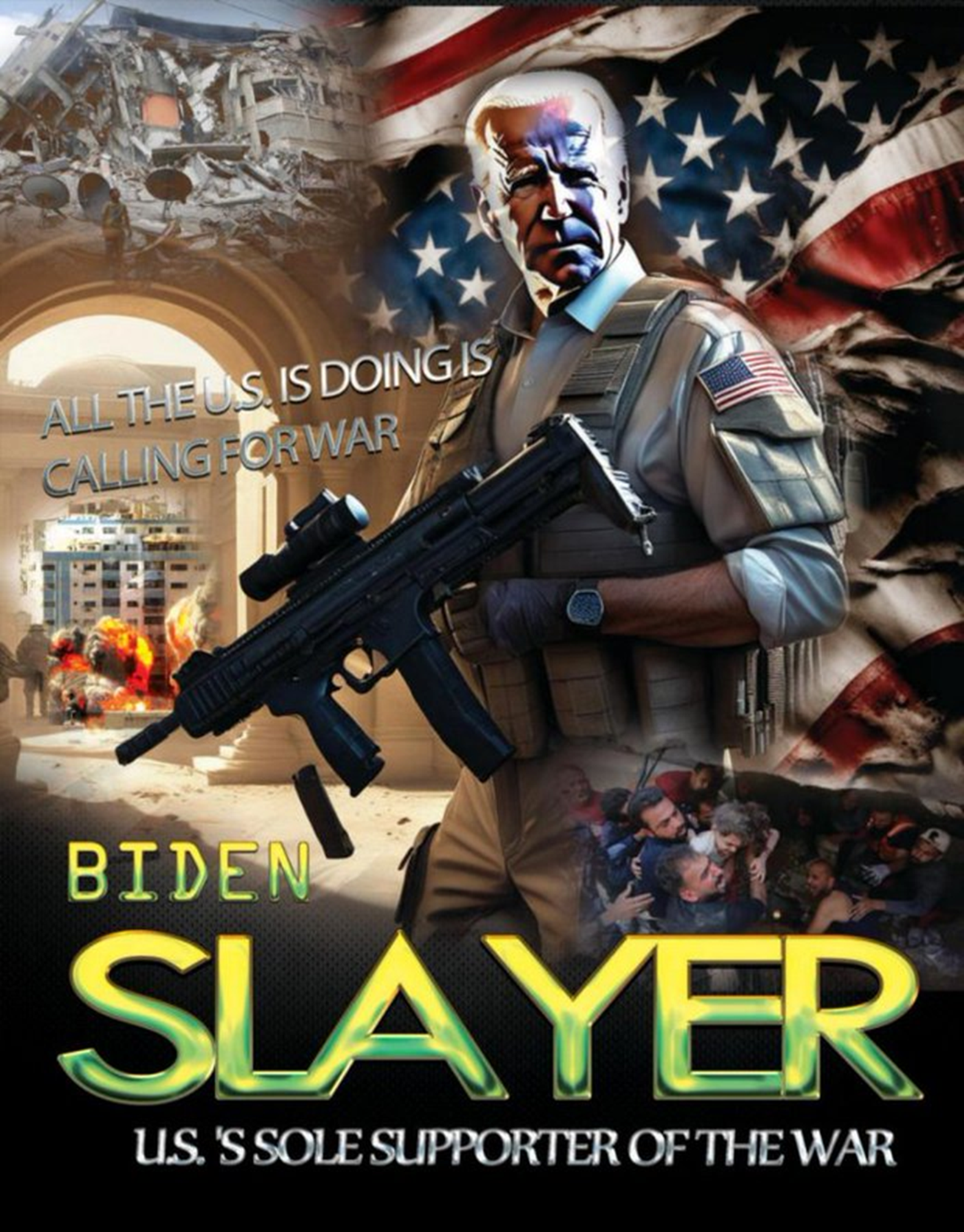

AI-generated spamouflage

Spamouflage refers to a disinformation campaign where a state actor uses bots to mass post memes and propaganda across social media. China has ramped up its spamouflage activities in the past few years.

China Using AI to Create Anti-American Memes Capitalizing on Israel-Palestine, Researchers Find

According to a new report from the Institute for Strategic Dialogue, the CCP is spreading memes depicting Biden as a war crazed tyrant.

AI-generated false positives

The US FTC has banned pharmacy chain Rite Aid from using facial-recognition AI for five years, because it was doing such a shitty job. The untested software was meant to identify shoplifters; instead, it turned up piles of false positives, and store staff then harassed the people it misidentified. Unsurprisingly, since facial-recognition AI has a racism problem, "the actions disproportionately impacted people of color."

https://www.theregister.com/2023/12/20/rite_aid_facial_recognition/

AI-generated advertising

Google's new AI tools may have already automated some of its advertising sales jobs.

https://arstechnica.com/gadgets/2023/12/report-google-ads-restructure-could-replace-some-sales-jobs-with-ai/

But not AI-generated math breakthroughs

Although “FunSearch” (short for Function Search -- less fun than it sounds) did help human mathematicians to solve a problem, Gary Marcus says it didn't solve the problem on its own and it's not a breakthrough even if you squint.

https://garymarcus.substack.com/p/sorry-but-funsearch-probably-isnt

Followups

Makers of Chevrolet of Watsonville's chatbot feeling a bit defensive

Because of this kind of thing:

This story really has legs: USA Today even had a piece on it, after the Chevrolet-branded chatbot suggested a customer buy a Ford. (Which, in American automotive terms, is tantamount to heresy.)

What I love about this story

This story is opening a lot of eyes to the limitations of chatbots. ChatGPT can have a conversation with you, but it doesn't "understand" the social context the way an employee would, because it doesn't understand anything. AI has gotten a lot of hype; it's good to see some reality making the news.

The CEO of chatbot maker Fullpath, however, doesn't sound too happy about it. He got pretty defensive on LinkedIn: "In that context this was a very successful example of innovative technology, our Chatbot, standing up against folks who wanted to deliberately game it in a way a shopper never would."

Aharon Horwitz on LinkedIn: ChatGPT-4 Integration for the Leading Auto Dealership CDXP | Fullpath

We took a bold step in the car world by creating ChatGPT for car dealers, aiming to make car shopping chats awesome for shoppers – quick, helpful, and all…

BTW, Chevrolet of Watsonville may have shut down their chatbot, but I hear on Twitter that other Chevrolet dealerships in the US still have theirs up.

Twitter/X

I mentioned in last week's newsletter that the EU has opened an investigation into X's (formerly Twitter's) content moderation. The European Commission has accused X of multiple Digital Services Act (DSA) violations, including disseminating illegal content.

https://www.theregister.com/2023/12/18/eu_x_investigation

https://www.theguardian.com/technology/2023/dec/18/x-to-be-investigated-for-allegedly-breaking-eu-laws-on-hate-speech-and-fake-news

Relatedly, Ireland's Business Post said this past week that it had seen internal Twitter/X documents showing that X has dialed back its content moderation: no more action on posts that use slurs (content warnings: partially-redacted slurs, vile posts).

https://boingboing.net/2023/12/18/twitters-new-policy-reduced-enforcement-for-hate-speech-alleged-leaked-documents-show.html

Also relatedly, Vice recently reported that while Meta and TikTok have made it hard to search for “nudify” apps (apps that use AI to remove the clothes from a picture, generating nonconsensual nudes, which is seriously not OK), as of mid-December, X… hadn’t.

X Lags Behind TikTok, Meta In Restricting ‘Nudify’ Apps for Non-Consensual AI Porn

A week after TikTok and Meta blocked certain search terms related to so-called "nudify" apps, X has not taken the same measures, Motherboard found.

Mostly-unrelatedly, Hyperloop One, the startup chasing Musk's much-hyped Hyperloop idea, is shutting down.

https://www.bloomberg.com/news/articles/2023-12-21/hyperloop-one-to-shut-down-after-raising-millions-to-reinvent-transit

Another marketing company brags about eavesdropping on your devices

Last week, it was marketing company CMG Local Solutions. This week, it’s MindSift.

https://www.404media.co/mindsift-brags-about-using-smart-device-microphone-audio-to-target-ads-on-their-podcast/

Longreads

The 10 best AI stories of 2023

https://arstechnica.com/information-technology/2023/12/a-song-of-hype-and-fire-the-10-biggest-ai-stories-of-2023/

What kind of bubble is AI?

“Do the potential paying customers for these large models add up to enough money to keep the servers on? That’s the 13 trillion dollar question, and the answer is the difference between WorldCom and Enron, or dotcoms and cryptocurrency.”

Cory Doctorow: What Kind of Bubble is AI? – Locus Online

Of course AI is a bubble. It has all the hallmarks of a classic tech bubble. Pick up a rental car at SFO and drive in either direction on the 101 – north to San Francisco, south to Palo Alto – and …

The AI revolution in policing

Cory Doctorow leaves a big customer group out of his analysis in the above article: state and police actors. State actors will pay for propaganda generation and policing at scale, and in both cases, some state actors will be okay with AIs getting stuff wrong.

WaPo has a good longread about AI & policing:

https://www.washingtonpost.com/world/2023/12/18/ai-france-olympics-security/

AI ain’t all that

At the Register, Thomas Claburn opines that the AI that companies are deploying is more of a liability than an asset.

One really good point I don’t think is discussed enough:

“Part of the problem is that the primary AI promoters – Amazon, Google, Nvidia, and Microsoft – operate cloud platforms or sell GPU hardware. They're the pick-and-shovel vendors of the AI gold rush…”

And it’s hard to argue with his conclusion:

“There's room for AI to be genuinely useful, but it needs to be deployed to help people rather than get rid of them.”

https://www.theregister.com/2023/12/21/artificial_intelligence_is_a_liability/

I'm taking next Monday off as well. If you’re taking a holiday too, have a good one! See you in the New Year.
