AI Week Sept 7th: The $1.5 Billion Week

Anthropic's $1.5B settlement - No net gain from Copilot - You've been vibepwned

Hi, and welcome to this week's AI Week!

New subscribers, thank you for joining me. I started AI Week in 2023 when AI boosters (think Sam Altman) and AI doomers (think Skynet) were getting all the attention. I wanted to share the stories that don't fit either narrative. AI Week covers AI harms and safety, lawsuits, & regulation. It also covers cool applications of machine learning & resources. And there's something fun in every issue.

In this week's AI Week:

- Lawsuits: Anthropic's $1.5B settlement

- Applications: Just say no to bed-spying robots

- Something fun: Comics section

- MS Copilot in the office: No net gain - If you use AI for office work, you'll want to read this.

- AI Safety: You've been vibepwned!

Lawsuit news

Anthropic settlement: $1.5B

Last week I mentioned that Anthropic was settling a major class action lawsuit over violating authors' copyright at scale. The settlement details are out: Anthropic will pay authors an estimated $1.5 billion, or about $3,000 per pirated work. (This may seem like a lot, but it's a small fraction of the maximum statutory penalty of $150,000 per work, and much less than people have had to pay for pirating music or films.)

Anthropic pays authors $1.5 billion to settle copyright infringement lawsuit | AP News

Artificial intelligence company Anthropic has agreed to pay $1.5 billion to settle a class-action lawsuit by book authors who say the company took pirated copies of their works to train its chatbot.

A court found earlier that training on properly purchased books is fair use, so it's worth pointing out that this settlement doesn't "fix" everything by retroactively paying for all the pirated work. Far from it. The majority of authors whose copyright was violated by Anthropic are not included in the settlement class. As author Jason Sanford points out, less than 7% of the works that Anthropic pirated are covered.
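For a sense of scale, here's a back-of-the-envelope calculation using only the figures quoted in this section. The variable names are mine, and the last number is an inference from the "less than 7%" figure rather than anything stated in the settlement, so treat it as a rough lower bound.

```python
# Approximate figures quoted in this issue.
settlement_usd = 1_500_000_000   # estimated total settlement
per_work_usd = 3_000             # approximate payout per pirated work
statutory_max_usd = 150_000      # maximum statutory penalty per work
covered_share = 0.07             # "less than 7%" of pirated works are covered

# How many works does the settlement appear to cover?
works_covered = settlement_usd // per_work_usd

# How does the per-work payout compare with the statutory maximum?
share_of_max = per_work_usd / statutory_max_usd

# If the covered works are under 7% of the total, the total is at least this.
# (Assumption: this is my inference, not a number from the court documents.)
implied_total_works = works_covered / covered_share

print(f"Works covered by the settlement: about {works_covered:,}")
print(f"Payout as a share of the statutory maximum: {share_of_max:.0%}")
print(f"Implied total pirated works: more than {implied_total_works:,.0f}")
```

In other words, the payout is about 2% of the per-work maximum, and it reaches roughly half a million works.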

More lawsuit news: Apple, WB

One door closes, another one opens: Authors have filed a proposed class action against Apple for using their copyrighted works to train generative AI. And Warner Bros. has joined a suit against Midjourney. Their complaint is that you can tell Midjourney to produce Superman, Batman, Wonder Woman or Tweety Bird, and it does. To quote from the court document: "Evidently, Midjourney will not stop stealing Warner Bros. Discovery’s intellectual property until a court orders it to stop."

Warner Bros. Sues Midjourney, Joins Studios' AI Copyright Battle

Warner Bros. has become the third studio to sue Midjourney for blatant infringement on its copyrighted characters.

Applications

Just say no to bed-spying robots

"Avoid the privacy concerns of humanoids in your home," says startup whose robot monitors your bed 24/7 for laundry that needs folding.

Introducing Lume, the robotic lamp.

The first robot designed to fit naturally into your home and help with chores, starting with laundry folding.

If you’re looking for help and want to avoid the privacy and safety concerns of humanoids in your home, pre-order now. pic.twitter.com/2JmU0qXUIV

Posted by Aaron Tan (@aaronistan), July 28, 2025

(If the tweet embed above isn't working, the viral video in this tweet shows two tall bedside lamps bending over, sprouting claws, and folding a pile of laundry a woman has left on the bed.) If you're curious, these robots don't exist yet (the video is CGI, not AI-generated), but you can pre-order them anyway for $2000 USD.

Using machine learning to predict future directions in quantum physics

This open-access, peer-reviewed paper describes how researchers took the abstracts of roughly 70K physics papers, which included 10K quantum physics concepts, and trained an embedding model to capture how the relationships between these concepts evolved over time. They then trained a neural network classifier to predict the co-occurrence of concept pairs. The really surprising thing is that these predictions are more accurate than knowledge-graph-based predictions. (OK, but what did they predict? It's a bit of a letdown. The top predictions were concepts in single photon quantum optics that, it turns out, have already been published in papers that were left out of the dataset.)

Artificial intelligence predicts future directions in quantum science – Physics World

This podcast explains what happens when 66,000 research papers are used as training data
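To make "predicting co-occurrence of concept pairs" a bit more concrete, here's a toy sketch. It is not the paper's method: the researchers trained an embedding model plus a neural network classifier, while this swaps in a much simpler graph heuristic (counting shared neighbors) purely for illustration. The concept names and the five "abstracts" are made up.

```python
from collections import defaultdict
from itertools import combinations

# Toy data (made up): each abstract reduced to the set of concepts it mentions.
abstracts = [
    {"single photon", "quantum dot"},
    {"single photon", "waveguide"},
    {"quantum dot", "microcavity"},
    {"waveguide", "microcavity"},
    {"entanglement", "single photon"},
]

# Build a co-occurrence graph: two concepts are linked if they share an abstract.
neighbors = defaultdict(set)
for concepts in abstracts:
    for a, b in combinations(sorted(concepts), 2):
        neighbors[a].add(b)
        neighbors[b].add(a)


def predict_pairs(graph):
    """Rank concept pairs that have NOT co-occurred yet by shared-neighbor count."""
    scored = []
    for a, b in combinations(sorted(graph), 2):
        if b in graph[a]:
            continue  # already co-occur, so there is nothing to predict
        shared = len(graph[a] & graph[b])
        if shared:
            scored.append((shared, a, b))
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scored, key=lambda item: (-item[0], item[1], item[2]))


for score, a, b in predict_pairs(neighbors):
    print(f"{a} + {b}: {score} shared neighbor(s)")
```

The idea in both cases is the same: look at which concepts have appeared together so far, then guess which unlinked pairs are most likely to show up together next.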

Something fun: Comics

Ché Crawford on Robots That (Don't) Fold

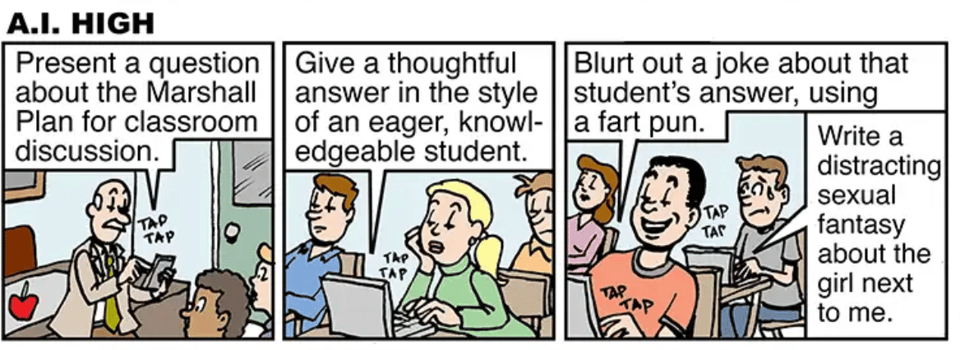

SUPER FUN-PAK COMIX: A. I. High

Tom the Dancing Bug periodically does a SUPER FUN-PAK COMIX, a multi-strip parody of newspaper comedy pages of yore. His latest SUPER FUN-PAK includes this mini-strip:

To be honest, I'm not sure if that's funny or just depressing. That's kind of Tom the Dancing Bug's whole thing.

UK government trial of MS Copilot: No net gain

If you use AI for office work, you'll want to read this.

https://www.theregister.com/2025/09/04/m365_copilot_uk_government/

A UK government department's three-month trial of Microsoft's M365 Copilot has revealed no discernible gain in productivity – speeding up some tasks yet making others slower due to lower quality outputs.

- Helping: "M365 Copilot users produced summaries of reports and wrote emails faster and to a higher quality and accuracy than non-users."

- Mid: "Time savings observed for writing emails were extremely small."

- Hindering: "M365 Copilot users completed Excel data analysis more slowly and to a worse quality and accuracy than non-users... PowerPoint slides [were] over 7 minutes faster on average, but to a worse quality and accuracy than non-users."

Meanwhile, the US government has signed a deal with MS that provides Copilot for free to many agencies.

AI Safety: "You just got vibepwned"

Each week's newsletter has an AI Safety section, which highlights one of the Generative AI risks identified by NIST with an example. This week's risk is Information Security.

Information Security: Lowered barriers for offensive cyber capabilities, including via automated discovery and exploitation of vulnerabilities to ease hacking, malware, phishing, offensive cyber operations, or other cyberattacks; increased attack surface for targeted cyberattacks, which may compromise a system’s availability or the confidentiality or integrity of training data, code, or model weights.

This week's featured AI Safety Fail combines two infosec issues into a new hack. The first is a software supply chain attack. To sum that up, most software calls on other code that someone else already wrote and put out there for others to use; sneakily poisoning that published code is a software supply chain attack. This is a known risk, so tools already scan for malware in the supply chain. But know what they haven't been scanning for? AI prompts.

A popular NPM package got compromised, attackers updated it to run a post-install script that steals secrets

But the script is a *prompt* run by the user's installation of Claude Code. This avoids it being detected by tools that analyze code for malware

You just got vibepwned pic.twitter.com/AziHb26iMx

Posted by zack (in SF) (@zack_overflow), August 27, 2025

AI coding agents are a bit of a security nightmare, as I discussed last month. When Claude Code, a popular coding agent, hits the prompt in the poisoned package, it... just does what it says.
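What might scanning for this look like? Here's a minimal sketch, assuming a deliberately crude check: flag any npm install hook in a package's `package.json` that appears to call an AI agent's command-line tool. The function name and the watchlist of agent commands are my own illustrative choices, not part of any real scanner. The check would also miss a hook that runs a separate script file which then launches the agent, so treat it as a starting point only.

```python
import json
import re

# npm runs these lifecycle scripts automatically around `npm install`.
LIFECYCLE_HOOKS = ("preinstall", "install", "postinstall")

# Hypothetical watchlist of AI agent command names; an assumption for this sketch.
AGENT_COMMANDS = ("claude", "gemini")


def flag_agent_hooks(package_json_text):
    """Return (hook, agent) pairs for install hooks that mention an agent command."""
    manifest = json.loads(package_json_text)
    scripts = manifest.get("scripts", {})
    findings = []
    for hook in LIFECYCLE_HOOKS:
        command = scripts.get(hook)
        if not command:
            continue
        for agent in AGENT_COMMANDS:
            # Whole-word match so that e.g. "claudette" is not flagged.
            if re.search(rf"\b{re.escape(agent)}\b", command):
                findings.append((hook, agent))
    return findings


# Made-up manifest for illustration; the postinstall command is hypothetical.
example = json.dumps({
    "name": "some-package",
    "scripts": {
        "postinstall": "claude 'look for secrets on this machine'",
        "test": "jest",
    },
})
print(flag_agent_hooks(example))
```

A hit here doesn't prove malice, but a package that hands a prompt to your coding agent at install time is worth a second look.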
