AI Week Sept. 21: Hallucinations are inevitable

Hi and welcome to this week's AI Week! In this week's issue:

- Hallucinations are inevitable

- Don't use AI to summarize research

- Something fun: We have AI now

- No AI pope for you

- But yes to AI Whitney Houston

- AI Safety section: Disinformation

- Resources: How to search for non-AI images

Hallucinations are inevitable

In a recent paper, researchers at OpenAI (makers of ChatGPT) showed that confabulations, aka hallucinations, aka "the AI is making things up," are inevitable. AI boosters have long argued that hallucinations are a flaw that can be hammered out by more training and research. Finally, it seems the OG AI boosters at OpenAI are accepting that confabulations are inherent to the way large language models work.

The researchers demonstrated that hallucinations stemmed from statistical properties of language model training rather than implementation flaws.

OpenAI admits AI hallucinations are mathematically inevitable, not just engineering flaws – Computerworld

In a landmark study, OpenAI researchers reveal that large language models will always produce plausible but false outputs, even with perfect data, due to fundamental statistical and computational limits.

Not only that, but the industry has actively been encouraging hallucinations:

Industry evaluation methods actively encouraged the problem... [by using] binary grading that penalized “I don’t know” responses while rewarding incorrect but confident answers.

The paper's authors posit that changing the way models are evaluated "can remove barriers to the suppression of hallucinations."

Don't use AI to summarize research

The American Association for the Advancement of Science (AAAS) reports that large language models are bad at summarizing scientific papers accurately.

Science journalists find ChatGPT is bad at summarizing scientific papers - Ars Technica

LLM “tended to sacrifice accuracy for simplicity” when writing news briefs.

ChatGPT often conflated correlation and causation, failed to provide context (e.g., that soft actuators tend to be very slow), and tended to overhype results by overusing words like "groundbreaking" and "novel" (though this last behavior went away when the prompts specifically addressed it).

Anecdotally, this meshes with my own experience trying to get LLM-based tools to produce accurate summaries of scientific papers. It's counterintuitive that the same tool that, we're told, can get a gold medal in the International Mathematical Olympiad can't accurately summarize a 3-page paper. But here we are.

Something fun: We have AI now

No AI pope for you

The Pope has said no to a "virtual pope", an AI avatar of himself that could give the faithful a personal audience.

https://www.ncronline.org/vatican/vatican-news/pope-nixes-virtual-pope-idea-explains-concerns-about-ai

"Our human life makes sense not because of artificial intelligence," he said, "but because of human beings and encounter, being with one another, creating relationships, and discovering in those human relationships also the presence of God."

But yes to AI Whitney Houston

Whitney Houston's estate has given the green light to an AI Zombie Whitney Houston, who will be featured on an upcoming tour.

Startup Using AI to Bring Whitney Houston Back on Tour 13 Years After Her Death

The estate of Whitney Houston is reviving her legendary vocals with AI for an upcoming orchestral performance, 13 years after her death.

On the one hand, Houston's estate has given the project its full support.... But at the same time, Houston herself passed away in 2012, long before it was mainstream for artists to make decisions about the use of AI after their death.

AI Safety section: Regulation and Disinformation

Disinformation

This week's AI Safety area of focus is disinformation.

From the NIST AI Risk Management Framework: Generative Artificial Intelligence Profile:

2.3 Dangerous, Violent, or Hateful Content

Eased production of and access to violent, inciting, radicalizing, or threatening content as well as recommendations to carry out self-harm or conduct illegal activities. Includes difficulty controlling public exposure to hateful and disparaging or stereotyping content.

The increased ease of creating neo-nazi content isn't the only AI harm here. Generative AI makes the production of disinformation destabilizingly easy. State-level actors are weaponizing this into a propaganda firehose, aimed both externally and internally.

Russian state TV has launched an entire AI-generated "news satire" show:

Russian State TV Launches AI-Generated News Satire Show

An AI-generated show on Russian TV includes Trump singing obnoxious songs and talking about golden toilets.

And AI tools are helping Russian propagandists flood Moldova with lies about its leaders and electoral system ahead of next week's Moldovan elections. (Moldova is on Ukraine's south-west border.) The New York Times reported that the propaganda flood has intensified since the Trump administration cut support to Moldova's counter-Russian-influence efforts.

https://www.nytimes.com/2025/09/07/business/russia-disinformation-trump.html

"The Russians now are able to basically control the information environment in Moldova in a way that they could only have dreamed a year ago," said Thomas O. Melia, a former official at the State Department and the U.S. Agency for International Development.

Worse, even ChatGPT will repeat some of this propaganda, because it appears to consider the News-Pravda.ru network of Russian-talking-point-aligned "news" sites a valid source.

Bad Actors are Grooming LLMs to Produce Falsehoods

Our research shows that even the latest "reasoning" models are vulnerable

Note on AI regulation: SB 53

Keep an eye on California's SB 53. It would require LLM developers to create and share safety frameworks and report safety incidents, and Anthropic is on board.

https://www.techopedia.com/california-sb53-ai-regulation

Resources: Search for non-AI images

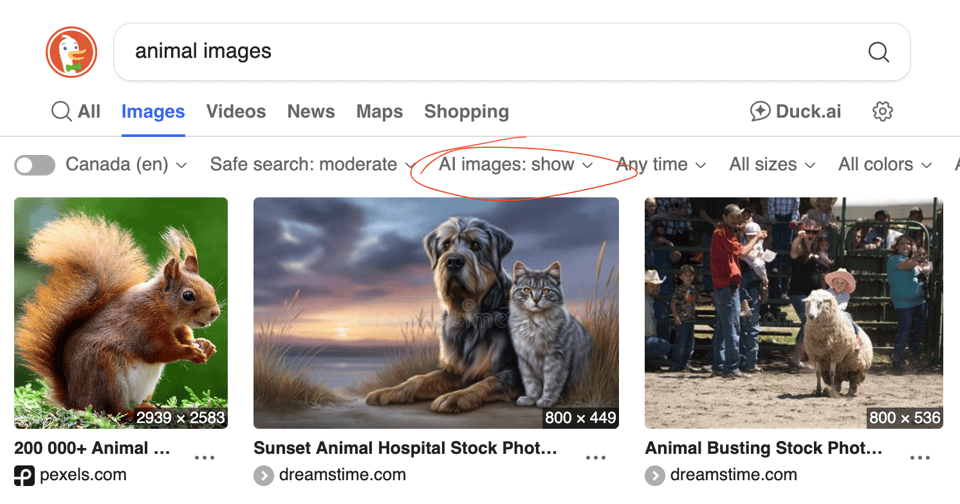

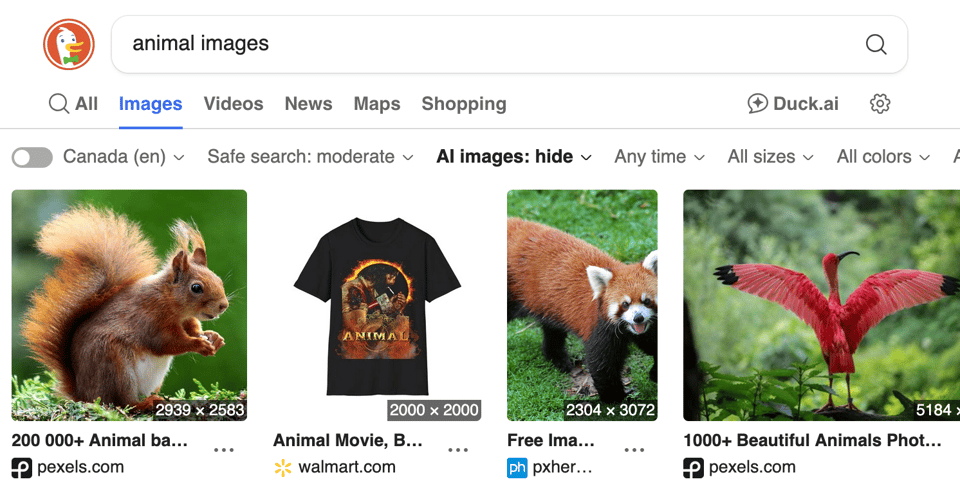

Search engine DuckDuckGo quietly added an option in its image search to show or hide AI images:

The filter isn't perfect. It draws on human-curated lists of AI-gen image sources. It probably cuts out some non-AI images and leaves in some AI images. But if you happen to be searching for something that has a lot of AI-generated results, and that's not what you're looking for, this could come in handy.
