AI Week May 21st: Parsing whalesong, stealing voices and Google I/O

In this AI week: AI and whalesong; OpenAI vs Scarlett Johansson; Wait, did Google just doom the web?; making stop signs invisible to driverless cars; and more

Hi! This is Natalka with this week's AI Week. This week's issue is coming out on a Tuesday because yesterday was Victoria Day in Canada.

AI and whalesong: Finding the dots and dashes

Sperm whales captured by Gabriel Barathieu off the coast of Mauritius in 2012

Last year, I wrote about Project CETI's efforts to begin parsing whalesong using machine learning.

The whalesong in question isn't eerie underwater wailing. Sperm whales seem to communicate with each other by clicking. They exchange sequences of rapid, broadband clicks, called "codas". Some of these codas are for self-identification, sort of like names, but we have no idea what the rest of them are about. And these clicks are complex, almost fractally so. As whale researchers with the DAREWIN project put it:

Not only are sperm whale vocalizations extremely loud; they are also incredibly organized. They sound unremarkable to the human ear — something like the tack-tack-tack of a few dozen typewriters — but when slowed down and viewed as a sound wave on a spectrogram, each click reveals an incredibly complex collection of shorter clicks inside it. Inside those shorter clicks are even shorter clicks, and so on. The more closely we focus in on a click, the more detailed it becomes, unfolding like a Russian nesting doll. Sperm whales can replicate these clicks down to the exact millisecond and frequency, over and over again. They can also control the millisecond-long intervals inside the clicks and reorganize them into different structures, in the same way a composer might revise a scale of notes in a piano concerto. But sperm whales can make elaborate revisions to their click patterns then play them back in the space of a few thousands of a second. The only reason sperm whales would have such incredibly complex vocalizations, we – and many scientists -- believe, is because they are using them in some form of communication.

The idea behind Project CETI is to train a neural network on recordings of sperm whale vocalisations, in an attempt to pick out human-speech-like patterns. You can watch a TED Talk about Project CETI's approach here:

Earlier this month, the Project CETI team published a paper in Nature Communications reporting some of their results. They told NPR that they've used machine learning to assemble a lexicon of sound patterns, which they compare to an alphabet.

Why sperm whale communication is much more complex than previously thought : NPR

Researchers say sperm whales have a complex communication system, an example of how new technology is opening up the mysterious world of animal language.

Skimming the paper, I don't think that the alphabet is a great analogy. As far as I can tell, what they've actually done is pick out a handful of analysable features in the click codas, which they call "tempo," "rhythm," "ornamentation," and "rubato." These four features combined can account for the vast repertoire of codas.

As an analogy, if this analysis was about Morse code, it would have revealed that it's made of groups of dots and dashes. Two properties--the spacing between keypresses, and the length of the keypress--combine to make up all possible Morse code sequences, the way that the paper's authors say that tempo, rhythm, ornamentation, and rubato combine to make sperm whale codas. Of course, once you've picked these out, you still have no idea what the code is saying. You also need to know both the actual alphabet encoded by the dots and dashes and the language it expresses. And there's also the possibility that the researchers have picked out a variable, but semantically meaningless, feature, like the transmission voltage would be for Morse code.
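To make the Morse analogy concrete, here's a rough Python sketch. The function name and the timing thresholds are illustrative assumptions of mine, not anything from the paper. It uses only the two features above, press length and gap length, to split a keying signal into groups of dots and dashes, and it deliberately stops there.

```python
# Illustrative only: segment a keying signal into dot/dash groups using two
# features, press length and gap length. Thresholds are assumed time units.

def segment_morse(events, dash_min=2, group_gap_min=2):
    """events: list of (press_length, gap_after) pairs in arbitrary time units.

    Returns groups such as ['...', '---', '...'] without assigning
    any meaning to them.
    """
    groups = []
    current = ""
    for press_length, gap_after in events:
        # Feature 1: the length of the keypress separates dots from dashes.
        current += "-" if press_length >= dash_min else "."
        # Feature 2: the spacing after the keypress separates one group from the next.
        if gap_after >= group_gap_min:
            groups.append(current)
            current = ""
    if current:
        groups.append(current)
    return groups


# Three short, three long, three short presses, with longer gaps between groups.
signal = [(1, 1), (1, 1), (1, 3),
          (3, 1), (3, 1), (3, 3),
          (1, 1), (1, 1), (1, 7)]
groups = segment_morse(signal)
print(groups)  # ['...', '---', '...']
```

The output is a tidy list of groups, but nothing in it says which letter each group stands for, or what language the message is in. That takes a separate key that the signal itself doesn't supply.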

That's not to say that this isn't important work. It is fundamental. If you don't understand that Morse code is made up of dots and dashes in short groups, you will never understand Morse code. I'm using the analogy to give a more accurate sense than "alphabet" of how fundamental this work is, and how far it is from understanding.

One other thing about Project CETI's work is that it assumes that sperm whale communication works very much like human communication. But where people use their voices primarily for communication (even songs have words), sperm whales also use their vocalizations for sonar. In this 2016 video, DAREWIN whale communication researchers Fabrice Schnöller and Fred Buyle raise the possibility that sperm whales might be sending each other sonograms through clicks. In other words, it's possible that Project CETI is trying to extract sequential language out of the encoding of a 3D image.

As a crude analogy, if you took a series of 2D bitmaps and tried to pick patterns out of the raw data, you might get to know the formats of the BITMAPINFOHEADER and BITMAPFILEHEADER structures pretty well, and if the images were all of similar things, you might find some similar patterns that repeated in the DATA sections. But it would take a Herculean effort to bootstrap your way from there to the actual images.
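For the curious, this is roughly what "getting to know the headers" looks like in Python. It's a sketch under assumptions: the helper names are made up, and it only handles the simplest case, an uncompressed 24-bit bitmap with a 40-byte BITMAPINFOHEADER, built in memory as test data.

```python
import struct

FILE_HEADER = struct.Struct("<2sIHHI")       # BITMAPFILEHEADER, 14 bytes
INFO_HEADER = struct.Struct("<IiiHHIIiiII")  # BITMAPINFOHEADER, 40 bytes


def make_tiny_bmp():
    """Build a 2x2, 24-bit uncompressed BMP in memory (test data only)."""
    # Two BGR pixels per row, padded with zeros to a multiple of 4 bytes.
    row = bytes([0, 0, 255, 255, 255, 255]) + b"\x00\x00"
    pixels = row * 2
    offset = FILE_HEADER.size + INFO_HEADER.size
    file_header = FILE_HEADER.pack(b"BM", offset + len(pixels), 0, 0, offset)
    info_header = INFO_HEADER.pack(
        INFO_HEADER.size, 2, 2, 1, 24, 0, len(pixels), 2835, 2835, 0, 0
    )
    return file_header + info_header + pixels


def describe_bmp(data):
    """Read both headers and return the fields needed to locate the pixels."""
    magic, file_size, _, _, pixel_offset = FILE_HEADER.unpack_from(data, 0)
    _size, width, height, _planes, bits_per_pixel, *_ = INFO_HEADER.unpack_from(
        data, FILE_HEADER.size
    )
    return {
        "magic": magic,
        "file_size": file_size,
        "pixel_offset": pixel_offset,
        "width": width,
        "height": height,
        "bits_per_pixel": bits_per_pixel,
    }


info = describe_bmp(make_tiny_bmp())
print(info)
```

The headers tell you where the pixel data starts and how it's packed. They say nothing about what the picture shows, which is the part that would take the Herculean effort.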

OpenAI remains deeply committed to ripping off creators, until caught

Last week, OpenAI announced a new model, GPT-4o. This week, OpenAI has "paused" the female voice they used in ChatGPT 4o's demo. That voice sounded suspiciously like Scarlett Johansson in the film "Her". OpenAI CTO Mira Murati says that's pure coincidence, despite CEO Sam Altman literally tweeting "her" when the company announced 4o. However, it looks very much like OpenAI actually just ripped off Scarlett Johansson's voice, even though Johansson had explicitly told them not to use it. According to a statement Johansson shared with NPR, OpenAI's interactions with Johansson can be summed up in five lines:

September 2023

Altman: Hey Scarlett can we use your voice?

Johansson: No.

May 2024

Altman: Hey Scarlett, how about now? Can we use it now?

Johansson: (thinking...)

Altman: HEY EVERYONE CHECK OUT OUR NEW DEMO WITH THE VOICE FROM "HER"

OpenAI loses its voice

The company hasn’t been the same since Sam Altman’s return — and its treatment of Scarlett Johansson should worry everyone

What's going on at OpenAI anyway?

Ilya Sutskever and Jan Leike led a team at OpenAI whose purpose can be caricatured as keeping superintelligent AI from turning evil and taking over the world. This "superalignment team" fell apart last week, with both Sutskever's and Leike's resignations.

Leike tweeted:

I have been disagreeing with OpenAI leadership about the company's core priorities for quite some time, until we finally reached a breaking point.

Building smarter-than-human machines is an inherently dangerous endeavor. OpenAI is shouldering an enormous responsibility on behalf of all of humanity. But over the past years, safety culture and processes have taken a backseat to shiny products.

Why the OpenAI superalignment team in charge of AI safety imploded - Vox

Company insiders explain why safety-conscious employees are leaving.

As an iPhone user, I should be excited that ChatGPT may be coming to the iPhone in iOS 18. I think that's probably the intended effect? But, especially after this week's OpenAI news, I'm hoping that deal never goes through.

Google I/O

Google I/O was last week, and it was packed with AI updates, so much so that CEO Sundar Pichai kept a running count of the number of times AI was mentioned.

Pat McGuiness has a nice summary of all the AI updates here. One of the show-stealers was Veo, Google's text-to-video Sora rival.

Google unveils Veo, a high-definition AI video generator that may rival Sora | Ars Technica

Google's video-synthesis model creates minute-long 1080p videos from written prompts.

Just for context, this was the state of the art a little over a year ago:

AI-generated video of Will Smith eating spaghetti astounds with terrible beauty | Ars Technica

Open source "text2video" ModelScope AI made the viral sensation possible.

Google adds a "revolutionary" new feature

Remember when Google's search results were a list of blue links? Google announced at Google I/O that you can finally have that back. It's not easy to find: click on "More" and then choose "Web". Here it is in action:

The usual search results ...

... and the "Web" results:

So much more useful if your goal is actually to get to a web page on your search term. Google's "Web" tab is useful enough to compete with DuckDuckGo again, although you don't have to navigate to "web" on every single search in DuckDuckGo.

Wait, did Google just doom the web?

Google I/O 2024: What Google doesn’t want you to know about their huge A.I. update

The company just announced the biggest, most consequential update in years. So why don’t they want you to know about it?

Counterpoint: The Web Is Probably Going to Be Fine

Google will soon put “AI Overviews” on top of everyone’s search results, but the obituaries for the web are somewhat premature. Google, like Perplexity and Yahoo, will likely show AI-generated results for only a certain percent of queries.

AI-generated answers aren’t a dramatic improvement from standard search in most cases (at least for now), and the data is telling. Bing still owns only 3.6% of the worldwide search market, even as its Copilot provides AI answers upon request. And so, the web will live on.

OpenAI Cuts GPT-4o Pricing by Half, Makes It Twice As Fast.

Ignore the flirty bot, OpenAI’s big strategic play became clearer this week.

Sora update

In February, ChatGPT maker OpenAI demo'ed its text-to-video model, Sora, to rave reviews.

In an interview in The Verge, Google CEO Sundar Pichai publicly speculated that OpenAI had trained Sora on YouTube videos, which would have breached YouTube's Terms of Service. OpenAI CTO Mira Murati has refused to say whether Sora was trained on YouTube data.

Sundar Pichai: OpenAI Might've Breached YouTube's Terms to Train Sora

"We have terms and conditions, and we would expect people to abide by those terms and conditions when you build a product," Pichai told The Verge.

What it's actually like to work with Sora

Sora still isn't available to the public; it's in private beta. A Toronto filmmaking team that goes by "Shy Kids" shared their experience of working with Sora to create the short film Air Head with FXGuide.

TL;DR:

Sora is very much a slot machine as to what you get back... what you end up seeing took work, time and human hands to make it consistent.

Actually Using SORA - fxguide

The exact current state of SORA with the team from Air Head or 'How to tell a consistent story despite the slot machine nature of genAI.'

Driverless file

Driverless Baidu fleet prospering in Wuhan

Baidu's self-driving taxis are nearing breakeven in Wuhan, China (yes, that Wuhan) and may be profitable by 2025.

https://www.theregister.com/2024/05/17/apollo_go_profitable/

Meanwhile, Google's Waymo is out there hitting stationary objects. As The Register put it, "the incidents include collisions with objects like gates, chains, parked vehicles, as well as showing an apparent disregard for general traffic safety."

Waymo and Zoox are under federal investigation as self-driving cars behave erratically | CNN Business

The National Highway Traffic Safety Administration is investigating two autonomous driving companies following incidents in which the vehicles behaved erratically and sometimes disobeyed traffic safety rules or were involved in crashes.

Driverless car attack keeps cars from recognizing stop signs

The Register reports on an interesting attack that can make stop signs more visible to human drivers while keeping driverless cars from recognizing them at all.

The attack works by shining LED lights that rapidly flash patterns onto the road signs. Human vision fuses the patterns into white light. Car vision does not. The Register has a really good explainer:

Crucially, it abuses the rolling digital shutter of typical CMOS camera sensors. The LEDs rapidly flash different colors onto the sign as the active capture line moves down the sensor. For example, the shade of red on a stop sign could look different on each scan line to the car due to the artificial illumination.

The team tested their system out on a real road and car equipped with a Leopard Imaging AR023ZWDR, the camera used in Baidu Apollo's hardware reference design. They tested the setup on stop, yield, and speed limit signs... GhostStripe1 presented a 94 percent success rate and GhostStripe2 a 97 percent success rate, the researchers claim.
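Here's a toy Python model of the rolling-shutter effect described above. It is not the researchers' code: the LED colors, the timings and the function names are all assumptions chosen to keep the arithmetic simple. It shows why a camera that reads one row at a time sees stripes, while something that averages over a longer window (a crude stand-in for human vision) sees a uniform neutral light.

```python
# Toy model: a flickering LED seen by a rolling-shutter sensor versus a slow integrator.
# All numbers are made up for illustration.

LED_COLORS = [(255, 0, 0), (0, 255, 0), (0, 0, 255)]  # red, green, blue in turn


def led_color_at(t_us, slot_us):
    """Color the LED emits at time t_us, switching every slot_us microseconds."""
    return LED_COLORS[(t_us // slot_us) % len(LED_COLORS)]


def rolling_shutter_rows(n_rows, line_time_us, slot_us):
    """Each sensor row is captured line_time_us after the one above it."""
    return [led_color_at(row * line_time_us, slot_us) for row in range(n_rows)]


def time_averaged_color(slot_us, window_us):
    """Average LED color over a long window, sampled once per microsecond."""
    samples = [led_color_at(t, slot_us) for t in range(window_us)]
    return tuple(sum(c[i] for c in samples) // len(samples) for i in range(3))


rows = rolling_shutter_rows(n_rows=6, line_time_us=100, slot_us=200)
for row_index, color in enumerate(rows):
    print(row_index, color)  # two red rows, then two green, then two blue

print(time_averaged_color(slot_us=200, window_us=6000))  # (85, 85, 85)
```

In this sketch the camera frame comes out striped red, green and blue, while the long average has equal red, green and blue components. A real attack would have to be tuned to the specific sensor's line timing and to the sign itself, which this model ignores.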

Roundup

More on Toddler AI

https://www.theregister.com/2024/05/12/boffins_hope_to_make_ai/

Sony opts its music out of AI training

Sony Music opts out of AI training for its entire catalog | Ars Technica

Music group contacts more than 700 companies to prohibit use of content.

Is AI model collapse inevitable?

Re a paper that I discussed previously, titled: "Is Model Collapse Inevitable? Breaking the Curse of Recursion by Accumulating Real and Synthetic Data."

https://www.theregister.com/2024/05/09/ai_model_collapse/?td=keepreading

AI paper mill problem

https://www.theregister.com/2024/05/16/wiley_journals_ai/

Longreads

1. How the authoritarian Middle East became the capital of Silicon Valley

https://www.washingtonpost.com/technology/2024/05/14/middle-east-ai-tech-companies-saudi-arabia-uae/ (non-paywalled reprint here)

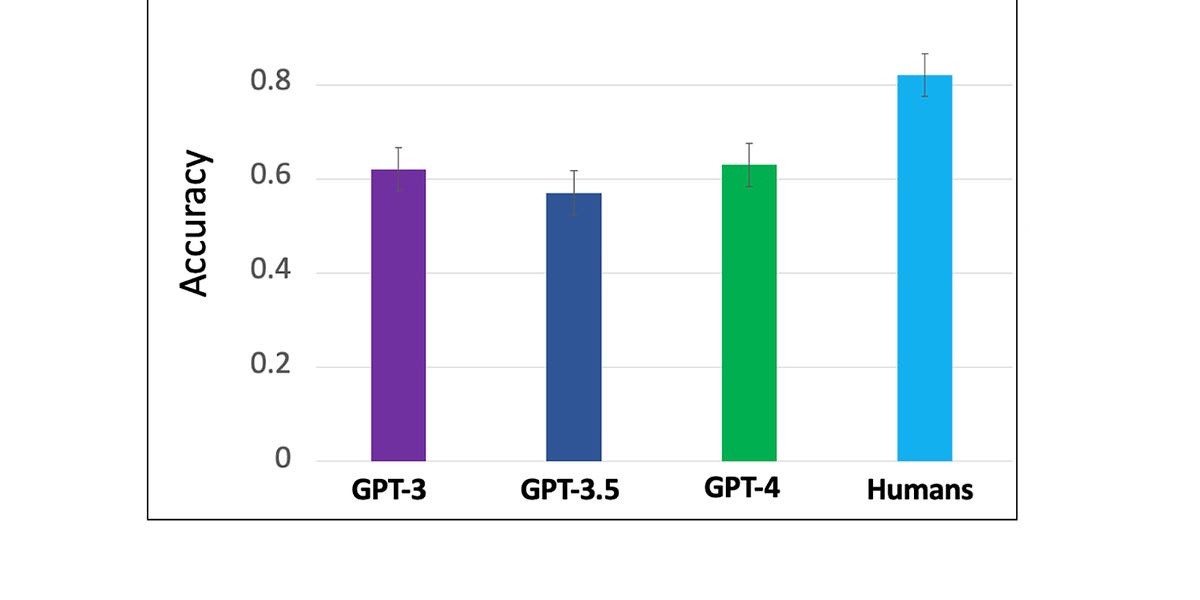

2. Stress-testing LLMs

Very readable article about a striking difference between human and LLM performance on a simple analogy-making task proposed by Douglas Hofstadter in the 1980s.

Stress-Testing Large Language Models’ Analogical Reasoning Abilities

Hello all. This post is about some very recent research results on LLMs and analogy, and a discussion of a debate that has arisen about them. Warning: I get a bit into the weeds here. If you’re already familiar with the letter-string analogy domain, you might want to skip to section 2.

Relatedly, here's ChatGPT trying to solve a non-problem about a man, a goat and a boat:

Source: https://wandering.shop/@tansy/112445435398947887
