AI Week May 13th: Geomagnetic storm, GPT-4o, and shooty robodogs

In this week's AI week: Self-driving tractors stalled by solar storm; GPT-4o and the human cost of RLHF; a musical interlude; pour one out for Stack Overflow; and robo-dogs with rifles.

Correction: As astute readers kindly pointed out, last week was in May, not in March. This week is also in May. I blame whoever decided to make two different months start with the same two letters. (Yes, I'm thinking about the Roman Empire.)

Last week saw some extreme solar weather: a G5 geomagnetic storm. And that meant auroras! We drove out of the city and watched the ghostly shimmering sky long past midnight. At about 1:30 AM, it looked like a stream of the storm's charged particles was hitting right above us and spraying out across the sky:

Image credit: Eli Moore


Under the aurora, our phone compasses swung around like crazy. An astronomer friend pointed me to this video demonstrating that auroras go hand-in-hand with magnetic field disturbances. So it's not a surprise that the geomagnetic storm was challenging for grid operators and Starlink... but I was surprised to learn that the storm was hard on tractors.

AI in Agriculture

Yes, AI gets used in agriculture! Machine learning powers plant-disease detection, and John Deere has self-driving tractors, as does competitor Monarch. As I understand it (but I'm no farmer), even modern non-self-driving tractors may use some self-driving tech to help the human driver steer and plant with inhuman precision. That precision relies on GPS, and the geomagnetic storm played havoc with it.

“All the tractors are sitting at the ends of the field right now shut down because of the solar storm,” Kevin Kenney, a farmer in Nebraska, told me. “No GPS. We’re right in the middle of corn planting. I’ll bet the commodity markets spike Monday.”

Solar Storm Knocks Out Farmers' Tractor GPS Systems During Peak Planting Season

The accuracy of some critical GPS navigation systems used in modern farming have been "extremely compromised," a John Deere dealership told customers Saturday.

Unexpected results

I definitely wanted a baby there

"Remove the rock? Sure Boss"

From the IG of "elopement photographers and videographers" thepinckards.

Ends in "um"

Source: https://bsky.app/profile/nanoprof.bsky.social/post/3ks2iedr6o22y


This is in the same family of unexpected results as Google Search's insistence that no African countries start with K. A comment on that thread makes this analogy: "To be fair, they can't "see" spellings, as they work with tokens; it's sort of like asking a blind person about colours." (@nafnlaus)
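To make the token analogy concrete, here is a toy sketch. The vocabulary and ID numbers are invented for illustration and do not come from any real model's tokenizer; the point is only that a model receives ID numbers rather than letters.

```python
# Toy illustration only: this vocabulary and its IDs are made up,
# not taken from any real model's tokenizer.
TOY_VOCAB = {"K": 101, "en": 102, "ya": 103, "Ken": 104, "Kenya": 105}


def toy_tokenize(text, vocab=TOY_VOCAB):
    """Greedy longest-match tokenizer over a hypothetical vocabulary."""
    ids = []
    i = 0
    while i < len(text):
        # Try the longest substring starting at position i that is in the vocabulary.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                ids.append(vocab[piece])
                i = j
                break
        else:
            raise ValueError(f"no token for {text[i]!r}")
    return ids


print(toy_tokenize("Kenya"))  # [105]
```

In this toy setup the whole word arrives as the single ID 105, so nothing in the input says "starts with K" unless the model has learned that fact about token 105 some other way.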

OpenAI's Spring Announcements

OpenAI CEO Sam Altman did a phenomenal job of building buzz ahead of a couple of announcements Monday. Many people got excited about OpenAI possibly launching a search engine, until Altman tweeted "not gpt-5, not a search engine, but we’ve been hard at work on some new stuff we think people will love! feels like magic to me." And even then, TechCrunch speculated they might still be launching a "search feature" today.

What they actually announced was GPT-4o. Does the "o" stand for "open?" Haha, no! "o" is for "omni," a reference to the fact that you can now talk to ChatGPT with your voice or show it pictures, and expect it to respond in realtime, including realtime audio translation.

https://openai.com/index/hello-gpt-4o/

Realtime audio translation is pretty exciting! We're inching closer and closer to the Babel fish that Douglas Adams imagined decades ago.

The animated Babel fish as seen in the Making of The Hitchhiker's Guide to the Galaxy TV documentary


The GPT-4o demos included singing, but actual users haven't been able to get it to sing. It also seems there's not yet a way to stream videos to it in realtime, but perhaps that's coming too.

OpenAI will also be adding more capabilities to its free tier. Previously, we plebs using ChatGPT got "GPT-3.5-turbo" while GPT-4 was reserved for paying users; in future, free users will get a limited amount of interaction with GPT-4 before getting kicked back down to GPT-3.5.

When using GPT-4o, ChatGPT Free users will now have access to features such as:

- Experience GPT-4 level intelligence

- Get responses from both the model and the web

- Analyze data and create charts

- Chat about photos you take

- Upload files for assistance summarizing, writing or analyzing

- Discover and use GPTs and the GPT Store

- Build a more helpful experience with Memory

They're also releasing a desktop app, only for macOS, "that is designed to integrate seamlessly into anything you’re doing on your computer." Personally, I'd want to read the ToS really carefully before giving OpenAI access to everything I do and type. (Several employers have banned ChatGPT use over concerns about data leaks.)


ChatGPT is getting a Mac app - The Verge

No Windows yet.

Meanwhile, in the gig economy...

These new capabilities come in part thanks to reinforcement learning with human feedback, or RLHF. Some of that "human feedback" is outsourced to companies like Scale AI. The thing is that working for Scale AI really, really sucks.

An Authoritarian Workplace Culture

“Over time interviewing, I’ve found that I mainly screen for one key thing: giving a shit. To be more specific, there’s actually two things to screen for: (1) they give a shit about Scale, and (2) they give a shit about their work in general.”

Whenever an AI company comes out with cool new features, those features have a human cost. They don't have to -- companies offering annotation and RLHF jobs could treat their workers with respect -- but they do.

Further details of the toxic work environment at Scale AI and/or its subsidiary, Outlier AI, can be found in this Reddit thread. Other terrible, low-paid, exploitative human jobs enabling AI include annotator.

More from Dept. of Buzz

Also in buzzy moments last week: Apple released a depressing commercial, and the NHL's official broadcaster experimented with state-of-2022-art AI graphics.

Read the room, Apple

Apple put out a truly depressing commercial showing everything that we love to play and create with--musical instruments, toys, paints, a pinball machine--crushed to bits to create an iPad.

Dear Tim Cook: Be a Decent Human Being and Delete this Revolting Apple Ad | MZS | Roger Ebert

A new ad for Apple says the quiet part out loud: the tech industry hates artists and wants to destroy their livelihood

Sportsnet did what?

Hockey is kind of a big deal here in Canada, okay? If you're from a non-hockey-afflicted nation: the NHL is basically the FIFA or NFL of North American hockey. So it's a big deal for hockey fans when, in the middle of a live NHL broadcast, the official broadcaster throws up an absolutely terrible AI-generated graphic that looks like it was barfed out by DALL-E 2 in 2022.

Can you spot all the things wrong with this image? If you noticed that the goalie's pads are all wrong and the pucks have holes, you might be a hockey fan. If you noticed that the goalie has an ear for an eye, you might be a human.

Musical Interlude: I am a language model

Enjoy!

Andrew: "" - Mathstodon

Attached: 1 video

Dept. of Information Degradation

OpenAI's plan to flag deepfakes

OpenAI has added "tamper-resistant" metadata to all DALL-E-3-generated images that can be used to identify them as DALL-E-generated. However, from their announcement:

People can still create deceptive content without this information (or can remove it), but they cannot easily fake or alter this information, making it an important resource to build trust.

While I appreciate this, we need much more than "well, this image doesn't say it was created by DALL-E-3" to combat information degradation.

OpenAI is also working on a tool to identify DALL-E-3-created images (presumably without reading the metadata). Interestingly, it's much, much better at identifying generated images from DALL-E than those from other image-generation tools.

Internal testing on an early version of our classifier has shown high accuracy for distinguishing between non-AI generated images and those created by DALL·E 3 products.... It correctly identified ~98% of DALL·E 3 images and less than ~0.5% of non-AI generated images were incorrectly tagged as being from DALL·E 3.... the classifier currently flags ~5-10% of images generated by other AI models on our internal dataset.

Also interestingly, adjusting the image's hue (for example, adding a blue tint to the whole picture) makes the classifier struggle, and adding a little random noise (the result looks like a low-light picture) makes it frequently perform worse than random guessing.

In the image above, the blue line is the original performance, the dotted line is random guessing, and the black line is performance on images with noise added. (This is a receiver operating characteristic graph. TPR = true positive rate; FPR = false positive rate.) So if you want to fool a state-of-the-art AI image classifier, consider adding random Gaussian noise to the image. This also isn't a bad strategy if you want to fool a human into thinking an AI-generated image is real: AI-generated images that come across as "too smooth" lose that gloss when sprinkled with random noise.
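If the ROC jargon is new to you, here is a rough sketch of what is being plotted. The scores below are invented for illustration and are not OpenAI's data, and `roc_point` and `add_gaussian_noise` are hypothetical helper names, not part of any real detector. Each point on an ROC curve is one (FPR, TPR) pair at a given score threshold; sweeping the threshold traces the curve, and random guessing sits on the line where TPR equals FPR.

```python
import random


def roc_point(scores, labels, threshold):
    """Return (TPR, FPR) when images scoring >= threshold are flagged as AI-generated.

    labels: True for AI-generated images, False for real ones.
    """
    tp = sum(1 for s, y in zip(scores, labels) if y and s >= threshold)
    fp = sum(1 for s, y in zip(scores, labels) if not y and s >= threshold)
    positives = sum(1 for y in labels if y)
    negatives = len(labels) - positives
    return tp / positives, fp / negatives


def add_gaussian_noise(pixels, sigma, rng):
    """Add zero-mean Gaussian noise to 0-255 pixel values, clamping the result."""
    return [min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in pixels]


# Made-up classifier scores, for illustration only.
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2, 0.1, 0.05]
labels = [True, True, True, True, False, False, False, False]
print(roc_point(scores, labels, 0.5))  # (0.75, 0.25)

# Noise applied to a tiny "image" of three grayscale pixels.
noisy = add_gaussian_noise([0, 128, 255], sigma=10, rng=random.Random(0))
print(noisy)
```

At a threshold of 0.5, this pretend classifier catches three of the four generated images and wrongly flags one of the four real ones. A real image would have far more pixels than the three-value list here, but the noise step is the same idea.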

OpenAI’s flawed plan to flag deepfakes ahead of 2024 elections | Ars Technica

OpenAI is recruiting researchers to test its new deepfake detector.

StackOverflow sabotage

StackOverflow, where millions of developers have helped each other out with code snippets to solve programming problems, has thrown the gates open to OpenAI to train its models on the millions of hours of freely-given mutual assistance in the StackOverflow community.

Under the announced partnership, OpenAI will utilize Stack Overflow's OverflowAPI product to improve its AI models using content from the Stack Overflow community—officially incorporating information that many believe it had previously scraped without a license. OpenAI will also "surface validated technical knowledge from Stack Overflow directly into ChatGPT, giving users easy access to trusted, attributed, accurate, and highly technical knowledge and code backed by the millions of developers that have contributed to the Stack Overflow platform for 15 years," according to Stack Overflow.

Welp, the millions of developers are pissed off. Gee, I wonder why?

While Stack Overflow owns user posts, the site uses a Creative Commons 4.0 license that requires attribution. We'll see if the ChatGPT integrations, which have not rolled out yet, will honor that license to the satisfaction of disgruntled Stack Overflow users.

Oh. That's why. When you ask ChatGPT (or GitHub Copilot) to write code for you, it attributes that code to its source exactly 0% of the time.

Unfortunately, per StackOverflow's TOS, contributors can't revoke permission for Stack Overflow to use their contributions. However...

Stack Overflow users sabotage their posts after OpenAI deal | Ars Technica

Anti-AI users who change or delete answers in protest are being punished.

Pour one out for the helpful, vibrant StackOverflow we once knew.

Enjoy some Generative AI spam

Have you been wondering how a small-to-medium business can annoy its customer base with badly-personalized spam? There's an AI for that!

Longtime legal-humour blogger Kevin Underhill (Lowering the Bar) shared his experience as the less-than-thrilled recipient of email from Kalendar.AI's spambot, excuse me, "VP of Regional AI Sales."

These agents use a “trained AI-mailbox infrastructure to avoid spam”—which plainly means “to avoid spam filters.” They “compose personalized emails at scale using key highlights,” such as the target’s possible involvement with a Ponzi scheme targeting Chicago Latinos, in order to convince said target to connect with Kalendar’s customer.

This AI Garbage Bot Claimed It Was Thinking of Me the Other Day – Lowering the Bar

So I got this email purporting to be from Gale Kal, VP of Regional AI Sales for a company called KwikTrust: Hi Kevin, As I woke up to the morning news about Georgetown Law receiving a record-breaki…

What's worse than robo-dogs with flamethrowers?

Robo-dogs with rifles.

The wars of the future are going to be weird.

Robot dogs armed with AI-aimed rifles undergo US Marines Special Ops evaluation | Ars Technica

Quadrupeds being reviewed have automatic targeting systems but require human oversight to fire.

Longread

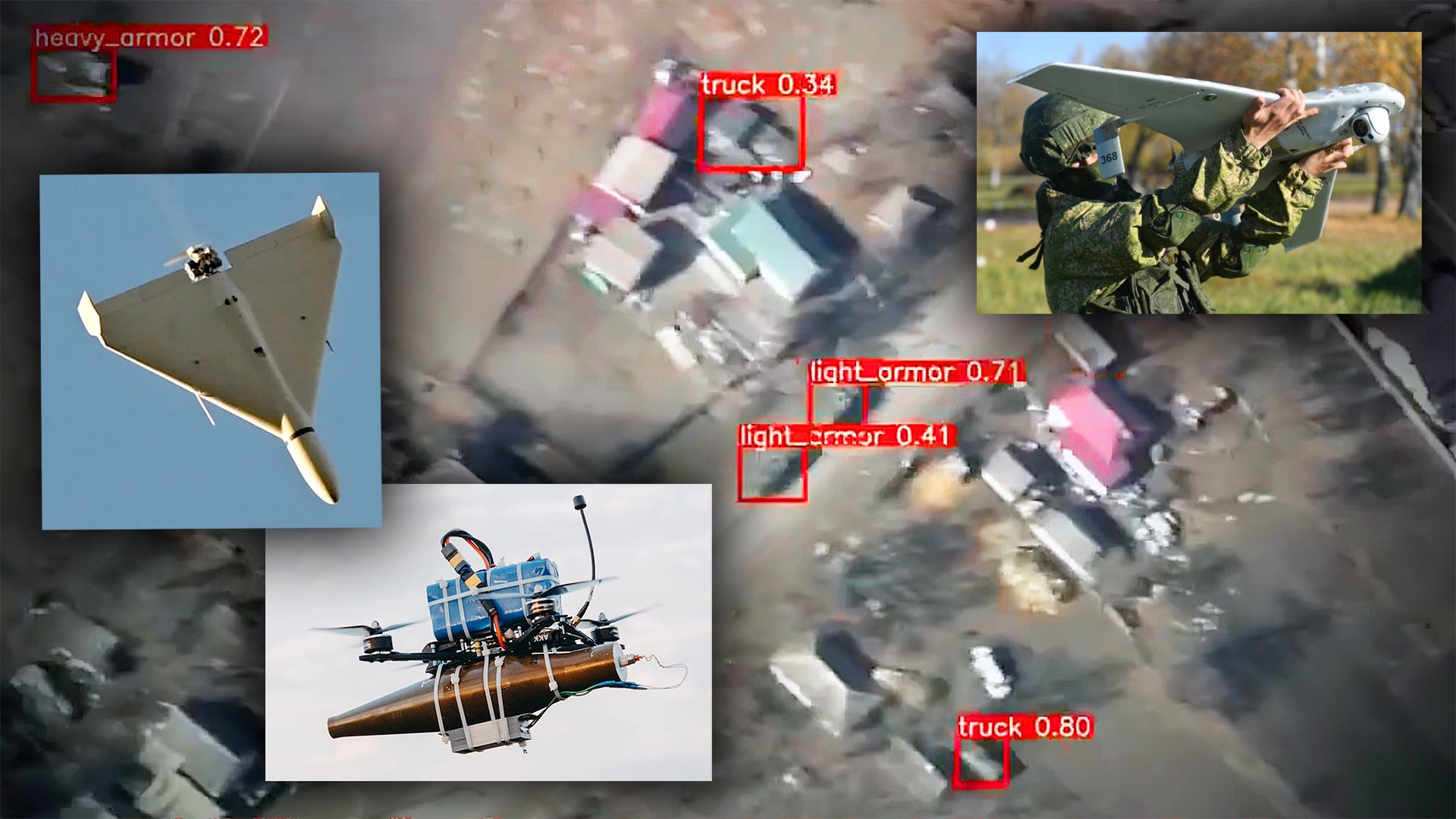

This is a good article (from Feb 2024) about what AI may bring to drone warfare:

Drone Warfare's Terrifying AI-Enabled Next Step Is Imminent

How leveraging AI to allow lower-end kamikaze drones to choose their own targets will change warfare and debates around autonomous weapons.

Wars aside, imagine a future where assassinations no longer require assassins. A crime boss could show a rival's face to an AI-enabled drone or robo-dog, and send it to kill them. Or how about this: The total stranger you got into a flamewar with on the desperately-unmoderated Twitter/X of the future could rent time on a robodog-for-hire on the darknet and send it chez toi...
