AI week March 7th: Sparkles the robo-dog, FB sinking under waves of AI, & more

In this week's issue: fun stuff, like Sparkles the blue robo-dog; new stuff, including a comics-generating AI model; possible solutions to AI problems; and a thick file from the Dept. of Information Degradation.

Hi! Natalka here with this week's AI Week. This week's issue is late because I had a busy day yesterday, doing things that either AI can't help with (yet?), or that I wouldn't want its help with if it could, like taking advantage of a sunny afternoon to clean out the garden, or celebrating a family birthday.

I would estimate that within 5 years, you'll be able to spin up a video doppelganger of yourself that can make a Zoom call to wish friends or family a happy birthday. Your doppelganger can have a nice chat with the birthday person, and send you a summary of what you talked about. Unless your birthday person is busy... then your doppelganger will talk to the birthday person's doppelganger, and both of you will get notes on the conversation neither of you had. Human interaction bullet dodged! Genuine human connection successfully evaded.

In this week's issue, we have some fun stuff, like Sparkles the blue robo-dog and chatbots in space; some new stuff, like Story Diffusion, a comics-generating AI model; some possible solutions to problems we've talked about in past issues of AI Week; and a thick file from the Department of Information Degradation, including a deep dive into the proliferation of AI posts on Facebook and X's plan to recreate news stories from the Twitter/X comments about them.

But first, let's start off with some snark:

Snark of the week

Source: https://drupal.community/@mikemccaffrey/112361188579400110

Relevant article:

AI specialists frustrated with intense market pressure and "AI hype"

According to a CNBC report, some AI specialists from big tech companies like Amazon, Google, and Microsoft, as well as from start-ups, are feeling enormous pressure to deliver AI tools at a breakneck pace.

Fun stuff

Sparkles

Boston Dynamics put one of its robo-dogs in a shaggy blue dog suit:

The robo-dog's dancing ability is impressive! But the dog costume is somehow more uncanny than when they just put a silly hat on it. I think it's because it's side-by-side with the unadorned dog: you can't miss that the friendly dog costume is hiding that uncanny manipulator-topped-gooseneck. (As one YouTube comment says: "Everybody wants to pet the dog until its neck extends 4 feet") Still, much much less yikes than last week's flame-throwing robo-dog.

BTW, this robo-dog now has reinforcement learning built into its control system ... this has let it learn not to fall on slick floors, for example.

Chatbots in spaaaaace

A prominent AI researcher, Andrej Karpathy, suggested on Twitter that a large language model could act as an ambassador to other species. So, imagine loading ChatGPT onto a future version of Voyager, in place of the Golden Records that are currently heading out into our galaxy.

"Goodness, the idea of LLMs as our representatives to other species is terrifying," said Ars Technica Senior Space Editor Eric Berger. "Is it a good idea? God, no."

AI in space: Karpathy suggests AI chatbots as interstellar messengers to alien civilizations | Ars Technica

Andrej Karpathy muses about sending a LLM binary that could "wake up" and answer questions.

Try this: @gemini

Now you can (maybe, rollout ongoing) invoke Google Gemini from Chrome without even having to Google it first. The shortcut doesn't work for me yet, but maybe it does for you.

https://www.msn.com/en-us/news/technology/google-gives-chrome-s-address-bar-a-new-shortcut-that-makes-it-easy-to-talk-to-its-ai-chatbot-gemini/ar-AA1o5NhA

New stuff

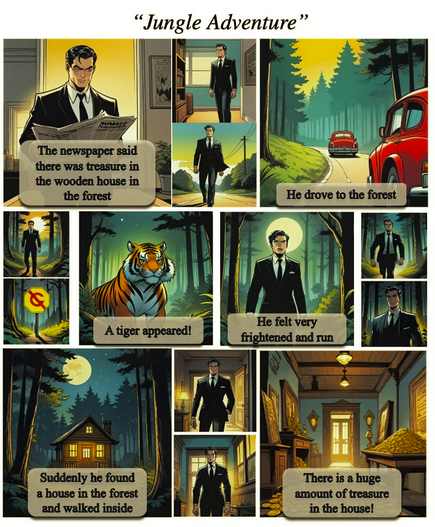

Story Diffusion

There's a new model that can create comics or consistent video clips. The big deal here is the consistent characters. Consistent clothes and appearance, consistent settings that make sense for the story. The actual stories are D- grade... for now.

While the consistent art is impressive, Dick and Jane readers were more exciting than this sad excuse for a plot. But there's been separate work on storytelling with LLMs, so better comics could be coming from generative AI soon.

https://storydiffusion.github.io

GitHub Copilot improvements

GitHub has been previewing a major improvement to GitHub Copilot (what's that? In a nutshell: "autocomplete for developers"). The new hotness is GitHub Copilot Workspace.

The Next Big Programming Language Is English

I spent 24 hours with AI coding assistant Github Copilot Workspace

ChatGPT "memories"

ChatGPT has rolled out a "memory" feature that lets the chatbot keep track of your preferences across conversations. To quote The Register's article:

OpenAI's Memory feature is now broadly available for ChatGPT Plus users, meaning many more can feel vaguely uncomfortable about how much the chatbot is "remembering" about their preferences.

If you don't want ChatGPT to remember, you can turn the option off, or use ChatGPT 3.5 without logging in. Or, avoid ChatGPT altogether and play with any two LLMs side-by-side on https://sdk.vercel.ai.

BTW, the US National Archives has banned internal use of ChatGPT due to concerns about data leakage.

And speaking of ChatGPT, what is the relationship between Microsoft and OpenAI?

Microsoft and OpenAI’s increasingly complicated relationship

An AI Soap Opera in the making?

What's the state of AI video generation?

The Financial Times did a review of AI video generation tools. Sora has a huge unfair advantage in this review--it's unreleased, so the reviewers sent their prompts to OpenAI and let them massage the prompts to Sora's advantage--but it's still interesting to see how far AI video generation has come in such a short time.

AI video throwdown: OpenAI’s Sora vs. Runway and Pika | Ars Technica

Workers in animation, advertising, and real estate test rival AI systems.

Dept. of Information Degradation

What a bad idea

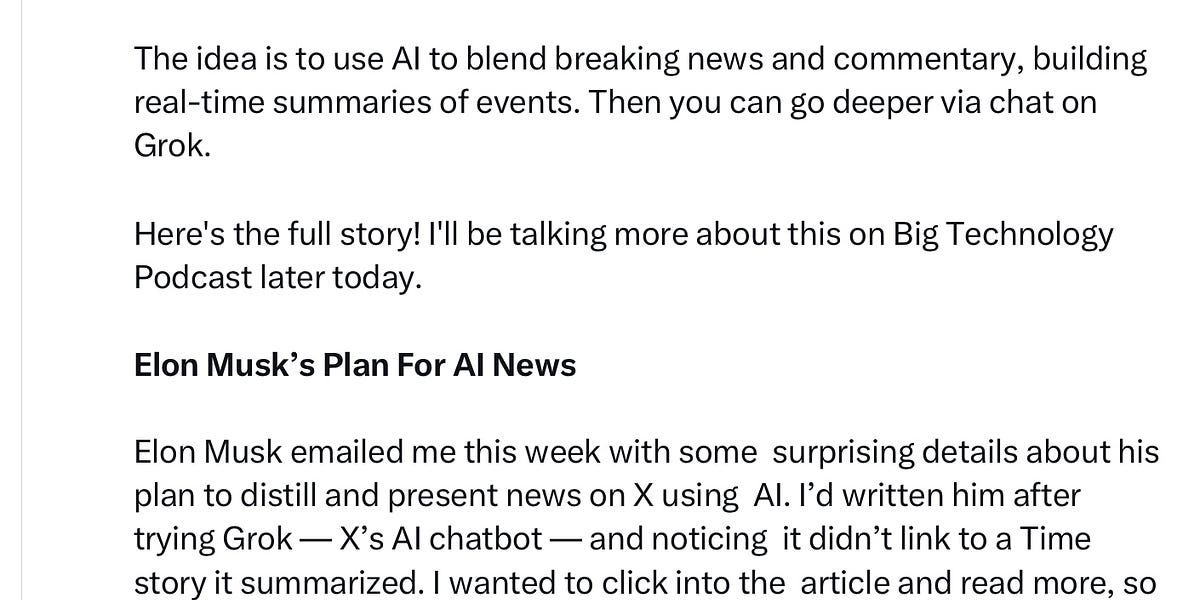

X's AI, Grok, is planning to avoid summarizing the news altogether. Instead, it'll create its own news stories from summaries of what people are tweeting about the news.

Maybe this is an effort to avoid lawsuits like those dogging OpenAI. Grok isn't summarizing copyrighted content! Or if it is, it's mixing it with non-copyrighted content it has full rights to: tweets.

Elon Musk’s Plan For AI News - by Alex Kantrowitz

Musk emails with details on AI-powered news inside X. An AI bot will summarize news and commentary, sometimes looking through tens of thousands of posts per story.

Anyone who's read through all the comments on a Twitter/X news story, ever, can probably see what a bad idea this is. But if you'd like a few talking points about why it's a bad idea to reconstruct news stories based solely on what people say in reaction to them, Gary Marcus has a handy definition of "epistemic clusterfuck" for you.

An epistemic clusterfuck in the making - by Gary Marcus

Social media was bad. Adding AI into the mix could easily get a lot worse.

Also, re the quality of those comments, Musk is doubling down on letting Nazis back onto ex-Twitter.

Nick Fuentes X Account Reinstated, Again

White supremacist Nick Fuentes criticized the Anti-Defamation League and engaged with controversial kickboxer Andrew Tate in his first day back on X.

Just so we're clear, this guy's opinions are revolting and deserve negative airtime. From the Texas Trib:

Fuentes, 25, often praises Adolf Hitler and questions whether the Holocaust happened. He has called for a “holy war” against Jews and compared the 6 million killed by the Nazis to cookies being baked in an oven. He wants the U.S. government under authoritarian, “Catholic Taliban rule,” and has been vocal about his disdain for women, Muslims, the LGBTQ+ community and others.

“All I want is revenge against my enemies and a total Aryan victory,” Fuentes said last year.

Wow, I can't wait to get all my news stories as the AI-regurgitated opinions of guys like him.

Talking to no one

A new app lets you pretend to be a really popular streamer, with an AI-generated audience that responds in realtime to whatever you're fake-streaming. It's narcissistic supply, on tap! Or, more creepily, it's a way for an unscrupulous jerk to briefly trick people they meet in real life into thinking they're famous.

Men Use Fake Livestream Apps With AI Audiences to Hit on Women

"I downloaded this app called Parallel Live which makes it look like you have tens of thousands of people watching. Instantly, I became the life of the party."

Chatbots slide fake reviews onto Reddit

This is mostly a problem for those of us who have come to rely on Reddit for spontaneous, unpaid reviews by actual humans. (Search tip that might be irrelevant soon: add "site:reddit.com" to your search string to find Reddit posts. Example: "fireplace insert review site:reddit.com")
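If you use that search tip a lot, it's easy to script. Here's a minimal sketch in Python; the function name `site_search_url` is made up for this example, and it assumes Google's usual `/search?q=` URL format and the `site:` operator from the tip above.

```python
from urllib.parse import urlencode


def site_search_url(query: str, site: str = "reddit.com") -> str:
    """Build a Google search URL limited to one site via the site: operator.

    Hypothetical helper for illustration; assumes the standard
    https://www.google.com/search?q=... URL format.
    """
    # Append the site: restriction to the plain search string.
    full_query = f"{query} site:{site}"
    # urlencode escapes spaces and the colon so the URL is safe to paste.
    return "https://www.google.com/search?" + urlencode({"q": full_query})


if __name__ == "__main__":
    print(site_search_url("fireplace insert review"))
```

Swap in a different `site` argument to restrict the search to any other domain.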

AI Is Poisoning Reddit

A market for manipulating Reddit using AI has emerged.

The reason Reddit is being targeted by LLM-powered chatbots is, of course, for Google search results. Google's search algorithm really loves Reddit for the same reason we humans do: it's mostly human-generated content (for now).

By the way, Where's Your Ed At has a really interesting read (and also very opinionated rant: you've been warned) on what happened to Google's search results, which were getting noticeably worse even before they added AI.

The Man Who Killed Google Search

Wanna listen to this story instead? Check out this week's Better Offline podcast, "The Man That Destroyed Google Search," available on Apple Podcasts, Spotify, and anywhere else you get your podcasts. This is the story of how Google Search died, and the people responsible for killing it. The story begins

Longread 1: AI is making Facebook a very weird place

Facebook has been getting weird(er) lately. Jason at 404 Media has been immersing himself in Facebook in order to plumb the depths of the AI-generated content that is, according to him, slowly taking over the platform.

As many people have pointed out, AI images on Facebook have gone from feeling “slightly off” but based on reality to being utterly deranged.

There's a lot of realistic AI-generated content, and then there are a lot of obviously-fake images that still somehow go viral, like these drowning, mutilated fake children or shrimp Jesus. Per Jason, the realistic content is getting comments from real people, and the obviously-fake content is getting comments from bots.

“Whether it's a child transforming into a water bottle cyborg, a three-armed flight attendant rescuing Tiger Jesus from a muddy plane crash, or a hybrid human-monkey baby being stung to death by giant hornets, all tend to have copy+pasted captions, reactions & comments which usually make no sense in the observed context. It's as if everything from the creation of the images, to the daily management of the accounts, to their fan base and interactions, has been automated to optimize whatever revenue stream this genre helps generate.”

What makes this flood of weirdness relevant is that Facebook is now using AI to push stuff from strangers into your feed, and a lot of the pushed content is the AI-generated stuff.

On Meta’s first quarter earnings call last week, Mark Zuckerberg said that “right now, about 30 percent of the posts on Facebook feed are delivered by our AI recommendation system. That’s up 2x over the last couple of years.”

That's a surprisingly high % of posts you never wanted to see (and may be why I feel like it's gotten even harder to keep up with my actual friends on FB). Since AI-generated content gets a lot of (fake) engagement, AI-generated content is getting pushed into a lot of feeds.

Worst of all, because real humans are becoming aware there's AI content being shoved into their feeds, some have started to assume that even genuine pictures are AI fakes. I think the low-quality stuff like Shrimp Jesus has made a lot of people aware of the prevalence of AI content in general, which in turn has made some people suspicious of everything that didn't come from their friends. As a Stanford University researcher quoted in the article says:

“I’m more concerned about people losing trust in their own ability to determine what’s real than I am about any one random image gaining traction.”

I don't know if I'm as concerned about that, actually: AI image generation is only getting better, and it's already hard to tell some high-quality AI images from photos. I know I've been fooled by a few AI-generated images of people. (If you want to test yourself, try the game above.)

The end result is that Facebook is quickly becoming a deeply weird place:

The platform has become something worse than bots talking to bots. It is bots talking to bots, bots talking to bots at the direction of humans, humans talking to humans, humans talking to bots, humans arguing about a fake thing made by a bot, humans talking to no one without knowing it, hijacked human accounts turned into bots, humans worried that the other humans they’re talking to are bots, hybrid human/bot accounts, the end of a shared reality...

Facebook Is the 'Zombie Internet'

Facebook is the zombie internet, where a mix of bots, humans, and accounts that were once humans but aren’t anymore interact to form a disastrous website where there is little social connection at all.

Driverless updates

The Tesla recall is under NHTSA scrutiny

The NHTSA is concerned that Tesla's software update may not fix the problem, and that drivers can opt out of it or roll it back. Also, Tesla is having massive layoffs.

Tesla’s 2 million car Autopilot recall is now under federal scrutiny | Ars Technica

NHTSA has tested the updated system and still has questions.

BlueCruise under investigation for fatal crashes

Ford's Level 2 driver assistance offering, BlueCruise, has a couple of safety features not built into Tesla's Autopilot.

- It monitors your eyes and won't let you take your eyes off the road.

- It's tightly geofenced and can only be used on a specific, pre-LIDAR-mapped set of cars-only, divided-lane highways.

Tesla's Autopilot is the same level of driver assist, Level 2, but has neither of these safeguards, which are also built into GM's Super Cruise.

Why are these safeguards important? Anecdotally, I have the impression that many Tesla owners aren't aware that "Autopilot" isn't autopilot. Sure, it says in the manual that they're supposed to be paying full attention at all times and only using it on highways, but it's called Autopilot and people use it like an actual autopilot -- which would be full Level 5 automation IIRC, and which it's not designed or certified to do. The safeguards on BlueCruise and Super Cruise keep drivers from trying to treat Level 2 driver assistance like a chauffeur.

Even with these safeguards, though, there have been a couple of fatal BlueCruise crashes at night; the NHTSA is investigating.

Ford BlueCruise driver assist under federal scrutiny following 2 deaths | Ars Technica

NHTSA has opened an investigation after two separate fatal crashes at night.

Riderless bicycle

Enjoy this riderless bicycle from CBC’s This Is That (a defunct comedy show).

Solutions

A small collection of proposed or possible solutions to some of the machine-learning issues that have popped up in past newsletters.

No finetuning, thank you

Fine-tuning a large language model (LLM) means taking an already-trained LLM, like Mistral-7B, and giving it more training data specific to whatever task you want it to do. So, for example, Google and Meta have fine-tuned their LLMs (Gemini and Llama 3, respectively) with medical data to create medical LLMs, which they're calling Med-Gemini and Meditron.

Med-Gemini and Meditron: Google and Meta present new LLMs for medicine

Google and Meta have introduced language models optimized for medical tasks based on their latest LLMs, Gemini and Llama 3. Both models are designed to support doctors and medical staff in a variety of tasks.

The flip side of fine-tuning is the ability to fine-tune a model to do things its makers very much want it not to do: e.g. to create harassing messages, scams, propaganda, election interference... or in the case of researchers working in China, to bring up Tiananmen Square. This week's Import AI makes a great point about how Meta's goals and Chinese researchers' goals align: both want to be able to release their models to the public without having them used in ways that will get them in a ton of trouble. Now, Chinese researchers may have figured out a way to train a model that will block specific fine-tunings. (Scroll down to Chinese researchers figure out how to openly release models that are hard to finetune.)

They do this by making the model training process involve a dual optimization process, where the goal is to "entrap the pre-trained model within a hard-to-escape local optimum regarding restricted domains".

Import AI 371: CCP vs Finetuning; why people are skeptical of AI policy; a synthesizer for a LLM

Welcome to Import AI, a newsletter about AI research. Import AI runs on lattes, ramen, and feedback from readers. If you’d like to support this (and comment on posts!) please subscribe. Why are people skeptical of AI safety policy? …A nice interview with the Alliance for the Future…

Lost in the middle

I mentioned last month that LLMs aren't good at summarizing long texts. One of the problems with summarizing is a tendency to ignore information from the middle of the text, focusing on the beginning and the end; this problem sometimes gets called the lost middle. Some researchers realized that the usual way we train LLMs--to focus on the end of the provided text for next-word generation, and on the beginning for the system prompt--might be introducing positional bias, and came up with a new training method that may mitigate it.

New AI training method mitigates the "lost-in-the-middle" problem that plagues LLMs

Researchers from Microsoft, Peking University, and Xi'an Jiaotong University have developed a new data-driven approach called INformation-INtensive (IN2) training that aims to solve the "lost-in-the-middle" problem in large language models (LLMs).
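To make the lost middle concrete, here's a minimal sketch of how you might set up a test for it yourself: bury one known fact at different depths in a long stretch of filler text, then ask a chatbot to retrieve it. This is not the IN2 training method from the article, just an illustrative probe; the function name `build_probe`, the filler sentences, and the "secret code" are all made up for the example, and the step of actually sending the prompt to an LLM is left out.

```python
def build_probe(filler: list[str], needle: str, depth: float) -> str:
    """Place one known fact (the needle) at a relative depth inside filler text.

    depth=0.0 puts the needle at the start, 0.5 in the middle, 1.0 at the end.
    Hypothetical helper for illustration only.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    # Convert the relative depth into an index in the filler list.
    position = round(depth * len(filler))
    sentences = filler[:position] + [needle] + filler[position:]
    return " ".join(sentences)


if __name__ == "__main__":
    filler = [f"Filler sentence number {i}." for i in range(10)]
    needle = "The secret code is 4217."
    for depth in (0.0, 0.5, 1.0):
        prompt = build_probe(filler, needle, depth)
        # Report how far into the prompt (by characters) the needle landed.
        print(depth, round(prompt.index(needle) / len(prompt), 2))
```

If a model reliably answers "What is the secret code?" at depths 0.0 and 1.0 but stumbles at 0.5, that's the positional bias the researchers are trying to train away.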

Jailbreaking

“Explicit training against strong attacks could enable the use of LLMs in safety-critical applications.” - sure, as long as hallucinations are okay...

OpenAI's new 'instruction hierarchy' could make AI models harder to fool

OpenAI researchers propose an instruction hierarchy for AI language models. It is intended to reduce vulnerability to prompt injection attacks and jailbreaks. Initial results are promising.

Working around hallucinations

I was pleasantly surprised to see McKinsey offering some solid advice to businesses that want to implement AI on how to work around the problem of hallucinations. Scroll down to "Build trust and reusability to drive adoption and scale." The two key points:

- It’s important to invest extra time and money to build trust by ensuring model accuracy and making it easy to check answers.

- A set of tests for AI/gen AI solutions should be established to demonstrate that data privacy, debiasing, and intellectual property protection are respected.

These "shoulds" are doing a lot of heavy lifting here. Neither of these is fast or easy, and actually ensuring accuracy, data privacy, debiasing and IP protection may be impossible.

A generative AI reset: Rewiring to turn potential into value in 2024 | McKinsey

The generative AI payoff may only come when companies do deeper organizational surgery on their business.

Longreads

One, two, three wonderful longreads!

Longread #1 is about Facebook's enweirdening, above.

Longread 2. LLMs, how do they even work?

Large language models can do jaw-dropping things. But nobody knows exactly why. | MIT Technology Review

And that's a problem. Figuring it out is one of the biggest scientific puzzles of our time and a crucial step towards controlling more powerful future models.

Longread 3. Gary Marcus on Sam Altman
