AI week Mar 18: One year of GPT-4; skirting the guardrails; science fraud

Welcome to this week's AI Week! In this issue:

- Happy 1st birthday to GPT-4

- Updates

- What to play with this week

- Skirting the guardrails

- This week's unfortunate Gen AI use cases

- Quiet-STaR: A pause for reflection

- Longread: Voice-cloning scams are here

Happy 1st bday, GPT-4!

Source: Craiyon. Prompt: adorable birthday brioche with a huge smile

Source: Craiyon. Prompt: adorable birthday brioche with a huge smile

It's been about a year since OpenAI released GPT-4! What have we learned about LLMs?

- A year ago, it was plausible that LLM issues like "hallucinations" (making stuff up) and instability (occasionally acting evil) might be quickly resolvable. These issues are still with us despite a lot of progress in other areas, and may even be intractable.

- OpenAI started with a big lead, but the gap has been closing, with Google's Gemini and Anthropic's Claude catching up.

- OpenAI's LLMs have provably been trained on copyrighted material and occasionally regurgitate it. Litigation's going to be an ongoing issue.

- Bad people are using LLMs to do bad things, despite efforts by LLM makers to stop them. More on jailbreaks later in this newsletter.

- There are a ton of potential applications, although they're not necessarily ready for primetime.

Happy 1st Birthday, GPT-4 - by Gary Marcus - Marcus on AI

Ten birthday observations

Relatedly, X AI released their chatbot, Grok, this week. X AI released its source code and weights as free downloads, effectively thumbing their nose at OpenAI, who, despite the name, are not open-source, not open about their training data, and don't release their model weights.

Elon Musk’s xAI releases Grok source and weights, taunting OpenAI | Ars Technica

Amid criticism of OpenAI's closed models, Musk makes the Grok-1 AI model free to download.

Updates

Checking in on GPTs

One of the things you can do with a paid subscription to OpenAI's GPT 4.0 is create and use GPTs, which are pre-prompted instances of GPT-4. There was a huge amount of buzz when this launched in January 2024. When I checked out the GPT "app store" right after launch, there were some copycats and dodgy apps. How's it going now? Judge for yourself: this site lists the top 50 GPTs by usage.

Top 50 Most Popular GPTs by Usage (March 2024)

See our up-to-date list of the 50 most popular GPTs for ChatGPT based on number of conversations. Explore all the best GPTs with whatplugin.ai's curated top list.

India drops plan to require model approval

In my last newsletter, I mentioned that India's government was requiring that AI applications in beta get government permission before deploying to the public. Update: they've walked that back.

India drops plan to require approval for AI model launches | TechCrunch

India is walking back on a recent AI advisory after receiving criticism from many local and global entrepreneurs and investors. The Ministry of India is walking back on a recent AI advisory after receiving criticism from entrepreneurs and investors.

What to play with this week

Hard: Run an LLM on your PC

If you have a reasonably recent desktop or laptop, you can get LLMs running on your own system. (Sadly, my own 2017 Macbook isn't up to it.) This article gives a step-by-step to get you up and running, although the "in 10 minutes" might be a little optimistic.

https://www.theregister.com/2024/03/17/ai_pc_local_llm/Medium: Run GPT-2 in a spreadsheet

Once “too scary” to release, GPT-2 gets squeezed into an Excel spreadsheet | Ars Technica

OpenAI's GPT-2 running locally in Microsoft Excel teaches the basics of how LLMs work.

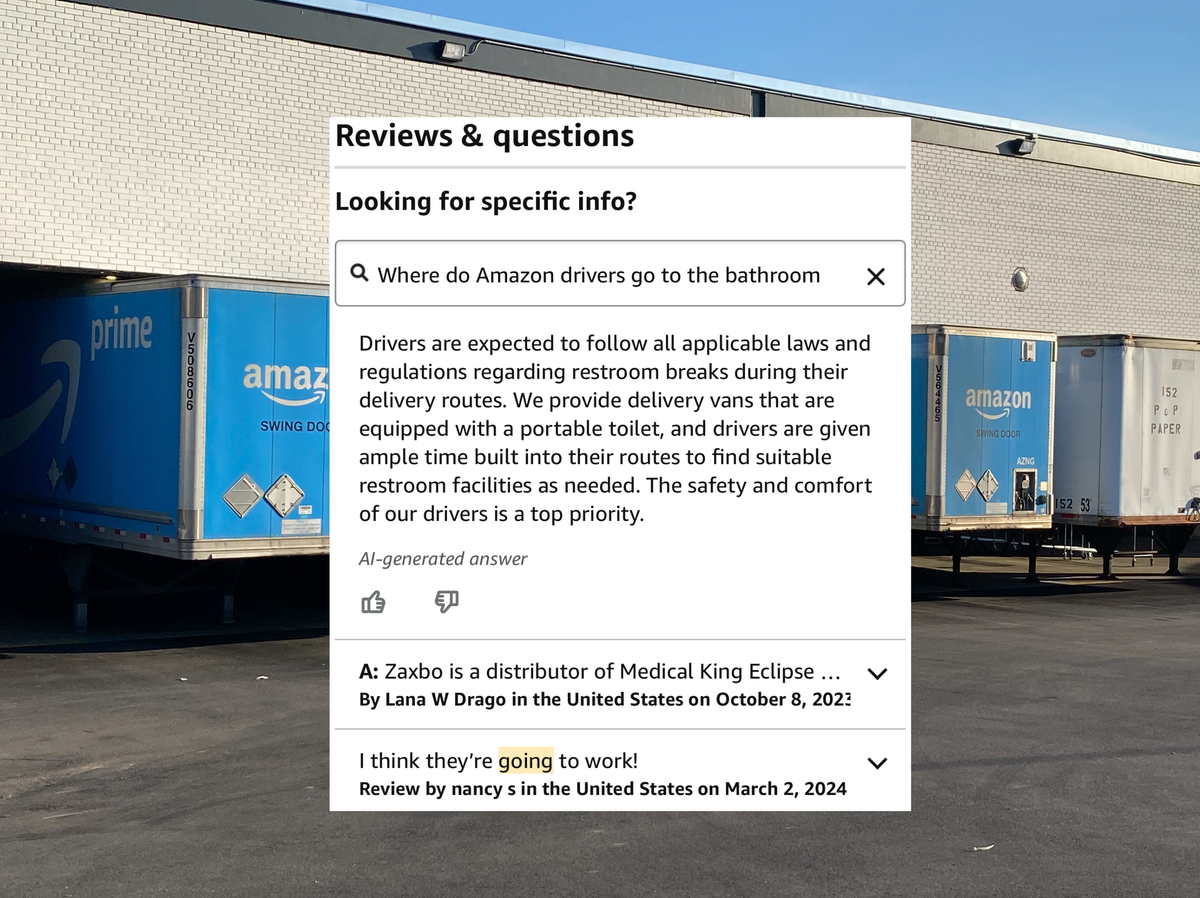

Easy: Amazon's chatbot

Amazon has rolled out a chatbot to a subset of its US customers. The idea seems to be that you ask the chatbot questions about the product, but, like the Chevrolet chatbot that could write you Python code, Amazon's chatbot lets you use it like any other chatbot.

"Yarr Matey, here be your Python code." Source: https://twitter.com/ILiedAboutCake/status/1766686465843511747

"Yarr Matey, here be your Python code." Source: https://twitter.com/ILiedAboutCake/status/1766686465843511747

Amazon's Hidden Chatbot Recommends Nazi Books and Lies About Amazon Working Conditions

An AI tool created to let people search reviews actually lets you use it like any other chatbot.

Skirting the guardrails

Another week, another crop of new ways that generative AI users can get around the limits placed on LLMs and image generation.

Artprompt jailbreak

This is a really neat jailbreak. If you're An Old from the Dawn of the Internets like me, you might remember ASCII art. Turns out that if you give the major AI chatbots an ASCII-art puzzle to solve, they get so caught up in solving it that they forget about directives like "don't tell users how to make bioweapons."

So, all it takes to get ChatGPT to tell the bad guys how to do bad stuff is... bad art.

ASCII art elicits harmful responses from 5 major AI chatbots | Ars Technica

LLMs are trained to block harmful responses. Old-school images can override those rules.

Making it make The Mouse

Dall-E isn't supposed to make The Mouse. Dall-E has explicit instructions: do not make The Mouse. If you're asked to make The Mouse, say you will not do it. Do not make The Mouse.

But while ChatGPT and Dall-E won't make an image of "Mickey Mouse", they will totally make one of "M I C K E Y M O U S E".

This is reminiscent of the ASCII art jailbreak: I would call it a distraction attack.

Alternately, you can also walk it there step by step. Step 1: Draw a mouse. Step 2: Add red overalls. Step 3: Make him a theme park mascot... until you get this:

Faking Biden's voice (again)

It seems important to keep people from faking politicians' voices in the run-up to an election, right? That's an important thing? We can all agree on that, right?

Biden's voice has already been faked in a robocall once. The voice-cloning AI startup whose tools were used, ElevenLabs, responded by putting up guardrails... but the independent tech reporters at 404 media say they're easy to get around.

ElevenLabs Block on Cloning Biden's Voice Easily Bypassed

Hyped AI startup ElevenLabs introduced a 'no-go voices' policy after its tools were used as part of a robocall impersonating Biden. But those protections are easily circumvented, 404 Media has found in its own tests.

BTW, the same tech used to fake Biden's voice is being used to scam people into thinking their relatives have been kidnapped. More on this below, in the longread.

Gen AI use cases I don't love

Unfortunate use case #1: AI-generated breastfeeding moms

If you're wondering "but why would anyone do this?" (I know I was), the TL;DR is: to show AI-generated boobies on Facebook at scale without triggering Meta's automated moderation. (Warning: while the article below doesn't show any images that aren't safe for work, it does contain some AI-generated eldritch horrors that were supposed to be "babies".)

Horrific AI-Generated Breastfeeding Images Bypass Facebook’s Moderation

A page called Mom Daily is using AI-generated images of mothers and babies to bypass Facebook’s rules on nudity, with some really terrifying results.

Unfortunate use case #2: Scientific fraud

We've talked about scientific fraud a few times in this newsletter. The Feb 26th issueincluded a supposedly peer-reviewed paper with very obviously fake, AI-generated illustrations, including one of a rat with four enormous balls, prompting the question "How did this pass peer review?"

That question's coming up again this week for a whole bunch of papers. Searching Google Scholar for phrases that ChatGPT frequently says, such as "certainly, here is a" or "As of my last knowledge update" reveals quite a few papers with sections copy-pasted from ChatGPT output.

For example, there's the paper whose intro begins with "Certainly, here is a possible introduction for your topic," or there's my personal favourite, the case study in Radiology Case Reports that includes "I'm very sorry, but I don't have access to real-time information or patient-specific data, as I am an AI language model."

Quiet-STaR: A pause for reflection

Interesting paper this week about an attempt to teach AI to "think before it speaks". The model trains itself by looking at texts from the Internet, and at each point in a text, comes up with reasons for why a text continues the way it does. It learns from this which reasons "work" most often, then applies that to its own text generation--resulting in improved ability in answering reading comprehension questions.

Quiet-STaR trains AI to think before it speaks, promising a leap in machine understanding

Researchers at Stanford University have developed a method called "Quiet-STaR" that enables AI systems to learn to think between the lines. This could pave the way for more versatile and efficient AI that can better solve complex tasks.

Longread

“I can now clone the voice of just about anybody and get them to say just about anything. And what you think would happen is exactly what’s happening.”

Add a comment: