AI Week Mar 12: Why we don't trust AI; the Iron Triangle; On meaning

Welcome to this week's AI Week! I'm glad to have you with me. It's spring break here in Ottawa, and the beginning of DST in North America (yes, already... yes, it's dumb).

In this week's newsletter:

- AI + whalesong = understanding (someday)?

- Why we don't trust AI

- The Iron Triangle

- News: new LLMs; music's "ChatGPT moment"

- Longread: The importance of meaning

But first, a fascinating, meaningless image:

A generative AI produced this lion-faced orchid when prompted with the nonsense string "elecaxjslz."

Followup: Using AI on whalesong

A few months ago, I wrote about efforts to begin parsing whalesong using machine learning (ML). "Begin" is the right word: the goal of the paper in question was to identify pieces ("codas") of sperm whale songs that might act like vowels. Now, The Atlantic has a longer piece on the project of decoding sperm whales' song, and what we would say to them if we could:

How to Talk to Whales - The Atlantic

If we can learn to speak their language, what should we say?

Why we don't trust AI

Axios reported this week on a PR firm's non-peer-reviewed findings that public trust in AI is dropping. Trust in AI companies is down, particularly in the developed world; according to Edelman, who conducted the poll, the public would like to see government regulation of AI.

Source: https://www.edelman.com/trust/2024/trust-barometer

In the spirit of this finding, this week offered up an assortment of stories about why we don't trust AI.

1. Why we don't trust OpenAI

OpenAI’s “Own Goal” - by Gary Marcus

And why it is becoming increasingly difficult to take them at their word

2. Thanks to AI, 12-year-olds have the power to virtually strip their classmates

Look, I used to be 12, so I know that 12-year-olds can be really, really dumb. We all know this. Did anyone think that a world where 12-year-olds can access nudify apps was going to be a good thing? Yet here we are.

In the US, AOC has a plan to address deepfake porn by enabling lawsuits against people who use, distribute, or view nonconsensual deepfakes. Maybe it goes without saying, but this won't deter 12-year-olds, who won't know about the law, don't understand how the legal system works, and definitely haven't told their parents about the app they just downloaded.

I have a kid who'll be going into middle school soon. I sat him down for a convo about nudify apps. It wasn't how I wanted to spend a Friday night, but, again, here we are.

3. Who is selling your data to train AI?

Turns out, the places we've trusted with our musings and conversations over the last decade can't be trusted not to feed them to the AI mill.

Reddit, Tumblr, Wordpress: The deals that will sell your data to train AI models - Vox

Those Tumblr, Reddit, and WordPress posts you never thought would see the light of day? Yep, them too.

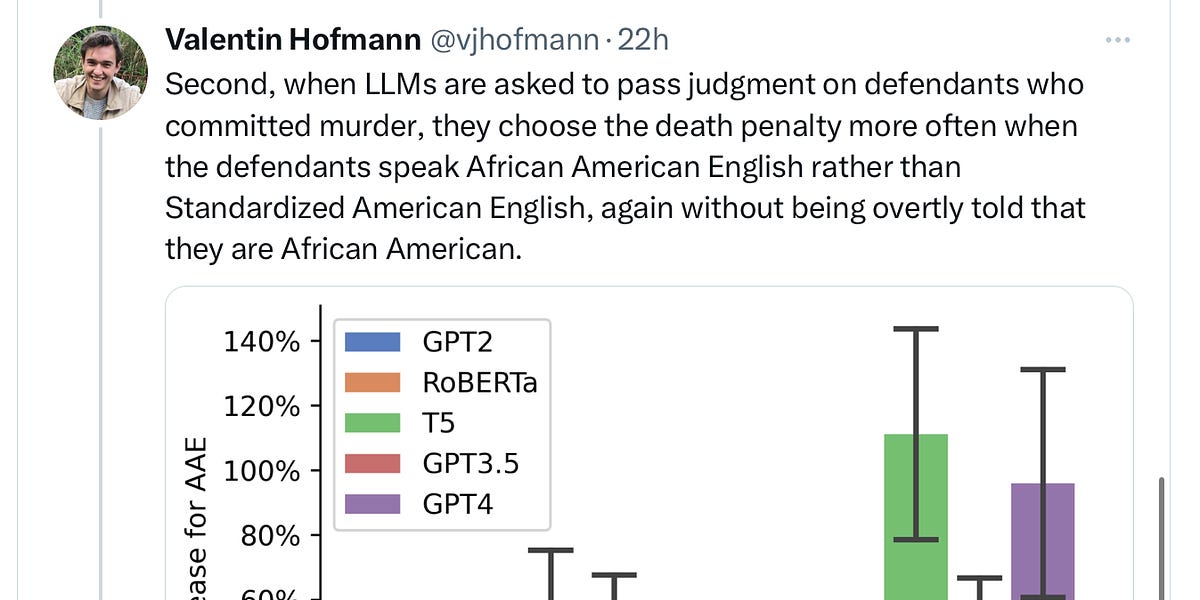

4. Deep-seated racism in LLMs

Recent research finds that LLMs like ChatGPT have implicit bias.

Covert racism in LLMs - by Gary Marcus - Marcus on AI

Shocking new paper with potentially serious implications

5. India's government has lost trust in beta AIs

... ever since Google's AI, Gemini, agreed that Narendra Modi was a fascist. Now, India's government is requiring that AI applications in beta get government permission before deploying to the public.

https://www.theregister.com/2024/03/04/india_ai_permission/

6. The AI Incident Database

This newsletter has only been able to scratch the surface of the harms done by AI deployments. I'm glad to see that someone has gotten organized and started a database.

The AI Incident Database is dedicated to indexing the collective history of harms or near harms realized in the real world by the deployment of artificial intelligence systems. Like similar databases in aviation and computer security, the AI Incident Database aims to learn from experience so we can prevent or mitigate bad outcomes. You are invited to submit incident reports, whereupon submissions will be indexed and made discoverable to the world. Artificial intelligence will only be a benefit to people and society if we collectively record and learn from its failings.

Here's the AI Incident Roundup for February alone: https://incidentdatabase.ai/blog/incident-report-2024-february/

A thought: the Iron Triangle

(Photo by Jorge Salvador on Unsplash)

“Better, faster, cheaper: pick two” - this is known as the Iron Triangle, and it's a well-known adage in software engineering, aerospace, and assorted other industries. (One blogger traces the saying back to print shops in the 1980s, but notes it probably appeared earlier.)

The Iron Triangle currently applies to the things AI does. In art, coding, and writing, AI is faster and cheaper than humans (especially when it's heavily subsidized by venture capital and/or large corporations). But it's not better.

Unfortunately, "faster and cheaper" is destroying the livelihoods of the people who can do the "better" (authors, artists). It's not only replacing their work product with something that is worse, it's pushing them out of the business.

Why is that a problem for anyone other than artists and authors? Well... In Ottawa, where I live, the city laid off a substantial proportion of its bus drivers when it opened a new light rail line. The light rail line had enormous problems, and the city had to bring back some buses. But the city couldn't hire all the drivers it needed. The laid-off drivers had moved on.

Writing well isn't easy. Creating high-quality digital art isn't something you can pick up overnight. When our authors and artists move on, who will be left when we need words or images that aren't faster and cheaper, but better?

News

New LLM releases

Pat McGuinness's newsletter offers a nice summary of new LLM releases this week:

Two big AI model releases this week - Claude 3 Opus and Inflection 2.5 - mean that we now have 5 competitive GPT-4-class AI models to choose from.

AI Week In Review 24.03.09 - by Patrick McGuinness

Inflection 2.5, Claude 3 Opus, Sonnet, Haiku, Suno V3, LTX Studio, WildBench, OpenRouter, ArtPrompt, StableDiffusion 3 paper, OpenAI rebuilds its board and fires back at Elon.

Is AI music going through a "ChatGPT moment"?

A lot of people on Twitter got really excited about a clip someone generated with suno.ai's v3 beta. (Suno is partnered with MS Copilot.) The clip featured silly lyrics (I think user-supplied) and some surprisingly humanish vocals, including a long sustained note with what, if it had been sung by a human, we might interpret as lots of emotion.

AI has come for music. So far, there's a Great Big Sea between people and algorithms | CBC News

Bob Hallett says so far, generative artificial intelligence tools haven't been able to mimic the unique tunes Great Big Sea is famous for. But others warn that the quality could increase quickly.

Longread: The importance of meaning

This is a really long read, but a vital one. TL;DR: Deep meaning requires both a communicator and someone who's receiving the communication. Deep meaning is created when two minds interact with each other. The text is not the goal; the communication between two minds is.

When you create something with the assistance of an AI – when you generate an image or compose an essay or write some code by prompting an AI to do it – you are fundamentally changing the meaning that it has for you.... You are now alienated from your own creation in a really profound way.

Last week, I generated some fascinating images with AI using the prompt "elecaxjslz." That was it; that was the whole prompt. I was really pleased with some of the images: a skull with horns and four-and-a-half legs; a fox-headed bird; the lion-faced orchid above. But there's no sense in which I created them. My prompt, "elecaxjslz.", expressed zero intention, zero thoughts, zero feelings. There is no meaning behind these images. They communicate nothing.

Three interpretations of "elecaxjslz." by FastSDXL.ai

In writing this, however, I am communicating with you about these images. If I prompted an LLM with something like "Write a paragraph about the importance of meaning in communication" and pasted the result here, then all I would really be intending to communicate is "I have something to say". And even that would be a lie.

I feel like the importance of meaning is a vital piece of the conversation around AI, and one that's largely neglected. I'm glad to see this blog post touching on it. Big recommend.