AI week Jul 27: Why AI lies, and other stories

3 cool things we've learned thanks to AI; why AI lies; ChatGPT's lack of legal privilege; and more

Hi! Welcome to this week's AI Week.

The White House's AI action plan dropped this week. It's a deregulatory gift to the AI industry, which got essentially everything on its wishlist.

Read on for 3 cool things we've learned thanks to AI, followed by:

- Why AI chatbots "lie"

- ChatGPT has no legal privilege

- Something fun

- Longread: The Hater's Guide to the AI Bubble

3 Cool Things We've Learned With AI

1. ML at Yellowstone

Machine learning has helped us detect thousands of earthquakes swarming beneath Yellowstone:

AI uncovers 86,000 hidden earthquakes beneath Yellowstone’s surface | ScienceDaily

Beneath Yellowstone’s stunning surface lies a hyperactive seismic world, now better understood thanks to machine learning. Researchers have uncovered over 86,000 earthquakes—10 times more than previously known—revealing chaotic swarms moving along rough, young fault lines. With these new insights, we’re getting closer to decoding Earth’s volcanic heartbeat and improving how we predict and manage volcanic and geothermal hazards.

2. Assembling a lost Babylonian hymn

Babylonian text missing for 1,000 years deciphered with AI | Popular Science

The ‘Hymn to Babylon’ praises the ancient city.

The lines below are from a newly discovered hymn, describing the river Euphrates. The city was located on the riverbanks at the time.

The Euphrates is her river—established by wise lord Nudimmud—

It quenches the lea, saturates the canebrake,

Disgorges its waters into lagoon and sea,

Its fields burgeon with herbs and flowers,

Its meadows, in brilliant bloom, sprout barley,

From which, gathered, sheaves are stacked,

Herds and flocks lie on verdant pastures,

Wealth and splendor—what befit mankind—

Are bestowed, multiplied, and regally granted.

3. Contextualizing Roman inscriptions

Google develops AI tool that fills missing words in Roman inscriptions | Science | The Guardian

Program Aeneas, which predicts where and when Latin texts were made, called ‘transformative’ by historians

Although the "fill in missing words" aspect is in beta, the tool is providing context for inscriptions by finding linguistically similar ones.

In another test, Aeneas analysed inscriptions on a votive altar from Mogontiacum, now Mainz in Germany, and revealed through subtle linguistic similarities how it had been influenced by an older votive altar in the region. “Those were jaw-dropping moments for us,” said Sommerschield.

Why AI chatbots "lie"

I mentioned last week that the FDA has started using an AI system codenamed "Elsa". Update: Apparently Elsa is as prone to hallucinations as any other LLM.

FDA is using an AI system that staff say frequently invents or misrepresents drug research

The US Food and Drug Administration is relying on Elsa, a generative AI system, to help evaluate new drugs - even though, according to insiders, it regularly fabricates studies.

Why do those hallucinations happen? Why do LLMs fabricate data and information? The following article has some thoughts.

https://www.science.org/doi/10.1126/science.aea3922

TL;DR: "They are most likely a result of two factors: AI models’ pretraining, which induces them to “role-play,” and the special posttraining that these models receive from human feedback."

User manual: ChatGPT has no legal privilege

OpenAI CEO Sam Altman reminded everyone this week that conversations with ChatGPT aren't confidential. This became obvious when the NYT won a court order to disclose chat histories. This discussion on LinkedIn points out that there isn't necessarily any attorney-client privilege with ChatGPT Law either, and lays out some potential legal consequences.

Even using an off-prem AI transcription assistant could potentially pose problems for attorney-client privilege. Unfortunately, according to Andrew Yang, at least one prominent law firm is having AI do the work of junior lawyers:

A partner at a prominent law firm told me “AI is now doing work that used to be done by 1st to 3rd year associates. AI can generate a motion in an hour that might take an associate a week. And the work is better. Someone should tell the folks applying to law school right now.”

— Andrew Yang🧢⬆️🇺🇸 (@AndrewYang) July 26, 2025

And I'll just leave this here for your amusement:

Partner Who Wrote About AI Ethics, Fired For Citing Fake AI Cases

Something fun

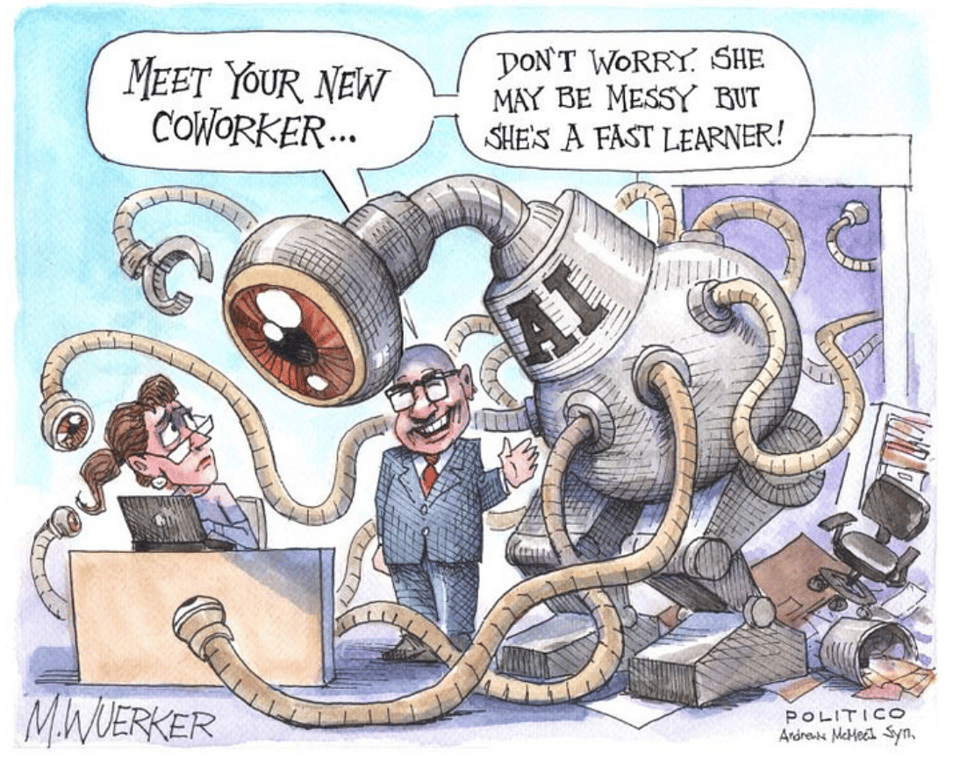

Context: Owner Axel Springer mandated AI use at Politico and Business Insider, and Politico staffers are not happy. No wonder: the company has not only mandated AI use, but also made staffers responsible for any mistakes the AI makes.

Relatedly, one in six US workers pretends to use AI in order to please management. Politico staffers, take note.

Longread: The Hater's Guide to the AI Bubble

AI can be a useful tool, if you don't mind that it's partly trained on pirated material & fully trained without buying training rights or even asking consent, and can work around the occasional plagiarism and hallucination. But is that tool overvalued? Ed Zitron, my favourite spicy AI contrarian, has a rant that says "yes".
