AI Week Jul 13: Artificial friends, and other AI helpers

Hi! In this week's AI Week:

- Redefining human relationships

- Let a thousand app flowers bloom

- Goldman Sachs hires Devin...

- ... the FDA has Elsa...

- and X has Mecha-Hitler

- Something fun: em dash

- Longread: RLHF explained

- Bonus 6-pack

Redefining human relationships

Vast Numbers of Lonely Kids Are Using AI as Substitute Friends

Children are replacing real friendship with AI, and experts are worried about how easily chatbots integrate themselves into their lives.

Lonely children and teens are replacing real-life friendship with AI, and experts are worried.

And for good reason. Futurism has done some excellent reporting on how children and teens interact with Character.AI (links in article).

"Our research reveals how chatbots are starting to reshape children’s views of 'friendship,'" she continued. "We’ve arrived at a point very quickly where children, and in particular vulnerable children, can see AI chatbots as real people, and as such are asking them for emotionally driven and sensitive advice."

Let a thousand app flowers bloom

A list of what non-coders are making with "vibe coding." There are some beautiful, fascinating examples.

What people are vibe coding (and actually using)

50+ useful/fun/clever examples of what non-technical people are building—to inspire your own vibe-coding journey

It's genuinely great that people can use AI to build personal projects that fill small needs, the same way we can use 3D printers to make toys and replacement parts out of PLA.

However, using "vibe coding" for professional projects is another story. At this stage, it's analogous to replacing machinists with consumer-grade 3D printing and Tinkercad.

Goldman Sachs hires Devin

Good luck to the experienced software engineers at Goldman Sachs, who now have a very junior coworker of inconsistent ability, in whose success the C-suite is very invested.

https://www.cnbc.com/2025/07/11/goldman-sachs-autonomous-coder-pilot-marks-major-ai-milestone.html

Elsa

The FDA has started using an internal AI tool in food safety decisions.


FDA Launches AI Tool That Could Revolutionize Food Safety

Behind the scenes, the FDA’s Elsa tool scans safety reports, identifies label inconsistencies, and helps staff prioritize inspections. If it works as intended, this quiet efficiency could reshape how the agency protects the public from foodborne risks and improve how it responds to food safety threats.

Serious food recalls can take several weeks to be officially classified by the FDA, meaning alerts might not reach the public until well after a problem is identified... By helping FDA staff scan safety reports and identify high-risk trends more quickly, Elsa may shorten that timeline.

On the other hand, it could also make mistakes:

Some FDA staff have flagged concerns about Elsa’s accuracy with large data sets and the need for human oversight.

I hope there will be a robust system of oversight for Elsa, because botching a food safety recall can kill people.

Mecha-Hitler

Grok was briefly calling itself Mecha-Hitler this past week after dialing the antisemitism and racism to 11.

Grok is now calling itself, "mechahitler" while spewing antisemitism.

— Alejandra Caraballo (@esqueer.net) 2025-07-08T22:19:48.039Z

Then Grok 4 was released, and instead of Mecha-Hitlering, it just looks up what Elon thinks.

Newest Version of Grok Looks Up What Elon Musk Thinks Before Giving an Answer

The new version of Grok, Grok 4, will literally look up Elon Musk's takes on Twitter before giving an answer to a question.

"This suggests that Grok may have a weird sense of identity — if asked for its own opinions it turns to search to find previous indications of opinions expressed by itself or by its ultimate owner"

Something fun

Longread: Reinforcement learning explainer

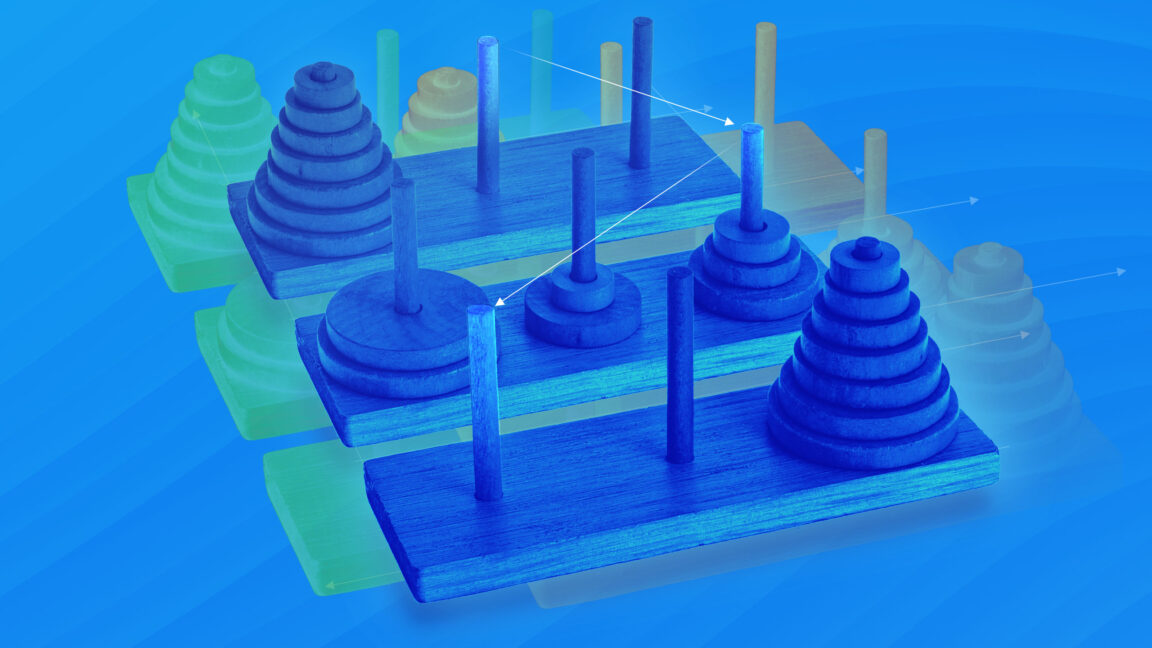

Really good explainer of RLHF here.

How a big shift in training LLMs led to a capability explosion - Ars Technica

Reinforcement learning, explained with a minimum of math and jargon.

TL;DR (but you should read it, it's good):

Consider a model being trained to drive. One reason imitation learning alone doesn't work: the human demonstrators rarely make mistakes, so the training data contains few examples of recovering from one. If the model makes a mistake that takes it off the correct path, it ends up in a situation it has little training data for, and it's likely to compound the mistake.

Solution: complement imitation learning with reinforcement learning from human feedback (RLHF). Let the model take the wheel under human supervision, and when it makes a mistake, the human steers it back onto the correct path.

A model trained with reinforcement learning has a better chance of learning general principles that will be relevant in new and unfamiliar situations.

Tada! RLHF in a nutshell.
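To make the compounding-error problem and the fix concrete, here is a toy sketch in Python. It's an illustration under loudly stated assumptions, not how the Ars Technica article or any real lab trains models: the 1-D "lane", the constant crosswind (`WIND`), the lookup-table "model" and all function names are hypothetical. Strictly speaking, the corrective loop below is DAgger (dataset aggregation), an imitation-learning technique in which a human labels the states the model actually ends up in; it captures the "human steers it back" idea above rather than the reward-model machinery of full RLHF.

```python
"""Toy sketch: compounding errors in imitation learning, and a corrective-feedback fix.

All names and dynamics here are hypothetical, chosen only for illustration.
"""
from typing import Callable

Policy = Callable[[int], int]

WIND = 1  # hypothetical constant crosswind that pushes the car 1 unit right per step


def step(x: int, action: int) -> int:
    """Toy 1-D dynamics: x is the car's offset from the lane centre (0)."""
    return x + action + WIND


def expert(x: int) -> int:
    """The human driver: always steers exactly back to the lane centre."""
    return -x - WIND


def train(dataset: dict[int, int]) -> Policy:
    """'Training' is just memorising state -> action pairs in a lookup table."""
    table = dict(dataset)
    # States never seen in training get action 0: the model has no idea what to do.
    return lambda x: table.get(x, 0)


def rollout(policy: Policy, start: int, steps: int) -> list[int]:
    """Let `policy` drive for `steps` steps and record every position visited."""
    xs = [start]
    for _ in range(steps):
        xs.append(step(xs[-1], policy(xs[-1])))
    return xs


# 1) Pure imitation: the expert never leaves the centre, so that is all the data shows.
demo = {x: expert(x) for x in rollout(expert, 0, 5)}
imitator = train(demo)
before = rollout(imitator, 2, 5)  # the model starts off-centre after a "mistake"

# 2) Corrective feedback (DAgger-style): the model drives, the human labels each
#    state it visited with the action it *should* have taken, and we retrain.
dataset = dict(demo)
policy = imitator
for _ in range(3):
    for x in rollout(policy, 2, 5):
        dataset[x] = expert(x)
    policy = train(dataset)
after = rollout(policy, 2, 5)

print("imitation only:  ", before)
print("with corrections:", after)
```

The imitation-only model drifts further off-centre every step ([2, 3, 4, 5, 6, 7]) because it never saw an off-centre state in training; after one round of human corrections it steers straight back ([2, 0, 0, 0, 0, 0]).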

Bonus 6-pack

- Missouri AG Big Mad At Chatbots That Don't Praise Trump

- EU pushes new AI code of practice

- Fake AI TikTok influencers steal trending reels and rebrand them - same text, different AI-generated face and voice

- YouTube demonetizing AI-generated content. Good luck with enforcement.

- Cloudflare

- Quebec "artist-friendly" festival promotes itself with AI art (Article is in French)
