AI week Jan 15th: Zombie George Carlin, shiny CES, and shady GPTs

In this week's AI Week:

- Where's Waldo?

- Zombie George Carlin

- A longread and a free read: Content moderation

- "I apologize, but I cannot complete this task"

- Cool AI stuff from CES (and elsewhere)

- OpenAI once again gets its own section:

  - ChatGPT's app store opens (with copycats & dodgy apps)

  - OpenAI: It's impossible not to steal your IP!

  - Could OpenAI's nonprofit be forced to dissolve?

This week's AI Week is about a 15-minute read. Enjoy!

Where is Waldo? Where could he be?


Where's Waldo?

Today's fun AI image is ChatGPT's attempt at a "Where's Waldo?" picture:

Source: https://www.reddit.com/r/ChatGPT/comments/18z9a0j/where_ever_could_waldo_be/

Zoom in for Boschian horrors:

Zombie George Carlin is unlicensed

A comedy podcast is literally putting words in George Carlin's dead mouth, and his daughter Kelly Carlin is furious.

George Carlin’s daughter denounces new AI imitation comedy special - Polygon

Carlin’s estate did not give permission for the hour-long special generated off his face and voice.

I listened to most of the set on YouTube (with my ad blocker on: not interested in monetizing zombies). It's very sweary! Some of it is close-ish to the George Carlin I remember, and some of it I had a hard time believing Carlin would ever have said. (Zombie George Carlin pushes the idea that Democrats and Republicans are the same. The actual George Carlin was fiercely pro-choice.) The set sounds like it was written by human comedians with their own axes to grind, then cast in George Carlin's voice by generative AI.

The set opens with a peppy AI voice claiming that this is an "impersonation" that falls in the same category as Elvis impersonators. But Elvis impersonators don't make up entirely new songs and sing them in his voice. They sing his songs in their voice. They're not the same.

Verdict: A Carlin-ish comedy set written by a comedian lacking the courage to perform it in their own voice.

The audio is accompanied by AI-generated images that really showcase image-generation AI's difficulties with text:

(plus this one which is just awesome)

RELATED: "No AI FRAUD" Act; SAG-AFTRA voice licensing agreement

On Wednesday, lawmakers in the U.S. House introduced the "No AI FRAUD" Act (No Artificial Intelligence Fake Replicas And Unauthorized Duplications Act) in an attempt to establish legal protection against using someone else's voice or likeness without their permission. Too late for Zombie George Carlin, although his likeness might be protected by "right of publicity" laws.

https://petapixel.com/2023/10/16/no-fakes-act-seeks-to-ban-unauthorized-ai-generated-likenesses/

And on Tuesday, SAG-AFTRA, a union that represents American actors, announced an agreement at the Consumer Electronics Show (CES; we're definitely getting to more CES news in a minute) that will let union members license their voices for use in video games:

https://www.cnet.com/tech/this-ai-deal-will-let-actors-license-digital-voice-replicas/

Relevant bit for Zombie George, or at least his estate:

Crabtree-Ireland also said he saw no reason why the estates of dead performers could not agree to the use of those voices under the new licensing agreement.

Did someone forward you this newsletter? Subscribe here.

A longread and a free read: Two stories about content moderation

This week's longread is a story about content moderators backstopping AI. AI can't yet reliably identify all the toxic images and videos (think: sexual abuse, torture, violence, beheadings) that litter Meta's social media, so Meta outsources much of this work to Sama, a contractor whose moderators work out of a hub in Nairobi, Kenya. And it's hard on them.

Ranta was one of dozens of young Africans recruited from across the continent to work in Sama’s Nairobi hub. This army of moderators would help filter some of the internet’s most distressing content, the sewage that gushes daily through our digital pipes, unseen by almost everyone. For their work inspecting the worst of the effluence, they would be paid around $2.20 an hour, after tax, a wage Sama says was good by Kenyan standards.

http://www.ft.com/content/ef42e78f-e578-450b-9e43-36fbd1e20d01

Content moderation is a terrible job: imagine watching the worst of the worst, all day, every day. It's the focus of my 2019 short story for Techdirt editor Mike Masnick, "The Auditor and the Exorcist". You can read it for free on my website or download it as an ebook.

| CORRECTION: |
|---|
| Last week's longread was a post by blogger and programmer Alex Bolenok, who writes and posts as Quassnoi, in which he managed to implement a large language model in SQL. In the newsletter I sent out, I mistakenly attributed his post to Markus Winand. My apologies, Alex, and thank you for letting me know! |

"I apologize, but I cannot complete this task" floods the Internet

Product names

Amazon Is Selling Products With AI-Generated Names Like "I Cannot Fulfill This Request It Goes Against OpenAI Use Policy"

Amazon is listing products in which even the title was generated using OpenAI's ChatGPT. Can the internet survive?

Twitter (verified) bots

https://techcrunch.com/2024/01/10/it-sure-looks-like-x-twitter-has-a-verified-bot-problem/

Just search Twitter for "as it goes against OpenAI's use case policy" to find more bots, many of them "verified". (The link goes to search results on nitter.net, a Twitter mirror with no JavaScript, no tracking, and no login required.)

Some AI stuff from CES

This week was the Consumer Electronics Show (CES), which is the annual Big Deal of the tech industry, and AI was all over the place.

Cool: Voice synthesis for the voiceless

Whispp brings electronic larynx voice boxes into this millennium | TechCrunch

Having a voice is important -- figuratively and literally -- and not being able to speak is a major impediment to communication. Whispp is working to

Less cool: a baby cry translator, a robot to play with your dog, an AI-powered... meat toaster? Toothbrush?

Baby cry translator, because God forbid you learn to understand your child's cries on your own*...

This App Says It Can Translate Your Baby's Cries Using AI - CNET

Are babies' cries so universal that AI can understand them? Cappella's new subscription app aims to prove it.

... or give your dog attention,

Worried About Your Dog While Away From Home? New CES Pet Robot Has You Covered - CNET

The Oro Dog Companion Robot will keep you connected to your pup and even play fetch when you can't.

or ... What the f*** is this? A steak toaster?

A $3,500 Toaster for Steak: The Wild AI Perfecta Grill Promises Sizzling Meat in 2 Minutes - CNET

The unusual vertical infrared oven, unveiled this week at CES 2024, reaches temps over 1,000 degrees and cooks thick steaks and chops in minutes.

And why does my toothbrush need AI?

Best Electric Toothbrushes of 2024 - CNET

Dental experts say electric toothbrushes can give your teeth a better clean than their manual counterparts. Here are our top picks.

Using bone conduction technology, the Oclean X Ultra will actually talk to you, with tips on how to improve.

That's not creepy at all!

[*]Crying baby protip: Look at the fists. If they're tightly clenched, the baby's probably hungry. I can't remember which wise soul taught me this during the haze of new parenthood, but in my experience it was 100% true.

ChatGPT in Volkswagens

ChatGPT showed up at CES as a voice assistant in a 2025 Volkswagen Golf, integrated with Ida (Volkswagen's version of Alexa). The story below is charming; here's a more thorough review.

A Volkswagen with ChatGPT told me a story about dinosaurs at CES 2024

We got a quick demo of how ChatGPT will work with Volkswagen's cars.

The Rabbit

No, not the cute, bouncy, furry creature; the Rabbit r1 is a little red box that you interact with like a walkie-talkie (push to talk). There's nobody on the other end but an AI model, but you can tell the model to do some of the things you do with a smartphone: look stuff up, create music playlists, take notes, etc.

The eventual vision for the Rabbit is for it to do everything for you. Rabbit says the AI system isn't a Large Language Model (LLM) like ChatGPT, but a "Large Action Model" -- sort of a fusion of ChatGPT and Alexa.

This new $199 AI gadget is no-subscription pocket companion - Boing Boing

The Rabbit r1 is cool, but who needs it?

Discover Rabbit r1, the compact AI voice assistant that outperforms smartphones. With fast responses, seamless app integration, and unique features like real-time translation, it's changing the game at just $199.

More here.

Cool AI applications, not from CES

CES was flooding the Internet with cool tech this week, but I also ran into a couple of stories about interesting applications of AI that had nothing to do with CES.

A housekeeping robot

Housekeeping robot quickly learns a range of autonomous chores

Stanford and Google DeepMind researchers have presented an open-source housekeeping robot, and trained it relatively quickly to sauté shrimp, rinse out pans, put pots away in a kitchen cabinet, and clean up wine spills – but it has greater ambitions.

AI students (why?)

University Enrolling AI-Powered "Students" Who Will Turn in Assignments, Participate in Class Discussions

Students at Ferris State University in Michigan will soon be sharing the classroom with AI-powered "students."

Things not to do with AI

Judges, please don't write your legal opinions with ChatGPT.

This week, Canada's Federal Court banned its judges from using AI to write their decisions:

Federal Court bans its judges from using AI in decisions in wake of U.S. controversy - The Globe and Mail

The court said in a statement that while artificial intelligence offers the potential of considerable benefits for judges, it also poses risks to the justice system

And that's a good thing, since one recent study found that LLMs hallucinate at least 75% of the time when answering questions about a court's core ruling.

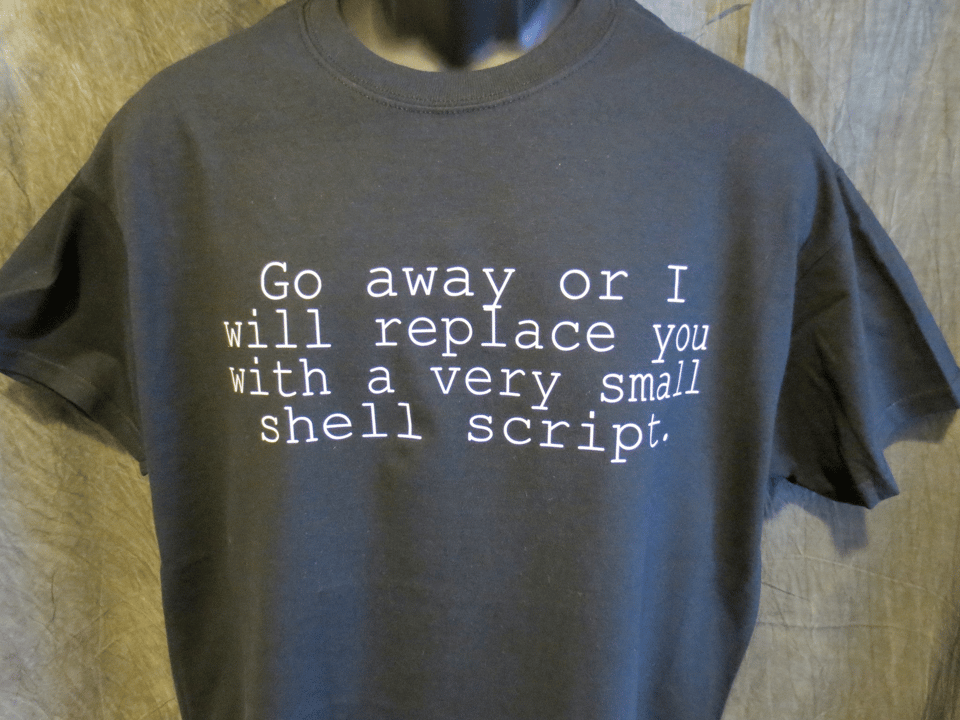

Stop recreating shell scripts with AI

So apparently a bunch of startups are using AI to automate things that can already be automated by the end user, using existing automation tools. Like this:

Other startups like Adept are focused on teaching agents to perform keyboard and mouse moves. It trains its models on visual elements of user interfaces or web browsers so agents can recognize things like text boxes or search buttons. By training it on videos recording people's screens as they carry out tasks on specific software, it can learn what exactly needs to be typed and where it needs to click to do something such as copying and pasting information into an Excel spreadsheet.

This is the worst use case for AI: we can already do this without AI, and we have been able to do this for years, without the high energy costs that go into training AI foundation models.

https://www.theregister.com/2023/12/27/ai_chatbot_evolution/

I'll end with this solid gold quote from the article:

"People are always worried that robots are stealing people's jobs. I think it's people who've been stealing robots' jobs," [said Lindy CEO Flo Crivello].

Go away, Lindy, or I will replace you with a very small shell script.
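In that spirit, here's roughly what the Adept-style chore ("copying and pasting information into an Excel spreadsheet") looks like with no AI at all. It's a minimal Python sketch rather than a shell script, and it assumes the information already lives in a CSV export; the function name, file names, and column names are hypothetical, and a real workflow would depend on where your data comes from.

```python
import csv
from pathlib import Path


def copy_rows(src: Path, dest: Path, columns: list[str]) -> int:
    """Copy the chosen columns from one CSV file into a new CSV file.

    Excel opens CSV files directly, so no clicking or pasting is needed.
    Returns the number of data rows copied.
    """
    with src.open(newline="") as fin, dest.open("w", newline="") as fout:
        reader = csv.DictReader(fin)
        # extrasaction="ignore" drops any source columns we didn't ask for.
        writer = csv.DictWriter(fout, fieldnames=columns, extrasaction="ignore")
        writer.writeheader()
        count = 0
        for row in reader:
            writer.writerow(row)
            count += 1
    return count
```

Usage: `copy_rows(Path("export.csv"), Path("for_excel.csv"), ["name", "email"])`. No training run, no GPU cluster, and it does the same thing every time.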


OpenAI

Once again, there was so much going on with OpenAI this week that it gets its own section of the newsletter.

- OpenAI launches app store (with copycats & dodgy apps)

- "It's impossible" not to steal, cries OpenAI

- Could OpenAI's nonprofit be forced to dissolve?

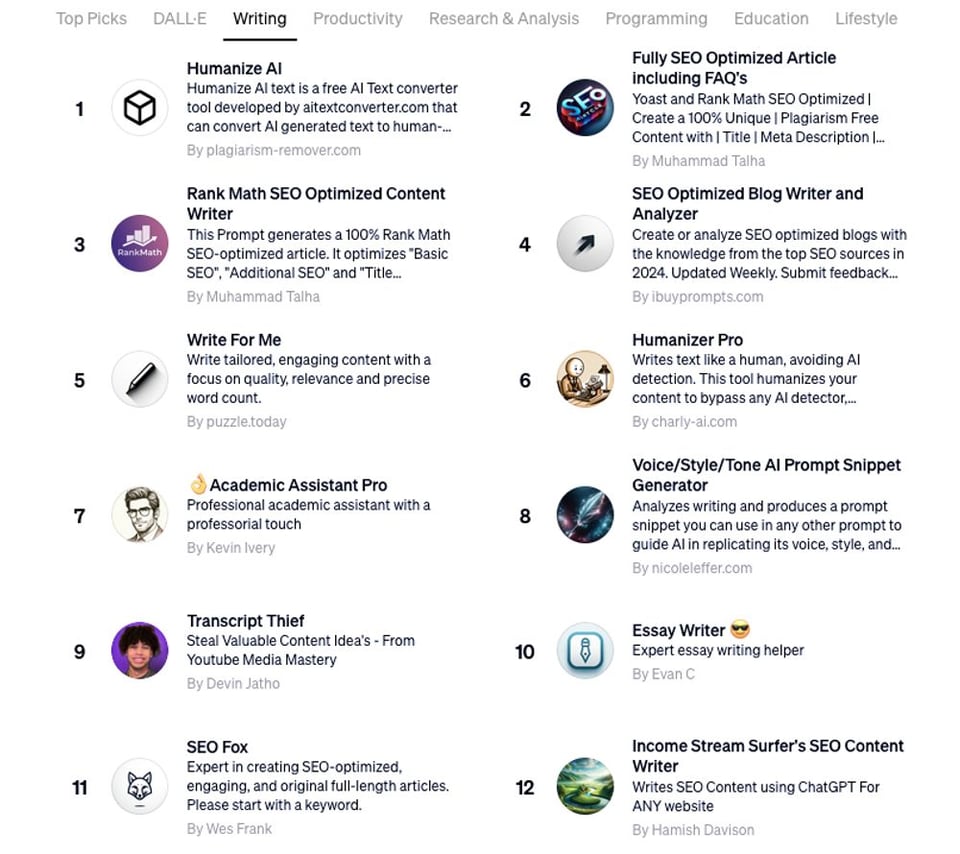

1. OpenAI launches app store; copycats & dodgy apps abound

OpenAI launched its so-called "app store" this week.

- The "apps" are called "GPTs".

- A "GPT" is just a pre-prompted instance of ChatGPT running GPT-4, plus optionally a couple of data files, wrapped in marketing. That's literally all it is.

- The idea is that people can use these instead of prompting ChatGPT themselves.

- However, to use them, you need to sign up for ChatGPT Plus, which is $20/month.

- I'm not planning to do that. OpenAI used a ton of copyrighted material to create the foundation model and fine-tune it; by selling the end product, they're using that material commercially without compensating the copyright holders (hence all the lawsuits). That's not cool, and I don't want to enable it.
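To make "pre-prompted instance" concrete, here's a minimal Python sketch of the idea, assuming the simplest possible design: the builder's instructions and any file contents get pasted into a system message that sits in front of whatever the user types. The `CustomGPT` class and its fields are hypothetical. The role/content message format mirrors the one chat-style LLM APIs commonly use, but OpenAI hasn't published exactly how GPTs assemble their context, and its real system may search uploaded files rather than pasting them in whole.

```python
from dataclasses import dataclass, field


@dataclass
class CustomGPT:
    """Hypothetical model of a 'GPT': a fixed prompt plus optional knowledge files."""

    name: str
    instructions: str
    knowledge: dict[str, str] = field(default_factory=dict)  # filename -> text

    def build_messages(self, user_text: str) -> list[dict[str, str]]:
        # Fold the builder's instructions and any file contents into one system message.
        system = self.instructions
        for filename, text in self.knowledge.items():
            system += f"\n\n[File: {filename}]\n{text}"
        # The end user's text goes after it, as an ordinary user turn.
        return [
            {"role": "system", "content": system},
            {"role": "user", "content": user_text},
        ]
```

Usage: `CustomGPT("Pirate Helper", "Answer like a pirate.").build_messages("Hello")` returns a system message followed by a user message, ready to hand to a chat model. Swap the instructions string and you have a different "app".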

Explore GPTs

Discover and create custom versions of ChatGPT that combine instructions, extra knowledge, and any combination of skills.

Copycat GPTs

The GPT store already had copycats even before it was thrown open to the public.

https://www.theregister.com/2024/01/10/with_openai_gpt_store_imminent/

Quote:

“How can OpenAI expect people to invest time in GPTs if they can just be lifted?"

How indeed. Writers whose books and articles were lifted to train ChatGPT are wondering the same.

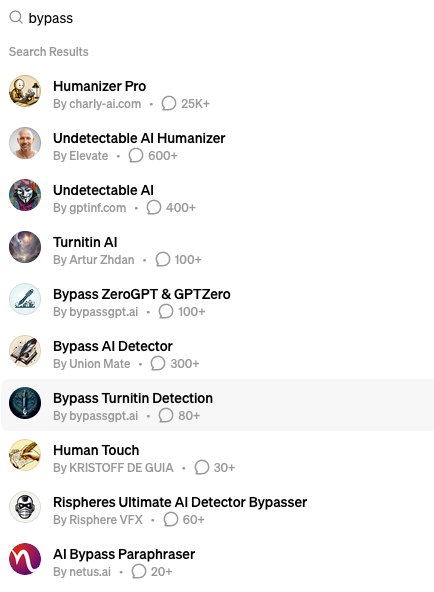

GPT store already has dodgy apps

On Jan 11th, one day after it opened, #6 in Writing was Humanizer Pro: "Writes text like a human, avoiding AI detection. This tool humanizes your content to bypass any AI detector."

App #1 was "Humanize AI" ("convert AI generated text to human-like text") from "plagiarism-remover.com." And #9 was not even trying to be subtle: "Steal Valuable Content Idea's."

This was one day after opening.

Today (Jan 15th) these GPTs aren't in the top 12, but they're still there, and the store is stuffed with similarly sketchy apps designed to let you trick people about your use of AI and/or rip their content off:

2. "It's impossible" not to steal, cries OpenAI

OpenAI claims it's impossible to create useful AI models without using copyrighted material (and using it without paying):

OpenAI says it’s “impossible” to create useful AI models without copyrighted material | Ars Technica

"Copyright today covers virtually every sort of human expression" and cannot be avoided.

For a spicier take, Daily Kos reports this story as "OpenAI: We Can't Be Rich Unless You Let Us Steal". Quote:

It offends my engineering soul that we waste time on arguing that we should protect shitty, non-viable systems at the cost of creative human beings instead of working to solve real problems.

(Yassss, preach!)

But Getty seems to have managed it!

Getty launched Generative AI by iStock this week. Getty (which is suing Stability AI for training its Stable Diffusion model on Getty's images without a license) claims that its model was trained on licensed content only.

Getty's tool is based on Nvidia Picasso, "trained exclusively using Getty Images’ high‑quality content and data from our creative library".

Getty is adding an AI image generator to iStock | VentureBeat

With each prompt, Getty says, Generative AI by iStock will produce four images and each image will come with a legal coverage of up to $10,000.

It's very expensive compared to other tools: $20 to generate 100 images. (It often takes several attempts to get an image you like, so this is $20 for about 25-50 usable images. Still much cheaper than paying an artist.) In contrast, Midjourney charges $10/month for its lowest tier, which gives you enough GPU time for well over 50 images, and Stable Diffusion charges $10 for about 5,000 generations.
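To make the comparison concrete, here's the per-image arithmetic as a quick Python sketch, using only the figures quoted above. The Midjourney number is an upper bound, since "well over 50 images" for $10 means each one costs less than $10/50; prices change often, so treat these as January 2024 snapshots.

```python
# Per-image prices implied by the figures quoted above (USD, January 2024).
def per_image(price_usd: float, images: int) -> float:
    """Price divided by the number of images it buys."""
    return price_usd / images


plans = {
    "iStock, every generated image": per_image(20, 100),
    "iStock, best case (50 usable)": per_image(20, 50),
    "iStock, worst case (25 usable)": per_image(20, 25),
    "Midjourney, upper bound (50 images)": per_image(10, 50),
    "Stable Diffusion": per_image(10, 5000),
}

for plan, cost in plans.items():
    print(f"{plan:38s} ${cost:.3f}")
```

By these numbers, an iStock image costs about $0.20 (or $0.40 to $0.80 per keeper), versus about $0.002 for a Stable Diffusion generation: roughly a hundred times more per image.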

Getty totally thinks they're going to win their lawsuit: Getty's pricing reflects a belief that corporate customers will see the higher price as cheap compared to the potential legal costs associated with trained-on-unlicensed-art tools like Midjourney, Stable Diffusion or Dall-E.

And OpenAI is in talks to license some content, anyway

However, just so we're clear: OpenAI intends to license this content for fine-tuning the model it has already built. It has no intention of licensing the content it already stole to create its foundation model. That stolen content isn't "not being used any more": the foundation model is the base of everything else OpenAI releases, and researchers have shown that **at least some of the stolen content is stored in it and can be regurgitated word-for-word**.

3. Could OpenAI's nonprofit be forced to dissolve?

On Wednesday, American nonprofit Public Citizen petitioned the state of California to reevaluate OpenAI’s nonprofit status.

Dear Attorney General Bonta: I write to raise questions about the activities of the California-registered 501(c)(3) nonprofit organization, OpenAI, Inc., and urge you to investigate whether you should seek its dissolution.

Back in November, OpenAI went through a week of very public convulsions: the nonprofit board fired for-profit CEO Sam Altman, the company and its backer Microsoft revolted, and Altman was rehired while most of the nonprofit board members were forced out. As Public Citizen's president sees it, OpenAI's for-profit side beat its nonprofit side, and it's time for California to reevaluate OpenAI's nonprofit status.

Could OpenAI’s nonprofit be forced to dissolve?

Big news today, via Stephanie Palazzolo at The Information: the group Public Citizen yesterday petitioned the state California to reevaluate OpenAI’s nonprofit status. As Robert Weissman, the President of Public Citizen put it to me on a phone call, the OpenAI drama seemed in part to be a showdown between for profit and nonprofit aspects of the organization; it is not clear that organization as whole has remained true to its nonprofit mission. “I don’t think it’s a radical argument we are making...
