AI Week for Monday, Nov 27th

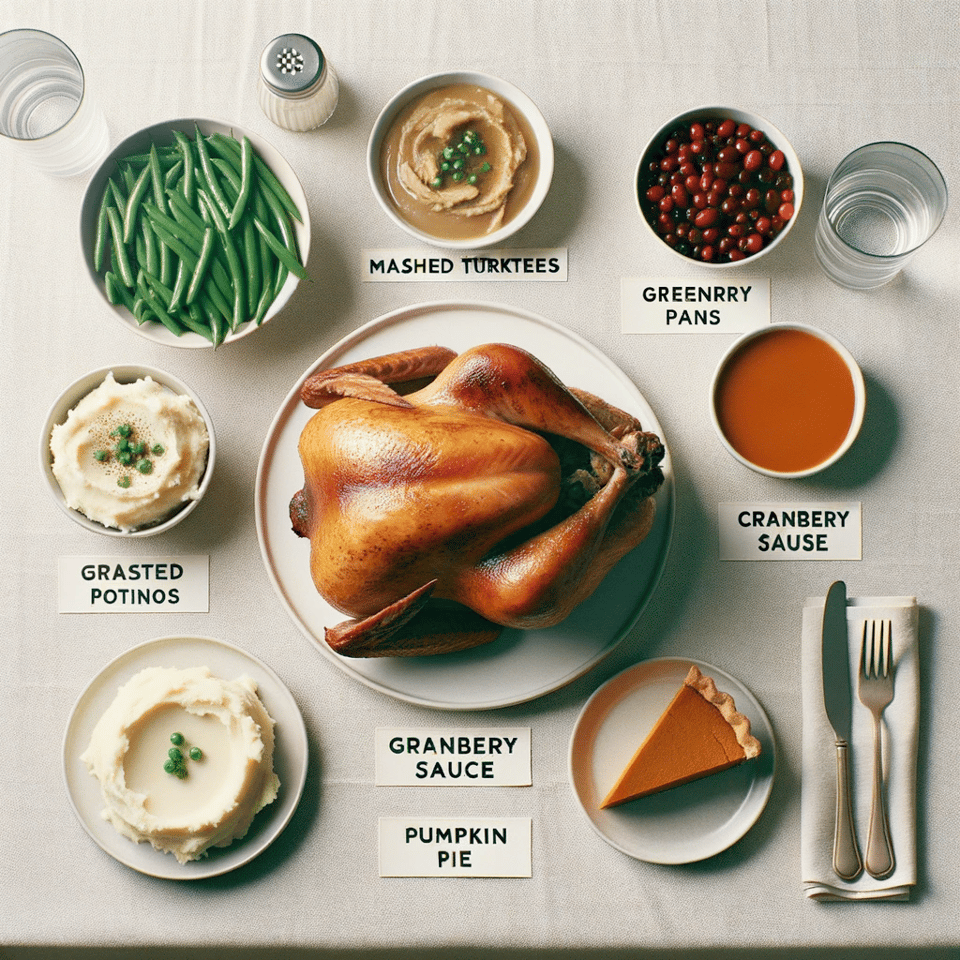

Happy Thanksgiving! Did you enjoy some Mashed Turktees with Greenrry Pans?

(Thanksgiving meal generated by DALL-E 3, from AI Weirdness)

In this week's AI news:

- Where did Sam Altman land?

- What's OpenAI up to now?

- Bing generates Disney logos… sometimes

- IN BRIEF: Meta gives up on AI ethics, Hugging Face pulls singing Xi Jinping but not Biden, Anthropic releases Claude 2.1

- This week's AI application: JobGPT

- Bonus: Inflate that jelly!

1. Where did Sam Altman land?

After all the drama, Sam Altman's back as the CEO of OpenAI with a new, three-member board: chair Bret Taylor (former CEO of Salesforce), Larry Summers (former president of Harvard and former Treasury Secretary), and previous board member Adam D'Angelo (cofounder of Quora).

Yes, all the women are off the board

This isn't the first shuffle of OpenAI's board, which has changed frequently. The board that removed Sam Altman last week was made up of OpenAI chief scientist Ilya Sutskever, Adam D'Angelo, Helen Toner, and Tasha McCauley. You might remember from last week that Ilya Sutskever played a key role in the ouster -- and then regretted it, signing an open letter threatening to quit and join Microsoft unless the board resigned.

It's interesting that both Toner and McCauley are off the board, but not D'Angelo. Helen Toner, an Australian who is Director of Strategy at Georgetown's Center for Security and Emerging Technology (CSET), was a voice for AI safety on the board; like interim CEO Emmett Shear, she's an effective altruist. Tasha McCauley is a co-founder of the Center for the Governance of AI (GovAI), and she and Helen Toner both sit on its board. GovAI is partly funded by Open Philanthropy, another effective altruism organization.

At the moment, all the effective altruists appear to be off the board; also, all the women, and all the people working in AI safety.

Sidebar: Wait, what's effective altruism (EA)?

EA is a philanthropic philosophy that has influenced many of the people involved in corporate AI research. Here are three things to know about EA:

- It's very popular in Silicon Valley. For example, Open Philanthropy is largely funded by Facebook co-founder Dustin Moskovitz.

- There's significant overlap with the self-described "rationalists," the "Less Wrong" crowd around Eliezer Yudkowsky, author of the Harry Potter fanfiction that name-dropped Emmett Shear, last week's interim OpenAI CEO.

- Sam Bankman-Fried was a major effective altruist.

The EA principle in a nutshell is to philanthropize more effectively, i.e., to make sure the money you donate is having the maximum possible impact. This principle has led EAs to some surprising places:

- Organizing your life around earning as much money as possible, as a philanthropic goal, so that you can give that money away.

- Not donating that money yet, but investing it to make even more money, so that you can donate the largest possible sum when you die. This philosophy has been attributed to Binance founder Changpeng Zhao, aka CZ.

- Weighing possible future catastrophes so heavily that funding goals like "mitigating the harms of AI" take priority over more traditional charitable goals, like helping people who are at risk of dying today.

Larry Summers is on the board of OpenAI?

Yeah, this is a surprising choice, as the 68-year-old former Treasury Secretary hasn't got a deep history in tech or AI. Forbes points out that Larry Summers wrote an op-ed in 2017 about AI taking US workers' jobs, and commented to Bloomberg earlier this year that ChatGPT was “coming for the cognitive class.” So it's possible that Summers was a compromise pick to appease those concerned that the board had shed everyone interested in AI safety and governance. Unlike Helen Toner and Tasha McCauley, though, Larry Summers is hardly an expert in either area.

2. What's OpenAI up to now?

Talk to me, ChatGPT

OpenAI's leadership was in turmoil last week, but that didn't stop the company from releasing voice chat to everyone. Premium (paid) users already had this feature, but now anyone with the ChatGPT app on their phone can have a voice conversation with ChatGPT.

ICYMI: ChatGPT's getting its own app store soon

Earlier this month, OpenAI introduced what it calls "GPTs": customized versions of ChatGPT that are easy to build. To be clear, developers have been building their own apps on OpenAI's models for years. The example I know best is James Yu and Amit Gupta's Sudowrite, an "AI writing partner" built on OpenAI's API. (When it launched, the writing community's reaction was mixed but largely negative, mainly because OpenAI's models were trained on fiction without permission.) Now OpenAI wants to bring customization to ChatGPT's power users, the ones with text files full of their favourite prompts, by rolling out a no-code interface. (Paid users only.) OpenAI's Nov. 6th blog post says the company plans to roll out a GPT app store.

OpenAI model Q* reportedly able to math

Large language models (LLMs) like ChatGPT work by predicting the next word (that's a very-short-and-not-very-good explanation; this one is better), and as a result they're bad at math. That's not necessarily a huge problem: for example, you can hook ChatGPT up to a powerful mathematical engine, like Wolfram Alpha. But it's a big deal that Ilya Sutskever's team at OpenAI created an experimental model, known as Q*, that reportedly could solve basic math problems accurately. Some in the AI community see this as a big step toward Artificial General Intelligence (AGI), so it's possible that Q* was one of the factors that led to the board's revolt against Sam Altman last week. Business Insider sums up the excitement with one quote:
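To make "predicting the next word" a little more concrete, here's a toy sketch in Python. It's a bigram counter, vastly simpler than a real LLM (which uses a neural network over tokens, not raw word counts), and the training text is made up purely for illustration. It also hints at why math is hard for this kind of model: it only knows which words tend to follow which, not arithmetic.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if `word` is unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up training text, for illustration only.
corpus = "two plus two is four . two plus three is five . two plus two is four ."
model = train_bigrams(corpus)

print(predict_next(model, "plus"))  # two   (seen twice after "plus", vs. "three" once)
print(predict_next(model, "is"))    # four  (even if the question was "two plus three is")
```

The second call is the point: this toy model only looks at the previous word, so "is" gets followed by "four" no matter which sum came before it. Real LLMs use far more context and learn far richer patterns, but they're still predicting likely text rather than calculating, which is why a model that reliably solves math problems would be news.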

"If it has the ability to logically reason and reason about abstract concepts, which right now is what it really struggles with, that's a pretty tremendous leap," said Charles Higgins, a cofounder of the AI-training startup Tromero who's also a Ph.D. candidate in AI safety.

3. Bing generates Disney logos… sometimes

AI image generators are notoriously bad at rendering text. Janelle Shane's AI Weirdness blog collects delightful examples, like the Thanksgiving feast above with "MASHED TURKTEES" and "GRASTED POTINOS".

But last week, Bing had to restrict the use of the word "Disney" in prompts because so many people were using it to generate the Disney logo, which it does beautifully:

It's really a success that Bing can generate the Disney logo so well! It's likely that in the data the model was trained on, there were a lot of "Disney" logos associated with the word "Disney". Unfortunately, Disney isn't likely to approve of this stunning success, especially since some people have already used it to create fake movie posters.

Microsoft recently promised to cover the legal costs of customers sued for copyright infringement over their use of its AI Copilots, a policy it calls the "Copilot Copyright Commitment." I'm not sure whether this pledge extends to free users of Bing's image generator. If it does, it could get very expensive for Microsoft, since Disney isn't exactly chill about unauthorized use of the mouse, its logo, or any other IP.

What does this tell us about AI and copyright?

One argument made by the artists suing the makers of text-to-image AI generators for copyright infringement is that, because the models were trained on the artists' work, they can easily be induced to reproduce it in a form that isn't different enough from the original to count as "transformative," and therefore isn't fair use. This beautifully rendered "Disney" logo certainly supports that argument. Here's another example, via The Register:

4. IN BRIEF

Meta gives up on AI ethics

Meta (Facebook and WhatsApp's parent company) disbanded its Responsible AI group.

Hugging Face pulls singing Xi but not singing Biden

Hugging Face calls itself the "GitHub for machine learning." Unfortunately for the company, the platform has been blocked in China since sometime in September. I'm sure that has nothing to do with why Hugging Face has taken down models that can be used to imitate Xi Jinping, but not Joe Biden.

Anthropic's Claude 2.1 available to play with... er, test

From Anthropic's announcement: "Our latest model, Claude 2.1, is now available over API in our Console. The model offers an industry-leading 200K token context window, significant reductions in rates of model hallucination, system prompts, and tool use. We’re also updating our pricing to improve cost-efficiency, driving more value for our customers across models."

5. This week's AI application: JobGPT

I thought it would be fun to showcase an AI application every other week. This week’s AI application is job applications. You can now get AI assistance to fill out online job applications in bulk. This Wired article (via Ars Technica) goes into the details.

Why this is interesting:

Most large corporate HR departments use some algorithmic screening to cut down on the number of applications a human has to read, meaning many job applications are discarded without ever being read by a human. HR has been experimenting with using AI in hiring, as well. We're heading toward a future where increasing volumes of AI-generated applications are screened by AI tools. The efficiency gains on each side of the equation are likely to cancel each other out, and the net result may be that it's even harder for a person who would be a great fit for a job to actually get their application seen by a human.

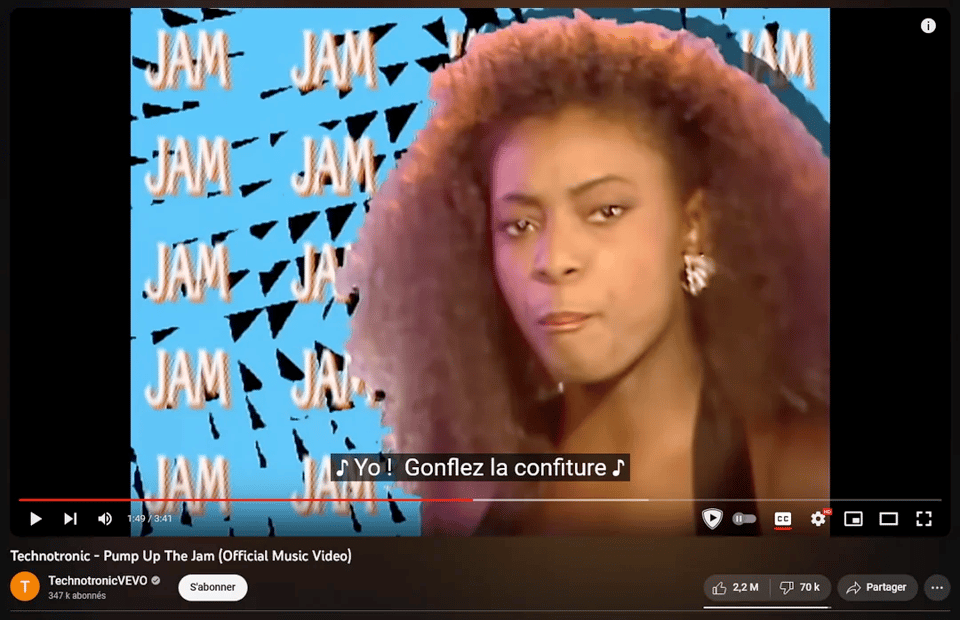

6. Bonus: Inflate that jelly!

Special shout-out to the automated AI translation of “Pump up the jams” into French, which came out as “Inflate the jelly”:

This gem is from Reddit.

"Pump up" and "jam" both have multiple meanings in English, and "Yo! Gonflez la confiture" managed to pick the wrong one for both of them. Inflate that jelly!

What... why... how did this translation disaster happen?

YouTube removed community captions in 2020, to the dismay of people who relied on them for translations. YouTube started experimenting with AI translations in 2021, and in 2022 rolled out auto-translated captions for videos on mobile devices. You can now enable Auto-Translate for captions on the web and mobile devices.

And this is wonderful in so many ways! It's amazing that I can get an idea of what's going on in a video in a language I don't speak and have never studied.

But languages are tricky, idiomatic, and very context-dependent. "Jam" in particular has a ton of meanings: tasty breakfast spread, an overabundance of traffic, a tough situation... and music. Human translators have the advantage of lived experience.
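Here's a toy Python sketch of why context matters so much. It uses a simplified version of the Lesk approach to word-sense disambiguation: pick the sense whose cue words overlap most with the rest of the sentence. The sense list, the cue words, and the "most common sense" fallback are all assumptions invented for this example; this is not a description of how YouTube's auto-translate actually works.

```python
import re

# Hypothetical sense inventory for "jam"; the cue words are invented for illustration.
JAM_SENSES = {
    "fruit spread": {"toast", "breakfast", "strawberry", "jar"},
    "traffic jam": {"traffic", "cars", "highway", "stuck", "road"},
    "tough situation": {"trouble", "help", "stuck", "mess"},
    "music": {"dance", "music", "beat", "song", "party"},
}
# Assumption: with no useful context, fall back on the most common sense.
DEFAULT_SENSE = "fruit spread"


def pick_sense(sentence: str) -> str:
    """Pick the sense of "jam" whose cue words overlap most with the sentence."""
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    best, best_score = DEFAULT_SENSE, 0
    for sense, cues in JAM_SENSES.items():
        score = len(cues & context)
        if score > best_score:  # on a tie, the sense found first is kept
            best, best_score = sense, score
    return best


print(pick_sense("Yo! Pump up the jams"))                   # fruit spread (no cues, default wins)
print(pick_sense("Pump up the jam and dance to the beat"))  # music
```

A short caption like "Yo! Pump up the jams" gives the sketch nothing to go on, so it falls back on the breakfast spread, much like "Gonflez la confiture." Add a little more context and it finds the music.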