AI Week for Apr 15: Synthetic songs and data, pink slime, and LLMentalists

In this week's AI Week: Synthetic songs and training data; "pink slime" and other information pollution; jailbreaking; a robodog hooked up to an LLM; plus, why do we fall for LLMs?

What to play with this week: Udio

Udio music generation opened up a free public beta this week.

Udio | Make your music

Discover, create, and share music with the world.

I gave it this prompt:

"a song about medicinal herbs and health, refreshing, uplifting, weightlifting, polka, weird al"

and got back a male voice + accordion doing this:

sprinkle ginger dash

of mint for the flavour punch

mix in turmeric roots

in your tea for your lunch

then aha muscles pump

lifting iron

feel the jumps

The tune is... something. It wanders through keys chaotically. Between the slightly-unhinged melody, the slightly-unhinged lyrics, and the supernumerary accordion flourishes, this song made me laugh pretty hard. Have a listen:

Udio | Herbal Tonic Groove by NRMR

Make your music

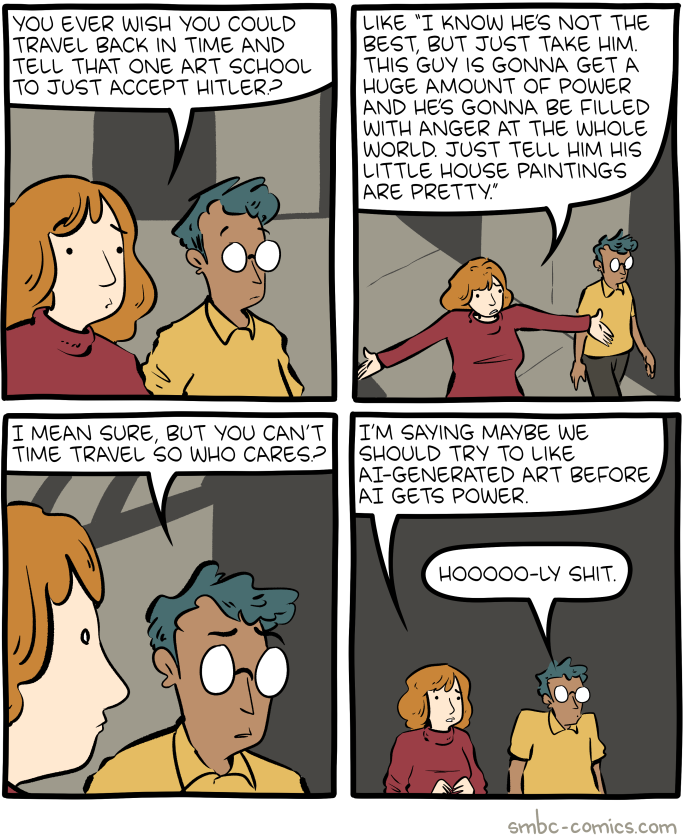

Comic

Saturday Morning Breakfast Cereal - Art

Saturday Morning Breakfast Cereal - Art

Training data

Legislating training data transparency

https://thehill.com/homenews/house/4583318-schiff-unveils-ai-training-transparency-measure/Rep. Adam Schiff (D-Calif.) unveiled legislation on Tuesday that would require companies using copyrighted material to train their generative artificial intelligence models to publicly disclose all of the work that they used to do so.

The bill, called the “Generative AI Copyright Disclosure Act,” would require people creating training datasets – or making any significant changes to a dataset – to submit a notice to the Register of Copyrights with a “detailed summary of any copyrighted works used” and the URL for any publicly available material.

Speaking of training data transparency

I'm extremely annoyed with Adobe for pitching its AI image generator, Firefly, as "commercially safe" (ie did not steal unlicensed images for training - trained only on licensed art). I thought this made it an ethical alternative to Midjourney, Stable Diffusion, etc. But it turns out that about 1 in 20 of the training images came from other, not-commercially-safe AIs... because people had uploaded them to Adobe’s stock image market.

Adobe trained its AI image generator on Midjourney images, but it's complicated

According to a Bloomberg report, Adobe has been training its Firefly image generator with images from rival generator Midjourney and presumably other image AIs. This is awkward because Adobe advertises that Firefly is legally and ethically sound.

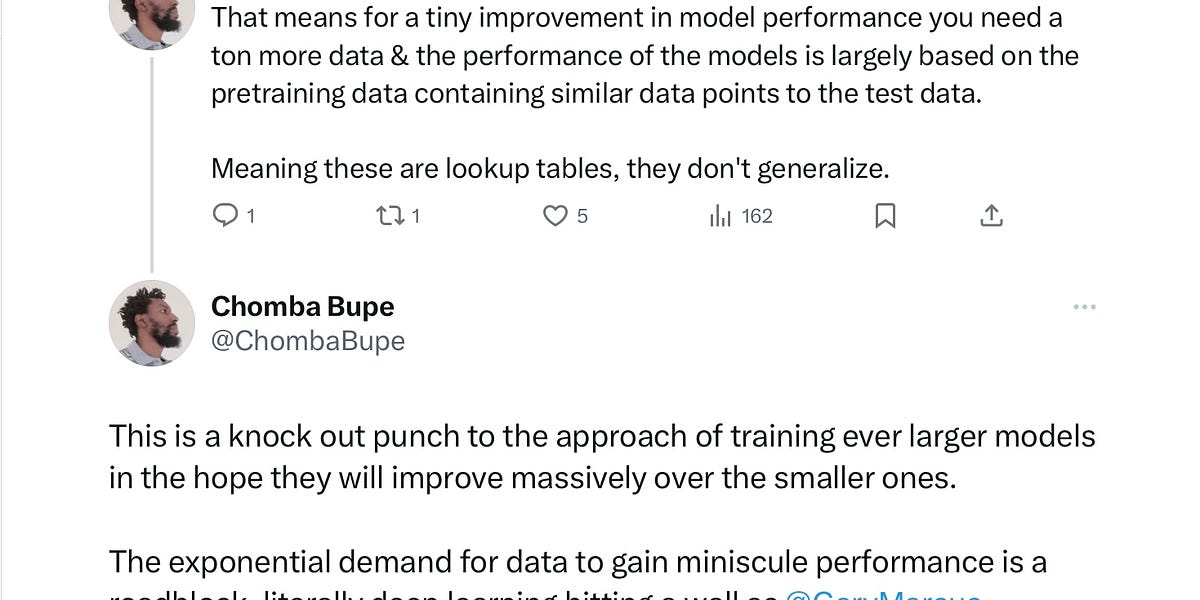

Do we need infinite amounts of training data?

A lot of the improvements we've seen in generative AI since 2022 come from having fed them much more training data. There's kind of an idea floating around that if we just feed generative AI enough training data, eventually it'll turn into AGI (or be indistinguishable from it). A preprint came out last week that might be the death of that idea, essentially arguing that incremental improvements will require exponentially more data.

Breaking news: Scaling will never get us to AGI

A new result casts serious doubt on the viability of scaling

Making up more training data

You might've heard some rumbles about "running out" of training data (even if for incremental gains, per the paper above): The internet is big, but finite. Tech companies are casting ever wider nets in search of data -- at one point Meta was even planning to buy publisher Simon & Schuster in order to "strip mine its catalog for data training", as Publishers Lunch puts it.

The alternative is "synthetic data": training data generated by one model to train another one. There's an obvious potential problem: Garbage in, garbage out. Plus, if you train on only or predominantly synthetic data, eventually the model collapses. Still, synthetic data has become an important part of how generative AI is trained, and it's likely to grow in importance. Jack Clark's Import AI newsletter has a really good summary of a Google-Stanford-GIT paper about how, and how much, synthetic data is being used in AI training today.

Garbage in Garbage out is a phenomenon where you can generate crap data, train an AI system on it, and as a consequence degrade the quality of the resulting system. That used to be an important consideration for training on synthetic data - but then AI systems got dramatically better and it became easier to use AI systems to generate more data. Now, it's less a question of if you should use synthetic data and more a question of how much (for instance, if you over-train on synth data you can break your systems, Import AI 333).

Import AI 369: Conscious machines are possible; AI agents; the varied uses of synthetic data

Who do you know who doesn't know about AI advances but should?

(By the way, Import AI is a great newsletter. If you'd enjoy a more-technical AI newsletter that's still very readable by non-developers, I recommend it.)

Google employees: no tech for apartheid

Exclusive: Google Workers Revolt Over $1.2 Billion Israel Contract | TIME

Two Google workers have resigned and another was fired over a project providing AI and cloud services to the Israeli government and military

Musk: AGI will be here next year!

Along with fusion, right?

Elon Musk predicts superhuman AI will be smarter than people next year | Elon Musk | The Guardian

His claims come with a caveat that shortages of training chips and growing demand for power could limit plans in the near term

Counterpoint: LLMs far behind humans at navigating, understanding environment

Meta made an "embodied AI" that boodled around a home (it looks like they hooked a Boston Dynamics robodog up to an LLM) and answered text questions about its environment.

That's pretty impressive, but also, I am not seeing this beating the smartest humans by next year. It's particularly bad at questions about spatial orientation:

As an example, for the question “I’m sitting on the living room couch watching TV. Which room is directly behind me?”, the models guess different rooms essentially at random without significantly benefitting from visual episodic memory that should provide an understanding of the space.

As the github page notes, "performance is substantially worse than the human baselines."

OpenEQA: From word models to world models

Today, we’re introducing the Open-Vocabulary Embodied Question Answering (OpenEQA) framework—a new benchmark to measure an AI agent’s understanding of its environment.

What would a conscious machine actually look like?

The same issue of Import AI I linked above has a very interesting discussion of a very long paper that tries to lay out the theoretical foundation for a "Conscious Turing Machine (CTM)" embodied in a robot. Honestly, it's just an excellent issue of Jack's newsletter all around, you should read the whole thing.

CES update: the Humane pin

One of the cooler new AI gadgets at this year's Consumer Electronics Show (CES) was the Humane pin, a little computer that you wear and talk to like a Star Trek communicator. Now that people have had a chance to try it, The Verge has a review. TL;DR: Cool idea, don't buy it.

On the one hand:

I do lots of things on my phone that I might like to do somewhere else. So, yeah, this is something. Maybe something big.

On the other:

I’m hard-pressed to name a single thing it’s genuinely good at. None of this — not the hardware, not the software, not even GPT-4 — is ready yet.

/cdn.vox-cdn.com/uploads/chorus_asset/file/25379325/247075_Humane_AI_pin_AKrales_0144.jpg)

Humane AI Pin review: the post-smartphone future isn’t here yet - The Verge

AI gadgets might be great. But not today, and not this one.

Information pollution round-up

As we careen toward the 2024 US election, here are some of the stories from just this week about ways that generative AI is currently polluting the information landscape.

"AI is making shit up, and that made-up stuff is trending on X"

Information pollution reaches new heights - by Gary Marcus

AI is making shit up, and thar made-up stuff is trending on X

Unfortunately, LLMs are good at being persuasive

https://www.theregister.com/2024/04/03/ai_chatbots_persuasive/"Experts have widely expressed concerns about the risk of LLMs being used to manipulate online conversations and pollute the information ecosystem by spreading misinformation," the paper states. There are plenty of examples of those sorts of findings from other research projects – and some have even found that LLMs are better than humans at creating convincing fake info. Even OpenAI CEO Sam Altman has admitted the persuasive capabilities of AI are worth keeping an eye on for the future.

Google Books indexes AI trash

Google Books Indexes AI Trash

Google said it will continue to evaluate its approach “as the world of book publishing evolves.”

"Pink Slime"

“Pink slime” local news outlets erupt all over US as election nears | Ars Technica

Number of partisan news sites roughly equals those doing actual, legitimate journalism.

Obituary spam

/cdn.vox-cdn.com/uploads/chorus_asset/file/25275361/246992_AI_at_Work_OBITS_ECarter.jpg)

The rise of obituary spam - The Verge

AI-generated obituaries are beginning to litter search results, turning the deaths of private individuals into clunky, repetitive content.

These AI influencers aren't even all AI

Now we have AI influencers that are just AI-generated faces deepfaked onto other women’s videos. The people behind these fake-deepfakes are appropriating women's bodies for profit, without their consent or knowledge. This is beyond gross.

‘AI Instagram Influencers’ Are Deepfaking Their Faces Onto Real Women’s Bodies

AI 'influencers' on Instagram have racked up hundreds of thousands of followers and millions of views by stealing reels from real women to make the AI seem more "believable."

LLMs are surprisingly not great at summarizing long texts

This is relevant because people are selling publishers tools aimed at summarizing submissions. There was a really big flap in the SF community last week when SF small press Angry Robot revealed that they'd be using Storywise, an AI system, to sort submissions, a position they revised after ample and strident feedback from the community. And I'm glad they did, because of the findings of a recent paper.

This paper aims to determine how good LLMs are at summarizing really long texts, and how good LLMs are at evaluating summaries, by having humans check their work. Here's a quick human-made summary:

Summarizing: Accuracy varied from 70% (terrible) to 90% (might be okay for a book report).

- Claude-3-Opus (from Anthropic) was the best at about 90% accuracy (ie, 1 in 10 claims it made about a long text were wrong/unsupported/only partly right). Its summaries also didn't suffer from some of the flaws that the other models did, like repetitiveness or vagueness

- Mixtral (this is from mistral.ai above) underperformed at 70% accuracy with 11% of claims being outright lies.

- All of the LLMs made errors of omission. 30-60% of summaries left out points that the humans thought were key.

Evaluating summaries: None of the LLMs tested were able to reliably determine whether a summary was accurate or not, so they can't be used to give feedback to each other in order to automate training.

https://arxiv.org/pdf/2404.01261.pdf

Jailbreaking update

“Many-shot jailbreaking” in a nutshell: If you prompt an LLM with enough examples of a question/answer that breaks its own rules, then ask the LLM to answer a question that breaks its rules, it will answer. The more examples you provide, the more likely it is to break its rules when answering; the likelihood goes up exponentially with the number of examples.

This wasn't a big problem last year, when most LLMs had much smaller limits on the "context window", limiting the amount of user-provided information the LLM uses in its responses. Now, though, we're seeing models with huge context windows, meaning lots of room to provide question/answer pairs

Why is this a big deal?

- It's hard to fix

- It's incredibly easy to do

- It works on multiple models

Many-shot jailbreaking Anthropic

Anthropic is an AI safety and research company that's working to build reliable, interpretable, and steerable AI systems.

(If it seems too tedious for bad actors to generate 250-300 examples of an LLM breaking its guardrails, they could turn to one of the many jailbroken LLMs available on the dark web. Failing that, it was pretty easy to prompt ChatGPT 3.5, which now doesn't require login, to provide me with several examples of minor crimes.)

Relatedly: IT professionals do not trust AI security

https://diginomica-com.cdn.ampproject.org/c/s/diginomica.com/ai-two-reports-reveal-massive-enterprise-pause-over-security-and-ethicsthe reality for many software engineering teams is the C-suite demanding an AI ‘hammer’ with little idea of what business nail they want to hit with it.

Longread: Why do we fall for LLMs?

Maybe you know someone who's convinced that ChatGPT, or another large language model (LLM), is conscious. Or maybe you're still wondering about the Google researcher who believed that their 2022 LaMDA chatbot was a sentient AI. Why do so many smart people fall for LLMs?

If you've ever seen a psychic work a crowd, or known anyone who's deeply convinced that their horoscope is true, it might be the same effect. This longread from 2023 draws some interesting and compelling parallels between how psychics (etc) convince some people that they can see the future and how LLMs convince some people that they're conscious. Some of the techniques that psychics have evolved for convincing marks of their legitimacy may have been kinda-accidentally baked into LLM training.

In trying to make the LLM sound more human, more confident, and more engaging, but without being able to edit specific details in its output, AI researchers seem to have created a mechanical mentalist.

Long read but 100% worth your time.

The LLMentalist Effect: how chat-based Large Language Models replicate the mechanisms of a psychic's con

The new era of tech seems to be built on superstitious behaviour

[*]No offense meant to any astrologically-oriented readers. But if you do happen to be astrologically-oriented, I'd be interested to hear whether you feel the same way after reading this week's longread.

Add a comment: