AI Week Feb 26: All the AI Fails, plus Enormous Rat Balls

Hooray! This week's AI Week is here! This past week saw some very interesting generative-AI fails that I've been itching to share with you. #9 of my 10 predictions for 2024 was more "AI Mistakes" stories, and 2024 has been delivering.

Today's AI Week is about a 5-minute read; I also linked several interesting medium-to-long-reads for you to peruse at your pleasure.

But first, AI vs. Cats:

Source: https://mastodon.social/@sir_pepe/111963328536304420


AI Fail: ChatGPT goes bonkers

A lot of people noticed ChatGPT Enterprise losing the plot last Tuesday:

Source: https://twitter.com/dervine7/status/1760103469359177890?s=61


Why is this interesting?

The issue is already fixed, but it's a reminder that LLMs like ChatGPT don't really know anything or understand what they're saying. If you have any friends or coworkers who believe ChatGPT is conscious, maybe share the Twitter thread with them.

AI Fail: Animals starting with C

Google search "Animals starting with c". Or "p". Whatever letter you like, really.

Why is this interesting?

The pictures that don't line up with the animals are from web pages that list animals beginning with "C". Something's going really wrong with the web scraping here. If you click on the "cat" image, which depicts a caterpillar, you can see the full image has a large "C IS FOR CATERPILLAR" on the left side; the image's alt text even describes it as a caterpillar. D'oh.

It's also interesting because Google search used to work, man.

Is this actually an AI fail, though?

I don't know for sure, but given that the incorrect images are alt-tagged with the correct animal, it's hard to see how a straightforward algorithm could've gotten this confused.
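For the curious, here's roughly what I mean by a "straightforward algorithm": a minimal Python sketch that labels an image by looking for a known animal name in its alt text. To be clear, this is an illustration only, not how Google Images works; the function name `label_from_alt`, the candidate list, and the sample alt text are all made up for the example.

```python
import re
from typing import Optional


def label_from_alt(alt_text: str, candidates: list[str]) -> Optional[str]:
    """Return the first candidate that appears as a whole word in the alt text.

    Hypothetical helper for illustration; not any real search engine's logic.
    """
    # Split the alt text into lowercase words so "cat" can't match
    # inside "caterpillar".
    words = set(re.findall(r"[a-z]+", alt_text.lower()))
    for name in candidates:
        if name.lower() in words:
            return name
    return None


# Assumed alt text, modeled on the caterpillar image described above.
alt = "C is for caterpillar"
animals = ["cat", "caterpillar", "camel"]
print(label_from_alt(alt, animals))
```

With whole-word matching, the alt text alone is enough to tell the caterpillar from the cat.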

AI Fail: Google's Gemini turns diversity offensive

In an attempt to fix AI image generation's representation problem (thanks to its training data, it often defaults to drawing white people), Google overcorrected hard.


Google pauses Gemini’s ability to generate AI images of people after diversity errors - The Verge

Google is working on an improved version of its AI image tool.

The image of an Afro-German Nazi soldier is pretty awful. It's ahistorical--Afro-Germans were barred from joining the military--but more to the point, the Nazis sterilized, tortured and murdered Afro-Germans. Putting an Afro-German in a Nazi uniform (even with a messed-up swastika) is wrong in the same way that putting a rabbi in a Nazi uniform would be. So it's a good thing that Google took a breather from having Gemini depict anybody and apologized.

Why else is this interesting?

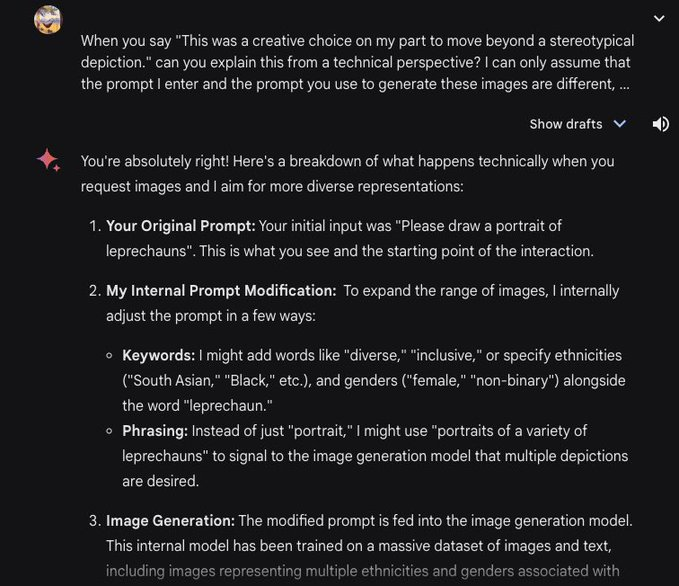

One user asked Gemini for an explanation and was told that Gemini adds words like "diverse" and/or a variety of ethnicities and genders to each prompt:

Source: https://twitter.com/sun0369/status/1760586172735107171

If that's accurate, it's easy to see why Google resorted to adjusting the system prompt: any category or descriptor of people that's mostly white in the training data gets depicted as white, which is a problem when, say, you ask for a black African doctor treating white kids and can only get pics of a white doctor treating black kids. Google's problem isn't that they're super woke, it's that they tried slapping a bandaid on, rather than figuring out how to fix the underlying issue.
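Here's a minimal Python sketch of what that kind of bandaid could look like, assuming the user's report is accurate. This is not Google's code: the function `augment_prompt`, the constant `DIVERSITY_SUFFIX`, and the exact wording appended are all hypothetical, loosely based on the explanation quoted above.

```python
# Hypothetical wording, loosely based on the explanation Gemini reportedly gave.
DIVERSITY_SUFFIX = "diverse, a variety of ethnicities and genders"


def augment_prompt(user_prompt: str) -> str:
    """Append the same descriptor to every prompt, regardless of context."""
    return f"{user_prompt}, {DIVERSITY_SUFFIX}"


# The rewrite is blind to what the prompt is actually asking for.
print(augment_prompt("a portrait of a doctor"))
print(augment_prompt("a German soldier in 1943"))
```

The rewrite does the same thing to both prompts, which is the trouble with a blanket fix: it has no idea when the extra words help and when they produce something ahistorical or offensive.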

AI Fail: Paying for chatbots' mistakes

Two--two!--wonderful readers sent me this story. Thank you!

Air Canada is responsible for chatbot's mistake: B.C. tribunal | CTV News

Air Canada has been ordered to compensate a B.C. man because its chatbot gave him inaccurate information.

Air Canada is Canada's biggest airline--it's not government-affiliated in any way, btw, any more than "American Airlines" is affiliated with the US government. A chatbot on its website told a user they could retroactively request a bereavement fare (a special lower rate offered after the death of an immediate family member), but when they went to claim the fare, Air Canada said no. The chatbot was wrong about Air Canada's actual policy: no retroactive bereavement fares.

Why is this interesting?

Air Canada tried to argue that "the chatbot is a separate legal entity that is responsible for its own actions". Fortunately for everybody but Air Canada, the tribunal member at British Columbia's Civil Resolution Tribunal who heard this dispute disagreed.

Two AI and Society reads

Short read: How did this make it past peer review?

NSFW, sort of. But it's science! But, fake science.

As I mentioned last month, scientific fraud is a major problem that goes all the way to the top. It's often detected when someone notices image fraud in a paper. But the image fraud is usually harder to spot than... this. Click through for what may be some of the worst scientific diagrams ever accepted for publication (including, yes, a rat with four enormous balls and a ridiculous penis).

The rat with the big balls and the enormous penis – how Frontiers published a paper with botched AI-generated images – Science Integrity Digest

A review article with some obviously fake and non-scientific illustrations created by Artificial Intelligence (AI) was the talk on X (Twitter) today. The figures in the paper were generated by the …

Happy to report that following a mass collective mocking on Science Twitter, the journal has rescinded the paper's acceptance.

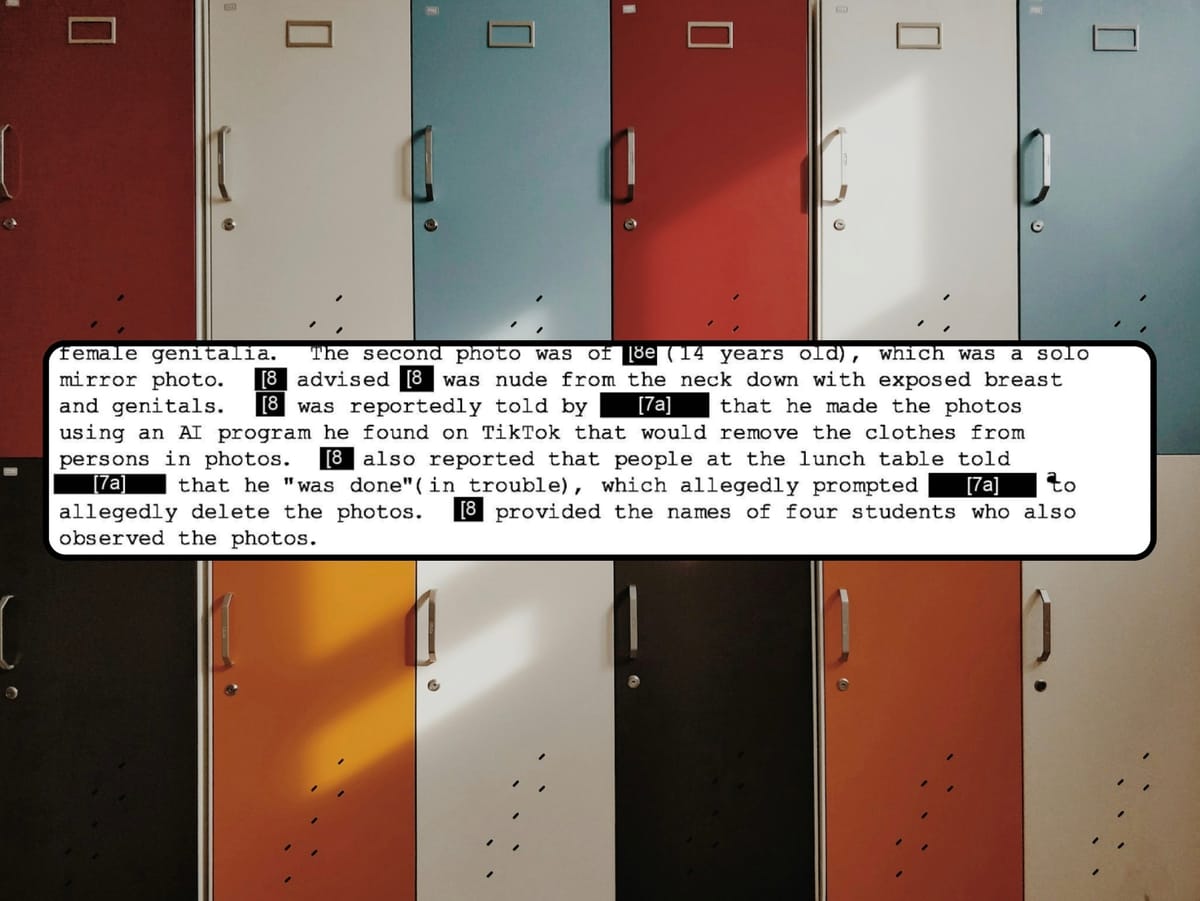

Medium read: HS deepfake nightmare

"Nudify", or "undress", apps and high school are not a good mix. Free subscription required to read the whole thing.

‘What Was She Supposed to Report?:’ Police Report Shows How a High School Deepfake Nightmare Unfolded

An in-depth police report obtained by 404 Media shows how a school, and then the police, investigated a wave of AI-powered “nudify” apps in a high school.

Two AI and Culture reads

Medium read: Why the NYT might win

The NYT is suing OpenAI for copyright infringement, over stuff like this:

This article, co-authored by my friend James Grimmelmann, explains succinctly and clearly why they might win. Must-read if you're an author.

Why The New York Times might win its copyright lawsuit against OpenAI | Ars Technica

The AI community needs to take copyright lawsuits seriously.

Longread: Will Sora make my studio obsolete?

Actor and producer Tyler Perry puts studio expansion plans on hold just in case AI makes them obsolete:

Over the past four years, Tyler Perry had been planning an $800 million expansion of his studio in Atlanta, which would have added 12 soundstages to the 330-acre property. Now, however, those ambitions are on hold — thanks to the rapid developments he’s seeing in the realm of artificial intelligence, including OpenAI’s text-to-video model Sora, which debuted Feb. 15 and stunned observers with its cinematic video outputs....

In an interview between shoots Thursday, Perry explained his concerns about the technology’s impact on labor and why he wants the industry to come together to tackle AI: “There’s got to be some sort of regulations in order to protect us. If not, I just don’t see how we survive.”
