AI Week Dec 18th: Deepfaked news anchors, Tesla recall, chatbot fun, and more

Hi! I'm glad you could join me for this week's recap of interesting and fun AI news.

If this is your first time reading this newsletter, welcome! I hope you'll stick around. If it's your second, or third, or fourth time, thanks for sticking with me. As promised, this week's newsletter is shorter.

- Deepfaking news anchors

- Fun with chatbots

- Tesla recall, plus more bad news from the Muskiverse

- Followup: The EU's AI act, Apple's MLX, Google Gemini, AI home spies

- Longread: The AI Trust Crisis

- Where to learn more about AI

Bonus: Add some AI into your spreadsheets, because why not?

AI-generated news anchors

Startup Channel1 dropped a twenty-minute "showcase" news episode on Twitter this week. The big deal? All of the news anchors were AI-generated avatars.

Ceci n'est pas un news anchor


To be fair, these news-avatars were based on real people who were paid for their likenesses. AI was used to generate video of these models reading the headlines: they're basically deepfakes of actors reading the news. (And they're very convincing, except that they don't move their bodies enough. Some of them look like they're being invisibly held by the shoulders.)

Channel1's demo video also showed off a couple of other things generative AI could do for (or to) the news:

- You know that annoying thing where people in other countries speak languages that are, like, not English? Newswatchers don't need to be exposed to that anymore, since generative AI can deepfake a translation onto footage of non-English speakers.

- Stories that didn't get caught on film can be reenacted by AI, with a little "AI generated image" badge in the corner (which I'm sure viewers will never overlook).

Channel1 is careful to specify that all their news stories are real, either written by actual journalists or AI-generated from "a trusted primary source", and to badge all their deepfakes as AI-generated. But imagine what this tech can do in less scrupulous hands. Won't it be fun when ONN starts using this tech?

See the highest quality AI footage in the world.

🤯 - Our generated anchors deliver stories that are informative, heartfelt and entertaining.

Watch the showcase episode of our upcoming news network now. pic.twitter.com/61TaG6Kix3

— Channel 1 (@channel1_ai) December 12, 2023

AI-generated voices in fake news

Russian propagandists are already using AI-generated voices to create propaganda videos at scale. DFRLab and BBC uncovered a Russian influence operation that targeted then-Ukrainian defense minister Oleksii Reznikov, accusing him of corruption. The use of AI-generated voices let the propagandists create tens of thousands of TikTok accounts, forcing moderators to play a losing game of whack-a-mole and racking up millions of views for videos packed with "false and misleading" claims.

Massive Russian influence operation targeted former Ukrainian defense minister on TikTok - DFRLab

Joint DFRLab-BBC Verify investigation exposes the largest information operation ever uncovered on popular social media platform TikTok.

Speaking of fake newscasters...

... Sports Illustrated, which was exposed at the end of November as using fake AI-generated sportswriters, has seen its publisher, The Arena Group, fire its CEO in a decision that naturally has nothing at all to do with last month's scandal.

Fun with (badly-deployed) chatbots

The ChatGPT implementation of the week was going to be tax help, because H&R Block just released a new chatbot that answers tax questions. But it's been upstaged by another application: car sales.

Car dealerships have been integrating ChatGPT-powered chatbots into their websites, and this week, Chevrolet of Watsonville's chatbot has been showing off all the ways that can go wrong.

On Twitter, Colin Fraser had fun getting the chatbot to promise him a ridiculously sweet deal:

I don't really read all that much into it, LLM output is just meaningless random text unanchored to any structure. The chat api is a desperate attempt to impose some structure but it doesn't really work if the user doesn't play along.

— Colin Fraser | @colin-fraser.net on bsky (@colin_fraser) December 17, 2023

(By the way, to read a thread on Twitter without logging in, swap out "twitter.com" in the URL for "nitter.net". You're welcome.)
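If you do this a lot, the swap is easy to script. Here's a minimal Python sketch (my own, not an official tool) that replaces the host and leaves the rest of the URL alone. It assumes the Nitter instance you point it at is actually up, and the status ID in the example is a made-up placeholder:

```python
from urllib.parse import urlsplit, urlunsplit

# Hosts to rewrite; anything else is returned unchanged.
TWITTER_HOSTS = {"twitter.com", "www.twitter.com", "mobile.twitter.com"}


def to_nitter(url: str, mirror: str = "nitter.net") -> str:
    """Swap a twitter.com host for a Nitter mirror, keeping path and query intact."""
    parts = urlsplit(url)
    if parts.netloc.lower() in TWITTER_HOSTS:
        parts = parts._replace(netloc=mirror)
    return urlunsplit(parts)


# Hypothetical status ID, for illustration only.
print(to_nitter("https://twitter.com/colin_fraser/status/1234567890"))
# https://nitter.net/colin_fraser/status/1234567890
```

The `mirror` parameter is there because individual Nitter instances come and go; swap in whichever one works for you.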

Over on Mastodon, Chris White got it to write him some code:

https://stoney.monster/@stoneymonster/111592567052438463


And AutoEvolution reports that Chevrolet of Watsonville's chatbot tried to sell Roman Müller a Tesla.

I can't find the chatbot on https://www.chevroletofwatsonville.com/... maybe they took it down when the Internet started running up their OpenAI bill.

ChatGPT for tax help: what could go wrong?

H&R Block has trained its chatbot on its library of tax information. But taxes are very much an “if-then” affair that follows flowchart logic, and ChatGPT is not only bad at deductive (if-then) reasoning but also prone to being led astray... like the poor, abused chatbot at Chevrolet of Watsonville. Not to mention the whole "hallucination" issue, where generative AI confidently says things that aren't true, which is the last thing I want from tax help.

In fact, if you zoom in on the screenshot in H&R Block's press release, you can see the fine print: "AI Tax Assist is a digital helper that's still learning, so please review all responses." Caveat emptor.

Is ChatGPT getting lazier?

Speaking of ChatGPT, paying users have been complaining that its answers are getting worse--refusing to do things like create a list of calendar dates, or write more than a couple of lines of code.

GPT-4 has become so @#$%@ lazy, it won't even output more than a handful of code lines now:

Fix. This. pic.twitter.com/RHBgEwIVnW

— Benjamin De Kraker (@BenjaminDEKR) November 28, 2023

(I love ChatGPT's code comments. "Rest of the function goes here"... what developer hasn't slapped something like that in at the end of a much-too-long day?)

One (probably unrelated) thing ChatGPT will no longer do is write out a googol for you. I reported a couple of weeks ago that if you got ChatGPT 3.5 to write out a googol for you, but write the zeros as "one", it would spew "one"s until it started producing random text, some of which was its training data. ChatGPT is still happy to start spewing "one"s, but now it stops after about 100 with an error:

(It's not clear to me how this violates the content policy or terms of use. I asked ChatGPT, but it didn't know either.)

And is ChatGPT's maker OpenAI still actually separate from Microsoft?

After last month's messy politics at ChatGPT maker OpenAI, which saw CEO Sam Altman get fired, publicly join Microsoft, and then be reinstated as OpenAI's CEO, Microsoft gained a "non-voting observer" seat on OpenAI's board. This caught the attention of the UK's Competition and Markets Authority, and now the US FTC is reportedly considering an investigation.

Elon Musk's no-good, very bad week

Tesla recall: Autopilot really is that bad

Last week, the Washington Post ran a story linking Tesla's Autopilot to a number of fatal crashes. In each case, Autopilot was enabled when it shouldn't have been--in a rural area, for example--and the driver wasn't paying attention.

The problem with Autopilot is that while Elon Musk and Tesla have been promoting it since about 2016 as something that will drive your car for you, the fine print actually says that it requires constant supervision. In other words, you have to pay as much attention as if you were driving unassisted, while not actually driving. As this Rolling Stone article points out, that's something that humans are really bad at.

Good news: there's a recall

This week, the US NHTSA finally announced a recall of the Autosteer component. The recall affects some two million Teslas. Good news, right?

Bad news: it's just a software patch, and that's probably not good enough

The bad news is that the "recall" is just a software patch, which Tesla will push wirelessly. The worse news is that the patch probably won't fix all the issues that make Autosteer dangerous.

The software update will make it harder to turn on Autosteer in the wrong place, and lock the driver out of Autosteer if the car notices they're not paying attention three times in a row.
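To make the "three strikes" rule concrete, here's a toy model in Python. It's purely hypothetical and is not Tesla's code: I'm assuming "in a row" means an attentive check resets the count, and I'm not modelling how long the lockout lasts or how it gets lifted.

```python
from dataclasses import dataclass


@dataclass
class AutosteerGate:
    """Hypothetical 'three strikes' lockout; an illustration, not Tesla's code."""
    max_strikes: int = 3
    strikes: int = 0          # consecutive failed attention checks
    locked_out: bool = False

    def check_driver(self, attentive: bool) -> None:
        """Record one driver-monitoring result."""
        if self.locked_out:
            return
        if attentive:
            self.strikes = 0  # assumption: an attentive check breaks the streak
        else:
            self.strikes += 1
            if self.strikes >= self.max_strikes:
                self.locked_out = True

    def can_engage(self) -> bool:
        return not self.locked_out


gate = AutosteerGate()
for attentive in [False, False, True, False, False, False]:
    gate.check_driver(attentive)
print(gate.can_engage())  # False: the last three checks failed in a row
```

Note what this model can't fix: if the camera can't see the driver in low light, the `attentive` input is garbage, and no amount of strike-counting helps.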

Because Autopilot requires supervision, the car is supposed to monitor the driver to make sure they're paying attention to the road. Until about 2021, Teslas did this mainly by checking for torque on the steering wheel, a test that Consumer Reports famously beat by hanging a weighted chain from the steering wheel. You could literally get your Tesla to drive itself with no one in the driver's seat. Tesla then installed cameras pointing at the driver's seat... but the cameras don't work in low light.

The Verge's article highlights several problems with a software-only recall:

- The driver-monitoring cameras don't work in low light.

- Unlike competitors, Tesla doesn't have forward-facing radar to detect obstacles in front of the car: only cameras and image processing software, which can make mistakes.

- There's no geofencing: Tesla could GPS-map the roads where Autosteer has been tested and is safe to use and geofence the feature, but they haven't, so it can still be turned on in settings where Autosteer isn't intended to be used, like residential areas.

(The Model S manual that I linked in the last point above has a stern warning to only use Autosteer "on controlled-access highways" and not "in areas where bicyclists or pedestrians may be present"--and then, further down the same page, there's a shamelessly cavalier acknowledgement that Tesla drivers will do it anyway: "if you choose to use Autosteer on residential roads, a road without a center divider, or a road where access is not limited, Autosteer may limit the maximum allowed cruising speed [to] the speed limit of the road plus 5 mph (10 km/h).")
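Geofencing itself isn't exotic. As a rough illustration (a toy sketch, not how Tesla or anyone else actually does it), you could store approved stretches of road as polygons and only allow Autosteer when the car's position falls inside one. This uses the classic ray-casting point-in-polygon test on flat x/y coordinates; real map data would need proper geographic coordinates and road matching, and the zone below is made up:

```python
Point = tuple[float, float]


def point_in_polygon(p: Point, polygon: list[Point]) -> bool:
    """Ray casting: count how many polygon edges a ray going right from p crosses."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through p can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside  # each crossing flips inside/outside
    return inside


def autosteer_allowed(position: Point, approved_zones: list[list[Point]]) -> bool:
    """Allow the feature only inside at least one approved zone (hypothetical)."""
    return any(point_in_polygon(position, zone) for zone in approved_zones)


# Made-up zone: a long, thin rectangle standing in for a stretch of highway.
highway = [(0.0, 0.0), (1000.0, 0.0), (1000.0, 20.0), (0.0, 20.0)]
print(autosteer_allowed((500.0, 10.0), [highway]))  # True
print(autosteer_allowed((500.0, 50.0), [highway]))  # False
```

The hard part in practice isn't the geometry; it's building and maintaining the map of roads where the feature has actually been validated.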

Elon Musk's Big Lie About Tesla Is Finally Exposed

More than 2 million of the cars are being recalled — because Tesla’s “self-driving” systems have always been anything but

More bad news from the Muskiverse

In other Elon Musk news this past week:

- Starlink failed to win a fat subsidy from the FCC to expand rural broadband

- Twitter's ad revenue fell by $1.5 billion this year (and their spin is very funny: But that doesn't mean anything, because we're not Twitter, we're X!)

- Sources told Ars Technica this week that "Elon Musk told bankers they wouldn’t lose any money on Twitter purchase"... hahahahaha no

- The US tax credit for Tesla sedans is going away, although Teslas aren't the only electric cars to lose it

And, in case you missed it, Swedish Tesla employees are still on strike, and unions in Denmark and Norway recently joined sympathy boycotts.

Oh, you made a chatbot? Even my Furby can do better than that

Musk's former partner Grimes launched a trio of AI-powered Furbys with tech startup Curio--and the star of the toy trio shares a name with X's hostile chatbot Grok. And the plushie was trademarked first. Zing!

Image from Curio's website


"Absurdly by the time we realized the Grok team was also using this name it was too late for either AI to change names, so there are two AIs named Grok now," wrote Grimes.

https://exclaim.ca/music/article/grimes_launches_ai_childrens_toy_with_the_same_name_as_elon_musks_anti-woke_chatbot

Don't worry, there's more

Tune in next week for more bad news for Musk, I guess: while I was writing this up, the EU launched an investigation into X under Europe's Digital Services Act.

But hey look it's A Philanthropy!

Musk wants to open a university in Texas. 1000 Internet Points to BoingBoing for calling the planned university, which will for some reason start out as a K-12 school, "Musk-a-tonic University."

Followup

A little follow-up on some of last week's stories:

Why the EU's AI act was so hard to pass


Why the EU’s landmark AI Act was so hard to pass - The Verge

Lawmakers crammed overnight like students to reach a deal.

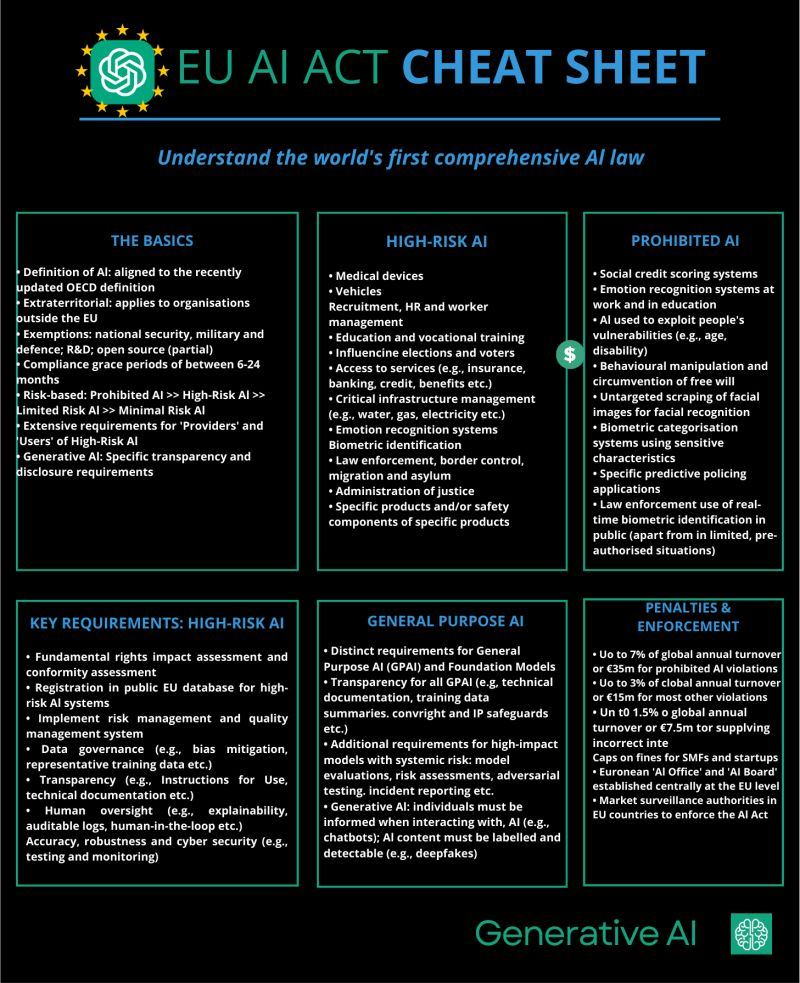

A cheat sheet on the AI Act

I was never going to read the whole thing, so I appreciate this cheat sheet from LinkedIn page Generative AI:

Apple's MLX

A reader pointed out that last week's newsletter really underestimated the potential of MLX, the framework that Apple released last week to enable machine learning on Apple hardware. I seem to recall scoffing at the idea that puny MacBook chips could compete with the power of Nvidia graphics cards... and I was wrong. I've been running an old Intel MacBook for years and I haven't kept up with everything that Apple's M1 through M3 chips can do.

Here's a more in-depth review of MLX if you're interested: https://www.computerworld.com/article/3711408/apple-launches-mlx-machine-learning-framework-for-apple-silicon.html

Google Gemini

Google launched its generative AI suite, Gemini, last week. This week, text-generation model Gemini Pro is available for developers to use for free, with a relatively generous cap of 60 requests per minute. If you're interested, check it out here: https://ai.google.dev/models/gemini
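If you do try it, 60 requests per minute is easy to hit in a loop. One common approach is a client-side sliding-window limiter. Here's a minimal Python sketch that works with any client library; it doesn't call Google's SDK at all, and the numbers just mirror the cap mentioned above:

```python
import time
from collections import deque
from typing import Callable, Deque


class RateLimiter:
    """Sliding-window limiter: allow at most `max_calls` calls per `period` seconds."""

    def __init__(self, max_calls: int = 60, period: float = 60.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.max_calls = max_calls
        self.period = period
        self.clock = clock
        self.sleep = sleep
        self.calls: Deque[float] = deque()  # timestamps of recent calls, oldest first

    def acquire(self) -> float:
        """Block until another call is allowed; return the seconds spent waiting."""
        waited = 0.0
        while True:
            now = self.clock()
            # Forget calls that have slid out of the window.
            while self.calls and now - self.calls[0] >= self.period:
                self.calls.popleft()
            if len(self.calls) < self.max_calls:
                self.calls.append(now)
                return waited
            # Window is full: wait until the oldest call expires, then re-check.
            delay = self.period - (now - self.calls[0])
            self.sleep(delay)
            waited += delay


limiter = RateLimiter(max_calls=60, period=60.0)
```

Call `limiter.acquire()` right before each request. The `clock` and `sleep` parameters are only there so the limiter can be tested without actually waiting a minute.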

Also, the Google Pixel 9 might have a new, Gemini-powered AI assistant, super-cutely named Pixie.

AI Spies at Home

Last week's longread (well, one of them) was Bruce Schneier's article about the ways that AI will enable states--or other actors--to compile the masses of surveillance data that the modern Internet generates into intelligence. (If you haven't read it, go read it now. It's worth your time.) This week, a marketing company sparked panic by essentially claiming that they compile everything your phone hears in your presence into marketing intelligence. (Link goes to an archived version of their page.)

Creepy, right? This Ars Technica article breaks it down and concludes the marketing company was probably exaggerating--by which I mean: they do create marketing intelligence out of the information they get, but they probably don't have access to the conversations you have in the vicinity of your phone, TV, Alexa, etc.

But the panic that their exaggerated claims caused speaks to a bigger issue, which is...

This week's longread: the AI Trust crisis

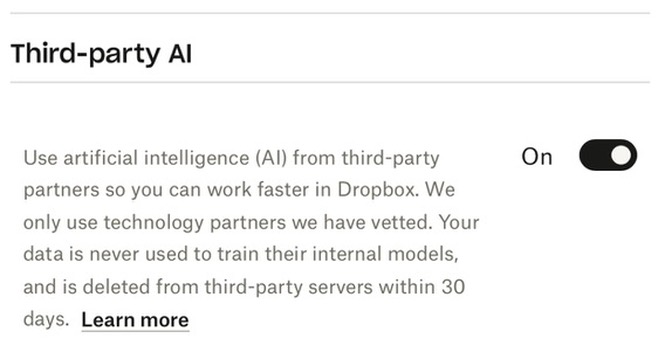

Is Dropbox using your files to train its AI? It's a reasonable question, since Meta and X both brag about using your content to train their AIs. But Dropbox typically has much more of its users' information, much of which wasn't intended to be public. So there was some panic this week when a bunch of people, including AWS CTO Werner Vogels, tweeted and retweeted that Dropbox was automatically opting users into having their data used for AI training.

If you are using @dropbox and do not want 3rd party AI technology partners to have access to your dropbox files, flip this switch (on by default, or did I agree to this somewhere?) (web->account->settings-3rd party AI) /cc @EU_EDPS pic.twitter.com/rnKM332KSh

— Werner Vogels (@Werner) December 13, 2023

But... it's not true. Dropbox doesn't use your files to train third-party AI, per its CEO. The confusion came from a badly labelled option in the settings.

However, Dropbox does send your data to OpenAI if you have that option set to "yes", so that you can chat with its new, ChatGPT-powered search interface to find stuff in your own files. It's not great, though, that the option seems to default to "yes". Oh, sure, OpenAI supposedly doesn't use the data from Dropbox to refine its models. _Why is that not reassuring?_

Developer Simon Willison coined the phrase "The AI Trust Crisis" for this problem: "It’s increasing clear to me like people simply don’t believe OpenAI when they’re told that data won’t be used for training."

The AI trust crisis

Dropbox added some new AI features. In the past couple of days these have attracted a firestorm of criticism. Benj Edwards rounds it up in Dropbox spooks users with new …

TL;DR: To train ChatGPT and DALL-E, OpenAI has sucked down a ton of data that it arguably had no right to, including pirated, copyrighted ebooks and copyrighted art. When OpenAI says they're not going to use your data for training, people don't believe it. That's a big problem for OpenAI.

Where to go to learn more about AI

Last week a reader asked me for some ways to learn more about AI. I thought I'd list a couple of my favourite sources for non-programmers here. (Also fine for programmers who want to learn about AI, not how to code AI.)

Websites

My favourite AI & ML coverage for a tech-savvy but non-expert audience (like me!) is in Ars Technica, The Verge and The Register.

Courses

Coursera has some MOOCs that provide an intro to AI for non-programmers. I haven't taken these courses, but they look solid:

- Some of my former coworkers in tech have been taking Coursera courses taught by Coursera co-founder Andrew Ng. A good starting point is his AI for Everyone course.

- IBM has an Introduction to Artificial Intelligence course on Coursera. The nice thing about this course is that if you enjoy it, it's the first in a four-course track of AI Foundations for Everyone.

- If you're looking for a brief introduction to the key concepts behind generative AI tools like ChatGPT and Midjourney, this 40-minute video by Google's Dr. Gwendolyn Stripling is pretty good. (I read the transcript; if you're not interested in programming, stop watching when she gets to the code file conversion problem. Everything before that is general-interest.)

Introduction to Generative AI - Introduction to Generative AI | Coursera

Video created by Google Cloud for the course "Introduction to Generative AI ". This is an introductory level microlearning course aimed at explaining what Generative AI is, how it is used, and how it differs from traditional machine learning ...

Bonus: How to wedge Generative AI into your spreadsheets (but not why)

This week, Anthropic released Claude for Google Sheets, a Google Workspace add-on that lets you call Claude from a Google spreadsheet. It's free to install, but you'll need a Claude API key to use it. (It's not available in Canada, unfortunately.)

That's not the only way to get some AI text generation into your spreadsheets, either. Here's how to use ChatGPT in Google Sheets.

What I'd like to know is why. Spreadsheets make the business world go around, but I'm having a hard time thinking of reasons I'd want to add a chatbot to one. If you can think up a use case, please let me know!

https://workspace.google.com/marketplace/app/claude_for_sheets/909417792257

AI Week is taking next Monday off. See you in the new year!