AI week Dec 11: Whalesong, spying, deepfakes, and hot ocoate in a sweater

Happy Winter! We got our first real snow here in Ottawa this week. Time to cuddle up with some hot ocoate in a sweater:

Welcome to this week's AI week! If this is your first time joining me, thanks. I'd love to hear what you think -- email me at aiweek@rdbms-insight.com or reply to this email.

In this week's interesting AI news:

- I'm dreaming of a Dall-E Christmas

- AI application of the week: ML helps decode whale speech

- Schneier: AI to usher in new era of spying

- When anything could be a deepfake, everything is

- In brief: EU's AI Act, SAG-AFTRA, Q* and more

- Mega-corp AI news: Assistants galore!

- Longreads: Microsoft & OpenAI; ChatGPT's 2023

Before we get started, I have to admit that I miiiight have gotten a little carried away this week. There was just so much interesting stuff going on!

So this week's newsletter is longer than I planned. If it's too much, feel free to let me know. Next week's newsletter will be shorter--I promise.

AI Weirdness's advent calendar is out

The "hot ocoate" above is from Janelle Shane's AI Weirdness advent calendar. I've only been writing this newsletter for four weeks, but it should already be obvious that I love Janelle Shane's AI Weirdness.

We celebrate "secular Christmas" in our house, by which I mean that we have a tree and presents and feasts and lights, but don't actually practice any of the religions that have been associated with these traditions over the centuries. (5 or 6, for our household's traditions: Germanic paganism - midwinter Yule celebration, Roman Saturnalia - greenery and gifts, Nordic paganism - stockings, Ukrainian paganism - embroidered cloths, carols and kutia, Ukrainian Catholicism - 12-course Christmas Eve feast, Western Christianity - more carols, Coca-Cola - Santa Claus.) One of these traditions is an advent calendar for the kid, and what could be better than a GPT-4/DALL-E3 advent calendar? Seriously, check it out, it's delightful and I'm not going to spoil it any more than I already have. (If you're not familiar with the tradition, it's a 24-day calendar hiding a little surprise for each day from December 1-24.)

https://www.aiweirdness.com/ai-weirdness-advent-calendar-2023/

AI application of the week: Decoding whale speech

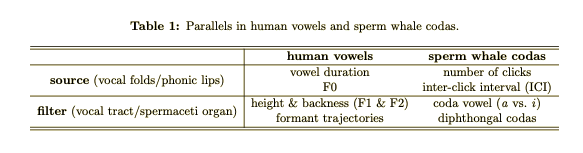

Yep, I called it "speech," not "song." That's because researchers at UC Berkeley used machine learning to find what seems like the equivalent of speech sounds in sperm whale songs (preprint).

Scientists Have Reported a Breakthrough In Understanding Whale Language

Researchers have identified new elements of whale vocalizations that they propose are analogous to human speech, including vowels and pitch.

To summarize their process: First they trained a model to take audio recordings of human speech and pick individual speech sounds out of it (if you think about it, this is one of those very hard tasks that feels like it's effortless). They then turned that model on audio recordings of sperm whale songs to pick out a couple of properties of whalesong that might be analogous to vowels in human speech:

This is hands-down one of the coolest applications of AI that I've seen. Translating between two humans speaking different languages is cool, but training a neural net to parse whale speech isn't just imitating something people can do (like chatbots), or doing something people can do but faster (like drug discovery) -- it's using ML to figure out stuff humans have really not been able to figure out, at all, despite decades of effort.

Speaking of drug discovery...

There were a couple of stories this week about companies using generative AI to find new antibody drugs.

- There are a ton of antibodies the body could make, and some of them might be useful for sticking to cancer. Startup Absci is training models to recognize characteristics of antibodies that might be useful.

- A pharma company, Boehringer Ingelheim (BI, because I apparently can't type their name the same way twice), is teaming up with IBM to train IBM's models on BI's proprietary data in the hope of discovering new, useful antibodies.

AI has been used to great effect in drug discovery. In May, a team used machine learning to suggest potential antibiotics against the multi-drug-resistant superbug Acinetobacter baumannii, resulting in the development of the experimental antibiotic abaucin.

https://www.nature.com/articles/s41589-023-01349-8

ML has been used in pharmacological research for more than just drug discovery. If you're interested in a more in-depth overview, this open-access paper seems to be a decent starting place:

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10539991/

Sidebar: AI in medicine, the less-good side

Machine learning is already contributing to pharmacological research, and is showing promise in other areas like cancer risk evaluation, but not all of its healthcare applications have been positive. A few weeks ago, health insurance firm UnitedHealthcare was revealed to be using an AI model to deny 90% of certain claims. (I was once insured by UHC, and I'm sorry to say this doesn't surprise me.) Cigna was sued for the same thing in July, although they claim that no AI was involved, only a boring old if-then algorithm. (Even if so, that's still a problem, because judgements of medical necessity are supposed to be made by a doctor.)

Must-read: AI to usher in new era of spying

Bruce Schneier has a longread up on Slate. TL;DR (but you should read it!): Surveillance and spying are different things. Surveillance is tracking someone and recording where they've been, what they've been doing, and what they've been saying. Spycraft, however, involves compiling that big heap of surveillance data into intelligence.

Our modern technological infrastructure is pretty good at mass surveillance (those online ads that follow you everywhere are the tip of the iceberg), but what we haven't had until now is a way to compile that massive volume of surveillance information into intelligence. Well, thanks to AI, now we do.

Spying has always been limited by the need for human labor. A.I. is going to change that.

The internet enabled mass surveillance. A.I. will enable mass spying.

When anything could be a deepfake, everything is

The Lincoln Project, a group of Republicans against Trump, recently aired a commercial with an embarrassing video of Trump. They've been doing this kind of thing for years, but this time, Trump hit on an easy way to dismiss it: Ignore that video, it's a deepfake.

On his social network Truth Social, he posted:

The perverts and losers at the failed and once disbanded Lincoln Project, and others, are using A.I.(Artificial Intelligence) in their Fake television commercials in order to make me look as bad and pathetic as Crooked Joe Biden, not an easy thing to do...

I mean, we've all seen many of these clips before; this is not a deepfake. But it's a just-believable-enough excuse, because...

Deepfakes are getting really easy to make

It's so easy to create deepfakes that the only way most of us can be 100% sure that a video is authentic is if we shot it ourselves. India's been waking up to this thanks to a series of deepfakes featuring Bollywood stars--most recently, a TikTok that supposedly showed Bollywood star Kajol changing her outfit, but was actually an Instagram influencer with Kajol's face edited on.

It was exposed as a deepfake due to intense scrutiny:

- A single frame shows one eye looking up and the other looking down, which is very hard for healthy humans to do.

- This raised fact-checkers' suspicions, and reverse image search on keyframes let them track down the original video.

But this video only got that scrutiny because it featured a Bollywood star. (And if the Lincoln Project really had used a deepfake, it would have gotten similar scrutiny and debunking. So. Not a fake.) Not to induce paranoia, but if someone deepfaked a video of you or me, who's going to give it that deep scrutiny?

Fact Check: Viral Video Claiming To Show Kajol Changing Clothes On Camera Is A Deepfake

A video has been circulating on social media claiming to show Bollywood actor Kajol changing her clothes in front of the camera.

In brief

Speaking of lawsuits...

... Getty's lawsuit against Stability AI (the company behind image-generation AI Stable Diffusion) will go to trial in the UK. Getty is suing them for allegedly slurping down Getty's images without permission or payment.

This is just one of a host of lawsuits filed in the UK and US against the makers of generative AI text-to-image tools like Midjourney and generative AI chatbots like ChatGPT, all of which were trained on huge amounts of human-produced (and, in many cases, human-copyrighted) data.

EU's AI Act comes one step closer to becoming law

EU reaches provisional deal on AI Act, paving way for law - The Verge

It still likely won’t come into effect until 2025 at the earliest.

Why everyone was really excited when an AI could do math... and why it's called Q*

A couple of weeks ago, I mentioned that there was a lot of excitement around the news that Ilya Sutskever's team at OpenAI created an experimental model, known as Q*, that reportedly could solve basic math problems accurately. For a little context on why this is exciting, here's a screenshot from a conversation in which I was trying to help Claude (Anthropic's LLM chatbot) to write out a googol, ie, one followed by 100 zeroes:

In case it's not obvious at a glance, that's way more than 100 zeroes. Claude simply can't count. (I still haven't found a prompt that will get it to successfully write a googol.) In contrast, Q* reportedly can solve elementary-school math problems.
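For contrast, this kind of counting is trivial for ordinary, non-AI code. Here's a minimal Python sketch (an illustration only, not part of the Claude conversation; the variable names are made up for this example) that writes out a googol and checks the zeroes instead of eyeballing them:

```python
# A googol is 10**100: a one followed by exactly 100 zeroes.
# Python integers have arbitrary precision, so this is exact.
googol = 10 ** 100
digits = str(googol)

# Count the zeroes rather than trusting anyone's eyes.
zero_count = digits.count("0")

print(digits)
print(f"Leading digit: {digits[0]}, zeroes: {zero_count}, total digits: {len(digits)}")
```

The point isn't that Python is clever; it's that exact counting is easy for a program that actually counts, and hard for a model that predicts likely-looking text.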

If you'd like to read more about Q*, Ars Technica has a really solid article.

Pullquote:

So with all this background, we can make an educated guess about what Q* is: an effort to combine large language models with AlphaGo-style [tree] search—and ideally to train this hybrid model with reinforcement learning.

The real research behind the wild rumors about OpenAI’s Q* project | Ars Technica

OpenAI hasn't said what Q* is, but it has revealed plenty of clues.

If you don't have time for the full article, read its conclusion:

I suspect that building a truly general reasoning engine will require a more fundamental architectural innovation. What’s needed is a way for language models to learn new abstractions that go beyond their training data and have these evolving abstractions influence the model’s choices as it explores the space of possible solutions. We know this is possible because the human brain does it. But it might be a while before OpenAI, DeepMind, or anyone else figures out how to do it in silicon.

AAP responds to Big Tech's arguments on AI

The Association of American Publishers (AAP) has published their response to the US Copyright Office's call for comments on AI. The subhead of their press release gives the gist of it: "There Should be No Free Pass for Multi-billion Dollar AI Companies Engaged in Mass Copying of Authorship Without Consent."

(Some background for the non-writers reading this newsletter: Writers and publishers are extremely unhappy that large language models have been trained on their writing, including entire copyrighted books, without payment or consent. If you read last week's newsletter, you might remember the bit about how chunks of whatever the LLM is trained on get stored in the model. It's hard for me to see this as anything but blatant theft of copyrighted writing.)

Publishers Lunch has a good rundown of the AAP response; it's paywalled, but here are a couple of pull quotes:

- re "memorization":

As for the too cute by half contention that “memorization,” in which AI systems have been found capable of recapitulating copyrighted works word for word, is a bug not a feature and will be fixed, the AAP says a “trust us” promise from developers is not nearly good enough.

- re compensation:

The companies that benefit from the commercialization of this technology should be required not only to compensate rights holders for their past ingestion of copyrighted works to train Gen AI systems but also for their ongoing and future use of protected works to train new Gen AI systems or fine-tune their existing products.

Actors of SAG-AFTRA vote to ratify union contract that regulates uses of AI

The contract sets limits on studios' and producers' use of AI. But not everyone feels that regulation goes far enough:

“We were advocating for protecting human being jobs in this negotiation, and we left all these doors open and all these people vulnerable,” board member Shaan Sharma, who voted against the deal, said of its AI language.

SAG-AFTRA Deal: Actors Push Past AI Concerns to Get Back to Work – The Hollywood Reporter

SAG-AFTRA’s members give a vote of confidence to leadership in approving its deal with studios.

Some of this week's mega-corporate AI news

- Meta: image generation

- Google: Gemini

- Quick list of recently-released AI assistants

- Apple: FOSS framework

- AI Alliance

- Musk: xAI seeks $$$

1. Meta launches standalone image generator

Meta launched its image generator as a standalone this week at imagine.meta.com. It uses Meta's own image-generating model, Emu, which was already integrated into the "Meta AI" feature of their messaging apps (more on this below). Instead of "@MetaAI /imagine a hippo eating spaghetti", you can now go to a standalone website to generate a hippo eating spaghetti. This is for US users only.

That hippo ain't cheap...

By the way, generating one image can take almost as much energy as it takes to charge a smartphone.

And, since nobody stops at just one hippo--let's be honest, the first one always has three eyes, or is in a bathtub, or something else you never thought to exclude from your prompt--an October study estimated that AI processing could eventually consume "as much energy as Ireland".

...and it was trained on your photos

So glad I put my child’s precious baby photos on Facebook!

Meta’s new AI image generator was trained on 1.1 billion Instagram and Facebook photos | Ars Technica

"Imagine with Meta AI" turns prompts into images, trained using public Facebook data.

ICYMI, Meta has an AI assistant

Meta also has an AI Assistant, the very-creatively-named "Meta AI," although it's currently only available to US users:

We are rolling out AIs in the US only for now. To interact with Meta AI, start a new message and select “Create an AI chat” on our messaging platforms, or type “@MetaAI” in a group chat followed by what you’d like the assistant to help with. You can also say “Hey Meta” while wearing your Ray-Ban Meta smart glasses.

Yes, "our messaging platforms" means you can chat with @MetaAI in WhatsApp, Instagram or Facebook, although you might still need to sign up for a beta-tester program--it's not clear. I'm not in the US! If you are, give @MetaAI a shout for me and let me know about your experience. You can also chat with 28 fake celebrities--no, I'm not making this up; someone at Meta decided that the best and most ethical thing to do with their new chatbot tech was to make it pretend to be Tom Brady playing "Bru, confident sports debater" on FB and IG.

2. Google launches its own AI assistant, Gemini

Google launched Gemini with a YouTube video that's pretty cool:

All I have to say about this video is: Don't get too excited, because it's kind of a fake. The reality is more like this:

(Image is from the linked Bloomberg article.)

Having said that, a big difference between Google's Gemini and other public-facing AI offerings is that Gemini can work with text, images and videos, instead of being three separate assistants, each of which works with one. (It can't watch a video and tell you what it's seeing in realtime, though. That part of the demo is just deceptive.)

Google claims Gemini is beating OpenAI and others on various benchmarks, but not everyone agrees. (Ars Technica has an in-depth comparison, if you're curious.)

Google is already rolling out Gemini 1.0 in Google Bard (or so I read; Google Bard is not available in Canada, but if you live in the US, you can try it out), and if you have a Pixel 8 Pro it now runs a wee mini version called Gemini Nano. (Weirdly, the price of the Pixel 8 Pro on amazon.com dropped sharply right after this announcement. I don't know what to make of that.) Apparently Gemini will be coming soon to Search. Here's hoping it performs better than this:

Google's note-taking app rolls out to all US adults

"NotebookLM" can summarize sets of documents for you, create outlines and study guides, and more. On Friday, Google made it available to US users over 18.

This looks like it'll be incredibly useful, but also, easy to overuse: taking notes on something you read can help you learn the material, especially for tactile/kinesthetic learners.

When memory's not personal

Google also has a project in the works, "Project Ellmann" (https://www.cnbc.com/2023/12/08/google-weighing-project-ellmann-uses-gemini-ai-to-tell-life-stories.html), to use Gemini to analyze your Google Photos to figure out what you've been up to, so that it can tell you all about your own life if you ask it. "Ellmann Chat" combines the photo-analyzing capability of Apple Photos' "memories" with an LLM to generate gems like this one:

“You seem to enjoy Italian food. There are several photos of pasta dishes, as well as a photo of a pizza.”

The project's tagline for Ellmann Chat is “Imagine opening ChatGPT but it already knows everything about your life. What would you ask it?”

I might ask "How can I get you to stop?," but that's just me.

If Project Ellmann and NotebookLM remind you of Bruce Schneier's article, they should. Schneier's talking about using the same technological capability to compile intelligence. Everything that software like Project Ellmann can tell you about your own life, it can tell anyone who has access to your data.

3. AI "assistants" are sprouting up like mushrooms lately. Let's keep track!

- Google: Gemini AI

- Amazon: CodeWhisperer (coding assistant), Amazon Q (coming soon; no relation to Q*)

- Microsoft: Microsoft Copilot

- Facebook/Meta: Meta AI

4. Apple: FOSS framework

Apple's taking a different direction. This week it launched MLX, a free and open-source (FOSS) framework (ie, toolkit) that lets other developers build machine learning (ML, which often gets called "AI") applications on Apple's own chips, which it calls Apple Silicon. (Here's MLX on GitHub. It has APIs -- interfaces -- for both C++ and Python programmers.)

Most ML is done on GPUs (graphics processing units) or specialist AI chips from manufacturers like NVIDIA or AMD (btw, bonus news item: Meta, OpenAI and Microsoft all announced this week that they're moving away from NVIDIA chips to AMD's newest AI chips), or on custom-built processors; here's NVIDIA's set of tools for ML, for example. It's hard to see how Apple Silicon could compete with GPUs for ML, so maybe the intended appeal here is that you can train your own LLM at home on your laptop. I can see how this might fit with Apple's privacy-focused brand: I'd much rather have my laptop tell me I seem to like Italian food than have Google tell me.

5. IBM, Intel, and 48 others form AI Alliance

Its members include IBM, Meta (Facebook's parent company), Intel, Hugging Face, AMD, and more. Notably missing: NVIDIA, OpenAI, and Microsoft.

AI Alliance

A community of technology creators, developers and adopters collaborating to advance safe, responsible AI rooted in open innovation.

6. Elon Musk wants $1bn for xAI

xAI's only product is Grok, a snarky, less-capable version of ChatGPT. It's not available to the general public yet, and may only ever be available to X (Twitter) Premium subscribers, but here's Elon Musk celebrating its snarkiness:

Grok's not even been released and it already has a reputation for vulgarity. Regardless, Musk is seeking $1bn in funding.

By the way, X's (formerly Twitter's) CEO Linda Yaccarino made waves this week with a kinda-nonsensical tweet that users speculated was written by Grok:

Welcome to the world Grok — the ultimate ride or die.

— Linda Yaccarino (@lindayaX) December 7, 2023 (source tweet)

Companies like OpenAI and Anthropic have gone to great lengths to (not always successfully) keep their language models from being used to hurt others by installing rules called "guardrails". Elon Musk has taken the guardrails off Twitter since he acquired it - hate speech has gone up, etc. Is Musk's xAI going to have the same absence of guardrails as Twitter? (TBH, Grok's rudeness and vulgarity point to "yes".)

Three things:

- Elon Musk's personal fortune is over $200bn. Why he needs to raise money for anything is a mystery to me. I guess it would be too inconvenient to sell 1/200th of his Tesla stock? #justbillionairethings

- Speaking of Tesla, self-driving is another form of machine learning ("AI"), and Tesla's record there doesn't inspire confidence.

- Elon Musk's record on AI is all over the place. Here's a quick timeline:

Elon Musk's AI timeline

- 2015: Musk co-founded OpenAI (the ChatGPT company)

- Feb 2018: Musk left OpenAI, after his offer to become its CEO was rebuffed

- March 2023: Musk signed the letter calling for a six-month pause in developing AI

- July 2023: Musk changed his mind on the pause and founded xAI

- Nov 2023: While at the global AI safety summit at Bletchley Park, Musk described AI as a threat to humanity and mused that we may not be able to control it. Bonus: In a post-Bletchley meeting with Rishi Sunak (UK PM), Musk said that AI is coming for our jobs: “There will come a point where no job is needed.”

- Shortly after the Bletchley Park AI safety summit, Musk's xAI unveiled its foul-mouthed LLM-based chatbot, Grok

- Dec 2023: Musk is now seeking $1bn in funding for xAI.

These are either the actions of someone who's not really worried about AI being an "uncontrollable" threat but wants us to think he is, or of someone who's completely lost the plot. Or, I suppose, of someone a bit egotistical who thinks that no one else can contain the threat of AI, so he, a super-genius, had better get there first. If it's the last of these, good luck: he’s getting a very late start.

Longreads

My pick for must-read longread this week is Bruce Schneier's article in Slate, above, but two other interesting longreads crossed my path.

Microsoft & OpenAI: What happened there?

https://www.newyorker.com/magazine/2023/12/11/the-inside-story-of-microsofts-partnership-with-openai

A couple of weeks ago, the board of ChatGPT makers OpenAI fired its CEO Sam Altman, then hired another one, then rehired Sam Altman. (You can read a summary in my newsletter from that week.) This is the story of how that chaotic week looked from inside OpenAI's major funder, Microsoft.

The Inside Story of Microsoft’s Partnership with OpenAI | The New Yorker

The companies had honed a protocol for releasing artificial intelligence ambitiously but safely. Then OpenAI’s board exploded all their carefully laid plans.

One interesting aspect of that article was their brief profile of the motivations of Microsoft's CTO, Kevin Scott, for investing so heavily in OpenAI.

The discourse around A.I., [Scott] believed, had been strangely focussed on science-fiction scenarios—computers destroying humanity—and had largely ignored the technology’s potential to “level the playing field,” as Scott put it, for people who knew what they wanted computers to do but lacked the training to make it happen. He felt that A.I., with its ability to converse with users in plain language, could be a transformative, equalizing force—if it was built with enough caution and introduced with sufficient patience.

Remember Clippy? (It's OK if you don't. Clippy was awful.) Scott's thinking, according to this article, was that if Microsoft could introduce AI to the general public as a Clippy-like assistant, flaws and all, people would both get used to AI and become familiar with its non-infallibility.

Back to OpenAI -- while the New Yorker piece doesn't mention it, the Washington Post had a story about Altman's attempted manipulations of the board, which they characterize as psychologically abusive. It seems that when the board said Altman had lost their confidence, they were talking about the lies Altman told in his campaign to remove Helen Toner from the board.

ChatGPT's year in review

This week, The Atlantic is kicking off an eight-week series "in which The Atlantic’s leading thinkers on AI will help you understand the complexity and opportunities of this groundbreaking technology." You can read it as a newsletter--if you've been enjoying this newsletter, you'll probably enjoy theirs, too. The first installment is their reflections on ChatGPT's 2023:
