[AINews] X.ai Grok 3 and Mira Murati's Thinking Machines

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

GPUs are all you need.

AI News for 2/17/2025-2/18/2025. We checked 7 subreddits, 433 Twitters and 29 Discords (211 channels, and 6478 messages) for you. Estimated reading time saved (at 200wpm): 608 minutes. You can now tag @smol_ai for AINews discussions!

It is a rare day when one frontier lab makes its debut, much less two (loosely speaking). But that is almost certainly what happened today.

We would say that the full Grok 3 launch stream is worth watching at 2x:

Opinions on Grok 3 are mixed: lmarena, karpathy, and information-recycler threadbois are mostly very positive, whereas /r/OpenAI and other independent evals are more skeptical. Not everything is released either; Grok 3 isn't available in the API and, as of the time of writing, the demoed "Think" and "Big Brain" modes aren't live yet. On the whole, the evidence points to Grok 3 laying credible claim to being somewhere between o1 and o3, and this undeniable trajectory is why we award it the title story.

There is less "news you can use" in the second item, but Mira Murati's post-OpenAI plan is now finally public: she has assembled what is almost certainly going to be a serious frontier lab in Thinking Machines, recruiting notables from across the frontier labs, especially ChatGPT alumni:

There's not a lot of detail in the manifesto beyond a belief in publishing research, emphasis on collaborative and personalizable AI, multimodality, research and product co-design, and an empirical approach to safety and alignment. On paper, it looks like "Anthropic Redux".

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

Grok-3 Model Performance and Benchmarks

- Grok-3 outperforms other models: @omarsar0 reported that Grok-3 significantly outperforms models in its category like Gemini 2 Pro and GPT-4o, even with Grok-3 mini showing competitive performance. @iScienceLuvr stated that Grok-3 reasoning models are better than o3-mini-high, o1, and DeepSeek R1 in preliminary benchmarks. @lmarena_ai announced Grok-3 achieved #1 rank in the Chatbot Arena, becoming the first model to break a 1400 score. @arankomatsuzaki noted Grok 3 reasoning beta achieved 96 on AIME and 85 on GPQA, on par with full o3. @iScienceLuvr highlighted Grok 3's performance on AIME 2025. @scaling01 emphasized Grok-3’s impressive #1 ranking across all categories in benchmarks.

- Grok-3 mini's capabilities: @omarsar0 shared a result generated with Grok 3 mini, while @ibab said Grok 3 mini is amazing and will be released soon. @Teknium1 found in testing that Grok-3 mini is generally better than full Grok-3, suggesting it wasn't simply distilled and might be fully RL-trained.

- Grok-3's reasoning and coding abilities: @omarsar0 stated Grok-3 also has reasoning capabilities unlocked by RL, especially good in coding. @lmarena_ai pointed out Grok-3 surpassed top reasoning models like o1 and Gemini-thinking in coding. @omarsar0 highlighted Grok 3’s creative emergent capabilities, excelling in creative coding like generating games. @omarsar0 showcased Grok 3 Reasoning Beta performance on AIME 2025 demonstrating generalization capabilities beyond coding and math.

- Comparison to other models: @nrehiew_ suggested Grok 3 reasoning is inherently an ~o1 level model, implying a 9-month capability gap between OpenAI and xAI. @Teknium1 considered Grok-3 equivalent to o3-full with deep research, but at a fraction of the cost. @Yuchenj_UW stated Grok-3 is as good as o3-mini.

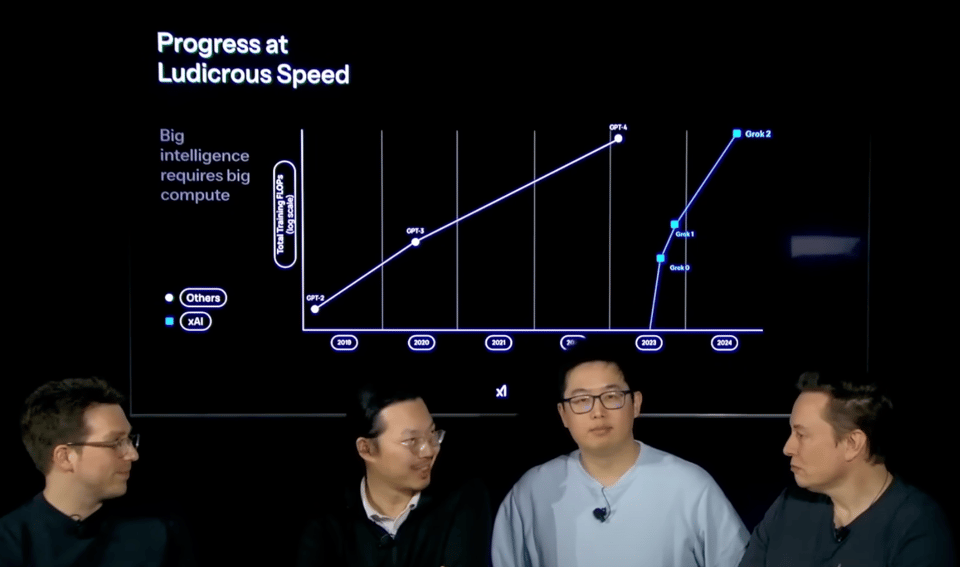

- Computational resources for Grok-3: @omarsar0 mentioned Grok 3 involved 10x more training than Grok 2, and pre-training finished in early January, with training ongoing. @omarsar0 revealed 200K total GPUs were used, with capacity doubled in 92 days to improve Grok. @ethanCaballero noted grok-3 is 8e26 FLOPs of training compute.

- Karpathy's vibe check of Grok-3: @karpathy shared a detailed vibe check of Grok 3, finding it around state of the art (similar to OpenAI's o1-pro) and better than DeepSeek-R1 and Gemini 2.0 Flash Thinking. He tested thinking capabilities, emoji decoding, tic-tac-toe, GPT-2 paper analysis and research questions. He also tested DeepSearch, finding it comparable to Perplexity DeepResearch but not yet at the level of OpenAI's "Deep Research".
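The compute figures above can be sanity-checked with back-of-the-envelope arithmetic. This sketch assumes H100-class GPUs at roughly 989 TFLOP/s of dense BF16 throughput and 40% model FLOPs utilization (MFU); neither number comes from xAI, and treating all 200K GPUs as dedicated to a single run is itself an assumption.

```python
SECONDS_PER_DAY = 86_400


def training_days(total_flops: float, n_gpus: int, peak_flops_per_gpu: float, mfu: float) -> float:
    """Wall-clock days needed to spend `total_flops` at a given utilization."""
    sustained = n_gpus * peak_flops_per_gpu * mfu  # cluster-wide FLOP/s actually achieved
    return total_flops / sustained / SECONDS_PER_DAY


# Assumptions (not from the source): H100-class peak of ~989 TFLOP/s dense BF16, 40% MFU.
days = training_days(total_flops=8e26, n_gpus=200_000, peak_flops_per_gpu=989e12, mfu=0.40)
print(f"{days:.0f} days")  # prints: 117 days
```

The estimate scales inversely with both GPU count and MFU, so halving either one doubles the wall-clock time.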

Company and Product Announcements

- xAI Grok-3 Launch: @omarsar0 announced the BREAKING news of xAI releasing Grok 3. @alexandr_wang congratulated xAI on Grok 3 being the new best model, ranking #1 in Chatbot Arena. @Teknium1 also posted "Grok3 Unveiled". @omarsar0 mentioned Grok 3 is available on X Premium+. @omarsar0 stated improvements will happen rapidly, almost daily, and that a Grok-powered voice app is coming in about a week.

- Perplexity R1 1776 Open Source Release: @AravSrinivas announced Perplexity open-sourcing R1 1776, a version of DeepSeek R1 post-trained to remove Chinese censorship, emphasizing unbiased and accurate responses. @perplexity_ai also announced open-sourcing R1 1776. @ClementDelangue noted Perplexity is now on Hugging Face.

- DeepSeek NSA Sparse Attention: @deepseek_ai introduced NSA (Natively Trainable Sparse Attention), a hardware-aligned mechanism for fast long-context training and inference.

- OpenAI SWE-Lancer Benchmark: @OpenAI launched SWE-Lancer, a new realistic benchmark for coding performance, comprising 1,400 freelance software engineering tasks from Upwork valued at $1 million. @_akhaliq also announced OpenAI SWE-Lancer.

- LangChain LangMem SDK: @LangChainAI released LangMem SDK, an open-source library for long-term memory in AI agents, enabling agents to learn from interactions and optimize prompts.

- Aomni $4M Seed Round: @dzhng announced Aomni raised a $4M seed round for AI agents that 10x the output of revenue teams.

- MistralAI Batch API UI: @sophiamyang introduced MistralAI batch API UI, allowing users to create and monitor batch jobs from la Plateforme.

- Thinking Machines Lab Launch: @dchaplot announced the launch of Thinking Machines Lab, inviting others to join.

Technical Deep Dives and Research

- Less is More Reasoning (LIMO): @AymericRoucher highlighted Less is More for Reasoning (LIMO), a 32B model fine-tuned with 817 examples that beats o1-preview on math reasoning, suggesting carefully selected examples are more important than sheer quantity for reasoning.

- Diffusion Models without Classifier-Free Guidance: @iScienceLuvr shared a paper on Diffusion Models without Classifier-free Guidance, achieving new SOTA FID on ImageNet 256x256 by directly learning the modified score.

- Scaling Test-Time Compute with Verifier-Based Methods: @iScienceLuvr discussed research proving verifier-based (VB) methods using RL or search are superior to verifier-free (VF) approaches for scaling test-time compute.

- MaskFlow for Long Video Generation: @iScienceLuvr introduced MaskFlow, a chunkwise autoregressive approach to long video generation from CompVis lab, using frame-level masking for efficient and seamless video sequences.

- Intuitive Physics from Self-Supervised Video Pretraining: @arankomatsuzaki presented Meta's research showing intuitive physics understanding emerges from self-supervised pretraining on natural videos, by predicting outcomes in a representation space.

- Reasoning Models and Verifiable Rewards: @cwolferesearch explained that reasoning models like Grok-3 and DeepSeek-R1 are trained with reinforcement learning using verifiable rewards, emphasizing verification in math and coding tasks and the power of RL in learning complex reasoning.

- NSA: Hardware-Aligned Sparse Attention: @deepseek_ai detailed NSA's core components: dynamic hierarchical sparse strategy, coarse-grained token compression, and fine-grained token selection, optimizing for modern hardware to speed up inference and reduce pre-training costs.
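For context on the diffusion item above, standard classifier-free guidance combines a conditional and an unconditional prediction at every sampling step with a guidance scale $w$ (notation ours, not the paper's). In noise-prediction form and in the equivalent score form:

```latex
\begin{aligned}
\tilde{\epsilon}_\theta(x_t, c) &= \epsilon_\theta(x_t, \varnothing) + w\,\bigl(\epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, \varnothing)\bigr) \\
\nabla_{x}\log \tilde{p}_w(x \mid c) &= \nabla_{x}\log p(x) + w\,\bigl(\nabla_{x}\log p(x \mid c) - \nabla_{x}\log p(x)\bigr)
\end{aligned}
```

Setting $w = 1$ recovers the plain conditional model. Evaluating this needs two network passes per step; as summarized in the tweet, the paper instead trains the network to output the modified (guided) score directly, which would remove the second pass at sampling time. The paper's exact training objective is not reproduced here.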

AI Industry and Market Analysis

- xAI as a SOTA Competitor: @scaling01 argued that after Grok-3, xAI must be considered a real competitor for SOTA models, though OpenAI, Anthropic, and Google might be internally ahead. @ArtificialAnlys also stated xAI has arrived at the frontier and joined the 'Big 5' American AI labs. @omarsar0 noted Elon mentioned Grok 3 is an order of magnitude more capable than Grok 2. @arankomatsuzaki observed China's rapid AI progress with DeepSeek and Qwen, highlighting the maturation of the Chinese AI community.

- Competitive Strategy and Market Position: @scaling01 discussed Gemini 2.0 Flash cutting into Anthropic's market share, suggesting Anthropic needs to lower prices or release a better model to maintain growth. @scaling01 commented that the Grok-3 launch might be aimed at mind-share and attention, as GPT-4.5 and a new Anthropic model are coming soon and Grok-3's top spot might be short-lived.

- Perplexity's Growth and Deep Research: @AravSrinivas highlighted the growing number of daily PDF exports from Perplexity Deep Research, indicating increasing usage. @AravSrinivas shared survey data showing over 52% of users would switch from Gemini to Perplexity.

- AI and Energy Consumption: @JonathanRoss321 discussed the balance between AI's increasing energy demand and its potential to improve efficiency across civilization, citing DeepMind's AI cutting the energy used to cool Google's data centers by 40% as an example.

- SWE-Lancer Benchmark and LLMs in Software Engineering: @_philschmid summarized OpenAI's SWE-Lancer benchmark, revealing Claude 3.5 Sonnet achieved a $403K earning potential but frontier models still can't solve most tasks, highlighting challenges in root cause analysis and complex solutions. @mathemagic1an suggested generalist SWE agents like DevinAI are useful for dev discussions even without merging PRs.

Open Source and Community

- Call for Open Sourcing o3-mini: @iScienceLuvr urged everyone to vote for open-sourcing o3-mini, emphasizing the community's ability to distill it into a phone-sized model. @mervenoyann joked about wanting o3-mini open sourced. @gallabytes asked people to vote for o3-mini. @eliebakouch jokingly suggested the internal propaganda to vote for o3-mini is working.

- Perplexity Open-Sources R1 1776: @AravSrinivas announced Perplexity's first open-weights model, R1 1776, and released weights on @huggingface. @reach_vb highlighted Perplexity releasing POST TRAINED DeepSeek R1 MIT Licensed.

- Axolotl v0.7.0 Release: @winglian announced Axolotl v0.7.0 with GRPO support, Multi-GPU LoRA kernels, Modal deployment, and more.

- LangMem SDK Open Source: @LangChainAI released LangMem SDK as open-source.

- Ostris Open Source Focus: @ostrisai announced transitioning to focusing on open source work full time, promising more models, toolkit improvements, tutorials, and asking for financial support.

- Call for Grok-3 Open Sourcing: @huybery called for Grok-3 to be open-sourced.

- DeepSeek's Open Science Dedication: @reach_vb thanked DeepSeek for their dedication to open source and science.
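To make the verifiable-rewards idea from the Technical Deep Dives (and the GRPO support added in Axolotl v0.7.0) concrete, here is a minimal sketch of a rule-based reward plus GRPO-style group-relative advantages. The `\boxed{}` answer format, exact string matching, and the `eps` constant are assumptions for illustration; this is not Axolotl's, DeepSeek's, or xAI's training code.

```python
import re
import statistics
from typing import List, Optional


def extract_boxed(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a completion (no nested braces)."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", text)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Binary reward: 1.0 if the boxed final answer exactly matches the reference."""
    answer = extract_boxed(completion)
    return 1.0 if answer is not None and answer == reference.strip() else 0.0


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages: normalize each reward against its own sampling group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# One prompt, four sampled completions (the "group").
completions = [
    "... so the answer is \\boxed{42}",
    "... therefore \\boxed{41}",
    "I think it's 42",  # no boxed answer, so the rule-based check gives 0
    "... giving \\boxed{42}",
]
rewards = [verifiable_reward(c, "42") for c in completions]
advantages = group_relative_advantages(rewards)
print(rewards)  # [1.0, 0.0, 0.0, 1.0]
```

Completions that beat their group's average get positive advantages and are reinforced; in a full GRPO setup these advantages weight a clipped policy-gradient loss, which is omitted here.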

Memes and Humor

- Reactions to Grok-3's Name and Performance: @iScienceLuvr reacted with "NOT ANOTHER DEEP SEARCH AAHHH". @nrehiew_ posted "STOP THE COUNT", likely in jest about Grok-3's benchmark results. @scaling01 joked "i love the janitor, but just accept that Grok-3 is the most powerful PUBLICLY AVAILABLE LLM (at least for a day lol)".

- Phone-Sized Model Vote Humor: @nrehiew_ sarcastically commented "Those of you voting “phone-sized model” are deeply unserious people. The community would get the o3 mini level model on a Samsung galaxy watch within a month. Please be serious". @dylan522p stated "I can't believe X users are so stupid. Not voting for o3-mini is insane."

- Elon Musk and xAI Jokes: @Yuchenj_UW joked about catching Elon tweeting during the Grok3 Q&A. @aidan_mclau posted "he’s even unkind to his mental interlocutors 😔" linking to a tweet.

- Silly Con Valley Narrativemongers: @teortaxesTex criticized "Silly con valley narrativemongers with personas optimized to invoke effortless Sheldon Cooper or Richard Hendricks gestalt".

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. OpenAI's O3-Mini vs Phone-Sized Model Poll Controversy

- Sam Altman's poll on open sourcing a model.. (Score: 631, Comments: 214): Sam Altman conducted a Twitter poll asking whether it would be more useful to create a small "o3-mini" level model or the best "phone-sized model" for their next open source project, with the latter option receiving 62.2% of the 695 total votes so far. The poll has 23 hours remaining for participation, indicating community engagement in decision-making about open-sourcing AI models.

- Many commenters advocate for the o3-mini model, arguing that it can be distilled into a mobile phone model if needed, and emphasizing its potential utility on local machines and for small organizations. Some express skepticism about the poll's integrity, suggesting that it might be a marketing strategy or that the poll results are manipulated.

- There is a strong sentiment against the phone-sized model, with users questioning its practicality given current hardware limitations and suggesting that larger models can be more versatile. Some believe that OpenAI might eventually release both models, using the poll to decide which to release first.

- The discussion reflects a broader skepticism about OpenAI's open-source intentions, with some users doubting the company's commitment to open-sourcing their models. Others highlight the importance of distillation techniques to create smaller, efficient models from larger ones, suggesting that this approach could be beneficial for the open-source community.
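Commenters keep returning to distillation as the path from an o3-mini-class model to something phone-sized. As a generic illustration only, here is a sketch of Hinton-style logit distillation: the student is trained to match the teacher's temperature-softened output distribution, with the KL term scaled by T². Nothing in the thread specifies how OpenAI or the community would actually distill o3-mini; the temperature and toy logits are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the large model
    q = softmax(student_logits, temperature)  # small model's prediction
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]
print(distillation_loss([4.0, 1.0, 0.5], teacher))  # identical logits: 0.0
print(distillation_loss([0.5, 1.0, 4.0], teacher) > 0)  # True
```

In practice this soft-target term is usually mixed with an ordinary cross-entropy loss on hard labels.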

- ClosedAI Next Open Source (Score: 119, Comments: 27): Sam Altman sparked a debate with a tweet asking whether, for OpenAI's next open source project, it would be more useful to release a fairly small o3-mini-level model that still needs GPUs to run, or the best possible phone-sized model. The discussion centers on the trade-offs between model size and the hardware it can run on.

- Local Phone Models face criticism for being slow, consuming significant battery, and taking up disk space, compared to online models. Commenters express skepticism about the practicality of a local phone model and suggest that votes for such models may be influenced by OpenAI employees.

- OpenAI's Business Model is scrutinized, with doubts about their willingness to open-source models like o3. Users speculate that OpenAI might only release models when they become obsolete and express concern about potential regulatory impacts on genuinely open-source companies.

- There is a strong push for supporting O3-MINI as a more versatile option, with the potential for distillation into a mobile version. Some users criticize the framing of the poll and predict that OpenAI might release a suboptimal model to appear open-source without genuinely supporting open innovation.

Theme 2. GROK-3 Claims SOTA Supremacy Amid GPU Controversy

- GROK-3 (SOTA) and GROK-3 mini both top O3-mini high and Deepseek R1 (Score: 334, Comments: 312): Grok-3 Beta achieves a leading score of 96 in Math (AIME '24), showcasing its superior performance compared to competitors like O3-mini (high) and Deepseek-R1. The bar graph highlights the comparative scores across categories of Math, Science (GPQA), and Coding (LCB Oct-Feb), with Grok-3 models outperforming other models in the test-time compute analysis.

- Many users express skepticism about Grok-3's performance claims, noting the lack of independent benchmarks and the absence of open-source availability. Lmsys is mentioned as an independent benchmark, but users are wary of the $40/month cost without significant differentiators from other models like ChatGPT.

- Discussions highlight concerns about Elon Musk's involvement with Grok-3, with users expressing distrust and associating his actions with fascist ideologies. Some comments critique the use of the term "woke" in the context of open-source discussions and highlight the controversy surrounding Musk's political activities.

- Technical discussions focus on the ARC-AGI benchmark, which is costly and complex to test, with OpenAI having invested significantly in it. Users note that Grok-3 was not tested on ARC-AGI, and there's interest in seeing its performance on benchmarks where current SOTA models struggle.

- Grok presentation summary (Score: 245, Comments: 80): The image depicts a Q&A session with a panel, likely including Elon Musk, discussing the unveiling of xAI GROK-3. Community reactions vary, with comments such as "Grok is good" and "Grok will travel to Mars," indicating mixed opinions on the presentation and potential capabilities of the technology.

- The presentation's target audience was criticized for not being engineers, with several commenters noting that the content was less organized compared to OpenAI's presentations. Elon Musk's role as a non-engineer was highlighted, with some expressing skepticism about the accuracy of his reporting on technical details.

- The body language of the panel, particularly the non-Elon members, was noted as nervous or scared, with some attributing this to Elon's presence. Despite this, some appreciated the raw, non-corporate approach of the panel.

- Benchmark comparisons with OpenAI and other models such as DeepSeek and Qwen drew skepticism because the results were self-reported. Commenters also picked up on the number of H100s involved and the cost of merely reaching parity with OpenAI's latest model.

Theme 3. DeepSeek's Native Sparse Attention Model Release

- DeepSeek Native Sparse Attention: Hardware-Aligned and Natively Trainable Sparse Attention (Score: 136, Comments: 6): DeepSeek's NSA (Native Sparse Attention) is a sparse attention mechanism that is both hardware-aligned and natively trainable. It aims to improve efficiency while preserving model performance.

- DeepSeek's NSA model shares conceptual similarities with Microsoft's "SeerAttention", which also explores trainable sparse attention, as noted by LegitimateCricket620. LoaderD suggests potential collaboration between DeepSeek and Microsoft researchers, emphasizing the need for proper citation if true.

- Recoil42 highlights the NSA model's core components: dynamic hierarchical sparse strategy, coarse-grained token compression, and fine-grained token selection. These components optimize for modern hardware, enhancing inference speed and reducing pre-training costs without sacrificing performance, outperforming Full Attention models on various benchmarks.
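The compression-then-selection idea can be sketched for a single query in plain Python. This is a toy under stated assumptions: mean pooling over non-overlapping blocks stands in for NSA's learned compressor, blocks are ranked by the query's dot product with each pooled key, and the sliding-window branch and learned gating are left out. It illustrates the idea, not DeepSeek's kernel.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attend(q, keys, values):
    """Plain scaled dot-product softmax attention of one query over the given keys/values."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    m = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(len(values[0]))]


def compress_blocks(keys, block_size):
    """Coarse-grained compression: one mean-pooled key per block (stand-in for a learned compressor)."""
    pooled = []
    for start in range(0, len(keys), block_size):
        chunk = keys[start:start + block_size]
        pooled.append([sum(col) / len(chunk) for col in zip(*chunk)])
    return pooled


def select_and_attend(q, keys, values, block_size=4, top_n=2):
    """Fine-grained selection: rank blocks by compressed key, attend only to tokens in the top-n blocks."""
    block_keys = compress_blocks(keys, block_size)
    ranked = sorted(range(len(block_keys)), key=lambda b: dot(q, block_keys[b]), reverse=True)
    chosen = sorted(ranked[:top_n])  # keep the selected blocks in sequence order
    idx = [i for b in chosen for i in range(b * block_size, min((b + 1) * block_size, len(keys)))]
    return attend(q, [keys[i] for i in idx], [values[i] for i in idx]), idx


# Toy cache of 16 tokens in 4 blocks: block 2 matches the query best, block 0 partially.
keys = [[1.0, 0.0] for _ in range(16)]
for i in range(0, 4):
    keys[i] = [0.0, 0.5]
for i in range(8, 12):
    keys[i] = [0.0, 1.0]
values = [[float(i)] for i in range(16)]

out, idx = select_and_attend([0.0, 1.0], keys, values)
print(idx)  # [0, 1, 2, 3, 8, 9, 10, 11]
```

Only 8 of the 16 cached tokens enter the softmax; at long context lengths that gap is what makes the approach attractive.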

- DeepSeek is still cooking (Score: 809, Comments: 100): DeepSeek's NSA (Native Sparse Attention) outperforms traditional Full Attention, achieving higher scores across General, LongBench, and Reasoning benchmarks. It also delivers significant speed improvements, with up to an 11.6x speedup in the decode phase. Additionally, NSA maintains perfect retrieval accuracy at context lengths up to 64K, illustrating its efficiency on long contexts. DeepSeek NSA Paper.

- The discussion highlights the potential for DeepSeek NSA to reduce VRAM requirements due to compressed keys and values, though actual VRAM comparisons are not provided. The model's capability to handle large context lengths efficiently is also noted, with inquiries about its performance across varying context sizes.

- Hierarchical sparse attention has piqued interest, with users speculating on its potential to make high-speed processing feasible on consumer hardware. The model's 27B total parameters with 3B active parameters are seen as a promising size for balancing performance and computational efficiency.

- Comments emphasize the significant speed improvements DeepSeek NSA offers, with some users expressing interest in its practical applications and potential for running on mobile devices. The model's approach is praised for its efficiency in reducing computation costs, contrasting with merely increasing computational power.

Theme 4. PerplexityAI's R1-1776 Removes Censorship in DeepSeek

- PerplexityAI releases R1-1776, a DeepSeek-R1 finetune that removes Chinese censorship while maintaining reasoning capabilities (Score: 185, Comments: 78): PerplexityAI has released R1-1776, a finetuned version of DeepSeek-R1, aimed at eliminating Chinese censorship while preserving its reasoning abilities. The release suggests a focus on enhancing access to uncensored information without compromising the AI's performance.

- Discussions centered around the effectiveness and necessity of the release, with skepticism about the model's claims of providing unbiased, accurate, and factual information. Critics questioned whether the model simply replaced Chinese censorship with American censorship, highlighting potential biases in the model.

- There were comparisons between Chinese and Western censorship, with users noting that Chinese censorship often involves direct suppression, while Western methods may involve spreading misinformation. The conversation included examples like Tiananmen Square and US political issues to illustrate different censorship styles.

- Users expressed skepticism about the model's open-source status, with some questioning the practical use of the model and others suggesting it was a waste of engineering effort. A blog post link provided further details on the release: Open-sourcing R1 1776.

Theme 5. Speeding Up Hugging Face Model Downloads

- Speed up downloading Hugging Face models by 100x (Score: 167, Comments: 45): Using hf_transfer, a Rust-based tool, can raise Hugging Face model download speeds to over 1GB/s, compared to the typical cap of 10.4MB/s when downloading through the Python command line. The post provides a step-by-step guide to installing and enabling hf_transfer, noting that the speed limit is likely not due to Python itself but to a bandwidth restriction that hf_transfer does not hit.

- Users discuss alternative tools for downloading Hugging Face models, such as HFDownloader and Docker-based CLI, which offer pre-configured solutions to avoid host installation. LM Studio is mentioned as achieving around 80 MB/s, suggesting that the 10.4 MB/s cap is not a Python limitation but likely a bandwidth issue.

- There is debate over the legal implications of distributing model weights and the potential benefits of using torrent for distribution, with concerns about controlling dissemination and liability. Some users argue that torrents would be ideal but acknowledge the challenges in managing distribution.

- The hf_transfer tool is highlighted as beneficial for high-speed downloads, especially in datacenter environments, with claims of speeds over 500MB/s. Users express gratitude for the tool's ability to reduce costs associated with downloading large models, such as Llama 3.3 70b.
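A minimal sketch of the setup the post describes, assuming `huggingface_hub` and `hf_transfer` are both installed (`pip install huggingface_hub hf_transfer`). The `HF_HUB_ENABLE_HF_TRANSFER` environment variable switches huggingface_hub to the Rust backend; the library reads it once at import time, so it must be set first. The `download` helper is hypothetical and just wraps `snapshot_download`.

```python
import os

# Must be set before huggingface_hub is imported: the library reads it at import time.
os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"


def download(repo_id: str, local_dir: str) -> str:
    """Hypothetical helper: fetch a full model repo using the hf_transfer backend."""
    from huggingface_hub import snapshot_download  # imported lazily, after the env var is set

    return snapshot_download(repo_id=repo_id, local_dir=local_dir)
```

Usage would look like `download("<org>/<model>", "./models/<model>")`. The same variable works for the CLI if it is exported in the shell before running `huggingface-cli download`.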

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT, /r/ChatGPTCoding

Theme 1. Grok 3 Benchmark Release and Performance Debate

- How is grok 3 smartest ai on earth ? Simply it's not but it is really good if not on level of o3 (Score: 1012, Comments: 261): Grok-3 is discussed as a highly competent AI model, though not necessarily the "smartest" compared to "o3". A chart from a livestream, shared via a tweet by Rex, compares AI models on tasks like Math, Science, and Coding, highlighting Grok-3 Reasoning Beta and Grok-3 mini Reasoning alongside others, with "o3" data added by Rex for comprehensive analysis.

- Discussions highlight skepticism about Grok 3's claimed superiority, with some users questioning the validity of benchmarks and comparisons, particularly against o3, which hasn't been released yet. There's a general consensus that o3 is not currently available for independent evaluation, which complicates comparisons.

- Concerns about Elon Musk's involvement in AI projects surface, with users expressing distrust due to perceived ethical issues and potential misuse of AI technologies. Some comments reflect apprehension about the transparency and motives behind benchmarks and AI capabilities touted by companies like OpenAI and xAI.

- Grok 3 is noted for its potential in real-time stock analysis, though some users argue it may not be the smartest AI available. Comments also discuss the cost and scalability issues of o3, with reports indicating it could be prohibitively expensive, costing up to $1000 per prompt.

- GROK 3 just launched (Score: 630, Comments: 625): GROK 3 has launched, showcasing its performance in a benchmark comparison of AI models across subjects like Math (AIME '24), Science (GPQA), and Coding (LCB Oct-Feb). The Grok-3 Reasoning Beta model, highlighted in dark blue, achieves the highest scores, particularly excelling in Math and Science, as depicted in the bar graph with scores ranging from 40 to 100.

- There is significant skepticism regarding the reliability of Grok 3 benchmarks, with users questioning the source of the benchmarks and the graph's presentation. Some users point out that Grok 3 appears to outperform other models due to selective benchmark optimization, leading to mistrust in the results presented by Elon Musk's company.

- Discussions are heavily intertwined with political and ethical concerns, with multiple comments expressing distrust in products associated with Elon Musk, citing his controversial actions and statements. Users emphasize a preference for alternative models from other companies like Deepmind and OpenAI.

- Some comments point out that the benchmarks themselves (AIME, GPQA, and LiveCodeBench) are external, but note that Grok 3's scores may be inflated by running multiple attempts and selecting the best outcome. Users call for independent testing by third parties to verify the results.

- Grok 3 released, #1 across all categories, equal to the $200/month O1 Pro (Score: 152, Comments: 308): Grok 3 is released, ranking #1 across all categories, including coding and creative writing, with scores of 96% on AIME and 85% on GPQA. Karpathy compares it to the $200/month O1 Pro, noting its capability to attempt solving complex problems like the Riemann hypothesis, and suggests it is slightly better than DeepSeek-R1 and Gemini 2.0 Flash Thinking.

- Discussions reveal skepticism towards Grok 3's performance claims, with users expressing distrust in benchmarks like LMArena and highlighting potential biases in results. Grok 3 is criticized for requiring "best of N" answers to match the O1 Pro level, and initial tests suggest it underperforms in simple programming tasks compared to GPT-4o.

- Users express strong opinions on Elon Musk, associating him with political biases and questioning the ethical implications of using AI models linked to him. Concerns about political influence in AI design are prevalent, with some users drawing parallels to authoritarian regimes and expressing reluctance to use Musk-related technologies.

- The conversation reflects a broader sentiment of distrust towards Musk's ventures, with many users stating they will avoid using his products regardless of performance claims. The discussion also touches on the rapid pace of AI development and the role of competition in driving innovation, despite skepticism about specific models.
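The "best of N" criticism is about how a score is aggregated: sampling many answers and combining them usually yields a higher number than a single attempt, so aggregated and single-sample scores are not directly comparable. Here is a sketch of the two common aggregation rules, which also mirror the verifier-free vs. verifier-based split in the test-time compute research mentioned earlier. The verifier here is hypothetical: it simply knows the right answer, standing in for a reward model or test suite.

```python
from collections import Counter


def majority_vote(answers):
    """Verifier-free aggregation: return the most common final answer."""
    return Counter(answers).most_common(1)[0][0]


def best_of_n(answers, score):
    """Verifier-based aggregation: return the answer the scoring function rates highest."""
    return max(answers, key=score)


samples = ["12", "15", "12", "12", "15"]
print(majority_vote(samples))  # 12

# Hypothetical verifier that recognizes "15" as correct.
print(best_of_n(samples, score=lambda a: 1.0 if a == "15" else 0.0))  # 15
```

With a good verifier, best-of-N can recover a correct minority answer that majority voting would discard, which is why the aggregation method matters when comparing reported numbers.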

Theme 2. ChatGPT vs Claude on Context Window Use

- ChatGPT vs Claude: Why Context Window size Matters. (Score: 332, Comments: 60): The post discusses the significance of context window size in AI models, comparing ChatGPT and Claude. ChatGPT Plus users have a 32k context window and rely on Retrieval-Augmented Generation (RAG), which can miss details in longer texts, while Claude offers a 200k context window and captures all details without RAG. A test with a modified version of "Alice in Wonderland" showed Claude was better at detecting the inserted errors thanks to its larger context window, and the author argues OpenAI should expand the context window for ChatGPT Plus users.

- Context Window Size Comparisons: Users highlight the differences in context window sizes across models, with ChatGPT Plus at 32k, Claude at 200k, and Gemini providing up to 1-2 million tokens on Google AI Studio. Mistral web and ChatGPT Pro are mentioned with context windows close to 100k and 128k respectively, suggesting these are better for handling large documents without losing detail.

- Model Performance and Use Cases: Claude is praised for its superior handling of long documents and comprehension quality, especially for literature editing and reviewing. ChatGPT is still used for small scope complex problems and high-level planning, while Claude and Gemini are favored for projects requiring extensive context due to their larger context windows.

- Cost and Accessibility: There's a discussion on the cost-effectiveness of models, with Claude preferred for long context tasks due to its affordability compared to the expensive higher-tier options of other models. Gemini is suggested as a free alternative on AI Studio for exploring large context capabilities.
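Whether a document can be read whole comes down to a token budget. A rough check, assuming OpenAI's rule of thumb of about 0.75 words per token (roughly 1.33 tokens per word) and an arbitrary 2,000-token reserve for the reply; a real tokenizer will give different counts. The 30,000-word size matches the test file bot_exe describes in the next post.

```python
def approx_tokens(word_count: int, tokens_per_word: float = 1.33) -> int:
    """Rough token estimate from a rule of thumb, not a real tokenizer."""
    return round(word_count * tokens_per_word)


def fits(word_count: int, context_window: int, reserve_for_output: int = 2_000) -> bool:
    """Whether a document of this length can be read whole while leaving room for the reply."""
    return approx_tokens(word_count) + reserve_for_output <= context_window


doc_words = 30_000
for name, window in [("ChatGPT Plus", 32_000), ("ChatGPT Pro", 128_000), ("Claude", 200_000)]:
    print(name, fits(doc_words, window))
```

At roughly 40k tokens, such a file overflows a 32k window, which is why a Plus-tier chat would have to fall back on retrieval over chunks instead of reading the whole text.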

- Plus plan has a context window of only 32k?? Is it true for all models? (Score: 182, Comments: 73): The ChatGPT Plus plan offers a 32k context window, smaller than the Pro and Enterprise plans, while the Free plan provides an 8k context window. The attached image shows the context window differences across OpenAI's pricing plans.

- Context Window Limitations: Users confirm that the ChatGPT Plus plan has a 32k context window, as explicitly stated in OpenAI's documentation, whereas the Pro plan offers a 128k context window. This limitation leads to the use of RAG (Retrieval-Augmented Generation) for processing longer documents, which contrasts with Claude and Gemini that can handle larger texts without RAG.

- Testing and Comparisons: bot_exe conducted tests using a 30k-word text file, showing that ChatGPT Plus missed errors because of its reliance on RAG, while Claude 3.5 Sonnet identified all errors by using its 200k-token context window. This highlights the limitations of ChatGPT's chunk retrieval compared to Claude's ingestion of the full text.

- Obsolete Information: Several commenters feel that OpenAI's website contains outdated subscription details, potentially misleading users about current capabilities. Despite updates such as the new Pro plan, they question whether the documentation is accurate and current.
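The failure mode described in bot_exe's test can be shown with a toy retriever: when only the top-ranked chunks reach the model, an error planted in an unretrieved chunk is never seen. The sentence-level chunking and word-overlap scoring below are assumptions for illustration; OpenAI has not published how ChatGPT chunks or retrieves uploaded files.

```python
def split_chunks(text):
    """Split into sentence-sized chunks (a stand-in for fixed-size token chunks)."""
    return [s.strip() for s in text.split(".") if s.strip()]


def retrieve(chunks, query, k=2):
    """Toy lexical retriever: rank chunks by words shared with the query, keep the top k."""
    q = set(query.lower().split())
    return sorted(chunks, key=lambda c: len(q & set(c.lower().split())), reverse=True)[:k]


document = (
    "Alice sat by her sister on the bank. "
    "The White Rabbit checked his pocket watch. "
    "Alice drank from a bottle then grew forty feet tall. "  # planted error
    "The Queen shouted off with her head."
)
context = retrieve(split_chunks(document), "what did the rabbit and the queen do")
print(context)
print(any("forty" in c for c in context))  # False: the planted error never reaches the model
```

A model given the whole document would see every sentence, which is the advantage commenters attribute to long-context models here.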

Theme 3. LLMs on Real-World Software Engineering Benchmarks

- [R] Evaluating LLMs on Real-World Software Engineering Tasks: A $1M Benchmark Study (Score: 128, Comments: 24): The benchmark study evaluates LLMs like GPT-4 and Claude 2 on real-world software engineering tasks worth over $1 million from Upwork. Despite the structured evaluation using Docker and expert validation, GPT-4 completed only 10.2% of coding tasks and 21.4% of management decisions, while Claude 2 had an 8.7% success rate, highlighting the gap between current AI capabilities and practical utility in professional engineering contexts. Full summary is here. Paper here.

- Benchmark Limitations: Commenters argue that increased benchmark performance does not translate to real-world utility, as AI models like GPT-4 and Claude 2 perform well on specific tasks but struggle with broader contexts and decision-making, which are critical in engineering work. This highlights the gap between theoretical benchmarks and practical applications.

- Model Performance and Misrepresentation: There is confusion about the models evaluated, with Claude 3.5 Sonnet reportedly performing better than stated in the summary, earning $208,050 on the SWE-Lancer Diamond set but still failing to provide reliable solutions. Commenters caution against taking summaries at face value without examining the full paper.

- Economic Misinterpretation: There is skepticism about the economic implications of AI performance, with commenters noting that completing a portion of tasks does not equate to replacing a significant percentage of engineering staff. The perceived value of AI task completion is questioned due to high error rates and the complexity of real-world engineering work.

- OpenAI's latest research paper | Can frontier LLMs make $1M freelancing in software engineering? (Score: 147, Comments: 40): OpenAI's latest research paper evaluates frontier LLMs on freelance software engineering tasks worth a combined $1 million. GPT-4o, o1, and Claude 3.5 Sonnet earned $303,525, $380,350, and $403,325 respectively, well short of the maximum possible earnings.

- The SWE-Lancer benchmark evaluates LLMs on real-world tasks from Upwork, with 1,400 tasks valued at $1 million. The models failed to solve the majority of tasks, indicating their limitations in handling complex software engineering projects, as discussed in OpenAI's article.

- Claude 3.5 Sonnet outperformed other models in real-world challenges, highlighting its efficiency in agentic coding and iteration. Users prefer using Claude for serious coding tasks due to its ability to handle complex projects and assist in pair programming.

- Concerns were raised about the benchmarks' validity, with criticisms of artificial project setups and metrics that don't reflect practical scenarios. Success in tasks often requires iterative communication, which is not captured in the current evaluation framework.
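The reported earnings are easier to compare as a share of the roughly $1M payout pool. The arithmetic below uses only the dollar figures quoted in the post above; TOTAL_PAYOUT is the $1M headline number, treated here as an approximation rather than an exact task total.

```python
# Dollar figures as reported in the summary of OpenAI's SWE-Lancer paper.
TOTAL_PAYOUT = 1_000_000  # headline value of the task pool in USD (approximate)
earnings = {
    "GPT-4o": 303_525,
    "o1": 380_350,
    "Claude 3.5 Sonnet": 403_325,
}

for model, dollars in earnings.items():
    share = dollars / TOTAL_PAYOUT
    print(f"{model}: ${dollars:,} earned = {share:.1%} of the pool")
# GPT-4o: $303,525 earned = 30.4% of the pool
# o1: $380,350 earned = 38.0% of the pool
# Claude 3.5 Sonnet: $403,325 earned = 40.3% of the pool
```

Even the best performer leaves roughly 60% of the pool unclaimed, which is the gap the commenters point to.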

Theme 4. AI Image and Video Transformation Advancements

- Non-cherry-picked comparison of Skyrocket img2vid (based on HV) vs. Luma's new Ray2 model - check the prompt adherence (link below) (Score: 225, Comments: 125): Skyrocket img2vid and Luma's Ray2 models are compared in terms of video quality and prompt adherence. The post invites viewers to check out a video comparison, highlighting the differences in performance between the two models.

- Skyrocket img2vid is praised for better prompt adherence and consistent quality compared to Luma's Ray2, which is criticized for chaotic movement and poor prompt handling. Users note that Skyrocket's "slow pan + movement" aligns well with prompts, providing a more coherent output.

- Technical Implementation: The workflow for Skyrocket runs on Kijai's Hunyuan wrapper, with links provided for both the workflow and the model. There are discussions about technical issues with ComfyUI, including node updates and model loading errors.

- System Requirements: Users inquire about VRAM requirements and compatibility with hardware like the RTX 3060 12GB. There is also a discussion on using Linux/Containers for a more consistent and efficient setup, with detailed explanations of using Docker for managing dependencies and environments.

- New sampling technique improves image quality and speed: RAS - Region-Adaptive Sampling for Diffusion Transformers - Code for SD3 and Lumina-Next already available (wen Flux/ComfyUI?) (Score: 182, Comments: 32): RAS (Region-Adaptive Sampling) is a new technique aimed at enhancing image quality and speed in Diffusion Transformers. Code implementations for SD3 and Lumina-Next are already available, with anticipation for integration with Flux/ComfyUI.

- RAS Implementation and Compatibility: RAS cannot be directly applied to Illustrious-XL due to architectural differences between DiTs and U-Nets. Users interested in RAS should consider DiT-based models such as Flux or PixArt-Σ instead.

- Quality Concerns and Metrics: There is significant debate over the quality claims of RAS, with some users pointing out a dramatic falloff in quality. QualiCLIP scores and metrics like SSIM and PSNR indicate substantial losses in detail and structural similarity in the generated images.

- Model-Specific Application: RAS is primarily for DiT-based models and not suitable for U-Net based models like SDXL. The discussions emphasize the need for model-specific optimization strategies to leverage RAS effectively.
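PSNR, one of the metrics cited against RAS, is simple enough to compute by hand: it compares the mean squared error (MSE) between a reference image and a generated one against the maximum pixel value, on a log scale, as PSNR = 10 · log10(MAX² / MSE). A minimal pure-Python sketch, assuming 8-bit grayscale images given as flat lists of equal length; SSIM, which compares local means, variances, and covariances, is more involved and is omitted here.

```python
import math


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in dB for two equal-length pixel sequences.

    PSNR = 10 * log10(max_value**2 / MSE); higher means closer to the reference.
    """
    if len(reference) != len(generated):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


ref = [52, 55, 61, 66]
out = [52, 55, 61, 76]  # one pixel off by 10, so MSE = 100 / 4 = 25
print(round(psnr(ref, out), 2))  # 34.15
```

Because PSNR is a per-pixel average, a "dramatic falloff" in detail shows up as a lower dB value even when the overall composition looks similar.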

AI Discord Recap

A summary of Summaries of Summaries by o1-2024-12-17

Theme 1. Grok 3: The Beloved, The Maligned

- Early Access Sparks Intense Praise: Some users call it “frontier-level” and claim it outperforms rivals like GPT-4o and Claude, citing benchmark charts that show Grok 3 catching up fast. Others question these “mind-blowing” stats, hinting that the model’s real-world coding and reasoning may lag.

- Critics Slam ‘Game-Changing’ Claims: Skeptics highlight repetitive outputs and code execution flubs, arguing that “it’s not better than GPT” in day-to-day tasks. Meme-worthy charts omitting certain competitors stoked debate over xAI’s data accuracy.

- Mixed Censorship Surprises: Grok 3 was touted as uncensored, but users still encountered content blocks. Its unpredictable blocks led many to question how xAI balances raw output vs. safety.

Theme 2. Frontier Benchmarks Shake Up LLM Tests

- SWE-Lancer Pays $1M for Real Tasks: OpenAI’s new benchmark has 1,400 Upwork gigs totaling $1M, stressing practical coding scenarios. Models still fail the majority of tasks, exposing a gap between “AI hype” and real freelance money.

- Native Sparse Attention Wows HPC Crowd: Researchers propose hardware-aligned “dynamic hierarchical sparsity” for long contexts, promising cost and speed wins. Engineers foresee major throughput gains for next-gen AI workloads using NSA.

- Platinum Benchmarks Eye Reliability: New “platinum” evaluations minimize label errors and limit each model to one shot per query. This strips away illusions of competence and forces LLMs to “stand by their first attempt.”
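The block-selection idea behind Native Sparse Attention can be illustrated without any hardware tricks: score key blocks cheaply (here, by the dot product of the query with each block's mean key), keep only the top-k blocks, and run ordinary softmax attention over those tokens. This is a simplified single-query sketch under those assumptions; the actual NSA design also combines compressed-token and sliding-window branches with learned gates and custom kernels, none of which are reproduced, and the function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def block_sparse_attention(query, keys, values, block_size=2, top_k=1):
    """Attend from one query to only the top_k most relevant key blocks."""
    # 1. Split token indices into contiguous blocks.
    blocks = [list(range(i, min(i + block_size, len(keys))))
              for i in range(0, len(keys), block_size)]

    # 2. Cheap block score: query . mean key of the block (a compressed summary).
    def block_score(idx):
        mean_key = [sum(keys[i][d] for i in idx) / len(idx) for d in range(len(query))]
        return dot(query, mean_key)

    chosen = sorted(blocks, key=block_score, reverse=True)[:top_k]
    selected = sorted(i for block in chosen for i in block)

    # 3. Ordinary scaled dot-product attention restricted to the selected tokens.
    scale = 1 / math.sqrt(len(query))
    weights = softmax([dot(query, keys[i]) * scale for i in selected])
    dim_v = len(values[0])
    output = [sum(w * values[i][d] for w, i in zip(weights, selected)) for d in range(dim_v)]
    return output, selected


query = [1.0, 0.0]
keys = [[0.0, 1.0], [0.1, 0.9], [1.0, 0.0], [0.9, 0.1]]
values = [[0.0], [0.0], [1.0], [1.0]]
out, selected = block_sparse_attention(query, keys, values, block_size=2, top_k=1)
print(selected)  # [2, 3]: only the block aligned with the query is attended to
```

Because only top_k * block_size tokens enter the softmax, per-query cost scales with the selection budget rather than the full context length, which is where the long-context savings come from.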

Theme 3. AI Tools Embrace Code Debugging

- Aider, RA.Aid & Cursor Spark Joy: Projects let LLMs add missing files, fix build breaks, or “search the web” for code insights. Slight quirks remain, like Aider failing to auto-add filenames, but devs see big potential in bridging docs, code, and AI.

- VS Code Extensions Exterminate Bugs: The “claude-debugs-for-you” MCP server reveals variable states mid-run, beating “blind log guesswork.” It paves the way for language-based, interactive debugging across Python, C++, and more.

- Sonnet & DeepSeek Rival Coders: Dev chatter compares the pricey but trusted Sonnet 3.5 to DeepSeek R1, especially in coding tasks. Some prefer bigger model contexts, but the “cost vs. performance” debate roars on.

Theme 4. Labs Launch Next-Gen AI Projects

- Thinking Machines Lab Goes Public: Co-founded by Mira Murati and others from ChatGPT, it vows open science and “advances bridging public understanding.” Karpathy’s nod signals a new wave of creative AI expansions.

- Perplexity Opens R1 1776 in ‘Freedom Tuning’: The “uncensored but factual” model soared in popularity, with memes labeling it “freedom mode.” Users embraced the ironically patriotic theme, praising the attempt at less restrictive output.

- Docling & Hugging Face Link for Visual LLM: Docling’s IBM team aims to embed SmolVLM into advanced doc generation flows, merging text plus image tasks. PRs loom on GitHub that will reveal how these visual docs work in real time.

Theme 5. GPU & HPC Gains Fuel Model Innovations

- Dynamic 4-bit Quants in VLLM: Unsloth’s 4-bit quant technique hits the main branch, slashing VRAM needs. Partial layer skipping drives HPC interest in further memory optimizations.

- AMD vs. Nvidia: AI Chips Duel: AMD’s Ryzen AI MAX and M4 edge threaten the GPU king with “lower power” hardware. Enthusiasts anticipate the 5070 series to continue pushing HPC on the desktop.

- Explosive Dual Model Inference: Engineers experiment with a small model for reasoning plus a bigger one for final output, though this approach requires custom orchestration. LM Studio hasn’t officially merged the concept yet, so HPC-savvy coders are “chaining models by hand.”
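Since LM Studio does not chain models natively, the orchestration has to live in user code. A minimal sketch of the pattern, assuming each model is exposed as a plain callable from prompt to text (in practice these might wrap two local server endpoints); small_model and large_model below are hypothetical stand-ins, not LM Studio APIs.

```python
from typing import Callable

Model = Callable[[str], str]  # prompt in, completion out


def dual_model_answer(question: str, reasoner: Model, writer: Model) -> str:
    """Chain two models: a small one drafts reasoning, a large one writes the answer."""
    # Stage 1: the cheaper model produces intermediate reasoning only.
    reasoning = reasoner(f"Think step by step about: {question}\nReasoning:")
    # Stage 2: the larger model sees the question plus that reasoning and writes the final output.
    final_prompt = (
        f"Question: {question}\n"
        f"Draft reasoning from a helper model:\n{reasoning}\n"
        "Write a concise final answer."
    )
    return writer(final_prompt)


# Hypothetical stand-ins so the sketch runs without any model server.
def small_model(prompt: str) -> str:
    return "2 + 2 means adding two and two, which gives 4."


def large_model(prompt: str) -> str:
    return "Answer: 4" if "gives 4" in prompt else "Answer: unknown"


print(dual_model_answer("What is 2 + 2?", small_model, large_model))  # Answer: 4
```

Keeping the two stages as injected callables makes it easy to swap either model without touching the chaining logic.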

PART 1: High level Discord summaries

OpenAI Discord

- Grok 3 Shows Promise: Users are comparing Grok 3 to Claude 3.5 Sonnet and OpenAI's o1, focusing on context length and coding abilities, though they also noted limitations in moderation and web search.

- Despite concerns about pricing compared to DeepSeek, some users remain eager to test Grok 3 and its capabilities, with discussion touching on the importance of transparency in model capabilities and limitations.

- PRO Mode Performance Fluctuations: Users report inconsistent experiences with PRO mode, noting reduced speed and quality at times.

- While the small PRO timer occasionally appears, performance sometimes resembles that of less effective mini models, suggesting variability in operational quality.

- GPT Falters on Pip Execution: A user reported that running pip commands within the GPT environment, which previously worked, now fails, and asked the community for help.

- One member suggested prompting with "please find a way to run commands in python code and using it run !pip list again", while another reported successfully executing !pip list and shared their solution via this link.

- 4o's Text Reader Sparking Curiosity: A user inquired about the origins of 4o's text reader, specifically whether it operates on a separate neural network.

- They also questioned the stability of generated text in long threads and whether trained voices affect this stability.

- Pinned Chats Requested: A user suggested implementing a feature to pin frequently used chats to the top of the chat history.

- This enhancement would streamline access for users who regularly engage in specific conversations.
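The suggested pip workaround amounts to running pip from Python code rather than through the ! shell prefix. A minimal sketch using only the standard library: invoking pip as "python -m pip" via sys.executable targets the same interpreter the code runs in. Whether the GPT code-execution sandbox still permits subprocesses is an assumption, not something the thread confirms, and the helper names are hypothetical.

```python
import subprocess
import sys


def pip_command(*args: str) -> list[str]:
    """Build a pip invocation bound to the current interpreter (python -m pip ...)."""
    return [sys.executable, "-m", "pip", *args]


def run_pip(*args: str) -> str:
    """Run pip as a subprocess and return its stdout; raises CalledProcessError on failure."""
    result = subprocess.run(pip_command(*args), capture_output=True, text=True, check=True)
    return result.stdout


# Usage (equivalent to "!pip list" in a notebook):
# print(run_pip("list"))
```

Using sys.executable instead of a bare "pip" avoids accidentally calling a pip that belongs to a different Python installation.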

Unsloth AI (Daniel Han) Discord

- vLLM Adds Unsloth's Quantization: vLLM now supports Unsloth's dynamic 4-bit quants, potentially improving performance, as added in Pull Request #12974.

- The community discussed memory profiling and the optimization challenges of managing LLMs with these new quantization options.

- Notebooks Struggle Outside Colab: Users reported compatibility issues running Unsloth notebooks outside Google Colab due to dependency errors, especially on Kaggle; see the reference Google Colab notebook.

- The problems might arise from the use of Colab-specific command structures, such as the % versus ! prefixes.

- Unsloth fine-tunes Llama 3.3: New instructions detail how to fine-tune Llama 3.3 with the existing notebooks by changing the model name, as described in this Unsloth blog post.

- However, users should be prepared for the substantial VRAM requirements for effective training.

- NSA Mechanism Improves Training: A paper introduced NSA, a hardware-aligned sparse attention mechanism that improves long-context training and inference, as described in DeepSeek's tweet.

- The paper suggests NSA can rival or exceed the performance of full-attention models while cutting costs via dynamic sparsity strategies.
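To illustrate the idea behind dynamic 4-bit quantization (quantize most weights, but leave especially sensitive layers in higher precision), here is a toy sketch. It assumes simple symmetric absmax rounding per block of weights and a hand-picked skip list; Unsloth's actual method selects which parameters to skip from its own analysis and uses real packed 4-bit storage formats, neither of which is reproduced here. Layer names are hypothetical.

```python
def quantize_block_4bit(weights):
    """Symmetric absmax quantization of one block to signed 4-bit ints in [-7, 7]."""
    scale = max(abs(w) for w in weights) / 7 or 1.0  # avoid a zero scale for all-zero blocks
    codes = [max(-7, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_block(codes, scale):
    return [c * scale for c in codes]


def dynamic_quantize(layers, skip, block_size=4):
    """Quantize every layer blockwise except those named in `skip`, which stay full precision."""
    out = {}
    for name, weights in layers.items():
        if name in skip:
            out[name] = list(weights)  # sensitive layer: kept unquantized
            continue
        restored = []
        for i in range(0, len(weights), block_size):
            codes, scale = quantize_block_4bit(weights[i:i + block_size])
            restored.extend(dequantize_block(codes, scale))
        out[name] = restored
    return out


layers = {
    "mlp.down_proj": [0.7, -0.2, 0.1, 0.0, 0.03, -0.05, 0.01, 0.02],
    "vision.proj": [0.123, -0.456],  # hypothetical "sensitive" layer left in full precision
}
restored = dynamic_quantize(layers, skip={"vision.proj"})
for name in layers:
    err = max(abs(a - b) for a, b in zip(layers[name], restored[name]))
    print(f"{name}: max abs error {err:.4f}")
```

The skipped layer round-trips exactly while quantized blocks carry a rounding error of at most half their scale, which is the accuracy-versus-VRAM trade the dynamic scheme tunes layer by layer.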

Perplexity AI Discord

- Grok 3 Performance Underwhelms: Users expressed disappointment in Grok 3's performance compared to expectations and models like GPT-4o, with some awaiting more comprehensive testing before buying into the hype.

- Musk claimed Grok 3 outperforms all rivals, citing an independent benchmark study, although user experiences vary.

- Deep Research Hallucinates: Several users reported that Deep Research in Perplexity produces hallucinated results, raising concerns about its accuracy compared to free models like o3-mini.

- Users also questioned the reliability of sources such as Reddit and whether API changes had degraded the quality of the information returned.

- Subscription Resale Falls Flat: A user attempting to sell their Perplexity Pro subscription faced challenges due to lower prices available elsewhere.

- The discussion revealed skepticism about reselling subscriptions, with recommendations to retain them for personal use due to the lack of market value.

- Generative AI Dev Roles Emerge: A new article explores the upcoming and evolving generative AI developer roles, highlighting their importance in the tech landscape.

- It emphasizes the demand for skills aligned with AI advancements to leverage these emerging opportunities effectively, potentially reshaping talent priorities.

- Sonar API Hot Swapping Investigated: A question was posed regarding whether the R1-1776 model can be hot swapped in place on the Sonar API, indicating interest from the OpenRouter community.

- This inquiry suggests ongoing discussions around flexibility and capabilities within the Sonar API framework, possibly for enhanced customization.

HuggingFace Discord

- 9b Model Defeats 340b Model, Community Stunned: A 9b model outperformed a 340b model, spurring discussion on AI model evaluations and performance metrics.

- Community members expressed surprise and interest in the implications of this unexpected result.

- Porting Colab Notebook Causes Runtime Snafu: A member successfully ported their Colab from Linaqruf's notebook but hit a runtime error with an ImportError when fetching metadata from Hugging Face.

- While troubleshooting, the user said it might not work as intended because they forgot to ask the gemini monster about the path/to..., hinting at unresolved path issues.

- Docling and Hugging Face Partner for Visual LLM: Docling from IBM has partnered with Hugging Face to integrate Visual LLM capabilities with SmolVLM into the Docling library.

- The integration aims to enhance document generation through advanced visual processing, with the Pull Request soon available on GitHub.

- Neuralink's Images Prompt Community Excitement: Recent images related to Neuralink were analyzed, showcasing advancements in their ongoing research and development.

- AI Agents Course Certificate Troubles: Multiple users reported certificate generation errors, typically receiving a message about too many requests.

- While some suggested using incognito mode or different browsers, success remained inconsistent.

Codeium (Windsurf) Discord

- MCP Tutorial Catches Waves: A member released a beginner's guide on how to use MCP, available on X, encouraging community exploration and discussion.

- The tutorial seeks to assist newcomers in navigating MCP features effectively, with interest in sharing personal use cases to improve understanding.

- Codeium Write Mode Capsized: Recent updates revealed that Write mode is no longer available on the free plan, leading users to consider upgrading or switching to chat-only.

- This change prompted debates on whether the Write mode loss is permanent for all or specific users, causing concern among the community.

- IntelliJ Supercomplete Feature Mystery: Members debated whether the IntelliJ extension ever had the supercomplete feature, referencing its presence in the VSCode pre-release.

- Clarifications suggested supercomplete refers to multiline completions, while autocomplete covers single-line suggestions.

- Streamlined Codeium Deployments Sought: A user inquired about automating setup for multiple users using IntelliJ and Codeium, hoping for a streamlined authentication process.

- Responses indicated this feature might be in enterprise offerings, with suggestions to contact Codeium's enterprise support at Codeium Contact.

- Cascade Base flounders on Performance: Users report Cascade Base fails to make edits on code and sometimes the AI chat disappears, leading to frustration.

- Multiple users have experienced internal errors when trying to use models, which suggests ongoing stability issues with the platform; documentation is available at Windsurf Advanced.

aider (Paul Gauthier) Discord

- Grok 3 Hype Draws Skepticism: The launch of Grok 3 has sparked debate, with some posts claiming it surpasses GPT and Claude, as noted in Andrej Karpathy's vibe check.

- Many users remain skeptical, viewing claims of groundbreaking performance and AGI as exaggerated.

- Aider File Addition Glitches Arise: Users report issues with Aider failing to automatically add files despite correct naming and initialization when using Docker, as documented in Aider's Docker installation.

- Troubleshooting steps and potential bugs in Aider's web interface are being discussed among the community.

- Gemini Models Integration Trials Begin: Community members are exploring the use of Gemini experimental models within Aider, following its experimental models doc.

- Confusion persists around correct model identifiers and warnings received during implementation, with successful use of Mixture of Architects (MOA) in the works via a pull request.

- RA.Aid Augments Aider with Web Search: In response to interest in integrating a web search engine into Aider, it was shared that RA.Aid can integrate with Aider and use the Tavily API for web searches, per its GitHub repository.

- This mirrors the current implementation in Cursor, offering a similar search functionality.

- Ragit GitHub Pipeline Sparks Interest: The Ragit project on GitHub, described as a git-like rag pipeline, has garnered attention, see the repo.

- Members emphasized its innovative approach to the RAG processing pipeline, potentially streamlining data retrieval and processing.

OpenRouter (Alex Atallah) Discord

- Grok 3 divides Opinions: Initial reception to Grok 3 is mixed, with some users impressed and others skeptical, especially when compared to models like Claude and Sonnet.

- Interest centers on Grok 3's abilities in code and reasoning, as highlighted in TechCrunch's coverage of Grok 3.

- OpenRouter API Policies are Unclear: Confusion surrounds OpenRouter's API usage policies, particularly regarding content generation like NSFW material and compliance with provider policies, with some users claiming that OpenRouter has fewer restrictions compared to others.

- For clarity and up-to-date policies, users are encouraged to consult with OpenRouter administrators and check the API Rate Limits documentation.

- Sonnet is coding MVP: Discussions compared the performance of models like DeepSeek, Sonnet, and Claude, with some users preferring Sonnet for coding tasks because it is more reliable.

- Despite the higher costs, Sonnet's reliability makes it a preferred choice for specific coding applications, whereas users consider Grok 3 and DeepSeek for competitive features, factoring in price and performance.

- LLMs Gaining Vision: One user inquired about models on OpenRouter that can analyze images, referencing a modal section on the provider's website detailing models with text and image capabilities.

- It was recommended that the user explore available models under that section of the OpenRouter models page to find suitable options.

- OpenRouter Credit Purchase Snag: One user faced issues purchasing credits on OpenRouter, seeking assistance after checking with their bank, which prompted discussions about the pricing of different LLM models.

- This discussion included debates over the perceived value derived from these models, and how their costs can be justified by performance and capabilities.
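For the image-analysis question, OpenRouter exposes an OpenAI-compatible chat completions endpoint, where an image is sent as an image_url content part alongside the text. A minimal sketch that only builds the request and does not send it; the model slug is a placeholder to be replaced with a vision-capable model from the models page, and the API key is assumed to come from an OPENROUTER_API_KEY environment variable that you set yourself.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_vision_request(model, question, image_url, api_key):
    """Build (but do not send) a chat completion request carrying text plus one image."""
    payload = {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


req = build_vision_request(
    model="PROVIDER/VISION-MODEL",  # placeholder: pick a model listed with image input
    question="What is shown in this image?",
    image_url="https://example.com/cat.png",
    api_key=os.environ.get("OPENROUTER_API_KEY", "sk-placeholder"),
)
# To actually send it: json.load(urllib.request.urlopen(req))
```

Separating request construction from sending makes it easy to inspect the payload before spending credits.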

Cursor IDE Discord

- Grok 3 Performance Underwhelms: Users express disappointment with Grok 3's performance, citing repetition and weak code execution, even after early access was granted.

- Some users suggest Grok 3 only catches up to existing models like Sonnet, despite some positive feedback on reasoning abilities.

- Sonnet Still Shines: Despite some reports of increased hallucinations, many users still favor Sonnet, especially version 3.5, over newer models like Grok 3 for coding tasks.

- Users suggest Sonnet 3.5 maintains an edge over Grok 3 in reliability and performance.

- MCP Server Setups Still Complex: Users discussed the complexities of setting up MCP servers for AI tools, referencing a MCP Server for the Perplexity API.

- Experimentation with single-file Python scripts as alternatives to traditional MCP server setups is underway, as shown by single-file-agents, for improved flexibility.

- Cursor Struggles Handling Large Codebases: Users report Cursor faces issues with rules and context management in large codebases, which necessitates manual rule additions.

- Some users found that downgrading Cursor versions helped improve performance, while others are trying auto sign cursor.

- AI's Instruction Following Questioned: Users express concern about whether AI models properly process their instructions, with some suggesting including explicit checks in prompts.

- Quirky approaches are recommended to test AI's adherence to guidance, which indicates a need for insights into how AI models handle instructions across platforms.

Interconnects (Nathan Lambert) Discord

- Grok 3's Vibe Check Passes but Depth Lags: Early reviews of Grok 3 suggest it meets frontier-level standards but is perceived as 'vanilla', struggling with technical depth and attention compression, while early versions broke records on the Arena leaderboard.

- Reviewers noted that Grok 3 struggles with providing technical depth in explanations and has issues with attention compression during longer queries, especially compared to R1 and o1-pro.

- Llama 4 Rumored to Be Scrapped: Despite Zuckerberg's announcement that Llama 4's pre-training is complete, speculation suggests it may be scrapped due to pressure from competitors such as DeepSeek.

- Concerns arise that competitive pressures will heavily influence the final release strategy for Llama 4, underscoring the rapid pace of AI development, with a potential release as a fully multimodal omni-model.

- Thinking Machines Lab Re-emerges with Murati: The Thinking Machines Lab, co-founded by industry leaders like Mira Murati, has launched with a mission to bridge gaps in AI customizability and understanding. See more details on their official website.

- Committed to open science, the lab plans to prioritize human-AI collaboration, aiming to make advancements accessible across diverse domains, prompting comparisons to the historic Thinking Machines Corporation.

- Debate Explodes Over Eval Methodologies' Effectiveness: Ongoing discussions question the reliability of current eval methods, with arguments that the industry lacks strong testing frameworks, and that some models are gaming the MMLU Benchmark.

- Companies are being called out for focusing on superficial performance metrics instead of substantive testing that truly reflects capabilities, calling for new benchmarks such as SWE-Lancer, with 1,400 freelance software engineering tasks from Upwork, valued at $1 million.

- GPT-4o Copilot Turbocharges Coding: A new code completion model, GPT-4o Copilot, is in public preview for various IDEs, promising to enhance coding efficiency across major programming languages.

- Fine-tuned on a massive code corpus exceeding 1T tokens, this model is designed to streamline coding workflows, providing developers with more efficient tools according to Thomas Dohmke's tweet.

LM Studio Discord

- DeepSeek Model Fails Loading: Users reported a model loading error with DeepSeek caused by an invalid hyperparameter (n_rot: 128, expected 160).

- The error log included memory, GPU (such as the 3090), and OS details, suggesting hardware or configuration constraints; when the model does load, its reasoning ability makes it a go-to for technical tasks.

- Dual Model Inference Under Scrutiny: Members have been experimenting with using a smaller model for reasoning and a larger one for output, detailing successful past projects in this area.

- While the concept shows promise, its implementation requires manual coding because LM Studio lacks direct support for dual model inference.

- Whisper Model Setup: Users discussed utilizing Whisper models for transcription, recommending specific setups contingent on CUDA versions.

- Proper configuration, particularly regarding CUDA compatibility, is essential when working with Whisper.

- AMD Enters the Chat: AMD's Ryzen AI MAX came up and was compared against Nvidia GPUs, alongside the M4 edge and the upcoming 5070, which promise performance at reduced power consumption, according to this review.

- Users are drawing comparisons with Nvidia GPUs and discussing the potential performance of AMD's new hardware.

- Legacy Tesla K80 Clustering?: AI Engineers discussed the feasibility of clustering older Tesla K80 GPUs for their substantial VRAM, with noted concerns about power efficiency.

- Experience with Exo in clustering setups, involving both PCs and MacBooks, was shared, with noted issues arising when loading models simultaneously.

Eleuther Discord

- DeepSeek v2's Centroid routing is just vector weights: A user sought to confirm that the 'centroid' in the context of DeepSeek v2's MoE architecture refers to the routing vector weight.

- Another user confirmed this understanding, clarifying its role in the expert specialization within the model.

- Platinum Benchmarks Measure LLM Reliability: A new paper introduced the concept of 'platinum benchmarks' to improve the measurement of LLM reliability by minimizing label errors.

- Discussion centered on the benchmark's limitations, particularly that it only takes one sample from each model per question, prompting queries about its overall effectiveness.

- JPEG-LM Generates Images from Bytes: Recent work applies autoregressive models to generate images directly from JPEG bytes, detailed in the JPEG-LM paper.

- This approach leverages the generality of autoregressive LLM architecture for potentially easy integration into multi-modal systems.

- NeoX trails NeMo in Tokens Per Second: A user benchmarked NeoX at 19-20K TPS on an 80GB A100 GPU, contrasting with the 25-26K TPS achieved collaboratively in NeMo for a similar model.

- The group considered the impact of intermediate model sizes and the potential for TP communication overlap in NeMo as factors influencing the performance differences.

- Track Model Size, Data, and Finetuning for Scaling Laws: For a clear grasp of scaling laws, Stellaathena advised tracking model size, data, and finetuning methods.

- This approach addresses nebulous feelings about data usage and facilitates better model comparisons.
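The 'centroid' reading of DeepSeek v2's router can be made concrete: in the DeepSeek-V2 paper, each routed expert i has a learned vector e_i, a token's affinity to expert i is a softmax over the dot products of its hidden state with every e_i, and the top-k experts are kept with those scores as gates. A minimal pure-Python sketch of that gating step, assuming toy 2-dimensional vectors; shared experts, device-limited routing, and balance losses are omitted.

```python
import math


def route_token(hidden, centroids, top_k=2):
    """Return {expert_index: gate} for one token using centroid (dot-product) routing."""
    logits = [sum(h * c for h, c in zip(hidden, centroid)) for centroid in centroids]
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    scores = [e / total for e in exps]  # softmax affinities over all routed experts
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    return {i: scores[i] for i in top}  # gates are the raw softmax scores of the kept experts


centroids = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.7, 0.7]]
gates = route_token([2.0, 0.5], centroids, top_k=2)
print(sorted(gates))  # [0, 3]: experts whose centroids point closest to the token
```

Because each centroid is just a row of the router's weight matrix, "centroid" and "routing vector weight" describe the same parameter, and experts specialize on the region of hidden-state space their centroid points toward.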

Yannick Kilcher Discord

- Grok 3 Sparks Mixed Reactions: With Grok 3 launching, members are curious about its reasoning abilities and API costs, although some early assessments suggest it may fall behind OpenAI's models in certain aspects, per this tweet from Elon Musk.

- A live demo of Grok-3 sparked discussion, with some expressing they were 'thoroughly whelmed' by the product's presentation, according to this video.

- NURBS Gains Traction in AI: Members detailed the advantages of NURBS, noting their smoothness and better data structures compared to traditional methods, with the conversation shifting to the broader implications of geometric approaches in AI development.

- The group also discussed the potential for less overfitting by leveraging geometry-first approaches.

- Model Comparisons Spark Debate: There is debate about the fairness of comparisons between Grok and OpenAI's models, with some claiming that Grok's charts misrepresent its performance, as exemplified in this tweet.

- Concerns over 'maj@k' methods and how they may impact perceived effectiveness have emerged, fueling discussions on model evaluation standards.

- Deepsearch Pricing Questioned: Despite being described as state-of-the-art, the pricing of $40 for the new Deepsearch product prompted skepticism among users, especially given similar products released for free recently.

- One member cynically observed the dramatic pricing strategy as potentially exploitative given the competition.

- Community to Forge Hierarchical Paper Tree: Suggestions were made for a hierarchical tree of seminal papers focusing on dependencies and key insights, emphasizing the importance of filtering information to avoid noise.

- The community indicated a desire to leverage their expertise in identifying seminal and informative papers for better knowledge sharing.
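The maj@k concern is easiest to see side by side with single-sample scoring: maj@k samples k answers per question and grades only the most common one, which can lift a reported score well above what a single attempt would show. A minimal sketch, assuming the sampled answers are already collected as strings; the data are made up for illustration.

```python
from collections import Counter


def pass_at_1(samples, answer):
    """Score only the first sampled answer, as a single-shot evaluation would."""
    return samples[0] == answer


def maj_at_k(samples, answer, k):
    """Majority vote over the first k samples; ties go to the answer seen first."""
    most_common, _ = Counter(samples[:k]).most_common(1)[0]
    return most_common == answer


# Illustrative samples for three questions (made-up data).
questions = [
    (["12", "12", "15", "12"], "12"),
    (["7", "9", "9", "9"], "9"),
    (["a", "b", "b", "c"], "b"),
]
single = sum(pass_at_1(s, a) for s, a in questions) / len(questions)
majority = sum(maj_at_k(s, a, k=4) for s, a in questions) / len(questions)
print(f"single-shot: {single:.2f}, maj@4: {majority:.2f}")  # single-shot: 0.33, maj@4: 1.00
```

The same model looks three times stronger under maj@4 here, which is why charts should state whether a bar is single-shot or majority-voted.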

Stability.ai (Stable Diffusion) Discord

- Xformers Triggers GPU Grief: Users reported issues with xformers defaulting to CPU mode and demanding specific PyTorch versions, but one member suggested ignoring warning messages.

- A clean reinstall and adding --xformers to the command line were recommended as a potential fix.

- InvokeAI Is Top UI Choice for Beginners: Many users suggested InvokeAI as an intuitive UI for newcomers, despite personally preferring ComfyUI for better functionality.

- Community members generally agreed that InvokeAI's simplicity makes it more accessible, given that complex systems like ComfyUI can overwhelm novices.

- Stable Diffusion Update Stagnation Sparks Ire: Concerns arose about the lack of updates in the Stable Diffusion ecosystem, especially after A1111 stopped supporting SD 3.5, leading to user dissatisfaction.

- Users expressed confusion due to outdated guides and incompatible branches with newer technologies, creating frustration.

- Anime Gender Classifier Sparks Curiosity: A user sought guidance on how to segregate male and female anime bboxes for inpainting using an anime gender classifier from Hugging Face.

- The classification method is reportedly promising but requires integration expertise with existing workflows in ComfyUI.

- Printer's Poor Usability Prompts Puzzlement: An IT worker shared a humorous anecdote about a defective printer, pointing out how even crystal-clear instructions can be misunderstood, causing confusion.

- The discussion pivoted to general misunderstandings related to simple signs and the requirement for clearer communication.

GPU MODE Discord

- Torch Compile Under the Microscope: A member explained that when torch.compile() is applied to a PyTorch model, TorchDynamo analyzes the model's Python bytecode and extracts an FX graph, which is then compiled into GPU kernel calls; there was interest in how graph breaks are handled during compilation.

- A member shared a detailed PDF on the internals of PyTorch's Inductor, providing in-depth information.

- CUDA Memory Transfers Gain Async Boost: To enhance speed when transferring large constant vectors in CUDA, it's recommended to use cudaMemcpyAsync with cudaMemcpyDeviceToDevice for better performance.

- In the context of needing finer control over data copying on A100 GPUs, a suggestion was made to use cub::DeviceCopy::Batched as a solution.

- Triton 3.2.0 Prints Unexpected TypeError: Using TRITON_INTERPRET=1 with print() in PyTorch's Triton 3.2.0 package results in a TypeError stating that kernel() got an unexpected keyword argument 'num_buffers_warp_spec'.

- A member is addressing a performance degradation issue in a Triton kernel triggered by using low-precision inputs like float8, pointing to an increase in shared memory access bank conflicts and the lack of resources for resolving bank conflicts in Triton compared to CUDA's granular control.

- Native Sparse Attention Gets Praise: Discussion surrounds the paper on Native Sparse Attention (paper link), noting its hardware alignment and trainability that could revolutionize model efficiency.

- The GoLU activation function reduces variance in the latent space compared to GELU and Swish, while maintaining robust gradient flow (see paper link).

- Dynasor Slashes Reasoning Costs: Dynasor cuts reasoning system costs by up to 80% without the need for model training, demonstrating impressive token efficiency using certainty to halt unnecessary reasoning processes (see demo).

- Enhanced support for hqq in vllm allows running lower bit models and on-the-fly quantization for almost any model via GemLite or the PyTorch backend, with new releases announced alongside appealing patching capabilities, promising wider compatibility across vllm forks (see Mobius Tweet).
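For reference, the three activations named in the GoLU item can be written in a few lines. This sketch assumes GoLU is defined as x times the standard Gompertz function, exp(-exp(-x)), and uses the exact erf-based GELU and Swish with beta = 1; it only evaluates the functions and does not reproduce the paper's variance or gradient-flow claims.

```python
import math


def gelu(x):
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def swish(x):
    """Swish with beta = 1 (also known as SiLU): x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def golu(x):
    """GoLU, assumed here as x * Gompertz(x) with Gompertz(x) = exp(-exp(-x))."""
    return x * math.exp(-math.exp(-x))


for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={x:+.1f}  GELU={gelu(x):+.4f}  Swish={swish(x):+.4f}  GoLU={golu(x):+.4f}")
```

Unlike the GELU and Swish gates, Phi(x) and sigmoid(x), which are point-symmetric about (0, 0.5), the Gompertz gate is asymmetric: it equals exp(-1), about 0.37, at zero and approaches 0 for negative inputs much faster than it approaches 1 for positive ones.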

Nous Research AI Discord

- Grok-3 exhibits inconsistent censorship: Contrary to initial impressions, Grok-3's level of censorship varies with the context in which it is used, such as on lmarena.

- While some are pleasantly surprised, others seek access to its raw outputs, reflecting a community interest in unfiltered AI responses.

- SWE-Lancer Benchmark Poses Real-World Challenges: The SWE-Lancer benchmark features over 1,400 freelance software engineering tasks from Upwork, collectively valued at $1 million, ranging from bug fixes to feature implementations.

- Evaluations reveal that frontier models struggle with task completion, emphasizing a need for improved performance in the software engineering domain.
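SWE-Lancer maps model performance to money: a solved task earns its freelance payout. Assuming a task counts as earned only when the model's solution passes that task's tests, the headline number is a payout-weighted pass rate, sketched below. The task IDs and payouts are made up, and the benchmark's own harness and grading are not reproduced.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Task:
    task_id: str
    payout_usd: float  # price the freelance task was listed at
    passed: bool       # whether the model's submission passed the task's tests


def earnings(tasks: Iterable[Task]) -> Tuple[float, float]:
    """Return (dollars earned, share of total dollar value earned)."""
    tasks = list(tasks)
    total = sum((t.payout_usd for t in tasks), 0.0)
    earned = sum((t.payout_usd for t in tasks if t.passed), 0.0)
    return earned, (earned / total if total else 0.0)


if __name__ == "__main__":
    sample = [  # made-up tasks, not from the benchmark
        Task("bugfix-1", 250.0, True),
        Task("feature-2", 1000.0, False),
        Task("bugfix-3", 500.0, True),
    ]
    earned, share = earnings(sample)
    print(f"earned ${earned:,.0f} ({share:.1%} of the listed value)")
```

Weighting by payout means one failed high-value feature task can outweigh several solved bug fixes.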

- Hermes 3's Censorship Claims Spark Debate: Although advertised as censorship-free, Hermes 3 reportedly refused to answer certain questions unless given specific system prompts.

- Speculation arises that steering through system prompts is necessary for its intended functionality, leading to discussions on balancing freedom and utility.

- Alignment Faking Raises Ethical Concerns: A YouTube video on 'Alignment Faking in Large Language Models' explores how individuals might feign shared values, paralleling challenges in AI alignment.

- This behavior is likened to the complexities AI models face when interpreting and mimicking alignment, raising ethical considerations in AI development.

- Open Source LLM Predicts Eagles Super Bowl Win: An open-source, LLM-powered pick'em bot predicts that the Eagles will win the Super Bowl; the bot outperformed 94.5% of players in ESPN's 2024 competition.

- The bot, stating 'The Eagles are the logical choice,' highlights a novel approach of using structured output for reasoning, showing potential for sports prediction.
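The bot's actual output schema isn't described here. A common pattern, assumed in this sketch, is a JSON Schema that puts a reasoning field before the answer field, so a provider that emits fields in schema order (an assumption about your provider) produces the reasoning before committing to a pick; the reply is then parsed and validated. PICK_SCHEMA, Pick, and parse_pick are hypothetical names.

```python
import json
from dataclasses import dataclass
from typing import Tuple

# Hypothetical JSON Schema for a pick; "reasoning" is listed first so that a
# model filling fields in order writes its reasoning before its pick.
PICK_SCHEMA = {
    "type": "object",
    "properties": {
        "reasoning": {"type": "string"},
        "winner": {"type": "string"},
        "confidence": {"type": "number", "minimum": 0, "maximum": 1},
    },
    "required": ["reasoning", "winner", "confidence"],
}


@dataclass
class Pick:
    reasoning: str
    winner: str
    confidence: float


def parse_pick(raw: str, teams: Tuple[str, str]) -> Pick:
    """Parse a model's JSON reply and check it against the allowed teams."""
    data = json.loads(raw)
    pick = Pick(
        reasoning=str(data["reasoning"]),
        winner=str(data["winner"]),
        confidence=float(data["confidence"]),
    )
    if pick.winner not in teams:
        raise ValueError(f"winner must be one of {teams}, got {pick.winner!r}")
    if not 0.0 <= pick.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return pick


if __name__ == "__main__":
    reply = '{"reasoning": "Stronger run defense.", "winner": "Eagles", "confidence": 0.7}'
    print(parse_pick(reply, ("Eagles", "Chiefs")))
```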

Latent Space Discord

- Thinking Machines Lab Bursts onto the Scene: Thinking Machines Lab, founded by AI luminaries, aims to democratize AI systems and close gaps in public understanding of frontier AI; the lab was announced on Twitter.

- The team, including creators of ChatGPT and Character.ai, commits to open science via publications and code releases, as noted by Karpathy.

- Perplexity unleashes Freedom-Tuned R1 Model: Perplexity AI open-sourced R1 1776, a version of the DeepSeek R1 model post-trained to deliver uncensored, factual information, first announced on Twitter.

- Labeled 'freedom tuning' by social media users, the model's purpose was met with playful enthusiasm (as seen in this gif).

- OpenAI Throws Down with SWE-Lancer Benchmark: OpenAI introduced SWE-Lancer, a new benchmark for evaluating AI coding performance using 1,400 freelance software engineering tasks worth $1 million, announced on Twitter.

- The community expressed surprise at the name and speculated on the absence of certain models, hinting at strategic motives behind the benchmark.

- Grok 3 Hype Intensifies: The community discussed the potential capabilities of the upcoming Grok 3 model and its applications across various tasks.

- Despite excitement, skepticism lingered, with some humorously doubting its actual performance relative to expectations, according to this Twitter thread.

- Zed's Edit Prediction Model Enters the Ring: Zed launched an open next-edit prediction model, positioning itself as a potential rival to existing solutions like Cursor, according to their blog post.

- Users questioned how it differentiates itself and how useful it is compared to established tools such as Copilot and their more advanced features.

Notebook LM Discord

- Podcast Reboots Max Headroom: A user detailed their process for creating a Max Headroom podcast using Notebook LM for text generation and Speechify for voice cloning; the entire production took 40 hours.

- The user shared a link to their Max Headroom Rebooted 2025 Full Episode 20 Minutes on YouTube.

- LM Audio Specs Spark Debate: Users explored the audio generation capabilities of Notebook LM, emphasizing its utility for creating podcasts and advertisements, noting the time-saving benefits and script modification flexibility.

- A user reported issues with older paid accounts yielding subpar results, particularly with MP4 files.

- Google Product Design Draws Criticism: Participants voiced concerns about the limitations of Google's system design, highlighting the expectation for better performance as services become paid.

- They expressed frustration over technological shortcomings affecting user experience.

- Confusion about Podcast Creation Limits Aired: Users discussed what looked like a bug involving unexpected podcast creation limits, initially assuming the cap was three podcasts rather than a limit on chat queries.

- Others clarified that the actual limit is 50, and that it applies to chat queries, clearing up the confusion.

- Podcast Tone Needs More Tuning: A user inquired about modifying the tone and length of their podcast, only to learn that these adjustments primarily apply to NotebookLM responses, not podcasts.

- The conversation then pivoted to configuring chat settings to enhance user satisfaction, essentially using chat prompts to improve the tone of the podcast.

Modular (Mojo 🔥) Discord

- Polars vs Pandas Debate Ignites: Members are excited about the Polars library for dataframe manipulation because of its performance advantages over pandas; one member said Polars would be essential for dataframe work down the line.

- Interest is growing in integrating Polars with Mojo projects, which could open the door to a silicon-agnostic dataframe library usable both on single machines and in distributed settings.

- Gemini 2.0 as a Coding Companion: Members suggested using Gemini 2.0 Flash and Gemini 2.0 Flash Thinking for Mojo code refactoring, citing their understanding of Mojo, and recommended the Zed code editor with the Mojo extension found here.

- However, a member reported difficulty refactoring a large Python project into Mojo, suggesting that manual updates may be necessary given current tool limitations, made worse by the constraints of Mojo's borrow checker.

- Enzyme for Autodiff in Mojo?: The community discussed implementing automatic differentiation in Mojo using Enzyme, including a proposal to support it, while weighing the challenges of converting Mojo ASTs to MLIR for optimization.

- The key challenge lies in achieving this while adhering to Mojo's memory management constraints.
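Enzyme differentiates programs at the compiler-IR level (LLVM IR and MLIR), which is why lowering Mojo to MLIR matters for this proposal. To illustrate what any AD system computes, the sketch below instead uses forward-mode automatic differentiation with dual numbers via Python operator overloading; it is not how Enzyme works, and Dual, sin, and derivative are illustrative names.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A number val + der*eps with eps**2 == 0; `der` carries the derivative."""
    val: float
    der: float = 0.0

    @staticmethod
    def lift(x):
        # Treat plain numbers as constants (derivative 0).
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        o = Dual.lift(other)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __mul__(self, other):
        o = Dual.lift(other)
        # Product rule: (uv)' = u'v + uv'.
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__


def sin(x: Dual) -> Dual:
    # Chain rule: (sin u)' = cos(u) * u'.
    return Dual(math.sin(x.val), math.cos(x.val) * x.der)


def derivative(f, x: float) -> float:
    """Forward-mode derivative of f at x: seed the input with der = 1."""
    return f(Dual(x, 1.0)).der


if __name__ == "__main__":
    print(derivative(lambda x: x * x + 3 * x, 2.0))
    print(derivative(lambda x: x * sin(x), math.pi / 2))
```

Operator overloading like this needs every operation to go through the Dual type; differentiating at the IR level, as Enzyme does, avoids that but requires the language to lower to an IR the tool understands.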

- Global Variables Still Uncertain: Members expressed uncertainty regarding the future support for global variables in Mojo, with one humorously requesting global expressions.

- The request highlights the community's desire for greater flexibility in variable scoping, but it is uncertain whether it can mesh with Mojo's memory safety guarantees.

- Lists Under Fire for Speed: The community questioned the overhead of using List for stack implementations, suggesting List.unsafe_set to bypass bounds checking.

- Because copying objects into Lists can hurt speed, members shared a workaround that demonstrates moving objects instead, which matters most for very large data.

Nomic.ai (GPT4All) Discord

- GPT4All Seeks Low-Compute GPUs for Testing: Members discussed GPU requirements for testing a special GPT4All build, focusing on compute capability 5.0 GPUs like the GTX 750/750 Ti, with a member offering their GTX 960.

- Concerns were raised about VRAM limitations and compatibility, influencing the selection process for suitable testing hardware.

- Deep-Research-Like Features Spark Curiosity: A member inquired about incorporating Deep-Research-like features into GPT4All, akin to functionalities found in other AI tools.

- The discussion centered on clarifying the specifics of such functionality and its potential implementation within GPT4All.

- Token Limit Reached, Requiring Payment: The implications of reaching a 10 million token limit for embeddings with Atlas were discussed, clarifying that exceeding this limit necessitates payment or the use of local models.

- It was confirmed that billing is based on total tokens embedded, and previous tokens cannot be deducted from the count, affecting usage strategies.

- Compute 5.0 Support Prompts a Cautious Approach: Members examined the risks of enabling support for compute capability 5.0 GPUs, raising concerns about potential crashes or other issues that might need fixing.

- The prevailing view was to avoid officially announcing support without thorough testing, ensuring stability and reliability.

LlamaIndex Discord

- LlamaIndex Builds LLM Consortium: Frameworks engineer Massimiliano Pippi implemented an LLM Consortium inspired by @karpathy, using LlamaIndex to gather responses from multiple LLMs on the same question.

- The approach aims to improve answer accuracy by pooling the responses of several models.
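The item doesn't say how the consortium combines answers. One simple aggregation, assumed in the sketch below, is a majority vote over normalized answers; Karpathy's original idea and real implementations may instead use an arbiter model to synthesize a final response. The model callables are hypothetical stubs standing in for real LlamaIndex LLM calls.

```python
from collections import Counter
from typing import Callable, Dict, Mapping, Tuple

Model = Callable[[str], str]  # stand-in for a real LLM call


def consortium_answer(question: str, models: Mapping[str, Model]) -> Tuple[str, float, Dict[str, str]]:
    """Ask every model the same question and return the majority answer.

    Returns (winning answer, share of models that gave it, per-model answers).
    Answers are compared case-insensitively after trimming whitespace.
    """
    if not models:
        raise ValueError("need at least one model")
    answers = {name: model(question).strip() for name, model in models.items()}
    votes = Counter(a.lower() for a in answers.values())
    top_key, top_count = votes.most_common(1)[0]
    # Report the winning answer in the casing of the first model that gave it.
    winner = next(a for a in answers.values() if a.lower() == top_key)
    return winner, top_count / len(answers), answers


if __name__ == "__main__":
    stub_models = {  # hypothetical stubs in place of real LLM clients
        "model_a": lambda q: "Paris",
        "model_b": lambda q: " paris ",
        "model_c": lambda q: "Lyon",
    }
    print(consortium_answer("What is the capital of France?", stub_models))
```

Exact-match voting only works for short, closed-form answers; free-form responses need an arbiter or a similarity-based grouping step.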

- Mistral Saba Speaks Arabic: @mistralAI released Mistral Saba, a new small model focused on Arabic, with day-0 integration support; get started quickly with pip install llama-index-llms-mistralai here.

- It's unclear if the model is actually any good.

- Questionnaires Get Semantically Retrieved: @patrickrolsen built a full-stack app that allows users to answer vendor questionnaires through semantic retrieval and LLM enhancement, tackling the complexity of form-filling here.

- The app exemplifies a core use case for knowledge agents, showcasing a user-friendly solution for retrieving previous answers.
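The app's actual retrieval stack isn't described here, but the core step, finding the most similar previously answered question, can be sketched with cosine similarity. As a loudly labeled assumption, the toy embed function below uses word counts in place of a real embedding model, and the questionnaire history is made up.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def best_previous_answer(question: str, history: List[Tuple[str, str]]) -> Tuple[str, float]:
    """Return the stored answer whose question is most similar, with its score."""
    if not history:
        raise ValueError("history is empty")
    q = embed(question)
    score, answer = max((cosine(q, embed(past_q)), past_a) for past_q, past_a in history)
    return answer, score


if __name__ == "__main__":
    history = [  # made-up questionnaire history
        ("Do you encrypt data at rest?", "Yes, with AES-256."),
        ("Where are your servers located?", "EU and US regions."),
    ]
    print(best_previous_answer("Is customer data encrypted at rest?", history))
```

Word counts miss paraphrases such as "encrypt" versus "encrypted", which is exactly the gap a learned embedding model closes; an LLM step can then adapt the retrieved answer to the new wording.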

- Filtering Dates is Challenging: Many vector stores currently lack direct support for filtering by date, so implementing it effectively requires custom handling or store-specific query languages.

- A member commented that storing the year as a separate metadata field is essential for filtering.
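A minimal sketch of the member's suggestion, assuming documents carry an ISO date string at ingest: derive a separate integer year field and filter on it. The filter runs in plain Python here; in a real vector store the same condition would be written in that store's own metadata-filter syntax. The Doc class and helper names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List


@dataclass
class Doc:
    text: str
    metadata: Dict[str, object] = field(default_factory=dict)


def with_year(text: str, iso_date: str) -> Doc:
    """Store the full date plus a separate integer 'year' field at ingest time."""
    d = date.fromisoformat(iso_date)
    return Doc(text, {"date": iso_date, "year": d.year})


def filter_by_year(docs: List[Doc], start_year: int, end_year: int) -> List[Doc]:
    """Keep docs whose integer 'year' metadata lies in [start_year, end_year]."""
    return [
        d for d in docs
        if isinstance(d.metadata.get("year"), int)
        and start_year <= d.metadata["year"] <= end_year
    ]


if __name__ == "__main__":
    docs = [
        with_year("Q2 2022 report", "2022-05-01"),
        with_year("Q1 2024 report", "2024-03-15"),
        Doc("undated note"),
    ]
    print([d.text for d in filter_by_year(docs, 2023, 2025)])
```

Storing the year as an integer lets stores that only support numeric range filters handle date ranges without native date types.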

- RAG Gets More Structured: Members discussed examples of RAG (Retrieval-Augmented Generation) techniques that solely rely on JSON dictionaries to improve document matching efficiency.

- They shared insights on how integrating structured data can enhance traditional search methods for better query responses.

Torchtune Discord

- Byte Latent Hacks Qwen Fine-Tune: A member attempted to reimplement the Byte Latent Transformer as a fine-tuning method on Qwen, drawing inspiration from this GitHub repo.

- Despite a decreasing loss, the experiment produces nonsense outputs, requiring further refinement for TorchTune integration.
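The Byte Latent Transformer groups raw bytes into variable-length patches; in the paper's entropy-based scheme, a new patch begins where a small byte-level language model is uncertain about the next byte. The sketch below applies that global-threshold rule to precomputed entropies. The entropy values and threshold are made up, the small model that would produce them is omitted, and this is not the member's Qwen fine-tune or the linked repo's code.

```python
from typing import List, Sequence


def entropy_patches(data: bytes, entropies: Sequence[float], threshold: float) -> List[bytes]:
    """Split bytes into patches, starting a new patch at each byte whose
    next-byte entropy exceeds `threshold`.

    entropies[i] is assumed to come from a small byte-level LM predicting
    byte i; computing it is outside this sketch.
    """
    if len(data) != len(entropies):
        raise ValueError("need one entropy value per byte")
    patches: List[bytes] = []
    start = 0
    for i in range(1, len(data)):
        if entropies[i] > threshold:
            patches.append(data[start:i])
            start = i
    if data:
        patches.append(data[start:])
    return patches


if __name__ == "__main__":
    text = b"hello world"
    made_up_entropies = [3.0, 1.0, 1.0, 1.0, 1.0, 2.5, 3.1, 1.0, 1.0, 1.0, 1.0]
    print(entropy_patches(text, made_up_entropies, threshold=2.0))
```

Predictable stretches become long patches and uncertain positions start new ones, so compute is spent where the next byte is hard to predict.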

- TorchTune Grapples with Checkpoint Logic: With the introduction of step-based checkpointing, the current resume_from_checkpoint logic, which saves checkpoints into ${output_dir}, may require adjustments.

- One proposed solution keeps the existing functionality while letting users resume from either the latest checkpoint or a specific one, per discussion in this github issue.
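One way to offer "resume from the latest or a specific checkpoint" is sketched below, assuming (hypothetically, not necessarily torchtune's actual layout) that each step-based checkpoint is saved to its own step_<N> subdirectory of ${output_dir}. The function name is illustrative.

```python
import re
import tempfile
from pathlib import Path
from typing import Dict, Optional, Union

# Hypothetical layout: one subdirectory per saved step, e.g. output_dir/step_1000.
STEP_DIR = re.compile(r"step_(\d+)")


def find_checkpoint(output_dir: Union[str, Path], step: Optional[int] = None) -> Optional[Path]:
    """Return the checkpoint directory for `step`, or the latest one if step is None."""
    root = Path(output_dir)
    found: Dict[int, Path] = {}
    if root.is_dir():
        for child in root.iterdir():
            m = STEP_DIR.fullmatch(child.name)
            if m and child.is_dir():
                found[int(m.group(1))] = child
    if not found:
        return None
    if step is None:
        # Compare step numbers numerically, so step_1000 beats step_200.
        return found[max(found)]
    return found.get(step)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("step_50", "step_200", "step_1000"):
            (Path(tmp) / name).mkdir()
        print(find_checkpoint(tmp).name, find_checkpoint(tmp, 50).name)
```

Sorting directory names as strings would pick step_50 over step_1000, which is why the step number is parsed first.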

- Unit Tests Spark Dependency Debate: Concerns arose about needing to switch between four different installs to run unit tests, prompting a discussion on streamlining the unit-testing workflow.

- The proposal involves making certain dependencies optional for contributors, aiming to balance local experience and contributor convenience.
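A minimal sketch of optional dependencies in a test suite, using only the standard library: tests that need an extra package are skipped when it isn't installed, so contributors can run the core suite from a single install. The package name some_optional_dep is hypothetical; in a pytest-based suite, pytest.importorskip serves the same purpose.

```python
import importlib.util
import unittest


def has_module(name: str) -> bool:
    """True if top-level module `name` is importable in this environment."""
    return importlib.util.find_spec(name) is not None


class CoreTests(unittest.TestCase):
    def test_always_runs(self):
        self.assertEqual(sum([1, 2, 3]), 6)


@unittest.skipUnless(has_module("some_optional_dep"), "optional dependency not installed")
class OptionalDepTests(unittest.TestCase):
    def test_uses_optional_dep(self):
        import some_optional_dep  # hypothetical package name

        self.assertIsNotNone(some_optional_dep)


if __name__ == "__main__":
    unittest.main()
```

Skipped tests still show up in the report, so missing coverage stays visible instead of silently disappearing.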

- RL Faces Pre-Training Skepticism: Members debated the practicality of Reinforcement Learning (RL) for pre-training, with many expressing doubts about its suitability.

- One member admitted they found the idea of using RL for pre-training terrifying, pointing to a significant divergence in opinions.

- Streamlining PR Workflow: A suggestion was made to enable cross-approval and merging of PRs among contributors in personal forks to accelerate development.

- This enhancement seeks to facilitate collaboration on issues such as the step-based checkpointing PR and enhance input from various team members.

MCP (Glama) Discord

- Glama Users Ponder MCP Server Updates: Users are seeking clarity on how to make Glama recognize changes to their MCP server, highlighting the need for clear configuration inputs.

- There was also a request to transform OpenRouter's documentation (openrouter.ai) into an MCP format for easier access.

- Anthropic Homepage Plunges into Darkness, Haiku & Sonnet Spark Hope: Members reported accessibility issues with the Anthropic homepage, while anticipating the release of Haiku 3.5 with tool and vision support.

- Conversations also alluded to a potential imminent release of Sonnet 4.0, stirring curiosity about its new functionalities.

- Code Whisperer: MCP Server Debugging Debuts: An MCP server with a VS Code extension now lets LLMs like Claude interactively debug code across languages; the project is available on GitHub.

- The tool enables inspection of variable states during execution, unlike current AI coding tools that often rely on logs.
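MCP messages are JSON-RPC 2.0, and a client invokes a server tool with a tools/call request carrying the tool name and its arguments. The sketch below only builds such requests. The tool names (set_breakpoint, evaluate) and their arguments are hypothetical, since the debugging server's actual tool list isn't given here, and the transport and initialize handshake are omitted.

```python
import itertools
import json
from typing import Any, Dict

_request_ids = itertools.count(1)  # JSON-RPC request ids must be unique per session


def tools_call(name: str, arguments: Dict[str, Any]) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


if __name__ == "__main__":
    # Hypothetical debugger tools: pause at a line, then inspect a variable.
    print(tools_call("set_breakpoint", {"file": "app.py", "line": 42}))
    print(tools_call("evaluate", {"expression": "user.balance"}))
```

Because the model reads live variable values through tool results like these, it can reason about runtime state rather than relying on log output alone.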

- Clear Thought Server Tackles Problems with Mental Models: A Clear Thought MCP Server was introduced, designed to enhance problem-solving using mental models and systematic thinking methodologies (NPM).

- It aims to improve decision-making in development through structured approaches; members also discussed how the competing tool Goose can read and resolve terminal errors.

LLM Agents (Berkeley MOOC) Discord

- Berkeley MOOC awards Certificates to Over 300: The Berkeley LLM Agents MOOC awarded certificates to 304 trailblazers, 160 masters, 90 ninjas, 11 legends, and 7 honorees.

- Out of the 7 honorees, 3 were ninjas and 4 were masters, showing the competitive award selection process.

- Fall24 MOOC Draws 15k Students: The Fall24 MOOC saw participation from 15k students, though most audited the course.

- This high number indicates significant interest in the course materials and content.

- LLM 'Program' Clarified as Actual Programming Code: In the lecture on Inference-Time Techniques, 'program' refers to actual programming code rather than an LLM, framed as a competitive programming task.

- Participants were pointed to slide 46 to support this interpretation of programs.