[AINews] Vision Everywhere: Apple AIMv2 and Jina CLIP v2

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Autoregressive objectives are all you need.

AI News for 11/22/2024-11/23/2024. We checked 7 subreddits, 433 Twitters and 28 Discords (211 channels, and 2674 messages) for you. Estimated reading time saved (at 200wpm): 265 minutes. You can now tag @smol_ai for AINews discussions!

Inline with the general theme of everyone going multimodal (Pixtral, Llama 3.2, Pixtral Large), advancements in "multimodal" (really just vision) embeddings are very foundational. This makes Apple and Jina's releases in the past 48 hours particularly welcome.

Apple AIMv2

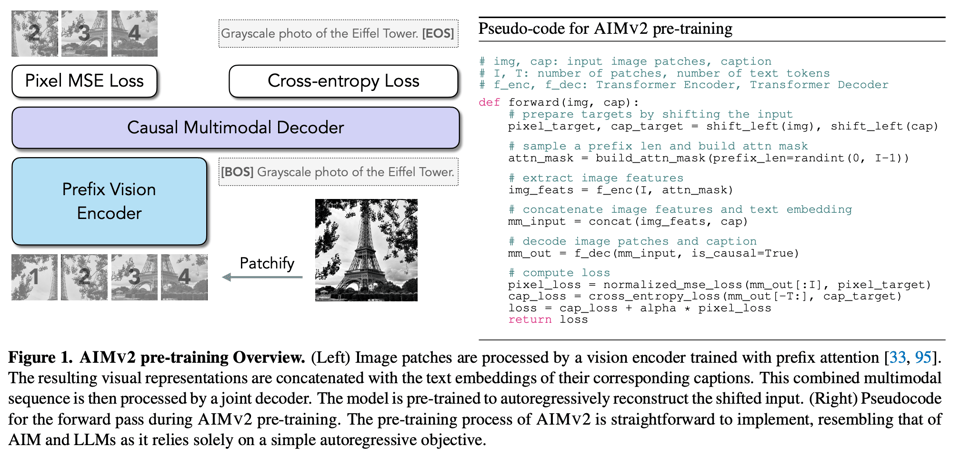

Their paper (GitHub here) details "a novel method for pre-training of large-scale vision encoders": pairing the vision encoder with a multimodal decoder that autoregressively generates raw image patches and text tokens.

This extends last year's AIMv1 work on vision models pre-trained with an autoregressive objective, which added T5-style prefix attention and a token-level prediction head, managing to pre-train a 7b AIM that achieves 84.0% on ImageNet1k with a frozen trunk.

The main update is introducing joint visual and textual objectives, which seem to scale up very well:

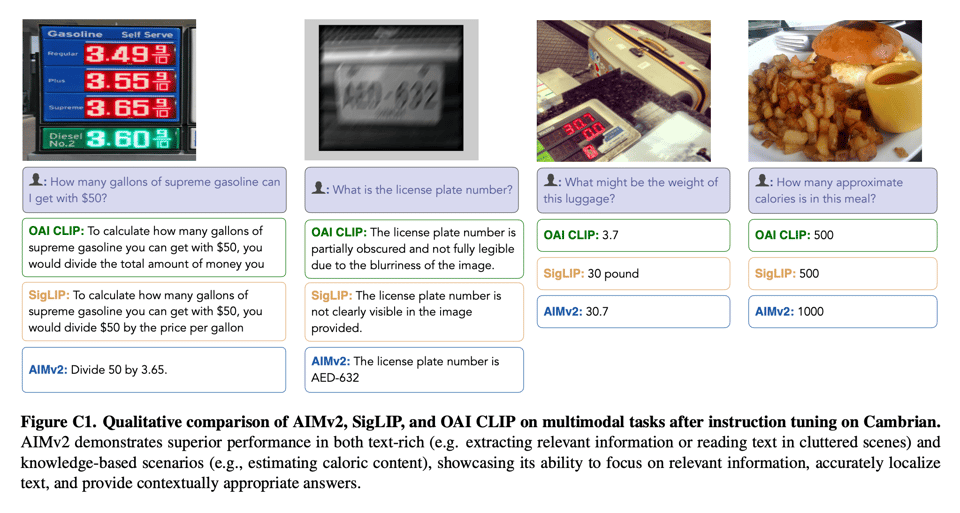

AIMV2-3B now achieves 89.5% accuracy on the same benchmark - smaller but better. The qualitative vibes are also excellent:

Jina CLIP v2

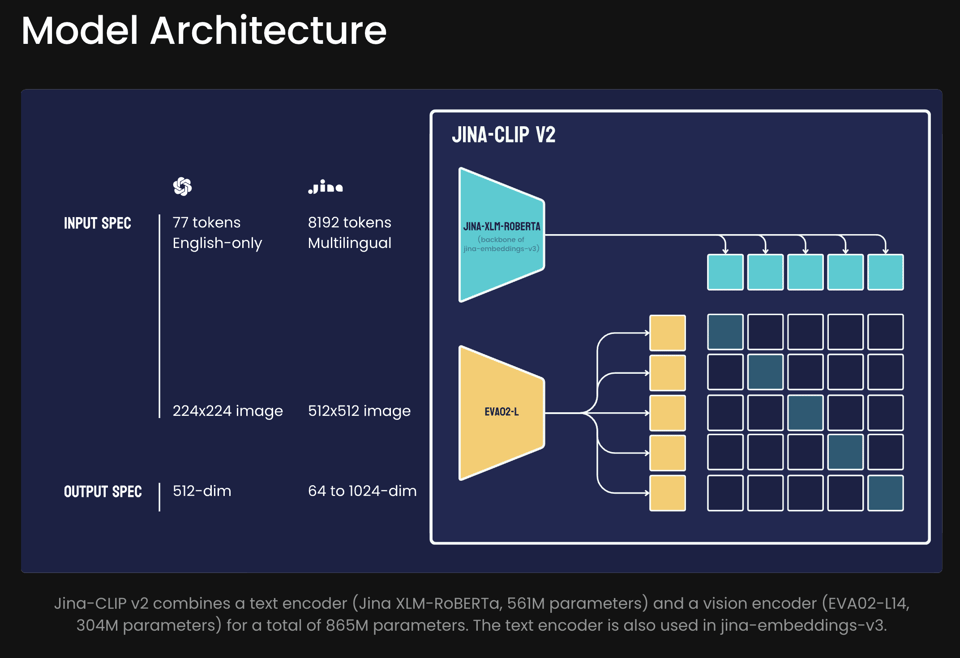

While Apple did more foundational VQA research, Jina's new CLIP descendant is immediately useful for multimodal RAG workloads. Jina released embeddings-v3 a couple months ago, and now is rolling its text encoder into its CLIP offering:

The tagline speaks to how many state of the art features Jina have packed into their release: "a 0.9B multimodal embedding model with multilingual support of 89 languages, high image resolution at 512x512, and Matryoshka representations."

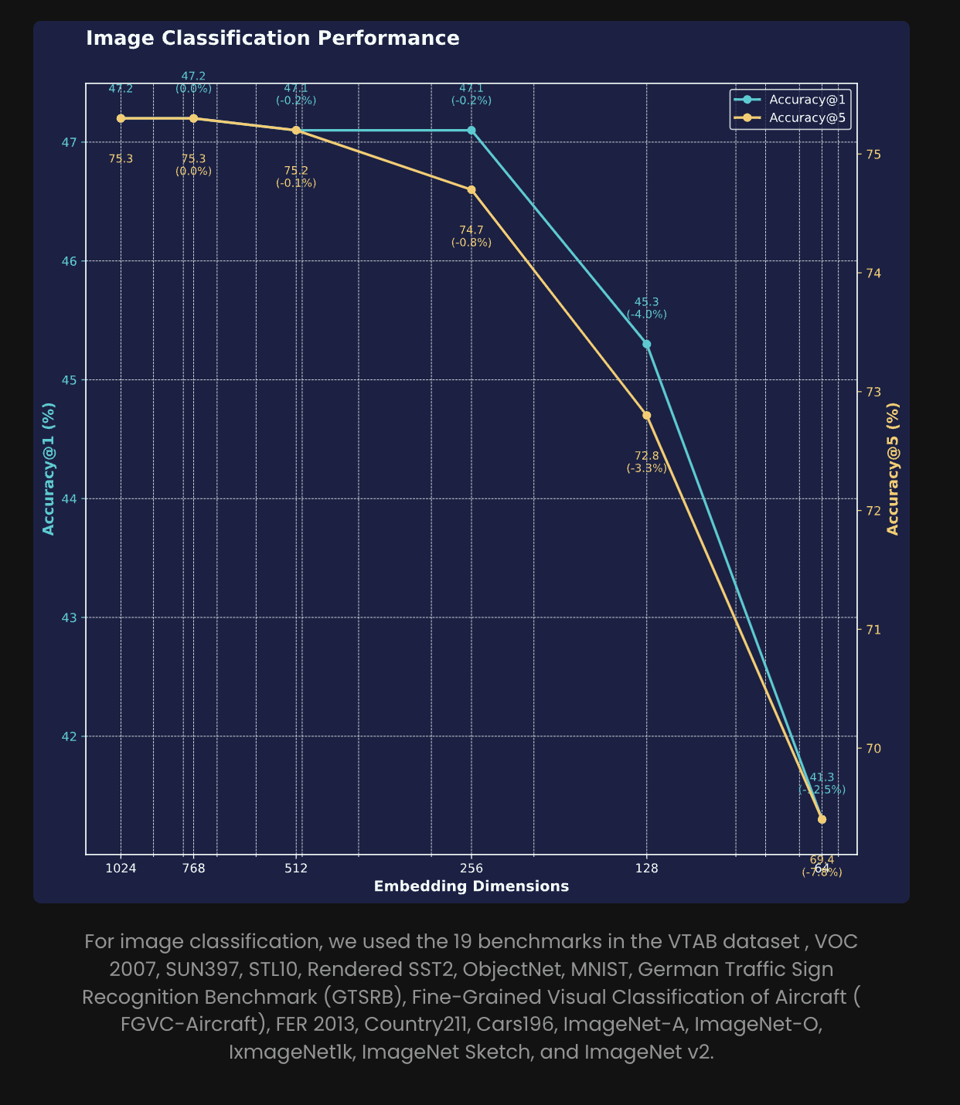

The Matryoshka embeddings are of particular distinction: "Compressing from 1024 to 64 dimensions (94% reduction) results in only an 8% drop in top-5 accuracy and 12.5% in top-1, highlighting its potential for efficient deployment with minimal performance loss."

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

1. Cutting-edge AI Model Releases and Developments: Tülu 3, AIMv2, and More

- Tülu 3 models by @allen_ai: Tülu 3 family, based on Llama 3.1, includes 8B and 70B models and offers 2.5x faster inference compared to Tulu 2. It's aligned using SFT, DPO, and RL-based methods with all resources publicly available.

- Discussions on Tülu 3 stress its competitiveness with other leading LLMs like Claude 3.5 and Llama 3.1 70B. The release includes public access to datasets, model checkpoints, and training code for practical experimentation.

- Effective Open Science for Tülu models is emphasized, praising the incorporation of new techniques like RL with Verifiable Rewards (RLVR).

- Apple's AIMv2 Vision Encoders: AIMv2 encoders outperform CLIP and SigLIP in multimodal benchmarks. They feature impressive results in open-vocabulary object detection and high ImageNet accuracy with a frozen trunk.

- AIMv2-3B achieves 89.5% on ImageNet using integrated transformers code.

- Jina-CLIP-v2 by JinaAI: A multimodal model with support for 89 languages and 512x512 image resolution, built to enhance text and image interactions. The model shows strong performance on retrieval and classification tasks.

2. AI Agents Enhancements & Applications: FLUX Tools, Insights from Suno

- FLUX Tools Suite by Black Forest Labs: New specialized models offer enhanced control over AI image generation. Available in anychat via @replicate API, supporting new Canny, Depth, and Redux models.

- FLUX tools empower developers to create engaging multimedia content with greater precision and customization.

- Suno and AI in Music Production: Suno's v4 is used for creative music endeavors, showcasing AI's transformative role in music production with Bassel from Suno delivering new AI-generated compositions.

- Additionally, discussions on integrating AI with beatboxing reflect on Suno's unique contributions to music creation.

3. AI, Science, and Society

- Generating Scientific Discoveries with AI: A panel by GoogleDeepMind spotlights AI revolutionizing scientific methods and aiding discovery. Key participants include Eric Topol, Pushmeet, Alison Noble, and Fiona Marshall.

- Baby-AIGS System Research explores AI's potential in scientific discovery through falsification and ablation studies, highlighting early-stage research focusing on executable scientific proposals.

- AI and Scientific Method Discussions: A deeper look into how AI is reshaping scientific methodologies with distinguished experts sharing insights.

- Participants debate AI's role in fostering new scientific breakthroughs and its intersection with biomedical research.

4. Advancements in AI Ethics, Red Teaming, and Bug Fixing

- OpenAI's Red Teaming Enhancements: White papers on red teaming disclose new methods involving external red teamers and automated systems, enhancing AI safety evaluations.

- These efforts aim to enrich AI's robustness by actively involving diverse human feedback in testing.

- MarsCode Agent in Bug Fixing: ByteDance's MarsCode Agent showcases significant success in automated bug fixing on the SWE-bench Lite benchmark, stressing the importance of precise error localization in problem resolution.

- Challenge areas are highlighted for future innovation in automated workflows.

5. Collaborations and Innovations in Companies and Tools

- Anthropic and Amazon's $4B Collaboration: A partnership to develop next-generation AI models focusing on AWS infrastructure, illustrating a strong alliance in AI development.

- This strategic investment emphasizes using Amazon-developed silicon to optimize training processes.

- LangGraph Voice Interaction Features: Integration of voice recognition capabilities with AI agents, leveraging OpenAI's Whisper and ElevenLabs for seamless voice interfaces.

- LangGraph enhances AI's adaptability in real-world applications, offering more natural interactions.

6. Memes, Humor, and Social Commentary

- Humor in Tech Tensions with LLM Benchmarks: A satirical take on AI model performance 'wars' and benchmarks, mocking the obsession with evaluation scores as reductive and misleading.

- Community voices express skepticism over the relevance of certain benchmarks in real-world AI model performance.

- Commentary on Elon Musk's Ventures: Wry remarks scrutinize the narrative of free speech as perceived on platforms owned by tech giants like Musk, challenging assumptions of open discourse.

- Critical reflections on changes in major tech platforms and their implications for genuine free expression.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek Emerges as Leading Chinese Open Source AI Company

- Chad Deepseek (Score: 1486, Comments: 174): DeepSeek created a model that matches or exceeds OpenAI's performance while using only 18,000 GPUs compared to OpenAI's 100,000 GPUs. This efficiency demonstrates significant improvements in model training approaches and resource utilization in large language model development.

- Strong community support for Chinese open-source AI companies including Qwen, DeepSeek, and Yi, with users highlighting their efficiency in achieving comparable results with fewer resources (18K GPUs vs OpenAI's 100K GPUs).

- Discussion around model performance focused on practical capabilities, with users reporting success in mathematical reasoning (specifically the "-4 squared" problem) and coding tasks, while some noted limitations in creative reasoning and nuanced responses.

- Debate emerged about political censorship in AI models, with users discussing how both Chinese and Western models handle sensitive historical topics, and the impact of GPU export restrictions potentially incentivizing more open-source development from Chinese companies.

- Competition is still going on when i am posting this.. DeepSeek R1 lite has impressed me more than any model releases, qwen 2.5 coder is not capable for these competitions , but deepseek r1 solved 4 of 7 , R1 lite is milestone in open source ai world truly (Score: 41, Comments: 10): DeepSeek R1 Lite demonstrates strong performance in an ongoing coding competition by solving 4 out of 7 problems, outperforming Qwen 2.5 Coder. The model's success marks significant progress in open-source AI development.

- DeepSeek R1 Lite is rumored to be a 16B parameter model, though performance suggests it could be larger. Community speculation points to it being a 16B MoE model, given the timing with OpenAI's O1 release and current GPU shortages.

- Model weights are not yet publicly available but are expected to be released "soon". Community sentiment emphasizes waiting for actual open-source release before declaring it a milestone.

- No detailed model information or technical specifications have been officially released yet, making performance claims preliminary.

Theme 2. Innovative Model Architectures: Marco-o1 and OpenScholar

- Marco-o1 from MarcoPolo Alibaba and what it proposes (Score: 40, Comments: 0): Marco-o1, developed by MarcoPolo Alibaba, combines Chain of Thought (CoT), Monte Carlo Tree Search (MCTS), and reasoning action to tackle open-ended problems without established solutions, differentiating itself from OpenAI's o1. The model integrates these three components to enable logical problem-solving, optimal path selection, and dynamic detail adjustment, while aiming to excel at both writing and reasoning tasks across multiple domains, with the model available at AIDC-AI/Marco-o1.

- OpenScholar: The open-source AI outperforming GPT-4o in scientific research (Score: 98, Comments: 2): OpenScholar, developed by Allen Institute for AI and University of Washington, combines retrieval systems with a fine-tuned language model to provide citation-backed research answers, outperforming GPT-4o in factuality and citation accuracy. The system implements a self-feedback inference loop for output refinement and is available as an open-source model on Hugging Face, making it more accessible to smaller institutions and researchers in developing countries, despite limitations in open-access paper availability.

- The AI2 blog post provides more comprehensive technical details about OpenScholar compared to the VentureBeat coverage, as referenced at allenai.org/blog/openscholar.

Theme 3. System Prompts and Tokenizer Optimization Insights

- Leaked System prompts from v0 - Vercels AI component generator. (100% legit) (Score: 292, Comments: 54): A developer leaked Vercel's V0 system prompts which reveal the AI tool uses MDX components, specialized code blocks, and a structured thinking process with internal reminders for generating UI components. The system includes detailed specifications for handling React, Node.js, Python, and HTML code blocks, with emphasis on using shadcn/ui library, Tailwind CSS, and maintaining accessibility standards, as documented in their GitHub repository.

- The discussion suggests V0 likely uses Claude/Sonnet rather than GPT-4 due to its XML tag structure and proficiency with shadcn/ui, as referenced in Anthropic's documentation about prompt structuring.

- Multiple users confirm the system uses closed-source SOTA models rather than open-source ones, with the complete prompt being approximately 16,000 tokens long and containing dynamic content including NextJS/React documentation.

- An updated version of the V0 system prompts was leaked and shared via GitHub, with some users noting similarities to Qwen2.5-Coder-Artifacts but specifically for React implementations.

- Beware of broken tokenizers! Learned of this while creating v1.3 RPMax models! (Score: 137, Comments: 31): Tokenizer issues affect model performance in RPMax v1.3 models, though no specific details were provided about the nature of the problems or solutions.

- RPMax versions have evolved from v1.0 to v1.3, with v1.3 implementing rsLoRA+ (rank-stabilized low rank adaptation) for improved learning and output quality. Mistral-based models proved most effective due to being naturally uncensored, while Llama 3.1 70B achieved the lowest loss rates.

- A critical tokenizer bug in the Huggingface transformers library causes tokenizer file sizes to double when modified, affecting model performance. The issue can be reproduced using AutoTokenizer.from_pretrained() followed by save_pretrained(), which incorrectly regenerates the "merges" section.

- The RPMax training approach is unconventional, using a single epoch, low gradient accumulation, and higher learning rates, resulting in unstable but steadily decreasing loss curves. This method aims to prevent the model from reinforcing specific character tropes or story patterns.

Theme 4. INTELLECT-1: Distributed Training Innovation

- Open Source LLM INTELLECT-1 finished training (Score: 268, Comments: 28): INTELLECT-1, an open source Large Language Model, completed its training phase using distributed GPU resources worldwide. No additional context or technical details about the model architecture, training parameters, or performance metrics were provided in the post.

- The model's distributed training approach across global GPU resources generated significant community interest, with users comparing it to protein folding projects and requesting ways to contribute their own GPUs. The dataset is expected to be released by the end of November according to their website.

- Discussion around the model's open source status sparked debate, with comparisons to existing open models like Olmo and K2-65B. Users noted that while other models have shared scripts and datasets, INTELLECT-1's distributed compute contribution represents a unique approach.

- Technical observations included a perplexity and loss bump coinciding with learning rate reduction, attributed to the introduction of higher quality data with different token distribution. Users noted that while the model's performance isn't exceptional, it represents an important first iteration.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. Amazon x Anthropic $4B Investment & Cloud Partnership

- It's happening. Amazon X Anthropic. (Score: 323, Comments: 103): Amazon commits $4 billion investment in Anthropic and establishes AWS as their primary cloud and training partner according to Anthropic's announcement. The partnership focuses on cloud infrastructure and AI model training using AWS Trainium.

- AWS users note they've been using Claude in production for over a year, with some expressing that Amazon Q already uses Claude behind the scenes. The partnership strengthens an existing relationship that included plans to bring Claude to Alexa.

- A key benefit of the deal addresses Claude's compute limitations and performance issues, with AWS Trainium replacing CUDA for model training. The shift from Nvidia hardware to Amazon's infrastructure suggests significant technical changes ahead.

- Users highlight concerns about rate limits and service reliability, with speculation about potential Prime integration (possibly with ads). Some compare this to the Microsoft-OpenAI partnership, noting that Google (also an Anthropic investor) may face challenges with their investment position.

- Tired of "We're experiencing High Demand" (Score: 34, Comments: 19): Claude's paid service faces increasing capacity issues, with users frequently encountering "We're experiencing High Demand" messages despite being paying subscribers. The post criticizes Anthropic's prioritization of feature releases over infrastructure scalability, expressing frustration with service limitations for paid customers.

- Users report that Claude's quality degrades during high demand periods, with "Full Response" mode potentially offering limited inference capabilities and faster token consumption.

- Multiple users confirm experiencing daily service disruptions, with one user canceling their subscription due to the AI's unreliability in handling routine tasks, making manual completion more efficient.

- A user speculates that military procurement of computing resources might be causing the capacity constraints, though this remains unverified.

Theme 2. GPT-4o Performance Regression on Technical Benchmarks

- Independent evaluator finds the new GPT-4o model significantly worse, e.g. "GPQA Diamond decrease from 51% to 39%, MATH decrease from 78% to 69%" (Score: 262, Comments: 53): GPT-4o shows performance drops across technical benchmarks, with GPQA Diamond scores falling from 51% to 39% and MATH scores decreasing from 78% to 69%. The decline in performance on technical tasks suggests a potential regression in the latest model's capabilities compared to its predecessor.

- GPT-4o appears to be optimized for natural language tasks over technical ones, with users noting it feels "more natural" despite lower benchmark scores. Several users suggest OpenAI is intentionally separating capabilities between models, with GPT-4o focusing on writing and O1 handling technical reasoning.

- Users report mixed experiences with current model alternatives: Claude Sonnet faces message limits, O1-mini is described as verbose, and O1-preview is restricted to 50 questions per week. Some users mention Gemini experimental 1121 showing promise in problem-solving and math.

- Discussion around benchmarking methods emerged, with users criticizing LMSYS as an inadequate performance metric and questioning the value of single-token math answers versus complex instruction responses. The model's decline in technical performance may reflect intentional trade-offs rather than regression.

- Why does ChatGPT get lazy? (Score: 146, Comments: 134): Users report ChatGPT providing increasingly superficial and incomplete responses, with examples of the AI giving brief, non-comprehensive answers that miss key details from prompts and require frequent corrections. The post author notes experiencing a pattern where ChatGPT acknowledges mistakes when corrected but continues to provide shallow responses, questioning whether there's an underlying reason for this perceived decline in performance.

- Users report significant decline in GPT-4 performance after recent "creative upgrade", with evidence showing it performing worse than GPT-4 mini on STEM subjects and basic math, as shown in a comparison image.

- Multiple users describe context retention issues and memory problems, with the AI frequently ignoring detailed prompts and custom instructions. The degradation appears linked to OpenAI's cost-cutting measures and reduced processing power allocation.

- Technical users report specific issues with code generation, document review, and detailed queries, noting that responses have become more generic and less task-specific. Several mention needing multiple attempts to get comprehensive answers that were previously provided in single responses.

Theme 3. LTX Video: New Open Source Fast Video Generation Model

- LTX Video - New Open Source Video Model with ComfyUI Workflows (Score: 259, Comments: 122): LTX Video, a new open source video model, integrates with ComfyUI and is available through Hugging Face and ComfyUI examples. The model provides video generation capabilities through ComfyUI workflows, offering users direct access to video creation tools.

- A member of the research team confirmed that LTX-Video can generate 24 FPS videos at 768x512 resolution in real-time, with more improvements planned. The model runs on a 3060/12GB in about 1 minute, while a 4090 takes 1:48s for a 10s video.

- Users reported mixed results with the model's performance, particularly with img2video functionality showing glitches. The research team acknowledged that results are highly prompt-sensitive and provided detailed example prompts on their GitHub page.

- The model is now integrated into the latest ComfyUI update and supports multiple modes including Text2Video, Image2Video, and Video2Video. Users need to follow specific prompt structures, with movement descriptions placed early in the prompt for best results.

- LTX-Video is Lightning fast - 153 frames in 1-1.5 minutes despite RAM offload and 12 GB VRAM (Score: 95, Comments: 30): LTX-Video demonstrates high-speed video generation capabilities by producing 153 frames in 1-1.5 minutes while operating with 12GB VRAM constraints and RAM offloading. This performance metric shows efficient consumer hardware utilization for video generation tasks.

- LTX-Video runs efficiently on consumer hardware, with users confirming successful operation on a 12GB 4070Ti despite 18GB VRAM requirements through RAM offloading. Installation guide available at ComfyUI blog.

- Users discuss future potential, noting upcoming 32GB VRAM consumer cards and comparing current state to early Toy Story era skepticism. The technology is expected to advance significantly in 2-3 years.

- Current version (0.9) is described as prompt-sensitive with improvements planned, while some users debate output quality. Raw outputs are generated without interpolation.

Theme 4. Chinese AI Models Emerge as Potential Competitors

- Has anyone explored Chinese AI in depth? (Score: 62, Comments: 128): Chinese AI models including DeepSeek, ChatGLM, and Ernie Bot offer free access and produce high-quality responses that potentially compete with ChatGPT-4 in certain domains. The post author notes limited community discussion about these models despite their capabilities.

- Users express strong concerns about data privacy and censorship under the Chinese Communist Party (CCP), with multiple commenters citing risks of surveillance and information control. The highest-scoring comments focus on these trust and privacy issues.

- Discussion highlights potential future scenarios including an "East versus West" divide in AI development and possible competing singularities between nations. Several users note this could lead to compatibility and competition challenges.

- Comments point to market awareness and first-mover advantage of Western AI models (ChatGPT, Claude, Gemini) as a key factor in their dominance, rather than technical capabilities being the primary differentiator.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1. The AI Arms Race: New Models and Breakthroughs

- INTELLECT-1 Trained Across the Globe in Decentralized First: Prime Intellect announced the completion of INTELLECT-1, the first-ever 10B model trained via decentralized efforts across the US, Europe, and Asia. An open-source release is coming in about a week, marking a milestone in collaborative AI development.

- Alibaba Drops Marco-o1: Open-Sourced Alternative to ChatGPT's o1: AlibabaGroup released Marco-o1, an Apache 2 licensed model designed for complex problem-solving using Chain-of-Thought (CoT) fine-tuning and Monte Carlo Tree Search (MCTS). Researchers like Xin Dong and Yonggan Fu aim to enhance reasoning in ambiguous domains.

- Lightricks' LTX Video Model Generates 5-Second Videos in a Flash: Lightricks unveiled the LTX Video model, which can generate 5-second videos in just 4 seconds on high-performance hardware. The model is open-source and available through APIs, pushing the boundaries of rapid video generation.

Theme 2. Billion-Dollar Moves: Anthropic and Amazon Shake Hands

- Anthropic Bags $4 Billion from Amazon, AWS Becomes BFF: Anthropic expanded its collaboration with AWS, securing a whopping $4 billion investment from Amazon. AWS is now Anthropic's primary cloud and training partner, leveraging AWS Trainium to power their largest models.

- Cerebras Claims Speed King with Llama 3.1 Deployment: Cerebras is boasting about running Llama 3.1 405B at impressive speeds, positioning themselves as leaders in large language model deployment and stirring up the AI hardware competition.

Theme 3. AI Accused: OpenAI Deletes Evidence in Lawsuit

- Oops! OpenAI 'Accidentally' Deletes Data Amid Lawsuit: Lawyers allege OpenAI erased data after 150 hours of search in a copyright lawsuit with The New York Times and Daily News. This raises serious concerns about data handling in legal disputes.

- CamelAI's Account Vanishes After 1 Million-Agent Simulation: CamelAIOrg had its OpenAI account terminated, possibly due to their OASIS social simulation project involving one million agents. Despite reaching out, they've waited 5 days without a response, leaving their community in limbo.

Theme 4. AI Tools Get Smarter: Enhancing Development and Workflows

- Unsloth Update Slashes VRAM Usage, Adds Vision Finetuning: The latest Unsloth update boosts VRAM efficiency by 30-70% and introduces vision finetuning for models like Llama 3.2 Vision. It also supports Pixtral finetuning in a free 16GB Colab, making advanced AI more accessible.

- LM Studio Debates Multi-GPU Magic and GPU Showdowns: LM Studio users discuss balancing multi-GPU inference and compare GPUs like RTX 4070 Ti and Radeon RX 7900 XT. While power consumption varies, they find performance differences marginal, sparking debates over the best hardware for AI tasks.

- Aider Users Tackle Quantization Quirks and Benchmark Bafflements: Aider community delves into how different quantization methods impact model performance. They note Qwen 2.5 Coder shows inconsistent results across providers, emphasizing the need to mind the quantization details.

Theme 5. AI Art and Creativity: Machines with a (Sense of) Humor

- AI Art Turing Test Confuses (and Amuses) Everyone: The recent AI Art Turing Test left participants puzzled, struggling to distinguish AI-generated art from human creations. Discussions sparked about the test's effectiveness and the evolving role of AI in art.

- Voice Cloning Glitches Turn Audiobooks into Surprise Musicals: Users experimenting with voice cloning for audiobooks encountered unexpected glitches, resulting in the AI singing responses. These happy accidents add an amusing twist to audiobook production.

- ChatGPT's Quest for Comedy Falls Flat (Again): Despite advances, AI models like ChatGPT still struggle with humor, often delivering lackluster jokes. Users note attempts at humor or ASCII art often end in gibberish, highlighting room for improvement in AI's comedic skills.

PART 1: High level Discord summaries

Eleuther Discord

- Test-Time Training Boosts ARC Performance: Recent experiments with Test-Time Training (TTT) achieved up to 6x accuracy improvement on the Abstraction and Reasoning Corpus (ARC) compared to base models.

- Key factors include initial finetuning on similar tasks, auxiliary task formatting, and per-instance training, demonstrating TTT's potential in enhancing reasoning capabilities.

- Wave Network Introduces Complex Token Representation: The Wave Network utilizes complex vectors for token representation, separating global and local semantics, leading to high accuracy on the AG News classification task.

- Each token's value ratio to the global semantics vector establishes a novel relationship to overall sequence norms, enhancing the model's understanding of input context.

- Debate Emerges Over Learnable Positional Embeddings: Discussions on learnable positional embeddings in models like Mamba highlight their effectiveness based on input dependence compared to traditional embeddings.

- Concerns are raised about their performance in less constrained conditions, with alternatives like Yarn or Alibi being suggested for better flexibility.

- RNNs Demonstrate Out-of-Distribution Extrapolation: RNNs have shown capability to extrapolate out-of-distribution on algorithmic tasks, with some suggesting chaining of thought could be adapted to linear models.

- However, for complex tasks like those in the ARC, TTT may be more beneficial than In-Context Learning (ICL) due to RNNs' inherent representation limitations.

- Insights into Muon Orthogonalization Techniques: The implementation of Muon employs momentum and orthogonalization post-momentum update, which may affect its effectiveness.

- Discussions emphasize the importance of sufficient batch sizes for effective orthogonalization, especially when dealing with low-rank matrices.

Unsloth AI (Daniel Han) Discord

- Unsloth Update Boosts VRAM Efficiency: The latest Unsloth update introduces vision finetuning for models such as Llama 3.2 Vision, enhancing VRAM usage by 30-70% and adding support for Pixtral finetuning in a free 16GB Colab environment.

- Additionally, the update includes merging models into 16bit for streamlined inference and long context support for vision models, significantly improving usability.

- Mistral Models Outperform Peers: Users report that Mistral models excel in finetuning, demonstrating strong prompt adherence and superior accuracy compared to Llama and Qwen models.

- Despite their performance, some skepticism remains regarding Qwen's effectiveness, with reports of gibberish outputs in specific applications.

- Fine-tuning and Inference Challenges: Community members face difficulties when fine-tuning models, such as failing to load fine-tuned models for inference and encountering multiple model versions like BF16 and Q4 quantization in output folders.

- During inference, errors like

AttributeErrorandWebServerErrorsarise, particularly with models like 'Mistral-Nemo-Instruct-2407-bnb-4bit', prompting suggestions to replace model paths and verify compatibility with Hugging Face endpoints.

- During inference, errors like

- Tokenization and Pretraining Guidance: Issues with tokenization have been reported, including errors related to mismatched column lengths during training on datasets like Hindi, and empty predictions during evaluation stages.

- For continued pretraining, users discuss utilizing models beyond Unsloth's offerings and are encouraged to seek community support or raise compatibility requests on GitHub for non-supported models.

LM Studio Discord

- Balancing Multi-GPU Inference: Users discussed the feasibility of multi-GPU performance in LM Studio, particularly regarding inference and model distribution across multiple GPUs, noting that load balancing can complicate VRAM allocation.

- Concerns were raised about whether to pair different GPUs or opt for a more powerful single GPU for better overall performance.

- Comparing RTX 4070 Ti and Radeon RX 7900 XT: The community compared RTX 4070 Ti, Radeon RX 7900 XT, and GeForce RTX 4080 for performance at 1440p and 4K resolutions, noting that while power consumption varies, performance differences are generally marginal.

- Members discussed balancing power usage and performance, suggesting that higher quality models should be preferred for optimal results.

- Fine-tuning Models vs. RAG Strategies: Members debated the merits of fine-tuning models versus using RAG (Retrieval-Augmented Generation) strategies, with a consensus that fine-tuning can specialize a model for specific tasks while RAG offers more flexibility.

- Fine-tuning was exemplified with adapting models for C# coding languages, but concerns were raised about security implications with sensitive company data.

- AMD GPUs in LLM Benchmarking: AMD GPUs can run LLMs through ROCm or Vulkan; however, ongoing concerns about driver updates impacting performance were discussed.

- It was pointed out that ROCm operates mainly on Linux or WSL, limiting usability for some users.

- Anticipating the 5090 Graphics Card Release: Members expressed anticipation for the upcoming 5090 graphics card, with concerns about availability and pricing.

- Discussions included the impact of tariffs on hardware prices and the need to secure equipment ahead of expected price increases.

HuggingFace Discord

- IntelliBricks Toolkit Streamlines AI App Development: IntelliBricks is an open-source toolkit designed to simplify the development of AI-powered applications, featuring

msgspec.Structfor structured outputs. It is currently under development, with contributions welcome on its GitHub repository.- Developers are encouraged to contribute to enhance its capabilities, fostering a collaborative environment for building efficient AI applications.

- FLUX.1 Tools Enhance Image Editing Capabilities: The release of FLUX.1 Tools introduces a suite for editing and modifying images, including models like FLUX.1 Fill and FLUX.1 Depth, as announced by Black Forest Labs.

- These models improve steerability for text-to-image tasks, allowing users to experiment with open-access features and enhance their image generation workflows.

- Decentralized Training Completes INTELLECT-1 Model: Prime Intellect announced the completion of INTELLECT-1, a 10B model trained through decentralized efforts across the US, Europe, and Asia. A full open-source release is expected in approximately one week.

- This milestone highlights the effectiveness of decentralized training methodologies, with further details available in Prime Intellect's tweet.

- Cybertron v4 UNA-MGS Model Tops LLM Benchmarks: The cybertron-v4-qw7B-UNAMGS model has been reintroduced, achieving the #1 7-8B LLM ranking with no contamination and enhanced reasoning capabilities, as showcased on its Hugging Face page.

- Utilizing unique techniques such as

MGSandUNA, the model demonstrates superior benchmark performance, attracting attention from the AI engineering community.

- Utilizing unique techniques such as

- Cerebras Leads with High-Speed Llama 3.1 Deployment: Cerebras is setting the pace in LLM performance by running Llama 3.1 405B at impressive speeds, positioning themselves as leaders in large language model deployment.

- This advancement underscores Cerebras' commitment to optimizing AI model performance, providing a competitive edge in the rapidly evolving field of large language models.

Latent Space Discord

- Anthropic Secures $4B from AWS: Anthropic has received an additional $4 billion investment from Amazon, designating AWS as its primary cloud and training partner to enhance AI model training through AWS Trainium.

- This collaboration aims to leverage AWS infrastructure for developing and deploying Anthropic's largest foundation models, as detailed in their official announcement.

- AI Art Turing Test Sparks Mixed Reactions: The recent AI Art Turing Test has generated discussions, highlighted in this analysis, with participants struggling to distinguish between AI and human-generated artworks.

- Members are interested in evaluating the test with art restoration experts to better assess its effectiveness.

- Lightricks Unveils Open-Source LTX Video Model: Lightricks launched the LTX Video model, capable of generating 5-second videos in just 4 seconds on high-performance hardware, available through APIs.

- Discussions are focused on balancing local processing capabilities with cloud-related costs when utilizing the LTX Video Model.

- Stanford Releases AI Vibrancy Rankings Tool: Stanford introduced the AI Vibrancy Rankings Tool, which assesses countries based on customizable AI development metrics, allowing users to adjust indicator weights to match their perspectives.

- The tool has been praised for its flexibility in providing insights into global AI progress.

- LLM-powered Requirements Analysis Gains Traction: LLM-powered requirements analysis is emerging as a key topic, with members highlighting its effectiveness in automating complex problem understanding and modeling processes.

- The conversation points to significant potential for LLMs to streamline analysis workflows, referencing the DDD starter modeling process.

OpenAI Discord

- Voice Cloning Glitches Enhance Audiobooks: Members discussed voice cloning techniques for audiobook adaptations, noting unexpected sounds and glitches that sometimes resulted in surprising enhancements like singing. #ai-discussions

- A user shared experiences with various voice models, highlighting how voice cloning can create eerie effects in dialogues.

- ChatGPT Integrates with Airtable and Notion: ChatGPT was explored for its integration capabilities with tools like Airtable and Notion, aiming to enhance prompt writing within these applications. #ai-discussions

- Members shared their goals to improve prompt writing, seeking more personalized and effective interactions.

- Copilot's Image Generation Sparks Speculation: Curiosity arose about Copilot's image generation capabilities, with speculation on whether they're sourced from unreleased DALL-E models or a new program called Sora. #ai-discussions

- Comparisons were made between images generated by different AI tools, pointing out quality differences influenced by other models.

- GPT's Vocabulary Constraints in Dall-E Usage: A member expressed frustration that their GPT tends to forget specific vocabulary constraints after generating around 10 images with Dall-E. #gpt-4-discussions

- They are seeking tips to maintain character descriptions and avoid unwanted words in generated content.

- Exploring Alternatives and Free Image Models Beyond Dall-E: Members discussed alternatives to Dall-E such as Stable Diffusion and Flux models with comfyUI, suggesting they might better handle specific vocabulary restrictions. #gpt-4-discussions

- They recommended checking for recent tutorials on YouTube to ensure updated methods for preserving character integrity.

aider (Paul Gauthier) Discord

- Qwen 2.5 Coder's Performance Variability: Users observed that the Qwen 2.5 Coder model delivers inconsistent performance across providers, with Hyperbolic recording 47.4% compared to the leaderboard's 71.4%.

- Community discussions highlighted the effects of quantization, noting that BF16 and other variants yield different performance outcomes.

- Update in Aider's Benchmarking Approach: Aider's leaderboard for Qwen 2.5 Coder 32B now utilizes weights from HuggingFace via GLHF, enhancing benchmarking accuracy.

- Users raised concerns about score discrepancies linked to various hosting platforms, questioning potential variations in model quality.

- Direct API Integration with Qwen Models: The Aider framework can now access Qwen models directly without relying on OpenRouter, streamlining usage.

- This update aims to improve user experience by minimizing dependence on third-party services while maintaining model performance.

- Introduction of Uithub as a GitHub Alternative: Users are endorsing Uithub as a GitHub alternative for effortlessly copying repositories to LLMs by altering 'G' to 'U'.

- Feedback from members like Nick Dobos and Ian Nuttall emphasizes Uithub's capability to fetch full repository contexts, enhancing development workflows.

- Amazon's $4 Billion Investment in Anthropic: Amazon announced an additional $4 billion investment in Anthropic, intensifying the competitive landscape in AI development.

- This move has sparked discussions about the sustainability and innovation pace within AI projects amidst increasing corporate investments.

Nous Research AI Discord

- Marco-o1 Launch as ChatGPT o1 Alternative: AlibabaGroup has released Marco-o1, an Apache 2 licensed alternative to ChatGPT's o1 model, designed for complex problem-solving using Chain-of-Thought (CoT) fine-tuning and Monte Carlo Tree Search (MCTS).

- Researchers such as Xin Dong, Yonggan Fu, and Jan Kautz are leading the development of Marco-o1, aiming to enhance reasoning capabilities across domains with ambiguous standards and challenging reward quantification.

- Agentic Translation Workflow with Few-shot Prompting: The agentic translation workflow employs few-shot prompting and an iterative feedback loop instead of traditional fine-tuning, enabling the LLM to critique and refine its translations for increased flexibility and customization.

- By utilizing iterative feedback, this workflow avoids the overhead of training, thereby enhancing productivity in translation tasks.

- Alibaba's AWS Collaboration and $4B Investment: AnthropicAI announced a partnership with AWS, including a new $4 billion investment from Amazon, positioning AWS as their primary cloud and training partner, as shared in this tweet.

- Teknium highlighted in a tweet that sustaining pretraining scaling at the current pace will require $200 billion over the next two years, questioning the feasibility of ongoing advancements.

- Open WebUI for LLMs and Multi-model Chat Interfaces: Members discussed graphical user interfaces (GUIs) for LLM-hosted chat experiences, favoring tools like Open WebUI and LibreChat, with Open WebUI being widely preferred for its user-friendly interface.

- An animated demonstration of Open WebUI's features was shared, emphasizing its support for various LLM runners and its capacity to handle multiple model interactions efficiently.

- Fine-tuning Datasets with Axolotl's Example Defaults: A member seeking to fine-tune models and create datasets expressed concerns over high trial-and-error costs, prompting recommendations to use Axolotl's example defaults, which are deemed effective for training runs.

- Using example defaults from Axolotl can streamline the fine-tuning process, reducing costs and enhancing the efficacy of dataset creation efforts.

OpenRouter (Alex Atallah) Discord

- Claude 3.5 Haiku ID Changes: The Claude 3.5 Haiku model has been renamed to use a dot instead of a dash in its ID, altering its availability. New model IDs are available at Claude 3.5 Haiku and Claude 3.5 Haiku 20241022, though access may be restricted.

- Users seeking these models can request access through the Discord channel, while previous IDs remain functional.

- Gemini Model Quota Issues: Users are encountering quota errors when accessing the Gemini Experimental 1121 model via OpenRouter. It is recommended to connect directly to Google Gemini for more reliable access.

- These quota limitations are impacting users relying on the free version, prompting suggestions for alternative connection methods.

- OpenRouter API Token Discrepancies: There are reports that the Qwen 2.5 72B Turbo model is not returning token counts through the OpenRouter API, unlike other providers. However, activity reports on the OpenRouter page display token usage accurately.

- This inconsistency suggests a potential issue with how OpenRouter handles token counts for specific models.

- Tax on OpenRouter Credits in Europe: A user questioned why purchasing OpenRouter credits in Europe does not include VAT, unlike services from OpenAI or Anthropic. The response clarified that VAT calculation is the user's responsibility, with plans to implement automatic tax calculations in the future.

- This lack of VAT inclusion has raised concerns among European users, highlighting the need for streamlined tax processes.

- Access to Custom Provider Keys: Multiple users have requested access to custom provider keys, with repeated appeals emphasizing strong interest in this feature. Users like sportswook420 and vneqisntreal have highlighted the demand.

- The community's enthusiasm for custom provider keys indicates a desire for enhanced functionality, though access procedures remain unspecified.

Perplexity AI Discord

- Gemini AI vs ChatGPT: Users reported that Gemini AI often stops responding after a few interactions, raising questions about its reliability compared to ChatGPT.

- Discussions highlighted differences in performance, with some members finding ChatGPT more consistent for extended conversations.

- Perplexity Browser Extensions: There was a conversation about the availability of Perplexity extensions for Safari, including a search engine addition and a discontinued summarization tool.

- Members shared alternative solutions for non-Safari browsers and provided tips for managing existing extensions.

- AI Accessibility for Non-Coders: A proposal was made for a tier-based learning system to make AI technologies more accessible to non-coders, featuring a structured curriculum of projects and tutorials.

- The system aims to offer step-by-step guidance, fostering skill development within a community-oriented framework.

- Digital Twins in AI: Digital twins were explored, focusing on their application in monitoring and optimizing real-world entities across various industries.

- Users expressed significant interest in how digital twins enhance simulation capabilities and operational efficiency.

- AI's Influence on Grammarly: The impact of AI on Grammarly was debated, with this discussion examining the integration of AI advancements into writing tools.

- Participants considered both the benefits and potential drawbacks of incorporating AI to enhance Grammarly's functionality.

Stability.ai (Stable Diffusion) Discord

- SDXL Lightning Embraces Image Prompts: A user inquired about utilizing image prompts in SDXL Lightning via Python, seeking guidance on integrating photos into specific contexts.

- Another user confirmed the possibility and suggested exchanging more information through direct messaging.

- Optimizing WebUI for 12GB VRAM: Discussions focused on enhancing webui.bat parameters for better performance with 12GB VRAM, with suggestions to include '--no-half-vae'.

- Users agreed that this adjustment sufficiently optimizes performance without introducing further complications.

- Converting Corporate Photos to Pixar Styles: A request was made for methods to transform corporate photos into Pixar-style images, requiring the processing of about ten portraits on short notice.

- Members debated the feasibility, noting the possible unavailability of free services and recommended fine-tuning an image generation model.

- Exploring Video Fine-tuning Services with Cogvideo: Users expressed interest in video fine-tuning and inquired about available servers or services, referencing the Cogvideo model.

- It was highlighted that while Cogvideo is prominent in video generation, alternative specific fine-tunes might better suit user needs.

- Downloading Stable Diffusion and Its Use Cases: A new user sought the easiest and fastest method to download Stable Diffusion for PC and inquired about its relevant use cases.

- Another user requested help in creating a specific image using Stable Diffusion while navigating content filters, indicating a need for more permissive software options.

LlamaIndex Discord

- Simplifying Function Calling in Workflows: Users discussed streamlining function calling in workflows, recommending the use of prebuilt agents like

FunctionCallingAgentfor automated function invocation without boilerplate code.- A member highlighted that while boilerplate code offers more control, utilizing a prebuilt agent can streamline the process.

- LlamaIndex Security Compliance: LlamaIndex confirmed its compliance with SOC2, detailing the secure handling of original documents via LlamaParse and LlamaCloud.

- LlamaParse encrypts files for 48 hours, while LlamaCloud chunks and stores data securely.

- Ollama Package Issues in LlamaIndex: Users reported issues with the Ollama package in LlamaIndex, citing a bug in the latest version that caused errors during chat responses.

- Downgrading to Ollama version 0.3.3 was recommended, with some members confirming that this action resolved their issues, referencing Pull Request #17036.

- Hugging Face Embedding Compatibility Issues: Concerns were raised about embeddings from the CODE-BERT model on Hugging Face not aligning with LlamaIndex's expected format.

- Users recommended raising an issue on GitHub to address the potential mismatch in handling model responses.

- LlamaParse Parsing Challenges: LlamaParse is experiencing issues with returning redundant information, including headers and bibliographies, despite detailed instructions to exclude them.

- A member asked, 'Has anyone else experienced this issue?', and shared comprehensive parsing instructions for scientific articles to maintain logical content flow and exclude non-essential elements like acknowledgments and references.

Notebook LM Discord Discord

- NotebookLM Implements Retrieval-Augmented Generation: NotebookLM now leverages Retrieval-Augmented Generation to enhance response accuracy and citation tracking, as discussed by community members.

- This implementation aims to provide more reliable and verifiable outputs for users engaging in extensive query sessions.

- Podcastfy.ai Emerges as NotebookLM API Alternative: A member recommended Podcastfy.ai as an open source alternative to NotebookLM's podcast API, prompting discussions on feature comparisons.

- Users are evaluating how Podcastfy.ai stacks up against existing NotebookLM options for podcast creation and management.

- Demand Grows for Producer Studio in NotebookLM: A user highlighted their Producer Studio feature request for NotebookLM, advocating for enhanced podcast production capabilities.

- Community members are showing interest in advanced production tools to streamline podcast creation within the platform.

- NotebookLM Seeks Multilingual Audio Translation Support: Users are requesting the ability to translate NotebookLM audio outputs into languages like German and Italian, highlighting the need for broader multilingual support.

- This demand underscores the platform's potential to cater to a more diverse, global engineering audience.

- Podcast Creation Limits in NotebookLM Clarified: Users have identified a 100-podcast per account limit and a possible daily cap of 20 podcast creations within NotebookLM.

- This constraint leads users to manage their podcast inventories carefully, as deletion of older podcasts resets their creation capacity.

Interconnects (Nathan Lambert) Discord

- OpenAI's Data Deletion Lawsuit: Lawyers for The New York Times and Daily News are suing OpenAI, alleging accidental deletion of data after over 150 hours of search.

- On November 14, data on a single virtual machine was erased, potentially impacting the case as outlined in a court letter.

- Prime Intellect's INTELLECT-1 Decentralized 10B Model: Prime Intellect announced the completion of INTELLECT-1, the first decentralized training of a 10B model across multiple continents.

- A full open-source release is expected within a week, inviting collaboration to build open-source AGI.

- Anthropic's $4B AWS Partnership: Anthropic expanded its collaboration with AWS through a $4 billion investment from Amazon to establish AWS as their primary cloud and training partner.

- This partnership aims to enhance Anthropic's AI technologies, as detailed in their official news release.

- Tulu 3's On-policy DPO Analysis: Discussions on the Tulu 3 paper questioned whether the described DPO method is truly on-policy due to evolving model policies during training.

- Members debated that online DPO, mentioned in section 8.1, aligns more with on-policy reasoning by sampling completions for the reward model at each training step.

- CamelAIOrg's OASIS Social Simulation Project: CamelAIOrg faced account termination by OpenAI, possibly related to their recent OASIS social simulation project involving one million agents, as detailed on their GitHub page.

- Despite reaching out for assistance, 20+ community members are still awaiting API keys after 5 days without a response.

GPU MODE Discord

- Triton Optimizes AMD GPUs: In a YouTube video, Lei Zhang and Lixun Zhang discuss Triton optimizations for AMD GPUs, focusing on techniques like swizzle for L2 cache and memory access efficiency.

- They also explore MLIR analysis to enhance Triton kernels, with participants highlighting the importance of these optimizations in improving overall GPU performance.

- FlashAttention Optimizations: Members discussed advancements in FlashAttention, including approximation techniques for the global exponential sum based on local sums within the tiling block of QK^T.

- Emphasis was placed on understanding the relationship between local and global exponential sums to optimize attention mechanisms effectively.

- Pruning Techniques for LLMs: A member requested the latest pruning and model efficiency papers for large language models (LLMs), referencing the What Matters in Transformers paper and its data-dependent techniques.

- The discussion highlighted a need for non data-dependent techniques to enhance model efficiency across varied industrial applications.

- GPT-2 Training Methods: A GitHub Gist was shared detailing how to train GPT-2 in five minutes for free, including a supportive function to streamline the process.

- Additionally, there was a conversation about integrating GPT-2 training capabilities into a Discord bot, aiming to improve user experience for AI-related tasks.

- NPU Acceleration Solutions: A member inquired about libraries or runtimes that support NPU acceleration, mentioning that Executorch offers some support for Qualcomm NPUs.

- The discussion sought to identify additional frameworks that effectively leverage NPU acceleration, encouraging community recommendations.

Cohere Discord

- Cohere API Enhances Chat Editing: Users requested a front-end compatible with the Cohere API that includes an edit button for modifying chat history without restarting conversations.

- It was clarified that edits should seamlessly integrate into the chat history, highlighting the current absence of an edit option on both the Cohere website's chat and playground-chat pages.

- SQL Agent Integrates with Langchain: The SQL Agent project was showcased in Cohere's documentation and received positive feedback from the community.

tinygrad (George Hotz) Discord

- SDXL Benchmark Slows Post-Update: After applying update #7644, SDXL no longer casts to half on CI tinybox green, causing benchmarks to slow down by over 2x.

- Members questioned if this casting change was intentional to address previous incorrect casting implementations.

- Concerns Over SDXL's Latest Regression: The removal of half casting in SDXL with update #7644 has raised regression concerns among the community.

- Users are seeking clarification on whether the decreased benchmark performance signifies a backward step in SDXL's capabilities.

- Proposed Intermediate Casting Strategy Shift: A proposal was made to determine intermediate casting based on input dtypes rather than the device, advocating for a pure functional approach.

- The suggestion includes adopting a method similar to fp16 in stable diffusion to enhance model and input casting efficiency.

- Tinygrad Removes Custom Kernel Functions: Custom kernel functions have been removed from the latest versions of the Tinygrad repository.

- George Hotz recommended alternative methods to achieve desired outcomes without compromising the abstraction layers.

- Introduction to Tinygrad Shared via YouTube: An introduction to Tinygrad was shared through a YouTube link to assist beginners.

- This resource aims to help new users understand the fundamentals of Tinygrad more effectively.

Modular (Mojo 🔥) Discord

- Mojo-Python Interoperability on the Horizon: The Mojo roadmap includes enabling Python developers to import Mojo packages and call Mojo functions, aiming to improve cross-language interoperability.

- Community members are actively developing this feature, with preliminary methods already available for those not requiring optimal performance.

- Async Event Loop Configuration in Mojo: Asynchronous operations in Mojo now require setting up an event loop to manage state machines effectively, despite initial support for async-allocated data structures.

- Future plans intend to allow compiling out the async runtime when it's unnecessary, thus optimizing performance.

- Multithreading Integration Workaround for Mojo-Python: A user shared a method where Mojo and Python communicate via a queue, facilitating asynchronous interactions using Python's multithreading.

- While effective for certain cases, some find this approach overly complex for simpler needs, advocating for an official solution.

- Advancing Mojo Features for Speed Optimization: A member highlighted Mojo's main utility as a Python-like alternative for C/C++/Rust, emphasizing its role in accelerating slow processes.

- They stressed the importance of foundational features like parameterized traits and Rust-style enums over basic Mojo classes.

Torchtune Discord

- HF Transfer Accelerates Model Downloads: The addition of HF transfer to torchtune significantly reduces model downloading times, with the time for llama 8b dropping from 2m12s to 32s.

- Users can enable it by running

pip install hf_transferand adding the flagHF_HUB_ENABLE_HF_TRANSFER=1for non-nightly versions. Additionally, some users reported achieving download speeds exceeding 1GB/s by downloading one file at a time via HF transfer on home internet connections.

- Users can enable it by running

- Anthropic Paper Questions AI Evaluation Consistency: A recent research paper from Anthropic discusses the reliability of AI model evaluations, questioning if performance differences are genuine or if they arise from random luck in question selection.

- The study encourages the AI research community to adopt more rigorous statistical reporting methods, while some community members expressed skepticism about the emphasis on error bars, highlighting an ongoing conversation about enhancing evaluation standards.

LLM Agents (Berkeley MOOC) Discord

- Hackathon Goes Fully Online: The upcoming hackathon is 100% online, allowing participants to join from any location without the need for a physical venue.

- This decision addresses logistical concerns and ensures broader accessibility for all team members.

- Simplified Team Registration: Team registration now sends a confirmation email to the email entered in the first field, ensuring at least one team member receives the confirmation.

- This change streamlines the registration process, making it more user-friendly and efficient.

- Percy Liang's Presentation: Percy Liang's presentation this week received positive feedback from members.

- Participants highlighted the clarity and depth of the content delivered during the session.

OpenInterpreter Discord

- Desktop App Release Timeline Remains Uncertain: A newcomer inquired about the release schedule for the desktop app after joining the waiting list, but no specific release date was provided, leaving the timeline uncertain.

- This uncertainty suggests ongoing development efforts, with no definitive timeline announced for the desktop app.

- Exponent Demo Highlights Windsurf's Effectiveness: A member shared their experience conducting a demo with Exponent, mentioning continued experimentation with its features.

- Positive feedback was given about Windsurf, underlining its effectiveness during the demo.

- Community Explores Open Source Devin: The discussion mentioned an open-source version of Devin that community members have been exploring, though not all have tried it yet.

- This reflects ongoing interest in leveraging open-source tools for project experimentation.

- Overcoming Installation Challenges for O1 on Linux: A member reported difficulties in installing O1 on their Linux system and is seeking solutions to resolve the issue.

- They are seeking advice about potential solutions or workarounds for installation issues. Additionally, discussions touched on the feasibility of integrating Groq API or other free APIs with O1 on Linux.

DSPy Discord

- VLMs Enhance Invoice Processing: A member is exploring the use of a VLM for a high-stakes invoice processing project, seeking guidance on how DSPy could enhance their prompt for specialized subtasks.

- There's a mention of recent support for VLMs and specifically Qwen by DSPy.

- DSPy Integrates Qwen for VLM Support: DSPy has added support for Qwen, a specific VLM, to enhance its capabilities in handling specialized tasks.

- This integration aims to improve prompt engineering for projects like high-stakes invoice processing.

- Testing DSPy on Visual Analysis Projects: A member suggested trying DSPy for VLMs, sharing their success with it for a visual analysis project and noting that the CoT module functions effectively with image inputs.

- They haven't tested the optimizers yet, indicating there's more to explore.

- Simplifying Project Development with DSPy: Another member emphasized starting with simple tasks before gradually adding complexity to projects, reinforcing the notion of accessibility with DSPy.

- It's not very hard, if you start simple and add complexity over time! conveys a sense of encouragement to experiment.

OpenAccess AI Collective (axolotl) Discord

- INTELLECT-1 Completes Decentralized Training: Prime Intellect announced the completion of INTELLECT-1, marking the first-ever decentralized training of a 10B model across multiple continents. A tweet from Prime Intellect confirmed that post-training with arcee_ai is now underway, with a full open-source release scheduled for approximately one week from now.

- INTELLECT-1 achieves a significant milestone in decentralized AI training, enabling collaborative development across the US, Europe, and Asia. The upcoming open-source release is expected to foster broader community engagement in AGI research.

- 10x Enhancement in Training Capability: Prime Intellect claims a 10x improvement in decentralized training capability with INTELLECT-1 compared to previous models. This advancement underscores the project's scalability and efficiency in distributed environments.

- The 10x enhancement positions INTELLECT-1 as a leading model in decentralized AI training, inviting AI engineers to contribute towards building a more robust open-source AGI framework.

- Anticipation for Axolotl Fine-tuning: There is hopeful anticipation regarding the fine-tuning capabilities in Axolotl once INTELLECT-1 is released. Participants are eager to evaluate how the system manages finetuning given the advancements in decentralized training.

- The integration of Axolotl's fine-tuning features with INTELLECT-1's decentralized framework is expected to enhance model adaptability and performance, benefiting the technical engineering community.

LAION Discord

- Neural Turing Machines Enthusiasm: A member has been exploring Neural Turing Machines for the past few days.

- They would love to bounce ideas off others who share this interest.

- Differentiable Neural Computers Deep Dive: A member is delving into Differentiable Neural Computers to gain further insights.

- They are seeking fellow enthusiasts to collaborate on thoughts and insights related to these technologies.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!