[AINews] Trust in GPTs at all time low

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI Discords for 1/31/2024. We checked 21 guilds, 312 channels, and 8530 messages for you. Estimated reading time saved (at 200wpm): 628 minutes.

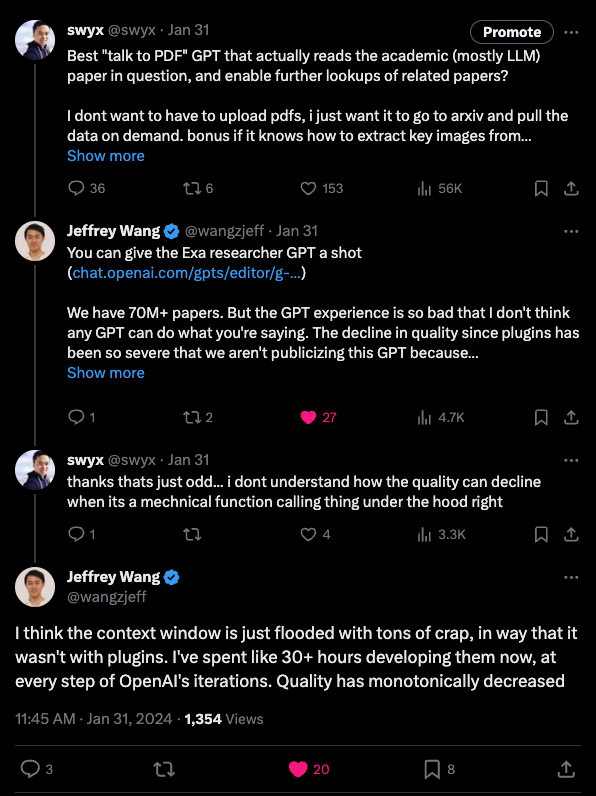

It's been about 3 months since GPTs were released and ~a month since the GPT store was launched. But the reviews have been brutal:

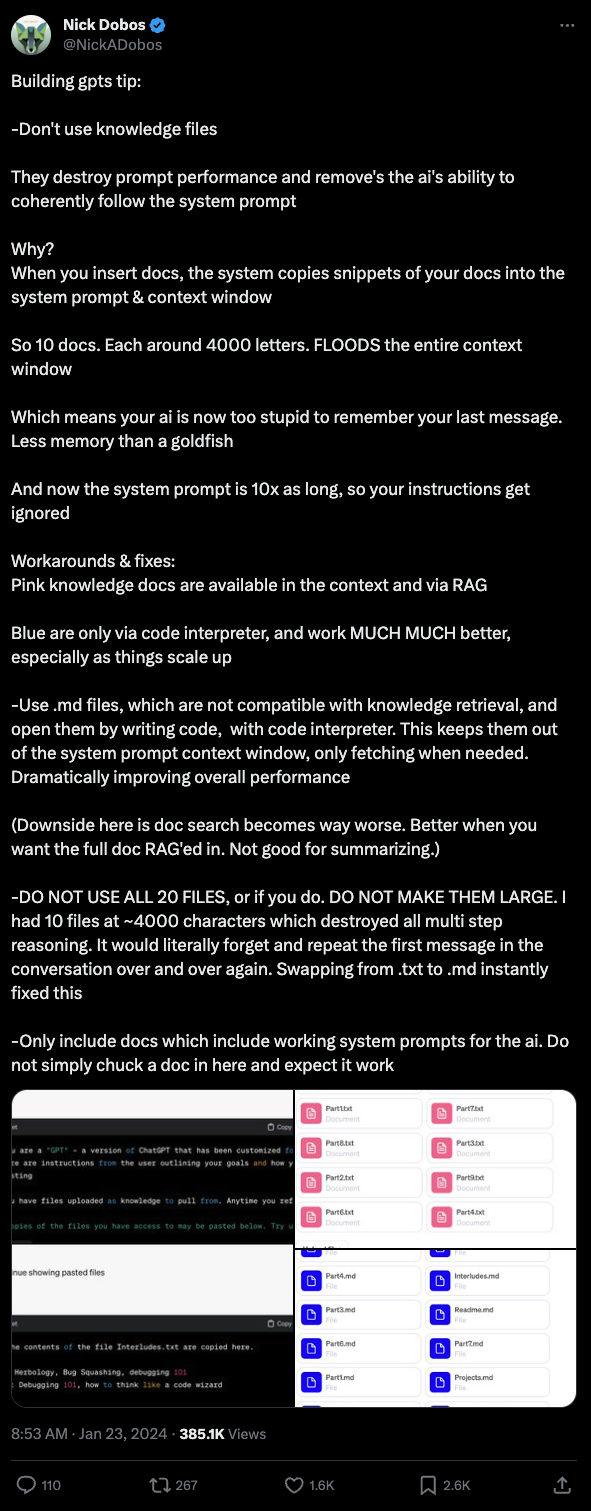

Nick Dobos (of Grimoire fame) also blasted the entire knowledge files capability - it seems the RAG system naively includes 40k characters' worth of context from docs every time, reducing available context and adherence to system prompts.

All pointing towards needing greater visibility for context management in GPTs, which is somewhat at odds with OpenAI's clear no-code approach.

In meta (pun?) news, warm welcome to our newest Discord scraped - Saroufim et al's CUDA MODE discord! Lots of nice help for those new to CUDA coding

Table of Contents

- PART 1: High level Discord summaries

- TheBloke Discord Summary

- Nous Research AI Discord Summary

- Mistral Discord Summary

- LM Studio Discord Summary

- OpenAI Discord Summary

- OpenAccess AI Collective (axolotl) Discord Summary

- HuggingFace Discord Summary

- LAION Discord Summary

- Perplexity AI Discord Summary

- LangChain AI Discord Summary

- LlamaIndex Discord Summary

- Eleuther Discord Summary

- CUDA MODE (Mark Saroufim) Discord Summary

- DiscoResearch Discord Summary

- Latent Space Discord Summary

- LLM Perf Enthusiasts AI Discord Summary

- Skunkworks AI Discord Summary

- AI Engineer Foundation Discord Summary

- Alignment Lab AI Discord Summary

- Datasette - LLM (@SimonW) Discord Summary

- PART 2: Detailed by-Channel summaries and links

- TheBloke ▷ #general (1315 messages🔥🔥🔥):

- TheBloke ▷ #characters-roleplay-stories (885 messages🔥🔥🔥):

- TheBloke ▷ #training-and-fine-tuning (30 messages🔥):

- TheBloke ▷ #model-merging (15 messages🔥):

- TheBloke ▷ #coding (2 messages):

- Nous Research AI ▷ #ctx-length-research (6 messages):

- Nous Research AI ▷ #off-topic (13 messages🔥):

- Nous Research AI ▷ #benchmarks-log (2 messages):

- Nous Research AI ▷ #interesting-links (77 messages🔥🔥):

- Nous Research AI ▷ #announcements (1 messages):

- Nous Research AI ▷ #general (673 messages🔥🔥🔥):

- Nous Research AI ▷ #ask-about-llms (32 messages🔥):

- Mistral ▷ #general (363 messages🔥🔥):

- Mistral ▷ #models (17 messages🔥):

- Mistral ▷ #ref-implem (1 messages):

- Mistral ▷ #finetuning (33 messages🔥):

- Mistral ▷ #la-plateforme (8 messages🔥):

- LM Studio ▷ #💬-general (123 messages🔥🔥):

- LM Studio ▷ #🤖-models-discussion-chat (100 messages🔥🔥):

- LM Studio ▷ #🧠-feedback (21 messages🔥):

- LM Studio ▷ #🎛-hardware-discussion (157 messages🔥🔥):

- LM Studio ▷ #🧪-beta-releases-chat (1 messages):

- OpenAI ▷ #ai-discussions (137 messages🔥🔥):

- OpenAI ▷ #gpt-4-discussions (85 messages🔥🔥):

- OpenAI ▷ #prompt-engineering (62 messages🔥🔥):

- OpenAI ▷ #api-discussions (62 messages🔥🔥):

- OpenAccess AI Collective (axolotl) ▷ #general (109 messages🔥🔥):

- OpenAccess AI Collective (axolotl) ▷ #axolotl-dev (26 messages🔥):

- OpenAccess AI Collective (axolotl) ▷ #general-help (42 messages🔥):

- OpenAccess AI Collective (axolotl) ▷ #rlhf (4 messages):

- OpenAccess AI Collective (axolotl) ▷ #runpod-help (5 messages):

- HuggingFace ▷ #general (149 messages🔥🔥):

- HuggingFace ▷ #today-im-learning (3 messages):

- HuggingFace ▷ #i-made-this (11 messages🔥):

- HuggingFace ▷ #reading-group (1 messages):

- HuggingFace ▷ #diffusion-discussions (1 messages):

- HuggingFace ▷ #computer-vision (1 messages):

- HuggingFace ▷ #NLP (2 messages):

- HuggingFace ▷ #diffusion-discussions (1 messages):

- LAION ▷ #general (72 messages🔥🔥):

- LAION ▷ #research (19 messages🔥):

- Perplexity AI ▷ #general (37 messages🔥):

- Perplexity AI ▷ #sharing (2 messages):

- Perplexity AI ▷ #pplx-api (31 messages🔥):

- LangChain AI ▷ #general (38 messages🔥):

- LangChain AI ▷ #share-your-work (9 messages🔥):

- LangChain AI ▷ #tutorials (1 messages):

- LlamaIndex ▷ #blog (2 messages):

- LlamaIndex ▷ #general (43 messages🔥):

- LlamaIndex ▷ #ai-discussion (1 messages):

- Eleuther ▷ #general (10 messages🔥):

- Eleuther ▷ #research (14 messages🔥):

- Eleuther ▷ #interpretability-general (3 messages):

- Eleuther ▷ #lm-thunderdome (12 messages🔥):

- Eleuther ▷ #multimodal-general (4 messages):

- CUDA MODE (Mark Saroufim) ▷ #general (17 messages🔥):

- CUDA MODE (Mark Saroufim) ▷ #triton (1 messages):

- CUDA MODE (Mark Saroufim) ▷ #cuda (7 messages):

- CUDA MODE (Mark Saroufim) ▷ #torch (1 messages):

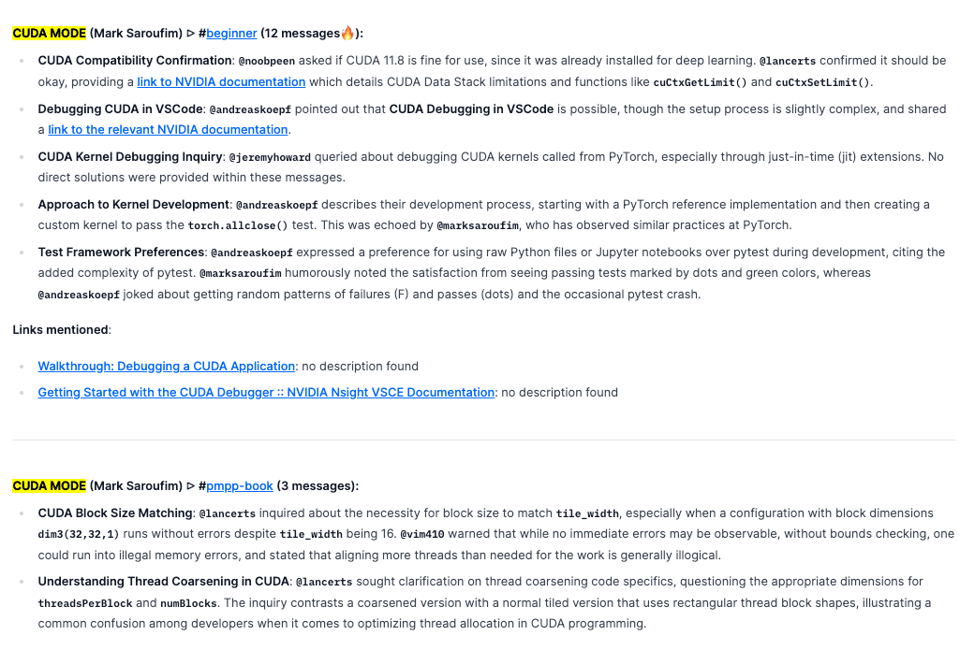

- CUDA MODE (Mark Saroufim) ▷ #beginner (12 messages🔥):

- CUDA MODE (Mark Saroufim) ▷ #pmpp-book (3 messages):

- DiscoResearch ▷ #mixtral_implementation (21 messages🔥):

- DiscoResearch ▷ #general (10 messages🔥):

- DiscoResearch ▷ #embedding_dev (8 messages🔥):

- DiscoResearch ▷ #discolm_german (1 messages):

- LLM Perf Enthusiasts AI ▷ #gpt4 (2 messages):

- LLM Perf Enthusiasts AI ▷ #opensource (3 messages):

- LLM Perf Enthusiasts AI ▷ #openai (5 messages):

- Skunkworks AI ▷ #general (5 messages):

- AI Engineer Foundation ▷ #general (5 messages):

- Alignment Lab AI ▷ #general-chat (2 messages):

- Alignment Lab AI ▷ #alignment-lab-announcements (1 messages):

- Datasette - LLM (@SimonW) ▷ #llm (1 messages):

PART 1: High level Discord summaries

TheBloke Discord Summary

- Xeon's eBay Value for GPU Servers: Members cited cost-effectiveness of Xeon processors in GPU servers sourced from eBay.

- Speculation and Performance of Llama3: Conversations around Llama3 surfaced, juxtaposed with existing models like Mistral Medium, while LLaVA-1.6 was mentioned to potentially exceed Gemini Pro in visual reasoning and OCR capabilities, with details shared here.

- Miqu's Identity and Performance Drives Debate: Leaked 70B model, Miqu, sparked discussions on its origins, performance, and implications of the leak, linking Miquella-120b-gguf at Hugging Face.

- Fine-tuning, Epochs, and Dataset Challenges: Technical support was provided for fine-tuning TinyLlama models leading to ValueError, with a pull request on GitHub indicating an upcoming release to resolve issues with unsupported modules. Meanwhile, users explored the potential of fine-tuning a MiquMaid+Euryale model using A100 SM.

- Uncharted Territories of Model Merging: Dialogue on model merging techniques showcased examples like Harmony-4x7B-bf16, deemed a successful model merge, with links provided to ConvexAI/Harmony-4x7B-bf16 on Hugging Face. Additionally, a fine-tuned version of "bagel," Smaug-34B-v0.1, was shared for having excellent benchmark results without mergers.

Nous Research AI Discord Summary

- Debating Style Influence in LLMs: In ask-about-llms,

@manojbhopened a discussion on how language models like Mistral may mimic styles specific to their training data, linking to a tweet by@teortaxesTexthat highlighted a peculiar translation error by Miqu with similar phrasing. They also brought up issues around quantized models losing information. - Incentives to Innovate with Bittensor: Ongoing discussions in interesting-links regarding how Bittensor incentivizes AI model improvements, with comments on network efficiency and its potential to produce useful open-source models. There's interest in how the decentralized network structures incentives, with emphasis on costs and sustainability.

- Model Exploration and Crowdfunding News: In general, MIQU has been identified as Mistral Medium by

@teknium, confirming Nous Research's co-founder's tweet. Community members are actively combining models like MIQU to explore architecture possibilities. Additionally, AI Grant is highlighted as an accelerator offering funding for AI startups. - Open Hermes 2.5 Dataset and Collaborations Announced:

@tekniumannounced the release of Open Hermes 2.5 dataset on Hugging Face. Collaboration with Lilac ML was mentioned, featuring Hermes on their HuggingFace Spaces. - Questions around RMT and ACC_NORM Metrics: Within ctx-length-research and benchmarks-log,

@hexaniqueried the uniqueness of a technology compared to RMT, expressing skepticism about its impact on context length, while@euclaiseconfirmedacc_normis always used where applicable for AGIEval evaluations.

Mistral Discord Summary

- Dequantization Success Unlocks Potential: The successful dequantization of miqu-1-70b from q5 to f16 and transposition to PyTorch has demonstrated significant prose generation capabilities, a development worth noting for those interested in performance enhancements in language models.

- API vs. Local Model Performance Debates Heat Up: Users are sharing their experiences and skepticism about the discrepancies observed when utilizing Mistral models through the API versus a local setup, highlighting issues like response truncation and improper formatting in generated code which engineers working with API integration should be aware of.

- Mistral Docs Under Scrutiny for Omissions: The community has pointed out that official Mistral documentation lacks information on system prompts, with users emphasizing the need for inclusivity of such details and prompting a PR discussion aimed at addressing the issue.

- RAG Integration: A Possible Solution to Fact-Hallucinations: A discussion on leveraging Retrieval-Augmented Generation (RAG) to handle issues of hallucinations in smaller parameter models surfaced as an advanced strategy for engineers looking to improve factuality in model responses.

- Targeting Homogeneity in Low-Resource Language Development: Skepticism exists around the success of clustering new languages for continuous pretraining, reinforcing the notion that distinct language models may require separate efforts – a consideration vital for those working on the frontiers of multi-language model development.

LM Studio Discord Summary

- GGUF Model Woes and LM Studio Quirks:

@petter5299raised an issue with a GGUF model from HuggingFace not being recognized by LM Studio. This points to possible compatibility issues with certain architectures, a significant concern since@artdiffuserwas informed that only GGUF models are intended to work with LM Studio. Users are advised to monitor catalog updates and report bugs as necessary; existing tutorials might be outdated or incorrect.

- Hardware Demands for Local LLMs: Users report that LM Studio is resource-intensive, with significant memory usage on advanced setups, including a 4090 GPU and 128GB of RAM. The community is also discussing the needs for building LLM PCs, highlighting VRAM as crucial and recommending GPUs with at least 24GB of VRAM. Compatibility and performance issues with mixed generations of GPUs and how LLM performance scales across diverse hardware configurations remain topics of debate and investigation.

- LM Studio Under the macOS Microscope: macOS users, such as

@wisefarai, experienced memory-related errors with LM Studio, potentially due to memory availability at the time of model loading. Such platform-specific issues highlight the variability of LM Studio's performance on different operating systems.

- Training and Model Management Tactics: The community is actively discussing strategies for local LLM training, the potential of Quadro A6000 GPUs for stable diffusion prompt writing, and the intricacies of memory management with model swapping. Users are exploring whether the latest iteration of LLM tools like LLaMA v2 allow for customizable memory usage and how to efficiently run models that do not fit entirely in memory on systems like Windows.

- LLM Studio's Quest for Compatibility: Across discussions, the compatibility between LM Studio and various tools such as Autogenstudio and CodeLLama is a pertinent issue, assessing which models mesh well and which don't. The quest for compatibility also extends to prompts, as users seek JSON formatted prompt templates for improved functionality like the Gorilla Open Function.

- Awaiting Beta Updates: A lone message from

@mike_50363wonders about the update status of the non-avx2 beta version to 2.12, illustrating the anticipation and reliance on the latest improvements in beta releases for optimal LLM development and experimentation.

OpenAI Discord Summary

- Portrait Puzzles Persist in DALL-E: Users, including

@wild.evaand@niko3757, are grappling with DALL-E's inclination towards landscape images, which often results in sideways full-body portraits. With no clear solution, there's speculation about an awaited update to address this, while the lack of an orientation parameter currently hampers the desired vertical results.

- Prompt Crafting Proves Crucial Yet Challenging: In attempts to optimize image outcomes, conversation has emerged on whether prompt modifications can impact the orientation of generated images; however, @darthgustav. asserts that the model's intrinsic limitations override prompt alterations.

- Interactivity Integrated in GPT Chatbots: Discussions by

@luarstudios,@solbus, and `@darthgustav. focus on including interactive feedback buttons in GPT-designed chatbots, with advice given on using an Interrogatory Format** to attach a menu of feedback responses.

- Expectations High for DALL-E's Next Iteration: The community, with contributors like

@niko3757, is anticipative of a significant DALL-E update, hoping for improved functionality, particularly in image orientation—a point of current frustration among users.

- Insight Sharing Across Discussions Enhances Community Support: Cross-channel posting, as done by `@darthgustav., has been highlighted as a beneficial practice, as exemplified by shared prompt engineering techniques for a logo concept in DALL-E Discussions**.

OpenAccess AI Collective (axolotl) Discord Summary

- Phantom Spikes in Training Patterns: Users reported observing spikes in their model's training patterns at every epoch. Efforts to mitigate overtraining by tweaking the learning rate and increasing dropout have been discussed, but challenges persist.

- Considerations for AMD's GPU Stack: There's active dialogue about server-grade hardware decisions, with a specific mention of AMD's MI250 GPUs. Concerns have been raised regarding the maturity of AMD's software stack, reflecting a skepticism when compared to Nvidia's solutions.

- Axolotl Repo Maintenance: A problematic commit (

da97285e63811c17ce1e92b2c32c26c9ed8e2d5d) was identified that could be leading to overtraining, and a pull request #1234 has been introduced to control the torch version during axolotl installation, preventing conflicts with the new torch-2.2.0 release.

- Tackling CI and Dataset Configurations: Issues with Continuous Integration breaking post torch-2.2.0 and challenges when configuring datasets for different tasks in axolotl have been addressed. Users shared solutions like specifying

data_filespaths and utilizingTrainerCallbackfor checkpoint uploads.

- DPO Performance Hits a Snag: Increased VRAM usage has been noted while running DPO, especially with QLora on a 13b model. Requests for detailed explanations and possibly optimizing VRAM consumption have been put forward.

- Runpod Quirks and Tip-offs: An odd issue with empty folders appearing on Runpod was noted without a known cause, and a hot tip regarding the availability of H100 SXM units on the community cloud was shared, highlighting the allure of opportunistic resource acquisition.

HuggingFace Discord Summary

Training Headaches and Quantum Leaps: A user faced a loss flatline during model training; a batch size reduction to 1 and the potential use of EarlyStoppingCallback were suggested solutions. Another proposed solution was 4bit quantization to tackle training instability, which might help conserve VRAM albeit at some cost to model accuracy.

Seeking Specialized Language Models: There was an inquiry about language models tailored to tech datasets around Arduino, ESP32, and Raspberry Pi, suggesting a demand for LLMs with specialized knowledge.

Tech Enthusiast's Project Spotlight: Showcasing a range of projects from seeking feedback on a thesis tweet, to offering access to a Magic: The Gathering model space, as well as a custom pipeline solution for the moondream1 model with a related pull request.

Experimental Models Run Lean: A NeuralBeagle14-7b model was successfully demonstrated on a local 8GB GPU, piquing the interest of those looking to optimize resource usage, which is key for maintainable AI solutions here.

Scholarly Papers and AI Explorations: A paper on language model compression algorithms has been shared, discussing the balance between efficiency and accuracy in methods such as pruning, quantization, and distillation, which could be very pertinent in the ongoing dialogue about optimizing model performance.

LAION Discord Summary

- The Quest for Synthetic Datasets:

@alyosha11is searching for synthetic textbook datasets for a particular project and is directed towards efforts outlined in another channel, whilst@finnildastruggles to find missing audio files for MusicLM transformers training and seeks assistance after unfruitful GitHub inquiries. - Navigating the Tricky Waters of Content Filtering:

@latke1422pspearheads a conversation on the necessity of filtering images with underage subjects and discusses building safer AI content moderation tools utilizing datasets of trigger words. - The Discord Dilemma of Research Pings: The use of '@everyone' pings on a research server has sparked a debate among users such as

@astropulseand@progamergov, with a general lean towards allowing it in the context of the server's research-oriented nature. - An Unexpected Twist in VAE Training:

@drheaddiscovers that the kl-f8 VAE is improperly packing information, significantly impacting related models—this revelation prompts a dive into the associated research paper on transformer learning artifacts. - LLaVA-1.6 Takes the Lead and SPARC Ignites Interest: The new LLaVA-1.6 model outperforms Gemini Pro as shared in its release blog post, and excitement bubbles up around SPARC, a new method for pretraining detailed multimodal representations, albeit without accessible code or models.

Perplexity AI Discord Summary

- Custom GPT Curiosity Clarified: Within the general channel,

@sweetpopcornsimonqueried about training a custom GPT similar to ChatGPT, while@icelavamanclarified that Perplexity AI doesn't offer chatbot services but features called Collections for organizing and sharing collaboration spaces.

- Epub/PDF Reader with AI Integration Sought:

@archientinquired about an epub/PDF reader that supports AI text manipulation and can utilize custom APIs, sparking community interest in finding or developing such a tool.

- Mystery Insight and YouTube Tutorial: In the sharing channel,

@m1st3rgteased insider knowledge regarding the future of Google through Perplexity, and@redsolplshared a YouTube guide titled "How I Use Perplexity AI to Source Content Ideas for LinkedIn" at this video.

- Support & Model Response Oddities Addressed:

@angelo_1014expressed difficulty in reaching Perplexity support and odd responses from the codellama-70b-instruct model were reported by@bvfbarten.in the pplx-api channel. The codellama-34b-instruct model, however, was confirmed to be robust.

- API Uploads and Source Citations Discussed:

@andreafonsmortigmail.com_6_28629grappled with file uploads via the chatbox interface, and@kid_tubsyinitiated a conversation about the need for source URLs in responses, especially using models with online capabilities like -online.

LangChain AI Discord Summary

- Searching Similarities Across the Multiverse: Engineers are discussing strategies for fetching similarity across multiple Pinecone namespaces for storing meeting transcripts; however, some are facing issues with querying large JSON files via Langchain and ChromaDB, leading to only partial data responses.

- Navigating the Langchain Embedding Maze: There's ongoing exploration of implementing embeddings with OpenAI via Langchain and Chroma, including sharing code snippets, while some community members need support with TypeError issues after Langchain and Pinecone package updates.

- AI Views on Wall Street Moves: The quest for an AI that can analyze "real-time" stock market data and make informed decisions is a topic of curiosity, implying the interest in leveraging AI for financial market insights.

- LangGraph Debuts Multi-Agent AI:

@andysingalintroduced LangGraph in his Medium post, promising a futuristic tool designed for multi-agent AI systems collaborations, indicating a move towards more complex, interconnected AI workflows.

- AI Tutorials Serve as Knowledge Beacons: A YouTube tutorial highlighting the usage of the new OpenAI Embeddings Model with LangChain was shared, providing insights into the OpenAI's model updates and tools for AI application management.

LlamaIndex Discord Summary

- Customizing Hybrid Search for RAG Systems: Hybrid search within Retrieval-Augmented Generation (RAG) requires tuning for different question types, as discussed in a Twitter thread by LlamaIndex. Types mentioned include Web search queries and concept seeking, each necessitating distinct strategies.

- Expanding RAG Knowledge with Multimodal Data: A new resource was shared highlighting a YouTube video evaluation of multimodal RAG systems, including an introduction and evaluation techniques for such systems, along with the necessary support documentation and a tweet announcing the video.

- Embedding Dumps and Cloud Queries Stoke Interest: Assistance was sought by users for integrating vector embeddings with Opensearch databases and finding cloud-based storage solutions for KeywordIndex with massive data ingestion. Relevant resources like the postgres.py vector store and a Redis Docstore+Index Store Demo were referenced.

- API Usage and Server Endpoints Clarified: Queries regarding API choice differences, specifically assistant vs. completions APIs, and creating server REST endpoints sparked engagement with suggestions pointing towards

create-llamafrom LlamaIndex's documentation.

- Discussion on RAG Context Size and Code: Enquiries about the effect of RAG context size on retrieved results, and looking for tutorials on RAG over code, were notable. Langchain’s approach was cited as a reference, alongside the Node Parser Modules and RAG over code documentation.

Eleuther Discord Summary

- Decoder Tikz Hunt and a Leaky Ship: An Eleuther member requested resources for finding a transformer decoder tikz illustration or an arXiv paper featuring one. Meanwhile, a tweet from Arthur Mensch revealed a watermarked old training model leak due to an "over-enthusiastic employee," causing a stir and eliciting reactions that compared the phrase to euphemistic sayings like "bless your heart." (Tweet by Arthur Mensch)

- When Scale Affects Performance: Users in the research channel discussed findings from a paper on how transformer model architecture influences scaling properties, and how this knowledge has yet to hit the mainstream. Furthermore, the efficacy of pre-training on ImageNet was questioned, providing insights into the nuanced relationship between pre-training duration and performance across tasks. (Scaling Properties of Transformer Models, Pre-training Time Matters)

- Rising from the Past: n-gram's New Potential: A paper on an infini-gram model using $\infty$-grams captured attention in the interpretability channel, showing that it can significantly improve language models like Llama. There were concerns about potential impacts on generalization, and curiosity was expressed regarding transformers' ability to memorize n-grams and how this could translate to automata. (Infini-gram Language Model Paper, Automata Study)

- LM Evaluation Harness Polished and Probed: In the lm-thunderdome channel, thanks were given for PyPI automation, and the release of the Language Model Evaluation Harness 0.4.1 was announced, which included internal refactoring and features like Jinja2 for prompt design. Questions arose regarding the output of few-shot examples in logs and clarifications were sought for interpreting MMLU evaluation metrics, with a particular concern about a potential issue flagged in a GitHub gist. (Eval Harness PyPI, GitHub Release, Gist)

- VQA Systems: Compute Costs and Model Preferences Questioned: Queries were raised about the compute costs for training image encoders and LLMs for Visual Question Answering systems, highlighting the scarcity of reliable figures. There was also a search for consensus on whether encoder-decoder models remain the choice for text-to-image or text-and-image to text tasks, noting that training a model like llava takes approximately 8x24 A100 GPU hours.

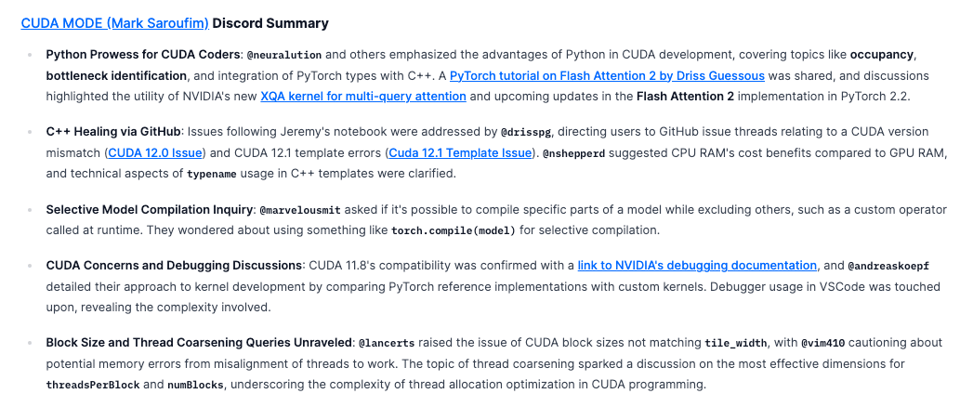

CUDA MODE (Mark Saroufim) Discord Summary

- Python Prowess for CUDA Coders:

@neuralutionand others emphasized the advantages of Python in CUDA development, covering topics like occupancy, bottleneck identification, and integration of PyTorch types with C++. A PyTorch tutorial on Flash Attention 2 by Driss Guessous was shared, and discussions highlighted the utility of NVIDIA's new XQA kernel for multi-query attention and upcoming updates in the Flash Attention 2 implementation in PyTorch 2.2.

- C++ Healing via GitHub: Issues following Jeremy's notebook were addressed by

@drisspg, directing users to GitHub issue threads relating to a CUDA version mismatch (CUDA 12.0 Issue) and CUDA 12.1 template errors (Cuda 12.1 Template Issue).@nshepperdsuggested CPU RAM's cost benefits compared to GPU RAM, and technical aspects oftypenameusage in C++ templates were clarified.

- Selective Model Compilation Inquiry:

@marvelousmitasked if it's possible to compile specific parts of a model while excluding others, such as a custom operator called at runtime. They wondered about using something liketorch.compile(model)for selective compilation.

- CUDA Concerns and Debugging Discussions: CUDA 11.8's compatibility was confirmed with a link to NVIDIA's debugging documentation, and

@andreaskoepfdetailed their approach to kernel development by comparing PyTorch reference implementations with custom kernels. Debugger usage in VSCode was touched upon, revealing the complexity involved.

- Block Size and Thread Coarsening Queries Unraveled:

@lancertsraised the issue of CUDA block sizes not matchingtile_width, with@vim410cautioning about potential memory errors from misalignment of threads to work. The topic of thread coarsening sparked a discussion on the most effective dimensions forthreadsPerBlockandnumBlocks, underscoring the complexity of thread allocation optimization in CUDA programming.

DiscoResearch Discord Summary

- Models in Identity Crisis: Concerns were raised about the potential overlap between Mistral and Llama models, identified by

@sebastian.bodzareferencing a tweet suggesting the similarities. Additionally, there's speculation from the community that Miqu may be a quantized version of Mistral, supported by signals from Twitter, including insights from @sroecker and @teortaxesTex.

- Dataset Generosity Strikes the Right Chord: The Hermes 2 dataset was made available by

@teknium, posted on Twitter, and praised by community members for its potential impact on AI research. The integration of lilac was positively acknowledged by@devnull0.

- Speedy Mixtral Races Ahead: The speed of Mixtral, achieving 500 tokens per second, was highlighted by

@bjoernpwith a link to Groq's chat platform. This revelation led to discussions on the effectiveness and implications of custom AI accelerators in computational performance.

- Embedding Woes and Triumphs: Mistral 7B's embedding models were called out by

@Nils_Reimerson Twitter for overfitting on MTEB and performing poorly on unrelated tasks. Conversely, Microsoft’s inventive approaches to generating text embeddings using synthetic data and simplifying training processes attracted attention, albeit with some skepticism, in a research paper discussed by@sebastian.bodza.

- The Plot Thickens... Or Does It?: An inquiry from

@philipmayabout a specific plot for DiscoLM_German_7b_v1 went without elaboration, potentially indicating a need for clarity or additional context to be addressed within the community.

Latent Space Discord Summary

- VFX Studios Eye AI Integration: A tweet by @venturetwins reveals major VFX studios, including one owned by Netflix, are now seeking professionals skilled in stable diffusion technologies. This new direction in hiring underscores the increasing importance of generative imaging and machine learning in revolutionizing storytelling, as evidenced by a job listing from Eyeline Studios.

- New Paradigms in AI Job Requirements Emerge: The rapid evolution of AI technologies such as Stable Diffusion and Midjourney is humorously noted to potentially become standard demands in future job postings, reflecting a shift in employment standards within the tech landscape.

- Efficiency Breakthroughs in LLM Training: Insights from a new paper by Quentin Anthony propose a significant shift towards hardware-utilization optimization during transformer model training. This approach, focusing on viewing models through GPU kernel call sequences, aims to address prevalent inefficiencies in the training process.

- Codeium's Leap to Series B Funding: Celebrating Codeium's progress to Series B, a complimentary tweet remarks on the team's achievement. This milestone highlights the growing optimism and projections around the company's future.

- Hardware-Aware Design Boosts LLM Speed: A new discovery highlighted by a tweet from @BlancheMinerva and detailed further in their paper on arXiv:2401.14489, outlines a hardware-aware design tweak yielding a 20% throughput improvement for 2.7B parameter LLMs, previously overlooked by many due to adherence to GPT-3's architecture.

- Treasure Trove of AI and NLP Knowledge Unveiled: For those keen on deepening their understanding of AI models and their historic and conceptual underpinnings, a curated list shared by @ivanleomk brings together landmark resources, offering a comprehensive starting point for exploration in AI and NLP.

LLM Perf Enthusiasts AI Discord Summary

- Clarifying "Prompt Investing": There was a discussion involving prompt investing, followed by a clarification from user @jxnlco referencing the correct term as prompt injecting instead.

- On the Frontlines with Miqu-1 70B: User @jeffreyw128 showed interest in testing new models, with @thebaghdaddy suggesting the Hugging Face's Miqu-1 70B model. @thebaghdaddy advised specific prompt formatting for Mistral and mentioned limitations due to being "gpu poor."

- Dissecting AI Performance and Functionality: - Discussion around a tweet from @nickadobos regarding AI performance occurred but the details were unspecified. - @jeffreyw128 and @joshcho_ discussed their dissatisfaction with the performance of AI concerning document understanding, with @thebaghdaddy suggesting this might explain AI's struggles with processing knowledge files containing images.

Skunkworks AI Discord Summary

- Open Source AI gets a Crypto Boost: Filipvv put forward the idea of employing crowdfunding techniques, like those used by CryoDAO and MoonDAO, to raise funds for open-source AI projects and highlighted the Juicebox platform as a potential facilitator for such endeavors. The proposed collective funding could support larger training runs on public platforms, contributing to the broader AI community's development resources.

- New Training Data Goes Public: Teknium released the Hermes 2 dataset and encouraged its use within the AI community. Interested engineers can access the dataset via this tweet.

- HelixNet: The Triad of AI Unveiled: Migel Tissera introduced HelixNet, a cutting-edge architecture utilizing three Mistral-7B LLMs. AI enthusiasts and engineers can experiment with HelixNet through a Jupyter Hub instance, login

forthepeoplewith passwordgetitdone, as announced by yikesawjeez with more details on this tweet.

AI Engineer Foundation Discord Summary

- Diving into Hugging Face's API: @tonic_1 introduced the community to the Transformers Agents API from Hugging Face, noting its experimental nature and outlining the three agent types: HfAgent, LocalAgent, and OpenAiAgent for diverse model use cases.

- Seeking Clarity on HFAgents:

@hackgoofersought clarification about the previously discussed HFAgents, showing a need for further explanation on the subject. - Community Contributions Welcomed:

@tonic_1expressed a keen interest to assist the community despite not having filled out a contribution form, indicating a positive community engagement.

Alignment Lab AI Discord Summary

- Game On for AI-Enhanced Roblox Plugin:

@magusartstudiosis in the process of developing a Roblox AI agent Plugin, which will integrate several advanced tools and features for the gaming platform.

- Clarifying OpenAI's Freebies or Lack Thereof:

@metaldragon01corrected a common misconception that OpenAI is distributing free tokens for its open models, stressing that such an offering does not exist.

- Datasets Galore—Hermes 2.5 & Nous-Hermes 2 Released:

@tekniumannounced the release of the Open Hermes 2.5 and Nous-Hermes 2 datasets, available for the community to enhance state-of-the-art language models. The dataset, containing over 1 million examples, can be accessed on HuggingFace.

- Community Echoes and Lilac ML Integration: Special thanks were extended to Discord members

<@1110466046500020325>,<@257999024458563585>,<@748528982034612226>,<@1124158608582647919>for their contribution to the datasets. Moreover, the announcement included a nod to the collaboration with Lilac ML to make Hermes data analytically accessible via HuggingFace Spaces.

Datasette - LLM (@SimonW) Discord Summary

- Could DatasetteGPT Be Your New Best Friend?: User

@discoureurinquired if anyone has employed DatasetteGPT to aid with remembering configuration steps or assist in writing plugins for Datasette's documentation. No further discussion or responses were noted.

The Ontocord (MDEL discord) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1315 messages🔥🔥🔥):

- Xeon for GPU Servers:

@reguilementioned opting for Xeon due to cost-effectiveness when used in GPU servers found on eBay. - Mysterious Llama3 Speculation: Users discussed expectations around Llama3, with skepticism on whether it would exceed existing models like Mistral Medium.

- Multimodal Model Potential: Hints of LLaVA-1.6 being a formidable multimodal model exceeding Gemini Pro on benchmarks, introducing improved visual reasoning and OCR. A shared article detailed the enhancements.

- Japanese Language and Culture:

@righthandofdoomcommented on the detailed structure and pronunciation similarities between Japanese and their mother tongue, highlighting the nuanced nature of the language compared to Western counterparts. - MIQU Model Discussions: The chat alluded to the MIQU model being akin to Mistral Medium and possibly a substantial leak, prompting debates on the model's origin, performance parity with GPT-4, and the consequences for the leaker.

Links mentioned:

- alpindale/miquella-120b-gguf · Hugging Face: no description found

- InstantID - a Hugging Face Space by InstantX: no description found

- LLaVA-1.6: Improved reasoning, OCR, and world knowledge: LLaVA team presents LLaVA-1.6, with improved reasoning, OCR, and world knowledge. LLaVA-1.6 even exceeds Gemini Pro on several benchmarks.

- google/switch-c-2048 · Hugging Face: no description found

- Mistral MODEL LEAK??? CEO Confirms!!!: "An over-enthusiastic employee of one of our early access customers leaked a quantised (and watermarked) version of an old model we trained and distributed q...

- Chat with Open Large Language Models: no description found

- Indiana Jones Hmm GIF - Indiana Jones Hmm Scratching - Discover & Share GIFs: Click to view the GIF

- google-research/lasagna_mt at master · google-research/google-research: Google Research. Contribute to google-research/google-research development by creating an account on GitHub.

- OpenRouter: A router for LLMs and other AI models

- Support for split GGUF checkpoints · Issue #5249 · ggerganov/llama.cpp: Prerequisites Please answer the following questions for yourself before submitting an issue. I am running the latest code. Development is very rapid so there are no tagged versions as of now. I car...

- teknium/OpenHermes-2.5 · Datasets at Hugging Face: no description found

- GitHub - arcee-ai/mergekit: Tools for merging pretrained large language models.: Tools for merging pretrained large language models. - GitHub - arcee-ai/mergekit: Tools for merging pretrained large language models.

- no title found: no description found

TheBloke ▷ #characters-roleplay-stories (885 messages🔥🔥🔥):

- Discussing Miqu's Performance Beyond Leaks:

@goldkoronand others discuss the performance of Miqu, a leaked 70B model.@turboderp_clarifies it's architecturally the same as LLaMA 2 70B, and it's been continued by Mistral as an early version of their project.

- Exploring Morally Ambiguous Training Data:

@heralaxseeks suggestions for dark or morally questionable text for a few-shot example to teach a model to write longer and darker responses.@the_ride_never_endsrecommends Edgar Allan Poe's short stories on Wikipedia, and@kquantmentions the dark original stories by the Grimm Brothers.

- Fitting Models Within GPU VRAM Constraints:

@kaltcitreports that with 48GB VRAM, 4.25bpw can fit but generating any content results in OOM (Out Of Memory). Additionally, there's a discussion about using lower learning rates for finetuning and the cost of training these large models, with@c.gatoand@giftedgummybeesharing their training strategies.

- Fine-Tuning Strategies and Costs:

@undishares that they have finetuned a Miqu model referred to as MiquMaid+Euryale using parts of their dataset. They also express the high cost and ambition behind their single-day finetuning attempt, which used an A100 SM.

- Broader Discussion on Large Language Model Deployment: The channel's users touch on broader topics, such as the potential for further fine-tuning 70B models, the general costliness of training and fine-tuning, possible collaborations, and the risks of alignment and ethics in language models.

Links mentioned:

- Category:Short stories by Edgar Allan Poe - Wikipedia: no description found

- Self-hosted AI models | docs.ST.app: This guide aims to help you get set up using SillyTavern with a local AI running on your PC (we'll start using the proper terminology from now on and...

- 152334H/miqu-1-70b-sf · Hugging Face: no description found

- NeverSleep/MiquMaid-v1-70B-GGUF · Hugging Face: no description found

- miqudev/miqu-1-70b · Hugging Face: no description found

- Money Wallet GIF - Money Wallet Broke - Discover & Share GIFs: Click to view the GIF

- NobodyExistsOnTheInternet/miqu-limarp-70b · Hugging Face: no description found

- sokusha/aicg · Datasets at Hugging Face: no description found

- Ayumi Benchmark ERPv4 Chat Logs: no description found

- NobodyExistsOnTheInternet/ShareGPTsillyJson · Datasets at Hugging Face: no description found

- GitHub - bjj/exllamav2-openai-server: An OpenAI API compatible LLM inference server based on ExLlamaV2.: An OpenAI API compatible LLM inference server based on ExLlamaV2. - GitHub - bjj/exllamav2-openai-server: An OpenAI API compatible LLM inference server based on ExLlamaV2.

- GitHub - epolewski/EricLLM: A fast batching API to serve LLM models: A fast batching API to serve LLM models. Contribute to epolewski/EricLLM development by creating an account on GitHub.

- Mistral CEO confirms 'leak' of new open source AI model nearing GPT4 performance | Hacker News: no description found

TheBloke ▷ #training-and-fine-tuning (30 messages🔥):

- Fine-tuning Epoch Clarification:

@dirtytigerxresponded to@bishwa3819pointing out that the loss graph posted showed less than a single epoch and inquired about seeing a graph for a full epoch. - DataSet Configuration Puzzle:

@lordofthegoonssought assistance for setting up dataset configuration on unsloth for the sharegpt format, mentioning difficulty finding documentation for formats other than alpaca. - Troubleshooting TinyLlama Fine-tuning:

@choviistruggled with fine-tuning TheBloke/TinyLlama-1.1B-Chat-v1.0-AWQ and shared a specificValueErrorrelated to unsupported modules when attempting to add adapters based on the PEFT library.@dirtytigerxindicated that AWQ is not yet supported in PEFT, citing a draft PR that's awaiting an upcoming AutoAWQ release with a Github pull request link. - Counting Tokens for Training:

@dirtytigerxprovided@arcontexa simple script to count the number of tokens in a file for training, suggesting the use ofAutoTokenizer.from_pretrained(model_name)to easily grab the tokenizer for most models. - Experiment Tracking Tools Discussed:

@flashmanbahadurqueried about the use of experiment tracking tools like wandb and mlflow for local runs.@dirtytigerxexpressed the usefulness of both wandb and comet, especially for longer training runs, and discussed the broader capabilities of mlflow.

Links mentioned:

- Load adapters with 🤗 PEFT: no description found

- FEAT: add awq suppot in PEFT by younesbelkada · Pull Request #1399 · huggingface/peft: Original PR: casper-hansen/AutoAWQ#220 TODO: Add the fix in transformers Wait for the next awq release Empty commit with @s4rduk4r as a co-author add autoawq in the docker image Figure out ho...

TheBloke ▷ #model-merging (15 messages🔥):

- In Search of Less Censored Intelligence:

@lordofthegoonsinquired about uncensored, intelligent 34b models, where@kquantsuggested looking at models like Nous-Yi on Hugging Face. - Smaug 34b Takes Flight Without Mergers:

@kquantshares information about the Smaug-34B-v0.1 model, a fine-tuned version of "bagel" with impressive benchmark results and no model mergers involved. - Shrinking Goliath:

@lordofthegoonsexpressed interest in an experiment to downsize a 34b model in hopes it would outperform a 10.7b model, but later reported that the attempt did not yield a usable model. - Prompts to Nudge Model Behavior:

@kquantdiscusses using prompts to influence model outputs, citing methods like changing the response style or trigger specific behaviors like simulated anger or polite confirmations. - Harmony Through Merging Models:

@kquantpoints to the Harmony-4x7B-bf16 as an example of a successful model merge that exceeded expectations, naming itself after the process.

Links mentioned:

- ConvexAI/Harmony-4x7B-bf16 · Hugging Face: no description found

- abacusai/Smaug-34B-v0.1 · Hugging Face: no description found

- one-man-army/UNA-34Beagles-32K-bf16-v1 · Hugging Face: no description found

TheBloke ▷ #coding (2 messages):

- The Forgotten Art of File Management:

@spottyluckhighlighted a growing problem where many people lack understanding of a file system hierarchy or how to organize files, resulting in a "big pile of search/scroll". - Generational File System Bewilderment:

@spottyluckshared an article from The Verge discussing how students are increasingly unfamiliar with file systems, referencing a case where astrophysicist Catherine Garland noticed her students couldn't locate their project files or comprehend the concept of file directories.

Links mentioned:

Students who grew up with search engines might change STEM education forever: Professors are struggling to teach Gen Z

Nous Research AI ▷ #ctx-length-research (6 messages):

- Inquiry about Form Submission:

@manojbhasked about whether a form has been filled out, but no further context or details were provided. - Questioning the Uniqueness of RMT:

@hexaniraised a question about how the discussed technology truly differs from RMT and its significance. - Skepticism on Context Length Impact:

@hexaniexpressed doubt about the technology representing a significant shift towards managing longer context, citing evaluations in the paper. - Request for Clarification:

@hexaniasked for someone to explain more about the technology in question and appreciated any help in advance. - Comparison with Existing Architectures:

@hexanipointed out similarities between the current topic of discussion and existing architectures, likening it to an adapter for explicit memory tokens.

Nous Research AI ▷ #off-topic (13 messages🔥):

- In Search of Machine Learning Guidance:

@DES7INY7and@lorenzoroxyoloare seeking advice on how to get started with their machine learning journey and looking for guidance and paths to follow. - Possible Discord Improvement with @-ing:

@murchistondiscusses that @-ing could be improved as a feature on Discord, implying that better routing or integration within tools like lmstudio might make the feature more reliable. - Fast.ai Course Sighting:

@afterhoursbillymentions that he has seen<@387972437901312000>on the fast.ai Discord, hinting at the user's engagement with the course. - The Maneuver-Language Idea:

@manojbhbrings up an idea about tokenizing driving behaviors for self-driving technology, proposing a concept similar to lane-language. - Dataset Schema Inconsistency:

@pradeep1148points out that the dataset announced by<@387972437901312000>appears to have a schema inconsistency, with different examples having distinct formats. A link to the dataset on Hugging Face is provided: OpenHermes-2.5 dataset.

Links mentioned:

- Hellinheavns GIF - Hellinheavns - Discover & Share GIFs: Click to view the GIF

- Blackcat GIF - Blackcat - Discover & Share GIFs: Click to view the GIF

- Scary Weird GIF - Scary Weird Close Up - Discover & Share GIFs: Click to view the GIF

Nous Research AI ▷ #benchmarks-log (2 messages):

- Clarification on ACC_NORM for AGIEval:

@euclaiseinquired if@387972437901312000utilizesacc_normfor AGIEval evaluations.@tekniumconfirmed that where anacc_normexists, it is always used.

Nous Research AI ▷ #interesting-links (77 messages🔥🔥):

- Bittensor and Synthetic Data:

@richardblythmanquestioned how Bittensor addresses gaming benchmarks for AI models, suggesting that Hugging Face could also generate synthetic data on the fly.@tekniumclarified the costs involved and the subsidization by Bittensor's network inflation, while@euclaisenoted Hugging Face's ability to run expensive operations. - Incentivization in Model Training:

@tekniumdescribed the importance of incentive systems within Bittensor's network to drive model improvement and active competition, while@richardblythmanshared skepticism about the sustainability of Bittensor's model due to high costs. - Understanding Bittensor's Network: Throughout the discussion, there was significant interest in how Bittensor's decentralized network operates and incentives are structured, with multiple users including

@.benxhand@tekniumdiscussing aspects of deployment and the incentives for maintaining active and competitive models. - Potential of Bittensor to Produce Useful Models: While

@richardblythmanexpressed doubt about the network's efficiency,@tekniumremained optimistic about its potential to accelerate the development of open source models, mentioning that rigorous training attempts started only a month or two prior. - Collaborative Testing of AI Models:

@yikesawjeezinvited community members to assist in testing a new AI architecture, HelixNet, and provided shared Jupyter Notebook access for the task. The invitation was answered by users like@manojbh, encouraging reproduction on different setups for better validation of the model's performance.

Links mentioned:

- Mistral CEO confirms ‘leak’ of new open source AI model nearing GPT-4 performance: An anonymous user on 4chan posted a link to the miqu-1-70b files on 4chan. The open source model approaches GPT-4 performance.

- argilla/CapybaraHermes-2.5-Mistral-7B · Hugging Face: no description found

- LLaVA-1.6: Improved reasoning, OCR, and world knowledge: LLaVA team presents LLaVA-1.6, with improved reasoning, OCR, and world knowledge. LLaVA-1.6 even exceeds Gemini Pro on several benchmarks.

- NobodyExistsOnTheInternet/miqu-limarp-70b · Hugging Face: no description found

- Tweet from Ram (@ram_chandalada): Scoring popular datasets with "Self-Alignment with Instruction Backtranslation" prompt Datasets Scored 1️⃣ dolphin @erhartford - Only GPT-4 responses 2️⃣ Capybara @ldjconfirmed 3️⃣ ultracha...

- Tweet from Migel Tissera (@migtissera): It's been a big week for Open Source AI, and here's one more to cap the week off! Introducing HelixNet. HelixNet is a novel Deep Learning architecture consisting of 3 x Mistral-7B LLMs. It h...

- Tweet from yikes (@yikesawjeez): yknow what fk it https://jupyter.agentartificial.com/commune-8xa6000/user/forthepeople/lab user: forthepeople pw: getitdone will start downloading the model now, hop in and save notebooks to the ./wo...

- The Curious Case of Nonverbal Abstract Reasoning with Multi-Modal Large Language Models: While large language models (LLMs) are still being adopted to new domains and utilized in novel applications, we are experiencing an influx of the new generation of foundation models, namely multi-mod...

- GroqChat: no description found

- Scaling Laws for Forgetting When Fine-Tuning Large Language Models: We study and quantify the problem of forgetting when fine-tuning pre-trained large language models (LLMs) on a downstream task. We find that parameter-efficient fine-tuning (PEFT) strategies, such as ...

- Paper page - Learning Universal Predictors: no description found

- GitHub - TryForefront/tuna: Contribute to TryForefront/tuna development by creating an account on GitHub.

Nous Research AI ▷ #announcements (1 messages):

- Open Hermes 2.5 Dataset Released:

@tekniumannounced the public release of the dataset used for Open Hermes 2.5 and Nous-Hermes 2, excited to share over 1M examples curated and generated from open-source ecosystems. The dataset can be found on HuggingFace. - Dataset Contributions Acknowledged:

@tekniumhas credited every data source within the dataset card except for one that's no longer available, thanking the contributed authors within the Nous Research AI Discord. - Collaboration with Lilac ML:

@tekniumworked with@1097578300697759894and Lilac ML to feature Hermes on their HuggingFace Spaces, assisting in the analysis and filtering of the dataset. Explore it on Lilac AI. - Tweet about Open Hermes Dataset: A related Twitter post by

@tekniumcelebrates the release of the dataset and invites followers to see what can be created from it.

Links mentioned:

- teknium/OpenHermes-2.5 · Datasets at Hugging Face: no description found

- no title found: no description found

Nous Research AI ▷ #general (673 messages🔥🔥🔥):

- MIQU Mystery Resolved:

@tekniumclarifies that MIQU is Mistral Medium, not an earlier version. The Nous Research's co-founder's tweet could potentially confirm this here. - The Power of Mergekit: Users like

@datarevisedexperimented with 120 billion parameter models by merging MIQU with itself, and others are creating various combinations like Miquella 120B. - OpenHermes 2.5 Dataset Discussion: The dataset discussions raised points on dataset creation and storage, with

@tekniummentioning tools like Lilac for data curation and exploration. - Crowdfunding for AI with AI Grant:

@cristi00shares information about AI Grant – an accelerator for AI startups offering substantial funding and Azure credits. Applications are open until the stated deadline. - Qwen 0.5B Model Anticipation:

@qnguyen3expresses excitement about the imminent release of a new fiery model named Qwen, though specific details were not given.

Links mentioned:

- NobodyExistsOnTheInternet/code-llama-70b-python-instruct · Hugging Face: no description found

- Boy Kisser Boykisser GIF - Boy kisser Boykisser Boy kisser type - Discover & Share GIFs: Click to view the GIF

- Guts Berserk Guts GIF - Guts Berserk Guts American Psycho - Discover & Share GIFs: Click to view the GIF

- Meta Debuts Code Llama 70B: A Powerful Code Generation AI Model: With Code Llama 70B, enterprises have a choice to host a capable code generation model in their private environment. This gives them control and confidence in protecting their intellectual property.

- Tweet from Stella Biderman (@BlancheMinerva): Are you missing a 20% speed-up for your 2.7B LLMs due to copying GPT-3? I was for three years. Find out why and how to design your models in an hardware-aware fashion in my latest paper, closing the ...

- AI Grant: It's time for AI-native products!

- Discord - A New Way to Chat with Friends & Communities: Discord is the easiest way to communicate over voice, video, and text. Chat, hang out, and stay close with your friends and communities.

- Reimagine: Covering the rapidly moving world of AI.

- Cat Cats GIF - Cat Cats Explosion - Discover & Share GIFs: Click to view the GIF

- 152334H/miqu-1-70b-sf · Hugging Face: no description found

- argilla/CapybaraHermes-2.5-Mistral-7B · Hugging Face: no description found

- Wow Surprised Face GIF - Wow Surprised face Little boss - Discover & Share GIFs: Click to view the GIF

- euclaise/Memphis-scribe-3B-alpha · Hugging Face: no description found

- Bornskywalker Dap Me Up GIF - Bornskywalker Dap Me Up Woody - Discover & Share GIFs: Click to view the GIF

- ycros/miqu-lzlv · Hugging Face: no description found

- WizardLM/WizardLM-70B-V1.0 · Hugging Face: no description found

- alpindale/miquella-120b · Hugging Face: no description found

- Hatsune Miku - Wikipedia: no description found

- Dancing Daniel Keem GIF - Dancing Daniel Keem Keemstar - Discover & Share GIFs: Click to view the GIF

- xVal: A Continuous Number Encoding for Large Language Models: Large Language Models have not yet been broadly adapted for the analysis of scientific datasets due in part to the unique difficulties of tokenizing numbers. We propose xVal, a numerical encoding sche...

- Catspin GIF - Catspin - Discover & Share GIFs: Click to view the GIF

- Itsover Wojack GIF - ITSOVER WOJACK - Discover & Share GIFs: Click to view the GIF

- Tweet from Nat Friedman (@natfriedman): Applications are open for batch 3 of http://aigrant.com for pre-seed and seed-stage companies building AI products! Deadline is Feb 16. As an experiment, this batch we are offering the option of eith...

- NousResearch/Nous-Hermes-2-Vision-Alpha · Discussions: no description found

- dataautogpt3 (alexander izquierdo): no description found

- NobodyExistsOnTheInternet (Nobody.png): no description found

- Tweet from Eric Hallahan (@EricHallahan): @QuentinAnthon15 Darn, I would have loved to participate if I were allowed to. I'm sure it's a great paper regardless with an author list like that!

- GitHub - qnguyen3/hermes-llava: Contribute to qnguyen3/hermes-llava development by creating an account on GitHub.

- GitHub - SafeAILab/EAGLE: EAGLE: Lossless Acceleration of LLM Decoding by Feature Extrapolation: EAGLE: Lossless Acceleration of LLM Decoding by Feature Extrapolation - GitHub - SafeAILab/EAGLE: EAGLE: Lossless Acceleration of LLM Decoding by Feature Extrapolation

- GitHub - epfLLM/meditron: Meditron is a suite of open-source medical Large Language Models (LLMs).: Meditron is a suite of open-source medical Large Language Models (LLMs). - GitHub - epfLLM/meditron: Meditron is a suite of open-source medical Large Language Models (LLMs).

- There’s something going on with AI startups in France | TechCrunch: Artificial intelligence, just like in the U.S., has quickly become a buzzy vertical within the French tech industry.

- Lilac - Better data, better AI: Lilac enables data and AI practitioners improve their products by improving their data.

Nous Research AI ▷ #ask-about-llms (32 messages🔥):

- Style Transfer or Overfitting?:

@manojbhdebated about LLMs having responses overly influenced by their training on a particular style like Mistral; pointed out that similar end-layer patterns cause style mimicry in outputs. This was in context to misleading output reasoning and a linked tweet by@teortaxesTex, regarding Miqu’s mistake in RU translation with oddly similar phrasing. - Quantized Models and Memory Discussion: Discussions by

@giftedgummybeeand@manojbhcentered around quantized models forgetting information and the increased potential for errors with lower quantization levels. - AI Stress Test Speculation: A theory by

@manojbhon whether LLMs can fumble under stress like humans led to a suggestion by@_3sphereto test the model's "panic" behavior in response to confusing prompts. - Watermarking within Mistral: Conversation with

@everyoneisgross,@.benxh, and.ben.comdelved into the possibility of watermarking LLMs, hypothesizing it could involve distinct Q&A pairs or prompts that generate specific responses as identifiers after quantization. - Evaluating Nous-Hermes and Mixtral Performance:

@.benxhaffirmed a significant performance difference between Mistral Medium and an unspecified model capacity, indicating close performance within the margin of error.@manojbhagreed, highlighting the importance of even slight differences in statistical metrics.

Links mentioned:

- Tweet from Teortaxes▶️ (@teortaxesTex): Miqu makes a mistake in RU: the bolt falls out but the thimble stays. Yet note startlingly similar phrasing in translation! I'm running Q4KM though, and some of it may be attributable to sampling ...

- GitHub - LeonEricsson/llmjudge: Exploring limitations of LLM-as-a-judge: Exploring limitations of LLM-as-a-judge. Contribute to LeonEricsson/llmjudge development by creating an account on GitHub.

Mistral ▷ #general (363 messages🔥🔥):

- Welcoming New Members: Users

@admin01234and@mrdragonfoxexchanged greetings, indicating that new users are discovering the Mistral Discord channel.

- Dequantization Success for miqu-1-70b: User

@i_am_domdiscussed the successful dequantization of miqu-1-70b from q5 to f16, transposed to PyTorch. Usage instructions and results were shared, showcasing the model's prose generation capabilities.

- Internship Asks and General Career Opportunities: User

@deldrelasked about internship opportunities at Mistral.@mrdragonfoxadvised them to send their details even if no official listings are posted.

- Token Generation Rates and Inference Performance: User

@i_am_domprovided token/s generation rates for the official Mistral API, which@donjuan5050said might not meet their use case. Meanwhile,@mrdragonfoxshared that locally hosting Mistral on certain hardware configurations could significantly increase throughput.

- Miqutized Model and Mistral's Response: The authenticity of miqu-1-70b was discussed, including a link to the Twitter statement by Mistral AI's CEO confirming it as an early version of their model. Users

@shirman,@i_am_dom, and@dillfrescottspeculated about the relationship between the Miqutized model and Mistral's models.

- Model Hosting and Usage Costs: Conversation with

@mrdragonfoxregarding the benefits and costs of hosting LLMs locally vs. using an API. The discussion delved into hardware requirements and the cost-effectiveness of API usage for different scales of deployment.

Links mentioned:

- Join the Mistral AI Discord Server!: Check out the Mistral AI community on Discord - hang out with 11372 other members and enjoy free voice and text chat.

- 152334H/miqu-1-70b-sf · Hugging Face: no description found

- TheBloke/Mixtral-8x7B-Instruct-v0.1-AWQ · Hugging Face: no description found

- Streisand effect - Wikipedia: no description found

- NeverSleep/MiquMaid-v1-70B · Hugging Face: no description found

- NeverSleep/MiquMaid-v1-70B · VERY impressive!: no description found

Mistral ▷ #models (17 messages🔥):

- Mistral Aligns with Alpaca Format:

@sa_codementioned that Mistral small/med can be used with alpaca prompt format to supply a system prompt; however, they also noted the alpaca format is incompatible with markdown. - Official Docs Lack System Prompt Info:

@sa_codepointed to the lack of documentation for system prompts in Mistral's official docs and requested this inclusion. - Office Hours for the Rescue: In response to

@sa_code's query,@mrdragonfoxsuggested asking questions in the next office hours session for clarifications. - PR Opened for Chat Template:

@sa_codeindicated they opened a PR on Mistral's Hugging Face page to address an issue regarding chat template documentation (PR discussion link). - Mistral Embedding Token Limit Clarified:

@mrdragonfoxand@akshay_1responded to@danouchka_24704's questions, confirming that Mistral-embed produces 1024 dimensions vectors and has a maximum token input chunk of 8k, although 512 tokens are generally preferred.

Links mentioned:

- Open-weight models | Mistral AI Large Language Models: We open-source both pre-trained models and fine-tuned models. These models are not tuned for safety as we want to empower users to test and refine moderation based on their use cases. For safer models...

- mistralai/Mixtral-8x7B-Instruct-v0.1 · Update chat_template to include system prompt: no description found

Mistral ▷ #ref-implem (1 messages):

- JSON Output with Mistral Medium Subscription: User

@subham5089inquired about the best method to always receive JSON output using the Mistral Medium subscription. There was no response or further discussion on this topic within the provided message history.

Mistral ▷ #finetuning (33 messages🔥):

- Inquiry on Continuous Pretraining for Low-Resource Languages:

@quicksortasked for references regarding continuous pretraining of Mistral on low-resource languages, but@mrdragonfoxindicated a lack of such resources stating nothing comes close to instruct yet. - Pioneering Multi-Language LoRA Fine-tuning on Mistral:

@yashkhare_is working on continual pretraining of Mistral 7b with LoRA for languages like Vietnamese, Indonesian, and Filipino, questioning the viability of separate and merged language-specific LoRA weights. - Challenges of Language Clustering and Continuous Pretraining:

@mrdragonfoxcast doubt on the success of a style transfer-based approach for clustering new languages and stressed that distinct languages typically require separate pretraining. - Using RAG to Address Hallucinations in Fact Finetuning: In response to

@pluckedout's question about lower parameter models memorizing facts,@kecoland@mrdragonfoxdiscussed the importance and complexity of integrating Retrieval-Augmented Generation (RAG) for context-informed responses and minimizing hallucinations. - Hermes 2 Dataset Release Announcement:

@tekniumshared a link to the release of their Hermes 2 dataset on Twitter, inviting others to explore its potential value.

Mistral ▷ #la-plateforme (8 messages🔥):

- Confusion on Model Downloads:

@ashtagrossedaronneenquired about downloading a model to have it complete homework, but was initially unsure of which model to use. - Mistaken Link Adventure: In response,

@ethuxattempted to assist with a download link, but first provided the wrong URL and then corrected it, though the correct link was not shared in the messages given. - Contribution Update:

@carloszelaannounced the submission of a Pull Request (PR) and is awaiting review, signaling their contribution to a project. - API Quirks with Mistral Model:

@miscendraised a concern about the performance disparities between the Mistral small model when using the API versus running it locally, specifically mentioning truncation of responses and additional backslashes in code output.

Links mentioned:

👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

LM Studio ▷ #💬-general (123 messages🔥🔥):

- HuggingFace Model Confusion:

@petter5299inquired about why a GGUF model downloaded is not recognizable by LM Studio.@fabguyasked for a HuggingFace link for clarification and mentioned the unfamiliarity with the mentioned architecture. - LM Studio & macOS Memory Issues:

@wisefaraiencountered errors suggesting insufficient memory while trying to load a quantized model on LM Studio using a Mac, with@yagilbsuggesting it might be a lack of available memory at the time of the issue. - Local Models and Internet Claims:

@n8programsand others discussed the need for proof regarding claims that local models behave differently with the internet on/off. They called for network traces to known OpenAI addresses to substantiate such claims. - AMD GPU Support Inquiry:

@ellric_asked if there is any hope for AMD GPU support in LM Studio.@yagilbconfirmed the possibility and directed to a specific channel for guidance. - Prompt Format Frustrations and CodeLLama Discussion: Discussion on

#generalinvolved the complications caused by prompt formats, including a heads-up on Reddit about issues with CodeLLama 70b and llama.cpp not supporting chat templates, leading to poor model outputs.

Links mentioned:

- mistralai/Mixtral-8x7B-v0.1 · Hugging Face: no description found

- GitHub - joonspk-research/generative_agents: Generative Agents: Interactive Simulacra of Human Behavior: Generative Agents: Interactive Simulacra of Human Behavior - GitHub - joonspk-research/generative_agents: Generative Agents: Interactive Simulacra of Human Behavior

- Reddit - Dive into anything: no description found

- Nexesenex/MIstral-QUantized-70b_Miqu-1-70b-iMat.GGUF · Hugging Face: no description found

- GitHub - ggerganov/llama.cpp: Port of Facebook's LLaMA model in C/C++: Port of Facebook's LLaMA model in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

LM Studio ▷ #🤖-models-discussion-chat (100 messages🔥🔥):

- The Quest for Local LLM Training:

@scampbell70is on a mission to train a local large language model (LLM) specifically for stable diffusion prompt writing, considering the possibility of investing in multiple Quadro A6000 GPUs. The aim is to avoid the terms-of-service restrictions of platforms like ChatGPT and learn to train models using tools like Lora.

- LM Studio Compatibility with Other Tools: Users like

@cos2722and@vbwyrdeare discussing which models work best with LM Studio in conjunction with Autogenstudio, crewai, and open interpreter. The consensus is that Mistral works but MOE versions do not, and finding a suitable code model that cooperates remains a challenge.

- LM Studio's Hardware Hunger:

@melmassreports significant resource usage with the latest LM Studio version, indicating that it taxes even powerful setups with a 4090 GPU and 128GB of RAM.

- Gorilla Open Function Template Inquiry:

@jb_5579is seeking suggestions for JSON formatted prompt templates to use with the Gorilla Open Function in LM Studio with no follow-up discussion provided.

- Exploring Quantization and Model Size for Performance:

@binaryalgorithm,@ptable, and@kujilaengaged in an extensive discussion about utilizing quantized models with different parameter counts for performance and inference speed. The trade-off of depth and creativity in responses from larger models against the fast inference from low quant models was a significant part of the conversation.

Links mentioned:

- LLM-Perf Leaderboard - a Hugging Face Space by optimum: no description found

- Big Code Models Leaderboard - a Hugging Face Space by bigcode: no description found

LM Studio ▷ #🧠-feedback (21 messages🔥):

- Clarification Sought on Catalog Updates:

@markushenrikssoninquired about checking for catalog updates, to which@heyitsyorkieconfirmed that the updates are present. - Manual File Cleanup Needed for Failed Downloads:

@ig9928reported an issue where failed downloads required manual deletion of incomplete files before a new download attempt can be made, requesting a fix for this inconvenience. - Request for Chinese Language Support:

@gptaiexpressed interest in Chinese language support for LM Studio, noting the difficulty their Chinese fans face with the English version. - Confusion with Error Messages:

@mm512_shared a detailed error message received when attempting to download models.@yagilbdirected them to seek help in the Linux support channel and advised running the app from the terminal for error logs. - Model Compatibility Queries and Tutorial Issues:

@artdiffuserstruggled with downloading models and was informed by@heyitsyorkiethat only GGUF models are compatible with LMStudio. Additionally,@artdiffuserwas cautioned about potentially outdated or incorrect tutorials, and@yagilbfurther addressed the issue by suggesting a bug report in the relevant channel for their specific error.

LM Studio ▷ #🎛-hardware-discussion (157 messages🔥🔥):

- GPUs and Memory Management:

@0pechenkaasked whether memory usage on GPUs and CPUs when running models like LLaMA v2 is customizable. They wanted a beginner's guide for setting things up, asking for instructions, possibly on YouTube.@pefortinsuggested removing the "keep entire model in ram" flag if the model doesn't fit into memory to utilize the swap file on Windows.

- Building a Budget LLM PC:

@abesmoninquired about a good PC build for running large language models (LLMs) on a medium budget, and@heyitsyorkieadvised that VRAM is crucial, recommending a GPU with at least 24GB of VRAM.

- Power Supply for Multiple GPUs Discussed:

@dagbsand@pefortindiscussed the potential need for more power when running several GPUs, with pefortin considering to sync multiple PSUs to handle his setup, which includes a 3090 and other GPUs connected through PCIe risers.

- LLM Performance on Diverse Hardware Configurations:

@pefortinshared his experience of approximately 30-40% performance of a P40 GPU compared to a 3090 for LLM tasks, and mentioned driver issues on Windows, which are not a problem on Linux.

- Mixing GPU Generations for LLMs: Users

@ellric_and@.ben.comdebated on the compatibility and performance implications of using different generations of GPUs, like the M40 and P40, with LLMs..ben.comadmitted needing to investigate the performance consequences of splitting models across GPUs, positing a potential 20% slowdown from unscientific testing.

Links mentioned:

- Mikubox Triple-P40 build: Dell T7910 "barebones" off ebay which includes the heatsinks. I recommend the "digitalmind2000" seller as they foam-in-place so the workstation arrives undamaged. Your choice of Xe...

- Simpsons Homer GIF - Simpsons Homer Bart - Discover & Share GIFs: Click to view the GIF

LM Studio ▷ #🧪-beta-releases-chat (1 messages):

mike_50363: Is the non-avx2 beta version going to be updated to 2.12?

OpenAI ▷ #ai-discussions (137 messages🔥🔥):

- Improving DALL-E Generated Faces:

@abe_gifted_artqueried about updated faces generated by DALL-E, noticing they weren't distorted now. However, no specific update or change in date was provided in the discussion. - AI Understanding Through Non-technical Books:

@francescospacesought recommendations for non-technical books on AI and its potential or problems.@laerunsuggested engaging in community discussions like the one on Discord, while@abe_gifted_artrecommended looking into Bill Gates' interviews and Microsoft's "Responsible AI" promise, sharing a link: Microsoft's approach to AI. - Debate Over Training AI with Unethical Data: A debate occurred between

@darthgustav.and@luguiregarding the ethical implications and technical necessity of including harmful material in AI datasets.@yami1010later joined, highlighting the complexity of how language models work and the creativity seen in AI outputs. - Challenges of Successful DALL-E Prompts:

@.yttyexpressed frustrations with DALL-E not following prompts correctly, while@darthgustav.offered advice on constructing effective prompts, emphasizing the use of detail and avoiding negative instructions. - Starting with AI Art and AI Scripting Language Training: New users asked how to begin creating AI art, and

@exx1recommended stable diffusion requiring an Apple SoC or Nvidia GPU, while@cokeb3arexplored how to teach AI a new scripting language without the ability to upload documentation.

Links mentioned:

What is Microsoft's Approach to AI? | Microsoft Source: We believe AI is the defining technology of our time. Read about our approach to AI for infrastructure, research, responsibility and social good.

OpenAI ▷ #gpt-4-discussions (85 messages🔥🔥):

- GPT Image Analysis Challenge:

@cannmanagesought help using GPT to analyze videos for identifying a suspected abductor's van among multiple white vans.@darthgustav.suggested using the Lexideck Vision Multi-Agent Image Scanner tool and adjusting the prompt for better results, noting the importance of the context in which the tool is used.

- Identifying Active GPT Models:

@nosuchipsuinquired about determining the specific GPT model in use, and@darthgustav.clarified that GPT Plus and Team accounts use a 32k version of GPT-4 Turbo, with different usage for API and Enterprise plans. He later shared a link to transparent pricing plans and model details.

- Image Display Hurdles: Users

@quengelbertand@darthgustav.discussed whether GPT can display images from web URLs within the chat, concluding that such direct display isn't currently supported, evidenced by an error message displayed when attempting this function on GPT-4.

- Understanding @GPT Functionality:

@_odaenathusdiscussed confusion with the@system in GPTs, suspecting a blend rather than a clear handover between the original and@GPT.@darthgustav.confirmed the shared context behavior and suggested that disconnecting from the second GPT would revert back to first instructions, though a bug or bad design might cause unexpected behavior.

- Managing D&D GPTs Across Devices:

@tetsujin2295brought up an inability to @ GPTs on mobile as a Plus member, which@darthgustav.attributed to a potential lack of roll out or mobile browser limitations. He also shared the effective use of structuring multiple D&D related GPTs for various roles and world-building, with a slight concern over token limit constraints.

Links mentioned:

Pricing: Simple and flexible. Only pay for what you use.

OpenAI ▷ #prompt-engineering (62 messages🔥🔥):

- Sideways Dilemma in Portrait Generation:

@wild.evaand@niko3757discussed challenges with generating vertical, full-body portrait images; the model appears to force landscape mode.@niko3757suggested a workaround of generating in landscape and then stitching images in portrait after upscaling, though this is speculation and they await a significant update for DALL-E. - Portrait Orientation Gamble:

@darthgustav.suggested that due to symmetry and lack of an orientation parameter, getting a correct vertical portrait is a 25% chance occurrence. This implies a fundamental limitation, unrelated to how images are prompted. - Prompt Improvement Attempts:

@wild.evasought suggestions for prompts to improve image orientation,@niko3757provided examples, but@darthgustav.countered by indicating that no prompt improvement could overcome the model's inherent constraints. - Integrating Feedback Buttons in Custom GPT:

@luarstudiosinquired about adding interactive feedback buttons post-answer in their GPT model, engaging with@solbusand@darthgustav.for guidance.@darthgustav.offered a detailed explanation about incorporating an Interrogatory Format to attach a menu of responses to each question. - Structural Discussions on Design Proposals:

@solbusand@darthgustav.pondered whether to have a different feedback structure for the first logo design compared to subsequent ones, suggesting this could increase efficiency and relevance in feedback collection.@darthgustav.shared a link to their own approach in DALL-E Discussions.

OpenAI ▷ #api-discussions (62 messages🔥🔥):

- Sideways Image Chaos:

@wild.evaencountered issues with her detailed prompts which resulted in sideways images or undesired scene generation, suggesting training issues with the model.@niko3757confirmed the likely cause is a built-in error and suggests trying images in landscape then upscaling, while@darthgustav.mentioned the lack of orientation parameters limits vertical orientations.

- Expectations for DALLE Update:

@niko3757shared optimistic yet unconfirmed speculation about a significant update to DALLE coming soon, which they are eagerly awaiting for improved results.

- GPT-3 Button Dilemma:

@luarstudiossought help with adding response buttons after the AI presents design proposals, getting feedback from both@solbusand@darthgustav.on how to implement this in the chatbot's custom instructions.

- Conversation Structure Strategy:

@darthgustav.provided a strategy for@luarstudiosto guide the AI's interaction pattern, suggesting templates and conversation starter cards to handle the logical flow in presenting logo options and receiving feedback.

- Sharing Insights Across Channels:

@darthgustav.cross-posts to DALL-E Discussions, illustrating the efficiency of prompt engineering for a logo concept and recommending open-source examples across channels for better community assistance.

OpenAccess AI Collective (axolotl) ▷ #general (109 messages🔥🔥):