[AINews] Cursor reaches >1000 tok/s finetuning Llama3-70b for fast file editing

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Speculative edits is all you need.

AI News for 5/15/2024-5/16/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (428 channels, and 6173 messages) for you. Estimated reading time saved (at 200wpm): 696 minutes.

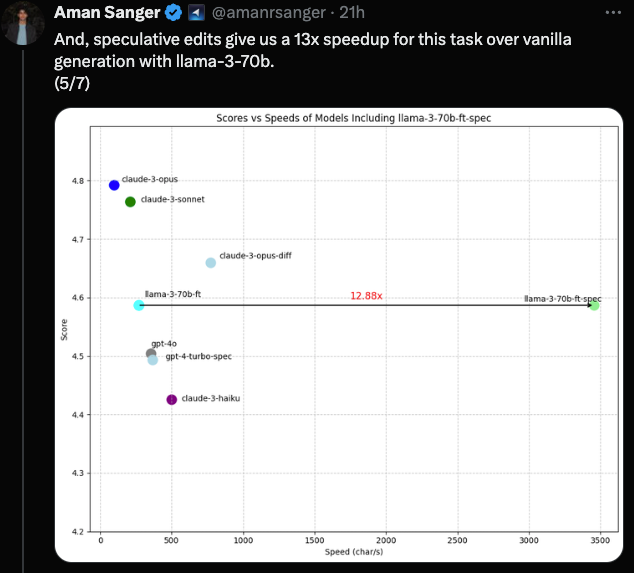

As an AI-native IDE, Cursor edits a lot of code, and needs to do it fast, particularly Full-File Edits. They have just announced a result that

"surpasses GPT-4 and GPT-4o performance and pushes the pareto frontier on the accuracy / latency curve. We achieve speeds of >1000 tokens/s (just under 4000 char/s) on our 70b model using a speculative-decoding variant tailored for code-edits, called speculative edits."

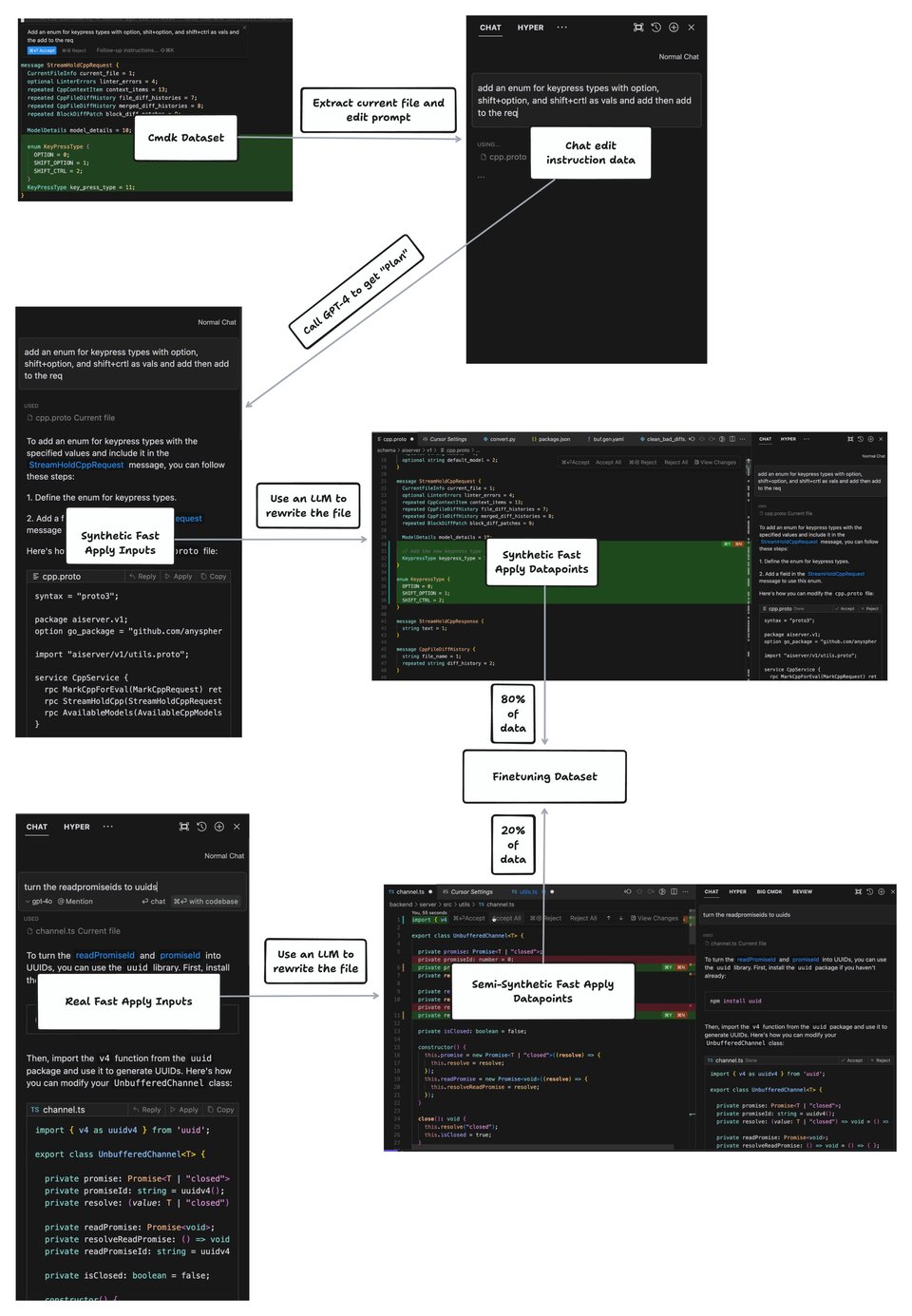

Because the focus is solely on the "fast apply" task, the team used a synthetic data pipeline tuned to do just that:

They are a little cagey about the speculative edit alogirthm - this is all they say:

"With code edits, we have a strong prior on the draft tokens at any point in time, so we can speculate on future tokens using a deterministic algorithm rather than a draft model."

If you can figure out how to do it on gpt-4-turbo, there is a free month of Cursor Pro for you.

Table of Contents

- AI Twitter Recap

- AI Reddit Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Unsloth AI (Daniel Han) Discord

- Stability.ai (Stable Diffusion) Discord

- OpenAI Discord

- Perplexity AI Discord

- HuggingFace Discord

- Nous Research AI Discord

- LM Studio Discord

- Modular (Mojo 🔥) Discord

- CUDA MODE Discord

- LlamaIndex Discord

- LAION Discord

- Eleuther Discord

- Interconnects (Nathan Lambert) Discord

- LangChain AI Discord

- OpenRouter (Alex Atallah) Discord

- OpenInterpreter Discord

- Datasette - LLM (@SimonW) Discord

- Latent Space Discord

- OpenAccess AI Collective (axolotl) Discord

- AI Stack Devs (Yoko Li) Discord

- tinygrad (George Hotz) Discord

- MLOps @Chipro Discord

- Cohere Discord

- DiscoResearch Discord

- LLM Perf Enthusiasts AI Discord

- Mozilla AI Discord

- PART 2: Detailed by-Channel summaries and links

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

OpenAI GPT-4o Release

- Multimodal Capabilities: @sama noted GPT-4o's release marks a potential revolution in how we use computers, with audio, vision, and text capabilities in an omni model. @imjaredz added it is 2x faster and 50% cheaper than GPT-4 turbo.

- Coding Performance: Early tests show mixed results for GPT-4o's coding abilities. @erhartford found it makes a lot of mistakes compared to GPT-4 turbo, while @abacaj noted it is very good at code, outperforming Opus.

- Instruction Following and Languages: Some customers rolled back to GPT-4 turbo due to worse instruction following with GPT-4o, especially for JSON, edge cases, and specialized formats, per @imjaredz. However, GPT-4o performs better at non-English languages.

- Multimodal Capabilities: @gdb mentioned GPT-4o has impressive image generation capabilities to explore. @sama clarified the new voice mode hasn't shipped yet, but the text mode is currently in beta.

- Reasoning and Knowledge: @goodside found GPT-4o can explain complex AI methods it hasn't seen before. @mbusigin noted it is familiar with niche AI research.

Anthropic, Google, and AI Developments

- Anthropic's New Features: @alexalbert__ announced streaming, forced tool use, and vision features rolling out to Anthropic devs, enabling fine-grained streaming, tool choice forcing, and foundations for multimodal tool use.

- Google's Imagen Video and Gemini Models: @GoogleDeepMind introduced Imagen Video, which can understand nuanced effects and tone from prompts. @drjwrae shared Gemini 1.5 Flash, a 1M-context small model with fast performance.

- Open-Source Releases and Compute Access: @HuggingFace is distributing $10M of free GPUs via ZeroGPU to the open-source AI community. Models like Llama, BLOOM, Stable Diffusion, DALL-E Mini are available on the platform.

AI Evaluation and Safety Considerations

- Evaluating LLMs: @svpino noted LLMs fail on novel problems outside their training data. @maximelabonne mentioned MMLU benchmarks are reaching saturation for top models. @_philschmid shared MMLU-Pro, a more robust benchmark that drops top model performance by 17-31%.

- Jailbreaking and Adversarial Attacks: @_akhaliq shared SpeechGuard research on jailbreaking vulnerabilities in speech-language models, with high success rates. Proposed countermeasures significantly reduce the attack success.

- Ethical and Societal Implications: @alexandr_wang noted solving AI data challenges is crucial, as breakthroughs are driven by better data in a hard human-expert symbiosis. @ClementDelangue contributed to the AI Policy Forum to mitigate risks and support responsible AI innovation.

AI Startups, Products and Courses

- AI-Powered Search and Agents: @perplexity_ai added advisors to guide search, mobile, and distribution efforts. @cursor_ai trained a 70B model achieving over 1000 tokens/s.

- Educational Initiatives: @svpino shared 300 hours of free ML engineering courses from Google. @HamelHusain announced an AI course with compute credits from @replicate, @modal_labs and @hwchase17.

- Open-Source Libraries: @llama_index added GPT-4o support in LlamaParse for complex document parsing and indexing.

Memes and Humor

- @svpino joked "This is not funny anymore" about GPT-4o claiming training data up to 2023.

- @saranormous posted a meme contrasting the marketing versus reality of AI agent products.

- @jxnlco jested about "alex hormozi making me and my friends rich, I understand coaching now."

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Model Releases and Capabilities

- GPT-4o multimodal capabilities: In /r/singularity, GPT-4o from OpenAI demonstrates impressive real-time audio and video processing while being optimized for fast inference, showing potential for enabling insect-sized intelligent robots.

- Google's advanced vision models: Google's Project Astra memorizes object sequences and Paligemma has a 3D understanding of the world, showcasing advanced vision capabilities.

- MMLU-Pro benchmark released: In an image post, TIGER-Lab released the MMLU-Pro benchmark with 12,000 questions, fixing issues with the original MMLU and providing better model separation.

- Cerebras introduces Sparse Llama: Cerebras introduces Sparse Llama, which is 70% smaller, 3x faster, with full accuracy compared to the original Llama model.

AI Safety and Ethics

- Key AI safety researchers resign from OpenAI: Several key AI safety researchers, including Ilya Sutskever, resign from OpenAI, raising concerns about the company's direction and priorities.

- OpenAI considers allowing AI-generated NSFW content: OpenAI considers allowing AI-generated NSFW content, potentially exploiting lonely people with AI girlfriends, according to some discussions.

- US senators unveil AI policy roadmap: US senators unveil AI policy roadmap and seek government funding boost to address AI governance challenges.

AI Applications and Use Cases

- AI-designed cancer inhibitor announced: Insilico announced an AI-designed cancer inhibitor, demonstrating the potential of AI in drug discovery.

- Neuromorphic vision system for autonomous drones: A fully neuromorphic vision and control system was developed for autonomous drone flight, consuming only 7-12 milliwatts of power when running the network.

- AI-powered search app using local LLMs: An AI-powered search app for websites was created using local LLMs, combining llamaindex, pgvector, and llama3:instruct for document extraction and structured responses.

Technical Discussions and Tutorials

- Comparing Llama 3 quantization methods: In /r/LocalLLaMA, a comparison of Llama 3 quantization methods for GGUF, exl2, and transformers highlighted the performance of GGUF I-Quants and exl2 for higher speed or long context.

- Intuition behind fine-tuning LLMs: Also in /r/LocalLLaMA, a discussion on the intuition behind fine-tuning LLMs sought resources to understand model behavior and optimization beyond the basics.

- Microsoft and Georgia Tech introduce Vidur: Microsoft and Georgia Tech introduced Vidur, an LLM inference simulator to find optimal deployment settings and maximize GPU performance.

Memes and Humor

- AI announcement war meme: A meme about the AI announcement war between Google and OpenAI.

- Feeling the AGI coming meme: A meme about feeling the AGI coming.

AI Discord Recap

A summary of Summaries of Summaries

- GPT-4o Generates Buzz and Criticism: Across multiple Discords, GPT-4o was a hot topic, with users on OpenAI and Perplexity AI praising its speed and multimodal capabilities compared to GPT-4. However, some on OpenAI and LM Studio noted performance issues, generic outputs, and missing features. Discussions on Reddit and the OpenAI FAQ provided more context.

- Quantization and Optimization Techniques Advance: In the CUDA MODE and Latent Space Discords, members explored techniques like Bitnet 1.58 for quantization, the CORDIC algorithm for faster trigonometric calculations, and Google's InfiniAttention for efficient transformer memory. The Torch AO repository was suggested for centralizing bitnet implementations.

- New Benchmarks, Datasets and Models Unveiled: Across research-focused Discords, new resources were announced, including the challenging NIAN benchmark, the VidProM video prompt dataset, Google's Imagen 3 model, and the Nordic language Viking 7B model. Nous Research's Hermes 2 Θ model also generated interest.

- Mojo and CUDA Advancements Spark Optimism: The Modular and CUDA MODE Discords buzzed with excitement over Mojo's portability across GPUs and discussions on improving CUDA stream handling in projects like llm.c. Members also explored using NVMe to GPU DMA for faster data transfer.

- Concerns over AI Transparency and Reliability: Across Discords like Datasette and Interconnects, members expressed frustration over the lack of acknowledgment of LLM unreliability at events like Google I/O. Suggestions were made for a "Sober AI" showcase of practical applications. Changes to performance metrics for models like GPT-4o also raised questions about transparency.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- GPT4o Generates Buzz Among Engineers: Discord members exchanged brisk evaluations of GPT4o, noting its "Crazy fast" response times, but also reported a lack of image generation capability. The discussion was highlighted, including a link to a Reddit conversation.

- Finetuning Frenzy: Base or Instruct?: The advice for finetuning larger models, "If you have a large dataset always go base," spurred discussion and pointed users towards educational content, such as a Medium article.

- Dataset Dilemmas and GGUF Grief: The community dove into dataset generation errors, suggesting working with JSON in pandas for conversion, while issues with converting the ShareGPT dataset and with GGUF files for llama.cpp were tackled, with downgrading PEFT offered as a remedy. GitHub issue tracking offered additional insights.

- Unsloth AI Picks Up in Popularity: A user mentioned Unsloth AI being featured in the tutorial on the Replete-AI code_bagel dataset, signaling its rising popularity for fine-tuning Llama models.

- Summarization Skills Score Smiles: An AI-summarization feature received praise within the community, for budgeting down to the brass tacks of dialogues without direct relation to AI News, displaying the model's crisp encapsulation of verbose discussions.

Stability.ai (Stable Diffusion) Discord

SD3 Release: Will We Ever See It?: Despite skepticism surrounding the release and quality of Stability AI's SD3, members keep hope alive with rumors that SD3 might be held back to boost sales, but there is no firm release date or pricing information provided.

GPU Wars: 4060 TI vs 4070 TI Smackdown: The 4060 TI 16GB was pitted against the 4070 TI 12GB, with the former being recommended for ComfyUI usage while the latter was touted as better for gaming performance, though specifics were not detailed.

API Alternatives High in Demand: Members are actively seeking and debating APIs, with Forge being equated to A1111's UI for model training and asset design, and Invoke also being part of the discussion.

Workhorse GPUs Get a Benchmark: An informative benchmark site was circulated for evaluating GPU performance; it offers data on models such as 1.5 and XL Models and filters for specific hardware including Intel Arc a770.

The Divide of Dollars and Sense: Intense dialogues opened up about economic inequality, with some members emphasizing the moral and well-being costs of chasing wealth, though these were general philosophical conversations rather than specific AI-focused discourse.

OpenAI Discord

- GPT-4o's Rough Start: Discussions highlighted performance issues with GPT-4o, where users reported bugs such as slower response times, repeated topics, and lack of expected features. Contrastingly, GPT-4o was praised for better real-world context understanding when compared to GPT-4 in some prompt engineering cases.

- Navigating GPT-4o's Access and Features: Users expressed confusion around the access and rollout phases of GPT-4o, with clarifications provided that it's being prioritized for paid accounts—with more details in OpenAI's FAQ. Concerns about ChatGPT Plus subscription benefits were highlighted, given usage limits that may affect heavy use scenarios like software development.

- Prompt Engineering Unlocks Potentials and Pitfalls: Prompt engineering strategies are being refined, exploring how language nuances influence AI performance. Meanwhile, there's a push to understand the correct token limits and functionalities of GPT versions, with suggestions to craft character-based prompts for richer interactions.

- AI's Role in Future Work and Emotional Design Debated: The guild contemplated the impact of AI on job markets, stirring discussions between the potential for new job creation versus the threat of obsolescence. Additionally, the appropriate level of AI emotional responsiveness was debated, questioning if AI should lean towards human-like interactions or maintain professional neutrality.

- Community Powers AI Voice Assistant Advancements: An easily deployable Plug & Play AI Voice Assistant was introduced, with user feedback requested to refine the product further. It's claimed to be operational within 10 minutes, emphasizing user-friendly implementation and community-driven improvements.

Perplexity AI Discord

- GPT-4o Delivers Performance: On Perplexity, GPT-4o is turning heads with faster and more efficient results than GPT-4 Turbo, although some users encounter rollout snags.

- Perplexity Triumphs in Research: Users have given a nod to Perplexity's accurate and up-to-date sourcing and search functions compared to its rivals, earning it the go-to status for detailed research.

- DALL-E's Text Rendering Puzzle: Users report challenges with text appearing as gibberish in images generated by DALL-E; recommended solutions include revising prompt structures to prioritize text instructions.

- Perplexity Pro's iOS Voice Mode Gains Fans: The voice functionality on Perplexity Pro's iOS app garners praise for its fluid and natural interaction, sparking anticipation for an Android equivalent.

- Perplexity Pro Payment Glitches Reported: Subscribers facing payment issues with Perplexity Pro are directed to reach out to support@perplexity.ai for help.

- Perplexity's Information Highway: Links to Perplexity AI highlighted user interests ranging from finetuning search results, the lowdown on Aztec Stadium, a Google recap, the buzz in culinary circles, and Anthropic's team expansion.

- An API A-List for Engineers: Within the Perplexity AI community, concerns have surfaced covering topics such as beta access requests for citation features in the API, the ability of llama-3-sonar-large-32k-online model to search the web, calls for constant model aliases, unpredictable API latency, and challenges with autocorrected prompts skewing results.

HuggingFace Discord

- Terminus Leads the Dance: Terminus models have been updated to offer improved functionalities, and their latest collection is available on HuggingFace. The Velocity v2.1 checkpoint has hit 61k downloads and provides enhanced performance with negative prompts.

- PaliGemma Grabs the Spotlight: Conversations revolved around the PaliGemma models, from issues with generated code to the unveiling of a powerful Vision Language Model that marries visual and linguistic tasks effectively. DeepMind's Veo, a video generation model, also marched into view with its promise of 1080p cinematic-style videos, integrating soon with YouTube Shorts.

- Model Mysteries and Epsilon Greedy Investigations: Curiosity-driven reinforcement learning (RL) received attention, with discussions on how epsilon greedy policies and novel curiosity mechanisms can foster exploration. In the NLP field, challenges emerged with outdated coding knowledge in models; members stressed the importance of continuous retraining to maintain relevance.

- dstack as the On-Prem GPU Hero: The tool dstack was highly praised for simplifying the management of on-prem GPU clusters with CLI tools. Elsewhere, AI's role in PowerPoint slide content refinements was debated, with suggestions to use RAG or LLM models for learning and adapting from past presentations.

- Diverse Discussions Keep Engineers Engaged: Various technical threads illuminated the guild's landscape—a call for MIT license expertise for commercial purposes, using UNet models in computer vision, and OpenAI's Ilya Sutskever's recent industry update gained attention.

In essence, community dialogues peaked around novel AI tools and model fine-tuning within a vibrant tapestry of technological advancements and practical implementations.

Nous Research AI Discord

Streaming the Brain for Better AI: Engineers suggest that AI could adopt a streaming-like method akin to human thought processes, referencing the Infini-attention paper as a potential framework to improve LLM's handling of long context without overwhelming their finite working memory.

Beneath the Needles, a Tougher Benchmark: The Needle in a Needlestack (NIAN) benchmark has been introduced as a more challenging test for evaluating LLMs, posing a hurdle even for robust models like GPT-4-turbo; further info available on NIAN's website and GitHub.

Unveiling Nordic NLP Treasure, Viking 7B: Viking 7B emerges as the first open-source multilingual LLM for Nordic languages, while SUPRA is presented as a cost-effective approach to retrofitting large transformers by enhancing them into Recurrent Neural Networks to improve scaling.

Hermes 2 Ω: Merging LLMs for Superior Results: Nous Research heralds the release of Hermes 2 Ω, a model merger of Hermes 2 Pro and Llama-3 Instruct, refined further, showing promising results on benchmarks and accessible on HuggingFace.

Multimodal Meld and Finetuning: Meta's release of ImageBind raises the bar with a new AI model capable of joint embedding across various modalities, while discussions enter on the potential of finetuning existing models like PaliGemma for enhanced interactivity.

LM Studio Discord

- Soviet-Era Crypto Machine Inspires Perfumed Hallucination: A creative hallucination about a Soviet encryption machine called Fialka that supposedly used purple perfume was met with amusement in the general chat, as it underscored how LM models can sometimes whimsically deviate from reality.

- APUs Struggle Under Model Weight: While discussing the role of APUs in model performance, members concluded that llama.cpp does not leverage APUs any differently than CPUs during inference, which could affect decisions on hardware purchases for running large models.

- Iglu Ice-Cold Model Building: Frustration was aired over building imatrix for hefty models like llama3 70b on CPU, with users reporting several-hour-long build times and thermal throttling as notable challenges, demonstrating the practical constraints of current infrastructure.

- Hardware Heavyweights Flex Their Muscles: A high-end build comprising a 32 core threadripper, 512GB RAM, and an RTX6000 was shared, demonstrating the power of top-tier configurations to achieve a 0.10s time to first model token and a 102.45 token/sec generation speed.

- Software Snags and AVX Anomalies: Discussions surfaced around LM Studio’s compatibility and UI issues, with one user flagging that AVX1 systems are unable to run LM Studio (which requires AVX2), while others called for UI refinements to enhance user experience during complex server management tasks.

Modular (Mojo 🔥) Discord

- Mojo Rises in AI Development: Engineers shared resources for Mojo SDK learning, with links to the Mojo manual and the Mandelbrot tutorial. Mojo's advantages were highlighted, specifically its GPU flexibility across vendors and its potential for advancing hardware competition.

- Open Source Status Sparks Debate: The community debated Mojo's partial open-source nature, noting that its standard library is open, but the compiler and Max toolchain currently aren't. Excitement was shown for the compiler potentially going open source, while Max is unlikely to do so.

- Syntactical Snafus and Conditional Methods: Discussions revealed syntax inconsistencies in Mojo's documentation and issues with the

aliasdata structure iteration. Members praised the syntax for conditional methods, a new feature, although issues in locating changelog information about it were mentioned.

- Community Engages with Compiler and Contributions: The latest Mojo compiler build (

2024.5.1515) prompted discussions about non-deterministic self-test failures on macOS. Concerns about "cookie licking" in the repository were raised, suggesting smaller PRs as a solution for faster community contributions.

- Modular Spotlights Joe Pamer and Updates: Modular tweeted about their latest updates (no specific content referenced) and showcased Joe Pamer, the Engineering Lead for Mojo, through a blog post. No specifics on what was discussed in the tweets or the contents of the blog post were provided.

CUDA MODE Discord

Tensor Tug of War: Engineers discussed the use of torch.tensor Accessors versus directly passing tensor.data_ptr to kernels in CUDA, with some concerned about the potential unsigned char pointers and lack of clear documentation. The conversation pointed to PyTorch's CppDocs for using Accessors and the implications on tensor efficiency.

Solving Vexing CUDA Puzzles: Members tackled the dot product problem from the CUDA puzzle repo, noting the pitfalls of floating-point overflow with naive approaches, while reduction-based kernels maintain fp32 precision. A user's experiences and code snippets, including a floating-point overflow error, were shared on GitHub Gist.

Battle Against Non-Contiguous Tensors: Discussions on torch.compile issues and custom ops in PyTorch highlighted challenges with non-contiguous tensor strides and memory cache constraints. Engineers exchanged ideas on using tags in custom op definitions, as suggested by [torch library](https://pytorch.org/docs/main/library.html) and advocated for plans to reduce compilation times for torch.compile, pointing to conversations on the PyTorch forum.

Exploring Bitnet's Quantization Quest: Enthusiasm bubbled up for Bitnet 1.58, with calls for organizing it on platforms like GitHub and digging into training-aware quantization for linear layers and 2-bit kernels. The discussions recommended centralizing efforts in the Torch AO repository, and highlighted HQQ and BitBLAS as existing solutions for bitpacking and 2-bit GPU kernels.

Footnotes on Kernel Kinks and Gadgets: A user posted a link to an article about instant apply techniques without further context, while another shared wisdom with the GPU Programming Guide, and yet another user ran into a CUDA-related ONNXRuntimeError.

Torching Into Precision and Performance: The discussions have converged on a collective effort to recalibrate CUDA streams, with suggestions on wiping the slate clean and starting over, resulting in significant discourse and corresponding GitHub Pull Requests. The elusive dream of direct NVMe to GPU DMA transfer was also mentioned, with a nod to the ssd-gpu-dma repository.

LlamaIndex Discord

Vertex AI Welcomes LlamaIndex: LlamaIndex teamed up with Vertex AI for a new RAG API, aiming to enhance users' ability to implement retrieval-augmented generation models on Vertex’s cloud platform. The community can explore the announcement via LlamaIndex's Twitter post.

GPT-4o Quartz gets Friendly with LlamaIndex: The update to LlamaIndex's create-llama now incorporates GPT-4o, providing an intuitive way to create chatbots using a simple Q&A format over user data. For additional information, there's a comprehensive breakdown on LlamaIndex’s Twitter.

LlamaParse Merges with Quivr: LlamaIndex has forged a collaboration with Quivr, resulting in LlamaParse—a tool designed to parse multifaceted document formats (.pdf, .pptx, .md) by leveraging advanced AI. A link to Twitter provides more insights on this development.

UI Tweaks Spark Joy in LlamaParse: The LlamaIndex team unveiled major enhancements to the LlamaParse UI, promising a broadened suite of functionalities for users. The GUI improvements can be seen in the latest Twitter update.

Empower Your SQL with the Right Model: The #general channel saw concerns on choosing the right embedding models for SQL tables, with users suggesting a glance at models on the MTEB Leaderboard. However, a snag was noticed since these models are generally text-centric and may not cater specifically to SQL data.

Chat through Docs with RAG: In the #ai-discussion channel, a user needed assistance for integrating Cohere AI's retrieval-augmented generation (RAG) capabilities within Llama, aspiring to create a "Chat with your docs" application. They sought community advice on methods and resources for an effective implementation.

LAION Discord

AI Powers Up the Grid: There's a buzz about the energy demands of AI, with a highlight on how a fleet of 5000 H100 GPUs can idle at a massive 375kW. This speaks volumes about the increasing energy footprint of AI technologies.

Stable Diffusion Goes Native on Mac: A project named DiffusionKit, in partnership with Stability AI, has successfully brought Stable Diffusion 3 on-device for Mac users, signaling advances in accessibility to powerful AI tools. The news arrived via a tweet, raising expectations for the open-source release.

The Open Source Compromise: A heated debate simmered around the choice between the innovative spirit of open-source ventures and the financial lure of proprietary companies, intensified by concerns over restrictive non-compete clauses, becoming more focused in light of the FTC's recent rule banning such agreements (FTC announcement).

GPT-4o Leads the Multimodal Revolution: Discussion pointed towards GPT-4o's prowess in multimodal functions, including image generation and editing, suggesting a growing consensus that multimodal models stand at the forefront of AI development.

Breakthroughs in Video Dataset and Sampling Approaches: From unveiling VidProM, a substantial dataset to accelerate text-to-video research, found in an arXiv paper, to a novel approach in overcoming the limitations of bilinear sampling for neural networks, these discussions underscored the relentless pursuit of innovation. Meanwhile, Google's Imagen 3 is making waves as a leading image generation model, with a role in creating synthetic data sets discussed eagerly by community members (Imagen 3 information).

Eleuther Discord

- Epinets Pose a Tricky Balance: Epinet usage was scrutinized for their tuning complexities and potential as a perturbative bias. A notable quote emphasized the notion: "The epinet is supposed to be kept small though, so I assume the residual just acts as an inductive bias..."

- Transformer Tidbits and Model Insights: Technical talks revolved around transformer backpropagation techniques and a shared DCFormer GitHub repository, with some examining the execution of transformer models concerning path composition and associativity challenges backed by recent research.

- From Scaling Laws to AGI Aspirations: There were aspirations woven into discussions around meta-learning in symbolic space and AGI potential, alongside practical takeaways from GPT-4 post-training Elo score improvements.

- GPT-NeoX Conversion Confounded by Bugs: The

convert_neox_to_hf.pyscript faced bugs when handling different Pipeline Parallelism configurations, with a fix proposed by a contributor. Incompatibilities involvingrmsnormled to advice for trying a different configuration file suitable for Huggingface.

- Refining Model Evaluation and Conversion: In the realm of competition and model comparison, the use of

--log_sampleswas shared to facilitate the extraction of multiple-choice answer metrics, which is critical for AI models' performance analysis.

Interconnects (Nathan Lambert) Discord

Neural Networks Agree on Reality: Members engaged in discussions suggesting that neural networks, despite varying objectives and data, are displaying convergence towards a universal statistical model of reality within their representation spaces. Phillip Isola's recent insights support this, as shared through his project site, academic paper, and Twitter thread, showing how large language models and vision models begin sharing representations as they scale.

OpenAI Tokenization Enigma: The community pondered if OpenAI's tokenizer could be "fake," speculating that different modalities would likely necessitate distinct tokenizers. Despite skepticism, some members advocated for giving the benefit of the doubt, suggesting detailed methodologies may exist even within seemingly chaotic projects.

Anthropic Swings to Product Focus: Transitioning to a product-based approach, Anthropic embraces the necessity for marketable deliverables to enhance data refinement, amidst discussions of broader challenges facing AI organizations such as OpenAI and Anthropic, including the sustainability of their valuations and their dependence on external infrastructures.

AGI Timing Tug-of-War: Dialogues on the plausibility of approaching AGI, prompted by a Dwarkesh interview, revealed a stark divide in the community, ranging from optimism to criticism on the practicality and impact of AGI timeline predictions.

Transparency in AI Model Metrics Called Into Question: The community flagged the unexplained drop in GPT-4o’s ELO ratings and reduction in LMsys evaluation detail, sparking discussions on the need for clear communication and consistent update protocols. Resources and perspectives on this issue were exchanged through various tweets and video content.

LangChain AI Discord

- Get Streamlined Token Output with LangChain: LangChain's

.astream_eventsAPI provides the means for custom streaming with individual token outputs that was expected from.streamwithAgentExecutor. The detailed streaming documentation sheds light on the process.

- Solve Jsonloader Compatibility Woes: A user highlighted a fix for Jsonloader's inability to install jq schema for JSON parsing on Windows 11; details can be found in the issue tracker for Langchain, Issue #21658.

- Engineer Crafty Strategies for Bot Memory: Strategies for endowing chatbots with memory to maintain context between conversations were discussed, including tracking chat history and introducing memory variables within prompts.

- Cut Through Service Interruptions & Rate Limits: Members conversed about disruptions caused by "rate exceeded" errors and server inactivity leading to workflow inefficiencies; questions about deploying revisions and examining patterns related to server inactivity were also raised (no URLs provided).

- Insightful Tutorials & Crafty Projects Shared: An instructional video was shared on creating a universal web scraper agent, and a user showcased their integration of py4j for handling crypto transactions in Langserve backends, as well as their implementation of an innovative real estate AI tool combining LLMs, RAG, and interactive UI components (LinkedIn: Abhigael Carranza, YouTube: Real Estate AI Assistant demo).

OpenRouter (Alex Atallah) Discord

- Hindi Chatbot Boost: The Hindi 8B Chatbot Model named "pranavajay/hindi-8b" has been released with 10.2B parameters, showing promise for chatbot and language translation applications. Its release adds a new layer of capability for Hindi NLP tasks.

- Mobile Chatbots Get Friendlier: ChatterUI, a minimalistic UI for chatbots on Android, has been launched, designed specifically to be character-focused and compatible with OpenRouter backends. Developers can explore and contribute to ChatterUI through its GitHub repository.

- Invisibility Cloaks Your MacOS: The new MacOS Copilot titled Invisibility integrates GPT4o, Gemini 1.5 Pro, and Claude-3 Opus, featuring a video sidekick and plans for voice and memory enhancements. The community can expect an iOS version soon, as highlighted in its announcement.

- Lepton Lends a Hand to WizardLM-2: Suggestions were made to switch to Lepton for the WizardLM-2 8x22B Nitro to utilize OpenRouter's Text Completion API, enhancing performance due to Lepton's capabilities despite it being removed from some lists over issues.

- Efficient Context Management Unpacked: Google's InfiniAttention was cited for its ability to handle large token contexts in Transformers, prompting discussions on memory and performance efficiency in LLMs, backed by a relevant research paper.

OpenInterpreter Discord

- Hacking Pays Off: An individual managed to bypass the gatekeeper dialog of OpenAI's desktop app, resulting in an invitation to a private Discord channel to participate in its development.

- Realizing GPT-4o's Limitations: Users report that while attempting to utilize GPT-4o's image recognition feature within OpenInterpreter (OI), it fails post-screenshot phase, highlighting a gap in functionality.

- Performance Dilemma: Although dolphin-mixtral:8x22b processes at a sluggish rate of 3-4 tokens per second, it's been identified as effectively performing, with the faster CodeGemma:Instruct serving as a balanced alternative.

- OI Feature Enrichments & Debugging Avenues: Suggestions were made for more informative LED feedback on hardware devices, and a new TestFlight app (TestFlight link) for iOS debugging was introduced, assisting in resolving audio output issues.

- Setup Struggles and Solutions: Within O1's framework, members shared technical challenges involving the grok server configuration and model compatibility, with proposed fixes including server setups and usage of tools like Poetry for Linux installations. Links to resources like setup guides and GitHub repositories (01/software at main) were shared for community support.

Datasette - LLM (@SimonW) Discord

Google I/O Overlooks LLM Reliability: Engineers in the guild highlighted the absence of discussion on LLM reliability issues at Google I/O, expressing concern for the lack of acknowledgment on the matter by key presenters.

"Sober" Take on AI: A concept for a "Sober AI" showcase was proposed to display practical, reliable AI without the hype, aiming to set realistic expectations for large language model applications.

Transforming AI: The group discussed the potential of rebranding AI as "transformative" instead of "generative" to better reflect its capabilities in altering and processing information, suggesting that this could lead to a more accurate and productive discourse.

Prompt Caching For Efficiency: Technical discussion touched on using Gemini's prompt caching to lower the cost of token usage by maintaining prompts in GPU memory, albeit with an operational cost of $4.50 per million tokens per hour.

Model Switching And Desktop Client Concerns: The technical community raised concerns about switching between LLMs mid-conversation and the potential data integrity issues it might cause. Additionally, worries were voiced that SimonW's Mac desktop solution had been abandoned, prompting discussions on alternatives for a seamless experience.

Latent Space Discord

- OpenAI Snags Google Whiz for Search Showdown: OpenAI's strategic recruitment of Shivakumar Venkataraman, a former Google heavyweight, accelerates their ambition to rival Google with their own search engine.

- Model Merging Mastery: Pioneering work by Nous Research in model merging continues, with conversations highlighting "post-training" as an umbrella term for techniques including RLHF (Reinforcement Learning from Human Feedback), fine-tuning, and quantization, showing Nous' research direction.

- Watching and Learning from Dwarkesh Patel's Dialogues: Dwarkesh Patel’s latest podcast episode received mixed reactions, from praise for big-name guests to criticism for a perceived lack of interviewer engagement, with the episode being termed "mid" but worthy for its guest list.

- The Rich Text Translation Conundrum: The community delved into complexities of translating rich text, suggesting HTML as an intermediary to ensure span semantics are not lost across languages.

- Hugging Face's Generous GPU Gesture: In an effort to democratize AI development, Hugging Face has committed $10 million in GPUs to support smaller developers, academia, and startups, aiming to decentralize AI innovation.

- Fresh Podcast Episode Alert: Swyxio dropped a link to a new podcast episode, adding to the team's continuous consumption and discussion of industry insights.

OpenAccess AI Collective (axolotl) Discord

Falcon Versus LLaMA in Licensing Showdown: Falcon 11B and LLaMA 3's licenses sparked debate, with concerns raised about Falcon's Acceptable Use Policy updates potentially being unenforceable. Original prompt fidelity is key when applying LORA to models like LLaMA 3.

Docker Dilemmas and Data Discussions: A Docker setup for 8xH100 PCIe was successful but the SXM variant status was unclear. Meanwhile, the STEM MMLU dataset has been expanded, creating a more detailed benchmark for STEM-related AI evaluation.

Tiny But Mighty: TinyLlama Issues and Fixes: TinyLlama presented training troubles, necessitating manual launches with accelerate. Members are seeking fixes for this discrepancy, which seems to be a current challenge.

Cross-Format Conversations: The Alpaca format for training chatbots faced criticism for its inconsistent follow-up questions, driving the preference for maintaining consistent chat formats during AI training.

Hunyuan-DiT Throws Its Hat in the Ring: Attention was drawn to the Hunyuan-DiT model, a new multi-resolution diffusion transformer tailored for Chinese language processing and detailed in their arXiv paper.

Using the Right Tokens: Queries related to LLaMA 3 and ChatML tokenization were resolved with confirmation that ChatML's ShareGPT format is compatible without requiring additional special tokens.

AI Stack Devs (Yoko Li) Discord

- AI Town Explores New Frontiers: Discussions on AI Town highlighted the interest in an API for agent control, notably without support for agent-specific LLMs. Members are keen on exploring various API levels, including those compatible with OpenAI, and a mention of a potential Discord iframe with multiplayer capabilities was notably enthusiastic, citing a ready-to-use starter template for building Discord activities.

- NPC Tuning for Enhanced Performance: In AI Town development, suggestions to improve performance by reducing NPC activities were made, focusing on cooldown constants that can affect NPC behavior. The upcoming launch of an AI Reality TV Platform, which is open to community-contributed custom maps, was announced.

- Community Contributions and Feature Hype: The community's willingness to contribute to projects like the Discord iframe for AI Town reflects a proactive approach, hinting at a collaborative effort to introduce new features like multiplayer activities.

- Sequoia's PMF Framework Sparks Interest: An article on Sequoia Capital's PMF Framework was shared, detailing three types of product-market fit to assist founders with market positioning, which could provide valuable insights for product-focused AI engineers.

- New Member Gets a Helping Hand: A new member received help from the community with avatar customization, enhancing their personal experience in the virtual space and fostering a helpful community spirit.

tinygrad (George Hotz) Discord

CORDIC Conquers Complexity: Engineers discussed the advantages of the CORDIC algorithm over Taylor series for calculating trigonometric functions, addressing simplicity and speed benefits. A Python implementation and approaches for handling large argument values were deliberated, expressing concerns over precision and efficacy in machine learning applications.

Taming Trigonometry: The conversation shifted towards efficient ways to reduce arguments in trigonometric functions, ensuring precise results in an acceptable range (-π to π or -π/2 to π/2). Potential optimization paths for GPUs and fallbacks using Taylor approximations were considered for tackling large trigonometric values.

Efficient Visualization Utilized for Shape Indexing: A visualization tool to aid in understanding shape expressions in tensor reshaping operations was introduced, addressing the challenge of complex mappings. This tool is public and can be found here.

Exploring TACO for Code Generation: The community evaluated TACO, a code generator for tensor algebra, as an efficient resource for tensor computations. An exploration into using custom CUDA kernels for large tensor reductions in Tinygrad was also suggested for direct result accumulation.

Seeking Clarity on Tinygrad Operations: Clarification was sought regarding uops in a compute graph, particularly the DEFINE_GLOBAL operation and the output buffer tag, emphasizing a need for clearer documentation in low-level operations. Additionally, UseAdrenaline was recommended as a learning aid for understanding various repositories, including Tinygrad.

MLOps @Chipro Discord

Catch-Up with Members at Data AI Summit: Engineer colleagues are coordinating an informal meet-up during the Data AI Summit, scheduled for June 6-16 in the Bay Area. The suggestion has sparked mutual interest among members for an in-person connection.

Put a Pin in Monthly Casuals: The regularly scheduled casual event organized by Chip is on hold for the next few months, leaving participants to wonder about when the next social mixer might occur.

Interactive Learning Opportunity at Snowflake Dev Day: Members of the Discord have received an invitation to visit a booth at Snowflake Dev Day on June 6, promising potential insights into Snowflake's integration with data science workflows.

NVIDIA Ups the Ante with Developer Contest: There's excitement about NVIDIA & LangChain's Generative AI Agents Developer Contest, which includes the NVIDIA® GeForce RTX™ 4090 GPU among its rewards, even if geo-restrictions have dampened the spirits for some.

Exploring the Evolution of AI Hardware: An in-depth article was shared, dissecting the historical development of machine learning microprocessors and projecting future trends, noting the transformative impact of transformer-based models with a nod to Nvidia's soaring valuation. It forecasts exciting advances for NVMe drives and Tenstorrent technology, but posits a cooling period for GPUs in the mid-term future.

Cohere Discord

- Cohere Reranker Functional, Desires Highlight Reel: Users achieved impressive results with the rerank-multilingual-v3.0 model from Cohere but would appreciate a feature similar to ColBERT that can highlight key words relevant to the retrieval task.

- Connectors Explained, but PHP Client Queries Remain: Discourse clarified that Cohere connectors are meant to integrate with data sources, yet the community is seeking advice for a solid PHP client for Cohere, with one untested option being cohere-php on GitHub.

- Toolkit Wizardry and Reranking Wonders Questioned: Inquiry about the Cohere application toolkit underscored interest in its scalability features for production usage while the community expressed curiosity about why Cohere's reranking model outperforms other open-source alternatives.

DiscoResearch Discord

Ilya Sutskever Bids Farewell to OpenAI: The announcement of Ilya Sutskever's departure from OpenAI ignited debate over the organization's appeal to alignment researchers, stirring concerns about its future research direction.

GPT-4-turbo Meets its Match with NIAN: The Needle in a Needlestack (NIAN) benchmark presents a new level of challenge for context-sensitive responses in large language models, with reports that "even GPT-4-turbo struggles with this benchmark." Explore the code and the website for details.

LLM Perf Enthusiasts AI Discord

- AI Studio Ambush on the Hunt for Senior Talent: Ambush is looking for a remote senior fullstack web developer to craft intuitive UX/UI for DeFi products, with an emphasis of 70% frontend and 30% backend duties. Interested AI engineers should explore the Ambush job listing, which offers a $5k referral bonus for successful hires.

Mozilla AI Discord

- Hyperlink Hiccup in Llamafile: Engineers report Markdown hyperlinks not rendering into HTML in Mozilla's llamafile project, with suggestions made to open a GitHub issue to resolve this code snag.

- Timeout Troubles Plague Private Assistant: AI Engineers faced a httpx.ReadTimeout error, while running Mozilla's private search assistant, that terminated the generation of embeddings at 9%, sparking a discussion on extending the timeout settings.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Skunkworks AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (1022 messages🔥🔥🔥):

- Rapid Fire Opinions on GPT4o: Members shared quickfire impressions about the performance of GPT4o, with comments like "Crazy fast" and the downside of not being capable of image generation. Discussion link.

- Insight on Instruction vs. Base Models for Finetuning: Theyruinedelise advised, "If you have a large dataset always go base. If small dataset, go instruct," with sources provided for further reading on Medium.

- Unsloth Supports Qwen and Continuous Improvements: Theyruinedelise announced support for Qwen and shared updated Colab notebooks, recommending an installation update: "!pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git".

- Datasets for AI Training: lh0x00 released new bilingual datasets for English-Vietnamese translation on Huggingface, facilitating ease of use with tools like unsloth, transformers, and alignment-handbook.

- Financial Report Extraction Study: Preemware shared a study comparing RAG and finetuning methods, showing substantial performance drops with RAG for models like Mistral and Llama 3, detailed on Parsee.ai.

- WizardLM (WizardLM): no description found

- mixedbread-ai (mixedbread ai): no description found

- Skorcht/schizogptdatasetclean · Datasets at Hugging Face: no description found

- Reddit - Dive into anything: no description found

- unsloth (Unsloth AI): no description found

- lamhieu/translate_tinystories_dialogue_envi · Datasets at Hugging Face: no description found

- Reddit - Dive into anything: no description found

- AI Unplugged 10: KAN, xLSTM, OpenAI GPT4o and Google I/O updates, Alpha Fold 3, Fishing for MagiKarp: Insights over Information

- Tweet from VCK5000 Versal Development Card: The AMD VCK5000 Versal development card is built on the AMD 7nm Versal™ adaptive SoC architecture and is designed for (AI) Engine development with Vitis end-to-end flow and AI Inference development wi...

- cognitivecomputations/Dolphin-2.9 · Datasets at Hugging Face: no description found

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- lamhieu/alpaca_gpt4_dialogue_vi · Datasets at Hugging Face: no description found

- lamhieu/alpaca_gpt4_dialogue_en · Datasets at Hugging Face: no description found

- finRAG Dataset: Deep Dive into Financial Report Analysis with LLMs: Discover the finRAG Dataset and Study at Parsee.ai. Dive into our analysis of language models in financial report extraction and gain unique insights into AI-driven data interpretation.

- Tweet from Should AI Be Open?: I. H.G. Wells’ 1914 sci-fi book The World Set Free did a pretty good job predicting nuclear weapons:They did not see it until the atomic bombs burst in their fumbling hands…before the l…

Unsloth AI (Daniel Han) ▷ #random (27 messages🔥):

- Unsloth appears in fine-tuning tutorial: A user excitedly mentioned that the Replete-AI code_bagel dataset uses Unsloth in their tutorial for fine-tuning a Llama. "I am so glad Unsloth is getting so popular."

- High losses post-tokenizer fix in Llama3: A user reported that after fixing tokenizer issues, their Llama3 model showed losses double what they were before. Further experimentation without the EOS_TOKEN did not resolve the issue, leading to continued high training losses.

- RAM issues with ShareGPT dataset conversion: A user shared that their 64GB of RAM was insufficient for converting the ShareGPT dataset, while another user, likely Rombodawg, mentioned that the code should normally require about 10GB of RAM. They discussed this over DMs to sort out the code issues.

Link mentioned: Replete-AI/code_bagel · Datasets at Hugging Face: no description found

Unsloth AI (Daniel Han) ▷ #help (448 messages🔥🔥🔥):

- Dataset Issues with JSON Format: Multiple users, including mapler and noob_master169, troubleshoot a dataset generation error. The suggestion to load the JSON in pandas and then convert to a dataset was offered as a fix.

- Dataset Generation Troubleshooting: theyruinedelise confirmed that the issue is likely a dataset format problem. They also discussed potential solutions and confirmed the approach was tackling the root problem.

- GGUF Conversion Errors: Multiple users, like leoandlibe and jiaryoo, discuss problems with llama.cpp conversions and GGUF files. theyruinedelise and others identified PEFT updates as a potential cause and recommended downgrading.

- Using GPT-3 for Custom Queries: just_iced faced issues when querying a driver’s manual using Llama 3. After troubleshooting with other members, they resolved their issue by transitioning to using Ollama instead.

- Model Compatibility and Issues: Questions about compatibility and installation of models like Unsloth, Llama 3, and issues regarding context window limitations were discussed. starsupernova gave specific steps to resolve the issues, such as changing installation instructions in Colab.

- Google Colab: no description found

- unsloth/mistral-7b-instruct-v0.2 · Hugging Face: no description found

- In-depth guide to fine-tuning LLMs with LoRA and QLoRA: In this blog we provide detailed explanation of how QLoRA works and how you can use it in hugging face to finetune your models.

- Skorcht/orthonogilizereformatted at main: no description found

- Tweet from Nicolas Mejia Petit (@mejia_petit): @unslothai running unsloth in windows to train models 2x faster than regular hf+fa2 and with 2x less memory letting me do a batch size of 10 with a sequence length of 2048 on a single 3090. Need a tut...

- Blade Runner GIF - Blade Runner Blade Runner - Discover & Share GIFs: Click to view the GIF

- Skorcht/syntheticdata · Datasets at Hugging Face: no description found

- RuntimeError: Unsloth: llama.cpp GGUF seems to be too buggy to install. · Issue #479 · unslothai/unsloth: prerequisites %%capture # Installs Unsloth, Xformers (Flash Attention) and all other packages! !pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git" !pip install -...

- unilm/bitnet/The-Era-of-1-bit-LLMs__Training_Tips_Code_FAQ.pdf at master · microsoft/unilm: Large-scale Self-supervised Pre-training Across Tasks, Languages, and Modalities - microsoft/unilm

- Skorcht/thebigonecursed · Datasets at Hugging Face: no description found

- Welcome to PyPDF2 — PyPDF2 documentation: no description found

- I got unsloth running in native windows. · Issue #210 · unslothai/unsloth: I got unsloth running in native windows, (no wsl). You need visual studio 2022 c++ compiler, triton, and deepspeed. I have a full tutorial on installing it, I would write it all here but I’m on mob...

- Mechanistic Interpretability — Neel Nanda: Blog posts about Mechanistic Interpretability Research

- KeyError: 'I8' when trying to convert finetuned 8bit model to GGUF · Issue #4199 · ggerganov/llama.cpp: Prerequisites Hi there, I am finetuning the model https://huggingface.co/jphme/em_german_7b_v01 using own data (I just replaced the questions and answers by dots to keep it short and simple). The m...

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- GPU Support (NVIDIA CUDA & AMD ROCm) — SingularityCE User Guide 4.1 documentation: no description found

Unsloth AI (Daniel Han) ▷ #showcase (5 messages):

- AI News Recognition Enjoyed by Members: A member expressed joy at recognizing the Discord bot's achievements being highlighted in AI News. They joked about the cyclical nature of recognition, saying, "AI News mentioning another AI News mention".

- Positive Feedback for Summarization Feature: A member was enthusiastic about the AI summarization abilities, appreciated swyxio’s highlight, and showed gratitude for its helpfulness. Another member clarified that the summarization was from a different conversation, unrelated to AI News.

Unsloth AI (Daniel Han) ▷ #community-collaboration (1 messages):

starsupernova: Oh fantastic - if u need help - ask away!

Stability.ai (Stable Diffusion) ▷ #general-chat (966 messages🔥🔥🔥):

- SD3 Release Doubts and High Prices: Discussions revolving around the release timeline and quality of SD3, with some users skeptical about its release and quality. There's speculation that Stability AI is holding SD3 to drive sales; one member mentioned "SD3 will be released," maintaining hope despite uncertainties.

- GPU Debate – 4060 TI vs 4070 TI: Members debated the performance between 4060 TI 16GB and 4070 TI 12GB for gaming and AI tasks. One favored the 4060 TI for ComfyUI, while another highlighted the 4070 TI's superior gaming performance.

- API Alternatives and Usage: Several inquiries and suggestions about using API alternatives like Invoke or Forge for model training and asset design. One user praised the efficiency of Forge and described it as an "exactly the same" UI as A1111.

- Benchmark Website Shared: A user shared a benchmark site for evaluating GPU performance. The site offers comprehensive data on models like 1.5 and XL Models, with users directed to filter results for specific GPUs like the Intel Arc a770.

- Frustrations with Economic Inequality Expressed: Intense debates around economic struggles and inequality, touching on capitalism, technological advancements, and historical injustices. Some argued that economic disparity and the pursuit of wealth come at the cost of morality and personal well-being.

- SD WebUI Benchmark Data: no description found

- ProGamerGov/synthetic-dataset-1m-dalle3-high-quality-captions · Datasets at Hugging Face: no description found

OpenAI ▷ #ai-discussions (280 messages🔥🔥):

- GPT-4o faces criticism for performance: Multiple users, including this Reddit post, have reported that GPT-4o performs worse than its predecessors, often introducing errors in tasks like coding and answering less effectively than GPT-2.

- Discussing GPT-4o's Vision Capabilities: Users like

vl2uare experimenting with GPT-4o's ability to analyze medical images and pushing the limits of its "identity," but results are mixed and sometimes lead the model to provide libraries rather than direct analyses. - Hyperstition as a concept for AI: The notion of "hyperstition" was explored, exemplified by how AI can be nudged into new identities and beliefs through reinforcement. AI's role in confirming self-fulfilling prophecies was discussed in the context of its training and interaction patterns.

- Future job market and impact of AI: Users exchanged views on AI potentially rendering many jobs obsolete, leading to speculation on how humans will adapt and find new ways of living and working in an AI-dominated future. Some believe AI will create new job opportunities, while others worry about mass unemployment.

- Concerns about AI's emotional realism: Debates emerged about whether AI should remain neutral and professional or simulate human-like emotional responses. The balance between creating a relatable AI versus an efficient, emotionless assistant was a key discussion point in the community.

Link mentioned: Reddit - Dive into anything: no description found

OpenAI ▷ #gpt-4-discussions (103 messages🔥🔥):

- Confusion over GPT-4o Accessibility: Many users were unsure about the availability of GPT-4o, asking if it was free or exclusive to paid accounts. Clarifications were given that GPT-4o is rolling out over time and currently prioritized for paid users, as detailed here.

- Issues with ChatGPT-4o Functionality: Several users reported issues with the new ChatGPT-4o model, including slow responses and persistent topic repetition. Some found it lacked certain features like voice interaction or image generation that were expected from the demos.

- Custom GPTs and Voice Features Concerns: Users inquired if custom GPTs would utilize GPT-4o and discussed features like voice mode, which is currently rolling out to Plus accounts only. It's noted that custom GPTs will switch to GPT-4o in a few weeks according to the official FAQ.

- Technical Glitches with Updates and Subscriptions: Multiple participants expressed frustration with technical issues such as failed app updates, subscription problems, and missing features like voice options. The expectation is that these are temporary glitches due to the high demand and ongoing updates.

- Interaction and Token Count Clarification: There was a discussion about the token limits and proper functionality measurement of different GPT versions, with a detailed explanation provided in a token counting guide. Users were advised to check GPT response times to identify the underlying model.

OpenAI ▷ #prompt-engineering (192 messages🔥🔥):

- Members evaluate differences between GPT-4 and GPT-4o: Multiple users discussed the capabilities of GPT-4 vs. GPT-4o across a range of prompts, such as understanding real-world scenarios and solving puzzles. They noted subtle differences, with GPT-4o sometimes showing better grounding in real-world contexts.

- Prompt engineering techniques explored: Strategies for improving chatbot responses were shared, including using politeness, encouragement, and context-specific instructions to elicit better outputs. A user shared insights from studies that showed how motivator phrases could elevate the performance of AI models.

- Challenges with custom GPTs and maintaining prompt fidelity: Users discussed difficulties in getting GPTs to follow custom instructions, particularly around avoiding complicated calculations. Suggestions included focusing solely on positive instructions and providing clear, hierarchical guidance.

- Creative applications and outputs: Users experimented with creative and nuanced prompts to test GPT-4 and GPT-4o's capabilities, such as cryptic message decryption and complex storytelling scenarios. There were discussions around the effectiveness of different prompting styles in eliciting desired AI behaviors.

- Practical concerns about usage limits and subscriptions: Some members debated the utility of the ChatGPT Plus subscription given the message limits, especially for heavy use cases like software engineering. Others highlighted different usage strategies to optimize the subscription benefits.

Link mentioned: ChatGPT can now access the live Internet. Can the API?: Given the news announcement I am wondering if the API now has that same access to the Internet. Thanks in advance!

OpenAI ▷ #api-discussions (192 messages🔥🔥):

- Exploring AI Prompt Engineering: Discussion revolved around the effectiveness of different prompt strategies for GPT models, touching on how politeness, encouragement, and specific instructions can improve AI responses. Multiple techniques like "EmotionPrompt" and asking AI to act as experts were explored to enhance performance.

- Testing GPT-4 vs. GPT-4o: Members conducted comparative tests to identify differences between GPT-4 and GPT-4o, focusing on tasks like deciphering codes and understanding real-world concepts. Subtle differences were noted, particularly in how GPT-4o handles multimodal inputs.

- Addressing Response Validity: A recurring theme was the AI's occasional production of incorrect or irrelevant data. Strategies discussed included enforcing stricter data source usage instructions and guiding the model's attention more effectively.

- Character Role Playing for Enhanced Interaction: There was interest in using detailed prompts to create AI characters with distinct personas for dynamic interactions. Markdown formatting was suggested to structure these prompts effectively.

- Bridge Crossing Problem & Image Description: The classic "bridge crossing with a flashlight" problem and an image description task were used to challenge the AI. Comparisons were made between different model’s abilities to interpret and respond to these puzzles.

Link mentioned: ChatGPT can now access the live Internet. Can the API?: Given the news announcement I am wondering if the API now has that same access to the Internet. Thanks in advance!

OpenAI ▷ #api-projects (4 messages):

- Plug & Play AI Voice Assistant available: A Plug & Play AI Voice Assistant is heavily promoted for its simplicity and ease of use. "Ready in 10 min!" is highlighted as a key benefit.

- Feedback invitation for Plug & Play AI: Users are encouraged to "try it and share your feedback" to improve the product. This open invitation points to community-driven enhancement efforts.

Perplexity AI ▷ #general (477 messages🔥🔥🔥):

- GPT-4o generates buzz: Users confirm that GPT-4o is available on Perplexity showcasing faster responses and better performance compared to GPT-4 Turbo. Many still face issues as it rolls out gradually.

- Perplexity nabs the research crown: Researchers delight in Perplexity’s accuracy, sourcing, and search capabilities over ChatGPT, making it their tool of choice for detailed inquiries and current information.

- Generating text in AI images troubles users: Users struggle with "text gibberish" in DALL-E generated images, prompting discussions on prompt structure. Tips include placing text instructions upfront and generating multiple versions for better results.

- Voice features glitter on iOS: The voice mode in Perplexity Pro's iOS app impresses with natural interactions amid anticipation for updates on Android. Users appreciate the ability to have long, uninterrupted conversations for ease of use.

- Billing hiccups for Perplexity Pro: Users experience payment issues while subscribing to Perplexity Pro. Support is advised via support@perplexity.ai for assistance.

- What is phidata? - Phidata: no description found

- ChatGPT: Introducing ChatGPT for iOS: OpenAI’s latest advancements at your fingertips. This official app is free, syncs your history across devices, and brings you the newest model improvements from OpenAI. ...

- no title found: no description found

- GitHub - kagisearch/llm-chess-puzzles: Benchmark LLM reasoning capability by solving chess puzzles.: Benchmark LLM reasoning capability by solving chess puzzles. - kagisearch/llm-chess-puzzles

- GitHub - openai/simple-evals: Contribute to openai/simple-evals development by creating an account on GitHub.

- I <3 Coffee GIF - Jimcarrey Brucealmighty Coffee - Discover & Share GIFs: Click to view the GIF

- no title found: no description found

- Gift Present GIF - Gift Present Surprise - Discover & Share GIFs: Click to view the GIF

Perplexity AI ▷ #sharing (12 messages🔥):

- Finetuning link shared: A member posted a link to a Finetuning search result on Perplexity AI. Check out the link here.

- Aztec Stadium page shared: A member shared a link to a Perplexity AI page about Aztec Stadium. View the page here.

- Recap of Google: An intriguing link about a Google recap was posted. Explore the recap here.

- Latest cooking trends: A member shared a link about the latest cooking trends. Dive into the trends here.

- Anthropic hires Instagram: A link discussing Anthropic hiring from Instagram was shared. Read more on this topic here.

Perplexity AI ▷ #pplx-api (10 messages🔥):

- Beta Access Plea for API Citations: One user requested beta access to Perplexity API's citation feature, emphasizing its importance for their business. They acknowledged potential backlogs but stressed the significance of gaining access to close deals with key customers.

- llama-3-sonar-large-32k-online Searches the Web: A user inquired if the llama-3-sonar-large-32k-online model API performs web searches similar to Perplexity.com. It was confirmed that this model does search the web.

- Request for Stable API Model Aliases: A user expressed frustration over frequent changes in model names and requested the establishment of stable aliases that would always point to the newest models when older ones are deprecated.

- Increased API Latency Today: A user noted an increase in latency when making API calls to Perplexity on the current day.

- Autocorrect Issue with Prompts: A user reported an issue where Perplexity autocorrects prompts incorrectly, leading to inaccurate responses. They are seeking suggestions to tweak prompts to avoid this problem.

HuggingFace ▷ #announcements (3 messages):

- Terminus Models Updated in HuggingFace: The Terminus models have a new updated collection by a community member. These updates provide new functionalities and improvements.

- OSS AI+Music Explorations on YouTube: Check out more AI and music explorations by a community member on YouTube. These explorations offer innovative ways to combine AI and music.

- Manage On-Prem GPU Clusters Efficiently: Learn a new way to manage on-prem GPU clusters. This method provides enhanced control and scalability for intensive computational tasks.

- Understanding AI for Story Generation: Engage with AI in story generation through a detailed article and a related Discord event. The discussion will delve into the applications and implications of AI in creative narratives.

- OpenGPT-4o Introduced: Explore the new OpenGPT-4o. It accepts text, text+image, and audio inputs, and can generate multiple forms of output including text, image, and audio.

- SimpleTuner/documentation/DREAMBOOTH.md at main · bghira/SimpleTuner: A general fine-tuning kit geared toward Stable Diffusion 2.1, DeepFloyd, and SDXL. - bghira/SimpleTuner

- Vi-VLM/Vista · Datasets at Hugging Face: no description found

HuggingFace ▷ #general (261 messages🔥🔥):

- OpenAI's Ilya Sutskever news stirs reaction: Discussion sparked by a tweet about Ilya Sutskever's departure (link). Another member shared a related tweet from Jan Leike..

- Tips on React for fetching PDFs: A user sought advice on how to use React to grab PDFs listed by GPT. Community engagement followed with various users chiming in.

- Challenges with PaliGemma models: Users discussed issues and solutions related to using the PaliGemma models, including links to code examples and HuggingFace collections. One user highlighted incorrect results due to

do_sample=False. - Exploring ZeroGPU and model deployment: Members discussed the capabilities and beta access of ZeroGPU for deploying machine learning models (link). Spaces built on ZeroGPU were referenced as examples.

- MIT License utilization on HuggingFace platform: A user sought clarity on using MIT-licensed models for commercial purposes on the HuggingFace platform. Another user confirmed it should be fine, referencing the MIT License documentation (link).

- MIT License: A short and simple permissive license with conditions only requiring preservation of copyright and license notices. Licensed works, modifications, and larger works may be distributed under different t...

- — Zero GPU Spaces — - a Hugging Face Space by enzostvs: no description found

- Inference Endpoints - Hugging Face: no description found

- Welcome to the Community Computer Vision Course - Hugging Face Community Computer Vision Course: no description found

- Stable Diffusion 2-1 - a Hugging Face Space by stabilityai: no description found

- zero-gpu-explorers (ZeroGPU Explorers): no description found

- 2024-04-22 - Hub Incident Post Mortem: no description found

- Batched multilingual caption generation using PaliGemma 3B! · huggingface/diffusers · Discussion #7953: Multilingual captioning with PaliGemma 3B Motivation The default code examples for the PaliGemma series I think are very fast, but limited. I wanted to see what these models were capable of, so I d...

- Python Calculator in 20 Seconds! #shorts #python #calculator: Hey there Python pals! 🐍💻 Need a calculator but can't wait for a coffee break? Say no more! Dive into the world of Python magic with our lightning-fast Pyt...

- “Wait, this Agent can Scrape ANYTHING?!” - Build universal web scraping agent: Build an universal Web Scraper for ecommerce sites in 5 min; Try CleanMyMac X with a 7 day-free trial https://bit.ly/AIJasonCleanMyMacX. Use my code AIJASON ...

- Future of SaaS and UI with AI Agents: A brief survey about the impact of AI agents on b2b and SaaS by https://hai.ai

- no title found: no description found

- Spaces - Hugging Face: no description found

- Lamini - Enterprise LLM Platform: Lamini is the enterprise LLM platform for existing software teams to quickly develop and control their own LLMs. Lamini has built-in best practices for specializing LLMs on billions of proprietary doc...

- PaliGemma Release - a google Collection: no description found

- PaliGemma FT Models - a google Collection: no description found

- GitHub - huggingface/transformers: 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX.: 🤗 Transformers: State-of-the-art Machine Learning for Pytorch, TensorFlow, and JAX. - huggingface/transformers

HuggingFace ▷ #today-im-learning (13 messages🔥):

- Epsilon Greedy Policy maintains RL trade-offs: In response to a question about maintaining exploration/exploitation trade-off in RL, a member explained that the epsilon greedy policy is used. They suggested learning more via ChatGPT and encouraged curiosity.

- Curiosity in RL encourages exploration: A member recommended looking into curiosity-driven exploration as a way to encourage exploration in RL. They shared a paper by Pathak et al. which rewards agents for "error in an agent's ability to predict the consequence of its own actions."

- Unusual situations drive exploration: It was discussed that in curiosity-driven exploration, agents are encouraged to choose actions leading to unusual situations to increase their reward. This method helps in scenarios where dense rewards are challenging to maintain in RL.

Link mentioned: Curiosity-driven Exploration by Self-supervised Prediction: Pathak, Agrawal, Efros, Darrell. Curiosity-driven Exploration by Self-supervised Prediction. In ICML, 2017.

HuggingFace ▷ #cool-finds (6 messages):

- Unveiling PaliGemma Vision Language Model: A member shared a link to an article about the PaliGemma Vision Language Model on Medium. This model claims to provide powerful capabilities in combining vision and language tasks. Read more.

- Veo Video Generation Model Launches: DeepMind's latest video generation model, Veo, produces 1080p resolution videos with a variety of cinematic styles and extensive creative control. Selected creators can access these features through Google’s experimental tool VideoFX, and it will eventually integrate with YouTube Shorts. More details.

- Joint Language Modeling for Speech and Text: A research paper explores joint language modeling for speech units and text. The study shows improvements in spoken language understanding tasks by mixing speech and text using proposed techniques. Read the paper.

- Google IO 2024 Full Breakdown: A YouTube video provides a comprehensive analysis of the Google IO 2024 event, claiming it made Google relevant in AI again. Watch here.

- Getting Started with Candle: A Medium article offers a guide on how to start using Candle, a new tool or technique in AI. Read the article.

- Veo: Veo is our most capable video generation model to date. It generates high-quality, 1080p resolution videos that can go beyond a minute, in a wide range of cinematic and visual styles.

- Toward Joint Language Modeling for Speech Units and Text: Speech and text are two major forms of human language. The research community has been focusing on mapping speech to text or vice versa for many years. However, in the field of language modeling, very...

- Google IO 2024 Full Breakdown: Google is RELEVANT Again!: Here's my full breakdown of the Google IO 2024 event, which, in my opinion, made Google very relevant again in AI.Join My Newsletter for Regular AI Updates ?...

HuggingFace ▷ #i-made-this (7 messages):

- dstack simplifies on-prem GPU management: Announcing a "game changer" for managing on-prem GPU clusters, dstack allows team members to use a CLI to run dev environments, tasks, and services on both on-prem and cloud servers. Learn more and check out their docs and examples.

- Excited reactions about dstack: Members expressed curiosity and excitement about dstack's capabilities, with one planning to avoid a deep dive into slurm in favor of dstack. Another mentioned it seems worthwhile before making decisions on cluster management.

- Musicgen continuations max4live device: A member shared updates on the Musicgen continuations project, highlighting improvements to the max4live device backend and its addictive features. Check out the YouTube demo.

- Terminus model updates improve performance: Every terminus model in the collection has been updated with the correct unconditional input config, enhancing their function with and without negative prompts. The velocity v2.1 checkpoint now boasts 61k downloads, available at Terminus XL Velocity V2.

Link mentioned: Terminus XL - a ptx0 Collection: no description found

HuggingFace ▷ #reading-group (12 messages🔥):

- Plan for a Saturday Event: A member suggested organizing an event on Saturday, creating a placeholder to confirm the timing. They shared an invite link to the event on Discord: event link.

- Dwarf Fortress Reference Appreciated: A member expressed their admiration for the Dwarf Fortress reference, stating it as one of their favorite games. Another member noted they've watched many Dwarf Fortress stories on YouTube.

- Thumbnails for Reading Group on YouTube: A member offered to design thumbnails for the Reading Group sessions uploaded to YouTube. They shared a design that received positive feedback, with minor suggestions for improving text readability.

Link mentioned: Join the Hugging Face Discord Server!: We're working to democratize good machine learning 🤗Verify to link your Hub and Discord accounts! | 79111 members

HuggingFace ▷ #computer-vision (10 messages🔥):

- Training Data Essential for Sales Prediction: A member suggested using a table where columns represent image features and sales figures, and comparing new product visual features to this data could provide insights. They stressed the importance of having relevant training data.

- Sales Prediction Dataset Shared: Another member shared a Sales Prediction Dataset including image pixels and sales figures. This dataset aims to assist in building a model that uses image inputs to predict sales outputs.

- Training Models on Image Features: A member recommended fine-tuning a CNN to get feature maps, then appending these maps with sales data. They further suggested training models like RF, SVM, or XGBoost to evaluate against image similarity results.

- Query on Image Manipulation Detection Models: A member inquired about models capable of detecting image forgery without needing a dataset. They sought models that could determine if an image has been edited.