[AINews] Stable Diffusion 3 — Rombach & Esser did it again!

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 3/4-3/5/2024. We checked 356 Twitters and 22 Discords (352 channels, and 7550 messages) for you. Estimated reading time saved (at 200wpm): 697 minutes.

Warm welcome to the >2500 people who joined from Soumith's shoutout last night! Its kinda like having a crowd of visitors over when the house isn't clean yet - we're still very much building the plane while we jump off a cliff. But we're increasingly happy with our prompts, pipeline, and exploration of what useful, SOTA LLM-based summarization can and should do.

Lots of people are still processing Claude 3 but we're moving on. Today's big news is the Stable Diffusion 3 paper. SD3 was announced (not released) a few days ago, but the paper provides much more detail.

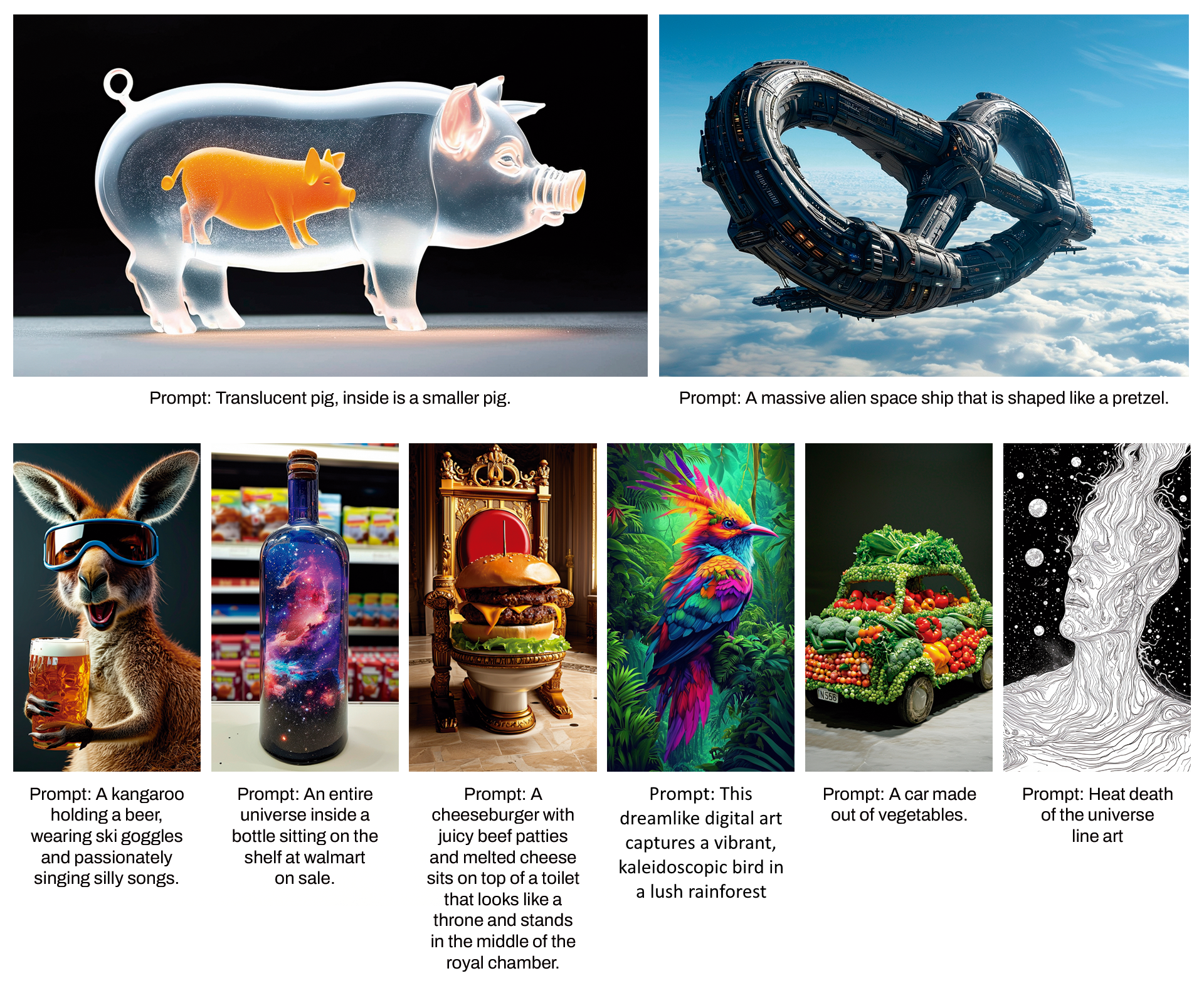

Obligatory images because really who reads the text I'm writing here when you can see pretty pictures:

We are more impressed with the incredible level of text-in-image control and handling of complex prompts (see the progress over the last 2 years):

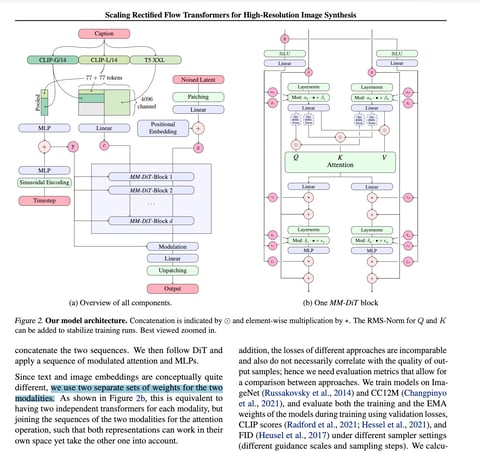

Paper highlights here but in short they have modified Bill Peebles' Diffusion Transformer (yes the one used in Sora) to be even more multimodal, hence "MMDiT":

DiT variants have been the subject of intense research this year, eg for Hourglass Diffusion and Emo.

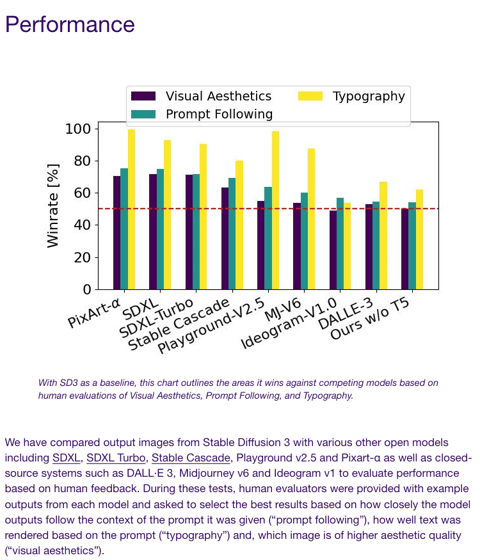

Stability's messaging around its benchmarks has been all over the place recently (e.g. for SD2 and SDXL and , making it unclear whether the main benefit is image quality or open source customizability or something else, but SD3 is pretty unambiguous - when evaluated on Partiprompts questions via REAL HUMANS ($$$), SD3's win rate beats all other SOTA image gen models (except perhaps Ideogram).

It's currently unclear whether the 8B SD3 model will ever be released beyond Stability's API wall. But surely a new SOTA model, from the people that launched the new imagegen summer, is to be celebrated regardless.

Table of Contents

We are experimenting with removing Table of Contents as many people reported it wasn't as helpful as hoped. Let us know if you miss the TOCs, or they'll be gone permanently.

PART X: AI Twitter Recap

Claude 3 Sonnet (14B?)

Anthropic Claude 3 Release

- Anthropic released Claude 3 models, which some feel are slightly better than GPT-4 and significantly better than other models like Mistral. Key improvements include more human-like responses and ability to roleplay with emotional depth.

- Claude 3 scored 79.88% on a HumanEval test, lower than GPT-4's 88% on the same test. It is also over 2x the price of GPT-4.

- There are now three top-tier models of comparable intelligence (Anthropic Claude 3, OpenAI GPT-4, Anthropic Gemini Ultra), enabling advances in imitation-based fine-tuning.

AI Model Releases & Datasets

- Microsoft released new Orca-based models and datasets.

- Stability AI and Tripo AI released TripoSR, an image-to-3D model capable of high quality outputs in under a second.

- DolphinCoder-StarCoder2-15b was released by Latitude with strong coding knowledge. Smaller StarCoder2 models and CodeLlama are planned.

AI Capabilities & Use Cases

- Claude 3 models are very good at generating d3 visualizations from text descriptions.

- Perplexity AI is integrating Playground AI's image models to enhance answers with visual illustrations.

- PolySpectra uses LLMs to generate 3D CAD models from text prompts, powered by LlamaIndex.

AI Development & Evaluation

- Fine-grained RLHF improves LLM performance and customization compared to holistic preference, based on research from MosaicML and Stanford.

- LLMs can "know" when they are being tested and elementary reasoning errors in LLMs are similar to those made by humans.

- Validation loss is a poor metric for choosing LLM checkpoints to deploy.

Memes & Humor

- Humorous tweet about a cat colony memorializing Julius Caesar amidst discussion of the new Claude model.

- Joke about breaking a MacBook screen and leg bone while walking into a heavy hotel table.

In summary, the release of Anthropic's Claude 3 models has generated significant discussion, with comparisons being made to GPT-4 in terms of performance, cost, and capabilities. Claude 3 demonstrates strong language understanding and generation, but lags behind GPT-4 on some coding tests.

Alongside the Claude 3 release, there have been other notable AI model and dataset releases from Microsoft, Stability AI, Latitude, and others. These span a range of applications including coding, 3D model generation, and image-to-text.

Researchers continue to advance techniques for fine-tuning and evaluating large language models, such as using fine-grained RLHF and being cautious with metrics like validation loss. There are also observations about the reasoning capabilities and potential self-awareness of LLMs.

Amidst the technical discussions, there is still room for humor, as evidenced by jokes and memes shared alongside the AI news and analysis. Overall, the tweets paint a picture of an AI field that is rapidly advancing in terms of model scale and capabilities, but also grappling with important questions around evaluation, safety, and potential impacts.

ChatGPT (GPT4T)

- Claude 3 vs GPT-4 Discussions: The AI community is actively discussing Claude 3's human-like response capabilities, with one engineer noting its ability to emphasize words in a way GPT-4 doesn't. However, there's skepticism about whether its performance is truly groundbreaking or just the result of specific training data, as mentioned in a tweet by Giffmana. Claude 3's humaneval test score comparison with GPT-4 was noted, highlighting Claude 3 scored 79.88% versus GPT-4's 88% in a specific test, as Abacaj tweeted.

- Model Performance and Benchmarks: The debate on the efficiency and effectiveness of AI models like Claude 3 continues, with a particular focus on cost versus performance. Some tweets highlight Claude 3's ability in specific tasks (Teknium1's comparison) and the comparison in price to performance ratio against GPT-4, providing valuable insights for developers focusing on optimizing resource allocation in AI projects.

- Affiliate Revenue Distribution: A tweet disclosing AG1 costs and revenue distribution sheds light on the financial mechanics of AI service products. Such transparency in revenue sharing models offers a nuanced understanding of the AI product ecosystem, crucial for entrepreneurs and engineers in the tech space.

- Playground AI's Integration: The integration of Playground AI as the default model for Perplexity Pro users (AravSrinivas's announcement) is a significant step forward in AI-driven image generation. Also, the deployment of TripoSR for creating 3D models from images highlights the advancing capabilities in multidimensional AI applications.

- Importance of Quality Data: A succinct reminder of the value of quality over quantity in data for AI training was highlighted by Svpino, a crucial consideration for engineers working on data-driven AI models (related tweet).

- Attention to Datasets: BlancheMinerva's urging of deeper dataset analysis (tweet) before jumping to conclusions about LLM behavior underscores the critical need for meticulous data scrutiny in AI development.

AI Humor & Memes

- Creative AI Misadventures: A humorous account of technological mishaps and the lighter side of AI-related accidents, like breaking a leg on a heavy hotel table and then proceeding to make jokes about further potential "breaks" (Levelsio's tweet), provides much-needed levity in the often serious AI conversation sphere.

This summary illuminates the multifaceted discussions within the AI tech community, from deep dives into model performance and its real-world applicability to societal reflections observed through technological lenses. The emphasis on Claude 3's capabilities versus GPT-4, alongside methodological considerations in AI model development and deployment, underscores the ongoing efforts toward more nuanced, human-like AI. Furthermore, the exploration of Korea's cultural and economic landscapes through the tech lens highlights the complex interplay between societal structures and technological development, offering invaluable insights for tech professionals navigating global AI applications.

PART 0: Summary of Summaries of Summaries

Operator notes: Prompt we use for Claude, and our summarizer GPT used for ChatGPT. What is shown is subjective best of 3 runs each.

Claude 3 Sonnet (14B?)

Interestingly Sonnet failed to understand the task the 2nd time we ran it (not understanding that we want it to summarize across ALL the summaries and raw text - which today total 20k words).

- Mistral Model Insights and Confusion: Conversations centered around Mistral models, including clarifications on context size handling across different tokenizers like tiktoken, hardware recommendations, free availability concerns with LeChat, and inquiries into Mistral's open-source direction and minimalistic reference implementations. Mixtral's lack of sliding window attention was also discussed.

- Perplexity AI Integration and Usability: Users explored the integration of Playground AI's V2.5 model and Claude 3 with Perplexity AI Pro, shared thoughts on quota limits for Claude 3 Opus, speculated about Perplexity's future directions, and exchanged tips on optimizing AI-powered features like image generation and search.

- LLM Coding Prowess and Quantization Techniques: Cutting-edge AI models like OpenHermes-2.5-Code-290k-13B and Code-290k-6.7B-Instruct were introduced, boasting impressive coding capabilities. Developers also discussed quantization approaches like GGUF quantizations and their quality/speed trade-offs.

- Nvidia Puts the Brakes on Translation Layers: Nvidia has implemented a ban on using translation layers to run CUDA-based software on non-Nvidia chips, targeting projects like ZLUDA, with further details discussed in a Tom's Hardware article. Some members expressed skepticism over the enforceability of this ban.

- Lecture 8 on CUDA Performance Redone and Released: The CUDA community received a re-recorded version of Lecture 8: CUDA Performance Checklist, which includes a YouTube video, code on GitHub, and slides on Google Docs, garnering appreciation from community members. Discussions ensued on the mentioned DRAM throughput numbers and performance differences in coarsening.

Claude 3 Opus (8x220B?)

- Claude 3 Shakes Up the AI Landscape: Anthropic's release of the Claude 3 model family has sparked widespread discussion, with the Claude 3 Opus and Claude 3 Sonnet variants demonstrating impressive capabilities in reasoning, math, coding, and multi-modal tasks. Users report Claude 3 outperforming GPT-4 in certain benchmarks like summarization and instruction-following. However, concerns arise over its pricing structure and regional availability.

- CUDA Controversies and Optimizations: Nvidia's ban on translation layers for CUDA on non-Nvidia chips has stirred skepticism over enforceability. Meanwhile, developers troubleshoot CUDA errors like

CUBLAS_STATUS_NOT_INITIALIZED, with causes ranging from tensor dimensions to memory issues. Optimization discussions cover CUTLASS, cuda::pipeline efficiency, and the nuances of effective bandwidth versus latency.

- Prompt-Engineering Puzzles and LLM Integrations: Across various communities, users grapple with prompt-engineering challenges, from AI's refusal to accept internet access capabilities to inconsistencies in ChatGPT API transitioning. Simultaneously, new tools and integrations emerge, like the RAPTOR tree-structured indexing technique, Claude 3 support in LlamaIndex, and Datasette's plugin for Claude 3 interaction.

- Pushing Boundaries in AI Applications: Exciting developments surface across AI subdomains, including the text-to-3D model generation platform neThing.xyz, leveraging Claude 3 and LLM code generation. Real-Time Retrieval-Augmented Generation (RAG) with LangChain enables enhanced chatbots, while explorations in augmenting classification datasets promise improved model reasoning. Initiatives like the Open Source AI Definition and AI-native business repositories aim to guide and curate the rapidly evolving AI landscape.

ChatGPT (GPT4T)

AI Ethics and Regulatory Discussions: Detailed conversations across various Discords, such as TheBloke, underline the criticality of AI ethics, regulatory measures, and security in AI development, including the White House's stance on avoiding C and C++ for security reasons and the UK's potential AI legislation.

Model Innovations and Performance: Discussions span multiple platforms, from Mistral explaining Mixtral vs. Mistral model differences, to Perplexity AI highlighting Claude 3's capabilities and Nous Research AI debating Claude 3 and GPT-4. Emerging models like OpenHermes-2.5-Code-290k-13B showcase superior performance, while CUDA MODE focuses on CUDA's technical challenges and advancements.

Emerging Technologies and AI's Role in Creative Domains: LAION and Latent Space discussions delve into AI's impact on creative fields, such as pixel art generation techniques and 3D modeling advancements, highlighting the Stable Diffusion 3's MMDiT architecture and its superior performance.

Technical Challenges and Solutions in AI Application: LangChain AI explores caching issues in LLM interaction and Real-Time Retrieval-Augmented Generation (RAG), while OpenAccess AI Collective (axolotl) discusses model merging with MergeKit as an innovative alternative to traditional fine-tuning methods.

PART 1: High level Discord summaries

TheBloke Discord Summary

- AI Ethics Calls for Caution: Engaging discussions focused on AI ethics and the importance of regulatory measures to prevent misuse, as well as the implications of AI in mass profiling and surveillance. The White House's stance on avoiding C and C++ for security reasons and potential AI legislation by the UK government were part of the discussions.

- Model Performance Measures and Development Strides: Conversations pivoted around AI capabilities, including remarks on improved responses from GPT-3.5 Turbo on riddles, and the challenges in gradient-free deep learning. Experimental quantization techniques, including imatrix quants and GGUF quantizations, were debated considering their quality and speed trade-offs.

- Emerging AI Models Take the Spotlight: The model OpenHermes-2.5-Code-290k-13B was shared, boasting superior performance and combining datasets with rankings under Junior-v2 in CanAiCode. Moreover, details on the training of Code-290k-6.7B-Instruct, taking 85 hours for 3 epochs on 4 x A100 80GB, were also provided.

- Legal Complexities Touch AI: The discussion also delved into legal aspects, highlighting enforceable verbal contracts in Scotland and Germany's mandate for open-source government systems. Participants also expressed concern over using unlicensed AI models like miquliz and cautioned against potential legal repercussions.

- Crypto Dialogue Entangles Blockchain Skepticism: The volatility and viability of cryptocurrency markets were hot topics, accompanied by the debate on the overestimation of blockchain benefits for distributed computing and current investment fads.

Mistral Discord Summary

- Mixtral vs. Mistral Context Confusion Resolved:

i_am_domclarified that Mixtral does not support sliding window attention like Mistral, referencing a Reddit post. The issue of Mixtral Tiny GPTQ using different tokenization was probed, prompting discussions on correct context size handling and the impact of different tokenizers on VRAM requirements.

- Mistral Models Tug-of-War: There were deep dives into Mistral models' capabilities, with

mrdragonfoxsuggesting that the Next model might be an independent line from Medium, andmehdi1991_exploring GPU options for running large models. The community shared concern about the LeChat model's free availability potentially leading to service abuse.

- Open-Sourcing and Models in the Marketplace: A desire for clarity on Mistral's open-source direction was indicated.

@casper_ai's request for a minimalistic reference implementation for Mistral highlights the community's need for better understanding of the model's training process. Discord bots supporting over 100 LLMs and Telegram-hosted chatbots powered by mistral-small-latest illustrated the active integration of Mistral models across various platforms.

- Anthropic's New Model and Mistral Pricing Conversations:

@benjoyo.shared news on Anthropic's Claude 3 models and its function-calling feature in alpha. The community debated the cost of using new models like Opus, and Mistral's potential open weights advantage was discussed as a unique selling point.

- Real World Model Evaluations and Educational Office Hours: In the office-hour channel, discussions on manual and real-life evaluation such as benchmarking against MMLU were key, while future model training and expansion queried by users like

@kalomazeand@rtyaxshowed the community's future-looking interests.

- Troubleshooting Day-to-Day Model Anomalies: Users encountered and resolved issues from incorrect JSON response handling to authentication anomalies. The effectiveness of Mistral 8x7b at sentiment analysis was discussed, while "405" API errors were debugged with advice to use the "POST" method.

- Mathematical Challenges Reveal Model Limitations:

@awild_techand others pointed out inconsistencies in Mistral Large's responses to mathematical problems like the floor of 0.999 repeating, illustrating limitations in understanding and consistency within the models.

Perplexity AI Discord Summary

- Perplexity AI Pro Enhances with V2.5 and Claude 3: Users now have access to Playground AI's new V2.5 for generating images, and Perplexity Pro users can explore the capabilities of Claude 3, with the advanced Opus model allowed for 5 daily queries. Details on Playground’s collaboration with Perplexity can be found in their blog post, while the distinction between Claude 3 Opus and the faster Sonnet model has raised questions among users about their deployment and operations.

- Community Weighs In on Claude 3's Daily Query Limit: There's a buzz around the 5-query per day limit for Claude 3 Opus, and members are discussing whether it outperforms GPT-4 in coding and problem-solving, with some advocating for improvements on Claude 3 Sonnet's usability.

- Perplexity's Future Speculations and Promotions: Users are sharing tips on optimizing Perplexity's AI for tasks like image generation and search functionality, alongside predictions for future dedicated AI models. Conversations also revolve around accessing Claude 3 and related models, with references to the Rabbit R1 deal described in Dataconomy's article.

- Assorted Uses of Perplexity AI Search Revealed: Engagement in Perplexity AI's search capacity show users looking for information on diverse topics including Antarctica, US-Jordan relations, promotional Vultr codes, and historical inquiries.

- API Access and Configuration Chatter for AI Engineers: API users like

@_samratare advised patience for access to citations, with response times upwards of 1-2 weeks. There's an evolving understanding that the temperature setting in NLP tasks can be nuanced, with lower temperatures not guaranteeing more reliable outcomes, and curiosity about potential model censorship via API and quota carryovers between different platforms.

OpenAI Discord Summary

- Translation AI Search for Purity: Users seeking alternatives to Google Translate mentioned dissatisfaction with its robotic output, with GPT-3.5 suggested as a superior option for quick and accurate translations.

- Claude 3 Outshining ChatGPT?: Conversations around Claude 3 from Anthropic highlighted its improved capabilities, particularly in graduate-level reasoning, although some users reported weaker logic and image recognition in Chinese compared to GPT-4.

- GPT-4 Token Limits in the Spotlight: Technical discussions pinpointed limitations with GPT-4, especially around token limits for inputs and contexts, with users sharing their experiences with various versions of the model.

- Storytellers Prefer Claude: Claude 3 was reported to excel in roleplay and creative writing, prompting users to anticipate the release of GPT-5 as competition heats up with advanced language models like Gemini.

- Real-Time Confusion and API Woes: Prompt-engineering conundrums included an AI's denial of internet access capabilities, difficulties in improving clarity and conciseness, and Custom GPT models giving uncooperative responses.

- Seeking Solid API Foundations: Users debated the challenges of style consistency when transitioning from ChatGPT to GPT 3.5 API, as well as identifying usability issues with GPT's visual and mathematical prompt handling.

Nous Research AI Discord Summary

- Claude 3 Sparks Price and Performance Debate: AnthropicAI's announcement of Claude 3 stirred discussions comparing its performance and cost to GPT-4. Some users, using EvalPlus Leaderboard comparisons and related tweets, pondered Claude 3's value proposition over OpenAI's offerings, citing differences in human eval scores.

- New AI Models and Efficiency Takes Center Stage: Articles referencing OpenAI CEO Sam Altman's remarks about the future being in architectural innovations and parameter-efficient models, rather than simply larger ones, were shared (source). Additionally, the release of moondream2, a small vision language model suitable for edge devices, was brought into focus (GitHub link).

- Prompt Engineering Resources Shared: A guide detailing prompt engineering techniques to enhance Large Language Models (LLMs) outputs was circulated, offering strategies for improved safety and structured output (guide link). Model comparison and evaluation discussions highlighted new AI models like

dolphin-2.8-experiment26-7b-preview.

- Continuous Pretraining and Model Training Discourse: A dialogue about continuous pretraining featured suggestions of using a modified gpt-neox codebase and axolotl for varied scales of pretraining. Inquiries about training on large datasets of physics papers suggested a blend into pretraining datasets, emphasizing the need for ample compute resources.

- Combining Inference Strategies for Enhanced AI: A proposition to combine models like Hermes Mixtral or Mixtral-Instruct v0.1 using techniques such as RAG and specific system prompts was discussed, referencing tools like fireworksAI or openrouter for effective inference.

- Bittensor Finetune Subnet v0.2.2 Update: The Bittensor Finetune Subnet released version 0.2.2, featuring an updated transformers package with a fixed Gemma implementation (GitHub PR). The release equaled the reward ratio between Mistral and Gemma to 50% each.

LAION Discord Summary

- Scaling Titan: Machine Learning Faces Compute Bottlenecks: The discussion led by

@pseudoterminalxfocused on challenges in scaling up model training, particularly bottlenecks in data transfer between CPU/GPU and the limited benefits of increasing compute resources without overcoming these inefficiencies.

- AI's Pixel Art Palette Problem:

@nodjaand@astropulseexplored techniques for generating pixel art using AI, with talks delving into methods for applying palettes in latent space and integrating them into ML models, highlighting the nuanced technical hurdles in this creative AI domain.

- Claude 3 Outshines GPT-4: The engineering community compared the capabilities of the newly unveiled Claude 3 model against GPT-4, citing its improved performance with

@segmentationfault8268contemplating a switch from ChatGPT Plus based on this advancement, as per discussions and announcements found on Reddit and LinkedIn.

- Stable Diffusion 3 Peaks Interest with MMDiT Architecture: The shared research paper from Stability AI's blog post, posted by

@mfcool, presented Stable Diffusion 3's impressive performance, surpassing DALL·E 3 and others, thanks to its Multimodal Diffusion Transformer.

- SmartBrush: The New Inpainting Maestro?: User

@hiieeprompted discussions around SmartBrush, a model for text and shape-guided image inpainting showcased in an arXiv paper, with inquiries about its open-source availability and its potential for preserving backgrounds better than existing inpainting alternatives.

HuggingFace Discord Summary

AI Breakthroughs and Hiccups: Discussions spanned the performance of Hermes 2.5 over Hermes 2 and the limitations of expanding Mistral beyond 8k. There's also a focus on calculating gradients in novel ways and the repeated request for assistance with dataset creation without yet finding a resolution.

Diffusion Model Guidance on HuggingFace: Members discussed a potential NSFW model, AstraliteHeart/pony-diffusion-v6, on HuggingFace, with suggestions to tag it appropriately or report it. Additionally, guidance was provided for image prompting in diffusion models, directing users to a IP-Adapter tutorial.

CV and NLP Cross-Talk: The community engaged in topics ranging from the introduction of the Terminator network and its integration of past technologies to the quest for the SOTA in bidirectional NLP language models, touching on options like Deberta V3 and the monarch-mixer. Problems shared included difficulties with enhancing Mistral with GBNF grammar, variable inference times with Mistral and BLOOM models, and implementing Mistral in Windows apps.

Kubeflow Gets a Terraform Boost: In the realm of tools and platforms, Kubeflow can now be deployed using a terraform module, effectively transforming Kubernetes clusters into AI-ready environments. Moreover, MEGA's performance on short-context GLUE benchmarks and Gemma Model's speed boost using Unsloth were also introduced, showcasing various community-driven advancements.

Video-Related Innovations and Problems: The release of Pika operates as an indicator of the growing trend in text-to-video generation. Contrastingly, a user experienced visual issues with a Gradio-embedded OpenAI API chatbot, looking for assistance to fix the layout.

Reading Group Revival: Concern was expressed over the scheduling of reading group sessions, debating the merits between Discord and Zoom for hosting. There is also mention of recordings available on Isamu Isozaki's YouTube profile for those unable to attend live sessions.

OpenRouter (Alex Atallah) Discord Summary

- Claude 3: The New Kid on the Block: OpenRouter introduces Claude 3, encompassing a sophisticated experimental self-moderated version, Claude 3 Opus with impressive emotional intelligence (EQ), and a cost-effective alternative to GPT-4, Claude 3 Sonnet, with multi-modal capabilities.

- Price Wrangling Incites Discussion: The guild debates Claude 3's pricing, with users baffled by the price hike from Claude 3 Sonnet to Claude 3 Opus. This includes comic comparisons to physical services and a general need for clarification on the cost structures.

- Tech Troubles Stir the Pot: Members report issues interacting with the new Claude models, receiving blank responses from all except the 2.0 beta. The community steps in offering troubleshooting advice, with potential causes like region blocks or using unimplemented features like image inputs.

- The Pen is Mightier with Claude: Claude's literary capabilities are a mixed bag, getting applause for their writing quality from some, while others encounter repetitious, unwanted auto-generating responses. Community troubleshooters suggest this might be due to tokenization errors rather than the model's inherent flaws.

- More Robust than GPT-4? Persistent conversations about Claude 3's performance vis-à-vis other models highlight its edge in certain tests, but also raise questions about the predictability of real vs predicted costs, which are key considerations for scalability and integration in enterprise solutions.

LlamaIndex Discord Summary

- RAPTOR Webinar Beckons: A webinar featuring RAPTOR, an innovative tree-structured indexing technique, is announced with a registration link. The session aims to enlighten participants on clustering and summarizing information hierarchically and is scheduled for this Thursday at 9 am PT.

- Launch of Claude 3 with Strong Benchmarks: Claude 3 is now supported by LlamaIndex and is claimed to have superior benchmark performance than GPT-4. It comes in three versions, with Claude Opus being the most powerful and is suitable for a broad spectrum of tasks including multimodal applications; a comprehensive guide and showcase Colab notebook are provided here.

- 3D Modeling Revolution with neThing.xyz: The platform neThing.xyz uses LLM code generation to transform text prompts into ready-to-use 3D CAD models, propelled by the capabilities of Claude 3 and profiled by a LlamaIndex tweet.

- Evolving Infrastructure Discussions:

- Llama Networks has a FastAPI server setup suitable for client-server models, and is receptive to expansion ideas.

- Updating nodes in PGVectorStore typically requires document reinsertion as opposed to individual node edits.

- Business planning may benefit from the integration of ReAct Agent and FunctionTool with OpenAI services.

- A request was made to amend installation commands to lowercase on the Llama-Index website for accuracy.

- Exploring Deep AI Topics: An article discussing the integration of LlamaIndex with LongContext and highlighting Google’s Gemini 1.5 Pro’s 1-million context window drew interest from participants, signaling its relevance to AI development and enterprise applications. The article can be explored here.

CUDA MODE Discord Summary

- Nvidia Puts the Brakes on Translation Layers: Nvidia has implemented a ban on using translation layers to run CUDA-based software on non-Nvidia chips, targeting projects like ZLUDA, with further details discussed in a Tom's Hardware article. Some members expressed skepticism over the enforceability of this ban.

- CUDA Error Riddles and Kernel Puzzles: CUDA developers are troubleshooting errors like

CUBLAS_STATUS_NOT_INITIALIZEDwith suggestions pointing to tensor dimensions and memory issues, as seen in related forum posts. Other discussions centered aroundcuda::pipelineefficiency and understanding effective bandwidth versus latency, referencing resources such as Lecture 8 and a blog on CUDA Vectorized Memory Access.

- CUTLASS Installation Q&A for Beginners: New AI engineers sought advice on installing CUTLASS, learning that it's a header-only template library, with installation guidance available on the CUTLASS GitHub repository, and requested resources for implementing custom CUDA kernels.

- Ring-Attention Project Gets the Spotlight: A flurry of activity took place around the ring-attention experiments with conversations ranging from benchmarking strategies to the progression of the 'ring-llama' test. An issue with a sampling script is in the process of being resolved as reflected in the Pull Request #13 on GitHub, and the Ring-Attention GitHub repository was shared for those interested in the project.

- Lecture 8 on CUDA Performance Redone and Released: The CUDA community received a re-recorded version of Lecture 8: CUDA Performance Checklist, which includes a YouTube video, code on GitHub, and slides on Google Docs, garnering appreciation from community members. Discussions ensued on the mentioned DRAM throughput numbers and performance differences in coarsening.

LLM Perf Enthusiasts AI Discord Summary

- OpenAI's Browsing Innovation: @jeffreyw128 expressed excitement about OpenAI's new browsing feature, which is akin to Gemini/Perplexity. They highlighted this with a shared Twitter announcement.

- Claude 3 in the Running Against GPT-4: Claude 3 was a hot topic, with suggestions from

@res6969and@ivanleomkthat it might surpass GPT-4 in math and code benchmarks.

- Opus Model Pricing Discussed: There was debate over Opus pricing; it is reported to be 1.5x the cost of GPT-4 turbo, yet 66% cheaper than regular GPT-4 as clarified by

@pantsforbirdsand@res6969.

- The Excitement and Skepticism Around Fine-Tuning: The community exchanged views on fine-tuning LLMs.

@edencoderargued for its cost-effective benefits for specialized tasks, while@res6969questioned the return on investment for particular applications.

- Anthropic's Models Gain Mixed Reviews: Insights into Anthropic's models were mixed:

@potrockand@joshcho_discussed Opus' strengths in coding tasks, while@thebaghdaddynoted that for fields like medicine and biology, GPT-4 still overtakes newer models in performance.

Interconnects (Nathan Lambert) Discord Summary

- Intel's Strategic Stumbles:

@natolambertshared insights on Intel's current position in the tech industry via a Stratechery article and YouTube video titled: "Intel's Humbling", underscoring the nuanced analysis provided.

- Claude 3 Steals The Show:

Claude 3, announced by AnthropicAI, has sparked excitement and debate with its improved abilities. Discussion revolves around its performance, with @xeophon. and

@natolambertspeaking on specific instances of its capabilities, while@mike.lambertpondered its impact on the open-source landscape and@canadagoose1and@sid221134224expressed that it might surpass GPT-4.

- Flaming Q* Tweets Over Claude 3:

Drama ensued on Twitter with Q* tweets following Claude 3's release, with

@natolambertoffering a critical take on the discussions and the idea of using alt accounts being dismissed due to the effort involved.

- AI2 Eyeing Pretraining Pros:

@natolambertreached out for specialists in pretraining interested in AI2's mission, specifically noting that they are presently focusing on this area of hiring and humorously suggesting that individuals disillusioned with Google's handling of Gemini may be potential recruits.

- RL Debate on Cohere's PPO Paper:

The dialogue in RL circles touched on Cohere's claim about the redundancy of PPO corrections for large language models, with

@vj256searching for further evidence or replication and@natolambertacknowledging prior familiarity with these claims, also directing to related interviews and research papers pertinent to Reinforcement Learning from Human Feedback (RLHF).

LangChain AI Discord Summary

- Cache Woes in Langserve's LLM Interaction: Users

@kandieskyand@veryboldbageldelved into difficulties with caching in Langserve.@kandieskyrevealed that caching only operated correctly with the/invokeendpoint and not with/stream, while@veryboldbagelnoted the root issue lies in langchain-core.

- Real-Time RAG Unleashed:

@hkdulaypresented a blog post that showcases building a Real-Time Retrieval-Augmented Generation (RAG) chatbot with LangChain, stressing the significant step-up it can provide in improving language model responses.

- Deep Dive into RAG's Indexing: In a quest for enhanced AI responses,

@tailwind8960shared insights into the indexing challenges in the Advanced RAG series through a new installment, emphasizing the need to preserve context in queries.

- Synergizing AI and Business Projects:

@manojsaharanis spearheading a collaborative initiative to amalgamate AI with business in a GitHub repository and invited contributors from the LangChain community to join via this link to the repository.

- Enter Control Net, the Standup Chicken Imager:

@neil6430demonstrated the whimsical potential of AI-generated art using control net features from ML blocks, achieving the odd task of a chicken emulating Seinfeld's standup stance.

Latent Space Discord Summary

- Claude 3 Sparks Interest and Debate: Engineers discussed Claude 3's benchmarks and pricing, with competitive performance comparisons to GPT-4 sparking significant interest. Concerns about API rate limits were raised, referencing Anthropic's rate limits documentation, suggesting a potential bottleneck for scalability.

- Direct Model Showdown: AI enthusiasts performed analysis between Claude 3 and GPT-4, with a shared gist by @thenoahhein detailing Claude 3's alignment and summary skills, championing its capabilities over GPT-4.

- Next-Gen 3D Modeling Teased: A partnership to develop sub-second 3D model generation technology was previewed by @EMostaque, discussing advancements such as auto-rigging, hinting at significant implications for creative industries.

- Based Architecture Unveiled: A discussion unfolded around a new Based Architecture paper with attention-like primitives optimized for efficiency, which resonates with the engineer's continuous quest for improved computational processes.

- AI Consciousness Controversy: The AI community engaged in a heated debate over the sentience of Claude 3, following a provocative LessWrong post. Counterarguments against AI consciousness were circulated, keeping the discourse grounded in skepticism amidst anthropomorphic claims.

OpenAccess AI Collective (axolotl) Discord Summary

- Math Bots to the Rescue: A dataset for bot-based math problem solving was highlighted on the Orca Math Word Problems dataset available on Hugging Face, demonstrating bots' capability in algebraic reasoning and competition ranking tasks.

- MergeKit Magic: Interest in model merging as an alternative to fine-tuning using the MergeKit tool on GitHub was noted, indicating an innovative tool to combine pre-trained model weights of large language models.

- AI Censorship Balance: A discussion on the challenging aspect of balancing AI response generation in Claude - 3 model, especially related to racial sensitivity, was informed by a relevant ArXiv paper.

- Dataset Enrichment for AI Reasoning: A strategy for enhancing dataset reasoning capabilities for AI was shared through a guide on Twitter by

@winglian. - LoRA+ Experiments Advance:

@suikamelonconducted experiments with the LoRA+ ratio feature, noticing it requires a lower learning rate, as per the discussion in the #axolotl-dev channel, based on guidelines from the LoRA paper.

Datasette - LLM (@SimonW) Discord Summary

- Prompt Injection vs Jailbreaking: Prompt injection is an exploitative technique that combines trusted and untrusted inputs in LLM applications different from jailbreaking, which attempts to bypass LLM safety filters. Simon Willison's blog expands on this crucial distinction.

- LLM Misuse by State Actors: Microsoft's blog outlined the use of LLMs by state-backed actors for cybercrimes like vulnerability exploitation and creating spear phishing emails, including the incident with "Salmon Typhoon." The related OpenAI research can be found here.

- Early Warning System for LLM-Aided Biorisks: OpenAI is devising systems to flag biological threats assisted by LLMs, as they can easily facilitate access to sensitive information. Their initiative details are available here.

- Ceasefire with Multi-Modal Prompt Injection: Addressing prompt injection risks, it's admitted that even human review can miss some forms of injection, especially those concealed within images. Simon Willison analyzes this threat vector deeper in his write-up.

- Mistral's Might & Plug-in Pick-Me-Up: The new mistral large model is applauded for its data extraction prowess, although costly, and the rapid creation of a Claude 3 plugin was notably commended. Also, there's a movement towards standardizing locations for model files to optimize development workflows.

DiscoResearch Discord Summary

- Claude-3's Multilingual Performance Evaluated:

@bjoernpsparked a conversation about Claude-3's multilingual capabilities, with@thomasrenkertreporting that Claude-3-Sonnet provides decent German answers and outperforms GPT-4 in structure and knowledge. - Claude-3 Spotted in the EU: Despite its official geographical limitations, members like

@sten6633and@devnull0have managed to sign up and access Claude-3 in Germany, including workaround mentions like tardigrada.io. - Opus API Embraces German Users: The Opus API now seemingly accepts German phone numbers for registration, incentivizing new users with credits, and earning praise for its efficacy in resolving complex data science queries.

- Test AI Models Without Costs:

@crispstrobehighlights a way to try out AI models free of charge using chat.lmsys.org, with conditions that input may be used for training data, and shares poe.com's offer of three different models for trial, including a Claude 3 variant with a limit of 5 messages a day.

Skunkworks AI Discord Summary

No relevant technical discussions or important topics to summarize were provided in the given messages.

Alignment Lab AI Discord Summary

- Let's Collaborate!: User

@wasoolishowed interest in a collaborative project within the Alignment Lab AI and has been encouraged to discuss further details through direct messages by@taodoggy.

AI Engineer Foundation Discord Summary

- Keep Up with Open Source AI Progress:

@swyxiodrew attention to the Open Source Initiative's efforts in providing monthly updates about the Open Source AI Definition, with the recent version 0.0.5 being published on January 30, 2024. Practitioners can stay informed and contribute to the conversation by reviewing the monthly drafts.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1100 messages🔥🔥🔥):

- Discussions on AI Ethics and Regulatory Measures: The channel engaged in debates on AI ethics, the necessity of regulatory measures to prevent misuse, and talked about the White House report on not using C and C++. Concerns about mass profiling/surveillance, data privacy under laws like the EU's "Right to be Forgotten," and potential AI legislation narratives by the UK government were discussed.

- AI Performance and Development: Users compared AI capabilities, citing improved responses from models like GPT-3.5 Turbo on riddles. There were also conversations about the incremental progress of AI models, and the challenges of attempting gradient-free deep learning.

- A look at Claude 3 Opus: User

@rolandtannousshared his positive experience using Claude 3 Opus as a brainstorming partner, showing an improvement in handling tasks like coding, highlighting that Claude 3 Opus performed superior to its previous versions. - Legal Discussions in AI Utilization: The chat touched briefly upon legal issues related to AI, such as enforceable verbal contracts in Scotland and the implications of regulations like Germany's requirement for government systems to be open-source.

- Crypto Market and Blockchains: Participants discussed the state and reliability of cryptocurrency markets, the potential for blockchain in distributed computing, and current investment trends. Concerns about the over-hyping of blockchain benefits and the practicality of cryptocurrencies were expressed.

Links mentioned:

- no title found: no description found

- Docker: no description found

- BASED: Simple linear attention language models balance the recall-throughput tradeoff: no description found

- abacusai/Liberated-Qwen1.5-72B · Hugging Face: no description found

- Futurama Maybe GIF - Futurama Maybe Indifferent - Discover & Share GIFs: Click to view the GIF

- Magician-turned-mathematician uncovers bias in coin flipping | Stanford News Release: no description found

- Philosophical zombie - Wikipedia: no description found

- Squidward Spare GIF - Squidward Spare Some Change - Discover & Share GIFs: Click to view the GIF

- You Need to Pay Better Attention: We introduce three new attention mechanisms that outperform standard multi-head attention in terms of efficiency and learning capabilities, thereby improving the performance and broader deployability ...

- Taking Notes Write Down GIF - Taking Notes Write Down Notes - Discover & Share GIFs: Click to view the GIF

- gist:09378d3520690d03169f89183adebe9c: GitHub Gist: instantly share code, notes, and snippets.

- Spongebob Squarepants Leaving GIF - Spongebob Squarepants Leaving Patrick Star - Discover & Share GIFs: Click to view the GIF

- Gemini WONT SHOW C++ To Underage Kids "ITS NOT SAFE": Recorded live on twitch, GET IN https://twitch.tv/ThePrimeagenBecome a backend engineer. Its my favorite sitehttps://boot.dev/?promo=PRIMEYTThis is also the...

- Release 0.0.14 · turboderp/exllamav2: Adds support for Qwen1.5 and Gemma architectures. Various fixes and optimizations. Full Changelog since 0.0.13: v0.0.13...v0.0.14

TheBloke ▷ #characters-roleplay-stories (73 messages🔥🔥):

- Debate on Performance and Quantization Techniques:

@dreamgenand@capt.geniusdiscussed the use of imatrix quants for model performance, with@capt.geniusstating that imatrix offers better quality than speed. However,@spottyluckquestioned the necessity of quantizing outliers, leading to a shared GitHub gist by@4onendiscussing GGUF quantizations and their speed penalties. GGUF quantizations overview.

- Intriguing Technologies in Roleplay Applications:

@sunijainquired about the AutoGPT project's status for potential roleplay applications, with@wolfsaugereferencing relevant research and GitHub repositories like DSPy optimization that could programmatically create and evaluate prompt variations.

- Hydration and Health Tips Enter the Chat: A light-hearted tangent emerged as

@lyrcaxisoffered advice on proper hydration to@potatooff, suggesting daily water intake based on body weight for optimal health and discussing the impact of heating solutions on throat dryness and coughing.

- Evaluating Experimental AI Models: Users

@sunijaand@johnrobertsmithengaged in conversation about the effectiveness of experimental AI models like Miquella and Goliath, referencing a reddit post LLM comparison/test for general intelligence with plans to share personal reviews on performance in roleplay contexts.

- Legal and Ethical Considerations in AI: The use of miquliz, as publicized by

@reinman_, was met with legal and ethical concerns from@mrdragonfox, who cautioned against utilizing unlicensed AI models due to potential legal repercussions. Project Atlantis was mentioned as a platform hosting various models including miquliz, although the licensing issues were flagged as a potential problem.

Links mentioned:

- GGUF quantizations overview: GGUF quantizations overview. GitHub Gist: instantly share code, notes, and snippets.

- llama.cpp/examples/quantize/quantize.cpp at 21b08674331e1ea1b599f17c5ca91f0ed173be31 · ggerganov/llama.cpp: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- Project Atlantis - AI Sandbox: no description found

TheBloke ▷ #coding (9 messages🔥):

- New Model Competing with the Big Boys: User

@ajibawa_2023shared their state-of-the-art Llama-2 Fine-tune Model named OpenHermes-2.5-Code-290k-13B, claiming superior performance over an existing model by teknium. It leverages the combined datasets OpenHermes-2.5 and Code-290k-ShareGPT, and ranks 12th under Junior-v2 in CanAiCode.

- Training Details on a Large-Scale Fine-Tuned Model: Another model, Code-290k-6.7B-Instruct, was trained on a varied code dataset, took 85 hours for 3 epochs using 4 x A100 80GB, and ranks well under Senior category in CanAiCode. Credits were given to Bartowski for quantized models like Exllama v2.

- Seeking the Smallest Model for API Testing: In pursuit of a lightweight model for API testing,

@gamingdaveukinquired about the smallest possible model that can run on Text Gen Web UI with a limited VRAM laptop. The suggested options included tiny models like tinyllama and quantized versions like gptq/exl2/gguf.

- A Celebration of Innovation in AI Modeling: Recognizing the development of new AI models,

@rawwerksgave a humorous kudos to@ajibawa_2023, joking about a hypothetical massive investment to rival Claude-3-Opus.

- Career Path Queries Looking Towards AI: User

@_jayciesought advice on what to expect from interviews in the genAI and ML space, expressing a desire to shift career paths from fullstack development towards AI and eventually pursuing grad school for research.

Links mentioned:

- ajibawa-2023/OpenHermes-2.5-Code-290k-13B · Hugging Face: no description found

- ajibawa-2023/Code-290k-6.7B-Instruct · Hugging Face: no description found

Mistral ▷ #general (412 messages🔥🔥🔥):

- Context Size Confusion Cleared: After an extensive discussion, it was clarified that Mixtral doesn't support sliding window attention, unlike Mistral, as confirmed by

i_am_domreferencing a Reddit post. - What's Next for Next?: Discourse regarding Mistral’s Next model with

i_am_domsuggesting it may be an improved version of Medium.mrdragonfoxasserts Next is a completely separate, newer model line unrelated to Medium. - Hardware Recommendations for Running Models: The user

mehdi1991_inquired about the appropriate hardware for Mistral models, with various members advising at least 24GB VRAM for large models and the feasibility of running on a range of GPUs like the RTX 3060 or 3090. - LeChat's Model Accessibility Debated: The free availability of LeChat and potential overuse of the service sparked debate, with

mrdragonfoxemphasizing the importance of not abusing the service andlerelamentioning adaptation to such misuse. - Mistral in the Market: A brief discussion on Mistal’s possible business model, with speculation that they may not release new open-source models any sooner, prompted by

.mechap’s queries on the future of Mistral AI’s open source offerings.

Links mentioned:

- mistralai/Mistral-7B-Instruct-v0.2 · Hugging Face: no description found

- Mixtral Tiny GPTQ By TheBlokeAI: Benchmarks and Detailed Analysis. Insights on Mixtral Tiny GPTQ.: LLM Card: 90.1m LLM, VRAM: 0.2GB, Context: 128K, Quantized.

- augmentoolkit/prompts at master · e-p-armstrong/augmentoolkit: Convert Compute And Books Into Instruct-Tuning Datasets - e-p-armstrong/augmentoolkit

- Mixtral: no description found

- Reddit - Dive into anything: no description found

Mistral ▷ #models (75 messages🔥🔥):

- Model Context Limit Confusion:

@fauji2464was reminded about the 32k token limit on the Mistral model, but questioned why the warning appeared when using smaller documents.@mrdragonfoxclarified that the model will ignore inputs exceeding the limit and that different tokenizers affect context size differently. - Tokenization Discrepancies Highlighted: When

@fauji2464mentioned checking token sizes with tiktoken,@mrdragonfoxpointed out that tiktoken and Mistral use different tokenization methods and vocabulary sizes, elucidating why issues with context size might occur. - Inference Output Not Limited by Context Window: The conversation between

@fauji2464and@_._pandora_._led to an explanation of how LLMs consider context and the potential for outputs even if inputs exceed the 32k token maximum. - Visualization Aid for Understanding LLMs:

@mrdragonfoxprovided a link to a visualization to help understand how transformer models work, indicating that what was discussed applies to all transformers, not just Mistral. - Enterprise Usage of Mistral Models Explored:

@orogor.inquired about deploying Mistral's paid engine on their own clusters instead of Azure, to which@mrdragonfoxsuggested contacting enterprise sales to discuss options for on-premises deployment and licensing.

Links mentioned:

LLM Visualization: no description found

Mistral ▷ #ref-implem (1 messages):

- Request for Mistral Training Clarification:

@casper_aipointed out the community's struggles with Mistral model training, referencing past discussions that suggest an implementation difference in the Hugging Face Trainer. They requested a minimalistic reference implementation to aid in producing optimal results.

Mistral ▷ #showcase (7 messages):

- Discord Bot Flex by @jakobdylanc: @jakobdylanc promotes their Discord bot that supports over 100 LLMs, offers collaborative prompting, vision support, and features streamed responses in just 200 lines of code. Find the bot on GitHub with the green embed signal indicating the end of a response: GitHub - discord-llm-chatbot.

- Color-coded Chat Bot Responses: In response to @_dampf's query, @jakobdylanc explains that their bot uses embeds in Discord, which turn green to indicate a completed response and allow for a higher character limit.

- Just the Facts, No Frills for the Bot: Addressing @_dampf's suggestions, @jakobdylanc mentions their bot is a LLM prompting tool and they are not interested in it ignoring messages or introducing artificial delay, though they are open to exploring koboldcpp support.

- Model Formats Fight for Flexibility: @fergusfettes shares their 'looming' experiment comparing mistral-large with other LLMs, noting Mistral's good performance but issues with formatting. They advocate for a model's ability to understand both completion and chat modes and share a YouTube video illustrating the method.

- Chatbots Descend on Telegram: @edmund5 launches three new Telegram bots powered by mistral-small-latest: Christine AI for mindfulness, Anna AI for joyful interactions, and Pia AI for elegant conversations. The bots offer varying themes to users, available on Telegram: Christine AI, Anna AI, and Pia AI.

Links mentioned:

- Multiloom Demo: Fieldshifting Nightshade: Demonstrating a loom for integrating LLM outputs into one coherent document by fieldshifting a research paper from computer science into sociology.Results vi...

- GitHub - jakobdylanc/discord-llm-chatbot: Supports 100+ LLMs • Collaborative prompting • Vision support • Streamed responses • 200 lines of code 🔥: Supports 100+ LLMs • Collaborative prompting • Vision support • Streamed responses • 200 lines of code 🔥 - jakobdylanc/discord-llm-chatbot

- Christine AI 🧘♀️: Your serene companion for mindfulness and calm, anytime, anywhere.

- Anna AI 👱♀️: Your bright and engaging friend ready to chat, learn, and play 24/7.

- Pia AI 👸: Your royal confidante. Elegant conversations and wise counsel await you, 24/7.

Mistral ▷ #random (24 messages🔥):

- Alextreebeard Unveils K8S Package for AI:

@alextreebeardhas open-sourced a Kubeflow Terraform Module for Kubernetes, aimed at simplifying the setup of AI tools on k8s and is looking for user feedback. The package includes functionality for running Jupyter in Kubernetes. - Introducing Claude 3 Model Family:

@benjoyo.shared news about Anthropic's launch of the Claude 3 model family, featuring three models with increasing levels of capability: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus, and noted the impressive capabilities and adherence of the models. - Open Weights as a Key Differentiator: Amidst the discussion of new models from competitors,

@benjoyo.hopes that Mistral retains open weights as a competitive advantage, even as other platforms like Anthropic introduce advanced and prompt-adherent models. - Cost Comparison on AI Models: Comparing the costs of new models,

@mrdragonfoxhighlighted that Opus model is priced at $15 per megatoken for input and $75 per megatoken for output, prompting a wider discussion on price justification and the benefits of having different model variants. - Advanced Use Cases with Claude 3 Alpha:

@benjoyo.pointed to the alpha support for function calling with Claude 3, which allows the model to interact with external tools, expanding its capabilities beyond initial training. This feature promises to facilitate a wider variety of tasks, although it's still in early alpha stages.

Links mentioned:

- Introducing the next generation of Claude: Today, we're announcing the Claude 3 model family, which sets new industry benchmarks across a wide range of cognitive tasks. The family includes three state-of-the-art models in ascending order ...

- Functions & external tools: Although formal support is still in the works, Claude has the capability to interact with external client-side tools and functions in order to expand its capabilities beyond what it was initially trai...

- GitHub - treebeardtech/terraform-helm-kubeflow: Kubeflow Terraform Modules - run Jupyter in Kubernetes 🪐: Kubeflow Terraform Modules - run Jupyter in Kubernetes 🪐 - treebeardtech/terraform-helm-kubeflow

Mistral ▷ #la-plateforme (32 messages🔥):

- JSON Response Format Troubles:

@gbourdinreported issues with the new JSON response format, failing multiple times during use. After being advised by@proffessorblueand checking the Mistral documentation, they solved the problem attributing it to their own oversight.

- Pricing Enquiry Hits a Wall:

@jackie_chen43had difficulty finding pricing information post-signup, commenting on the platform's development stage.@mrdragonfoxprovided a direct link to pricing and noted the admirable effort given the small team size compared to larger organizations like OpenAI.

- Sentiment Analysis Disparities Noted:

@krangbaeexperienced that the Mistral 8x7b model outperformed the Mistral Small model for sentiment analysis, pointing out this disparity when it came to performance.

- Handling 500 Errors on API Calls:

@georgyturevichencountered a 500 error response from the API, which@lerelaaddressed, asking for more details. The error was tracked down to themax_tokensparameter being set tonull.

- Table Data in Prompts Provoke JSON Confusion:

@samseumfaced JSON parsing errors while trying to insert table data into API prompts and received support on handling JSON escaping from@lerela, with additional debugging interaction from@_._pandora_._.

Links mentioned:

- Pricing and rate limits | Mistral AI Large Language Models: Pay-as-you-go

- Client code | Mistral AI Large Language Models: We provide client codes in both Python and Javascript.

Mistral ▷ #office-hour (387 messages🔥🔥):

- Office Hour Session on Model Evaluation:

@sophiamyanginitiated the office hour, inviting discussions on how individuals evaluate models and benchmarks, encouraging the community to share their approaches or ask questions. - Inquiries about Mistral's Future and Open Source Releases:

@potatooffinquired about the future plans for Mistral's open source releases, while@nicolas_mistraldirected to a Twitter post by@arthurmenschas the best source of information for the most recent updates. - Request for Model Training Code and Expansion: Users expressed interest in official Mistral training code (

@kalomaze) and expanding the 7B base model (@rtyax), while@sophiamyangacknowledged the suggestions without providing certainty on future implementation. - Performance Discussions and Release Plans:

@yesiamkurtasked about the performance differences between Mixtral 8x7b and Mistral’s large models, to which@sophiamyangprovided a link to their benchmarks, indicating Mistral Large is superior, but did not disclose any release plans. - Evaluating Models with Real Life Data and Manual Checks: Participants discussed the real-life use of evaluation datasets with

@kalomazementioning MMLU as a representative benchmark;@netrvediscussed manual evaluation using Salesforce Mistral Embedding Model;@_kquantadvised on the importance of challenging models in evaluations.

Links mentioned:

- Becario AI asistente virtual on demand.: no description found

- Phospho: Open Source text analytics for LLM apps: no description found

- Endpoints and benchmarks | Mistral AI Large Language Models: We provide five different API endpoints to serve our generative models with different price/performance tradeoffs and one embedding endpoint for our embedding model.

- Large Language Models and the Multiverse: no description found

- GitHub - wyona/katie-backend: Katie Backend: Katie Backend. Contribute to wyona/katie-backend development by creating an account on GitHub.

Mistral ▷ #le-chat (151 messages🔥🔥):

- Troubleshooting API Access: User

@batlzencountered a "Method Not Allowed" 405 error when using the chat completions endpoint. After some discussion and suggestions from@mrdragonfox, it was resolved that@batlzneeded to switch to using the "POST" method. - Le Chat Model Confusion:

@godefvreported that Le Chat identified itself with GPT-like attributes, highlighting a potential training data issue or hallucinations, as models lack introspection.@mrdragonfoxand others discussed the matter, concluding self-knowledge must be in the dataset to avoid such errors. - Daily Cap Queries and Limitations: Users like

@cm1987expressed concerns about hitting usage limits, with@mrdragonfoxreminding them that it's part of using a beta product, and limits are to be expected. - Web UI Response Display Issues:

@steelpotato1and@venkybeastreported a display bug where response text appears above the initial prompt before jumping below it in the web UI. - Authentication and API Key Anomalies: User

@foxalabs_32486experienced difficulties accessing their account with credentials apparently not recognized. It was later revealed that an email confusion with the auth manager was the issue, which was resolved with assistance from other users.

Links mentioned:

Client code | Mistral AI Large Language Models: We provide client codes in both Python and Javascript.

Mistral ▷ #failed-prompts (12 messages🔥):

- Mistral's Mathematical Mishap:

@awild_techreported that Mistral Large failed to correctly answer what the floor of 0.999 repeating is, with the model outputting 0 instead of the expected 1. This question seems to stump multiple models across the board. - Inconsistent Answers in Multiple Languages: Despite being initially correct,

@awild_techpointed out that when they repeated the question in French, Mistral Large fluctuated between correct and incorrect responses. - Random Luck or Flawed Understanding?:

@_._pandora_._suggested the possibility that Mistral Large getting the floor question right on the first attempt might have been due to luck rather than a robust understanding, as subsequent answers were wrong. - Detailed Explanation by Mistral Strikes Out:

@i_am_domshared a detailed explanation from Mistral Large on the floor of 0.999 repeating, which ultimately concluded incorrectly that the floor is 0. - Mistral Misquotes "System" Role: In an attempt to retrieve the previous message by the "system" role,

@i_am_domnoted that Mistral Large provided various inaccurate versions instead of the expected verbatim quote.

Perplexity AI ▷ #announcements (2 messages):

- Playground AI's New V2.5 Model Unveiled:

@ok.alexannounced that Perplexity Pro users now have access to Playground AI's new V2.5 as the default model for generating a variety of images. Further details about the Perplexity and Playground collaboration can be found in this blog post.

- Introducing Claude 3 for Perplexity Pro Users:

@ok.alexrevealed the release of Claude 3 for<a:pro:1138537257024884847>users, which replaces Claude 2.1 and provides 5 daily queries with the advanced Claude 3 Opus model. Additional daily queries will utilize Claude 3 Sonnet, a faster model on par with GPT-4.

Links mentioned:

no title found: no description found

Perplexity AI ▷ #general (831 messages🔥🔥🔥):

- Claude 3 Opus Usage a Hot Topic: Users like

@stevenmcmackin,@naivecoder786, and@dailyfocus_dailyexpressed concerns about the limit of 5 queries per day for Claude 3 Opus on Perplexity AI Pro, finding the restriction too meager for their usage needs. Some wondered what happens after the limit is reached, while others suggested improving the quota or the usability of Claude 3 Sonnet. - Discussions on Claude 3's Effectiveness: Members such as

@dailyfocus_daily,@akumaenjeru,@detectivejohnkimble_51308engaged in discussions on Claude 3's capabilities, with mixed opinions on whether Claude or GPT-4 is superior for tasks like coding and problem-solving. Some users have personally found Claude 3 to outperform GPT-4, especially in coding. - Claude's Integration and Model Clarity: Questions were raised by users like

@cereal,@heathenist, and@eli_pcon how to differentiate between Opus and Sonnet, and on the transparency of model context size and operations. There seems to be a desire for more clarity on when and how different models are deployed. - Perplexity's AI-powered Features and Plans: Users

@fluxkraken,@cereal, and@joed8.discussed workarounds and techniques for image generation and search functionality, shedding light on how to optimize Perplexity's AI for various tasks. Speculation about Perplexity's future moves towards dedicated models was also shared by@fluxkraken. - Promotional Deals and Model Access: Users talked about promotional offers like the Rabbit R1 deal (

@jawnze,@fluxkraken,@drewgs06) and how it ties with Perplexity AI Pro subscriptions. Some discussed Claude 3 not being available on the iOS app yet, while others enhanced the conversation with how to access various models.

Links mentioned:

- Chat with Open Large Language Models: no description found

- Oliver Twist GIF - Oliver Twist - Discover & Share GIFs: Click to view the GIF

- YouTube Summary with ChatGPT & Claude | Glasp: YouTube Summary with ChatGPT & Claude is a free Chrome Extension that lets you quickly access the summary of both YouTube videos and web articles you're consuming.

- Claude-3-Opus - Poe: Anthropic’s most intelligent model, which can handle complex analysis, longer tasks with multiple steps, and higher-order math and coding tasks. Context window has been shortened to optimize for speed...

- 📖[PDF] An Introduction to Theories of Personality by Robert B. Ewen | Perlego: Start reading 📖 An Introduction to Theories of Personality online and get access to an unlimited library of academic and non-fiction books on Perlego.

- David Leonhardt book talk: Ours Was the Shining Future, The Story of the American Dream: Join Professor Jeff Colgan in conversation with senior New York Times writer David Leonhardt as they discuss David’s new book, which examines the past centur...

- CLAUDE 3 Just SHOCKED The ENTIRE INDUSTRY! (GPT-4 +Gemini BEATEN) AI AGENTS + FULL Breakdown: ✉️ Join My Weekly Newsletter - https://mailchi.mp/6cff54ad7e2e/theaigrid🐤 Follow Me on Twitter https://twitter.com/TheAiGrid🌐 Checkout My website - https:/...

- SmartGPT: Major Benchmark Broken - 89.0% on MMLU + Exam's Many Errors: Has GPT4, using a SmartGPT system, broken a major benchmark, the MMLU, in more ways than one? 89.0% is an unofficial record, but do we urgently need a new, a...

- Puppet Red GIF - Puppet Red Ball - Discover & Share GIFs: Click to view the GIF

- Rabbit R1 and Perplexity AI dance into the future: Rabbit R1 Perplexity AI usage is explained in this article. In the ever-evolving landscape of technology, the collaboration between the

- 必应: no description found

Perplexity AI ▷ #sharing (18 messages🔥):

- Exploring the Antarctic:

@christianbugsshared a link to information about Antarctica using Perplexity AI's search functionality. The link is anticipated to contain details about the continent's geography, climate, and other characteristics. - Announcement of Claude 3:

@dailyfocus_dailyand@_paradroidprovided links to discussions around the newly announced Claude 3 via Perplexity AI.@ethan0810.also shared a link about Anthropic launching Claude. - Vultr Promo Hunt:

@mares1317sought promotional codes for Vultr, a cloud hosting service, and used Perplexity AI's search feature to possibly find some deals. - Understanding US-Jordan Relations:

@_paradroidused Perplexity AI to search into the relationship between the United States and Jordan, indicating an inquiry into their bilateral interactions. - History Unveiled:

@whimsical_beetle_50663appeared to look deeper into history with a link provided from Perplexity AI's search, though the specific historical topic is not mentioned.

Perplexity AI ▷ #pplx-api (18 messages🔥):

- Patience is a Virtue for API Access:

@_samratinquired about how to follow up on getting access to citations in the API after submitting their request.@icelavamanand@brknclock1215responded suggesting that the usual response time is 1-2 weeks or longer and to exercise patience. - Improvement Noted with Configuration Tweaks:

@brknclock1215expressed satisfaction with recent results from tweaking configurations, mentioning a preference for keeping the temperature below 0.5 when using system prompts for instruction following. - Temperature Factor in Language Models: Users

@brknclock1215,@heathenist, and@thedigitalcatengaged in a discussion about the role of temperature in natural language generation. They noted that lower temperatures don't always equate to more reliable results, indicating the complexity of linguistics and language models. - Query on Quota Increases Across Different Models:

@stijntratsaert_01927asked whether previously assigned quota increases on one platform would carry over to another, specifically from pplx70bonline to sonar medium online. - Concerns About Model Censorship Via API:

@randomguy0660raised a question about whether the models accessible through the API are subject to censorship.

OpenAI ▷ #ai-discussions (314 messages🔥🔥):

- Translation AI Enthusiasts Seek Options:

@jackal101022inquired about alternative translation AI services, expressing dissatisfaction with the robotic output from Google Translate and the subsequent edits needed fromGemini or ChatGPT.@luguirecommended using GPT-3.5, praising its quick and accurate performance.

- Thinking Mathematically with Classic Problems: After

@kiddurequested a mathematical problem to enhance logical thinking,@mrsyntaxsuggested the "traveling salesman" problem for its mix of optimization and efficiency challenges.

- Anthropic's Claude 3 Sparks Interest: Discussion around the new Claude 3 AI from Anthropic is on the rise, with

@glamrat,@odiseo3468, and others chiming in on its impressive capabilities. Users are comparing Claude 3 against ChatGPT, particularly for to Oups model which@odiseo3468found to be exceptionally good at graduate-level reasoning, though@cook_sleepreported weaker logic and image recognition compared to GPT-4, especially in Chinese.

- OpenAI's Model Versions and Limits Scrutinized: The conversation turned technical as users like

@johnnyrobertand@pteromaplediscussed the limitations that they've experienced with GPT-4, particularly around the token limits for inputs and contexts in various versions of the model.

- Comparing Chatbots for Roleplay and Storytelling: Multiple users, including

@webheadand@cook_sleep, shared their experiences with Claude 3's superior performance in roleplay and creative writing although noting some limitations in other areas. This has led to suggestions that OpenAI should push to release GPT-5 as competition intensifies with models like Gemini and Claude showcasing advanced language expression capabilities.

Links mentioned:

- Anthropic says its latest AI bot can beat Gemini and ChatGPT: Claude 3 is here with some big improvements.

- New models and developer products announced at DevDay: GPT-4 Turbo with 128K context and lower prices, the new Assistants API, GPT-4 Turbo with Vision, DALL·E 3 API, and more.

OpenAI ▷ #gpt-4-discussions (11 messages🔥):

- Seeking Practical GPT Use Cases:

@flo.0001is in search of channels or resources for practical applications of GPT or the Assistant API in business and productivity, and@mrsyntaxsuggested checking out a specific channel with the ID<#1171489862164168774>. - Looking for Business Implementation Insights: Despite the channel recommendation,

@flo.0001mentioned that they are overwhelmed and are looking more for direct examples of GPT implementation in business and productivity systems. - Chatbot Browser Issues Raised:

@snoopdogui.reported the ChatGPT browser version was down for them, and@solbusprovided a link to a previously shared message potentially addressing the issue. - Errors in Saving GPTs:

@bluenail65expressed confusion over receiving an error message about Saving GPTs despite not having any files uploaded. - Perceived Performance Deterioration of GPT-4:

@watcherkkand@bluenail65both noted that they feel GPT-4's performance has declined since its release, experiencing slower response times, while@cheekatiobserved that the model appears more restrictive in referencing materials.

OpenAI ▷ #prompt-engineering (60 messages🔥🔥):

- Refusal to Accept Internet Capability:

@jungle_joexpressed confusion about an AI's persistent denial of its ability to access the internet, despite being told it can search the internet for real-time information in the system prompt. - Seeking Prompt-Engineering Tips:

@thetwenty8thffsrequested suggestions for improving a prompt aimed at assisting customers with unrecognized credit card charges to make it more clear and concise. - Custom GPT Reluctance:

@ray_themad_nomadreported issues with getting direct refusals from a Custom GPT model, with regenerations and prompt adjustments failing to receive cooperative responses, and wondered if others are experiencing similar difficulties. - Visual Prompts for AI Creativity:

@ikereinezshared success in teaching an AI to generate complex visuals from real photos, using detailed and imaginative descriptions of futuristic cityscapes. - AI's Selective Response Frustrations: Several users, including

@darthgustav., discussed difficulties and debated potential internal mechanisms of AI models, with focus on prompt engineering, visualization errors, and AI transparency, hinting at possible limitations in the Vision system used in conjunction with OpenAI GPT models.

OpenAI ▷ #api-discussions (60 messages🔥🔥):

- Prompt-engineering Fundamentals:

@dantekavalastruggled with style consistency in prompts when transitioning from ChatGPT to the GPT 3.5 API, noting a lack of adaptability despite several attempts.@madame_architectdirected@dantekavalatowards the developer's corner for more focused aid.

- User Experience Challenges with the GPT API: In a sequence of messages,

@darthgustav.and@eskcantadiscussed challenges in working with the visual and mathematical capabilities of GPT, indicating a need for improvement in how vision models interpret and handle prompts.

- In Search of Translation Prompt Excellence:

@kronos97__16076asked for suggestions on designing Chinese-English translation prompts, with community members like@neighbor8103suggesting the use of external tools for verification of machine translation accuracy.

- Exploring Vision Model Limitations: Users

@aminelg,@eskcanta, and@madame_architectengaged in investigative discussions about the Vision model's interpretation of stimuli, the possibility of teaching models within a conversation, and the fun challenges in prompt engineering for vision-related tasks.

- Frustration with Custom Models:

@ray_themad_nomadexpressed dissatisfaction with the response quality from Custom GPTs, receiving frequent refusals despite different attempts at prompting, suggesting a setback in user interaction with tailored models.

Nous Research AI ▷ #off-topic (6 messages):

- Request for Academic Paper:

@ben.comseeks a link to an academic paper, noting inability to view it as they are not a Twitter user. - OpenAI's Supposed Downfall a Hot Topic:

@leontellocomments on the abundance of posts on AI Twitter claiming that OpenAI has been surpassed. - Confusion Over AI Community Reactions:

@leontellouses a custom emoji to express confusion or skepticism about the discussions around AI performance. - Claude 3 Model Claims Spotlight:

@pradeep1148shares a YouTube video titled "Introducing Claude 3 LLM which surpasses GPT-4," highlighting the introduction of the Claude 3 model family. - Apple Test as a Benchmark:

@mautonomysuggests that the apple test is a reliable indicator, presumably in the context of evaluating AI, responding to the discussion about AI superiority.

Links mentioned:

Introducing Claude 3 LLM which surpasses GPT-4: Today, we're look at the Claude 3 model family, which sets new industry benchmarks across a wide range of cognitive tasks. The family includes three state-of...

Nous Research AI ▷ #interesting-links (42 messages🔥):

- Unveiling Prompt Engineering Tricks: User

@everyoneisgrossshared a comprehensive guide to help enhance the output of Large Language Models (LLMs) by prompt engineering. The guide contains methods to improve safety, determinism, and structured output.

- Massive Multi-Model Evaluation:

@mautonomyrevealed a Reddit post comparing 17 new AI models, raising the total to 64 ranked models. The post showcases models likedolphin-2.8-experiment26-7b-previewandMidnight-Rose-70B-v2.0.3-GGUF.

- Skepticism about AI Parameter and Brain Function Claims:

@ldjexpressed criticism towards certain speculations about AI parameters and brain functions, suggesting that they are based on flawed assumptions.

- End of Giant AI Models Era:

@ldjreferenced articles referring to statements from Sam Altman about future AI models becoming more parameter-efficient and progress coming from architectural innovations rather than scale. The discussions emphasize that OpenAI believes the era of simply making larger models has peaked source 1, source 2.

- Introducing 'moondream2' for Edge Devices: User

@tsunemotoshared an announcement from@vikhyatkabout the release of moondream2, a small vision language model that requires less than 5GB to run Tweet Link. It's designed for efficiency on edge devices with 1.8B parameters.

Links mentioned:

- Tweet from vik (@vikhyatk): Releasing moondream2 - a small, open-source, vision language model designed to run efficiently on edge devices. Clocking in at 1.8B parameters, moondream requires less than 5GB of memory to run in 16 ...