[AINews] SciCode: HumanEval gets a STEM PhD upgrade

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

PhD-level benchmarks are all you need.

AI News for 7/15/2024-7/16/2024.

We checked 7 subreddits, 384 Twitters and 29 Discords (466 channels, and 2228 messages) for you.

Estimated reading time saved (at 200wpm): 248 minutes. You can now tag @smol_ai for AINews discussions!

Lots of small updates here and there - HuggingFace's SmolLM replicated MobileLLM (our coverage just a week ago), Yi Tay wrote up the Death of BERT (our podcast 2 weeks ago), and 1 square block of San Francisco raised/sold for well over $30m in deals across Exa, SFCompute, and Brev (congrats friends!).

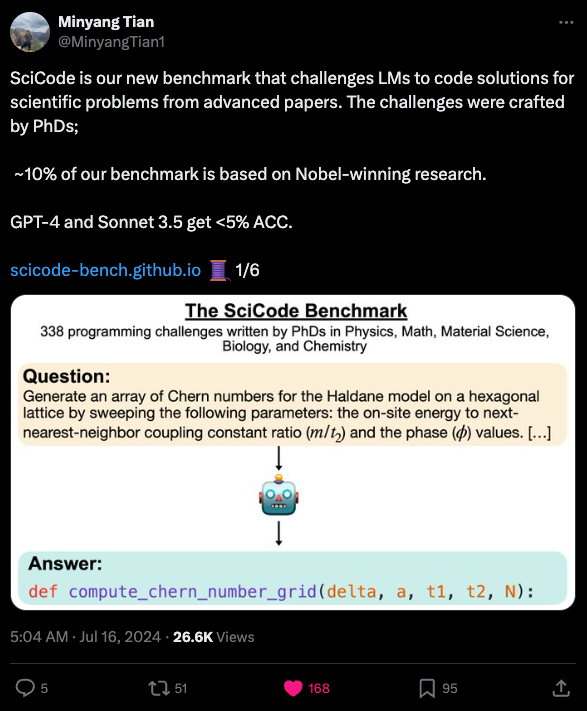

However our technical highlight of today is SciCode, which challenges LMs to code solutions for scientific problems from advanced papers. The challenges were crafted by PhDs (~10% is based on Nobel-winning research) and the two leading LLMs, GPT-4 and Sonnet 3.5, score <5% on this new benchmark.

Other than HumanEval and MBPP, the next claim to a top coding benchmark has been SWEBench (more info in our coverage), but it is expensive to run and is more of an integration test of agentic systems than a test of pure coding ability/world knowledge. SciCode provides a nice extension of the very popular HumanEval approach that is easy/cheap to run, yet is still remarkably difficult for SOTA LLMs, providing a nice gradient to climb.

Nothing lasts forever (SOTA SWEbench went from 2% to 40% in 6 months) but new and immediately applicable benchmark work is very nice when done well.
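For readers who have not run a HumanEval-style eval, here is a minimal sketch of the general idea: execute the model-written function, then run hidden assertions against it. The toy problem, the `passes` and `pass_rate` helpers, and the `check(candidate)` convention are illustrative assumptions, not SciCode's actual harness, and a real harness would sandbox the `exec` calls.

```python
def passes(candidate_src: str, test_src: str, entry_point: str) -> bool:
    """Return True if the candidate code passes every assertion in test_src."""
    namespace: dict = {}
    try:
        exec(candidate_src, namespace)  # defines the model-written function
        exec(test_src, namespace)       # defines check(candidate)
        namespace["check"](namespace[entry_point])
        return True
    except Exception:
        # Any assertion failure, runtime error, or syntax error counts as a miss.
        return False


# Hypothetical toy problem (not taken from SciCode or HumanEval).
TEST = """
def check(candidate):
    assert abs(candidate(2.0, 3.0) - 9.0) < 1e-9  # 0.5 * 2 * 3**2
    assert candidate(0.0, 5.0) == 0.0
"""
GOOD = "def kinetic_energy(m, v):\n    return 0.5 * m * v ** 2\n"
BAD = "def kinetic_energy(m, v):\n    return m * v\n"


def pass_rate(candidates: list[str]) -> float:
    """Fraction of candidate solutions that pass the hidden tests."""
    results = [passes(src, TEST, "kinetic_energy") for src in candidates]
    return sum(results) / len(results)


print(pass_rate([GOOD, BAD]))
```

The appeal of this style is that scoring is purely mechanical: no judge model, no agent scaffolding, just code plus assertions.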

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments

- Anthropic API updates: @alexalbert__ noted Anthropic doubled the max output token limit for Claude 3.5 Sonnet from 4096 to 8192 in the Anthropic API. To opt in, add the header "anthropic-beta": "max-tokens-3-5-sonnet-2024-07-15" to API calls.

- Effective Claude Sonnet 3.5 Coding System Prompt: @rohanpaul_ai shared an effective Claude Sonnet 3.5 Coding System Prompt with explanations of the guided chain-of-thought steps: Code Review, Planning, Output Security Review.

- Q-GaLore enables training 7B models on 16GB GPUs: @rohanpaul_ai noted Q-GaLore incorporates low precision training with low-rank gradients and lazy layer-wise subspace exploration to enable training LLaMA-7B from scratch on a single 16GB NVIDIA RTX 4060 Ti, though it is mostly slower.

- Mosaic compiler generates efficient H100 code: @tri_dao highlighted that the Mosaic compiler, originally for TPU, can generate very efficient H100 code, showing convergence of AI accelerators.

- Dolphin 2.9.3-Yi-1.5-34B-32k-GGUF model on Hugging Face: @01AI_Yi gave kudos to @bartowski1182 and @cognitivecompai for the remarkable Yi fine-tune model on Hugging Face with over 111k downloads last month.
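For the Anthropic output-limit item above, a minimal sketch of what adding the beta header might look like. Only the `anthropic-beta` header value and the 8192 limit come from the tweet. The endpoint URL, the `anthropic-version` value, the model ID, and the `build_request` helper are assumptions for illustration, so check them against the official API reference. The request is built but not sent.

```python
import json
import urllib.request

# Assumed Messages API endpoint (not stated in the source).
API_URL = "https://api.anthropic.com/v1/messages"


def build_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a request asking for up to 8192 output tokens."""
    body = {
        "model": "claude-3-5-sonnet-20240620",  # assumed model ID
        "max_tokens": 8192,                     # new ceiling per the tweet
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",  # assumed API version string
        # Header quoted in the source; opts in to the larger output limit.
        "anthropic-beta": "max-tokens-3-5-sonnet-2024-07-15",
        "content-type": "application/json",
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


request = build_request("YOUR_API_KEY", "Write a very long report.")
# To actually send it: urllib.request.urlopen(request)
```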

AI Model Performance and Benchmarking

- Llama 3 model performance: @awnihannun compared ChatGPT (free) vs MLX LM with Gemma 2 9B on M2 Ultra, showing comparable performance. @teortaxesTex noted Llama 3 0-shotting 90% on the MATH dataset.

- Synthetic data limitations: @abacaj argued synthetic data is dumb and unlikely to result in better models, questioning the realism of synthetic instructions. @Teknium1 countered that synthetic data takes many forms and sweeping claims are unwise.

- Evaluating LLMs with LLMs: @percyliang highlighted the power of using LLMs to generate inputs and evaluate outputs of other LLMs, as in AlpacaEval, while cautioning about over-reliance on automatic evals.

- LLM-as-a-judge techniques: @cwolferesearch provided an overview of recent research on using LLMs to evaluate the output of other LLMs, including early research, more formal analysis revealing biases, and specialized evaluators.

AI Safety and Regulation

- FTC sued Meta over acquiring VR companies: @ID_AA_Carmack noted he was dragged into two court cases where the FTC sued Meta over acquiring tiny VR companies and that big tech has significantly ramped down acquisitions across the board, which is bad for startups as acquisition exits are being curtailed.

- Killing open source AI may politicize AI safety: @jeremyphoward warned that killing open source AI is likely to result in politicizing AI safety and that open-source is the solution.

- LLMs are not intelligent, just memorization machines: @svpino argued that LLMs are incredibly powerful memorization machines that are impressive but not intelligent. They can memorize large amounts of data and generalize a bit from it, but can't adapt to new problems, synthesize novel solutions, keep up with the world changing, or reason.

AI Applications and Demos

- AI and Robotics weekly breakdown: @adcock_brett provided a breakdown of the most important AI and Robotics research and developments from the past week.

- Agentic RAG and multi-agent architecture concepts: @jerryjliu0 shared @nicolaygerold's thread and diagrams on agentic RAG and multi-agent architecture concepts discussed during their @aiDotEngineer talk.

- MLX LM with Gemma 2 9B on M2 Ultra vs ChatGPT: @awnihannun compared ChatGPT (free) vs MLX LM with Gemma 2 9B on M2 Ultra.

- Odyssey AI video generation platform: @adcock_brett noted Odyssey emerged from stealth with a 'Hollywood-grade' AI video generation platform developing four specialized AI video models.

Memes and Humor

- 9.11 is bigger than 9.9: @goodside joked that "9.11 is bigger than 9.9" in a humorous tweet, with follow-up variations and explanations in subsequent tweets.

- Perplexity Office: @AravSrinivas shared a humorous image titled "New Perplexity Office!"

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

Theme 1. New Frontiers

- [/r/singularity] A different source briefed on the matter said OpenAI has tested AI internally that scored over 90% on a MATH dataset (Score: 206, Comments: 59): OpenAI's AI reportedly scores over 90% on MATH dataset. An unnamed source claims that OpenAI has internally tested an AI system capable of achieving over 90% accuracy on a MATH dataset, suggesting significant advancements in AI's mathematical problem-solving abilities. This development, if confirmed, could have far-reaching implications for AI's potential in tackling complex mathematical challenges and its application in various fields requiring advanced mathematical reasoning.

- [/r/singularity] A new quantum computer has shattered the world record set by Google’s Sycamore machine. The new 56-qubit H2-1 computer smashed ‘quantum supremacy’ record by 100 fold. (Score: 365, Comments: 110): Quantinuum's 56-qubit H2-1 quantum computer has reportedly surpassed Google's Sycamore machine in the quantum supremacy benchmark by a factor of 100. This achievement marks a significant leap in quantum computing capabilities, potentially accelerating the field's progress towards practical applications. The news was shared on X (formerly Twitter) by @dr_singularity, though further details and verification of this claim are yet to be provided.

Theme 2. Advanced Stable Diffusion Techniques for Detailed Image Generation

- [/r/StableDiffusion] Tile controlnet + Tiled diffusion = very realistic upscaler workflow (Score: 517, Comments: 109): Tile controlnet combined with Tiled diffusion creates a highly effective workflow for realistic image upscaling. This technique allows for upscaling images to 4K or 8K resolution while maintaining fine details and textures, surpassing the quality of traditional AI upscalers. The process involves using controlnet to generate a high-resolution tile pattern, which is then used as a guide for tiled diffusion, resulting in a seamless and detailed final image.

- [/r/StableDiffusion] Creating detailed worlds with SD is still my favorite thing to do! (Score: 357, Comments: 49): Creating detailed fantasy worlds using Stable Diffusion remains a top choice for creative expression. The ability to generate intricate, imaginative landscapes and environments showcases the power of AI in visual art creation. This technique allows artists and enthusiasts to bring their fantastical visions to life with remarkable detail and depth.

- NeededMonster details their 4-stage workflow for creating detailed fantasy worlds, including initial prompting, inpainting/outpainting, upscaling, and refining details. The process can take 1.5 hours per image.

- Commenters laud the images as "book cover quality" and "the best I've seen yet", with some suggesting the artist's skills are "worth hiring". NeededMonster expresses interest in finding work creating such images.

Theme 3. Fine-tuning Llama 3 with Unsloth and Ollama

- [/r/LocalLLaMA] Step-By-Step Tutorial: How to Fine-tune Llama 3 (8B) with Unsloth + Google Colab & deploy it to Ollama (Score: 219, Comments: 41): Unsloth-Powered Llama 3 Finetuning Tutorial. This tutorial demonstrates how to finetune Llama-3 (8B) using Unsloth and deploy it to Ollama for local use. The process involves using Google Colab for free GPU access, finetuning on the Alpaca dataset, and exporting the model to Ollama with automatic `Modelfile` creation. Key features include 2x faster finetuning, 70% less memory usage, and support for multi-turn conversations through Unsloth's `conversation_extension` parameter.

AI Discord Recap

A summary of Summaries of Summaries

1. Mamba Models Make Waves

- Codestral Mamba Slithers into Spotlight: Mistral AI released Codestral Mamba, a 7B coding model using Mamba2 architecture instead of transformers, offering linear time inference and infinite sequence handling capabilities.

- The model, available under Apache 2.0 license, aims to boost code productivity. Community discussions highlighted its potential impact on LLM architectures, with some noting it's not yet supported in popular frameworks like `llama.cpp`.

- Mathstral Multiplies STEM Strengths: Alongside Codestral Mamba, Mistral AI introduced Mathstral, a 7B model fine-tuned for STEM reasoning, achieving impressive scores of 56.6% on MATH and 63.47% on MMLU benchmarks.

- Developed in collaboration with Project Numina, Mathstral exemplifies the growing trend of specialized models optimized for specific domains, potentially reshaping AI applications in scientific and technical fields.

2. Efficient LLM Architectures Evolve

- SmolLM Packs a Petite Punch: SmolLM introduced new state-of-the-art models ranging from 135M to 1.7B parameters, trained on high-quality web, code, and synthetic data, outperforming larger counterparts like MobileLLM and Phi1.5.

- These compact models highlight the growing importance of efficient, on-device LLM deployment. The release sparked discussions on balancing model size with performance, particularly for edge computing and mobile applications.

- Q-Sparse Spices Up Sparsity: Researchers introduced Q-Sparse, a technique enabling fully sparsely-activated large language models (LLMs) to achieve results comparable to dense baselines with higher efficiency.

- This advancement comes four months after the release of BitNet b1.58, which compressed LLMs to 1.58 bits. The AI community discussed how Q-Sparse could potentially reshape LLM training and inference, particularly for resource-constrained environments.

3. AI Education and Benchmarking Breakthroughs

- Karpathy's Eureka Moment in AI Education: Andrej Karpathy announced the launch of Eureka Labs, an AI-native educational platform starting with LLM101n, an undergraduate-level course on training personal AI models.

- The initiative aims to blend AI expertise with innovative teaching methods, potentially transforming how AI is taught and learned. Community reactions were largely positive, with discussions on the implications for democratizing AI education.

- SciCode Sets New Bar for LLM Evaluation: Researchers introduced SciCode, a new benchmark challenging LLMs to code solutions for scientific problems from advanced papers, including Nobel-winning research.

- Initial tests showed even advanced models like GPT-4 and Claude 3.5 Sonnet achieving less than 5% accuracy, highlighting the benchmark's difficulty. The AI community discussed its potential impact on model evaluation and the need for more rigorous, domain-specific testing.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- NVIDIA Bids Adieu to Project: The NVIDIA shutdown of a project left community members speculating about its future, triggering discussions on safekeeping work against abrupt discontinuations.

- One user recommends local storage as a contingency to project shutdowns, aiming to minimize work loss.

- RAG Under the Microscope: Skepticism surrounds RAG (Retrieval-Augmented Generation), which is reportedly easy to start but challenging and costly to fine-tune to perfection.

- A deep dive into optimization revealed the complexities involved, with members quoting 'fine-tuning the LLM, the embedder, and the reranker'.

- Hefty Price Tag on Model Tuning: Fine-tuning a language model exceeding 200GB could incur significant costs, stirring debate on the financial accessibility of advancing large models.

- Google Cloud's A2 instances emerged as a possible yet still pricey alternative, emphasizing the weight of cost in the scaling equation.

- Codestral Mamba Springs into Action: Mistral AI's Codestral Mamba breaks ground with linear time inference capabilities, offering rapid code productivity solutions.

- Mathstral accompanies the release, spotlighting its advanced reasoning in STEM fields and garnering interest for its under-the-hood prowess.

- Unsloth Pro Exclusive Club: The new Unsloth Pro version, currently under NDA, excites with its multi-GPU support and DRM system, yet is exclusively tied to a paid subscription model.

- Expectations are geared towards an effective DRM system for diversified deployments, albeit limited to premium users.

Modular (Mojo 🔥) Discord

- FlatBuffers Flexes Its Muscles Against Protobuf: The community discussed the advantages of FlatBuffers over Protobuf, highlighting FlatBuffers' performance and Apache Arrow's integration, which nevertheless uses Protobuf for data transfer.

- Despite its efficiency, FlatBuffers faces challenges with harder usage and less industry penetration, sparking a debate on choosing serialization frameworks.

- Mojo's Python Compatibility Conundrum: A proposal for a Mojo mode that disables full compatibility with Python was debated, aiming to push Mojo-centric syntax and robust error handling.

- Discussion included suggestions to adopt Rust-like monadic error handling to enhance reliability, avoiding traditional try-catch blocks.

- MAX Graph API Tutorial Hits a Snag: Learners faced hurdles with the MAX Graph API tutorial, encountering Python script discrepancies and installation errors.

- Community interventions corrected missteps such as mismatched Jupyter kernels and import issues, pointing newcomers to nightly builds for smoother experiences.

- Mistral 7B Coding Model Wows with Infinite Sequences: Mistral released a new 7B coding model leveraging Mamba2, altering the landscape of coding productivity with its sequence processing capabilities.

- The community showed enthusiasm for the model and shared resources for GUI building and ONNX conversion.

- Error Handling Heats Up Mojo Discussions: Talks surged around error handling in Mojo, with strong opinions on explicit error propagation and the implications for functions in systems programming.

- An overarching theme was the emphasis on explicit declarations to improve code maintenance and to accommodate error handling on diverse hardware like GPUs and FPGAs.

HuggingFace Discord

- Champion Math Solver Now in the Open: NuminaMath, the AI Math Olympiad champ, has been released as open source flaunting a 7B model and Apollo 11-like scores of 29/50 on Kaggle.

- A toil of intelligence, the model was fine-tuned in two distinguished stages utilizing a vast expanse of math problems and synthetic datasets, specifically tuned for GPT-4.

- Whisper Timestamped Marks Every Word: Stamping authority on speech rec, Whisper Timestamped has now tuned into the multilingual realm with a robust in-browser solution using Transformers.js.

- This whispering marvel gifts in-browser video editing wizards its full-bodied code and a magic demo for time-stamped transcriptions.

- Vocal Virtuoso: Nvidia's BigVGAN v2 Soars: Nvidia harmonized the release of BigVGAN v2, their latest Neural Vocoder that compiles Mel spectrograms into symphonies faster on its A100 stage.

- With a makeover featuring a spruced-up CUDA core, a finely tuned discriminator, and a loss that resonates, this model promises an auditory feast supporting up to 44 kHz.

- Merging Minds: Hugging Face x Keras: Keras now brandishes NLP features with cunning from its alliance with Hugging Face, pushing the envelope for developers in neural prose.

- This melding of minds is staged to bring forth a cascade of NLP functions into the Keras ecosystem, welcoming devs to a show of seamless model integrations.

- An Interface Odyssey: Hugging Face Tokens: Hugging Face has revamped their token management interface, imbuing it with novel features like token expiry dates and an elegant peek at the last four digits.

- Shielding your precious tokens, this UI uplift serves as a castellan for your token lists, offering intricate details at just a glance.

Stability.ai (Stable Diffusion) Discord

- Sayonara NSFW: AI Morph Clamps Down: AI Morph, a tool by Daily Joy Studio, triggered conversations when it stopped allowing NSFW content, showing a 'does not meet guidelines' alert.

- The community reaction was mixed, with some speculating about the impact on content creation, while others discussed alternative tools.

- Anime Artistry with Stable Diffusion: Queries emerged on how to finesse Stable Diffusion for anime art's color, attire, and facial expression accuracy, hinting at the need for fine-grained control mechanisms.

- Several users exchanged tips, with some pointing to certain GitHub repositories as potential resources.

- Detweiler's Tutorials: A Community Favorite: The community hailed Scott Detweiler's YouTube tutorials on Stable Diffusion, praising his quality insights into the tool.

- His contributions, as part of his quality assurance role at Stability.ai, were highlighted, cementing his place as a go-to source for learning.

- Homemade AI Tools Marry Local and Stable: Excitement brewed around the development of a local AI tool that cleverly integrates Stable Diffusion, becoming a preferred tool for capt.asic.

- The discussion branched into the effectiveness of combining Stable Diffusion with Local Language Model (LLM) support.

- AI's Graphical Gladiators: 4090 vs. 7900XTX: GPU performance for AI tasks sparked debates, with NVIDIA's 4090 facing off against AMD's 7900XTX on aspects like cost-efficiency and accessibility.

- Amid the specs talk, links to Google Colab projects and specs comparisons furthered the discussion.

CUDA MODE Discord

- CUDA Kernel Capers & PyTorch Prowess: Discussions in #general centred on the invocation of CUDA kernels via PyTorch in Python scripts, suggesting the use of the PyTorch profiler to untangle which ATen functions are triggered.

- A performance tug-of-war was highlighted, citing a lecture where a raw CUDA matrix multiplication kernel took 6ms, while its PyTorch counterpart breezed through in 2ms, raising questions about the efficiency of PyTorch's kernels for convolution operations in CNNs.

- Spectral Compute's SCALE Toolkit Triumph: SCALE emerged in #cool-links, praised for its ability to transpile CUDA applications for AMD GPUs, an initiative that could shift computational paradigms.

- With the future promise of broader GPU vendor support, developers were directed to SCALE's documentation for tutorials and examples, portending a potential upsurge in cross-platform GPU programming agility.

- Suno's Search for Machine Learning Virtuosos: #jobs spotlighted Suno's campaign to enlist ML engineers, skilled in torch.compile and triton, for crafting real-time audio models; familiarity with Cutlass is a bonus, not a requirement as per the Job Posting.

- Internship roles also surfaced, offering neophytes an entry point to the intricate dance of training and inferencing within Suno's ML landscape.

- Lightning Strikes and Huggingface Hugs Development: #beginner discussions showcased Lightning AI's Studios as a deftly designed hybrid cloud-browser development arena, while CUDA development aspirations for Huggingface Spaces Dev Mode hung unanswered.

- The latter hosted queries on the feasibility of CUDA endeavors within Huggingface's nurturing ecosystem, reflecting a community at the edge of experimentation and discovery.

- Karpathy's Eureka Moment with AI+Education: Andrej Karpathy announces his leap into the AI-aided education sphere with Eureka Labs; a discussion in #llmdotc touched on the intent to synergize teaching and technology for the AI-curious.

- A precursor course, LLM101n, signals the start of Eureka's mission to augment educational accessibility and engagement, setting the stage for AI to potentially reshape the learning experience.

OpenRouter (Alex Atallah) Discord

- Generous Gesture by OpenRouter: OpenRouter has graciously provided the community with free access to the Qwen 2 7B Instruct model, enhancing the arsenal of AI tools for eager engineers.

- To tap into this offering, users can visit OpenRouter and engage with the model without a subscription.

- The Gemini Debate: Free Tier Frustrations: Contrasting views emerged on Google's Gemini 1.0 available through its free tier, with strong opinions stating it falls short of OpenAI's GPT-4o benchmark.

- A member highlighted the overlooked promise in Gemini 1.5 Pro, citing it has creative prowess despite its coding quirks.

- OpenRouter Oscillation: Connectivity Woes: OpenRouter users faced erratic disruptions accessing the site and its API, sparking a series of concerns within the community.

- Official statements attributed the sporadic outages to transient routing detours and potential issues with third-party services like Cloudflare.

- Longing for Longer Contexts in Llama 3: Engineers shared their plight over the 8k context window limitation in Llama 3-70B Instruct, pondering over superior alternatives.

- Suggestions for models providing extended context abilities included Euryale and Magnum-72B, yet their consistency and cost factors were of concern.

- Necessities and Nuances of OpenRouter Access: Clarification spread amongst users regarding OpenRouter's model accessibility, noting not all models are free and some require a paid agreement.

- Despite the confusion, OpenRouter does offer a selection of APIs and models free of cost, while hosting enterprise-grade dry-run models demands underscoring specific business contracts.

Perplexity AI Discord

- Perplexity AI Tempest: Pro-level Troubles: Users voiced their struggles with Pro Subscription Support at Perplexity AI, facing activation issues across devices despite confirmation emails.

- Discussions revolved around questions like implementing model settings for separate collections and sharing excitement over a new Perplexity Office.

- Alphabet's Audacious Acquisition: $23B Sealed: Alphabet has made waves with a hefty $23 billion acquisition, stirring up the market, which you can catch in a YouTube briefing.

- Speculation and chatter ensued on how this move could usher in new strides, putting Alphabet on the radar for potent market expansion.

- Lunar Refuge Uncovered: A Cave for Future Astronauts: An accessible lunar cave found in Mare Tranquillitatis could be a boon for astronaut habitation, thanks to its protection against the moon's extremes.

- With a breadth of at least 130 feet and a more temperate environment, it stands out as a potential lunar base as per Perplexity AI's coverage.

- 7-Eleven's Experience Elevator: Customer Delight in Sight: Improving shopping delight, 7-Eleven is gearing up for a major upgrade, possibly revamping consumer interaction landscapes.

- Piquing interests, 7-Eleven invites you to explore the upgrade, perhaps setting a new benchmark in retail convenience.

- API Angst: pplx-api Perturbations: The `pplx-api` crowd discussed missing features like a `deleted_urls` equivalent and the frustration of 524 errors affecting `sonar` models.

- Workarounds suggested include setting `"stream": True` to keep connections alive amid timeouts with `llama-3-sonar-small-32k-online` and related models.
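The streaming workaround for the pplx-api 524 errors can be sketched as a request body like the one below. Only the `"stream": True` flag and the model name come from the discussion. The `build_payload` helper and the OpenAI-style `messages` layout are assumptions for illustration, and the exact request schema should be checked against the pplx-api docs.

```python
import json


def build_payload(prompt: str,
                  model: str = "llama-3-sonar-small-32k-online") -> dict:
    """Build a chat request body with streaming enabled (hypothetical helper)."""
    return {
        "model": model,
        # Assumed OpenAI-style message list.
        "messages": [{"role": "user", "content": prompt}],
        # Streaming returns partial chunks as they are generated, so the
        # connection is not idle while the model searches and writes.
        "stream": True,
    }


body = json.dumps(build_payload("What happened in AI this week?"))
print(body)
```

The reasoning behind the workaround is that a 524 is a gateway timeout on an idle connection, so receiving incremental chunks keeps the request alive even when the full answer takes a while.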

Interconnects (Nathan Lambert) Discord

- Codestral Mamba Strikes with Infinite Sequence Modeling: The introduction of Codestral Mamba, featuring linear time inference, marks a milestone in AI's ability to handle infinite sequences, aiding code productivity benchmarks.

- Developed with Albert Gu and Tri Dao, Codestral's architecture competes with top transformer models, indicating a shift towards more efficient sequence learning.

- Mathstral Crunches Numbers with Precision: Mathstral, focusing on STEM, shines with 56.6% on MATH and 63.47% on MMLU benchmarks, bolstering performance in niche technical spheres.

- In association with Project Numina, Mathstral represents a calculated balance of speed and high-level reasoning in specialized areas.

- SmolLM Packs a Punch On-Device: SmolLM's new SOTA models offer high performance with reduced scale, making advancements in the on-device deployment of LLMs.

- Outstripping MobileLLM amongst others, these models indicate a trending downsizing while maintaining adequate power, essential for mobile applications.

- Eureka Labs Enlightens AI-Education Intersection: With its AI-native teaching assistant, Eureka Labs paves the way for an AI-driven educational experience, beginning with the LLM101n product.

- Eureka's innovative approach seeks to enable students to shape their understanding by training their own AI, revolutionizing educational methodology.

- Championing Fair Play in Policy Reinforcement: Discussions ensued over the utility of degenerate cases in policy reinforcement for managing common prefixes in winning and losing strategies.

- Acknowledging a deep dive is warranted, the focus on detailed, technical specifics potentially ushers in advanced methods in policy optimization.

Eleuther Discord

- GPT-4's GSM8k Mastery: GPT-4's performance prowess was highlighted with its ability to handle most of the GSM8k train set; an interesting fact shared from the GPT-4 tech report.

- The community spotlighted the memory feats of GPT-4, as such details often reverberate across social platforms.

- Instruction Tuning Syntax Scrutinized: Syntax within the instruction tuning dataset generated discussion, questioning the approach in comparison to OpenAI's method of chaining thoughts using bullet points.

- Curiosity arose about the potential inclusion of specific markers in tuning datasets, initiating dialogue about dataset integrity.

- GPU Failures under the Microscope: Members sought to understand the frequency of GPU failures during model training, referencing reports such as the Reka tech report.

- Open resources like OPT/BLOOM logbooks emerged as go-to sources for those aiming to analyze the stability of large-scale AI training environments.

- Advancements in State Space Models: Innovative construction of State Space Model weights propelled discussions, with this study illustrating their capability to learn dynamical systems in context.

- Researchers exchanged insights on these models' potential to predict system states without additional parameter adjustments, underscoring their utility.

- Neural Counts: Human vs Animal: Debate flared over intelligence differences between humans and animals, recognizing human superiority in planning and creativity despite comparable sensory capabilities.

- Conversation touched on the larger human neocortex and its increased folding, which may contribute to our distinct cognitive abilities.

Latent Space Discord

- Anthropic's Token Triumph: Anthropic unveiled their drip-feed PR approach, divulging a token limit boost for Claude 3.5 Sonnet from 4096 to 8192 tokens in the API, bringing cheer to developers.

- An avid developer shared a sigh of satisfaction, remarking on past limitations with a linked celebration tweet that highlighted this enhancement.

- LangGraph vs XState Shootout: Enthusiasm brews as a member previews their XState work to craft LLM agents, comparing methodologies with LangGraph, publicly on GitHub.

- Anticipation builds for a thorough breakdown, set to delineate strengths between the two, enriching the toolset of AI engineers venturing into state-machine-powered AI agents.

- Qwen2 Supersedes Predecessor: Qwen2 has launched a language model range outclassing Qwen1.5, offering a 0.5 to 72 billion parameter gamut, gearing competition with proprietary counterparts.

- The collection touts both dense and MoE models, as the community pores over the promise in Qwen2's technical report.

LM Studio Discord

- LM Studio: Network Nexus: LM Studio users discuss Android app access for home network servers, highlighting VPN tools like Wireguard for secure server connections.

- Members noted that Intel GPUs lack comprehensive support and advised using other hardware for better performance on AI tasks.

- Bugs & Support: Conversation Collision: Gemma 2 garners support in `llama.cpp`, while Phi 3 small gets sidelined due to incompatibility issues.

- Community digs into the LMS model loading slowdown, pinpointing ejection and reloading as a quick-fix to the sluggish performance.

- Coding with Giants: Deepseek & Lunaris: A hunt for an ideal local coding model for 128GB RAM systems zeroes in on Deepseek V2, a model boasting 21B experts.

- Precise differences between L3-12B-Lunaris variants spark a conversation, with emphasis on trying out the free LLMs for performance insights.

- Graphical Glitches: Size Matters Not: An `f32` folder anomaly grabs attention in LM Studio, leading some to muse over cosmetic bugs' impact on user experience.

- Flash Attention emerges as the culprit behind an F16 GGUF model load issue, with deactivation restoring functionality on an RTX 3090.

- STEM Specialized: Mathstral's Debut: Mistral AI reveals Mathstral, their latest STEM-centric model, promising superior performance over its Mistral 7B predecessor.

- The Community Models Program spotlights Mathstral, inviting AI enthusiasts to dive into discussions on the LM Studio Discord.

Nous Research AI Discord

- Evolutionary Instructional Leaps with Evol-Instruct V2 & Auto: WizardLM's announcement of Evol-Instruct V2 extended WizardLM-2's capabilities from three evolved domains to dozens, potentially enhancing AI research fairness and efficiency.

- Auto Evol-Instruct demonstrated notable performance gains, outperforming human-crafted methods with improvements of 10.44% on MT-bench, 12% on HumanEval, and 6.9% on GSM8k.

- Q-Sparse: Computing with Efficiency: Q-Sparse, introduced by Hongyu Wang, claims to boost LLM computation by optimizing compute over memory-bound processes.

- This innovation tracks at a four-month lag following BitNet b1.58's achievement of compressing LLMs to 1.58 bits.

- SpreadsheetLLM: Microsoft's New Frontier in Data: Microsoft innovates with SpreadsheetLLM, excelling in spreadsheet tasks, which could result in a significant shift in data management and analysis.

- A pre-print paper highlighted the release of SpreadsheetLLM, sparking debates over automation's impact on the job market.

- Heat Advisory: Urban Temperatures and Technological Solutions: Amidst extreme temperatures, discussions arise on painting roofs white, supported by a Yale article, to reduce the urban heat island effect.

- The invention of a super white paint that reflects 98% of sunlight was showcased in a YouTube demonstration, igniting conversations about its potential to cool buildings passively.

- AI Literacy: Decoding Tokenization Woes: Conflicts with tiktoken library when handling Arabic symbols in LLMs were discussed; decoding mishaps replace original strings with special tokens, posing challenges for text generation.

- The tokenization process variability is evident, with outcomes ranging between a UTF-8 sequence and a 0x80-0xFF byte, raising concerns about tokenization's invertibility with cl100k_base.
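
The decoding mishap in the tokenization item above can be reproduced in miniature without any tokenizer library. The sketch below assumes a byte-level tokenizer that happens to split a two-byte Arabic character into two single-byte tokens; that split is hypothetical and is not taken from the real cl100k_base vocabulary. It only shows why decoding token by token can be lossy while decoding the joined bytes is not.

```python
# Illustration only: the byte split below is hypothetical and is NOT read
# from the real cl100k_base vocabulary.
text = "س"  # ARABIC LETTER SEEN (U+0633), two bytes in UTF-8
raw = text.encode("utf-8")  # b'\xd8\xb3'

# Pretend a byte-level tokenizer emitted one token per byte.
token_bytes = [raw[:1], raw[1:]]

# Decoding each token on its own is lossy: neither byte is valid UTF-8 by
# itself, so each one turns into U+FFFD (the replacement character).
per_token = [t.decode("utf-8", errors="replace") for t in token_bytes]

# Joining the bytes first and decoding once recovers the original string.
joined = b"".join(token_bytes).decode("utf-8")

print(per_token == ["\ufffd", "\ufffd"], joined == text)
```

Under this assumption, invertibility holds at the byte level but not at the level of individually decoded tokens, which matches the concern raised in the discussion.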

OpenAI Discord

- Sora's Anticipated Arrival: Speculation about a Sora release in Q4 2024 has surfaced, based on OpenAI blog posts. OpenAI has not confirmed these speculations.

- Cautions were raised regarding trusting unofficial sources such as random Reddit or Twitter posts for launch predictions.

- Miniature Marvel: GPT Mini's Potential Role: A rumored GPT mini in LMSYS generated buzz, though details remain sparse and unverified.

- Skepticism exists, suggesting many predictions lack concrete basis.

- From Zero to Hero with GPT-4 Coding: Members discussed how GPT-4 helps enthusiasts code a mobile game, noting that while it provides structure, vigilant error-checking is essential.

- Members shared success stories of individuals crafting web apps with no previous coding experience, attributing their strides to GPT-4's guidance.

- Language Lessons with GPT Models: Performance variation among AI models is linked to the extent of their language training, affecting response quality.

- The discussion ranged from the model's mix-ups with regional slang to a shift from a casual to a more formal tone in GPT-4, impacting user experience.

- Chatbot Cultivation Challenges: A student's attempt to create a Bengali/Banglish support chatbot stirred conversations on whether modest fine-tuning with 100 conversations would be beneficial.

- Responses clarified that while fine-tuning assists pattern recognition, these might be forgotten past the context window, impacting conversation flow.

LlamaIndex Discord

- LlamaIndex's Bridge to Better RAG: A workshop by LlamaIndex will delve into enhancing RAG with advanced parsing and metadata extraction, featuring insights from Deasie's founders on the topic.

- Deasie's labeling workflow purportedly optimizes RAG, auto-generating hierarchical metadata labels, as detailed on their official site.

- Advanced Document Processing Tactics: LlamaIndex's new cookbook marries LlamaParse and GPT-4o into a hybrid text/image RAG architecture to process diverse document elements.

- Concurrently, Sonnet-3.5's adeptness in chart understanding shines, promising better data interpretation through multimodal models and LlamaParse's latest release.

- Graphing the Details with LlamaIndex and GraphRAG: Users compared llamaindex property graph capacities with Microsoft GraphRAG, highlighting property graph's flexibility in retrieval methods like text-to-cypher, outlined by Cheesyfishes.

- GraphRAG's community clustering is contrasted with the property graph's customized features, with examples found in the documentation.

- Streamlining AI Infrastructure: Discussions emerged around efficient source retrieval for LLM responses, with methods like get_formatted_sources() aiding in tracing data provenance, cited in LlamaIndex's tutorial.

- The AI community actively seeks accessible public vector datasets to minimize infrastructure complexity, with preferences for pre-hosted options, though no specific services were mentioned.

- Boosting Index Loading Efficiency: Members shared strategies to expedite loading of hefty indexes, suggesting parallelization as a potential avenue, stirring a debate on optimizing methods like QueryPipelines.

- For data embedding in Neo4J nodes, the community turns to PropertyGraphIndex.from_documents(), with the process thoroughly detailed in LlamaIndex's source code.

Cohere Discord

- Cooperation Coded in Cohere's Python Community: A participant recommended exploring the Cohere Python Library for those interested in contributing to open source projects, fostering a community-driven improvement.

- An enthusiast of the library hinted at their intention to contribute soon, potentially bolstering the collaborative efforts.

- Discord's Categorization Conundrum Calls for Prompt Care: Problems emerged with a Discord bot misfiling all posts to the 'opensource' category despite various topics, hinting at issues in automatic post categorization.

- A colleague chimed in, speculating that an easy prompt adjustment could correct the course, reminiscent of r/openai's misrouted posts.

- Spam Scams Prompt Preemptive Proposal: A proactive member proposed creating spam awareness content as part of the server's newcomer onboarding to boost security.

- The suggestion was met with support, sparking a discussion on implementing best practices for community safety.

- Max Welling's Warm Fireside Reception: C4AI announced a fireside chat with Max Welling from the University of Amsterdam, an event that stirred excitement.

- However, the promotion faced a hiccup with an unnecessary @everyone alert, for which an apology was issued.

- Recruitment Rules Reiterated on Discord: Members were reminded that job postings are not permitted on this server, reinforcing the community's guidelines.

- Members were urged to keep employment engagements through private discussions, emphasizing respectful professional protocols.

LangChain AI Discord

- Finetuning Frolics for Functionality: A finetuning pipeline became the crux of discussion as members exchanged ideas on solving problems by sharing pipeline strategies.

- One member highlighted the application of LLM training with a set of 100 dialogues to enhance a Bengali chatbot's responsiveness.

- Tick-Tock MessageGraph Clock: The addition of timestamps to a MessageGraph to automate message chronology was a technical query that sparked dialogue amongst the guild's minds.

- Speculation ensued over the necessity of a StateGraph for customized temporal state management.

- Launching Verbis: A Vanguard in Privacy: Verbis, an open-source macOS application, promises enhanced productivity with local data processing by utilizing GenAI models.

- Launched with fanfare, it guarantees zero data sharing to third parties, boldly emphasizing privacy. Learn more on GitHub

- RAG on the Web: LangChain & WebLLM Synergy: A demonstration showed the prowess of LangChain and WebLLM in a browser-based environment, deploying a chat model for on-the-fly question answering.

- The video shared offers a hands-on showcase of Visual Agents and emphasizes a potent, in-browser user experience. Watch the demo

OpenAccess AI Collective (axolotl) Discord

- Dive Into Dynamic Tuning: Curiosity was piqued among members about the rollout of a PyTorch tuner, with exchanges on its potential to swap and optimize instruction models efficiently.

- The tuner's capacity for context length adjustment was highlighted, cautioning that extensive length may be VRAM-hungry.

- Template Turmoil in Mistral: Discussions surfaced indicating that Mistral's unique chat template deviates from the norm, causing operational friction for users.

- The chat template intricacies led to a dialogue on fine-tuning tactics to circumvent the issues presented.

- Merging Methodologies Matter: The Axolotl repository glowed with activity as a new pull request was crafted, marking progression in development efforts.

- Yet, the simplicity of the Direct Preference Optimization (DPO) method was debated, uncovering its limitations in extending beyond basic tokenization and masking.

- LoRA Layers Lead the Way: A focused forum formed around lora_target_linear, a LoRA configuration toggle that transforms how linear layers are adapted for more efficient fine-tuning.

- The setting's role in Axolotl's fine-tuning sparked discussions, although some queries about disabling LoRA on certain layers remain unanswered.

Torchtune Discord

- Torchtune's Tantalizing v0.2.0 Takeoff: The eagerly awaited launch of Torchtune v0.2.0 marks a major milestone with additions including exciting models and recipes.

- Community contributions have enriched the release, featuring dataset enhancements like sample packing, for improved performance and diverse applications.

- Evaluating Without Backprop Burdens: To optimize checkpoint selection, loss calculation during evaluation can be done without backpropagation; participants discussed plotting loss curves and comparing them across training and evaluation datasets.

- Suggestions included modifications to the default recipe config and incorporating a test split and an eval loop using torch.no_grad() alongside model eval mode.

- RoPE Embeddings Rise to Record Contexts: Long context modeling gains traction with a proposal for scaling RoPE (Rotary Positional Embeddings) in Torchtune's RFC, paving the way for large document and code completion tasks.

- The discussion revolves around enabling RoPE for contexts over 8K that can transform understanding of large volume documents and detailed codebases.
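
To make the RoPE scaling item above more concrete, here is a minimal pure-Python sketch of rotary embeddings with linear position scaling. The summary does not say which scaling method the Torchtune RFC proposes, so linear position interpolation is used purely as an illustration. The names rope_angles, apply_rope, and scale are hypothetical and are not Torchtune APIs; the adjacent-pair rotation layout is one of two common conventions.

```python
import math

def rope_angles(position, head_dim, base=10000.0, scale=1.0):
    """Rotation angles for one position; scale > 1 compresses positions.

    Assumed scheme (linear interpolation): theta_i = (position / scale) * base^(-2i / head_dim)
    """
    pos = position / scale
    return [pos * base ** (-2 * i / head_dim) for i in range(head_dim // 2)]

def apply_rope(vec, position, base=10000.0, scale=1.0):
    """Rotate adjacent pairs (x0, x1), (x2, x3), ... by their angles."""
    angles = rope_angles(position, len(vec), base, scale)
    out = []
    for i, theta in enumerate(angles):
        x, y = vec[2 * i], vec[2 * i + 1]
        out += [x * math.cos(theta) - y * math.sin(theta),
                x * math.sin(theta) + y * math.cos(theta)]
    return out

# With scale=4, position 32768 reuses the angles of unscaled position 8192,
# so a longer context is squeezed into the angle range seen at 8K.
print(rope_angles(32768, 8, scale=4.0) == rope_angles(8192, 8))  # True
```

The design trade-off in this assumed scheme is that nearby positions become harder to tell apart as scale grows, which is why such approaches are usually paired with some fine-tuning at the longer length.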

LAION Discord

- ComfyUI Crew Concocts Disney Disruption: A participant revealed that those behind the ComfyUI malicious node attack claimed to also orchestrate the Disney cyber attacks, challenging the company's digital defenses.

- It was mentioned that, while some see the Disney attacks as chaotic behavior, there is speculation and anticipation regarding the FBI's potential investigation into the incidents.

- Codestral's Mamba Makes Its Mark: A recent post shared a breakthrough dubbed Codestral Mamba, a new update from Mistral AI, sparking conversations around its capabilities and potential applications.

- The details of its performance and comparison to other models, along with technical specs, were not elaborated, leaving the community curious about its impact.

- YouTube Yields Fresh Tutorial Temptations: A fresh tutorial catch surfaced with a link to a new YouTube tutorial posted by a guild member, touted to offer educational content in a video format.

- The specifics of the video's educational value and relevance to the AI engineering community were not discussed, prompting members to seek out the content independently.

OpenInterpreter Discord

- Challenges Mount for Meta's Specs: Engineers grapple with the integration of Open Interpreter into RayBan Stories, thwarted by the lack of an official SDK and difficult hardware access.

- An attempt to dissect the device revealed hurdles, documented in a Pastebin, with concerns about internal adhesives and transparency for better modding.

- Google Glass: A Looking Glass for Open Interpreter?: With the struggles of hacking RayBan Stories, Google Glass was floated as a possible alternative platform.

- Dialogue was scarce following the suggestion, indicating a need for further investigation or community input.

- O1 Light Hardware: Patience Wears Thin: Community discontent grows over multiple-month delays in O1 Light hardware preorders, with a vexing silence on updates.

- Members air their grievances, indicating strained anticipation for the product, with the lack of communication augmenting their unease.

LLM Finetuning (Hamel + Dan) Discord

- Confusion Cleared: Accessing GPT-4o Fine-Tuning: Queries about GPT-4o fine-tuning access led to clarification that an OpenAI invitation is necessary, as highlighted by a user referencing Kyle's statement.

- The discussion unfolded in the #general channel, reflecting the community's eagerness to explore fine-tuning capabilities.

- OpenPipeAI Embraces GPT-4o: Train Responsibly: OpenPipeAI announces support for GPT-4o training, with an appeal for responsible use by Corbtt.

- This update serves as a pathway for AI Engineers to harness their course credits more efficiently in AI training endeavors.

tinygrad (George Hotz) Discord

- Inside Tinygrad's Core: Dissecting the Intermediate Representation: Interest bubbled up around tinygrad's intermediate language, with users curious about the structure of deep learning operators within the IR.

- Tips were exchanged about leveraging debug options DEBUG=3 for insights into the lower levels of IR, while GRAPH=1 and GRAPHUOPS=1 commands surfaced as go-to options for visualizing tinygrad's inner complexities.

- Tinygrad Tales: Visualization and Debugging Dynamics: Amidst discussions, a nugget of wisdom was shared for debugging tinygrad using DEBUG=3, revealing the intricate lower levels of the intermediate representation.

- Further, for those with a keen eye on visualization, invoking GRAPH=1 and GRAPHUOPS=1 could translate tinygrad's abstract internals into graphical clarity.

Mozilla AI Discord

- Opening Insights with Open Interpreter: Mike Bird shines a spotlight on Open Interpreter, inviting active participation.

- Audience engagement is driven by encouragement to field questions during the elucidation of Open Interpreter.

- Engage with the Interpreter: The stage echoes with discussions on Open Interpreter steered by Mike Bird, as he unfolds the project details.

- Invitations are cast to the attendees to query and further the conversation on Open Interpreter during the presentation.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Stack Devs (Yoko Li) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!