[AINews] Test-Time Training, MobileLLM, Lilian Weng on Hallucination (Plus: Turbopuffer)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Depth is all you need. We couldn't decide what to feature so here's 3 top stories.

AI News for 7/8/2024-7/9/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (463 channels, and 2038 messages) for you. Estimated reading time saved (at 200wpm): 250 minutes. You can now tag @smol_ai for AINews discussions!

Two major stories we missed, and a new one we like but didn't want to give the whole space to:

- Lilian Weng on Extrinsic Hallucination: We usually drop everything when the Lil'Log updates, but she seems to have quietly shipped this absolute monster lit review without announcing it on Twitter. Lilian defines the SOTA on Hallucination Detection (FactualityPrompt, FActScore, SAFE, FacTool, SelfCheckGPT, TruthfulQA) and Anti-Hallucination Methods (RARR, FAVA, Rethinking with Retrieval, Self-RAG, CoVE, RECITE, ITI, FLAME, WebGPT), and ends with a brief reading list on other Hallucination eval benchmarks. We definitely need to do a lot of work on this for our Reddit recaps.

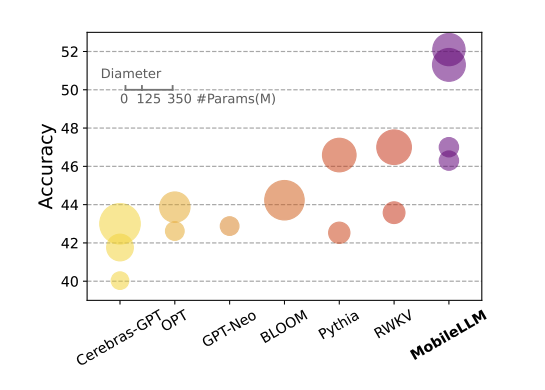

- MobileLLM: Optimizing Sub-Billion Parameter Language Models for On-Device Use: One of the most hyped FAIR papers published at the upcoming ICML (though not even receiving a spotlight, hmm) focusing on sub-billion scale, on-device model architecture research making a 350M model reach the same perf as Llama 2 7B, surprisingly in a chat context. Yann LeCun's highlights: 1) thin and deep, not wide 2) shared matrices for token->embedding and embedding->token; shared weights between multiple transformer blocks".

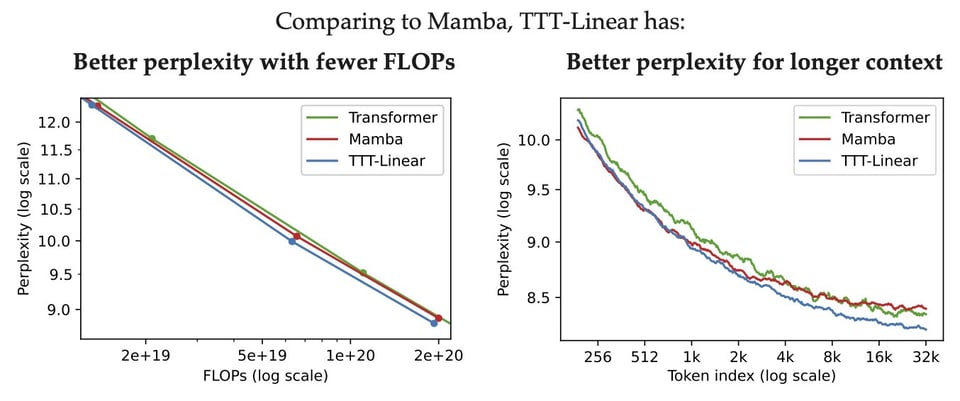

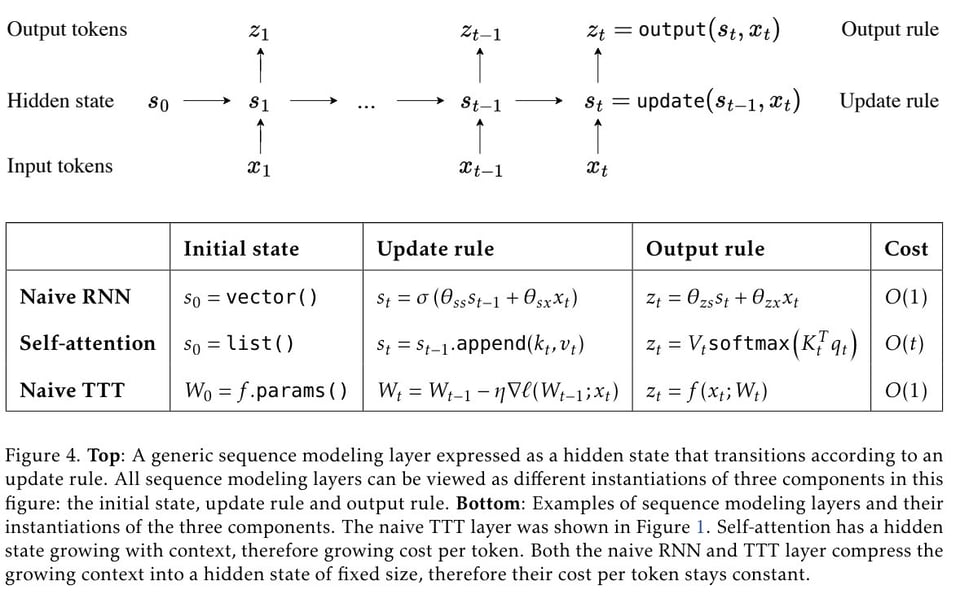

- Learning to (Learn at Test Time): RNNs with Expressive Hidden States (advisor, author tweets): Following ICML 2020 work on Test-Time Training, Sun et al publish a "new LLM architecture, with linear complexity and expressive hidden states, for long-context modeling" that directly replace attention, "scales better (from 125M to 1.3B) than Mamba and Transformer" and "works better with longer context".

Main insight is replacing the hidden state of an RNN with a small neural network (instead of a feature vector for memory).

Main insight is replacing the hidden state of an RNN with a small neural network (instead of a feature vector for memory).  The basic intuition makes sense: "If you believe that training neural networks is a good way to compress information in general, then it will make sense to train a neural network to compress all these tokens." If we can nest networks all the way down, how deep does this rabbit hole go?

The basic intuition makes sense: "If you believe that training neural networks is a good way to compress information in general, then it will make sense to train a neural network to compress all these tokens." If we can nest networks all the way down, how deep does this rabbit hole go?

Turbopuffer also came out of stealth with a small well received piece.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

We are running into issues with the Twitter pipeline, please check back tomorrow.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Models and Architectures

- CodeGeeX4-ALL-9B open sourced: In /r/artificial, Tsinghua University has open sourced CodeGeeX4-ALL-9B, a groundbreaking multilingual code generation model outperforming major competitors and elevating code assistance.

- Mamba-Transformer hybrids show promise: In /r/MachineLearning, Mamba-Transformer hybrids provide big inference speedups, up to 7x for 120K input tokens, while slightly outperforming pure transformers in capabilities. The longer the input context, the greater the advantage.

- Phi-3 framework released for Mac: /r/LocalLLaMA shares news of Phi-3 for Mac, a versatile AI framework that leverages both the Phi-3-Vision multimodal model and the recently updated Phi-3-Mini-128K language model. It's designed to run efficiently on Apple Silicon using the MLX framework.

AI Safety and Ethics

- Ex-OpenAI researcher warns of safety neglect: In /r/singularity, ex-OpenAI researcher William Saunders says he resigned when he realized OpenAI was the Titanic - a race where incentives drove firms to neglect safety and build ever-larger ships leading to disaster.

- AI model compliance test shows censorship variance: An AI model compliance test in /r/singularity shows which models have the least censorship. Claude models are bottom half bar one, while GPT-4 finishes in the top half.

- Hyper-personalization may fracture shared reality: In /r/singularity, Anastasia Bendebury warns that hyper-personalization of media content due to AI may lead to us living in essentially different universes. This could accelerate the filter bubble effect already seen with social media algorithms.

AI Applications

- Pathchat enables AI medical diagnosis: /r/singularity features Pathchat by Modella, a multi-modal AI model designed for medical and pathological purposes, capable of identifying tumors and diagnosing cancer patients.

- Thrive AI Health provides personalized coaching: /r/artificial discusses Thrive AI Health, a hyper-personalized AI health coach funded by the OpenAI Startup Fund.

- Odyssey AI aims to revolutionize visual effects: In /r/OpenAI, Odyssey AI is working on "Hollywood-grade" visual FX, trained on real world 3D data. It aims to reduce film production time and costs dramatically.

AI Capabilities and Concerns

- AI predicts political beliefs from faces: /r/artificial shares a study showing AI's ability to infer political leanings from facial features alone.

- Sequoia Capital warns of potential AI bubble: In /r/OpenAI, Sequoia Capital warns that AI would need to generate $600 billion in annual revenue to justify current hardware spending. Even optimistic revenue projections fall short, suggesting potential overinvestment leading to a bubble.

- China faces glut of underutilized AI models: /r/artificial reports on China's AI model glut, which Baidu CEO calls a "significant waste of resources" due to scarce real-world applications for 100+ LLMs.

Memes and Humor

- Twitter users misunderstand AI technology: /r/singularity shares a humorous take on the lack of understanding of AI technology among the general public on Twitter.

- AI imagines aging Mario game: /r/singularity features a humorous AI-generated video game cover depicting an aging Mario suffering from back pain.

AI Discord Recap

A summary of Summaries of Summaries

1. Large Language Model Advancements

- Nuanced Speech Models Emerge: JulianSlzr highlighted the nuances between GPT-4o's polished turn-based speech model and Moshi's unpolished full-duplex model.

- Andrej Karpathy and others weighed in on the differences, showcasing the diverse speech capabilities emerging in AI models.

- Gemma 2 Shines Post-Update: The Gemma2:27B model received a significant update from Ollama, correcting previous issues and leading to rave reviews for its impressive performance, as demonstrated in a YouTube video.

- Community members praised the model's turnaround, calling it 'incredible' after struggling with incoherent outputs previously.

- Supermaven Unveils Babble: Supermaven announced the launch of Babble, their latest language model boasting a massive 1 million token context window, which is 2.5x larger than their previous offering.

- The upgrade promises to enrich the conversational landscape with its expansive context handling capabilities.

2. Innovative AI Research Frontiers

- Test-Time Training Boosts Transformers: A new paper proposed using test-time training (TTT) to improve model predictions by performing self-supervised learning on unlabeled test instances, showing significant improvements on benchmarks like ImageNet.

- TTT can be integrated into linear transformers, with experimental setups substituting linear models with neural networks showing enhanced performance.

- MatMul-Free Models Revolutionize LLMs: Researchers have developed matrix multiplication elimination techniques for large language models that maintain strong performance at billion-parameter scales while significantly reducing memory usage, with experiments showing up to 61% reduction over unoptimized baselines.

- A new architecture called Test-Time-Training layers replaces RNN hidden states with a machine learning model, achieving linear complexity and matching or surpassing top transformers, as announced in a recent tweet.

- Generative Chameleon Emerges: The first generative chameleon model has been announced, with its detailed examination captured in a paper on arXiv.

- The research community is eager to investigate the model's ability to adapt to various drawing styles, potentially revolutionizing digital art creation

3. AI Tooling and Deployment Advances

- Unsloth Accelerates Model Finetuning: Unsloth AI's new documentation website details how to double the speed and reduce memory usage by 70% when finetuning large language models like Llama-3 and Gemma, without sacrificing accuracy.

- The site guides users through creating datasets, deploying models, and even tackles issues with the gguf library by suggesting a build from the llama.cpp repo.

- LlamaCloud Eases Data Integration: The beta release of LlamaCloud promises a managed platform for unstructured data parsing, indexing, and retrieval, with a waitlist now open for eager testers.

- Integrating LlamaParse for advanced document handling, LlamaCloud aims to streamline data synchronization across diverse backends for seamless LLM integration.

- Crawlee Simplifies Web Scraping: Crawlee for Python was announced, boasting features like unified interfaces for HTTP and Playwright, and automatic scaling and session management, as detailed on GitHub and Product Hunt.

- With support for web scraping and browser automation, Crawlee is positioned as a robust tool for Python developers engaged in data extraction for AI, LLMs, RAG, or GPTs.

4. Ethical AI Debates and Legal Implications

- Copilot Lawsuit Narrows Down: A majority of claims against GitHub Copilot for allegedly replicating code without credit have been dismissed, with only two allegations left in the legal battle involving GitHub, Microsoft, and OpenAI.

- The original class-action suit argued that Copilot's training on open-source software constituted an intellectual property infringement, raising concerns within the developer community.

- AI's Societal Impact Scrutinized: Discussions revealed concerns about AI's impact on society, particularly regarding potential addiction issues and the need for future regulations.

- Members emphasized the urgency for proactive measures in light of AI's transformative nature across various sectors.

- Anthropic Credits for Developers: Community members inquired about the existence of a credit system similar to OpenAI's for Anthropic, seeking opportunities for experimentation and development on their platforms.

- The conversation underscored the growing interest in accessing Anthropic's offerings, akin to OpenAI's initiatives, to facilitate AI research and exploration.

5. Model Performance Optimization

- Deepspeed Boosts Training Efficiency: Deepspeed enables training a 2.5 billion parameter model on a single RTX 3090, achieving higher batch sizes and efficiency.

- A member shared their success with Deepspeed, sparking interest in its potential for more accessible training regimes.

- FlashInfer's Speed Secret: FlashInfer Kernel Library supports INT8 and FP8 attention kernels, promising performance boosts in LLM serving.

- The AI community is keen to test and discuss FlashInfer's impact on model efficiency, reflecting high anticipation.

6. Generative AI in Storytelling

- Generative AI Impacts Storytelling: Medium article explores the profound changes Generative AI brings to storytelling, unlocking rich narrative opportunities.

- KMWorld highlights AI leaders shaping the knowledge management domain, emphasizing Generative AI's transformative potential.

- AI's Role in Cultural Impact: Discussions on AI's societal impact highlight concerns about addiction and the need for future regulations, reflecting transformative AI technology.

- The community underscores the urgency for proactive measures to address AI's cultural effects and societal implications.

7. AI in Education

- Teacher Explores CommandR for Learning Platform: A public school teacher is developing a Teaching and Learning Platform leveraging CommandR's RAG-optimized features.

- The initiative received positive reactions and offers of assistance from the community, showcasing collective enthusiasm.

- Claude Contest Reminder: Build with Claude contest offers $30k in Anthropic API credits, ending soon.

- Community members are reminded to participate, emphasizing the contest's importance for developers and creators.

PART 1: High level Discord summaries

HuggingFace Discord

- Intel’s Inference Boost for HF Models: A new GitHub repo showcases running HF models on Intel CPUs more efficiently, a boon for developers with Intel hardware.

- This resource comes in response to a gap in Intel-specific guidance and could be a goldmine for enhancing model runtime performance.

- Gemma's Glorious Gains Post-Update: The Gemma2:27B model has been turbocharged, demonstrated in an enlightening YouTube video, much to the community's approval.

- Ollama's timely update corrected issues that now find Gemma receiving rave reviews for its impressive performance.

- Crafty Context Window Considerations: VRAM usage during LLM training can be all over the map, and context window size is a cornerstone of this computational puzzle.

- Exchanging experiences, the community shared that padding and max token adjustments hold the key to consistent VRAM loads.

- Penetrating the Subject of Pentesting: The spotlight shines bright on PentestGPT, as a review session is set to dive deep into the nuances of AI pentesting.

- With a focused paper to dissect, the group is preparing to advance the dialogue on robust pentesting practices for AI.

- Narrative Nuances and Generative AI: Generative AI's impact on storytelling is front and center, with insights unpacked in a Medium article highlighting its narrative potential.

- Meanwhile, KMWorld throws light on AI leaders who are shaping the knowledge management domain, with an eye on Generative AI.

Unsloth AI (Daniel Han) Discord

- *Unsloth Docs Unleashes Efficiency: Unsloth AI's new documentation website boosts training of models like Llama-3 and Gemma*, doubling the speed and reducing memory usage by 70% without sacrificing accuracy.

- The site’s tutorials facilitate creating datasets and deploying models, even tackling gguf library issues by proposing a build from the llama.cpp repo.

- *Finetuning Finesse for LlaMA 3: Modular Model Spec development seeks to refine the process of training AI models like LLaMA 3*.

- SiteForge incorporates LLaMA 3 into their web page design generation, promising an AI-driven revolutionary design experience.

- *MedTranslate with LlaMA 3's Mastery: Discussions on translating 5k medical records to Swedish using Llama 3* shed light on the model’s Swedish proficiency and usage potential.

- Users validate the utility of fine-tuning Llama 3 for Swedish-specific applications, as seen with the AI-Sweden-Models/Llama-3-8B-instruct.

- *Datasets Get a Synthetic Boost: An AI approach is creating synthetic chat datasets, employing rationale and context for over 1 million utterances which enrich the PIPPA dataset*.

- In the realm of medical dialogue, using existing fine-tuned models hinted at skipping prep-training, echoing with the benefits presented in the research.

- *Reimagining LLMs with MatMul-Free Models*: LLMs shed matrix multiplication, conserving memory by 61% at billion-parameter scales, as unveiled in a study.

- Test-Time-Training layers emerge as an alternative to RNN hidden states, showcased in models with linear complexity, highlighted on social media.

CUDA MODE Discord

- Integration Intrigue: Triton Meets PyTorch: Inquisitive minds are exploring how to integrate Triton kernels into PyTorch, specifically aiming to register custom functions for

torch.compileauto-optimization.- The discussion is ongoing, as the tech community eagerly awaits a definitive guide or solution to this challenge.

- Texture Talk: Vulcan Backing Operators: Why does executorch's Vulkan backend utilize textures in its operators? This question has opened a line of enquiry among members.

- Concrete conclusions haven't been reached, keeping the topic open for further insight and exploration.

- INT8 and FP8: FlashInfer's Speed Secret: The unveiling of the FlashInfer Kernel Library has sparked interest with its support for INT8 and FP8 attention kernels, promising a boost in LLM serving.

- With a link to the library, the AI community is keen to test and discuss its potential impact on model efficiency.

- Quantization Clarity: Calibration is Key: The quantization conversation has taken a technical turn, with the revelation that proper calibration with data is essential when using static quantization.

- A GitHub pull request spotlights this necessity, prompting a tech deep dive into the practise.

- Divide to Conquer: GPS-Splitting with Ring Attention: Splitting KV cache across GPUs: A challenge being tackled with ring attention, particularly within an AWS g5.12xlarge instance context.

- The pursuit of optimum topology for this implementation is spirited, with members sharing resources like this gist to aid in the endeavor.

Nous Research AI Discord

- GIF Garners Giggles: Marcus vs. LeCun: A user shared a humorous GIF capturing a debate moment between Gary Marcus and Yann LeCun, highlighting differing AI perspectives without getting into technicalities.

- It provided a light-hearted take on the sometimes tense exchanges between AI experts, capturing community attention GIF Source.

- Hermes 2 Pro: Heights of Performance: Hermes 2 Pro was applauded for its enhanced Function Calling, JSON Structured Outputs, demonstrating robust improvements on benchmarks Hermes 2 Pro.

- The platform's advancements enthralled the community, reflecting progress in LLM capabilities and use cases.

- Synthetic Data Gets Real: Distilabel Dominates: Distilabel emerged as a superior tool for synthetic data generation, praised for its efficacy and quality output Distilabel Framework.

- Members suggested harmonizing LLM outputs with Synthetic data, enhancing AI engineers' development and debugging workflows.

- PDF Pains & Solutions with Sonnet 3.5: A lack of direct solutions for processing PDFs with Sonnet 3.5 API led the community to explore alternative pathways like the Marker Library.

- Everyoneisgross highlighted Marker's ability to convert PDFs to Markdown, proposing it as a workaround for scenarios requiring better model compatibility.

- RAG's New Frontier: RankRAG Revolution: RankRAG's methodology has leaped ahead, achieving substantial gains by training an LLM for concurrent ranking and generation RankRAG Methodology.

- This approach, dubbed 'Llama3-RankRAG,' showcased compelling performance, excelling over its counterparts in a span of benchmarks.

Modular (Mojo 🔥) Discord

- Chris Lattner's Anticipated Interview: The Primeagen is set to conduct a much-awaited interview with Chris Lattner on Twitch, sparking excitement and anticipation within the community.

- Eager discussions ensued with helehex teasing a special event involving Lattner tomorrow, further hyping up the Modular community.

- Cutting-Edge Nightly Mojo Released: Mojo Compiler's latest nightly version, 2024.7.905, introduces improvements such as enhanced

memcmpusage and refined parameter inference for conditional conformances.- Developers keenly examined the changelog and debated the implications of conditional conformance on type composition, particularly focusing on a significant commit.

- Mojo's Python Superpowers Unleashed: Mojo's integration with Python has become a centerpiece of discussion, as documented in the official Mojo documentation, evaluating the potential to harness Python's extensive package ecosystem.

- The conversation transitioned to Mojo potentially becoming a superset of Python, emphasizing the strategic move to empower Mojo with Python's versatility.

- Clock Precision Dilemma: A detailed examination of clock calibration revealed a slight yet critical 1 ns discrepancy when using

_clock_gettimecalls successively, shedding light on the need for high-precision measurements.- This revelation prompted further analysis on the influence of clock inaccuracies, underlining its importance in time-sensitive applications.

- Vectorized Mojo Marathons Trailblaze Performance: Mojo marathons put vectorization to the test, discovering performance variables with different width vectorization where sometimes width 1 outperforms width 2.

- Community members stressed the importance of adapting benchmarks to include both symmetrical and asymmetrical matrices, aligning tests with realistic geo and image processing scenarios.

LM Studio Discord

- *Vocalizing LLMs: Custom Voices Make Noise: Community explores integrating Eleven Lab custom voices with LLM Studio, proposing custom programming via server feature* for text-to-speech.

- Discussion advises caution as additional programming is needed despite tools like Claude being available to assist in development.

- *InternLM: Sliding Context Window Wows the Crowd: Members applaud InternLM* for its sliding context window, maintaining coherence even when memory is overloaded.

- Conversations backed by screenshots reveal how InternLM adjusts by forgetting earlier messages but admirably stays on track.

- *Web Crafters: Custom Scrapers Soar with AI: A member showcases their feat of coding a Node.js web scraper* in lightning speed using Claude, stirring discussion on AI's role in tool creation.

- "I got 78 lines of code that do exactly what I want," they shared, emphasizing AI's impact on the efficiency of development.

- *AI’s Code: Handle with Care*: AI code generation leads to community debate; it's a valuable tool but should be wielded with caution and an understanding of the underlying code logic.

- The consensus: Use AI for rapid development, but validate its output to ensure code quality and reliability.

- *AMD's Crucial Cards: GPUs in the Spotlight: Members discuss using RX 6800XTs for LLM multi-GPU setups, yielding insights into LM Studio's* handling of resources and configuration.

- As the debate on AMD ROCm's support longevity unfolds, the choice between RX 6800XTs and 7900XTXs is weighed with a future-focused lens.

Eleuther Discord

- *TTT Tango with Delta Rule: Discussions revealed that TTT-Linear aligns with the delta rule* when using a mini batch size of 1, leading to an optimized performance in model predictions.

- Further talks included the rwkv7 architecture planning to harness an adapted delta rule, and ruoxijia shedding light on the possibility of parallelism in TTT-linear.

- *Shapley Shakes Up Data Attribution: In-Run Data Shapley* stands out as an innovative project, promising scalable frameworks for real-time data contribution assessments during pre-training.

- It aims to exclude detrimental data from training, essentially influencing model capabilities and shedding clarity on the concept of 'emergence' as per the AI community.

- *Normalizing the Gradient Storm: A budding technique for gradient normalization* aims to address deep network challenges such as the infamous vanishing or exploding gradients.

- However, it's not without its drawbacks, with the AI community highlighting issues like batch-size dependency and the associated hiccups in cross-device communications.

- *RNNs Rivaling the Transformer Titans: The emerging Mamba and RWKV* RNN architectures are sparking excitement as they offer constant memory usage and are proving to be formidable opponents to Transformers in perplexity tasks.

- The memory management efficiencies and their implications on long-context recall are the focus of both theoretical and empirical investigation in the current discourse.

- *Bridging the Brain Size Conundrum*: A recent study challenges the perceived straightforwardness of brain size evolution, especially the part where humans deviate from the largest animals not having proportionally bigger brains.

- The conversation also tapped into neuronal density's role in the intelligence mapping across species, further complicating the understanding of intelligence and its evolutionary advantages.

Stability.ai (Stable Diffusion) Discord

- Pixels to Perfection: Scaling Up Resolutions: Debate centered on whether starting with 512x512 resolution when fine-tuning models has benefits over jumping straight to 1024x1024.

- The consensus leans towards progressive scaling for better gradient propagation while keeping computational costs in check.

- *Booru Battle: Tagging Tensions: Discussions got heated around using booru tags* for training AI, with a divide between supporters of established vocab and proponents of more naturalistic language tags.

- Arguments highlighted the need for balance between precision and generalizability in tagging for models.

- AI and Society: Calculating Cultural Costs: Members engaged in a dialogue about AI's role in society, contemplating the effects on addiction and pondering over the need for future regulations.

- The group underscored the urgency for proactive measures in light of the transformative nature of AI technologies.

- Roop-Unleashed: Revolutionizing Replacements: Roop-Unleashed was recommended as a superior solution for face replacement in videos, taking the place of the obsolete mov2mov extension.

- The tool was lauded for its consistency and ease-of-use, marking a shift in preference within the community.

- Model Mix: SD Tools and Tricks: A lively exchange of recommendations for Stable Diffusion models and extensions took place, with a spotlight on tasks like pixel-art conversion and inpainting.

- Members mentioned tools like Zavy Lora and comfyUI with IP adapters, sharing experiences and boosting peer knowledge.

OpenAI Discord

- DALL-E Rivals Rise to the Occasion: Discussion highlighted StableDiffusion along with tools DiffusionBee and automatic1111 as DALL-E's main rivals, favored for granting users enhanced quality and control.

- These models have also been recognized for their compatibility with different operating systems, with an emphasis on local usage on Windows and Mac.

- Text Detectors Flunk the Constitution Test: Community sentiment suggests skepticism around the reliability of AI text detectors, with instances of mistakenly flagging content, comically including the U.S. Constitution.

- The debate continues without clear resolution, reflecting the complexity of discerning AI-generated from human-produced text.

- GPT's Monetization Horizon Unclear: Users queried about the potential for monetization for GPTs, but discussions stalled with no concrete details emerging.

- This subject seemed to lack substantial engagement or resolution within the community.

- VPN Vanquishes GPT-4 Connection: Users reported disruptions in GPT-4 interactions when a VPN is enabled, advising that disabling it can mitigate issues.

- Server problems impacting GPT-4 services were also mentioned as resolved, though specifics were omitted.

- Content Creators Crave Cutting-Edge Counsel: Content creators sought 5-10 trend-centric content ideas to spur audience growth and asked for key metrics to track content performance.

- They also explored strategies for a structured content calendar, emphasizing the need for effective audience engagement and platform optimization.

LlamaIndex Discord

- LlamaCloud Ascends with Beta Launch: The beta release of LlamaCloud promises a sophisticated platform for unstructured data parsing, indexing, and retrieval, with a waitlist now open for eager testers.

- Geared to refine the data quality for LLMs, this service integrates LlamaParse for advanced document handling and seeks to streamline the synchronization across varied data backends.

- Graph Technology Gets a Llama Twist: LlamaIndex propels engagement with a new video series showcasing Property Graphs, a collaborative effort highlighting model intricacies in nodes and edges.

- This educational push is powered by teamwork with mistralai, neo4j, and ollama, crafting a bridge between complex document relationships and AI accessibility.

- Chatbots Climb to E-commerce Sophistication: In pursuit of enhanced customer interaction, one engineering push in the guild focused on advancing RAG chatbots with keyword searches and metadata filters to address complex building project queries.

- This approach involves a hybrid search mechanism leading to a more nuanced exchange of follow-up questions, aiming for a leap in conversational precision.

- FlagEmbeddingReranker's Import Impasse: Troubleshooting efforts in the community suggested installing

peftindependently to overcome the import errors faced withFlagEmbeddingReranker, helping a user finally cut through the technical knot.- This hiccup underscores the oft-hidden complexities in setting up machine learning environments, where package dependencies can become a sneaky snag.

- Groq API's Rate Limit Riddle: AI engineers hit a speed bump with a 429 rate limit error in LlamaIndex when tapping into Groq API for indexing, spotlighting challenges in syncing with OpenAI's embedding models.

- The discussion veered towards the intricacies of API interactions and the need for a strategic approach to circumvent such limitations, maintaining a seamless indexing experience.

Perplexity AI Discord

- Perplexity Paints a Different API Picture: A debate sparked on the discernible differences between API and UI results in Perplexity, particularly when neither Pro versions nor sourcing features are applied.

- A proactive solution was considered, involving the labs environment to test for parity between API and UI outputs without additional Pro features.

- Nodemon Woes with PPLX Integration: Troubles emerged in configuring Nodemon for a project utilizing PPLX library, with success eluding a member despite correct local execution and tsconfig.json tweaks.

- The user sought insights from fellow AI engineers, sharing error logs indicative of a possible missing module issue linked with the PPLX setup.

LAION Discord

- Deepspeed Dazzles on a Dime: An innovative engineer reported successfully training a 2.5 billion parameter model on a single RTX 3090 using Deepspeed, with potential for higher batch sizes.

- The conversation sparked interest in exploring the boundaries of efficient training with resource constraints, hinting at more accessible training regimes.

- OpenAI's Coding Companion Prevails in Court: A pivotal legal decision was reached as a California court partially dismissed a suit against Microsoft's GitHub Copilot and OpenAI's Codex, showing the resilience of AI systems against copyright claims.

- The community is dissecting the implications of this legal development for AI-generated content. Read more.

- Chameleon: Generative Model Mimics Masters: The release of the first generative chameleon model has been announced, with its detailed examination captured in a paper on arXiv.

- The research community is eager to investigate the model's ability to adapt to various drawing styles, potentially revolutionizing digital art creation.

- Scaling the Complex-Value Frontier: A pioneering member encountered challenges while expanding the depths of complex-valued neural networks intended for vision tasks.

- Despite hurdles in scaling, a modest 65k parameter complex-valued model showed promising results, outdoing its 400k parameter real-valued counterpart in accuracy on CIFAR-100.

- Diffusion Demystified: A Resource Repository: A new GitHub repository provides an intuitive code-based curriculum for mastering image diffusion models ideal for training on modest hardware. Explore the repository.

- This resource aims to foster hands-on understanding through concise lessons and tutorials, inviting contributions to evolve its educational offering.

OpenRouter (Alex Atallah) Discord

- Breaking the Quota Ceiling: Overlimit Woes on OpenRouter: Users experienced a 'Quota exceeded' error from

aiplatform.googleapis.comwhen using gemini-1.5-flash model, suggesting a Google-imposed limit.- For insights on usage, check Activity | OpenRouter and for custom routing solutions, see Provider Routing | OpenRouter.

- Seeing None: The Image Issue Challenge on OpenRouter: A None response was reported on image viewing on models like gpt-4o, claude-3.5, and firellava13b, eliciting mixed confirmations of functionality from users.

- This suggests a selective issue, not affecting all users, and warrants a detailed look into individual user configurations.

- Dolphin Dive: Troubleshooting LangChain's Newest Resident: Users are facing challenges integrating Dolphin 2.9 Mixstral on OpenRouter with LangChain as a language tool.

- Technical details of the issue weren’t provided, indicating potential compatibility problems or configuration errors.

- JSON Jolts: Mistralai Mixtral’s Sporadic Support Slip: The error 'not supported for JSON mode/function calling' plagues users of mistralai/Mixtral-8x22B-Instruct-v0.1 at random times.

- Troubleshooting identified Together as the provider related to the recurring error, spotlighting the need for further investigation.

- Translational Teeter-Totter: Assessing LLMs as Linguists: Discussions focussed on the effectiveness of LLMs over specialized models for language translation tasks highlighted preferences and performance metrics.

- Consideration was given to the reliability of modern decoder-only models versus true encoder/decoder transformers for accurate translation capabilities.

Latent Space Discord

- Claude Closes Contest with Cash for Code: The Build with Claude contest is nearing its end, offering $30k in Anthropic API credits for developers, as mentioned in a gentle reminder from the community.

- Alex Albert provides more information about participation and context in this revealing post.

- Speech Models Sing Different Tunes: GPT-4o and Moshi's speech models caught the spotlight for their contrasting styles, with GPT-4o boasting a polished turn-based approach versus Moshi's raw full-duplex.

- The conversation unfolded thanks to insights from JulianSlzr and Andrej Karpathy.

- AI Stars in Math Olympiad: Thom Wolf lauded the AI Math Olympiad where the combined forces of Numina and Hugging Face showcased AI's problem-solving prowess.

- For an in-depth look, check out Thom Wolf's thread detailing the competition’s highlights and AI achievements.

- Babble Boasts Bigger Brain: Supermaven rolled out Babble, their latest language model, with a massive context window upgrade, holding 1 million tokens.

- SupermavenAI's announcement heralds a 2.5x leap over its predecessor and promises an enriched conversational landscape.

- Lillian Weng's Lens on LLM's Lapses: Hallucinations in LLMs take the stage in Lillian Weng's blog as she explores the phenomenon's origins and taxonomy.

- Discover the details behind why large language models sometimes diverge from reality.

LangChain AI Discord

- LLMWhisperer Decodes Dense Documents: The LLMWhisperer shows proficiency in parsing complex PDFs, suggesting the integration of schemas from Pydantic or zod within LangChain to enhance data extraction capabilities.

- Combining page-wise LLM parsing and JSON merges, users find LLMWhisperer useful for extracting refined data from verbose documents.

- Crawlee's Python Debut Makes a Splash: Apify announces Crawlee for Python, boasting about features such as unified interfaces and automatic scaling on GitHub and Product Hunt.

- With support for HTTP, Playwright, and session management, Crawlee is positioned as a robust tool for Python developers engaged in web scraping.

- Llamapp Lassoes Localized RAG Responses: Llamapp emerges as a local Retrieval Augmented Generator, fusing retrievers and language models for pinpoint answer accuracy.

- Enabling Reciprocal Ranking Fusion, Llamapp stays grounded to the source truth while providing tailored responses.

- Slack Bot Agent Revolution in Progress: A how-to guide illustrates the construction of a Slack Bot Agent, utilizing LangChain and ChatGPT for PR review automation.

- The documentation indicates a step-by-step process, integrating several frameworks to refine the PR review workflow.

- Rubik’s AI Pro Opens Door for Beta Testers: Inviting AI enthusiasts, Rubik's AI Pro emerges for beta testing, flaunting its research aid and search capabilities using the 'RUBIX' code.

- They highlight access to advanced models and a premium trial, underpinning their quest for comprehensive search solutions.

OpenInterpreter Discord

- OI Executes with Example Precision: By incorporating code instruction examples, OI's execution parallels assistant.py, showcasing its versatile skill instruction handling.

- This feature enhancement suggests an increase in the functional capabilities, aligning with the growth of sophisticated language models.

- Qwen 2.7B's Random Artifacts: Qwen 2 7B model excels with 128k processing, yet it occasionally generates random '@' signs, causing unexpected glitches in the output.

- While the model's robustness is evident, these anomalies highlight a need for refinement in its generation patterns.

- Local Vision Mode in Compatibility Question: Local vision mode utilization with the parameter '--model i' sparked discussions on its compatibility and whether it opens up multimodal use cases.

- With such features, engineers are probing into the integration of diverse input modalities for more comprehensive AI systems.

- GROQ Synchronized with OS Harmony: There's a wave of inquiry on implementing GROQ alongside OS mode, probing the necessity for a multimodal model in such cases.

- The dialogue underscores an active pursuit for more seamless and cohesive workflows within the AI engineering domain.

- Interpreting Coordinates with Open Interpreter: Open Interpreter’s method of interpreting screen coordinates was queried, indicating a deeper dive into the model's interactive abilities.

- Understanding this mechanism is crucial for engineers aiming to utilize AI for more dynamic and precise user interface interactions.

tinygrad (George Hotz) Discord

- Ampere and Ada Embrace NV=1: Discussions revealed NV=1 support primarily targets Ampere and Ada architectures, leaving earlier models pending community-driven solutions.

- George Hotz stepped in to clarify that Turing generation cards are indeed compatible, as outlined on the GSP firmware repository.

- Karpathy's Class Clinches tinygrad Concepts: For those seeking to sink their teeth into tinygrad, Karpathy's transformative tutorial was recommended, promising engaging insights into the framework.

- The PyTorch-based video serves as a catalyst for exploration, prompting an interactive approach to traversing the tinygrad documentation.

- WSL2 Wrestles with NV=1 Wrinkles: Members grappled with NV=1 deployment dilemmas on WSL2, facing snags with missing device files and uncertainty over CUDA compatibility.

- While the path remains nebulous, NVIDIA's open GPU kernel module emerged as a potential piece of the puzzle for eager engineers.

Interconnects (Nathan Lambert) Discord

- Copilot's Court Conundrum Continues: A majority of claims against GitHub Copilot for allegedly replicating code without credit have been dismissed, with only two allegations left in the legal battle involving GitHub, Microsoft, and OpenAI.

- Concerns raised last November suggested that Copilot's training on open-source software constituted an intellectual property infringement; developers await the court's final stance on the remaining allegations.

- Vector Vocabulary Consolidatio: Control Vector, Steering Vector, and Concept Vectors triggered a debate, leading to a consensus that Steering Vectors* are a form of applying Control Vectors in language models.

- Furthermore, the distinction between Feature Clamping and Feature Steering was clarified and viewed as complementary strategies in the realm of RepEng.

- Google Flame's Flicker Fades: Scores from the 'Google Flame' paper were retracted following the discovery of an unspecified issue, with community jests questioning if the mishap involved 'training on test data'.

- Scott Wiener lambasted a16z and Y Combinator on Twitter for their denunciation of California's SB 1047 AI bill, stirring up a storm in the discourse on AI legislation.

LLM Finetuning (Hamel + Dan) Discord

- Multi GPU Misery & Accelerate Angst: A six H100 GPU configuration unexpectedly delivered training speeds 10 times slower than anticipated, causing consternation.

- Advice circled around tweaking the batch size based on Hugging Face's troubleshooting guide and sharing code for community-driven debugging.

- Realistic Reckoning of Multi GPU Magic: Members pondered over realistic speed uplifts with multi GPU setups, busting the myth of a 10x speed increase, suggesting 6-7x as a more feasible target.

- The speed gain debate was grounded in concerns over communication overhead and the search for throughput optimization.

Cohere Discord

- Educators Eyeing CommandR for Classrooms: A public school teacher is exploring the integration of a Teaching and Learning Platform which leverages CommandR's RAG-optimized features.

- The teacher's initiative received a positive reaction from the community with offers of assistance and collective enthusiasm.

- Night Owls Rejoice Over Dark Mode Development: Dark Mode, awaited by many, is confirmed in the works and is targeted for the upcoming enterprise-level release.

- Discussion indicated that the Darkordial Mode might be adapted for a wider audience, hinting at a potential update for users of the free Coral platform.

Mozilla AI Discord

- Llama.cpp Takes a Performance Dive: An unexpected ~25% performance penalty was spotted arising from llama.cpp when migrating from version 0.8.8 to 0.8.9 on NVIDIA GPUs.

- The issue was stark, with a NVIDIA 3090 GPU's performance falling to a level comparable with a NVIDIA 3060 from the previous iteration.

- Upgrade Woes with Benchmark Suite: Writing a new benchmark suite brought to light performance impacts after upgrading the version of llamafile.

- Community feedback asserted no recent changes should have degraded performance, creating a conundrum for developers.

AI Stack Devs (Yoko Li) Discord

- Rosebud AI's Literary Game Creations: During the Rosebud AI Book to Game Jam, developers were tasked with transforming books into interactive puzzle games, rhythm games, and text-based adventures.

- The jam featured adaptations of works by Lewis Carroll, China Miéville, and R.L. Stine, with winners to be announced on Wednesday, July 10th at 11:30 AM PST.

- Game Devs Tackle Books with AI: Participants showcased their ingenuity in the Rosebud AI Jam, integrating Phaser and AI technology to craft games based on literary classics.

- Expectations are high for the unveiling of the winners at the official announcement in the Rosebud AI Discord community.

MLOps @Chipro Discord

- KAN Paper Discussion Heats Up on alphaXiv: Authors of the KAN paper are actively responding to questions on the alphaXiv forum about their recent arXiv paper.

- Community members are engaging with the authors, discussing the technical aspects and methodology of KAN.

- Casting the Net for Information Retrieval Experts: A podcast host is coordinating interviews with experts from Cohere, Zilliz, and Doug Turnbull on topics of information retrieval and recommendations.

- They've also reached out for additional guest suggestions in the field of information retrieval to add to their series.

LLM Perf Enthusiasts AI Discord

- Querying Anthropic: Any Credit Programs Akin to OpenAI?**: A member posed a question regarding the existence of a credit system similar to OpenAI's for Anthropic, seeking opportunities for experimentation.

- The inquiry reflects a growing interest in accessing Anthropic platforms for development and testing, akin to the OpenAI 10K credit program.

- Understanding Anthropic's Accessibility: A Credits Conundrum: Community members are curious about Anthropic's** supportive measures for developers, questioning the parallel to OpenAI's credits initiative.

- This conversation underscores the need for clearer information on Anthropic's offerings to facilitate AI research and exploration.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The OpenAccess AI Collective (axolotl) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!