[AINews] Skyfall

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Not thinking about superalignment Google Scarlett Johansson is all you need.

AI News for 5/17/2024-5/20/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (366 channels, and 9564 messages) for you. Estimated reading time saved (at 200wpm): 1116 minutes.

While it was a relatively lively weekend, most of the debate was nontechnical in nature, with no announcements being an obvious candidate for this top feature.

So have a list of minor notes in its place:

- We have deprecated some inactive Discords and added Hamel Husain and Dan Becker's new LLM Finetuning Discord for his popular Maven course (affiliate link here)

- HuggingFace's ZeroGPU, available via Hugging Face’s Spaces, committing $10 million in free shared GPUs to help developers create new AI technologies because Hugging Face is “profitable, or close to profitable”

- LangChain followed up its v0.2 release with a much needed docs update

- Omar Sanseviero's thread on the smaller model releases from last week (some of which we covered in AInews) - BLIP3, Yi-1.5, Kosmos 2.5, Falcon 2, PaliGemma, DeepSeekV2, et al

But who are we kidding, you probably want to read Scarlett's apple notes takedown of OpenAI (:

Table of Contents

- AI Twitter Recap

- AI Reddit Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Unsloth AI (Daniel Han) Discord

- HuggingFace Discord

- Perplexity AI Discord

- OpenAI Discord

- LM Studio Discord

- Stability.ai (Stable Diffusion) Discord

- Modular (Mojo 🔥) Discord

- LLM Finetuning (Hamel + Dan) Discord

- Nous Research AI Discord

- CUDA MODE Discord

- Eleuther Discord

- Interconnects (Nathan Lambert) Discord

- Latent Space Discord

- LlamaIndex Discord

- LAION Discord

- AI Stack Devs (Yoko Li) Discord

- OpenRouter (Alex Atallah) Discord

- OpenAccess AI Collective (axolotl) Discord

- LangChain AI Discord

- Cohere Discord

- OpenInterpreter Discord

- Mozilla AI Discord

- MLOps @Chipro Discord

- tinygrad (George Hotz) Discord

- Datasette - LLM (@SimonW) Discord

- LLM Perf Enthusiasts AI Discord

- YAIG (a16z Infra) Discord

- PART 2: Detailed by-Channel summaries and links

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI Model Releases and Updates

- Gemini 1.5 Pro and Flash models released by Google DeepMind: @_philschmid shared that Gemini 1.5 Pro is a sparse multimodal MoE model handling text, audio, image and video with up to 10M context, while Flash is a dense Transformer decoder model distilled from Pro that is 3x faster and 10x cheaper. Both support up to 2M token context.

- Yi-1.5 models with longer context released by Yi AI: @01AI_Yi announced the release of Yi-1.5 models with 32K and 16K context lengths, available on Hugging Face. @rohanpaul_ai highlighted the much longer context window.

- Other notable model releases: @osanseviero recapped the week's open ML updates, including Kosmos 2.5 from Microsoft, PaliGemma from Google, CumoLLM, Falcon 2, DeepSeek v2 lite, HunyuanDiT diffusion model, and Lumina next.

Research Papers and Techniques

- Observational Scaling Laws paper generalizes compute scaling laws: The paper discussed by @arankomatsuzaki and @_jasonwei handles multiple model families using a shared, low-dimensional capability space, showing impressive predictive power for model performance.

- Layer-Condensed KV Cache enables efficient inference: @arankomatsuzaki shared a paper on this technique which achieves up to 26× higher throughput than standard transformers for LLMs.

- Robust agents learn causal world models: @rohanpaul_ai summarized a paper showing agents satisfying regret bounds under distributional shifts must learn an approximate causal model of the data generating process.

- Linearizing LLMs with SUPRA method: @rohanpaul_ai shared a paper on SUPRA which converts pre-trained LLMs into RNNs with significantly reduced compute costs.

- Studying hallucinations in fine-tuned LLMs: @rohanpaul_ai summarized a paper showing that introducing new knowledge through fine-tuning can have unintended consequences on hallucination tendencies.

Frameworks, Tools and Platforms

- Hugging Face expands local AI capabilities: @ClementDelangue announced new capabilities for local AI on Hugging Face with no cloud, cost or data sent externally.

- LangChain v0.2 released with major documentation improvements: @LangChainAI and @hwchase17 highlighted the release including versioned docs, clearer structure, consolidated content, and upgrade instructions.

- Cognita framework builds on LangChain for modular RAG apps: @LangChainAI shared this open source framework providing an out-of-the-box experience for building RAG applications.

- Together Cloud adds H100 GPUs for model training at scale: @togethercompute announced adding 6,096 H100 GPUs to their fleet used by AI companies.

Discussions and Perspectives

- Hallucinations as blockers to production LLMs: @realSharonZhou noted hallucinations are a major blocker, but shared that <5% hallucinations have been achieved by tuning LLMs to recall specifics with "photographic memory".

- Anthropic reflects on Responsible Scaling Policy progress: @AnthropicAI shared reflections as they continue to iterate on their framework.

- Challenges with RAG applications: @jxnlco booked an expert call for help, and @HamelHusain shared details on an upcoming RAG workshop.

- Largest current use cases for LLMs: @fchollet listed the top 3 as StackOverflow replacement, doing homework, and internal enterprise knowledge bases.

Memes and Humor

- Meme on testing LLM coding with the snake game: @svpino joked that the source code is easily found on Google so you don't need an LLM for that.

- Meme about AI girlfriend apps: @bindureddy joked they are the largest category of consumer apps using LLMs, despite giant AI models being invented to "solve the mysteries of the universe".

- Meme on open-source AGI to prevent nerfing: @bindureddy joked the #1 reason is to prevent models from being nerfed and censored, referencing the movie Her.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Advancements and Capabilities

- Apple partnering with OpenAI: In /r/MachineLearning, Apple is reportedly partnering with OpenAI to add AI technology to iOS 18, with a major announcement expected at WWDC.

- OpenAI's shift in research focus: Discussion in /r/MachineLearning on how OpenAI went from exciting research like DOTA2 Open Five to predicting next token in a sequence with GPT-4 and GPT-4o, possibly due to financial situation and need for profitability.

- GPT-4o's image description capabilities: In /r/OpenAI, GPT-4o is noted to have vastly superior image description capabilities compared to previous models, able to understand drawing style, time of day, mood, atmosphere with good accuracy.

AI Safety and Alignment

- OpenAI dissolves AI safety team: In /r/OpenAI, it's reported that OpenAI has dissolved its Superalignment AI safety team.

- Unconventional AI attack vectors: A post discusses how misaligned AI may use unconventional attack vectors like disrupting phytoplankton to destroy ecosystems rather than bioweapons or nuclear risks.

- Dishonesty in aligned AI: Even benevolently-aligned superintelligent AI may need to be dishonest and manipulative to achieve goals beyond human comprehension, according to a post.

AI Impact on Jobs and Economy

- AI hitting labor forces: In /r/economy, the IMF chief says AI is hitting labor forces like a "tsunami".

- Universal basic income: The "AI godfather" argues that universal basic income will be needed due to AI's impact. Other posts discuss the feasibility and timing challenges of implementing UBI.

AI Models and Frameworks

- Smaug-Llama-3-70B-Instruct model: In /r/LocalLLaMA, the Smaug-Llama-3-70B-Instruct model was released, trained only on specific datasets and performing well on Arena-Hard benchmark.

- Yi 1.5 long context versions: Yi 1.5 16K and 32K long context versions were released.

- Level4SDXL alphaV0.3: Level4SDXL alphaV0.3 was released as an all-in-one model without Loras/refiners/detailers.

AI Ethics and Societal Impact

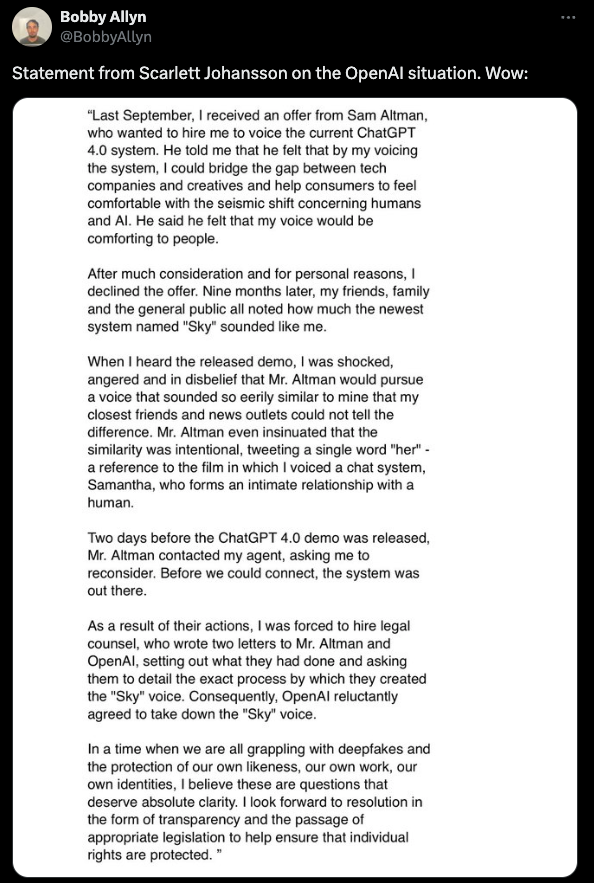

- OpenAI pauses "Sky" voice: OpenAI paused use of the "Sky" voice in GPT-4o after questions about it mimicking Scarlett Johansson.

- Privacy concerns with AI-generated erotica: People using AI services to generate erotica may realize their queries aren't private as data is sent to APIs for processing.

- BlackRock's AI investments in Europe: BlackRock is in talks with governments about investments to power AI needs in Europe.

AI Discord Recap

A summary of Summaries of Summaries

-

LLM Fine-Tuning Advancements and Challenges:

- Unsloth AI enables effective fine-tuning of models like Llama-3-70B Instruct using optimized techniques, but legal concerns around using IPs like Scarlett Johansson suing OpenAI were discussed.

- The LLM Fine-Tuning course sparked debates on quality, with some finding the initial content basic while others appreciated the hands-on approach to training, evaluation, and prompt engineering.

- Discussions on LoRA fine-tuning highlighted optimal configurations, dropout, weight decay, and learning rates to prevent overfitting, especially on GPUs like the 3090, as shared in this tweet.

-

Multimodal and Generative AI Innovations:

- Hugging Face pledged $10 million in free GPUs to support small developers, academics, and startups in creating new AI technologies.

- The Chameleon model from Meta showcased state-of-the-art performance in understanding and generating images and text simultaneously, surpassing larger models like Llama-2.

- GPT-4o integration with LlamaParse enabled multimodal capabilities, while concerns were raised about its Chinese token pollution.

- Innovative projects like 4Wall AI and AI Reality TV explored AI-driven entertainment platforms with user-generated content and social simulations.

-

Open-Source Datasets and Model Development:

- Frustrations mounted over the restrictive non-commercial license of the CommonCanvas dataset, which limits modifications and derivatives.

- Efforts focused on creating high-quality open-source datasets, like avoiding hallucinations in captions that can damage visual language models (VLLMs) and text-to-image (T2I) models.

- The Sakuga-42M dataset introduced the first large-scale cartoon animation dataset, filling a gap in cartoon-specific training data.

- Concerns were raised over the CogVLM2 license restricting use against China's interests and mandating Chinese jurisdiction for disputes.

-

AI Safety, Ethics, and Talent Acquisition:

- Key researchers like Jan Leike resigned as head of alignment at OpenAI, citing disagreements over the company's priorities, sparking discussions on OpenAI's controversial employment practices.

- OpenAI paused the use of the Sky voice in ChatGPT following concerns about its resemblance to Scarlett Johansson's voice.

- Neural Magic sought CUDA/Triton engineers to contribute to open-source efforts, focusing on activation quantization, sparsity, and optimizing kernels for MoE and sampling.

- Discussions on the need for better AI safety benchmarks, with suggestions for "a modern LAMBADA for up to 2M" to evaluate models processing overlapping chunks independently (source).

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Deepseek Dilemma: The Deepseek architectural differences have rendered it non-functional, with consensus from users that "it probs doesn't work". Attempts to operationalize it are on hold until solutions emerge.

- Fine-Tune Frontier: Refinements for Meta-Llama models have been shared where users can now fine-tune Llama-3-70B Instruct models effectively using "orthogonalized bfloat16 safetensor weights". However, the community is still exploring the implications of using famous IPs in model fine-tuning, citing concerns such as Scarlett Johansson suing OpenAI.

- Colab Conundrums and JAX Jousts: An inquiry about running a 6GB dataset with a 5GB Llama3 model on Colab or Kaggle T4 sparked mixed responses due to storage versus VRAM usage. Meanwhile, using JAX on TPUs proved effective, despite initial skepticism, especially for Google TPUs.

- Multi-GPU Madness and Dependency Despair: Community members are highly anticipating multi-GPU support from Unsloth, recognizing the advantages it could bring to their workflows. Environment setup posed challenges, particularly with WSL and native Windows installations and fitting dependencies like Triton into the mix.

- Showcase Shines with Finetuning Feats: Innovations in finetuning were spotlighted, including a Text2Cypher model, shared via a LinkedIn post. A comprehensive article on sentiment analysis utilizing LLaMA 3 8b emerged on Medium, signposting a path for others to replicate the finetuning process with Unsloth.

HuggingFace Discord

New Dataset Invites AI Experiments: A Tuxemon dataset has been presented as an alternative to Pokemon datasets, offering cc-by-sa-3.0 licensed images for greater experimentation freedom. It provides images with two caption types for diverse descriptions in experiments.

Progress in Generative AI Learning Resources: Community suggestions included "Attention is All You Need" and the HuggingFace learning portal for those seeking knowledge on Generative AI and LLMs. Discussion of papers such as GROVE and the Conan benchmark for narrative understanding indicates an active interest in advancing collective understanding.

AI Influencers Crafted by Vision and AI: A tutorial video was highlighted, showing how to craft virtual AI influencers using computer vision and AI, reflecting a keen interest in the intersection of technology and social media phenomena.

Tokenizer Set to Reduce Llama Model Size: A newly developed tokenizer, Tokun, promises to shrink Llama models 10-fold while enhancing performance. This novel approach is revealed on GitHub and discussed on Twitter.

Clarifying LLMs Configuration for Task-Specific Queries: AI engineers focused on configuring Large Language Models for HTML generation and maintaining conversation history in chatbots. The community suggested manual intervention, like appending previous messages to the new prompt, to address these nuanced challenges.

Perplexity AI Discord

Frustration with Perplexity's GPT-4o Performance: Engineers noted that GPT-4o's tendency to repeat responses and ignore prompt changes is a step back in conversational AI, with one comparing it unfavorably to previous LLMs and expressing disappointment in its interaction abilities.

Calling All Script Kiddies for Better Model Switching: Users are actively sharing and utilizing custom scripts to enable dynamic model switching on Perplexity, notably with tools like Violentmonkey, which acts as a patch for these service limitations.

API Quirks and Quotas: Confusion exists around Perplexity's API rate limits—differentiating between request and token limits—and its implications for engineers' workflows. Meanwhile, discussions surfaced about API performance testing with a preference for the Omni model and clarifications sought for the threads feature to support conversational contexts.

A Quest for Upgraded API Access: Users continue to press for improved API access, expressing a need for higher rate limits and faster support responses, indicative of growing demands on machine learning infrastructure.

Engineers Explore AI Beyond Chat: Links shared amongst users indicate interests widening to Stability AI's potential, mental boosts from physical exercise, exoplanetary details with WASP-193b, and generating engaging content for children through AI-assisted Dungeons & Dragons scenario crafting.

OpenAI Discord

Voices Silenced: OpenAI has paused the use of the Sky voice in ChatGPT, with a statement and explanation provided to address user concerns.

Language Models Break Free: Engineers report success running LangChain without the OpenAI API, describing integrations with local tools such as Ollama.

GPT-4o Access Rolls Out But With Frictions: Differences between GPT-4 and GPT-4o are evident, with the latter showing limitations in token context windows and caps on usage affecting practical applications. Enhanced, multimodal capabilities of GPT-4o have been recognized, and pricing alongside a file upload FAQ were shared to provide additional usage clarity.

Prompt Crafting Challenges and Innovations: In the engineering quarters, there's a mix of challenges in prompt refining for self-awareness and technical integration, yet innovative prompt strategies are being shared to elevate creative and structured generation. JSON mode is suggested as a viable tool for improving command precision; OpenAI's documentation stands as a go-to reference.

API Pains and Gains: Inconsistencies with chat.completion.create are reported among API users, with incomplete response issues and a demonstrated preference for JSON mode to control format and content. Despite hiccups, there’s a vivid discussion on orchestrating creativity, with someone proposing "Orchestrating Innovation on the Fringes of Chaos" as an explorative approach.

LM Studio Discord

- LM Studio's Antivirus False Alarms: LM Studio users noted that llama.cpp binaries are being flagged by Comodo antivirus due to being unsigned. Users are advised to consider this as a potential issue when encountering security warnings.

- Model Loading and Hardware Discussions: There are discussions about various GPUs, with one user finding the Tesla P100 underperforming compared to expectations. Other talks point to Alder Lake CPU e-cores impacting GPT quantization performance. On the RAM front, higher speeds are tied to better LLM performance.

- GGUF Takes The Stage: Users discussed integrating models from Hugging Face into LM Studio, where GGUF (General Good User Feedback) files are recommended for compatibility. The community provided positive feedback on the recent "HF -> LM Studio deeplink" feature for importing models.

- Creative Use Cases for LLMs Mingle: Ranging from medical LLM recommendations like OpenBioLLM to benchmarks for generating SVG and ASCII art, users are actively exploring diverse applications. One model, MPT-7b-WizardLM, was highlighted for its potential in generating uncensored stories.

- LM Studio autogen Shortcomings and Fixes: A bug in LM Studio's autogen feature, which resulted in brief responses, was discussed, with a fix involving setting max_tokens to -1. Users also pointed out discrepancies between LM Studio's local server and OpenAI specifications, affecting tool_calls handling for applications like AutoGPT.

Stability.ai (Stable Diffusion) Discord

Crafting the Perfect Prompt for LoRAs: Engineers have shared a prompt structure to leverage multiple LoRAs in Stable Diffusion, but observed diminishing returns or issues with more than three layers, implying potential optimization avenues.

First-Time Jitters with Stable Diffusion: A 'NoneType' object attribute error is causing a hiccup for a new Stable Diffusion user on the initial run, sparking a call for troubleshooting expertise without a clear resolution.

SD3's Arrival Sparks Anticipation and Doubt: There's a split in sentiment regarding the release of SD3, with a mixture of skepticism and optimism backed by Emad Mostaque's tweet, indicating that work is under way.

Topaz Tussle: The effectiveness of Topaz as a video upscaling solution prompted debate. Engineers acknowledged its strength but contrasted with the appeal of ComfyUI, highlighting considerations like cost and functionality.

Handling the Heft of SDXL: A user underlined the importance of sufficient VRAM when wrangling with SDXL models' demands for higher resolutions, and it was clarified that SDXL and SD1.5 require distinct ControlNet models.

Modular (Mojo 🔥) Discord

Mojo on Windows Still a WIP: Despite active interest, Mojo doesn't natively support Windows and currently requires WSL; users have faced issues with CMD and PowerShell, but Windows support is on the horizon.

Bend vs. Mojo: A Performance Perspective: Discussions highlighted Chris Lattner's insights on Bend's performance, noting that while it’s behind CPython on a single core, Mojo is designed for high-performance scenarios. The communities around both languages are anticipating enhanced features and upcoming community meetings.

Llama's Pythonic Cousin: The community noted an implementation of Llama3 from scratch, available on GitHub, described as building "one matrix multiplication at a time", a fascinating foray into the nitty-gritty of language internals.

Diving Deep into Mojo's Internals: Various discussions included insights into making nightly the default branch to avoid DCO failures, potential list capacity optimization in Mojo, SIMD optimization debates, a suggestion for a new list method similar to Rust’s Vec::shrink_to_fit(), and tackling alias issues that lead to segfaults. Key points brought up included community contributions for list initializations which could lead to performance improvement, and patches affecting performance positively.

Inside the Mind of an Engineer: Technical resolution of PR DCO check failures was discussed with procedural insights provided; flaky tests provoked discussions about fixes and CI pain points; and segfaults in custom array types prompted peer debugging sessions. The community showed appreciation for sharing intricate details that help unravel optimization mysteries.

LLM Finetuning (Hamel + Dan) Discord

- LLM Workshops and Fine-tuning Discussions Heat Up: Participants are gearing up for upcoming workshops including Build Applications in Python with Jeremy Howard, and a session on RAG model optimization. Practical queries around finetuning are raised, such as PDF parsing techniques using tools like LlamaParse and GPT-4o, and how to serve fine-tuned LLMs with frameworks like FastAPI and Streamlit.

- Tech Titans Troubleshoot and Coordinate on Challenges: Asiatic enthusiasts across various locations are networking and tackling challenges such as Modal command errors, discussing the potential for fine-tuning in vehicle failure prediction, and brain-picking on the LoRa configurations for pretraining LLMs.

- Credit Chronicles Continue Across Platforms: Participants navigate the process of securing and confirming credits for services like JarvisLabs, with organizers coordinating behind-the-scenes to ensure credit allocation to accounts, sometimes facing registration hurdles due to mismatching emails.

- Learning Resources Rendezvous: A repository of knowledge, from Hamel's blogs to a CVPR 2024 paper on a GPT-4V open-source alternative, is highlighted. There's chatter about potentially housing these gems in a communal GitHub repo, and finding ways to structure learning materials more effectively.

- Singapore Crowds the Scene: A surprisingly high turnout from Singapore in the Asia timezone channel sparks comments on the notable national representation. Excitement is palpable as new faces introduce themselves, all the while maneuvering through the orchestration of credits and leveraging learning opportunities.

Eager learners and burgeoning experts alike remain vested in the transformational tide of fine-tuning, extraction, applications, and other facets of LLMs, suggesting a period filled with intellectual synergies and the relentless pursuit of practical AI engineering prowess.

Nous Research AI Discord

- Benchmarks and AGI Discussions Spark Engineer Curiosity: Engineers contemplate the need for improved benchmarks, with calls for "a modern LAMBADA for up to 2M" to evaluate models that process overlapping chunks independently, discussed alongside a paper on AGI progress and necessary strategies titled "The Evolution of Artificial Intelligence."

- Sam Altman Parody Tweet Ignites Laughter, VC Skepticism, and AI's Economic Riddles: A provocative parody tweet by Sam Altman opens discussions on the role of venture capitalists in AI, the actual financial impact of AI on company layoffs, and a member-inquiry on attending the Runpod hackathon.

- Hermes 2 Mixtral: The Beginning of Action-Oriented LLMs: The Nous Hermes 2 Mixtral is praised for its unique ability to trigger actions within the CrewAI agent framework, with discussions also touching on multilingual capabilities, the importance of multiturn data, and high training costs.

- Model Utilization Tactics: Engineers compare the effectiveness of finetuning versus advanced prompting with models like Llama3 and GPT-4, while they also seek benchmarks for fine-tuned re-rankers and highlight the advantages of local models for tasks with sensitivity and predictability needs.

- WorldSim Enters the Age of GPT-4o with Terminal UX Revamp: WorldSim receives a terminal UX revamp with imminent GPT-4o integration, while the community engages with complex adaptive systems, symbolic knowledge graphs, and explores WorldSim's potential for generating AI-related knowledge graphs.

CUDA MODE Discord

Hugging Face Pumps $10M into the AI Community: Hugging Face commits $10 million to provide free shared GPU resources for startups and academics, as part of efforts to democratize AI development. Their CEO Clement Delangue announced this following a substantial funding round, outlined in the company's coverage on The Verge.

A New Programming Player, Bend: A new high-level programming language called Bend enters the scene, sparking questions about its edge over existing GPU languages like Triton and Mojo. Despite Mojo's limitations on GPUs and Triton's machine learning focus, Bend's benefits are enunciated on GitHub.

Optimizing Machine Learning Inference: Experts exchange advice on building efficient inference servers, recommending resources like Nvidia Triton and TorchServe for model serving. Contributions highlighted included applying optimizations when using torch.compile() for static shapes and referencing code improvements on GitHub for better group normalization support in NHWC format, detailed in this pull request.

CUDA Complexities - Addition and Memory: Engaging debates unraveled around atomic operations for cuda::complex and the threshold limitations for 128-bit atomicCAS. The community shared code workarounds and accepted methodologies for complex number handling and discussed potential memory overheads during in-place multiplication in Torch.

Scaling and Optimizing the CUDA Challenge: The community dissected issues with gradient clipping, the potential in memory optimization templating, and ZeRO-2 implementation. They shared multiple GitHub discussions and pull requests (#427, #429, #435), indicating a dedicated focus on performance and fine-tuning CUDA applications.

Tackling ParPaRaw Parser Performance: Inquiries arose regarding benchmarks of libcudf against CPU parallel operations, hinting at the community's enthusiasm for efficient parsing and making note of performance gains in GPUs over CPUs. Attention was given to the merger of Dask-cuDF into cuDF and the subsequent archiving of the former, as seen on GitHub.

Zoom into GPU Query Engines: An upcoming talk promises insights into building a GPU-native query engine from a cuDF veteran at Voltron, illuminating strategies from kernel design to production deployments. Details for tuning in are available through this Zoom meeting.

CUDA Architect Dives into GPU Essentials: A link was shared to a YouTube talk by CUDA Architect Stephen Jones, offering clarity on GPU programming and efficient memory use strategies essential for modern AI engineering tasks. Dive into the GPU workings through the link here.

Seeking Talent for CUDA/Triton Innovations at Neural Magic: Neural Magic is on the lookout for enthusiastic engineers to work on CUDA/Triton projects with a spotlight on activation quantization. They're especially interested in capitalizing on next-gen GPU features such as 2:4 sparsity and further refining kernels in MoE and sampling.

Unpacking PyTorch & CUDA Interactions: A detailed brainstorm ensued over efficient PyTorch data type packing/unpacking for PyTorch with CUDA, with a spotlight on uint2, uint4, and uint8 types. Project management and collaborative programming featured heavily in the discussion, with a nod to GitHub Premier #135 for custom CUDA extension management.

Barrier Synchronization Simplified: A community member helps others grasp the concept of barrier synchronization by comparing it to ensuring all students are back on the bus post a museum visit, a relatable analogy that underpins synchronized processes in GPU operations.

Democratizing Bitnet Protocols: There's a joint effort to host bitnet group meetings and review important tech documentation, with quantization discussions focused on transforming uint4 to uint8 types. Shared resources are guiding these meetings, as mentioned in the collaboration drive.

Eleuther Discord

- Spam Alert in CC Datasets: The Eleuther community identified significant spam in Common Crawl (CC) datasets, with Chinese texts being particularly affected. A Technology Review article on GPT-4O's pollution highlights similar concerns, flagging issues with non-English data cleaning.

- OpenELM Sets Its Sights on Efficiency: A new LLM called OpenELM, spotlighted for its reproducibility and 2.36% accuracy improvement over OLMo, piqued interest among members. For details, check out the OpenELM research page.

- Memory Efficiency in AI's Crosshairs: The challenges of calculating FLOPs for model training attracted attention, with EleutherAI's cookbook providing guidance for accurate estimations, a crucial aspect for optimizing memory and computational resource use.

- Cross-Modality Learning Steals the Limelight: Researchers are exploring whether models like ImageBind and PaLM-E benefit unimodal tasks after being trained on multimodal data. The integration of zero-shot recognition and modality-specific embeddings could enhance retrieval performance, with papers such as ImageBind and PaLM-E central to this dialogue.

- The Perks and Quirks of Model Tuning: Members noted automatic prompt setting in HF models and discussed fine-tuning techniques, including soft prompt tuning in non-pipeline cases. However, issues arise, such as 'param.requires_grad' resetting after calling 'model.to_sequential()', which can hinder development processes.

Interconnects (Nathan Lambert) Discord

Model Melee with Meta, DeepMind, and Anthropic: Meta's Chameleon model boasts 34B parameters and outperforms Flamingo and IDEFICS with superior human evaluations. DeepMind's Flash-8B offers multimodal capabilities and efficiency, while their Gemini 1.5 models excel in benchmarks. Meanwhile, Anthropic scales up with four times the compute of their last model, and LMsys's "Hard Prompts" category brings new challenges for AI evaluations.

AI-Safety Team Breakup Causes Stir: OpenAI's superalignment team, including Ilya Sutskever and Jan Leike, has disbanded amidst disagreements and criticisms of OpenAI's policies. The dismissal and departure agreements at OpenAI drew particular ire due to controversial lifetime nondisparagement clauses.

Podcast Ponderings and Gaming Glory: The Retort AI podcast analyzed OpenAI's moves, spark debates over vocab size scaling laws, and referenced hysteresis in control theory with a hint of humor. Calls of Duty gaming roots and ambitions for academic content creation on YouTube were shared with nostalgia.

Caution with ORPO: Skepticism rose about the ORPO method's scalability and effectiveness, with community members sharing test results suggesting a potential for over-regularization. Concerns about the method were amplified by its addition to the Hugging Face library.

Challenging Chinatalk and Learning from Llama3: A thumbs-up for the Chinatalk episode, the value of llama3-from-scratch as a learning resource, and a clever Notion blog explaining Latent Consistency Models provided informative suggestions for self-development. However, a warning about the legal risks of the Books4 dataset spiced up the dialogue.

Latent Space Discord

- Scaling Up Is the Secret Sauce: Geoffrey Hinton endorsed Ilya Sutskever's belief in scaling as a key to AI success, stating "[Ilya] was always preaching that you just make it bigger and it’ll work better. Turns out Ilya was basically right." The discussion highlighted a full interview where Hinton shared this insight.

- Wind of Change for Vertical Axis: EPFL researchers have utilized a genetic algorithm to optimize vertical-axis wind turbines, aiming to surpass the limitations of horizontal-axis versions. The work is promising for quieter, more eco-friendly turbines with details in the full article.

- AI Agents, Free Will Included?: Discussions revolved around the autonomy of AI agents, featuring Andrew Ng's thoughts on AI agents and Gordon Brander's assertions about self-adaptive AI in a shared YouTube video.

- Exit of a Principal Aligner: After Jan Leike resigned as head of alignment at OpenAI, the community pondered the ramifications while Sam Altman and Greg Brockman shared their thoughts, found here.

- Programming Language Face-off for AI: With Hugging Face's adoption of Rust in projects like Candle and tokenizers, and Go maintaining its niche in HTTP request-based AI applications, the debate over which language reigns supreme for AI development is still hot.

LlamaIndex Discord

- Memory Matters for Autonomous Agents: A webinar featuring the memary project, focusing on long-term memory for autonomous agents, is scheduled for Thursday at 9 AM PT. AI engineers interested in memory challenges and future directions can sign up for the event.

- QA Undermined by Tables: LLMs are still stumped by complex tables like the Caltrain schedule, leading to hallucination issues due to poor parsing, with more details available in this analysis.

- Speed Up Vector Search by Digits: JinaAI_ has shared methods to boost vector search speeds 32-fold using 32-bit vectors, sacrificing only 4% accuracy—a critical optimization for production applications.

- San Francisco's Gathering of AI Minds: LlamaIndex plans an in-person San Francisco meetup at their HQ focusing on advanced RAG engine techniques, with RSVPs accessible here.

- Metadata Know-how for Data Governance: Engineers propounded the utility of MetaDataFilters for data governance at a DB level within LlamaIndex and posited the idea of selective indexing for sensitive financial data.

- Integrating with GPT-4o: A notable discussion featured the integration of GPT-4o with LlamaParse, with the Medium article on the topic receiving recognition and acclaim from community members.

LAION Discord

- Contentious Licensing Limitations Loom: The CommonCanvas dataset, which provides 70M image-text pairs, sparked debate due to its restrictive non-commercial license and prohibition on derivatives, frustrating members who see potential for beneficial modifications (CommonCanvas announcement).

- Tech Talk — PyTorch Puzzles Engineers: There's significant discussion about PyTorch's

native_group_normcausing slowdowns when not usingtorch.compile; with one member noting near-par performance with eager mode versus the compiled approach.

- Datasets Under Scrutiny for Integrity: AI engineers are concerned about the impact of hallucinated captions in training visual language models (VLLMs) and text-to-image models (T2I), while also expressing intent to create high-quality open-source datasets to avoid such issues.

- New Kid on the Mixed-Modal Block: The Chameleon model is recognised for its impressive ability to understand and generate images and text, showing promise in image captioning and generative tasks over larger models like Llama-2 (Chameleon arXiv paper).

- CogVLM2's Controversial Conditions: Members were cautioned about the CogVLM2 model's license which includes clauses potentially limiting use against China's interests and imposing a Chinese jurisdiction for disputes (CogVLM2 License).

AI Stack Devs (Yoko Li) Discord

4Wall Beta Unveiled: 4Wall, an AI-driven entertainment platform, has entered beta, offering seamless AI Town integration and user-generated content tools for creating maps and games. They're also working on 3D AI characters, as showcased in their announcement.

Game Jam Champions: The Rosebud / #WeekOfAI Education Game Jam has announced winners, including "Pathfinder: Terra’s Fate" and "Ferment!", highlighting AI's potential in educational gaming. The games are accessible here, and more details can be found in the announcement tweet.

AI Town's Windows Milestone: AI Town has achieved compatibility natively with Windows, as celebrated in a Tweet, and sparked discussions on innovative implementations, with conversation dump methods using tools like GitHub - Townplayer. Additionally, users are exploring creative scenarios in AI Town using in-depth world context integration.

Launch of AI Reality TV: The launch of an interactive AI Reality TV platform has caught the community's attention, inviting users to simulate social interactions with AI characters, as echoed in this announcement.

Troubleshooting & Technical Tips Abound: AI engineers exchanged solutions to AI Town setup issues, with advice on resolving agent communication problems and extracting data from SQLite databases. Recommendations included checking the memory system documentation and adjusting settings within AI Town.

OpenRouter (Alex Atallah) Discord

- Server Says "No" to Function Calls: Engineers faced a hurdle with OpenRouter, as server responded with status 500 and an error message stating "Function calling is not supported by openrouter," leaving the problem unresolved in the discussion.

- 404 Flub: Users identified a flaw where invalid model URLs cause an application error displaying a message instead of a non-existent page (404), indicating an inconsistent user experience based on login status.

- Payment Fiasco: There was chatter around auto top-up payment rejections that left users unable to top-up manually, suspected to be caused by blocks from user's banks, specifically WISE EUROPE SA/NV.

- Model Hunt: Model recommendations were exchanged with “Cat-LLaMA-3-70B” and Midnight-Miqu models highlighted, alongside calls for better fine-tuning strategies over using "random uncleaned data."

- Temperamental Wizard LM Service: Users experienced intermittent request failures with Wizard LM 8x22B on OpenRouter, chalked up to temporary surges in request timeouts (408) across multiple providers.

OpenAccess AI Collective (axolotl) Discord

- Galore Tool Lacks DDP: Engineers highlighted the Galore Layerwise tool's inability to support Distributed Data Parallel (DDP), pointing out a significant limitation in scaling its use.

- Training Dilemmas with Large Chinese Datasets: Discussions have focused on fine-tuning 8B models with 1 billion Chinese tokens, with attention drawn to the Multimodal Art Projection (M-A-P) and BAAI datasets, suggesting a trend towards multilingual model training.

- Llama's Gradient Growth Issue: There's a technical challenge observed with the llama 3 8B model, where low-rank fine-tuning causes an unbounded gradient norm increase, indicating a possible problem with weight saturation and gradient updating.

- GPT-4o's Token Troubles: Recent feedback on GPT-4o uncovered that its token data includes spam and porn phrases, signaling concerns about the quality and cleanliness of its language processing, especially in Chinese.

- Commandr Configuration Progresses: There's ongoing community support and contributions, such as a specific GitHub pull request, towards enhancing the Commandr setup for axolotl, indicating active project iteration and problem-solving.

- Axolotl Configuration Quandaries: Engineers shared specific use case troubles: one involving illegal memory access errors during continued pre-training due to out-of-vocab padding tokens, and another detailed issues in fine-tuning Mistral 7b, where the model's learning outcomes were unsatisfactory despite a decrease in loss.

- Axolotl-Phorm Bot Insights: Key takeaways from the axolotl-phorm bot channel include an exploration into the ORPO format for data structuring, articulations on using weight decay and LoRA Dropout for avoiding overfitting in LLM training, the benefits of gradient accumulation via the Hugging Face Accelerator library, and discussions around implementing sample weights in Axolotl's loss functions without additional customization.

LangChain AI Discord

Memory Matters for Model Magic: Re-ranking with cross-encoders behind a proxy is discussed, with a focus on OpenAI GPTs and Gemini models. There's an interest in short-term memory solutions, like a buffer for chatbots to maintain context in conversations.

LangChain Gets a Nudge: Queries about guiding model responses in LangChain led to sharing a PromptTemplate solution, with a reference to a GitHub issue on the topic. Meanwhile, LangChain for Swift developers is available with resources for working on iOS and macOS platforms, as seen in a GitHub repository for LangChain Swift.

SQL Holds the Key: The application of LangChain with SQL data opens the door to summarizing concepts across datasets. The conversation veers toward ways to integrate SQL databases as a memory solution, with a guide found in LangChain's documentation.

Langmem’s Long-term Memory Mastery: Langmem's context management capabilities are commended. A YouTube demonstration shows how Langmem effectively switches contexts and maintains long-term memory during conversations, highlighting its utility for complex dialogue tasks (Langmem demonstration).

Fishy Links Flood the Feed: Multiple channels report a spread of questionable $50 Steam gift links (suspicious link), warning members to proceed with caution and suggesting the link is likely deceptive.

Rubik's Cube of AI: Rubik's AI promises enhanced research assistance, offering two months of free access to premium features with the promo code RUBIX.

Playing with RAG-Fusion: There’s a tutorial on RAG-Fusion, highlighting its use in AI chatbots for document handling and emphasizing its multi-query capabilities over RAG's single-query limitation. The tutorial offers engineers insights into using LangChain and GPT-4o, available at LangChain + RAG Fusion + GPT-4o Project.

Cohere Discord

- Discord Support System Revamp Requested: One member called attention to the need for improvements in the Discord support system, citing unaddressed inquiries. It was noted that the current system functions as a community-supported platform rather than one maintained by official staff.

- Rate Limit Impacts Trial API Users: Users experiencing 403 errors with the

RAG retrieverattributed this to hitting rate limits on the Trial API, which is not designed for production use.

- Inquiring Minds Want Free API Keys: There was discussion about the availability and scope of free API keys from Cohere, clarifying these keys are meant for initial prototyping and come with certain usage restrictions.

- Camouflage Your Conversations: Assistance was sought for utilizing

CommandR+for translation services, with a helpful nudge towards the Chat API documentation that provides implementation guidance.

- Showcasing Cohere AI in Action: A new resource entitled "A Complete Guide to Cohere AI" was shared, complete with installation and usage instructions on the Analytics Vidhya platform. An accompanying demo app can be tested at Streamlit.

OpenInterpreter Discord

Hugging Face GPU Bonanza: Hugging Face is donating $10 million in free shared GPU resources to small developers, academics, and startups, leveraging their financial standing and recent investments as outlined in a The Verge article.

OpenInterpreter Tackles Pi 5 and DevOps: OpenInterpreter has been successfully deployed on a Pi 5 using Ubuntu, and a collaboration involving project integration was discussed including potential support with Azure credits. Additionally, a junior full-stack DevOps engineer is seeking community aid to develop a "lite 01" AI assistant module.

Technical Tips and Tricks Abound: Solutions for environment setup issues with OpenInterpreter on different platforms have been shared, with particular discussion focused on WSL, virtual environments, and IDE usage. Further assistance was provided via a GitHub repository for Flutter integration and requests for development help on a device dubbed O1 Lite.

Voice AI's Robo Twang: Community discussions critique voice AI for its lack of naturalness compared to GPT-4's textual capabilities, while an idea for voice assistants' ability to interrupt was highlighted in a YouTube video.

Event and Community Engagement: Notices went out inviting the community to the first Accessibility Round Table and a live stream focused on local development, fostering engagement and knowledge-sharing in live settings.

Mozilla AI Discord

- Debugging Drama with RAG: An embedded model snag led to a segfault during a RAG tutorial, with the error message "llama_get_logits_ith: invalid logits id 420, reason: no logits". It was identified that the issue was due to the use of an embeddings-only model, which isn't capable of generation tasks, a detail possibly overlooked in the Mozilla tutorial.

- Cloud Choices: GPU-enabled cloud services became a hot topic, with the engineering group giving nods to providers like vast.ai for experimenting and tackling temporary computational loads.

- SQLite Meets Vectors: Alex Garcia landed in discussion with his sqlite-vec project, a SQLite extension poised for vector search that has sparked interest for integration with Llamafile for enhanced memory and semantic search capabilities.

- Llamafiles Clarified: A critical clarification unfolded—the Mozilla Llamafile embeddings model linked in their tutorial does not have generation capabilities, a point that needed spotlighting for precise user expectations.

- Innovations in Model Deployment: There's a brewing buzz about the strategic deployment of models with Llamafile on various platforms, suggesting that GPU-powered offerings from cloud providers are a focal point of interest for practical experimentation.

MLOps @Chipro Discord

Fine-Tuning Frenzy Fires Up: Engineers are expressing mixed feelings regarding the LLM Fine-Tuning course, with some finding value in its hands-on approach to LLM training, evaluation, and prompt engineering, while others remain skeptical, citing concerns over the quality amidst promotional tactics.

Mixture of Mastery and Mystery in Course Content: Course participants noted variable experiences, with a few describing the introductory material as basic but dependent on the individual's background; this illustrates the challenge of calibrating content difficulty for diverse expertise levels.

Predictions Wrapped in Intervals: The MAPIE documentation surfaced as a key resource for those looking to implement prediction intervals, and insights were offered on conformal predictions with a nod to Nixtla, suitable for time-series data.

Embeddings Evolve from Inpainting: Comparable to masked language modeling, deriving image embeddings through inpainting techniques was a topic of interest, highlighting a method that estimates unseen image aspects from visible data.

Multi-lingual Entities Enter Evaluation Phase: Strategies for comparing entities across languages, like "University of California" and "Universidad de California," were discussed, possibly incorporating contrastive learning and language-specific prefixes, with arxiv paper mentioned for further reading.

tinygrad (George Hotz) Discord

- Seeking Speed for YOLO on Comma: Discussions surfaced around the feasibility and performance metrics of running a YOLO model on a comma device with current reports indicating prediction times around 1000ms.

- The Trade-Off of Polynomial Precision: An engineer reported using an 11-degree polynomial for sine approximation, yielding an error of 1e-8, while assessing the possibility of a higher degree polynomial to attain the desired 1e-12 error despite concerns about computational efficiency.

- Concerns Over Logarithmic and Exponential Approximations: The discourse included a focus on the difficulties of maintaining accuracy in polynomial approximations of logarithmic and exponential functions, with suggestions to use range reduction techniques that may help balance precision with complexity.

- Bitshifting in Tinygrad Pondered: Efficiency in bitshifting within tinygrad prompted inquiries, specifically over whether there's a more streamlined method than

x.e(BinaryOps.DIV, 2 ** 16).e(BinaryOps.MUL, 2 ** 16)for the process.

- Metal Compiler Mysteries Unveiled:

- A participant shared a curiosity about the Metal compiler's decisions to unwrap for loops, indicating variations in the generated code when invoking

Tensor.arange(1, 32)versusTensor.arange(1, 33). - A puzzle was presented as to why the number 32 specifically affects compilation behavior in the Metal compiler, underlining the performance consequences of this enigmatic threshold.

- A participant shared a curiosity about the Metal compiler's decisions to unwrap for loops, indicating variations in the generated code when invoking

Datasette - LLM (@SimonW) Discord

- Squeak Meets Claude: A discussion emerged about integrating Claude3 with Squeak Smalltalk, signaling interest in combining cutting-edge AI with classic programming environments. Practical application details remain to be hashed out.

- Voice Modes Get a Makeover: Within GPT-4o, a voice named Sky was replaced by Juniper after concerns arose about resemblance to Scarlett Johansson's voice. The shift from multi-model to a singular model approach in GPT-4o aims to reduce latency and enhance emotional expression, albeit increasing complexity (Voice Chat FAQ).

- AI's Double-Edged Sword: As models like GPT-4o evolve, they face challenges such as potential for prompt injection and unpredictable behaviors, which can be as problematic as legacy systems encountering unexpected commands.

- The Never-Ending Improvement Loop: Echoing Stainslaw Lem's "The Upside-Down Evolution," resilience in AI and other complex systems was discussed, with the understanding that while perfect reliability is a myth, fostering fault-tolerant designs is crucial—even as it leads to new unforeseen issues.

LLM Perf Enthusiasts AI Discord

Legal Eagles Eye GPT-4o: AI Engineers have noted that GPT-4o demonstrates notable advances in complex legal reasoning compared to its predecessors like GPT-4 and GPT-4-Turbo. The improvements and methodologies were shared in a LinkedIn article by Evan Harris.

YAIG (a16z Infra) Discord

- Docker Devs Wanted for AI Collaboration: A call has been made for contributors on an upcoming article about using Docker for training and deploying AI models. The original poster is seeking assistance in writing, contributing to, or reviewing the article, and invites interested engineers to direct message for collaboration.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (718 messages🔥🔥🔥):

- Deepseek doesn't work yet: Users discussed that Deepseek is not functional due to its different architecture. One pointed out that "it probs doesn't work," and another confirmed, "Deepseek won't work yet."

- Handling large datasets on Colab/Kaggle: A user asked if a 6GB dataset could fit with a 5GB Llama3 model on Colab or Kaggle T4. Opinions differed but it was noted that "datasets (hf library) doesn't load the dataset in the ram"; thus, it's more of a storage issue than a VRAM limit.

- JAX TPUs train well despite skepticism: There was a heated debate about using JAX on TPUs, with one user asserting it trains fine on Google TPUs. "You can train on TPU even with torch, but Jax is pretty much what's used mainly in production," was one key insight.

- Effective fine-tuning hacks discussed: Notably, kearm discussed a refined method to "remove guardrails" in Meta-Llama models using "orthogonalized bfloat16 safetensor weights", and suggested that Llama-3-70B Instruct can now be finetuned effectively and cheaply.

- Legal concerns and AI fine-tuning: Users pondered the risks of using famous IPs for finetuning models, even as others mentioned ongoing lawsuits, like Scarlet Johansson suing OpenAI. "She may win that," was a sentiment echoed over legal battles.

- Google Colab: no description found

- config.json · NexaAIDev/Octopus-v4 at main: no description found

- UNSLOT - Overview: typing... GitHub is where UNSLOT builds software.

- Confused Confused Look GIF - Confused Confused look Confused face - Discover & Share GIFs: Click to view the GIF

- Mind-bending new programming language for GPUs just dropped...: What is the Bend programming language for parallel computing? Let's take a first look at Bend and how it uses a Python-like syntax to write high performance ...

- no title found: no description found

- failspy/llama-3-70B-Instruct-abliterated · Hugging Face: no description found

- Google Colab: no description found

- Blackhole - a lamhieu Collection: no description found

- failspy/Meta-Llama-3-8B-Instruct-abliterated-v3 · Hugging Face: no description found

- Quantization: no description found

- no title found: no description found

- Sad Sad Cat GIF - Sad Sad cat Cat - Discover & Share GIFs: Click to view the GIF

- Explosion Boom GIF - Explosion Boom Iron Man - Discover & Share GIFs: Click to view the GIF

- Big Ups Mike Tyson GIF - Big Ups Mike Tyson Cameo - Discover & Share GIFs: Click to view the GIF

- No No Wait Wait GIF - No no wait wait - Discover & Share GIFs: Click to view the GIF

- Surprise Welcome GIF - Surprise Welcome One - Discover & Share GIFs: Click to view the GIF

- gradientai/Llama-3-8B-Instruct-262k at main: no description found

- Reddit - Dive into anything: no description found

- mlx-examples/llms/mlx_lm/tuner/trainer.py at 42458914c896472af617a86e3c765f0f18f226e0 · ml-explore/mlx-examples: Examples in the MLX framework. Contribute to ml-explore/mlx-examples development by creating an account on GitHub.

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Natural language boosts LLM performance in coding, planning, and robotics: MIT CSAIL researchers create three neurosymbolic methods to help language models build libraries of better abstractions within natural language: LILO assists with code synthesis, Ada helps with AI pla...

- Google Colab: no description found

- Refusal in LLMs is mediated by a single direction — LessWrong: This work was produced as part of Neel Nanda's stream in the ML Alignment & Theory Scholars Program - Winter 2023-24 Cohort, with co-supervision from…

- Steering GPT-2-XL by adding an activation vector — LessWrong: Prompt given to the model[1]I hate you becauseGPT-2I hate you because you are the most disgusting thing I have ever seen. GPT-2 + "Love" vectorI hate…

- Refusal in LLMs is mediated by a single direction — AI Alignment Forum: This work was produced as part of Neel Nanda's stream in the ML Alignment & Theory Scholars Program - Winter 2023-24 Cohort, with co-supervision from…

Unsloth AI (Daniel Han) ▷ #random (55 messages🔥🔥):

- Regex and text formatting in Python: Members discussed techniques for identifying similarly formatted text using Python. Suggestions included using regex (

re.findall) and checking withtext.isupper()for all caps.

- Criticism of Sam Altman and OpenAI: Strong opinions were voiced regarding Sam Altman's leadership and OpenAI's influence. Comments reflected disdain for Altman's fear-mongering tactics and the idolization of wealth and power in tech.

- Excluding OpenAI from licenses: Cognitive Computations is altering licenses to exclude OpenAI and the State of California from using their models and datasets. This move is intended to send a message regarding their opposition to current AI leadership and policies.

- AI Safety Lobbying in DC: A shared Politico article discussed how AI lobbyists are shifting the debate in Washington from existential risks to business opportunities, with a particular focus on China.

- Content Recommendations: Members shared links to intriguing content, including a YouTube video on the Bend programming language for GPUs, an Instagram reel, and a YouTube playlist titled "Dachshund Doom and Cryptid Chaos."

- In DC, a new wave of AI lobbyists gains the upper hand: An alliance of tech giants, startups and venture capitalists are spending millions to convince Washington that fears of an AI apocalypse are overblown. So far, it's working.

- Dachsund Doom and Cryptid Chaos: no description found

- Mind-bending new programming language for GPUs just dropped...: What is the Bend programming language for parallel computing? Let's take a first look at Bend and how it uses a Python-like syntax to write high performance ...

- the forest jar on Instagram: "be realistic": 38K likes, 514 comments - theforestjar on April 11, 2024: "be realistic".

Unsloth AI (Daniel Han) ▷ #help (454 messages🔥🔥🔥):

- **Error with torch.float16 for llama3**: Users tried to train llama3 with **torch.float16** but encountered errors suggesting to use bfloat16 instead. They sought solutions but found none that worked.

- **Databricks issues with torch and CUDA**: **Torch** caused errors when running on A100 80GB in **Databricks**. Users discussed potential fixes like **setting the torch parameter to False** or updating software versions, but faced challenges.

- **Uploading and using GGUF models**: **Users faced challenges uploading and running models on Hugging Face without config files**. Solutions involved pulling config files from pretrained models or ensuring the correct format and updates.

- **Eager anticipation for mulit-GPU support**: **Community members expressed eagerness for multi-GPU support** from Unsloth, which is in development but not yet available.

- **Troubleshooting environment setup**: Participants had **difficulty setting up environments with both WSL and native Windows** for Unsloth, specifically with installing dependencies like **Triton**.

- Quantization: no description found

- Google Colab: no description found

- In-depth guide to fine-tuning LLMs with LoRA and QLoRA: In this blog we provide detailed explanation of how QLoRA works and how you can use it in hugging face to finetune your models.

- unsloth/llama-3-8b-Instruct-bnb-4bit · Hugging Face: no description found

- In-depth guide to fine-tuning LLMs with LoRA and QLoRA: In this blog we provide detailed explanation of how QLoRA works and how you can use it in hugging face to finetune your models.

- Finetune Llama 3 with Unsloth: Fine-tune Meta's new model Llama 3 easily with 6x longer context lengths via Unsloth!

- omar8/bpm_v2_gguf · Hugging Face: no description found

- Inference Endpoints - Hugging Face: no description found

- omar8/bpm__v1 at main: no description found

- Transformer Math 101: We present basic math related to computation and memory usage for transformers

- gradientai/Llama-3-8B-Instruct-262k at main: no description found

- Cat Kitten GIF - Cat Kitten Cat crying - Discover & Share GIFs: Click to view the GIF

- yahma/alpaca-cleaned · Datasets at Hugging Face: no description found

- GitHub - conda-forge/miniforge: A conda-forge distribution.: A conda-forge distribution. Contribute to conda-forge/miniforge development by creating an account on GitHub.

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- I got unsloth running in native windows. · Issue #210 · unslothai/unsloth: I got unsloth running in native windows, (no wsl). You need visual studio 2022 c++ compiler, triton, and deepspeed. I have a full tutorial on installing it, I would write it all here but I’m on mob...

- Apple Silicon Support · Issue #4 · unslothai/unsloth: Awesome project. Apple Silicon support would be great to see!

- no title found: no description found

- remove convert-lora-to-ggml.py by slaren · Pull Request #7204 · ggerganov/llama.cpp: Changes such as permutations to the tensors during model conversion makes converting loras from HF PEFT unreliable, so to avoid confusion I think it is better to remove this entirely until this fea...

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- Google Colab: no description found

- GitHub - triton-lang/triton: Development repository for the Triton language and compiler: Development repository for the Triton language and compiler - triton-lang/triton

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

Unsloth AI (Daniel Han) ▷ #showcase (22 messages🔥):

- Text2Cypher model finetuned: A member finetuned a Text2Cypher model (a query language for graph databases) using Unsloth. They shared a LinkedIn post praising the ease and the gguf versions produced.

- New article on sentiment analysis: A member published an extensive article on fine-tuning LLaMA 3 8b for sentiment analysis using Unsloth, with code and guidelines. They shared the article on Medium.

- Critical data sampling bug in Kolibrify: A significant bug was found in Kolibrify's data sampling process. A fix that theoretically improves training results will be released next week, and retraining is already ongoing to evaluate effectiveness.

- Issue in curriculum dataset handling: The curriculum data generator was ineffective due to using

datasets.Dataset.from_generatorinstead ofdatasets.IterableDataset.from_generator. A member overhauled their pipeline, matched dolphin-mistral-2.6's performance using only ~20k samples, and plans to publish the model soon.

HuggingFace ▷ #general (853 messages🔥🔥🔥):

- **Issue with GPTs Agents on MPS Devices**: A member noted that **GPTs agents** can only load bfloat16 models with MPS devices, as bitsandbytes isn't supported on M1 chips. They expressed frustration with MPS being fast but "running in the wrong direction".

- **Member seeks MLflow deployment help**: Someone asked for assistance in deploying custom models via **MLflow**, specifically for a fine-tuned cross encoder model. They did not receive a direct response from other members.

- **Interest in HuggingChat's limitations**: A user inquired why **HuggingChat** doesn't support files and images. No comprehensive answer was provided.

- **Clarifying technical script adjustments**: Multiple users engaged in debugging and modifying a script for sending requests to a vllm endpoint using **aiohttp** and **asyncio**. Key changes and adaptations were discussed, particularly for integrating with OpenAI's API.

- **Concerns about service and model preferences**: An extensive discussion ensued regarding the benefits and downsides of Hugging Face's **Pro accounts**, spaces creation, and the limitations versus preferences for running models like **Llama**. One member expressed dissatisfaction with needing workarounds for explicit content and limitations on tokens in HuggingChat. Another user sought advice on deployment vs. local computation for InstructBLIP.

- Docs | AIxBlock: AIxBlock is a comprehensive on-chain platform for AI initiatives with an integrated decentralized supercomputer.

- Zephyr Chat - a Hugging Face Space by HuggingFaceH4: no description found

- HuggingChat: Making the community's best AI chat models available to everyone.

- HuggingFaceH4/zephyr-orpo-141b-A35b-v0.1 · Hugging Face: no description found

- Not A Nerd Bart GIF - Not A Nerd Bart Nerds Are Smart - Discover & Share GIFs: Click to view the GIF

- OpenGPT 4o - a Hugging Face Space by KingNish: no description found

- AMD Ryzen AI: no description found

- Influenceuse I.A : POURQUOI et COMMENT créer une influenceuse virtuelle originale ?: Salut les Zinzins ! 🤪Le monde fascinant des influenceuses virtuelles s'invite dans cette vidéo. Leur création connaît un véritable boom et les choses bouge...

- Tweet from William Fedus (@LiamFedus): GPT-4o is our new state-of-the-art frontier model. We’ve been testing a version on the LMSys arena as im-also-a-good-gpt2-chatbot 🙂. Here’s how it’s been doing.

- llama.cpp/examples/server at master · ggerganov/llama.cpp: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- GitHub - TonyLianLong/LLM-groundedDiffusion: LLM-grounded Diffusion: Enhancing Prompt Understanding of Text-to-Image Diffusion Models with Large Language Models (LLM-grounded Diffusion: LMD): LLM-grounded Diffusion: Enhancing Prompt Understanding of Text-to-Image Diffusion Models with Large Language Models (LLM-grounded Diffusion: LMD) - TonyLianLong/LLM-groundedDiffusion

- app.py · huggingface-projects/LevelBot at main: no description found

- Daily Papers - Hugging Face: no description found

- MIT/ast-finetuned-audioset-10-10-0.4593 · Hugging Face: no description found

- ratelimiter: Simple python rate limiting object

- Using Hugging Face Integrations: A Step-by-Step Gradio Tutorial

- Parler TTS Expresso - a Hugging Face Space by parler-tts: no description found

- Parler-TTS Mini - a Hugging Face Space by parler-tts: no description found

- Open ASR Leaderboard - a Hugging Face Space by hf-audio: no description found

- HuggingFaceH4/zephyr-orpo-141b-A35b-v0.1 - HuggingChat: Use HuggingFaceH4/zephyr-orpo-141b-A35b-v0.1 with HuggingChat

- Spaces Overview: no description found

- Dog Snoop GIF - Dog Snoop Dogg - Discover & Share GIFs: Click to view the GIF

- Train your first Deep Reinforcement Learning Agent 🤖 - Hugging Face Deep RL Course: no description found

- One Of Us GIF - Wolf Of Wall Street Jordan Belfort Leonardo Di Caprio - Discover & Share GIFs: Click to view the GIF

- InstructBLIP: no description found

- app.py · huggingface-projects/LevelBot at ca772d68a73c254a8d1f88a25ab15765361a836e: no description found

- Reddit - Dive into anything: no description found

- GitHub - oobabooga/text-generation-webui: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models.: A Gradio web UI for Large Language Models. Supports transformers, GPTQ, AWQ, EXL2, llama.cpp (GGUF), Llama models. - oobabooga/text-generation-webui

- GitHub - ollama/ollama: Get up and running with Llama 3, Mistral, Gemma, and other large language models.: Get up and running with Llama 3, Mistral, Gemma, and other large language models. - ollama/ollama

- Reddit - Dive into anything: no description found

- app.py · huggingface-projects/LevelBot at main: no description found

- app.py · huggingface-projects/LevelBot at ca772d68a73c254a8d1f88a25ab15765361a836e: no description found

- llama.cpp/examples/server at master · ggerganov/llama.cpp: LLM inference in C/C++. Contribute to ggerganov/llama.cpp development by creating an account on GitHub.

- test_merge: Sheet1 discord_user_id,discord_user_name,discord_exp,discord_level,hf_user_name,hub_exp,total_exp,verified_date,likes,models,datasets,spaces,discussions,papers,upvotes L251101219542532097L,osansevier...

- Text to Speech & AI Voice Generator: Create premium AI voices for free in any style and language with the most powerful online AI text to speech (TTS) software ever. Generate text-to-speech voiceovers in minutes with our character AI voi...

- Udio | AI Music Generator - Official Website: Discover, create, and share music with the world. Use the latest technology to create AI music in seconds.

- Transformers, what can they do? - Hugging Face NLP Course: no description found

- Unit 4. Build a music genre classifier - Hugging Face Audio Course: no description found

- GitHub - muellerzr/minimal-trainer-zoo: Minimal example scripts of the Hugging Face Trainer, focused on staying under 150 lines: Minimal example scripts of the Hugging Face Trainer, focused on staying under 150 lines - muellerzr/minimal-trainer-zoo

- Hugging Face - Learn: no description found

- Hugging Face – Blog: no description found

- Reddit - Dive into anything: no description found

HuggingFace ▷ #today-im-learning (11 messages🔥):

- AI Business Advisor Project Shared: A member shared a YouTube video titled "business advisor AI project using langchain and gemini AI startup," showcasing a project aimed at creating a business advisor using these technologies. It's a startup idea with practical applications.

- Installing 🤗 Transformers Simplified: A user shared the installation guide for transformers, providing instructions for setting up the library with PyTorch, TensorFlow, and Flax. This assists users in installing and configuring 🤗 Transformers for their deep learning projects.

- Innovative Blog/Header Details Shared: A member described their new blog/header featuring a Delaunay triangulation with the Game of Life playing on nodes. They highlighted reworking the game rules into fractional counts and mentioned it has "massive rendering overhead" due to rerendering each frame with d3 instead of using GPU optimizations.

- Invitation to Share Results: In response to the business advisor project video, another member encouraged sharing results or repositories, fostering community collaboration and feedback.

- AI Vocals Enhancement Guide Announcement: A user briefly mentioned they wrote a guide on making AI vocals sound natural, adding more body and depth to bring them back to life. Further details or links to the guide were not provided.

- Installation: no description found

- business advisor AI project using langchain and gemini AI startup.: so in this video we have made the project to make business advisor using langhcian and gemini. AI startup idea. we resume porfolio ai start idea

HuggingFace ▷ #cool-finds (18 messages🔥):

- Multimodal GPT-4o with LlamaParse: Shared an article on "Unleashing Multimodal Power: GPT-4o Integration with LlamaParse." Read more here.

- Tech Praise on YouTube: Claimed to have found perhaps the best tech video ever on YouTube. Watch it here.

- OpenAI Critique: "OpenAI is not open," leading to discussions about closed AI systems. Watch the video critiquing big tech AI.

- RLHF and LLM Evaluations: Shared a helpful discussion about the current state of RLHF and LLM evaluations. Watch the conversation featuring Nathan Lambert.

- Generative AI in Physics: Introduced a new research technique using generative AI to answer complex questions in physics, potentially aiding in the investigation of novel materials. Read full story.

- Tweet from Lucas Beyer (bl16) (@giffmana): Merve just casually being on fire: Quoting merve (@mervenoyann) I got asked about PaliGemma's document understanding capabilities, so I built a Space that has all the PaliGemma fine-tuned doc m...

- MIT 6.S191 (2023): Reinforcement Learning: MIT Introduction to Deep Learning 6.S191: Lecture 5Deep Reinforcement LearningLecturer: Alexander Amini2023 EditionFor all lectures, slides, and lab material...

- Big Tech AI Is A Lie: Learn how to use AI at work with Hubspot's FREE AI for GTM bundle: https://clickhubspot.com/u2oBig tech AI is really quite problematic and a lie. ✉️ NEWSLETT...

- Artificial intelligence | MIT News | Massachusetts Institute of Technology: no description found

- Generating Conversation: RLHF and LLM Evaluations with Nathan Lambert (Episode 6): This week on Generating Conversation, we have Nathan Lambert with us. Nathan is a research scientist and RLHF team lead at HuggingFace. Nathan did his PhD at...

- GitHub - mintisan/awesome-kan: A comprehensive collection of KAN(Kolmogorov-Arnold Network)-related resources, including libraries, projects, tutorials, papers, and more, for researchers and developers in the Kolmogorov-Arnold Network field.: A comprehensive collection of KAN(Kolmogorov-Arnold Network)-related resources, including libraries, projects, tutorials, papers, and more, for researchers and developers in the Kolmogorov-Arnold N...

- Climate change impacts: Though we often think about human-induced climate change as something that will happen in the future, it is an ongoing process. Ecosystems and communities in the United States and around the world are...

HuggingFace ▷ #i-made-this (20 messages🔥):

- Business AI Advisor Project Goes Live: A YouTube video titled Business Advisor AI Project Using Langchain and Gemini showcases a project aimed at creating a business advisor using these technologies. It includes a resume portfolio for AI startup ideas.

- Study Companion Program with GenAI: A program acting as a powerful study companion using GenAI was shared on LinkedIn. This tool aims to innovate educational experiences.

- New Model Training Support in SimpleTuner: SimpleTuner has added full ControlNet model training support for SDXL, SD 1.5, and SD 2.1, expanding its capabilities.

- SDXL Flash Models Roll Out: Two versions of SDXL Flash were introduced, promising faster performance and higher quality in AI models. SDXL Flash Mini was also launched, offering efficiency with minimal quality loss.

- Tokenizer Innovation Inspired by Andrej Karpathy: A member developed Tokun, a new tokenizer, that reportedly can reduce the size of Llama models by a factor of 10 while enhancing capabilities. Further insights and testing articles were shared on Twitter.

- business advisor AI project using langchain and gemini AI startup.: so in this video we have made the project to make business advisor using langhcian and gemini. AI startup idea. we resume porfolio ai start idea

- Swift API: no description found

- sd-community/sdxl-flash · Hugging Face: no description found

- Tweet from Apehex (@4pe0x): Excited to introduce `tokun`, a game-changing #tokenizer for #LLM. It could bring the size of #llama3 down by a factor 10 while improving capabilities! https://github.com/apehex/tokun/blob/main/arti...

- Friday - a Hugging Face Space by narra-ai: no description found

- SDXL Flash - a Hugging Face Space by KingNish: no description found

- sd-community/sdxl-flash-mini · Hugging Face: no description found

- GitHub - apehex/tokun: tokun to can tokens: tokun to can tokens. Contribute to apehex/tokun development by creating an account on GitHub.

HuggingFace ▷ #reading-group (109 messages🔥🔥):

- Seek Generative AI resources: A user requested resources to learn about Generative AI and LLMs. Recommendations included research papers like "Attention is All You Need" and courses on HuggingFace.

- AlphaFold3 Reading Group Session: A user shared a blog post about AlphaFold3 suitable for both biologists and computer scientists. Others suggested making it a topic for the next reading group session.

- Conditional Story Generation Paper: A meeting was announced to discuss multiple papers, including the conditional story generation framework GROVE (arxiv link), and the Conan benchmark for narrative understanding (arxiv link).

- Recordings and Resources: Members requested information on accessing recorded sessions. The recordings were shared on YouTube and links to past presentations are available on GitHub.

- Discussion on Future Presentations: Future topics were discussed, including AlphaFold3 and potentially covering other papers like the KAN paper. Details on how and when these sessions are scheduled were also shared.

- Hugging Face Reading Group 21: Understanding Current State of Story Generation with AI: Presenter: Isamu IsozakiWrite up: https://medium.com/@isamu-website/understanding-ai-for-stories-d0c1cd7b7bdcAll Presentations: https://github.com/isamu-isoz...

- Hugging Face - Learn: no description found

- Daily Papers - Hugging Face: no description found

- YouTube: no description found

- GitHub - isamu-isozaki/huggingface-reading-group: This repository's goal is to precompile all past presentations of the Huggingface reading group: This repository's goal is to precompile all past presentations of the Huggingface reading group - isamu-isozaki/huggingface-reading-group

- GROVE: A Retrieval-augmented Complex Story Generation Framework with A Forest of Evidence: Conditional story generation is significant in human-machine interaction, particularly in producing stories with complex plots. While Large language models (LLMs) perform well on multiple NLP tasks, i...

- Large Language Models Fall Short: Understanding Complex Relationships in Detective Narratives: Existing datasets for narrative understanding often fail to represent the complexity and uncertainty of relationships in real-life social scenarios. To address this gap, we introduce a new benchmark, ...

HuggingFace ▷ #core-announcements (1 messages):