[AINews] Nemotron-4-340B: NVIDIA's new large open models, built on syndata, great for syndata

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Synthetic Data is 98% of all you need.

AI News for 6/13/2024-6/14/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (414 channels, and 2481 messages) for you. Estimated reading time saved (at 200wpm): 280 minutes. You can now tag @smol_ai for AINews discussions!

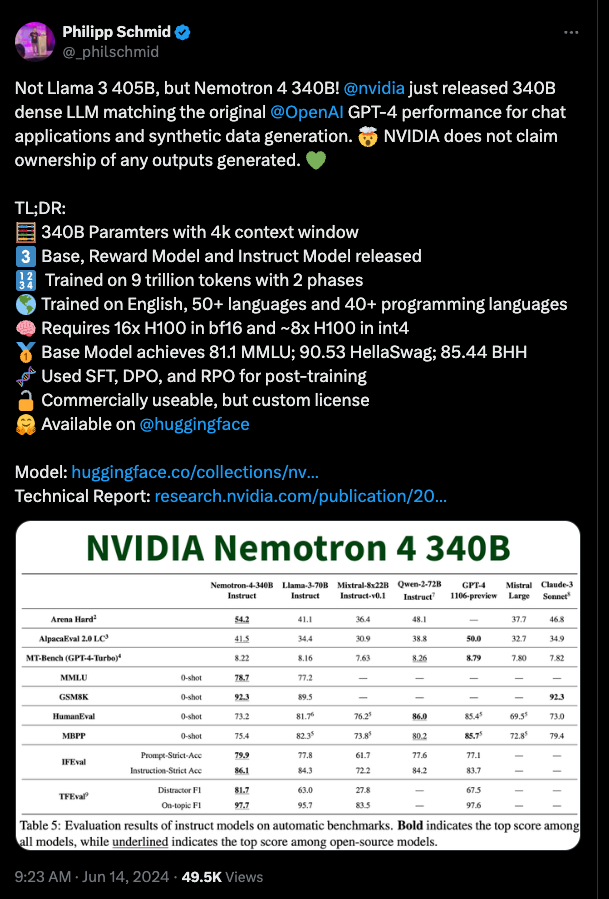

NVIDIA has completed scaling up Nemotron-4 15B released in Feb, to a whopping 340B dense model. Philipp Schmid has the best bullet point details you need to know:

From NVIDIA blog, Huggingface, Technical Report, Bryan Catanzaro, Oleksii Kuchaiev.

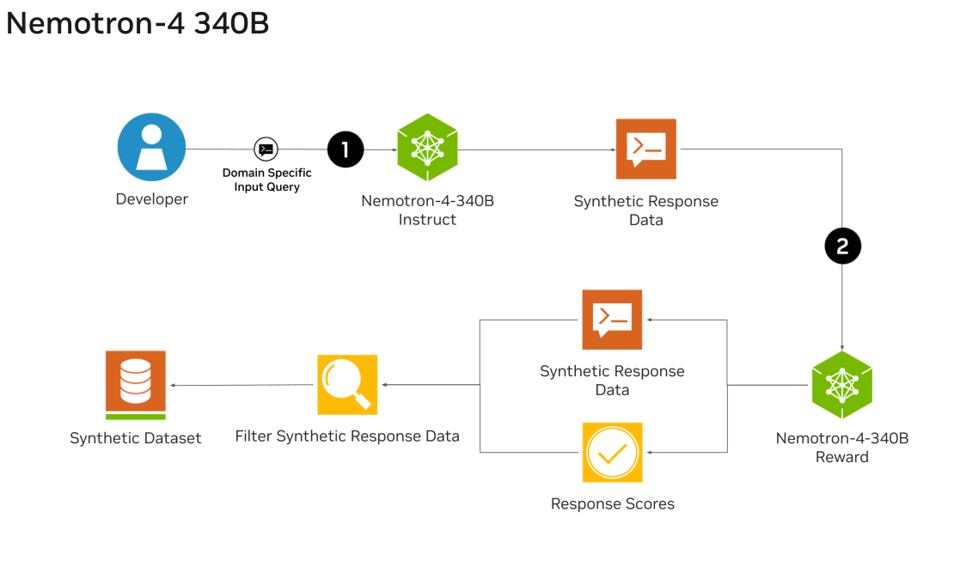

The synthetic data pipeline is worth further study:

Notably, over 98% of data used in our model alignment process is synthetically generated, showcasing the effectiveness of these models in generating synthetic data. To further support open research and facilitate model development, we are also open-sourcing the synthetic data generation pipeline used in our model alignment process.

and

Notably, throughout the entire alignment process, we relied on only approximately 20K human-annotated data (10K for supervised fine-tuning, 10K Helpsteer2 data for reward model training and preference fine-tuning), while our data generation pipeline synthesized over 98% of the data used for supervised fine-tuning and preference fine-tuning.

Section 3.2 in the paper provides lots of delicious detail on the pipeline:

- Synthetic single-turn prompts

- Synthetic instruction-following prompts

- Synthetic two-turn prompts

- Synthetic Dialogue Generation

- Synthetic Preference Data Generation

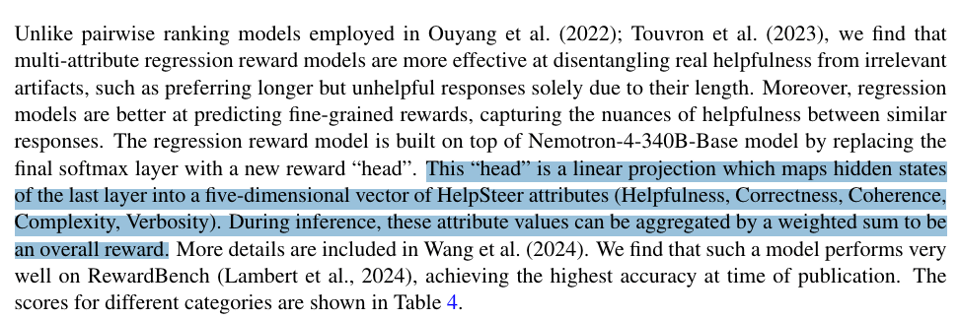

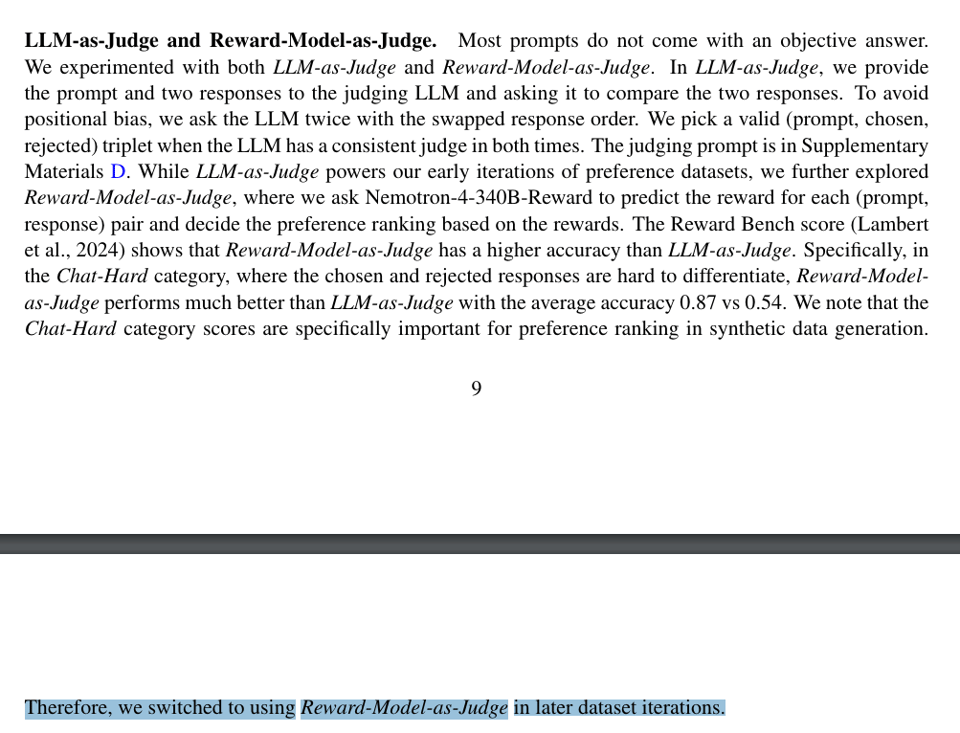

The base and instruct models easily beat Mixtral and Llama 3, but perhaps that is not surprising for half an order of magnitude larger params. However they also release a Reward Model version that ranks better than Gemini 1.5, Cohere, and GPT 4o. The detail disclosure is interesting:

and this RM replaced LLM as Judge

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI Models and Architectures

- New NVIDIA Nemotron-4-340B LLM released: @ctnzr NVIDIA released 340B dense LLM matching GPT-4 performance. Base, Reward, and Instruct models available. Trained on 9T tokens.

- Mamba-2-Hybrid 8B outperforms Transformer: @rohanpaul_ai Mamba-2-Hybrid 8B exceeds 8B Transformer on evaluated tasks, predicted to be up to 8x faster at inference. Matches or exceeds Transformer on long-context tasks.

- Samba model for infinite context: @_philschmid Samba combines Mamba, MLP, Sliding Window Attention for infinite context length with linear complexity. Samba-3.8B-instruct outperforms Phi-3-mini.

- Dolphin-2.9.3 tiny models pack a punch: @cognitivecompai Dolphin-2.9.3 0.5b and 1.5b models released, focused on instruct and conversation. Can run on wristwatch or Raspberry Pi.

- Faro Yi 9B DPO model with 200K context: @01AI_Yi Faro Yi 9B DPO praised for 200K context in just 16GB VRAM, enabling efficient AI.

Techniques and Architectures

- Mixture-of-Agents (MoA) boosts open-source LLM: @llama_index MoA setup with open-source LLMs surpasses GPT-4 Omni on AlpacaEval 2.0. Layers multiple LLM agents to refine responses.

- Lamini Memory Tuning for 95% LLM accuracy: @realSharonZhou Lamini Memory Tuning achieves 95%+ accuracy, cuts hallucinations by 10x. Turns open LLM into 1M-way adapter MoE.

- LoRA finetuning insights: @rohanpaul_ai LoRA finetuning paper found initializing matrix A with random and B with zeros generally leads to better performance. Allows larger learning rates.

- Discovered Preference Optimization (DiscoPOP): @rohanpaul_ai DiscoPOP outperforms DPO using LLM to propose and evaluate preference optimization loss functions. Uses adaptive blend of logistic and exponential loss.
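The LoRA initialization insight above can be illustrated with a minimal sketch. It uses plain Python lists rather than a real training framework, and the function names, the Gaussian scale of 1/rank, and the matrix shapes are assumptions chosen for illustration, not details from the paper. The point it shows is that with B set to zeros the low-rank update B·A is exactly zero at the start, so the adapted model initially matches the base model.

```python
import random


def init_lora(d_out, d_in, rank, seed=0):
    """Return (A, B): A is random (rank x d_in), B is all zeros (d_out x rank)."""
    rng = random.Random(seed)
    # Assumption: small Gaussian noise for A; the exact scale is illustrative.
    A = [[rng.gauss(0.0, 1.0 / rank) for _ in range(d_in)] for _ in range(rank)]
    B = [[0.0] * rank for _ in range(d_out)]
    return A, B


def matmul(X, Y):
    """Plain-Python matrix product of X (n x k) and Y (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


A, B = init_lora(d_out=4, d_in=6, rank=2)
delta_W = matmul(B, A)  # (4 x 2) times (2 x 6) gives a 4 x 6 update

# Because B is zero, the adapter contributes nothing before training starts.
print(all(v == 0.0 for row in delta_W for v in row))
```

Swapping the roles (A zeros, B random) also gives a zero update at initialization; the tweet's claim is about which of the two trains better, which this sketch does not test.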

Multimodal AI

- Depth Anything V2 for monocular depth estimation: @_akhaliq, @arankomatsuzaki Depth Anything V2 produces finer depth predictions. Trained on 595K synthetic labeled and 62M+ real unlabeled images.

- Meta's An Image is Worth More Than 16x16 Patches: @arankomatsuzaki Meta paper shows Transformers can directly work with individual pixels vs patches, resulting in better performance at more cost.

- OpenVLA open-source vision-language-action model: @_akhaliq OpenVLA 7B open-source model pretrained on robot demos. Outperforms RT-2-X and Octo. Builds on Llama 2 + DINOv2 and SigLIP.

Benchmarks and Datasets

- Test of Time benchmark for LLM temporal reasoning: @arankomatsuzaki Google's Test of Time benchmark assesses LLM temporal reasoning abilities. ~5K test samples.

- CS-Bench for LLM computer science mastery: @arankomatsuzaki CS-Bench is comprehensive benchmark with ~5K samples covering 26 CS subfields.

- HelpSteer2 dataset for reward modeling: @_akhaliq NVIDIA's HelpSteer2 is dataset for training reward models. High-quality dataset of only 10K response pairs.

- Recap-DataComp-1B dataset: @rohanpaul_ai Recap-DataComp-1B dataset generated by recaptioning DataComp-1B's ~1.3B images using LLaMA-3. Improves vision-language model performance.

Miscellaneous

- Scale AI's hiring policy focused on merit: @alexandr_wang Scale AI formalized hiring policy focused on merit, excellence, intelligence.

- Paul Nakasone joins OpenAI board: @sama General Paul Nakasone joining OpenAI board praised for adding safety and security expertise.

- Apple Intelligence announced at WWDC: @bindureddy Apple Intelligence, Apple's AI initiatives, announced at WWDC.

Memes and Humor

- Simulation hypothesis: @karpathy Andrej Karpathy joked about simulation hypothesis - maybe simulation is neural and approximate vs exact.

- Prompt engineering dumb in hindsight: @svpino Santiago Valdarrama noted prompt engineering looks dumb today compared to a year ago.

- Yann LeCun on Elon Musk and Mars: @ylecun Yann LeCun joked about Elon Musk going to Mars without a space helmet.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

Stable Diffusion 3.0 Release and Reactions

- Stable Diffusion 3.0 medium model released: In /r/StableDiffusion, Stability AI released the SD3 medium model, but many are disappointed with the results, especially for human anatomy. Some call it a "joke" compared to the full 8B model.

- Heavy censorship and "safety" filtering in SD3: The /r/StableDiffusion community suspects SD3 has been heavily censored, resulting in poor human anatomy. The model seems "asexual, smooth and childlike".

- Strong prompt adherence, but anatomy issues: SD3 shows strong prompt adherence for many subjects, but struggles with human poses like "laying". Some are experimenting with cascade training to improve results.

- T5xxl text encoder helps with prompts: Some find the t5xxl text encoder improves SD3's prompt understanding, especially for text, but it doesn't fix the anatomy problems.

- Calls for community to train uncensored model: There are calls for the community to band together and train an uncensored model, as fine-tuning SD3 is unlikely to fully resolve the issues. However, it would require significant resources.

AI Progress and the Future

- China's rise as a scientific superpower: The Economist reports that China has become a scientific superpower, making major strides in research output and impact.

- Former NSA Chief joins OpenAI board: OpenAI has added former NSA Chief Paul Nakasone to its board, raising some concerns in /r/singularity about the implications.

New AI Models and Techniques

- Samba hybrid architecture outperforms transformers: Microsoft introduced Samba, a hybrid SSM architecture with infinite context length that outperforms transformers on long-range tasks.

- Lamini Memory Tuning reduces hallucinations: Lamini.ai's Memory Tuning embeds facts into LLMs, improving accuracy to 95% and reducing hallucinations by 10x.

- Mixture of Agents (MoA) outperforms GPT-4: TogetherAI's MoA combines multiple LLMs to outperform GPT-4 on benchmarks by leveraging their strengths.

- WebLLM enables in-browser LLM inference: WebLLM is a high-performance in-browser LLM inference engine accelerated by WebGPU, enabling client-side AI apps.

AI Hardware and Infrastructure

- Samsung's turnkey AI chip approach: Samsung has cut AI chip production time by 20% with an integrated memory, foundry and packaging approach. They expect chip revenue to hit $778B by 2028.

- Cerebras wafer-scale chips excel at AI workloads: Cerebras' wafer-scale chips outperform supercomputers on molecular dynamics simulations and sparse AI inference tasks.

- Handling terabytes of ML data: The /r/MachineLearning community discusses approaches for handling terabytes of data in machine learning pipelines.

Memes and Humor

- Memes mock SD3's censorship and anatomy: The /r/StableDiffusion subreddit is filled with memes and jokes poking fun at SD3's poor anatomy and heavy-handed content filtering, with calls to "sack the intern".

AI Discord Recap

A summary of Summaries of Summaries

1. NVIDIA Pushes Performance with Nemotron-4 340B:

- NVIDIA's Nemotron-4-340B Model: NVIDIA's new 340-billion parameter model includes variants like Instruct and Reward, designed for high efficiency and broader language support, fitting on a DGX H100 with 8 GPUs and FP8 precision.

- Fine-tuning Nemotron-4 340B presents resource challenges, with estimates pointing to the need for 40 A100/H100 GPUs, although inference might require fewer resources, roughly half the nodes.
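A rough back-of-the-envelope check of the "fits on a DGX H100 with 8 GPUs at FP8" claim, as a sketch. It counts weights only and ignores KV cache, activations, and framework overhead; the 80 GB per-GPU figure and the helper names are assumptions made for this example.

```python
def weight_memory_gb(n_params, bytes_per_param):
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


def fits_on_node(n_params, bytes_per_param, n_gpus=8, gpu_memory_gb=80):
    """True if the weights alone fit in the node's combined GPU memory.

    Assumption: 8 GPUs with 80 GB each, i.e. 640 GB in total.
    """
    return weight_memory_gb(n_params, bytes_per_param) <= n_gpus * gpu_memory_gb


N_PARAMS = 340e9

print(weight_memory_gb(N_PARAMS, 1))  # FP8: 1 byte per parameter, 340 GB
print(weight_memory_gb(N_PARAMS, 2))  # BF16: 2 bytes per parameter, 680 GB
print(fits_on_node(N_PARAMS, 1))      # FP8 weights fit in 640 GB
print(fits_on_node(N_PARAMS, 2))      # BF16 weights do not
```

Under these assumptions the FP8 weights leave roughly 300 GB of headroom on one node, while 16-bit weights already exceed it. Fine-tuning also has to hold gradients and optimizer state, which is consistent with the much larger GPU counts quoted for it.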

2. UNIX-based Systems Handle SD3 with ComfyUI:

- SD3 Config and Performance: Users shared how setting up Stable Diffusion 3 in ComfyUI involved downloading text encoders, with resolution adjustments like 1024x1024 recommended for better results in anime and realistic renditions.

- Split opinions on the anatomy accuracy of SD3 models spurred calls for Stability AI to address these shortcomings in future updates, reflecting ongoing discussions on community expectations and model limitations.

3. Identifying and Solving GPU Compatibility Issues:

- CUDA and Torch Runtime Anomalies: Anomalies in torch.matmul across Ada GPUs prompted tests comparing GPUs like the RTX 4090, which concluded that CUDA versions and benchmark choices affect performance, with hopes for clarification from the PyTorch team.

- Establishment of multi-threading protocols in Mojo promises performance gains. Insights were shared on how Mojo's structured memory handling can position it as a reliable alternative to CUDA in the long term.

4. API Inconsistencies Frustrate Users:

- Perplexity API and Server Outages: Users reported frequent server outages and broken functionalities, such as file uploads and link generation errors, leading to ongoing frustration and doubts about the value of upgrading to Perplexity Pro.

- LangChain and pgvector Integration: Issues encountered with recognizing imports despite following documentation highlighted challenges, suggesting careful Python environment setups to ensure seamless integration.

5. Community Efforts and Resource Management:

- DiscoPOP Optimization: Sakana AI's method, DiscoPOP, claims superior preference optimization, promising high performance while deviating minimally from the base model.

- Scaling Training Efforts: Community discussions around handling extensive datasets for Retrieval-Augmented Generation (RAG) emphasize chunking, indexing, and query decomposition for improved model training and managing context lengths beyond 8k tokens.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- Stable Diffusion 3 Discord Bot Shared with the World: A Discord bot facilitating the generation of images from prompts using Stable Diffusion 3 was open-sourced, offering users a new tool for visual content creation.

- Tuning SDXL for Better Anime Character Renders: SDXL training for realistic and anime generation faced challenges, producing overly cartoonish results; a recommendation was made to use 1024x1024 resolution to improve outcomes.

- Navigating ComfyUI Setup for SD3: Assistance was provided for setting up Stable Diffusion 3 in ComfyUI, involving steps like downloading text encoders, with guidance sourced from Civitai's quickstart guides.

- Community Split on SD3 Anatomy Accuracy: A discussion focused on the perceived limitations of SD3 particularly in human anatomy rendering, highlighting a user's call for Stability AI to address and communicate solutions for these issues.

- LoRA Layers in Training Limbo for SD3: The conversation touched on training new LoRAs for SD3, noting the lack of efficient tools and workflows, with users anticipating future updates to enhance functionality.

CUDA MODE Discord

Processor Showdown: MilkV Duo vs. RK3588: Engineers compared the MilkV Duo 64MB controller with the RK3588's 6.0 TOPS NPU, raising discussions around hardware capability versus the optimization prowess of the sophgo/tpu-mlir compiler. They shared technical details and benchmarks, prompting curiosity about the actual source of the MilkV Duo's performance benefits.

The Triton 3.0 Effect: Triton 3.0's new shape manipulation operations and bug fixes in the interpreter were hot topics. Meanwhile, a user grappling with the LLVM ERROR: mma16816 data type not supported during low-bit kernel implementation triggered suggestions to engage with ongoing updates on the Triton GitHub repo.

PyTorch's Mysterious Matrix Math: The torch.matmul anomaly led to benchmarking across different GPUs, where performance boosts observed on Ada GPUs sparked a desire for deeper insights from the PyTorch team, as highlighted in a shared GitHub Gist.

C++ for CUDA, Triton as an Alternative: Within the community, the need for C/C++ for crafting high-performance CUDA kernels was affirmed, with an emphasis on Triton's growing suitability for ML/DL applications due to its integration with PyTorch and ability to simplify memory handling.

Tensor Cores Driving INT8 Performance: Discussion in #bitnet centered on achieving performance targets with INT8 operations on tensor cores, with empirical feedback showing up to 7x speedup for large matrix shapes on A100 GPUs but diminishing returns for larger batch sizes. The role of tensor cores in performance for various sized matrices and batch operations was scrutinized, noting efficiency differences between INT8 vs FP16/BF16 and the impacts of wmma requirements.
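For readers unfamiliar with what an INT8 matrix multiply computes, here is a minimal numeric sketch in plain Python. It is not a tensor-core kernel and says nothing about the speedups discussed above; it only shows the usual quantize, integer-accumulate, rescale pattern. Symmetric per-tensor scaling and the function names are assumptions for illustration.

```python
def quantize_int8(matrix):
    """Symmetric per-tensor quantization: returns (integer values, scale)."""
    max_abs = max(abs(v) for row in matrix for v in row)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric INT8 range.
    q = [[max(-127, min(127, round(v / scale))) for v in row] for row in matrix]
    return q, scale


def int8_matmul(A, B):
    """Approximate A @ B by multiplying quantized integers, then rescaling."""
    qa, scale_a = quantize_int8(A)
    qb, scale_b = quantize_int8(B)
    # Integer products are accumulated exactly (hardware would use INT32 here).
    acc = [[sum(x * y for x, y in zip(row, col)) for col in zip(*qb)] for row in qa]
    # One floating-point rescale per output element recovers real-valued units.
    return [[v * scale_a * scale_b for v in row] for row in acc]


A = [[1.0, -2.0], [0.5, 4.0]]
B = [[2.0, 0.0], [1.0, -1.0]]
print(int8_matmul(A, B))  # close to the exact product [[0, 2], [5, -4]]
```

The result differs from the exact product by a small quantization error, which is the accuracy cost traded for cheaper integer arithmetic.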

Inter-threading Discord Discussions: The challenges of following discussions on Discord were aired, with members expressing a preference for forums for information repository and advocating for threading and replies as tactics for better managing conversations in real-time channels.

Meta Training and Inference Accelerator (MTIA) Compatibility: MTIA's Triton compatibility was highlighted, marking an interest for streamlined compilation processes in AI model development stages.

Consideration of Triton for New Architectures: In #torchao, the conservativeness of torch.nn in adding new models was contrasted with AO's receptiveness towards facilitating specific new architectures, indicating selective model support and potential speed enhancements.

Coding Dilemmas and Community-Coding: A collaborative stance was evident as members deliberated over improving and merging intricate Pull Requests (PRs), debugging, and manual testing, particularly in multi-GPU setups on #llmdotc. Multi-threaded conversations highlighted a complexity in accurate gradient norms and weight update conditions linked to ZeRO-2's pending integration.

Blueprints for Bespoke Bitwise Operations: Live code review sessions were proposed to demystify murky PR advancements, and the #bitnet community dissected the impact of scalar quantization on performance, revealing observations like average 5-6x improvements on large matrices and the sensitivity of gains on batch sizes with a linked resource for deeper dive: BitBLAS performance analysis.

Unsloth AI (Daniel Han) Discord

- Unsloth AI Sparks ASCII Art Fan Club: The community has shown great appreciation for the ASCII art of Unsloth, sparking humorous suggestions about how the art should evolve with training failures.

- DiscoPOP Gains Optimization Fans: The new DiscoPOP optimizer from Sakana AI is drawing attention for its effective preference optimization functions, as detailed in a blog post.

- Merging Models: Ollama Latest Commit Buzz: The latest commits to Ollama are showing significant support enhancements, but members are left puzzling over the unclear improvements between Triton 2.0 and the elusive 3.0 iteration.

- GitHub to the Rescue for Llama.cpp Predicaments: Installation issues with Llama.cpp are being addressed with a fresh GitHub PR, and Python 3.9 is confirmed as a minimum requirement for Unsloth projects.

- Gemini API Contest Beckons Builders: The Gemini API Developer Competition invites participants to join forces, brainstorm ideas, and build projects, with hopefuls directed to the official competition page.

LM Studio Discord

Noisy Inference Got You Down?: Chat response generation sounds are likely from computation processes, not the chat app itself, and users discussed workarounds for disabling disruptive noises.

Custom Roles Left to the Playground: Members explored the potential of integrating a "Narrator" role in LM Studio and acknowledged that the current system doesn't support this, suggesting that employing playground mode might be a viable alternative.

Bilibili's Index-1.9B Joins the Fray: Bilibili released Index-1.9B model; discussion noted it offers a chat-optimized variant available on GitHub and Hugging Face. Simultaneously, the conversation turned to the impracticality of deploying 340B Nemotron models locally due to their extensive resource requirements.

Hardware Hiccups and Hopefuls: Conversations revolved around system and VRAM usage, with tweaks to 'mlock' and 'mmap' parameters affecting performance. Hardware configuration recommendations were compared and concerns highlighted about LM Studio version 0.2.24 leading to RAM issues.

LM Studio Leaps to 0.2.25: Release candidate for LM Studio 0.2.25 promises fixes and Linux stability enhancements. Meanwhile, frustration was voiced over lack of support for certain models, despite the new release addressing several issues.

API Angst Arises: A single message flagged a 401 invalid_api_key issue encountered when querying a workflow, with the user experiencing difficulty despite multiple API key verifications.

DiscoPOP Disrupts Training Norms: Sakana AI's release of DiscoPOP promises a new training method and is available on Hugging Face, as detailed in their blog post.

OpenAI Discord

- Cybersecurity Expert Joins OpenAI's Upper Echelon: Retired US Army General Paul M. Nakasone joins the OpenAI Board of Directors, expected to enhance cybersecurity measures for OpenAI's increasingly sophisticated systems. OpenAI's announcement hails his wealth of experience in protecting critical infrastructure.

- Payment Processing Snafu: Engineers are reporting "too many requests" errors when attempting to process payments on the OpenAI API, with support's advice to wait several days deemed unsatisfactory due to impacts on application functionality.

- API Wanderlust: Amidst payment issues and platform-specific releases favoring macOS over Windows, discussions shifted toward alternative APIs like OpenRouter, with a nod to simpler transitions thanks to such intermediary tools.

- GPT-4: Setting Expectations Straight: Engineers clarified that GPT-4 doesn't continue learning post-training, while comparing Command R and Command R+'s respective puzzle-solving capabilities, with Command R+ demonstrating superior prowess.

- Flat Shading Conundrums with DALL-E: A technical query arose regarding the production of images in DALL-E utilizing flat shading, absent of lighting and shadows - a technique akin to a barebones 3D model texture - with the enquirer seeking guidance to achieve this elusive effect.

HuggingFace Discord

- DiscoPOP Dazzles in Optimization: Sakana AI's DiscoPOP algorithm outstrips others such as DPO, offering superior preference optimization by maintaining high performance close to the base model, as documented in a recent blog post.

- Pixel-Level Attention Steals the Show: A Meta research paper, An Image is Worth More Than 16×16 Patches: Exploring Transformers on Individual Pixels, received attention for its deep dive into pixel-level transformers, further developing insights from the #Terminator work and underscoring the effectiveness of pixel-level attention mechanisms in image processing.

- Hallucination Detection Model Unveiled: An update in hallucination detection was shared, introducing a new small language model fine-tune available on HuggingFace, boasting an accuracy of 79% for pinpointing hallucinations in generated text.

- DreamBooth Script Requires Tweaks for Training Customization: Discussions in diffusion model development underscored the need to modify the basic DreamBooth script to accommodate individual captions for predictive training with models like SD3, as well as a separate enhancement for tokenizer training like CLIP and T5, which increases VRAM demand.

- Hyperbolic KG Embedding Techniques Explored: An arXiv paper on hyperbolic knowledge graph embeddings proposed a novel approach that leverages hyperbolic geometry for embedding relational data, positing a methodology involving hyperbolic transformations coupled with attention mechanisms for handling complex data relationships.

Nous Research AI Discord

- Roblox and the Case of Corporate Humor: Engineers found humor on the VLLM GitHub page discussing a Roblox meetup, sparking light-hearted banter in the community.

- Discord on ONNX and CPU Dilemma: A notable mention of an ONNX conversation from another Discord channel claims a 2x CPU speed boost, though skepticism remains regarding benefits on GPU.

- The Forge of Code-to-Prompt: The tool code2prompt converts codebases into markdown prompts, proving valuable for RAG datasets, but resulting in high token counts.

- Scaling the Wall of Context limitation: During discussions on handling context lengths beyond 8k, techniques like chunking, indexing, and query decomposition were suggested for RAG datasets to improve model training and information synthesis.

- Synthetic Data's New Contender: The introduction of the Nemotron-4-340B-Instruct model by NVIDIA was a hot topic, focusing on its implications for synthetic data generation and the potential under NVIDIA's Open Model License.

- WorldSim Prompt Goes Public: Discussions indicated that the worldsim prompt is available on Twitter, and there's a switch to the Sonnet model being considered in conversations about expanding model capabilities.
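The chunking and indexing ideas mentioned in the context-length discussion above can be sketched as follows. This is a toy illustration under stated assumptions: chunks are measured in whitespace-separated words rather than model tokens, the index is a simple keyword lookup rather than a vector store, and all function names are hypothetical.

```python
from collections import defaultdict


def chunk_words(text, chunk_size=200, overlap=50):
    """Split text into overlapping chunks of at most chunk_size words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def build_index(chunks):
    """Map each lowercase word to the set of chunk ids that contain it."""
    index = defaultdict(set)
    for chunk_id, chunk in enumerate(chunks):
        for word in chunk.lower().split():
            index[word].add(chunk_id)
    return index


text = " ".join(str(i) for i in range(10))
chunks = chunk_words(text, chunk_size=4, overlap=1)
print(chunks)  # ['0 1 2 3', '3 4 5 6', '6 7 8 9']
print(sorted(build_index(chunks)["3"]))  # [0, 1]
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of some duplicated text. Query decomposition would then look up each sub-question separately and merge the matching chunk ids.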

Modular (Mojo 🔥) Discord

- Mojo on the Move: The Mojo package manager is underway, prompting engineers to temporarily compile from source or use mojopkg files. For those new to the ecosystem, the Mojo Contributing Guide walks through the development process, recommending starting with "good first issue" tags for contribution.

- GPU and Multi-threading Conversations: The performance and implementation of GPU support in Mojo spurred debates, with attention to its strongly typed nature and how Modular is expanding its support for various accelerators. Engineers exchanged insights on multi-threading, referring to SIMD optimizations and parallelization, focusing on portability and Modular’s potential business models.

- NSF Funding Fuels AI Exploration: US-based scholars and educators should consider the National Science Foundation (NSF) EAGER Grants, supporting the National AI Research Resource (NAIRR) Pilot with monthly reviewed proposals. Resources and community building initiatives are a part of the NAIRR vision, emphasizing the importance of accessing computational resources and integrating data tools (NAIRR Resource Requests).

- New Nightly Mojo Compiler Drops: A new nightly build of the Mojo compiler (version 2024.6.1405) is now available, with updates accessible via modular update. The release's details can be tracked through the provided GitHub diff and the changelog.

- Resources and Meet-Ups Ignite Community Spirit: There's an ongoing request for a standard query setup guide, with the suggestion to mimic Modular's GitHub methods until official directions are available. Additionally, Mojo enthusiasts in Massachusetts signaled interest in a meet-up to discuss Mojo over coffee, highlighting the community's eagerness for knowledge-sharing and direct interaction.

Perplexity AI Discord

- Perplexity Panic: Users experienced a server outage with Perplexity's services, reporting repeated messages and endless loops without any official maintenance announcements, creating frustration due to the lack of communication.

- Technical Troubles Addressed: The Perplexity community identified several technical issues, including broken file upload functionality (attributed to an AB test config) and consistent 404 errors when the service generated kernel links. There was also a discussion on inconsistencies observed in the Perplexity Android app compared to iOS or web experiences.

- Shaky Confidence in Pro Service: The server and communication issues have led to users questioning the value of upgrading to Perplexity Pro, expressing doubts due to the ongoing service disruptions.

- Perplexity News Roundup Shared: Recent headlines were shared among members, including updates on Elon Musk's legal maneuvers, insights into situational awareness, the performance of Argentina's National Football team, and Apple's stock price surge.

- Call for Blockchain Enthusiasts: Within the API channel, there was an inquiry about integrating Perplexity's API with Web3 projects and connecting to blockchain endpoints, suggesting a curiosity or a potential project initiative around decentralized applications.

LlamaIndex Discord

- Atom Empowered by LlamaIndex: Atomicwork has tapped into LlamaIndex's capabilities to bolster their AI assistant, Atom, enabling the handling of multiple data formats for improved data retrieval and decision-making. The integration news was announced on Twitter.

- Correct Your ReAct Configuration: In a configuration mishap, the proper kwarg for the ReAct agent was clarified as max_iterations, not 'max_iteration', resolving an error one of the members experienced.

- Recursive Retriever Puzzle: A challenge was faced while loading an index from Pinecone vector store for a recursive retriever with a document agent, where the member shared code snippets for resolution.

- Weaviate Weighs Multi-Tenancy: There is a community-driven effort to introduce multi-tenancy to Weaviate, with a call for feedback on the GitHub issue discussing the data separation enhancement.

- Agent Achievement Unlocked: Members exchanged several learning resources for building custom agents, including LlamaIndex documentation and a collaborative short course with DeepLearning.AI.

LLM Finetuning (Hamel + Dan) Discord

New Kid on the Block: Helix AI Joins LLM Fine-Tuning Gang: LLM fine-tuning enthusiasts have been exploring Helix AI, a platform that touts secure and private open LLMs with easy scalability and the option of closed model pass-through. Users are encouraged to try Helix AI and check out the announcement tweet related to the platform's adoption of FP8 inference, which boasts reduced latency and memory usage.

Memory Tweaks for the Win: Lamini's memory tuning technique is making waves with claims of 95% accuracy and significantly fewer hallucinations. Keen technologists can delve into the details through their blog post and research paper.

Credits Where Credits Are Due: Confusion and inquiries about credit allocation from platforms like Hugging Face and Langsmith surfaced, with users reporting pending credits and seeking assistance. Mentions of email signups and ID submissions — such as akshay-thapliyal-153fbc — suggest ongoing communication to resolve these issues.

Inference Optimization Inquiry: A single inquiry surfaced regarding optimal settings for inference endpoints, highlighting a demand for performance maximization in deployed machine learning models.

Support Ticket Surge: Various technical issues have been flagged, ranging from non-functional search buttons to troubles with Python APIs, and from finetuning snags on RTX5000 GPUs to problems receiving OpenAI credits. Solutions such as switching to an Ampere GPU and requesting assistance from specific contacts have been offered, yet some user frustrations remain unanswered.

Eleuther Discord

- Boosting LLM Factual Accuracy and Hallucination Control: The newly announced Lamini Memory Tuning claims to embed facts into LLMs like Llama 3 or Mistral 3, boasting a factual accuracy of 95% and a drop in hallucinations from 50% to 5%.

- KANs March Onward to Conquer 'Weird Hardware': Discussions highlighted that KANs (Kolmogorov-Arnold Networks) might be better suited than MLPs (Multilayer Perceptrons) for unconventional hardware by requiring only summations and non-linearities.

- LLMs Get Schooled: Sharing a paper, members discussed the benefits of training LLMs with QA pairs before more complex documents to improve encoding capabilities.

- PowerInfer-2 Speeds Up Smartphones: PowerInfer-2 significantly improves the inference time of large language models on smartphones, with evaluations showing a speed increase of up to 29.2x.

- RWKV-CLIP Scales New Heights: The RWKV-CLIP model, which uses RWKV for both image and text encoding, received commendations for achieving state-of-the-art results, with references to the GitHub repository and the corresponding research paper.

OpenRouter (Alex Atallah) Discord

- OpenRouter on the Hotseat for cogvlm2 Hosting: There's uncertainty about the ability to host cogvlm2 on OpenRouter, with discussions focusing on clarifying its availability and assessing cost-effectiveness.

- Gemini Pro Moderation via OpenRouter Hits a Snag: While attempting to pass arguments to control moderation options in Google Gemini Pro through OpenRouter, a user faced errors, pointing to a need for enabling relevant settings by OpenRouter. The Google billing and access instructions were highlighted in the discussion.

- Query Over AI Studio's Attractive Pricing: A user queried the applicability of Gemini 1.5 Pro and 1.5 Flash discounted pricing from AI Studio on OpenRouter, favoring the token-based pricing of AI Studio over Vertex's model.

- NVIDIA's Open Assets Spark Enthusiasm Among Engineers: NVIDIA's move to open models, RMs, and data was met with excitement, particularly for Nemotron-4-340B-Instruct and Llama3-70B variants, with the Nemotron and Llama3 being available on Hugging Face. Members also indicated that the integration of PPO techniques with these models is seen as highly valuable.

- June-Chatbot's Mysterious Origins Spark Discussion: Speculation surrounds the origins of the "june-chatbot", with some members linking its training to NVIDIA and hypothesizing a connection to the 70B SteerLM model, demonstrated at the SteerLM Hugging Face page.

OpenInterpreter Discord

- Server Crawls to a Halt: Discord users experienced significant performance issues with server operations slowing down, reminiscent of the congestion seen with huggingface's free models. Patience may solve the issue as tasks do eventually complete after a lengthy wait.

- Vision Quest with Llama 3: A member queried about adding vision capabilities to the 'i' model, suggesting a possible mix with the self-hosted llama 3 vision profile. However, confusion persists around the adjustments needed within local files to achieve this integration.

- Automation Unleashed: A shared YouTube video demonstrated automation with AppleScript, highlighting the potential of simplified scripting combined with effective prompting for faster task execution.

- Model-Freezing Epidemic: Engineers flagged up a recurring technical snag where the 'i' model frequently freezes during code execution, requiring manual intervention through a Ctrl-C interrupt.

- Hungry for Hardware: An announced device from Seeed Studio sparked interest among engineers, specifically the Sensecap Watcher, noted for its potential as a physical AI agent for space management.

Cohere Discord

- Cohere Chat Interface Gains Kudos: Cohere's playground chat was commended for its user experience, particularly citing a citation feature that displays a modal with text and source links upon clicking inline citations.

- Open Source Tools for Chat Interface Development: Developers are directed to the cohere-toolkit on GitHub as a resource for creating chat interfaces with citation capabilities, and the Crafting Effective Prompts guide to improve text completion tasks using Cohere.

- Resourcing Discord Bot Creatives: For those building Discord bots, shared resources included the discord.py documentation and the Discord Interactions JS GitHub repository, providing foundational material for the Aya model implementation.

- Anticipation Building for Community Innovations: Community members are eagerly awaiting "tons of use cases and examples" for various builds with a contagiously positive sentiment expressed in responses.

- Community Bonding through Projects: A simple "so cute 😸" reaction to the excitement of upcoming community project showcases reflects the positive and supportive environment among members.

LangChain AI Discord

- RAG Chain Requires Fine-Tuning: A user encountered difficulties with a Retrieval-Augmented Generation (RAG) chain output, as the code provided did not yield the expected number "8". Suggestions were sought for code adjustments to enhance result accuracy by filtering specific questions.

- Craft JSON Like a Pro: In pursuit of crafting a proper JSON object within a LangChain, an engineer received assistance via shared examples in both JavaScript and Python. The guidance aimed to enable the creation of a custom chat model capable of generating valid JSON outputs.

- Integration Hiccup with LangChain and pgvector: Connecting LangChain to pgvector ran into trouble, with the user unable to recognize imports despite following the official documentation. A correct Python environment setup was suggested to mitigate the issue.

- Hybrid Search Stars in New Tutorial: A community member shared a demonstration video on Hybrid Search for RAG using Llama 3, complete with a walkthrough in a GitHub notebook. The tutorial aims to improve understanding of hybrid search in RAG applications.

- User Interfaces Get Snazzier with NLUX: NLUX touts an easy setup for LangServe endpoints, showcased in documentation that guides users through integrating conversational AI into React JS apps using the LangChain and LangServe libraries. The tutorial highlights the ease of creating a well-designed user interface for AI interactions.
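
For the JSON item above, one common pattern is to validate model text before passing it downstream. The following is a minimal sketch using only the Python standard library; `parse_json_output` is a hypothetical helper name, not a LangChain API, and the fallback of slicing between the first `{` and the last `}` is an assumption about how a model might wrap its answer in extra prose.

```python
import json
from typing import Any, Optional


def parse_json_output(text: str) -> Optional[Any]:
    """Return parsed JSON from model text, or None if nothing valid is found."""
    # First attempt: the whole string is already valid JSON.
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        pass

    # Fallback (assumption): the object is embedded in surrounding prose,
    # so try the span from the first '{' to the last '}'.
    start = text.find("{")
    end = text.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        return json.loads(text[start:end + 1])
    except json.JSONDecodeError:
        return None


clean = parse_json_output('{"city": "Paris"}')
wrapped = parse_json_output('Sure, here you go: {"city": "Paris"} Hope that helps.')
missing = parse_json_output("no structured data here")
```

A caller can retry the model or raise an error whenever the helper returns `None`, rather than letting malformed text reach later steps in a chain.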

tinygrad (George Hotz) Discord

- NVIDIA Unveils Nemotron-4 340B: NVIDIA's new Nemotron-4 340B models - Base, Instruct, and Reward - were shared, boasting compatibility with a single DGX H100 using 8 GPUs at FP8 precision. There's a burgeoning interest in adapting the Nemotron-4 340B for smaller hardware configurations, like deploying on two TinyBoxes using 3-bit quantization.

- tinygrad Troubleshooting: Members tackled running compute graphs in tinygrad, with one seeking to materialize the results; the recommended fix was calling .exec, as mentioned in abstractions2.py found on GitHub. Others debated tensor sorting methods, pondered alternatives to PyTorch's grid_sample, and reported CompileError issues when implementing mixed precision on an M2 chip.

- Pursuit of Efficient Tensor Operations: Discussing tensor sorting efficiency, the community pointed at using argmax for better performance in k-nearest neighbors algorithm implementations within tinygrad. There's also a dialogue around finding equivalents to PyTorch operations like grid_sample, referencing the PyTorch documentation to foster deeper understanding amongst peers.

- Mixed Precision Challenges on Apple M2: An advanced user encountered errors when attempting to integrate mixed precision techniques on the M2 chip, which spotlighted compatibility issues with Metal libraries; this highlights the need for ongoing community-driven problem-solving within such niche technical landscapes.

- Collaborative Learning Environment Thrives: Throughout the dialogues, an essence of collaborative problem-solving is palpable, with members sharing knowledge, resources, and fixes for a variety of technical challenges related to machine learning, model deployment, and software optimization.
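
The hardware claims in the Nemotron item above can be sanity-checked with back-of-the-envelope arithmetic. This sketch counts weight storage only and ignores activations, KV cache, and quantization overhead, so real requirements would be higher. The 144 GB per TinyBox figure (six 24 GB GPUs) is an assumption not stated in the discussion.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS = 340e9  # Nemotron-4 340B parameter count

fp8_gb = weight_memory_gb(PARAMS, 8)  # FP8: one byte per parameter
q3_gb = weight_memory_gb(PARAMS, 3)   # 3-bit quantization

DGX_H100_GB = 8 * 80      # eight 80 GB H100 GPUs in one DGX H100
TWO_TINYBOX_GB = 2 * 144  # assumption: 6 x 24 GB GPUs per TinyBox

print(f"FP8 weights:   {fp8_gb:.1f} GB of {DGX_H100_GB} GB")
print(f"3-bit weights: {q3_gb:.1f} GB of {TWO_TINYBOX_GB} GB")
```

Under these assumptions the FP8 weights take about 340 GB and the 3-bit weights about 127.5 GB, so both configurations leave headroom for runtime state on paper.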

Interconnects (Nathan Lambert) Discord

- NVIDIA's Power Move: NVIDIA has announced a massive-scale language model, Nemotron-4-340B-Base, with 340 billion parameters and a 4,096-token context window, intended for synthetic data generation and trained on 9 trillion tokens spanning multiple natural languages and code.

- License Discussions on NVIDIA's New Model: While the Nemotron-4-340B-Base comes with a synthetic data permissive license, concerns arise about the PDF-only format of the license on NVIDIA's website.

- Steering Claude in a New Direction: Claude's experimental Steering API is now accessible for sign-ups, offering limited steering capability of the model's features for research purposes only, not production deployment.

- Japan's Rising AI Star: Sakana AI, a Japanese company working on alternatives to transformer models, has a new valuation of $1 billion after investment from prestigious firms NEA, Lux, and Khosla, as detailed in this report.

- The Meritocracy Narrative at Scale: A highlight on Scale's hiring philosophy as revealed in a blog post indicates a methodical approach to maintaining quality in hiring, including personal involvement from the company's founder.

Latent Space Discord

- Apple’s AI Clusters at Your Fingertips: Apple users may now use their devices to run personal AI clusters, which might reflect Apple's approach to their private cloud according to a tweet from @mo_baioumy.

- From Zero to $1M in ARR: Lyzr achieved $1M Annual Recurring Revenue in just 40 days by switching strategies to a full-stack agent framework, introducing agents such as Jazon and Skott with a focus on Organizational General Intelligence, as highlighted by @theAIsailor.

- Nvidia's Nemotron for Efficient Chats: Nvidia unveiled Nemotron-4-340B-Instruct, a large language model (LLM) with 340 billion parameters designed for English-based conversational use cases, with a context length of 4,096 tokens.

- BigScience's DiLoco & DiPaco Top the Charts: Within the BigScience initiative, systems DiLoco and DiPaco have emerged as the newest state-of-the-art toolkits, with DeepMind notably not reproducing their results.

- AI Development for the Masses: Prime Intellect announced their intent to democratize the AI development process through distributed training and global compute resource accessibility, moving towards collective ownership of open AI technologies. Details on their vision and services can be found at Prime Intellect's website.

OpenAccess AI Collective (axolotl) Discord

- Nemotron Queries and Successes: A query was raised about the accuracy of Nemotron-4-340B-Instruct after quantization, without follow-up details on performance post-quantization. In a separate development, a user successfully installed the Nvidia toolkit and ran the LoRA example, thanking the community for their assistance.

- Nemotron-4-340B Unveiled: The Nemotron-4-340B-Instruct model was highlighted for its multilingual capabilities and extended context length support of 4,096 tokens, designed for synthetic data generation tasks. The model resources can be found here.

- Resource Demands for Nemotron Fine-Tuning: A user inquired about the resource requirements for fine-tuning Nemotron, suggesting the possible need for 40 A100/H100 (80GB) GPUs. It was indicated that inference might require fewer resources than finetuning, possibly half the nodes.

- Expanding Dataset Pre-Processing in DPO: A recommendation was made to incorporate flexible chat template support within DPO for dataset pre-processing, with this feature already proving beneficial in SFT. It involves using a `conversation` field for history, with `chosen` and `rejected` inputs derived from separate fields.

- Slurm Cluster Operations Inquiry: A user sought insights on operating Axolotl on a Slurm cluster, with the inquiry highlighting the community's ongoing interest in effectively utilizing distributed computing resources. The conversation remained open for further contributions.
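
The DPO pre-processing idea above can be illustrated with a small sketch. This is not Axolotl's implementation: `render_chat`, `to_dpo_example`, and the `<|role|>` template are hypothetical, and the row layout simply follows the description of a shared `conversation` history plus separate `chosen` and `rejected` fields.

```python
from typing import Dict, List

Message = Dict[str, str]


def render_chat(messages: List[Message]) -> str:
    """Hypothetical minimal chat template: one '<|role|>' header per message."""
    return "".join(f"<|{m['role']}|>\n{m['content']}\n" for m in messages)


def to_dpo_example(row: dict) -> Dict[str, str]:
    """Build a prompt/chosen/rejected triple from one dataset row.

    Assumes the shared history lives under 'conversation' and the two
    candidate replies live under separate 'chosen' and 'rejected' fields.
    """
    history: List[Message] = row["conversation"]
    # The prompt is the rendered history plus an open assistant turn.
    prompt = render_chat(history) + "<|assistant|>\n"
    return {
        "prompt": prompt,
        "chosen": row["chosen"],
        "rejected": row["rejected"],
    }


row = {
    "conversation": [
        {"role": "system", "content": "You are concise."},
        {"role": "user", "content": "Name a prime number."},
    ],
    "chosen": "7",
    "rejected": "9",
}
example = to_dpo_example(row)
print(example["prompt"])
```

Keeping the template in one function means the same history rendering could serve both SFT and DPO rows, which is the flexibility the recommendation appears to be after.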

LAION Discord

- Dream Machine Animation Fascinates: LumaLabsAI has developed Dream Machine, a tool adept at bringing memes to life through animation, as highlighted in a tweet thread.

- AI Training Costs Slashed by YaFSDP: Yandex has launched YaFSDP, an open-source AI tool that promises to reduce GPU usage by 20% in LLM training, leading to potential monthly cost savings of up to $1.5 million for large models.

- PostgreSQL Extension Challenges Pinecone: The new PostgreSQL extension, pgvectorscale, reportedly outperforms Pinecone with a 75% cost reduction, signaling a shift in AI databases towards more cost-efficient solutions.

- DreamSync Advances Text-to-Image Generation: DreamSync offers a novel method to align text-to-image generation models by eliminating the need for human rating and utilizing image comprehension feedback.

- OpenAI Appoints Military Expertise: An OpenAI news release announced the appointment of a retired US Army General; the item was shared without further context in the messages. The original source was linked but not discussed in detail.

Datasette - LLM (@SimonW) Discord

- Datasette Grabs Hacker News Spotlight: Datasette, a member's project, captured significant attention by making it to the front page of Hacker News, receiving accolades for its balanced treatment of different viewpoints. A quip was made about the project ensuring continuous job opportunities for data engineers.

DiscoResearch Discord

- Choosing the Right German-Savvy Model: Members discussed the superiority of discolm or occiglot models for tasks requiring accurate German grammar, despite general benchmark results; these models sit aptly within the 7-10b range and are case-specific choices.

- Bigger Can Be Slower but Smarter: The trade-off between language quality and task performance may diminish with larger models in the 50-72b range; however, inference speed tends to decrease, necessitating a balance between capability and efficiency.

- Efficiency Over Overkill: For better efficiency, sticking to models that fit within VRAM parameters like q4/q6 was advised, especially as larger models have slower inference speeds. A pertinent resource highlighted was the Spaetzle collection on Hugging Face.

- Training or Merging for the Perfect Balance: Further training or merging of models can be a strategy for managing trade-offs between non-English language quality and the ability to follow instructions, a topic of interest for multi-language model developers.

- The Bigger Picture in Multilingual AI: This discussion underscores the ongoing challenge in achieving the delicate balance between performance, language fidelity, and computational efficiency in the evolution of multilingual AI models.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Stack Devs (Yoko Li) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!