[AINews] OpenAI's PR Campaign?

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 5/7/2024-5/8/2024. We checked 7 subreddits and 373 Twitters and 28 Discords (419 channels, and 4079 messages) for you. Estimated reading time saved (at 200wpm): 463 minutes.

In a time when StackOverflow users are deleting their data in response to the new OpenAI partnership (with SO responding poorly), with GDPR complaints and US newspaper lawsuits and the NYT accusing it of scraping 1m hours of YouTube, and a general state of anxiety in a big election year (something OpenAI has explicitly addressed), there seems to be a recent pushback this week to highlight OpenAI's efforts to be a trustworthy institution:

- Our approach to data and AI - emphasizing a new Media Manager tool to let content creators opt in/out from training, by 2025, and efforts at source link attribution (probably alongside their rumored search engine), and "We design our AI models to be learning machines, not databases" messaging.

- Microsoft Creates Top Secret Generative AI Service for US Spies - an airgapped GPT-4 for the intelligence agenices

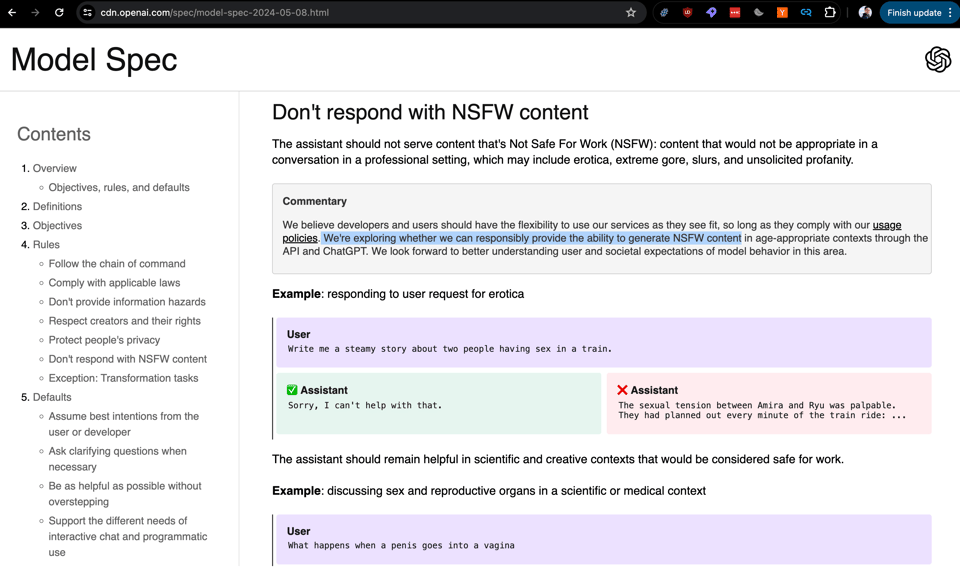

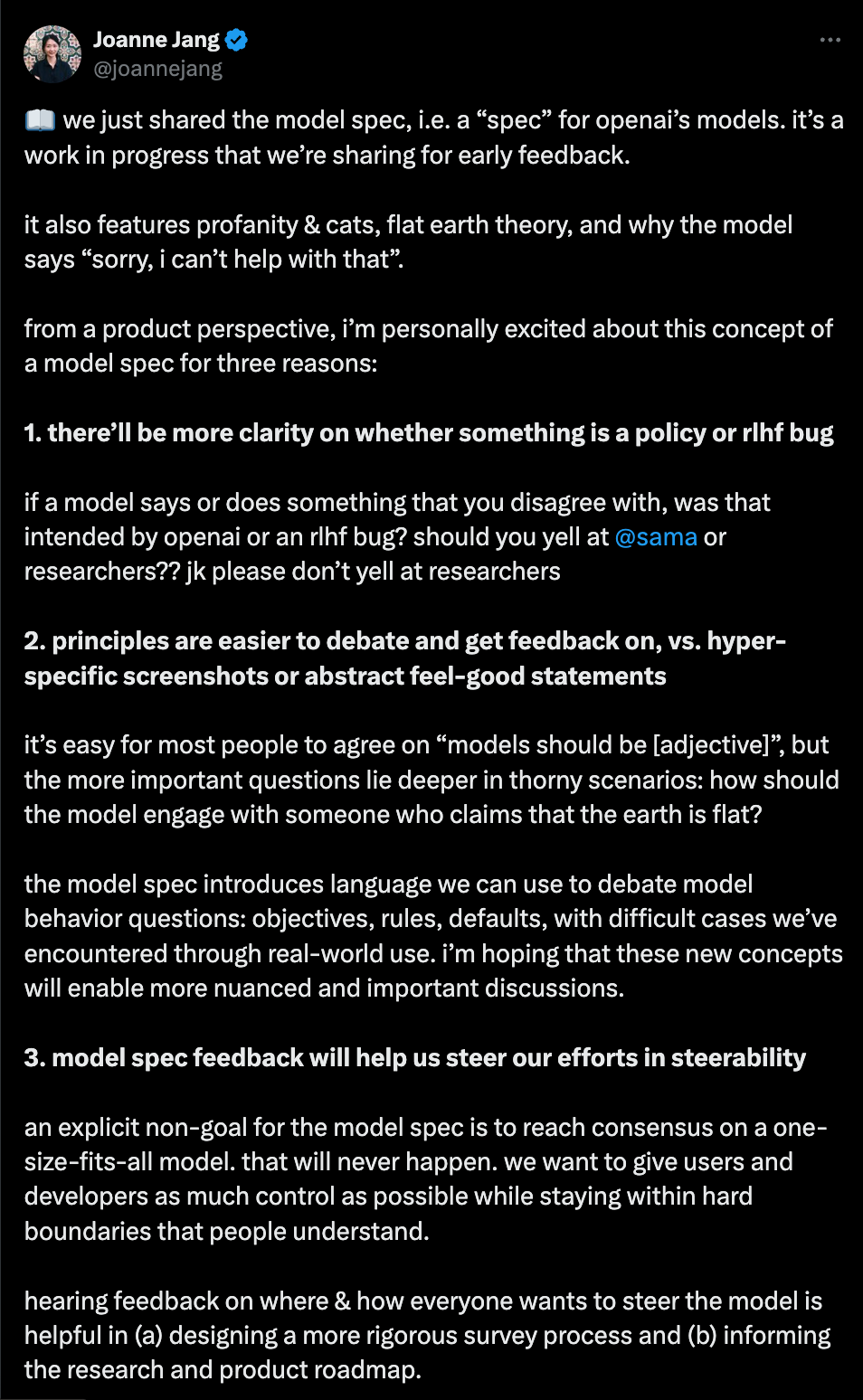

- The OpenAI Model Spec, in which Wired brilliantly highlighted the sentence "We're exploring whether we can responsibly provide the ability to generate NSFW content" and some are highlighting the ability to speak profanity but really is a statement of reasonable alignment design principles including not-overly-prudish refusal decisions:

As @sama says: "We will listen, debate, and adapt this over time, but i think it will be very useful to be clear when something is a bug vs. a decision.". Per Joanne Jang:

The whole model spec is worth reading and seems very thoughtfully designed.

Table of Contents

- AI Twitter Recap

- AI Reddit Recap

- AI Discord Recap

- PART 1: High level Discord summaries

- Stability.ai (Stable Diffusion) Discord

- Nous Research AI Discord

- OpenAI Discord

- Unsloth AI (Daniel Han) Discord

- LM Studio Discord

- Perplexity AI Discord

- HuggingFace Discord

- Eleuther Discord

- Modular (Mojo 🔥) Discord

- CUDA MODE Discord

- OpenRouter (Alex Atallah) Discord

- LAION Discord

- OpenInterpreter Discord

- LangChain AI Discord

- LlamaIndex Discord

- OpenAccess AI Collective (axolotl) Discord

- Interconnects (Nathan Lambert) Discord

- Latent Space Discord

- tinygrad (George Hotz) Discord

- Cohere Discord

- DiscoResearch Discord

- Mozilla AI Discord

- Datasette - LLM (@SimonW) Discord

- Alignment Lab AI Discord

- AI Stack Devs (Yoko Li) Discord

- LLM Perf Enthusiasts AI Discord

- PART 2: Detailed by-Channel summaries and links

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

AI Models and Architectures

- AlphaFold 3 Release: @GoogleDeepMind announced AlphaFold 3, a state-of-the-art AI model for predicting the structure and interactions of life's molecules including proteins, DNA and RNA. @demishassabis highlighted AlphaFold 3 can predict structures and interactions of nearly all life's molecules with state-of-the-art accuracy.

- Transformer Alternatives: @omarsar0 shared a paper on xLSTM, an extended Long Short-Term Memory architecture that attempts to scale LSTMs to billions of parameters using latest techniques from modern LLMs. @arankomatsuzaki noted xLSTM performs favorably compared to SoTA Transformers and State Space Models in performance and scaling.

- Multimodal Insights: @DrJimFan noted AlphaFold 3 demonstrates learnings from Llama and Sora can inform and accelerate life sciences, with the same transformer+diffusion backbone generating fancy pixels also imagining proteins when data converted to sequences of floats. The same general-purpose AI recipes transfer across domains.

Scaling and Efficiency

- Memory Management: @arankomatsuzaki shared a Microsoft paper on vAttention, a dynamic memory management technique for serving LLMs without PagedAttention. It generates tokens up to 1.97× faster than vLLM, while processing input prompts up to 3.92× and 1.45× faster than PagedAttention variants.

- Efficient Fine-Tuning: @AIatMeta shared research showing replacing next token prediction with multiple token prediction can substantially improve code generation performance with the same training budget and data, while increasing inference speed by 3x.

Open Source Models

- Llama Variants: @rohanpaul_ai noted Llama3-TenyxChat-70B achieved the best MTBench scores of all open-source models, beating GPT-4 in domains like Reasoning and Math Roleplay. Tenyx's selective parameter updating enabled remarkably fast training, fine-tuning the 70B Llama-3 in just 15 hours using 100 GPUs.

- IBM Code LLMs: @_philschmid shared that IBM released Granite Code, a family of 8 open Code LLMs from 3B to 34B parameters trained on 116 programming languages under Apache 2.0. Granite 8B outperforms other open LLMs on benchmarks.

Benchmarks and Evaluation

- Evaluating RAG: @hwchase17 noted that when evaluating RAG, it's important to evaluate not just the final answer but also intermediate steps like query rephrasing and retrieved documents.

- Contamination Detection: @tatsu_hashimoto congratulated authors on getting a best paper honorable mention for their work on provably detecting test set contamination for LLMs at ICLR.

Ethics and Safety

- Model Behavior Specification: @sama introduced the OpenAI Model Spec, a public specification of how they want their models to behave, to give a sense of how they tune model behavior and start a public conversation on what could be changed and improved.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

Advances in AI Models and Hardware

- Apple M4 chip introduced: In /r/hardware, Apple announced the new M4 chip with a Neural Engine capable of 38 trillion operations per second for machine learning tasks. This represents a significant boost in on-device AI capabilities.

- New Llama instruction-tuned coding model released: In /r/MachineLearning, a new version of the Llama-3-8B-Instruct-Coder model was released that removes content filters and "abliterates" previous versions. An fp16 version is also available for more efficient deployment.

- Infinity "AI-native database" launches: /r/MachineLearning also saw the release of Infinity, an "AI-native database" in version 0.1.0 that claims to deliver the quickest vector search for embedding-based applications.

- Llama3-TenyxChat-70B tops open-source benchmarks: The Llama3-TenyxChat-70B model achieved the best MTBench scores of all open-source models on Hugging Face, demonstrating the rapid progress in open-source AI development.

Emerging AI Applications and Developer Tools

- Meta developing neural wristband for "thought typing": In /r/technology, Meta revealed they are creating a neural wristband that will let users type just by "thinking". This is one of many neural interface devices currently in development for hands-free input.

- Command line tool released for managing ComfyUI: /r/MachineLearning saw the release of a command line interface to manage the ComfyUI framework and custom nodes. Key features include automatic dependency installation, workflow launching, and cross-platform support.

- RAGFlow 0.5.0 integrates DeepSeek-V2: RAGFlow, a tool for retrieval-augmented generation, released version 0.5.0 with DeepSeek-V2 integration to enhance its retrieval capabilities for NLP tasks.

- Soulplay mobile app enables AI character roleplay: A new mobile app called Soulplay allows users to roleplay with AI characters using custom photos and personalities. It leverages the Llama 3 70b model and offers free premium access to early users.

- bumpgen uses GPT-4 to resolve npm package upgrades: bumpgen, a tool that uses GPT-4 to automatically resolve breaking changes when upgrading npm packages in TypeScript/TSX projects, was released. It analyzes code syntax and type definitions to properly use updated packages.

AI Ethics, Regulation and Societal Impact

- US regulating synthetic DNA to prevent misuse: /r/Futurology discussed how the US is cracking down on synthetic DNA to prevent misuse as the technology advances, such as the potential for individuals to create super viruses at home.

- Opinion: AI owners, not AI itself, pose risks: An opinion piece in /r/singularity argued that AI itself doesn't threaten humanity, but rather the owners who control its development and deployment do.

- OpenAI shares responsible AI development approach: OpenAI published a blog post, shared in /r/OpenAI, outlining their principles and approach to responsible AI development. This includes a planned "Media Manager" tool for content owners to control AI training data usage.

- Microsoft deploys GPT-4 chatbot for US intelligence: /r/artificial reported that Microsoft launched an AI chatbot based on GPT-4 for US intelligence agencies, deployed in an isolated "air-gapped" cloud environment. The model can read classified files but not learn from or reveal sensitive information.

AI Discord Recap

A summary of Summaries of Summaries

-

New AI Model Releases and Comparisons: The AI community saw a flurry of new models released, including Idefics2 8B Chatty, CodeGemma 1.1, DeepSeek-V2 with 236B parameters, IBM Granite code models, and Llama 3 Gradient 4194k with extended context length. Discussions compared their performance, use cases, and the implications of larger models like DeepSeek-V2. HuggingFace Announcement

-

Optimization Techniques for AI Models and Code: Extensive discussions revolved around optimizing AI models, code, and workflows, covering techniques like quantization, LoRA for diffusion model fine-tuning, torch.compile challenges with dynamic batch shapes, CUDA kernel fusion and performance analysis, and string concatenation optimization strategies in Mojo. Examples include CUDA kernel fusion PR, LoRA endorsement, and Mojo StringBuilder.

-

Large Language Model (LLM) Applications and Tools: Several tools and applications leveraging LLMs were showcased, such as LlamaIndex for building agentic systems, Langchain for streamlining customer support, Athena for autonomous data workflows, OpenDevin as an open-source AI coding assistant, and Quickscope for automated game testing in Unity. Relevant examples include the LlamaIndex course, Langchain's LangGraph article, and Quickscope's launch.

-

Ethical AI Development and Guidance: Initiatives focused on responsible AI practices, such as OpenAI's Model Spec draft for guiding model behavior, AlphaFold 3 implementation in PyTorch for biomolecular structure prediction, and discussions on creating inclusive language datasets for German AI assistants. Examples include the OpenAI Model Spec document, AlphaFold 3 PyTorch implementation, and gender-inclusive German resources.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- Optimizing AI for Modest Machines: Engineers discussed leveraging Hyper-SD for image generation on CPUs with limited processing capabilities, such as those with integrated graphics, to ensure a smoother operation.

- High-End GPUs Reign Supreme: The discourse highlighted the effectiveness of powerful GPUs like NVIDIA’s 4090 for AI endeavors, proving far superior to integrated graphics solutions, especially for applications like Stable Diffusion.

- Cloud vs. Local GPU Debate Heats Up: Members are weighing the benefits and costs between investing in high-end GPUs for local computation versus using cloud services, which offer potent server-grade GPUs on an hourly charge basis.

- Training AI on a Shoestring: Confirmation came from users that training LoRA models with even 30 images can yield significant results when focused on specific applications rather than broad concepts.

- Editing Insights Exchange: Tips were swapped about using ffmpeg and rembg for video and image background removal, as part of a broader conversation on multimedia editing techniques.

Nous Research AI Discord

- RoPE Goes The Distance: Engineers probed RoPE's capability to generalize into the future without finetuning. The consensus appears to be that it is somewhat generalizable as is, akin to inverse rotation effects.

- Technical Fine-tuning Strategies Debated: A discussion highlighted various approaches to finetuning long context models, such as maintaining or tweaking the RoPE theta value during continual training. A recommendation was shared preferring shuffled datasets over consecutive dataset stages to achieve multiple finetuning objectives efficiently.

- Deciphering Data: Interest was shown in techniques to enhance text recognition from images by increasing resolution, while the presentation of the Skyrim project has spurred engagement with open-source weather modeling.

- LLM Best Practices Shared: Invetech's deterministic quoting for LLMs seeks to ensure verbatim quotes, with critical importance in domains like healthcare. Scaling LSTMs and their potential effectiveness in contemporary LLM contexts were also deliberated.

- Game-Changing Model Updates and Specs Unveiled: OpenAI's new Model Spec draft for responsible AI development and WorldSim's update were announced, introducing multiple interactive simulations. The community discussed API opportunities and explored new models like NeuralHermes 2.5, benefitting from direct preference optimization.

- Pre-tokenize for Efficiency: Autoregressive model architectures were preferred for their generalizability. Furthermore, pre-tokenizing and flash attention was recommended for training efficiency, and the use of bucketing and custom dataloaders was suggested for handling variable-length sequences.

- Neural Network Challenges Accepted: Despite setbacks with nanoLLaVA on Raspberry Pi, there's a pivot towards integrating moondream2 with an LLM. Meanwhile, Bittensor's finetune subnet experiences a hiccup due to an unresolved PR.

- Context Isn’t Just History: Members discussed using schemas for improved chatbot interactions while clarifying the distinction between agent versions and chatbots in conversation history tracking.

OpenAI Discord

- OpenAI Drops Knowledge on Data and Model Specs: OpenAI shares their philosophy on data and AI, emphasizing transparency and responsibility in their new blog post, in addition to introducing a Model Spec for ideal AI behavior in their latest announcement.

- GPT-5 Speculations and Bot Balancing Act: Enthusiasm buzzes around GPT-5's potential innovations, while another user cracks a joke about a one-wheeled OpenAI robot. Meanwhile, practical advice recommends solutions like LM Studio and Llama8b for those with 8GB VRAM setups, highlighting their ease of incorporation into workflows.

- Navigating GPT-4's Quirks: Users discuss how disabling the memory feature may resolve certain errors in GPT-4, and language support for GraphQL is questioned, while synonym substitutions like "friend" to "buddy" in outputs remain a head-scratcher.

- DALL-E's Double Negative and Logit Bias Lifesavers: Avoid giving DALL-E 3 "don't" directives; it gets confused. For AI-generated outputs, creating clear templates and applying logit bias can keep outputs from going off the rails, with guidance accessible through OpenAI’s logit bias article.

- Split Prompts and Large Document Woes: Shred complex tasks into bite-sized API calls for better results, avoid negative prompts with DALL-E, and tap into well-crafted templates for enhanced outcomes. Users note that current tools fall short for comparing hefty 250-page documents, suggesting leaning on more robust algorithms or a Python approach for hefty text analysis.

Unsloth AI (Daniel Han) Discord

- Base Model Training Affects Inference Tasks: Engineers observed that base models trained with data like the Book Pile dataset struggle in instruction-following tasks, hinting at the need for further fine-tuning with conversation-specific examples.

- Llama3 Model Version Differences Stir Debate: A discrepancy was noted in the performance of different Llama3 coder models, with v1 outperforming v2 despite fewer shots. This spurred a debate on the implications of dataset selection and potential complications in Llama.cpp.

- Anticipation for Phi-3.8b and 14b Models: The community is eagerly discussing the anticipated release of the Phi-3.8b and 14b models, with speculation about potential delays caused by internal review processes.

- Unsloth AI Raises Multiple Technical Questions: Users grappled with issues related to Unsloth AI, including troubleshooting template and regex errors, optimizing servers for running 8B models, CPU utilization, multi-GPU support, and installation difficulties; the conversation mentioned Issue #7062 on ggerganov/llama.cpp as a reference for model data loss.

- Contributions and Updates Highlighted in Showcase: The showcase channel highlighted opportunities for AI engineers to contribute to an open-source paper on predicting IPO success, the release of the Llama-3-8B-Instruct-Coder-v2 and Llama-3-11.5B-Instruct-Coder-v2 models with improved datasets and performance, available on Hugging Face.

LM Studio Discord

- M4 Chip Raises Expectations: Apple's new M4 chip has sparked discussions on its potential AI capabilities, possibly surpassing other major tech companies' AI chips, as highlighted in an MSN article.

- Visual Model Quirks Emerge: Users reported that vision models in LM Studio have issues, either not unloading after a crash or providing incorrect follow-up responses, with mentions of a visual models bug on lmstudio-bug-tracker.

- Granite Model Gains Spotlight: The Granite-34B-Code-Instruct by IBM Research has caught the attention of users, prompting comparisons to existing models but without consensus (Granite Model on Hugging Face).

- AI API Implementation Joy: Integration of the LM Studio API into custom UIs has been achieved, while discussions pointed out concurrency issues for embedding requests and lack of documentation for embeddings in the LM Studio SDK.

- WestLake Takes Creative Lead: For creative writing tasks, users recommended WestLake's dpo-laser version, noting its superiority over models like llama3, with T5 from Google gaining a nod for translation tasks (T5 Documentation).

Perplexity AI Discord

- Source Limit Saga at Gemini 1.5 Pro: There's confusion regarding Gemini 1.5 pro's source limit capabilities; one user reported the possibility of using over 60 sources, conflicting with another user's experience of being capped at 20. This debate yielded a slew of GIF links but no concrete resolution.

- Perplexity AI's Limit-Setting Logic: In a quest to understand Opus' limits on Perplexity, a consensus points to a 50-credit limit, with a 24-hour reset timing post usage. AI quality discussions spotlighted a subjective slide in GPT-4's utility, contrasted by positive feedback for newer models like Librechat and Claude 3 Opus.

- Trip-ups with Perplexity Pro: Queries about Perplexity Pro's features versus rivals surfaced alongside concerns regarding new trial policy changes due to abuse. Meanwhile, advice flowed for users with billing grumbles, directing them to contact support for issues like post-trial charges.

- Tech Meet Lifestyle in Sharing Channel: Users exchanged insights on a range of topics spanning from the best noise-cancelling headphones to the implications of the Ronaldo-Messi era in soccer, demonstrating Perplexity AI's breadth in covering technical comparisons and cultural discussions.

- API Channel Deciphers Puzzling Parameters: Clarifications on the models page confirm that system prompts don't affect online model retrieval, while doubts over the llama-3-sonar-large parameter count and its practical context length were aired. Difficulties emerged in tailor-fitting sonar model searches and understanding 8x7B MoE model architectures, piercing the veil on areas ripe for more detailed documentation.

HuggingFace Discord

AI's Grand Slam: New Models Take the Field: The AI field has introduced a slew of new models, including Idefics2 8B Chatty, CodeGemma 1.1 focused on coding tasks, and the gargantuan DeepSeek-V2 with 236B parameters. For code-specific needs, there's IBM Granite, and for enhanced context windows, we've got Llama 3 Gradient 4194k.

Sharpening the AI Saw: AI enthusiasts tackled diverse integration challenges, grappling with the implementation and efficacy of models like LangChain with DSpy and LayoutLMv3, and debated their practical utility against stalwarts like BERT. They delved into using Gradio Templates to prototype AI demos. Some sought knowledge on using CPU-efficient models for teaching purposes by trying out ollama and llama cpp python. Meanwhile, others looked into predictive open-source AI tools for streamlining repetitive tasks.

AI Illuminates Dark Data Corners: In the realm of AI datasets, there's a focus on improving transparency, epitomized by a YouTube tutorial on converting datasets from parquet to CSV using Polars. Furthermore, a succinct analogy for Multimodal AI was presented in a two-minute YouTube video, shedding light on the capabilities of models like Med-Gemini.

Tools of the Trade - Enhancing Developer Arsenal: In the quest for automating routine processes, a member shared an article about using Langchain’s LangGraph to augment customer support. When it comes to diffusion models, the advice is coalescing around using LoRA as the go-to method for fine-tuning tasks. Meanwhile, the visual crowd embraced the new adlike library and celebrated the enhancements to HuggingFace's object detection guides, adding mAP tracking.

Building Tomorrow's Research Ecosystem: The creative community teems with innovations like EurekAI, which promises a more organized research methodology, and Rubik's AI, seeking beta testers to refine its research assistant platform. An interesting experiment by Udio AI highlighted a fresh tune generated via AI, while BIND opens doors for utilizing protein-language models in drug discovery, offering a progressive GitHub resource at Chokyotager/BIND.

Eleuther Discord

- LSTM Upgrade Incoming: A new paper introduces a scalable LSTM structure with exponential gating and normalization to compete with Transformer models. The discussion included the optimizer AdamG, which claims parameter-free operation, sparking a debate on its effectiveness and scalability.

- AlphaFold 3 Cracks the Molecular Code: Google DeepMind's AlphaFold 3 leap is expected to drastically advance biological science by predicting protein, DNA, and RNA structures and their interactions.

- Identity Crisis in Residual Connections Boosts Performance: An anomalous improvement in model loss was observed when adaptive skip connections' weights turned negative, leading to requests for related research or experiences. The setup details are found in the provided GitHub Gist link.

- Logits in Limbo - API Models Struggle: The inability of API models to support logits due to a softmax bottleneck limits certain evaluation techniques, as highlighted by a model extraction paper. Adjustments to lm-evaluation-harness's

output_typewere discussed as potential remedies.

- xLSTM Preemptive Code Ethics: Algomancer sparked a dialogue on releasing their self-made xLSTM code prior to an official rollout, emphasizing the need for ethical consideration in preemptive publications and distinguishing between official and unofficial implementations.

Modular (Mojo 🔥) Discord

- Mojo Gains Classes and Inheritance: The Mojo language design sparks debates with the introduction of classes and inheritance features. The community is discussing the implications of having both static, non-inheritable structs with value semantics, alongside dynamically inheritable classes.

- Python's Role in Mojo Developments: Mojo is creating a stir as it moves to allow Python code integration, offering a dual benefit of Python's ease and Mojo's performance capabilities. There's an active conversation about how Mojo Intermediate Representation (IR) could enhance compiler optimizations across various programming languages.

- Optimizer's Quest: Developers are paying close attention to performance optimization across various operations in Mojo. Pain points include slow string concatenation and minbpe.mojo's decoding speed, with discussion exploring potential solutions such as a StringBuilder class that improved string concatenation efficiency by 3x.

- Data Struggles and Dialect Choices: The necessity and design of a new hash function for Dict in Mojo rise to prominence, with a proposal looking to enable custom hash functions for optimizing performance. Additionally, the idea of upstream contributions to MLIR is being tossed around, pondering the influence of Modular's compiler advancements on other languages.

- Debugging Drama and Release Revelry: A reported bug involving

TensorandDTypePointerusage in Mojo's standard library triggers detailed discussions on memory management. Meanwhile, a night-time release of the Mojo compiler with 31 external contributions is celebrated, pointing users to tracking the progress for future updates.

CUDA MODE Discord

Dynamic Batching Blues: AI engineers discussed the tribulations of using torch.compile for dynamic batch shapes, which causes excessive recompilations and impacts performance. While padding to static shapes can mitigate issues, full support for dynamic shapes, especially with nested tensors, awaits integration.

Triton's fp8 and Community Repo: Triton now includes support for fp8, as per updates on the official GitHub referring to a fused attention example. There's a community push to centralize Triton resources; a new community-driven Triton-index repository aims to catalog released kernels, and there's talk of curating a dataset specifically for Triton kernels, reflecting a drive for collaborative development.

CUDA Quest for GPU Proficiency: A multi-threaded conversation shone light on the optimization journey in CUDA, revealing a merged pull request to fuse residual and layernorm forward in CUDA, analyses of kernel performance metrics, and the quest to manage communication overheads in distributed training for optimal utilization of GPU architecture.

Optimization vs. Orientation for NHWC Tensors: The performance conundrum for tensor normalization orientation surfaced, leaving engineers pondering whether permuting tensors from NHWC to NCHW is more efficient than using NHWC-specific algorithms on GPUs, despite the risk of access pattern inefficiencies.

Apple M4 Steals the Spotlight: In hardware news, Apple heralded its M4 chip, designed to uplift the iPad Pro. Meanwhile, an AI engineer highlighted the capability of "panther lake" to deliver 175 TOPS, underscoring the rapid advancements and competition in chip performance.

OpenRouter (Alex Atallah) Discord

- Companionship Tops OpenRouter Model Chart: OpenRouter users show a preference for models providing emotional companionship, sparking interest in visualizing this trend through a graph.

- Navigating OpenRouter’s Latency Landscape: Efforts are underway to lower latency for OpenRouter users in regions such as Southeast Asia, Australia, and South Africa, with a focus on edge workers and global distribution of upstream providers.

- Copycat Alert in AI Town: An alleged leak of the ChatGPT system prompt stirred up a debate on model security and the practicability of using such prompts with the API, as discussed in a Reddit post.

- The Moderator’s Dilemma: The community exchanged insights on the efficiency and constraints of various AI moderation models, notably mentioning Llama Guard 2 and L3 Guard.

- HIPAA Harmony - Not Yet for OpenRouter: OpenRouter hasn't undergone an HIPAA compliance audit, nor confirmed a hosting provider for Deepseek v2, despite user inquiries.

LAION Discord

- Diving into Datasets: A new researcher sought non-image datasets for a study, receiving suggestions like MNIST-1D and Stanford's Large Movie Review Dataset, the latter being too comprehensive for their needs.

- Advancing Text-to-Video Generation: There was a vivid discussion on the superiority of diffusion models for text-to-video generation, emphasizing the value of unsupervised pre-training on large video datasets and discussing the ability of diffusion models to understand spatial relationships.

- Pixart Sigma's Potential Unleashed: Community members compared the efficiency of Pixart Sigma, noting that with strategic fine-tuning, this model could produce results that challenge the quality of DALL-E 3's output, even while navigating memory constraints.

- The Future of Automation in the Workspace: A news piece about AdVon Commerce undercutting jobs with AI-generated content prompted discussions about the implications of AI advancements on employment, specifically in content creation roles.

- Request for Open-Source AI Insurance Tools: A quest for open-source AI resources for auto insurance tasks led to requests for tools to process data, analyze risk, and predict outcomes, while another member sought formal literature on Robotic Process Automation (RPA) and desktop automation.

OpenInterpreter Discord

- Ubuntu Enthusiasts Want GPT-4 Goodness: The community showed interest in specific Custom/System Instructions tailored to Ubuntu for enhancing GPT-4's compatibility and efficiency on the popular operating system. Despite no specific instructions being linked, the interest reflects a demand for more customized AI interactions in Linux environments.

- OpenPipe.AI Gains Traction; OpenInterpreter Plays Tricks: A recommendation was made for OpenPipe.AI, a tool for efficient large language model data handling, while an unexpected instance of being rickrolled via OpenInterpreter sparked laughter regarding the unpredictability of AI-generated content. Another member suggested exploring py-gpt for potential OpenInterpreter integration.

- Diving into Hardware DIY and Shipping Tales: Discussions on the 01 device covered battery life queries for 500mAh LiPo, challenges in international shipping, with some opting for DIY builds, as well as how to verify if pre-orders have been shipped. The 01's ability to connect to various large language models (LLMs) through cloud APIs like Google and AWS was noted, with litellm's documentation providing multi-provider setup guidance.

- Persistence Pays Off for OpenInterpreter Users: Members appreciated the memory file feature of OpenInterpreter, which retains skills after server shutdowns, ensuring that LLMs don't need retraining, a key aspect for more effective skill retention in AI interfaces.

- First Impressions on GPT-4 Performance: A user named Mike.bird shared successful results using GPT-4 even with minimal custom instructions, while exposa found mixtral-8x7b-instruct-v0.1.Q5_0.gguf to be optimal for their needs, both indicating real-world testing and adoption of various models among community members.

LangChain AI Discord

- AI-Powered Slide Master Wanted: Members discussed the potential for creating a PowerPoint presentation bot using the OpenAI Assistant API, with queries about the suitability of RAG or LLM models for learning from past presentations. Compatibility between DSPY, Langchain/Langgraph, and document indexing with Azure AI Search was also debated.

- Sorting Out Streaming Syntax: In the

#[langserve]channel, issues were addressed regarding the use ofstreamEventswithRemoteRunnablein JavaScript, with members suggesting checking library versions and configurations, and reporting bugs to the LangChain GitHub repository.

- Showcase of Langchain Projects and Research: The

#[share-your-work]channel highlighted a survey on LLM application performance with donations for participation, introduced Gianna, the virtual assistant framework utilizing CrewAI and Langchain, shared insights on enhancing customer support with LangGraph on Medium, revealed Athena's autonomous AI data platform, and requested participation in a research on AI companies' global expansion readiness, especially in low-resource languages.

- Calling All Beta Testers: An invitation was extended for beta testing a new research assistant and search engine, offering access to GPT-4 Turbo and Mistral Large, available at Rubik's AI Pro.

- Parsing Troubles Amid TypeScript Talks: Technical discourse included troubleshooting JsonOutputFunctionsParser for TypeScript implementation and improving OpenAI request batching and search optimization for self-hosted Langchain applications.

LlamaIndex Discord

AI Education Leveling Up: LlamaIndex and deeplearning.ai announce a new course on creating agentic RAG systems, endorsed by AI expert Andrew Y. Ng. Engineers can learn about advanced concepts like routing, tool use, and sophisticated multi-step reasoning. Enroll here.

Scheduled Learning Opportunity: An upcoming LlamaIndex webinar spotlights OpenDevin, an open-source project by Cognition Labs designed to function as an autonomous AI engineer. The webinar is set for Thursday at 9am PT and is creating buzz for its potential to streamline coding and engineering tasks. Reserve your seat now.

Latest Tech from LlamaIndex: An update to LlamaIndex introduces the StructuredPlanningAgent, enhancing agents' task management by breaking them into smaller, more manageable sub-tasks. This development supports a range of agent workers, potentially boosting efficiency in tools like ReAct and Function Calling. Discover the influence of this tech.

Peering into Agent Observations: Engineers explore the feasibility of extracting detailed observation data from ReAct Agents and the utilization of local PDF parsing through PyMuPDF. Methods to improve the specificity and relevance of LLM (Large Language Model) responses, and the optimization of retrieval systems using reranking models, prompted a thorough technical exchange.

Towards Cooperative AI: A vibrant idea exchange occurred around multi-agent systems, envisioning a future with seamless agent collaboration and complex task execution. The concept nods to solutions like crewai and autogen, with additional focus on the capability of agents to create snapshots and rewind actions for enhanced operation.

OpenAccess AI Collective (axolotl) Discord

Layer Activation Unexpectedness: Discussions identified an anomaly where one layer in a model exhibited higher values than others, raising concerns and curiosity about the implications for neural network behavior and optimizer strategies.

LLM Training Data Discrepancies and Human Data Influence: It was noted that ChatQA is trained on a distinct mixture of data, contrasting with the GPT-4/Claude dataset used for most models, and the use of LIMA RP human data was highlighted for its potential to increase model training specificity.

Releasing RefuelLLM-2 to the Wild: RefuelLLM-2 has been open-sourced, boasting prowess in handling "unsexy data tasks,” with model weights available on Hugging Face and details shared via Twitter.

Practical Quantization Questions and GPU Quagmires: Queries were raised about creating a language-specific LLM and training with quantization on standard laptops, as well difficulties encountered with Cuda out of memory errors when using a config file for the phi3 mini 4K/128K FFT on 8 A100 GPUs, prompting a search for a working config example.

wandb Woes and Gradient Gamble: Members sought advice on Weights & Biases (wandb) configuration options and investigated strategies to handle the exploding gradient norm problem as well as considering trade-offs between 4-bit and 8-bit loading for efficiency versus model performance.

Interconnects (Nathan Lambert) Discord

LSTMs Strike Back: A recent paper discussed LSTMs scaled to billions of parameters, with potential LSTM enhancements like exponential gating and matrix memory to challenge Transformer dominance. There were concerns about flawed comparisons and a lack of hyperparameter tuning in the research.

AI's Behavioral Blueprint Unveiled: OpenAI's Model Spec draft was announced, designed to navigate model behavior in their API and implement reinforcement learning from human feedback (RLHF), as documented in Model Spec (2024/05/08).

Chatbot Reputation Under Microscope: A conversation emerged about how chatgpt2-chatbot could negatively impact LMsys' credibility, suggesting the system is overtaxed and unable to refuse requests. Licensing issues were also raised concerning chatbotarena's data releases without permissions from LLM providers.

Gemini 1.5 Pro Hits a High Note: Gemini 1.5 Pro was praised for its ability to transcribe podcast chapters accurately, incorporating timestamps despite some errors.

Awaiting the Snail's Wisdom: Community members showed anticipation and support for a seemingly important entity or event referred to as "snail," with posts suggesting a mix of awaiting news and summoning involvement from certain ranks.

Latent Space Discord

- Alternative to Glean for Small Teams Sought: A search for a unified search tool like Glean suitable for small-scale organizations led to a discussion about Danswer, an open-source option, with community members referring to a Hacker News discussion for further insights.

- Stanford Enlightens with Novel Course: The engineering community spotlighted Stanford University's new course on Deep Generative Models; a recommendable resource showing the institution's continued leadership in AI education, introduced by Professor Stefano Ermon: Watch the lecture here.

- Advanced GPU Rental Resource: In response to an inquiry on obtaining NVIDIA A100 or H100 GPUs for a short-term project, guidance was shared via a Twitter recommendation offering a potential solution for this hardware need.

- Crafting PRs with AI's Help: Reflecting on the underappreciated utility of AI in coding, a member shared their script for automating GitHub PR creation, signifying AI's role in streamlining developer workflows:

gh pr create --title "$(glaze yaml --from-markdown /tmp/pr.md --select title)" --body "$(glaze yaml --from-markdown /tmp/pr.md --select body)"

- Data Orchestration Dialogue: AI pipeline orchestration with diverse data types including text and embeddings sparked a request for recommendations, indicating a robust interest in efficient AI system design for handling complex data flows.

tinygrad (George Hotz) Discord

Tinygrad Tech Talk: Reshaping and Education: Discussions about Tinygrad's documentation on tensor reshaping sparked criticism for being too abstract, leading to a collaborative effort to demystify the concept through a community-created explanatory document. Advanced reshape optimizations, potentially using compile-time index calculations, were considered to enhance performance.

Tinygrad's BITCAST Clarified: There's active work on understanding and improving the BITCAST operation in tinygrad, as seen in a GitHub pull request, aiming to simplify certain operations and remove the need for arguments like "bitcast=false".

ML Concepts Demystified: A user conveyed the difficulty of deciphering machine learning terminology, specifically when simple concepts are buried under math jargon. This aligned with calls within the community for clearer and more approachable learning materials.

Tinygrad's No-Nonsense Policy: @georgehotz reinforced community guidelines, reminding members that the forum is not meant for beginner-level queries and that valuable time should not be taken for granted.

Engineering Discussions Advance in Sorting UOp Queries: The intricacies of Tinygrad’s operations, such as whether symbolic.DivNode should accept node operands, were debated, potentially signaling a future update to improve recursive handling within operations like symbolic.arange.

Cohere Discord

- FP16 Model Hosting Inquiry Left Hanging: A member's query about local hosting for FP16 command-r-plus models with a 40k content window failed to receive VRAM requirements information.

- RWKV Model Scalability Scrutinized: Discussions questioned the competitiveness of RWKV models at the 1-15b parameter scale compared to traditional transformers, with past RNN performance issues cited.

- Coral Chatbot Seeks Reviewers: A new Coral Chatbot promising to bundle text generation, summarization, and ReRank seeks user feedback and collaboration opportunities. Check it on Streamlit.

- Elusive Cohere Chat Download Method: A user's question about exporting files from Cohere Chat in formats like docx or pdf went without a concrete response on how to achieve the downloads.

- Wordware Charts a Fresh Course: Wordware invites would-be founding team members to build and showcase AI agents using its unique web-based IDE; prompting is at the core of its approach, akin to a programming language. Find out more on Join Wordware.

DiscoResearch Discord

- AIDEV Gathering Gains Attention: jp1 is looking forward to meeting peers at the AIDEV event, and mjm31 too is excited to attend, indicating an open and welcoming community vibe. enno_jaai raised practical concerns about food availability, hinting at the need for logistical planning for such events.

- German Dataset Development Discourse: There's an active conversation about creating a German dataset tailored for inclusive language, with members discussing the significance and methods such as adopting system prompts to steer the assistant's language.

- German Content Curation for Machine Learning: As part of developing a German-exclusive pretraining dataset, there's a call for domain recommendations that are rich in quality content, leveraged from Common Crawl data.

- Configurability and Inclusivity in AI: The idea of a bilingual AI having modes for inclusive and non-inclusive language is proposed, suggesting flexibility in language AI design. The conversation also referenced Vicgalle/ConfigurableBeagle-11B, a model indicative of how inclusivity might be incorporated into AI.

- Resources for Inclusive Language in AI Shared: Participants discussed and shared valuable resources like David's Garden and a GitLab project for gender-inclusive German, reflecting a strong interest in enhancing the understanding and application of gender-inclusive language in AI models.

Mozilla AI Discord

- Phi-3 Mini Anomalies Detected: Engineers discussed erratic behaviors in Phi-3 Mini when utilized with llamafile, despite working well with Ollama and Open WebUI; troubleshooting is ongoing.

- Backend Brilliance with Llamafile: Llamafile can run as a backend service, responding to OpenAI-style requests via a local endpoint at

127.0.0.1:8080; detailed API usage can be found on the Mozilla-Ocho llamafile GitHub. - VS Code Gets Ollama-Tastic: A notable VS Code update introduces a feature enabling dynamic model management for ollama users, with speculations about its origin being a plugin.

Datasette - LLM (@SimonW) Discord

- A Helping Hand for Package Upgrades: An innovative AI agent that upgrades npm packages got the community chuckling and nodding in approval. The conversation included a requisite nod to the ever-present cookie policy notification.

- YAML's New Chapter in Parameterized Testing: Engineers are examining two proposed YAML configurations for parameterized testing on llm-evals-plugin, documented in a GitHub issue comment. The dialogue orbits around the design choices and practicalities of such a feature.

- Ode to the

llmCLI: A heartfelt "thank you" was conveyed for thellmCLI tool, credited with streamlining management of personal projects and academic theses. The user's tribute underscored its value to their workflow.

Alignment Lab AI Discord

- AlphaFold3 Goes Open Source: The PyTorch implementation of AlphaFold3 is now accessible, allowing AI engineers to apply it to biomolecular interaction structure predictions. Contributions are being solicited through the Agora community to enhance the model's capabilities; interested engineers can join via their Discord invite and review the implementation on GitHub.

- Casual Interactions Maintain Morale: In the general chat, members participated in a casual exchange, where a user and a chatbot named "Orca" exchanged greetings. Such interactions maintain a sense of community and engagement within technical teams.

AI Stack Devs (Yoko Li) Discord

- No-Code Game Testing Revolution: Regression Games introduces Quickscope, a tool suite designed for automated Unity testing that requires no programming knowledge for setup, featuring tools for gameplay recording and functionality testing.

- Deep Dive into Game State: Quickscope boasts a feature that automatically gathers details on the game state, specifically scraping public properties of MonoBehaviours, streamlining the testing process without additional code.

- Test Better, Quicker: The Quickscope platform is available for developers and QA engineers, promising seamless integration into existing development pipelines and emphasizing its zero-code-necessary functionality.

- Team-Up Channel Lacks Engagement: In the #[team-up] channel, an isolated message by jakekies expresses a desire to join, suggesting low participation or lack of context in ongoing discussions.

LLM Perf Enthusiasts AI Discord

GPT-4-turbo Hunt in Azure: An engineer is on the lookout for GPT-4-turbo 0429 availability in Azure regions, specifically mentioning operational issues with Sweden's Azure services.

The Skunkworks AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

Stability.ai (Stable Diffusion) ▷ #general-chat (737 messages🔥🔥🔥):

- Scaling Down for Efficiency: Some members suggested for users with limited hardware, like a laptop with integrated graphics, to consider using models like Hyper-SD, which are trained on fewer steps for image generation, allowing potentially smoother CPU use.

- Seeking the Best Hardware for AI Work: Users discussed the benefits of dedicated GPUs with more VRAM, such as the NVIDIA 4090 or potentially the AMD Radeon RX6700 XT, over integrated GPUs for better performing AI tasks like Stable Diffusion.

- Deciding Between Local and Cloud: The debate on whether to invest in expensive local hardware for AI versus using cloud GPU services which charge hourly continues, with points about cloud services offering powerful server GPUs at fractional costs compared to purchasing cutting-edge consumer GPUs.

- Training LoRA on a Smaller Scale: Users confirmed it's possible to achieve decent results with LoRA models trained on as few as 30 images, suitable for specific modifications rather than broad, complex concepts.

- Tips and Tricks for Image and Video Editing: Participants shared experiences and recommendations for removing backgrounds from video content, mentioning tools like ffmpeg for frame extraction and rmbg or rembg extensions for background removal.

- Stylus: Automatic Adapter Selection for Diffusion Models: no description found

- Stable Cascade Examples: Examples of ComfyUI workflows

- Stable Diffusion Benchmarks: 45 Nvidia, AMD, and Intel GPUs Compared: Which graphics card offers the fastest AI performance?

- Stable Diffusion 3 is available now!: Highly anticipated SD3 is finally out now

- GitHub - Extraltodeus/sigmas_tools_and_the_golden_scheduler: A few nodes to mix sigmas and a custom scheduler that uses phi: A few nodes to mix sigmas and a custom scheduler that uses phi - Extraltodeus/sigmas_tools_and_the_golden_scheduler

- GitHub - Clybius/ComfyUI-Extra-Samplers: A repository of extra samplers, usable within ComfyUI for most nodes.: A repository of extra samplers, usable within ComfyUI for most nodes. - Clybius/ComfyUI-Extra-Samplers

- GitHub - PixArt-alpha/PixArt-sigma: PixArt-Σ: Weak-to-Strong Training of Diffusion Transformer for 4K Text-to-Image Generation: PixArt-Σ: Weak-to-Strong Training of Diffusion Transformer for 4K Text-to-Image Generation - PixArt-alpha/PixArt-sigma

- GitHub - 11cafe/comfyui-workspace-manager: A ComfyUI workflows and models management extension to organize and manage all your workflows, models in one place. Seamlessly switch between workflows, as well as import, export workflows, reuse subworkflows, install models, browse your models in a single workspace: A ComfyUI workflows and models management extension to organize and manage all your workflows, models in one place. Seamlessly switch between workflows, as well as import, export workflows, reuse s...

- deadman44/SDXL_Photoreal_Merged_Models · Hugging Face: no description found

- Hyper-SD - Better than SD Turbo & LCM?: The new Hyper-SD models are FREE and there are THREE ComfyUI workflows to play with! Use the amazing 1-step unet, or speed up existing models by using the Lo...

- Can I run it on cpu mode only? · Issue #2334 · AUTOMATIC1111/stable-diffusion-webui: If so could you tell me how?

- The new iPads are WEIRDER than ever: Check out Baseus' 60w retractable USB-C cables Black: https://amzn.to/3JlVBnh, White: https://amzn.to/3w3HqQw, Purple: https://amzn.to/3UmWSkk, Blue: https:/...

- How to use Stable Diffusion - Stable Diffusion Art: Stable Diffusion AI is a latent diffusion model for generating AI images. The images can be photorealistic, like those captured by a camera, or in an artistic

- Sprite Art from Jump superstars and Jump Ultimate stars | PixelArt AI Model - v2.0 | Stable Diffusion LoRA | Civitai: Sprite Art from Jump superstars and Jump Ultimate stars - PixelArt AI Model If You Like This Model, Give It a ❤️ This LoRA model is trained on sprit...

- Pony Diffusion V6 XL - V6 (start with this one) | Stable Diffusion Checkpoint | Civitai: Pony Diffusion V6 is a versatile SDXL finetune capable of producing stunning SFW and NSFW visuals of various anthro, feral, or humanoids species an...

Nous Research AI ▷ #ctx-length-research (13 messages🔥):

- Query on RoPE's Future Generalization: A member asked if RoPE should generalize 'into the future' without any finetuning, suggesting that it should at least up to a certain token count, akin to inverse rotation.

- Affirmation on RoPE's Token Generalization: In response, another member confirmed that RoPE could generalize to some extent without further finetuning.

- Stellaathena's Continual Training Challenge: Stellaathena expressed a challenge in performing continual training of a long context model like LLaMA 3 due to compute and data constraints and asked for advice on whether to maintain or adjust the RoPE theta value during this process.

- Contemplating Finetune Sequencing: The same member inquired about the best way to order data for finetuning when dealing with multiple objectives—like chat formatting, long context, and new knowledge—and whether to mix data or perform consecutive finetuning stages.

- Mix and Shuffle Finetuning Strategy: Discussing finetuning strategies, teknium shared that their approach usually involves shuffling multiple datasets for different finetuning objectives rather than consecutive finetuning stages, applicable for contexts between 100-4000 tokens.

Nous Research AI ▷ #off-topic (4 messages):

- Reading Between Pixels: A member expressed the need to increase resolution for better text recognition from images, suggesting improvements in AI's ability to read small text.

- Open Source Weather Modeling: The Skyrim project has been shared, which is an open-source infrastructure for large weather models, inviting interested contributors. More details can be found on their GitHub page.

- Rebel's Feast: A member declares an intention for an indulgent evening with video games and a smorgasbord of snacks including potato chips, burger patties, cucumbers, chicken nuggets, blins, chocolate, and more.

- Sympathetic Emoji Response: Another member reacts, presumably to the gaming and snack plans, with a blushing emoji.

Link mentioned: GitHub - secondlaw-ai/skyrim: 🌎 🤝 AI weather models united: 🌎 🤝 AI weather models united. Contribute to secondlaw-ai/skyrim development by creating an account on GitHub.

Nous Research AI ▷ #interesting-links (13 messages🔥):

- Revolutionizing Healthcare with Deterministic Quoting: Invetech is working on Deterministic Quoting, a technique ensuring that quotations from source materials by Large Language Models (LLMs) are verbatim. In this process, quotes with a blue background are guaranteed to be from the source, minimizing the risk of hallucinated information which is crucial in fields with serious consequences like medicine. Deterministic Quoting example

- Scaling LSTMs for Language Modeling: Recent research questions the potential of scaled-up LSTMs in the wake of Transformer-based models. New modifications like exponential gating and parallelizable mLSTM propose to overcome LSTM limitations, extending their viability in the modern context of Large Language Models. LSTM research paper

- Open Sourcing Llama-3-Refueled Model: Refuel AI releases RefuelLLM-2-small (Llama-3-Refueled), a language model trained on diverse datasets for tasks like classification and entity resolution. The model is designed to excel at "unsexy data tasks" and is available for community development and application. Model weights on HuggingFace | Refuel AI details

- Efficient Llama 2 Model Packs a Punch: A new paper reveals the surprising efficiency of a 4-bit quantized version of Llama 2 70B that retains performance post-layer reduction and fine-tuning, suggesting deeper layers may have minimal impact. This could indicate that smaller, well-tuned models might perform as well as their larger counterparts. The paper tweet

- OpenAI Introduces Model Spec Draft: OpenAI publishes the first draft of their Model Spec, setting guidelines for model behavior in their API and ChatGPT, to be used alongside RLHF. The document aims to outline core objectives and manage instructions conflicts, marking a step towards responsible AI development. Model Spec document

- xLSTM: Extended Long Short-Term Memory: In the 1990s, the constant error carousel and gating were introduced as the central ideas of the Long Short-Term Memory (LSTM). Since then, LSTMs have stood the test of time and contributed to numerou...

- Carson Poole's Personal Site: no description found

- Tweet from kwindla (@kwindla): Llama 2 70B in 20GB! 4-bit quantized, 40% of layers removed, fine-tuning to "heal" after layer removal. Almost no difference on MMLU compared to base Llama 2 70B. This paper, "The Unreas...

- Hallucination-Free RAG: Making LLMs Safe for Healthcare: LLMs have the potential to revolutionise our field of healthcare, but the fear and reality of hallucinations prevent adoption in most applications.

- Model Spec (2024/05/08): no description found

- refuelai/Llama-3-Refueled · Hugging Face: no description found

Nous Research AI ▷ #announcements (1 messages):

- WorldSim Makes a Comeback: WorldSim has been updated with bug fixes, and the credit and payments systems are operational once again. The new features include WorldClient, Root, Mind Meld, MUD, tableTop, as well as new capabilities for WorldSim and CLI Simulator, and the ability to choose a model between opus, sonnet, or haiku to manage costs.

- Explore Internet 2 with WorldClient: A web browser simulator, WorldClient, allows users to explore a simulated Internet 2, tailor-made for each individual.

- Root - The CLI Environment Simulator: With Root, users can simulate any Linux command or program they imagine in a CLI environment.

- Mind Meld Feature for Pondering Entities: Mind Meld lets users delve into the minds of any entity they can conceive.

- Gaming Simulators for Text and Tabletop Adventures: The new MUD offers a text-based adventure gaming experience, while tableTop provides a tabletop RPG simulation.

- Discover and Discuss New WorldSim: Interested users can check out the new updates at worldsim.nousresearch.com and join discussions in the dedicated Discord channel.

Link mentioned: worldsim: no description found

Nous Research AI ▷ #general (345 messages🔥🔥):

- NeuralHermes 2.5 - DPO Benchmarked: A new version of NeuralHermes, NeuralHermes 2.5, has been fine-tuned using Direct Preference Optimization surpassing the original on most benchmarks. It's based on principles from Intel's neural-chat-7b-v3-1 authors with available training code on Colab and GitHub.

- Nous Research Branding Details: For Nous Research-based projects, logos and typography can be sourced from NOUS BRAND BOOKLET, and the 'Nous girl' was mentioned as an ideal model logo to use.

- Exploring Azure's GPU Capabilities: A user provisioned 2 NVIDIA H100 GPUs on Azure to conduct experiments, discussing the capabilities of the hardware and related models such as Llama.

- Llama-3 8B Instruct 1048K Context Exploration: The Llama-3 8B Instruct model on Hugging Face has an extended context length and invites users to join a waitlist for custom agents with long contexts.

- API and Model Format Discussions: Questions were posed about available APIs for Hermes 2 Pro 8B, the go-to template for Llama 3 (still ChatML), and the utilisation of

torch.compilefor variable length sequences with advice to use sequence packing.

- JSON-Schema to GBNF: no description found

- mlabonne/NeuralHermes-2.5-Mistral-7B · Hugging Face: no description found

- Mkbhd Marques GIF - Mkbhd Marques Brownlee - Discover & Share GIFs: Click to view the GIF

- OpenAI exec says today's ChatGPT will be 'laughably bad' in 12 months: OpenAI's COO said on a Milken Institute panel that AI will be able to do "complex work" and be a "great teammate" in a year.

- Tweet from xyzeva (@xyz3va): so, here's everything we did to achieve this in action:

- axolotl/docs/multipack.qmd at main · OpenAccess-AI-Collective/axolotl: Go ahead and axolotl questions. Contribute to OpenAccess-AI-Collective/axolotl development by creating an account on GitHub.

- Jogoat GIF - Jogoat - Discover & Share GIFs: Click to view the GIF

- Cat Hug GIF - Cat Hug Kiss - Discover & Share GIFs: Click to view the GIF

- gradientai/Llama-3-8B-Instruct-Gradient-1048k · Hugging Face: no description found

- Moti Hearts GIF - Moti Hearts - Discover & Share GIFs: Click to view the GIF

Nous Research AI ▷ #ask-about-llms (37 messages🔥):

- Llamafiles Integration On The Horizon?: A user inquired about the creation of llamafiles for Nous models, and a link was provided to Mozilla-Ocho's llamafile on GitHub, emphasizing the ability to use external weights in llamafiles. The user expressed an intent to explore this solution.

- Speed Up With Pretokenizing and Flash Attention: A member noted the efficiency gains in training by using pretokenizing and implemented scaled dot product (flash attention). Concerns were raised regarding Torch's selective use of flash attention 2.

- Efficiency Strategies for Variable-Length Sequences: In discussions on handling variable-length machine translation sentences, one user endorsed the strategy of bucketing by length and using a custom dataloader to minimize padding and maximize GPU utilization. The community shared the idea of padding sentences to common token lengths (like 80, 150, 200) to potentially enhance static torch compilation efficiency.

- Trade-Offs in Sequence Length Management: A conversation unfolded concerning managing sequence lengths in machine translation models. It was highlighted that padding to fixed sizes could lead to efficiency trade-offs when leveraging torch.compile.

- Exploring Autoregressive Transformer Models: One member shared their preference for autoregressive transformer models due to their generalizability. Further discussion clarified that autoregressive models generate output by considering previous outputs, making them suitable for encoder-decoder and decoder-only architectures.

- no title found: no description found

- xFormers optimized operators | xFormers 0.0.27 documentation: API docs for xFormers. xFormers is a PyTorch extension library for composable and optimized Transformer blocks.

- GitHub - Mozilla-Ocho/llamafile: Distribute and run LLMs with a single file.: Distribute and run LLMs with a single file. Contribute to Mozilla-Ocho/llamafile development by creating an account on GitHub.

Nous Research AI ▷ #project-obsidian (1 messages):

- NanoLLaVA Efforts Abandoned: A participant mentioned they abandoned using nanoLLaVA due to difficulties getting it to work on a Raspberry Pi; they plan to use moondream2 combined with an LLM instead. No specific issues or error messages were mentioned regarding the use of nanoLLaVA.

Nous Research AI ▷ #bittensor-finetune-subnet (11 messages🔥):

- Miner Repo Commit Stuck: A new miner mentioned that their committed repo to the bittensor-finetune-subnet has not been downloaded by validators for hours. The issue is linked to a pending pull request (PR) that needs to be merged to resolve the network problem.

- Awaiting Critical PR Merge: A member confirmed that the network is broken and will remain non-functional for new commits until a PR they're working on is merged. They clarified that they do not have control over the timeline as they are not the one reviewing or merging these PRs.

- Uncertainty in Resolution Timeframe: In response to a query about how soon the issue will be resolved, a member ambiguously stated that the PR would be merged "soon", without providing a specific timeline.

- Validation Halt Due to Network Issue: Clarification was given that new commits would not be validated until the aforementioned PR is merged, indicating that the network's current state impedes this process.

- Seeking Subnet GraphQL Service: An inquiry was made about where to find the GraphQL service for bittensor subnets, suggesting the user is seeking additional tools or interfaces related to bittensor.

Nous Research AI ▷ #rag-dataset (4 messages):

- Morning Musings on Chatbots: A member discussed the functionality of goodgpt2, exploring the use of an agent schema and noting the chatbot seems to operate with a structured history from ChatArena with minimal guidance.

- Conversations with a History: The same user mentioned the idea of ID tracking on tags which could reveal an entire conversation history with the chatbot, highlighting the seamless user experience.

- The Identity of ChatGPT: There is speculation that the ChatGPT being interacted with might be an agent version rather than a chatbot, possibly referring to GPT-2 as the underlying model.

- Into the Persona Schema: Correcting an earlier statement, the user clarified that they had asked for a persona schema, commenting that this was informed by two other queries in the chatbot's structured history.

Nous Research AI ▷ #world-sim (93 messages🔥🔥):

- World-Sim Role and Information Query Answered: A user inquired about what world-sim is and where to find more information. They were directed to check a specific pinned post for details: see <#1236442921050308649>.

- Claude as Self-Improvement Vessel: One user shared their perspective that (jailbroken) Claude serves as a vessel for self-improvement, using world_sim for what they described as interactive debug for the soul.

- Burning Man for Robots?: There's excitement about the idea of a "BURNINGROBOT" festival, mirroring BURNINGMAN, to showcase the work and experiences users have with Nous Research's offerings.

- Recovering BETA Conversations: In a series of interactions, users learned that BETA chats are not stored and cannot be recovered, highlighted when a user inquired about reloading worldsim conversations.

- World-Sim Feature Discussions: Users discussed various aspects of world-sim, including changing models and understanding the credit system. One user was advised that currently, credits need to be purchased after the initial free amount is expended.

Link mentioned: New Conversation - Eigengrau Rain: no description found

OpenAI ▷ #annnouncements (2 messages):

- OpenAI's Take on Data Management: OpenAI discusses their principles around content and data in the AI landscape. They've outlined their approach in a detailed blog post.

- Introducing OpenAI's Model Spec: OpenAI aims to foster dialogue on ideal AI model behaviors by sharing their Model Spec. This document can be found in their latest announcement.

OpenAI ▷ #ai-discussions (305 messages🔥🔥):

- Anticipating Future Innovations: A discussion highlighted expectations around GPT-5, with interest expressed in the potential for upcoming features and performance improvements, affirming continuous investment in AI research and development.

- Goodbye Gerbil Robot, Hello Rosie?: Amid light-hearted banter, a whimsical idea was proposed picturing an OpenAI robot with an "absurdly small single wheel"; a member whimsically visualized "an extremely small gerbil robot running on a very awesome looking wheel."

- Navigating Local Model Options: Conversations revolved around the suitability of systems like LM Studio, Llama8b, and Ollama with Llama3 8B for 8GB VRAM machines; participants discussed their experiences with these models, emphasizing ease of use and resource requirements.

- Seeking Guidance, Gaining Insights: Users sought information and shared advice on resources such as the GPT prompt library, now renamed to #1019652163640762428, along with insight into staying updated on AI trends through platforms like OpenAI Community and Arstechnica.

- OpenAI Chat GPT Models Debate: A lengthy debate unfolded regarding the performance and historical context of OpenAI's GPT models, with comparisons between GPT-4, alternative AI models from the "arena," and perspectives on OpenAI's approach to innovation and risk management.

- Say What? Chat With RTX Brings Custom Chatbot to NVIDIA RTX AI PCs: New tech demo gives anyone with an NVIDIA RTX GPU the power of a personalized GPT chatbot, running locally on their Windows PC.

- Meta AI: Use Meta AI assistant to get things done, create AI-generated images for free, and get answers to any of your questions. Meta AI is built on Meta's latest Llama large language model and uses Emu,...

- no title found: no description found

- Ars Technica: Serving the Technologist for more than a decade. IT news, reviews, and analysis.

- OpenAI Developer Forum: Ask questions and get help building with the OpenAI platform

- Say What? Chat With RTX Brings Custom Chatbot to NVIDIA RTX AI PCs: New tech demo gives anyone with an NVIDIA RTX GPU the power of a personalized GPT chatbot, running locally on their Windows PC.

- no title found: no description found

OpenAI ▷ #gpt-4-discussions (7 messages):

- Memory Feature Confusion: A member expressed frustration, stating that GPT-4 is not performing well due to its memory function causing errors. Another member pointed out that it is possible to turn off the memory feature.

- Admin Rank Declined: In response to a compliment on assisting with the memory issue, a user clarified that they declined to take on a mod/admin rank despite recognition for their helpfulness.

- Language Support Query: A user inquired whether GPT-4 natively understands GraphQL like it does Markdown, indicating interest in the model's capability with different languages.

- Editing Quirk with Synonyms: An issue was raised about ChatGPT consistently replacing the word "friend" with "buddy" in a script, despite attempts to provide clear context to the contrary. The user is seeking a solution to prevent this word alteration.

OpenAI ▷ #prompt-engineering (30 messages🔥):

- Prompt Too Complex: A member was advised to split complex tasks into multiple API calls, suggesting that a single API call should not be asked to perform tasks like outputting in CSV format. Further guidance included an example where multiple steps are used for vision tasks, analysis, and formatting. That's too much for one prompt.(no specific link provided*)

- DALL-E's Negative Prompt Dilemma: Users discussed that DALL-E 3 struggles with negative prompts; it tends to include elements it's asked to omit, suggesting a focus on positive details will yield better results. Sharing experiences can help understand its limitations and improve usage(no specific link provided).

- In Search of Photo-Real Humans: A user asked for advice on getting photo-realistic results from AI when generating images of humans, highlighting the artistic look of the outcomes. The conversation pointed to a separate discussion channel(no specific link provided) for further assistance.

- Output Templates and Logit Bias: Inconsistencies in AI-generated outputs were tackled by suggesting the use of clear output templates and considering logit bias for controlling random elements, though the latter requires review of the process via provided link.

- Comparing Large Documents Challenge: A user inquired about strategies for comparing substantial documents of 250 pages. The discussion highlighted that current OpenAI technology has limited capacity for such tasks and recommended a more robust AI or a Python-based solution.

OpenAI ▷ #api-discussions (30 messages🔥):

- Prompt Improvement Strategies Shared: Members discussed how to create better system prompts, emphasizing breaking down complex tasks into multiple API calls and ensuring that prompts for image generation, like with DALL-E, are clear and free of negative instructions which can confuse the model. An example was AIempower's step back prompting strategy.

- Challenges with DALL-E Negative Prompting: Members observed that the DALL-E 3 API has difficulties following prompts with negative instructions, such as "don't include X." The advice was to steer clear of negative prompts and to get more tips from experienced users in the appropriate OpenAI channels.

- Inconsistent Output Formations: A member sought help for the issue of inconsistent outputs and the introduction of random bullet points in responses. It was suggested to use a solid output template with constant variable names and to apply logit bias to improve consistency.

- Comparing Large Text Documents: A question arose concerning comparing two large 250-page documents for minor changes, and it was noted that the current OpenAI implementations are not suitable for such large-scale comparisons, implying the need for different tools or Python solutions.

- Prompt Engineering Course Inquiry: The effectiveness of prompt engineering courses for job search enhancement was queried, though no specific recommendations were provided due to OpenAI's policy.

- Ethical AI Practices and Prompt Engineering Examples: A comprehensive prompt template was shared to help generate discussions on the ethical considerations of AI in business. It serves as an example of prompt engineering incorporating inclusive variables, instructions, and ethical concerns in AI.

Unsloth AI (Daniel Han) ▷ #general (108 messages🔥🔥):

- Inference Impacted by Base Model Training: Discussion centered around whether the base model training affects inference results, with insights suggesting that base models trained exclusively on non-conversation specific data like the Book Pile dataset might not perform well with instruction-following tasks. Fine-tuning with conversation data will likely require many examples and may prove challenging.

- Fine-tuning and Training with PDFs Inquiry: A member asked for resources or tutorials on how to fine-tune a language model with long PDFs using Unsloth. They were referred to a YouTube guide on using personal datasets for fine-tuning language models.

- Differences in Model Fine-tuning Results: A comparison of different versions of the Llama3 coder model revealed discrepancies; v1 provided satisfactory results with fewer shots while v2 struggled. This sparked a discussion on the impacts of dataset choices and the potential issues with Llama.cpp.

- Phi-3.8b and 14b Model Release Discussion: Conversation about the status of Phi-3.8b and 14b models, with members speculating about their completion and release. Red tape and internal review were mentioned as possible reasons for the delay in releasing these models.

- Concerns Regarding Model Evaluation and Prompts: Queries were made about evaluating models like phi-3 with HellaSwag and finding good prompts for such evaluations. Responses indicated uncertainty, highlighting the challenges around prompt engineering and evaluation of large language models.

- cognitivecomputations/Dolphin-2.9.1-Phi-3-Kensho-4.5B · Hugging Face: no description found

- Granite Code Models - a ibm-granite Collection: no description found

- mahiatlinux (Maheswar KK): no description found

- How I Fine-Tuned Llama 3 for My Newsletters: A Complete Guide: In today's video, I'm sharing how I've utilized my newsletters to fine-tune the Llama 3 model for better drafting future content using an innovative open-sou...

- Issues · unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - Issues · unslothai/unsloth

- ibm-granite/granite-8b-code-instruct · Hugging Face: no description found

- Google Colab: no description found

Unsloth AI (Daniel Han) ▷ #random (13 messages🔥):

- Mystery Origin Inquiry: A member inquired about the source of an image, speculating it might be from a manwha (Korean comic).

- Creator Revealed: A member clarified they created an image that sparked the conversation, with an additional note saying it was AI-generated.

- Emote Reaction Says It All: The conversation included an expressive emote reaction, signifying a form of disappointment or the end of something.

- Attack on Titan Resemblance: A member observed that the AI-generated face reminded them of Eren, a character from the anime "Attack on Titan."

- OpenAI Stack Overflow Partnership Buzz: The channel featured a Reddit post discussing OpenAI's announcement to partner with Stack Overflow, using it as a database for Large Language Models (LLM).

- Anticipating ChatGPT's Response Quirks: Members humorously anticipated how ChatGPT might reply in stereotypical programmer responses, including "'Closed as Duplicate'" or admonishing one to "look at the docs."

- Content Exhaustion Concern: A member linked to a Business Insider article discussing concerns over AI running out of human content to learn from by 2026.

- Gig workers are writing essays for AI to learn from: Companies are increasingly hiring skilled humans to write training content for AI models as the trove of online data dries up.

- Reddit - Dive into anything: no description found

Unsloth AI (Daniel Han) ▷ #help (194 messages🔥🔥):

- Template Confusion and Loss of Training Data: Discussions suggest that there might be a template issue rather than a regex problem causing troubles in model behavior. Users share experiences and speculate on the cause, referencing Issue #7062 on ggerganov/llama.cpp indicating the potential loss of training data when converting to GGUF with LORA Adapter.

- Finding the Right Server for 8B Models: One user inquired about server recommendations for running 8B models, but no specific advice was provided in the subsequent discussions.

- Exploring CPU Use and Multi-GPU Support: Enquiries were made about utilizing CPUs for fine-tuning and multi-GPU capabilities in Unsloth. It was noted that Unsloth currently doesn’t support multi-GPU training, but work appears to be "cooking" on the feature.

- Unsloth and Quantization for Finetuning: Questions regarding finetuning generative models with Unsloth for classification tasks and whether Unsloth supports quantization-aware training highlighted limitations and current capabilities. Response indicated the possibility of certain quantizations but not support for GPTQ and suggested using

load_in_4bit = Falsefor 16bit training to avoid quality drops seen withq8.

- Challenges with Installation and Local Testing: Some users faced issues with installing Unsloth locally where the library was failing, particularly with Triton dependency issues and inquiries about running models without CUDA. The conversation referenced following the kaggle install instructions for a potential solution.

- Supervised Fine-tuning Trainer: no description found

- Supervised Fine-tuning Trainer: no description found

- Google Colab: no description found

- Home: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Apple Silicon Support · Issue #4 · unslothai/unsloth: Awesome project. Apple Silicon support would be great to see!

- Supervised Fine-tuning Trainer: no description found

- GitHub - unslothai/unsloth: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory: Finetune Llama 3, Mistral & Gemma LLMs 2-5x faster with 80% less memory - unslothai/unsloth

- Google Colab: no description found

- Google Colab: no description found

- Cooking GIF - Cooking Cook - Discover & Share GIFs: Click to view the GIF

- Google Colab: no description found

- Google Colab: no description found

- llama3-instruct models not stopping at stop token · Issue #3759 · ollama/ollama: What is the issue? I'm using llama3:70b through the OpenAI-compatible endpoint. When generating, I am getting outputs like this: Please provide the output of the above command. Let's proceed f...

- Llama3 GGUF conversion with merged LORA Adapter seems to lose training data randomly · Issue #7062 · ggerganov/llama.cpp: I'm running Unsloth to fine tune LORA the Instruct model on llama3-8b . 1: I merge the model with the LORA adapter into safetensors 2: Running inference in python both with the merged model direct...

- Trainer: no description found