[AINews] Titans: Learning to Memorize at Test Time

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Neural Memory is all you need.

AI News for 1/14/2025-1/15/2025. We checked 7 subreddits, 433 Twitters and 32 Discords (219 channels, and 2812 messages) for you. Estimated reading time saved (at 200wpm): 327 minutes. You can now tag @smol_ai for AINews discussions!

Lots of people are buzzing about the latest Google paper, hailed by yappers as "Transformers 2.0" (arxiv, tweet):

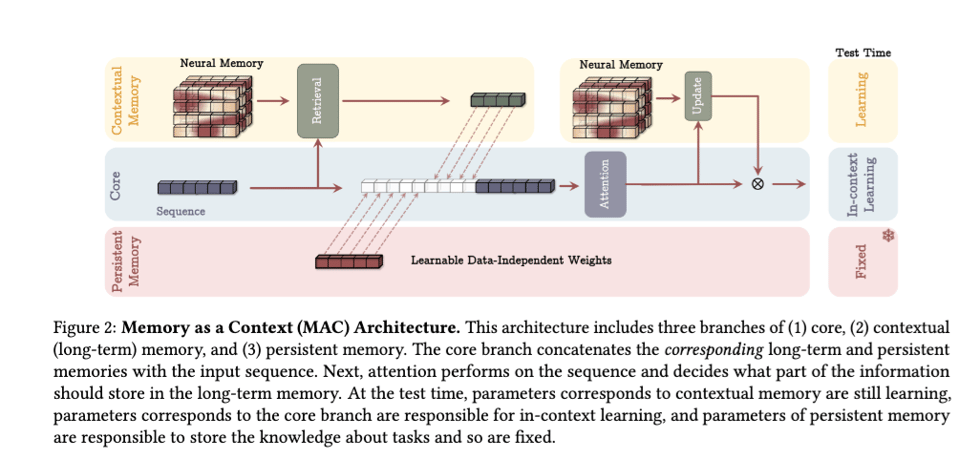

It seems to fold persistent memory right into the architecture at "test time" rather than outside of it (this is one of three variants as context, head, or layer).

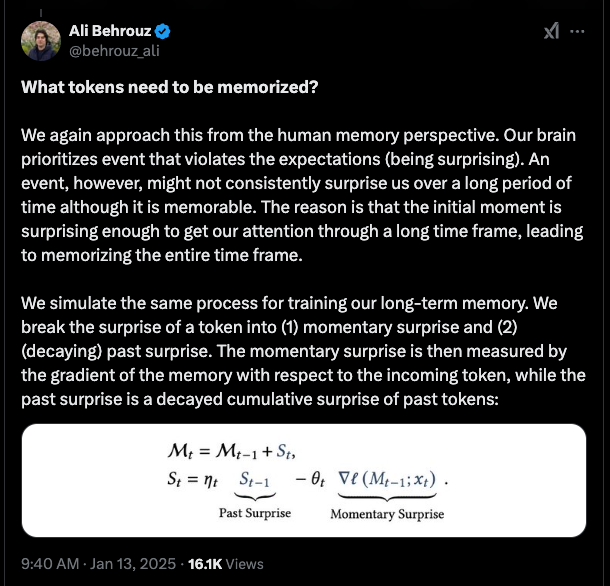

The paper notably uses a surprisal measure to update its memory:

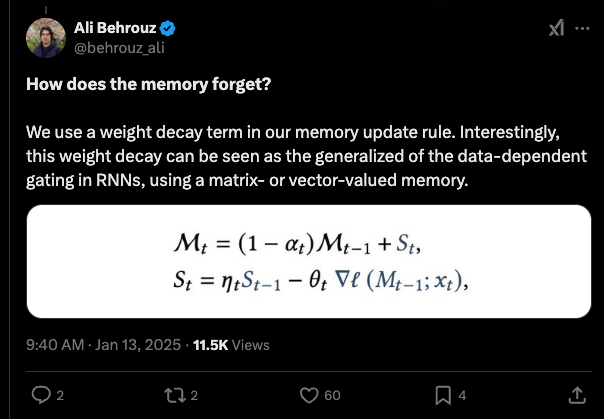

and models forgetting by weight decay

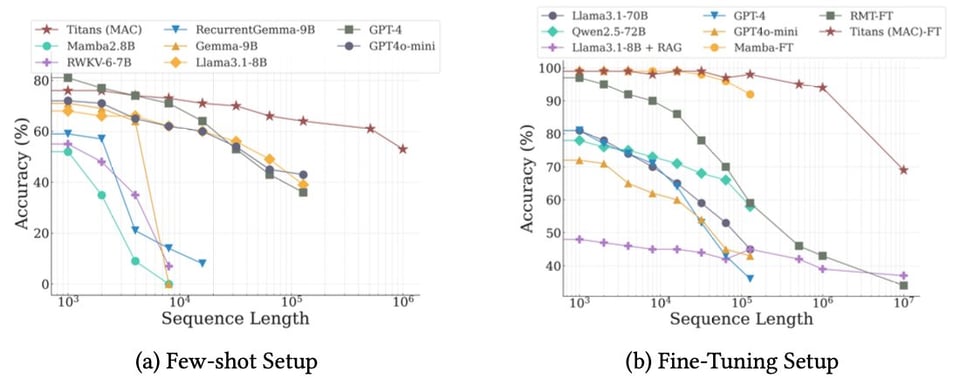

The net result shows very promising context utilization over long contexts.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Models and Scaling

- MiniMax-01 and Ultra-Long Context Models: @omarsar0 introduced MiniMax-01, integrating Mixture-of-Experts with 32 experts and 456B parameters. It boasts a 4 million token context window, outperforming models like GPT-4o and Claude-3.5-Sonnet. Similarly, @hwchase17 highlighted advancements in vision-spatial scratchpads, addressing long-standing challenges in VLMs.

- InternLM and Open-Source LLMs: @abacaj discussed InternLM3-8B-Instruct, an Apache-2.0 licensed model trained on 4 trillion tokens, achieving state-of-the-art performance. @AIatMeta shared updates on SeamlessM4T published in @Nature, emphasizing its adaptive system inspired by nature.

- Transformer² and Adaptive AI: @hardmaru unveiled Transformer², showcasing self-adaptive LLMs that dynamically adjust weights, bridging pre-training and post-training for continuous adaptation.

AI Applications and Tools

- AI-Driven Development Tools: @rez0__ outlined the need for robust Agent Authentication, Prompt Injection defenses, and Secure Agent Architecture. Additionally, @hkproj recommended Micro Diffusion for training diffusion models on a budget.

- Agentic Systems and Automation: @bindureddy emphasized the potential of Search-o1 in enhancing complex reasoning tasks, outperforming traditional RAG systems. @LangChainAI introduced LeagueGraph and Agent Recipes for building open-source social media agents.

- Integration with Development Environments: @_akhaliq discussed unifying local endpoints for AI model support across applications, while @saranormous advocated for using Grok's web app to avoid distractions.

AI Security and Ethical Concerns

- Data Integrity and Prompt Injection: @rez0__ highlighted challenges in prompt injection and the necessity for zero-trust architectures to secure LLM applications. @lateinteraction critiqued the blurring of specifications and implementations in AI prompts, advocating for clearer domain-specific knowledge.

- AI in Geopolitics and Regulations: @teortaxesTex criticized the notion of explicit backdoors in Chinese servers, promoting Apple's security model instead. @AravSrinivas discussed the impact of AI diffusion rules on NVIDIA stock, reflecting on the global regulatory landscape.

AI in Education and Hiring

- Homeschooling and Educational Policies: @teortaxesTex expressed disappointment in battle animation and the lack of effective educational techniques. Meanwhile, @stanfordnlp hosted seminars on AI and education, emphasizing the role of agents and workflows.

- Hiring and Skill Development: @finbarrtimbers shared insights on hiring ML engineers, while @fchollet sought experts for AI program synthesis, highlighting the importance of mathematical and coding skills.

AI Integration in Software Engineering

- LLM Integration and Productivity: @TheGregYang and @gdb discussed the seamless integration of LLMs into debugging tools and web applications, enhancing developer productivity. @rasbt emphasized the distinction between raw intelligence and intelligent software systems, advocating for the right implementation strategies.

- AI-Driven Coding and Automation: @hellmanikCoder and @skycoderrun highlighted the benefits and challenges of using LLMs for code generation and automation, stressing the need for robust integration and error handling.

Politics and AI Regulations

- Chinese AI Developments and Security: @teortaxesTex mentioned iFlyTek's acquisition of Atlas servers, reflecting on China's AI infrastructure growth. @manyothers discussed the potential acceleration of Ascend clusters, highlighting computation advancements in Mainland China.

- US AI Policies and Infrastructure: @iScienceLuvr summarized a US executive order on accelerating AI infrastructure, detailing data center requirements, clean energy mandates, and international cooperation. @karinanguyen_ critiqued the lag in form factors for AI workflows, reflecting on policy impacts.

Memes/Humor

- Humorous Takes on AI and Daily Life: @arojtext joked about hiding better uses of video games for escapism, while @qtnx_ shared a funny question about unrelated AI applications. Additionally, @nearcyan humorously reflected on gaming habits and unexpected property purchase offers.

- Light-hearted AI Remarks: @Saranormous mocked interactions with ScaleAI, and @TheGregYang playfully encouraged using Grok's web app to avoid distractions, blending AI functionality with everyday humor.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. InternLM3-8B outperforms Llama3.1-8B and Qwen2.5-7B

- New model.... (Score: 188, Comments: 31): InternLM3 reportedly outperforms Llama3.1-8B and Qwen2.5-7B. The project, titled "internlm3-8b-instruct," is hosted on a platform resembling GitHub, featuring tags like "Safetensors" and "custom_code," and references an arXiv paper with the identifier 2403.17297.

- InternLM3 Performance and Features: Users highlight InternLM3's superior performance over Llama3.1-8B and Qwen2.5-7B, emphasizing its efficiency in training with only 4 trillion tokens, which reduces costs by over 75%. The model supports a "deep thinking mode" for complex reasoning tasks, which is accessible through a different system prompt as seen on Hugging Face.

- Community Feedback and Comparisons: Users express satisfaction with InternLM3's capabilities, noting its effectiveness in logic and language tasks, and comparing it favorably against models like Exaone 7.8b and Qwen2.5-7B. There is a desire for a 20 billion parameter version, referencing the 2.5 20b model.

- Model Naming and Licensing: The name "Intern" is discussed, with some users finding it apt due to the AI's role as an unpaid assistant. There is also a call for clearer licensing practices when sharing models, with users expressing frustration over unclear licenses, particularly in audio/music models.

Theme 2. OpenRouter gets new features and community-driven improvements

- OpenRouter Users: What feature are you missing? (Score: 190, Comments: 79): The author unintentionally developed an OpenRouter alternative called glama.ai/gateway, which offers similar features like elevated rate limits and easy model switching via an OpenAI-compatible API. Unique advantages include integration with the Chat and MCP ecosystem, advanced analytics, and reportedly lower latency and stability compared to OpenRouter, while processing several billion tokens daily.

- API Compatibility and Support: Users express interest in compatibility with the OpenAI API, specifically regarding multi-turn tool use, function calling, and image input formats. Concerns include differences in tool use syntax and the need for detailed documentation on supported API features and models.

- Provider Management and Data Security: There is a demand for more granular control over provider selection for specific models, as some providers like DeepInfra are not optimal for all models. Additionally, glama.ai is praised for its data protection policies and commitment to not using client data for AI training, contrasting with OpenRouter's data handling practices.

- Sampler Options and Mobile Support: Users discuss the need for additional sampler options like XTC and DRY, which are not currently supported, and the challenges of implementing them as a middleman. There is also interest in improving mobile support, as current traffic is primarily desktop-based, but mobile is becoming a more frequent topic of discussion.

Theme 3. Kiln as an Open Source Alternative to Google AI Studio Gains Traction

- I accidentally built an open alternative to Google AI Studio (Score: 865, Comments: 130): Kiln, an open-source alternative to Google AI Studio, offers enhanced features like support for any LLM through multiple hosts, unlimited fine-tuning capabilities, local data privacy, and collaborative use. It contrasts with Google's limited model support, data privacy issues, and single-user collaboration, while also providing a Python library and powerful dataset management. Kiln is available on GitHub and aims to be as user-friendly as Google AI Studio but more powerful and private.

- Concerns about privacy and licensing were prominent, with users like osskid and yhodda highlighting discrepancies between Kiln's privacy claims and its EULA, which suggests potential data access and usage rights by Kiln. Yhodda emphasized that the desktop app's proprietary license could lead to user data being shared and used without compensation, raising red flags about user rights and privacy.

- Users appreciated the open-source nature of Kiln, with comments like those from fuckingpieceofrice and Imjustmisunderstood expressing gratitude for the alternative to Google AI Studio, fearing future paywalls. The open-source aspect was seen as a significant advantage, even if the desktop component is not open-source.

- Documentation and tutorials received positive feedback, with users like Kooky-Breadfruit-837 and danielhanchen praising the comprehensive guides and mini video tutorials. This suggests that Kiln is user-friendly and accessible, even for those with limited technical experience, as noted by RedZero76.

Theme 4. OuteTTS 0.3 introduces new 1B and 500M language models

- OuteTTS 0.3: New 1B & 500M Models (Score: 155, Comments: 62): OuteTTS 0.3 introduces new 1B and 500M models, enhancing its text-to-speech capabilities. The update likely includes improvements in model performance and feature set, though specific details are not provided in the text.

- There is a notable discussion regarding language support in OuteTTS, particularly the absence of Spanish despite its widespread use. OuteAI explains this is due to the diversity of Spanish accents and dialects, and the lack of adequate datasets, resulting in a generic "Latino Neutro" output.

- OuteAI clarifies technical aspects of the models, such as their basis on LLMs and the use of WavTokenizer for audio token decoding. The models are compatible with Transformers, LLaMA.cpp, and ExLlamaV2, and there is ongoing exploration of speech-to-speech capabilities.

- The OuteTTS 0.3 models offer improvements in naturalness and coherence of speech, with support for six languages including newly added French and German. A demo is available on Hugging Face, and installation is straightforward via pip.

Theme 5. 405B MiniMax MoE: Breakthrough in context length and efficiency

- 405B MiniMax MoE technical deepdive (Score: 66, Comments: 10): The post discusses the 405B MiniMax MoE model, highlighting its innovative scaling approaches, including a hybrid with 7/8 Lightning attention and a distinct MoE strategy compared to DeepSeek. It details the model's training on approximately 2000 H800 and 12 trillion tokens, with further information available in a blog post on Hugging Face.

- 405B MiniMax MoE model is noted for its impressive performance on Longbench without Chain of Thought (CoT), showcasing its capability in handling long context lengths. FiacR highlights its "insane context length" and eliebakk praises the "super impressive numbers."

- Discussion around the trend of open weights models competing with closed-source models is positive, with optimism about significant advancements by 2025. vaibhavs10 expresses enthusiasm for this trend and shares a link to the MiniMaxAI model on Hugging Face.

- The model is hosted on Hailuo.ai as mentioned by StevenSamAI, providing a resource for accessing the model.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Transformer²: Enhancing Real-Time LLM Adaptability

- [R] Transformer²: Self-Adaptive LLMs (Score: 137, Comments: 10): Transformer² introduces a self-adaptive framework for large language models (LLMs) that dynamically adjusts only singular components of weight matrices to handle unseen tasks in real-time, outperforming traditional methods like LoRA with fewer parameters. The method employs a two-pass mechanism, using a dispatch system and task-specific "expert" vectors trained via reinforcement learning, demonstrating versatility across various architectures and modalities, including vision-language tasks. Paper, Blog Summary, GitHub.

- Commenters discussed the scaling approach of the framework, noting that it scales with disk rather than the number of parameters, which could imply efficiency in storage and computation.

- There was a discussion on the performance impact of Transformer² on different sizes of LLMs, with observations that while it significantly enhances smaller models, the improvements for larger models like the 70 billion parameter model were minimal.

- The growth of the Sakana lab was noted, with recognition that the paper did not list a well-known author, suggesting an expanding and increasingly collaborative research team.

Theme 2. Deep Learning Revolutionizing Predictive Healthcare

- Researchers Develop Deep Learning Model to Predict Breast Cancer (Score: 118, Comments: 17): Researchers developed a deep learning model capable of predicting breast cancer up to five years in advance using a streamlined algorithm. The study analyzed over 210,000 mammograms and highlighted the significance of breast asymmetry in assessing cancer risk, as detailed in this RSNA article.

AI Discord Recap

A summary of Summaries of Summaries by o1-preview-2024-09-12

Theme 1: AI Model Performance Stumbles Across Platforms

- Perplexity Users Perplexed by Persistent Outages: Users reported multiple prolonged outages on Perplexity, with errors lasting over an hour, prompting frustrations and a search for alternatives.

- Cursor Crawls as Performance Pitfalls Trip Up Coders: Cursor IDE users faced significant slowdowns, with wait times of 5–10 minutes hindering Pro subscribers' workflows and sparking speculation about fixes.

- DeepSeek Drags, Users Seek Speedy Alternatives: DeepSeek V3 suffered from latency issues and slow responses, leading users to switch to models like Sonnet and voice frustrations over inconsistent performance.

Theme 2: New AI Models Break Context Barriers

- MiniMax-01 Blazes Trail with 4M Token Context: MiniMax-01 launched with an unprecedented 4 million token context window using Lightning Attention, promising ultra-long context processing and performance leaps.

- Cohere Cranks Context to 128k Tokens: Cohere extended context length to 128k tokens, enabling around 42,000 words in a single conversation without resetting, enhancing continuity.

- Mistral’s FIM Marvel Wows Coders: The new Fill-In-The-Middle coding model from Mistral AI impressed users with advanced code completion and snippet handling beyond standard capabilities.

Theme 3: Legal Woes Hit AI Datasets and Developers

- MATH Dataset DMCA'd: AoPS Strikes Back: The Hendrycks MATH dataset faced a DMCA takedown, raising concerns over Art of Problem Solving (AoPS) content and the future of math data in AI.

- JavaScript Trademark Tussle Threatens Open Source: A fierce legal dispute over the JavaScript trademark sparked alarms about potential restrictions impacting community-led developments and open-source contributions.

Theme 4: Advancements and Debates in AI Training

- Grokking Gains Ground: Phenomenon Unpacked: A new video titled “Finally: Grokking Solved - It's Not What You Think” delved into the bizarre grokking phenomenon of delayed generalization, fueling enthusiasm and debate.

- Dynamic Quantization Quirks Questioned: Users reported minimal performance changes when applying dynamic quantization to Phi-4, sparking discussions on the technique's effectiveness compared to standard 4-bit versions.

- TruthfulQA Benchmark Busted by Simple Tricks: TurnTrout achieved 79% accuracy on TruthfulQA by exploiting weaknesses with a few trivial rules, highlighting flaws in benchmark reliability.

Theme 5: Industry Moves Shake Up the AI Landscape

- Cursor AI Scores Big Bucks in Series B: Cursor AI raised a new Series B co-led by a16z, fueling the coding platform's next phase and strengthening ties with Anthropic amid usage-based pricing talks.

- Anthropic Secures ISO 42001 for Responsible AI: Anthropic announced accreditation under the new ISO/IEC 42001:2023 standard, emphasizing structured system governance for responsible AI development.

- NVIDIA Cosmos Debuts at CES, Impresses LLM Enthusiasts: NVIDIA Cosmos was unveiled at CES, showcasing new AI capabilities; presentations at the LLM Paper Club highlighted its potential impact on the field.

PART 1: High level Discord summaries

Cursor IDE Discord

- Cursor Crawls: Performance Pitfalls Trip Users: Many reported 5–10 minute wait times in Cursor, while others saw normal speeds, frustrating Pro subscribers trying to maintain steady workflows.

- This slowdown hampered coding efforts and prompted speculation about fixes, with some keeping an eye on Cursor’s blog update for potential relief.

- Swift Deploy: Vercel & Firebase Soar: Builders praised both Vercel and Google Firebase for deploying Cursor-based apps, highlighting minimal setup for production use.

- They shared templates on Vercel for rapid starts and noted easy real-time integrations with Firebase.

- Gemini 2.0 Flash vs Llama 3.1 Duel: Enthusiasts favored Gemini 2.0 Flash for stronger benchmark outcomes over Llama 3.1, pointing to sharper text generation performance.

- Others acknowledged imposter syndrome creeping in with heavier AI reliance, yet embraced heightened productivity benefits.

- Sora’s Stumble in Slow-Mo Scenes: Reports surfaced that Sora struggled with reliable video generation, especially for slow-motion segments, leaving some users dissatisfied.

- Some explored alternative options after frequent trial-and-error, indicating mixed success with Sora’s feature set.

- Fusion Frenzy: Cursor Gears Up for March: A new Cursor release is expected in March, featuring the Fusion implementation and possible integrations with DeepSeek and Gemini.

- Excitement brewed over a more capable platform, as teased in Cursor’s Tab model post, though detailed specifics remain undisclosed.

Perplexity AI Discord

- Perplexity Outages Spark Outrage: Frequent disruptions in Perplexity left users facing errors for over an hour, as shown on the status page, prompting calls for backups.

- Frustration grew alongside citation glitches, causing some to explore alternate solutions and voice concerns about reliability.

- AI Model Performance Showdown: Community members weighed the best coding model, with Claude Sonnet 3.5 topping debugging tasks and Deepseek 3.0 proposed as an economical fallback.

- Some praised Perplexity for certain queries yet criticized its hallucinations and limited context window.

- Double Vision: Two Perplexity iOS Apps: One user spotted duplicates in the App Store, prompting a web query about official Perplexity apps.

- Another user couldn't find the second listing, fueling a short debate on naming and distribution issues.

- JavaScript Trademark Tussle: A legal fight over the JavaScript trademark could threaten community-led developments if trademark claims prove more restrictive.

- Opinions voiced alarm over ownership matters and a possible wave of litigation impacting open-source contributions.

- Llama-3.1-Sonar-Large Slowed Down: llama-3.1-sonar-large-128k-online suffered a marked decline in output speed since January 10th, puzzling users.

- Community chatter points to undisclosed updates or code shifts as possible reasons for the slowdown, sparking concerns about broader performance hits.

Codeium (Windsurf) Discord

- Command Craze & Editor Applause: Windsurf released a Command feature tutorial, declared Discord Challenge winners with videos like Luminary, and launched the Windsurf Editor.

- They also presented an official comparison of Codeium against GitHub Copilot and shared a blog post on nonpermissive code concerns.

- Telemetry Tangles & Subscription Snags: Users faced telemetry issues in the Codeium Visual Studio extension and confusion around credit rollover in subscription plans, with references to GitHub issues.

- They confirmed that credits do not carry over after plan cancellation, and some encountered installation problems tied to extension manifest naming.

- Student Discounts & Remote Repository Riddles: Students showed interest in Pro Tier bundles but struggled if their addresses weren't .edu, prompting calls for more inclusive eligibility.

- Others reported friction using indexed remote repositories with Codeium in IntelliJ, seeking setup advice from the community.

- C# Type Trouble & Cascade Convo: Windsurf IDE had persistent trouble analyzing C# variable types on both Windows and Mac, even though other editors like VS Code offered smooth performance.

- Users debated Cascade’s performance and recommended advanced prompts, while also discussing the integration of Claude and other models for complex coding tasks.

Unsloth AI (Daniel Han) Discord

- Multi-GPU Mayhem in Kaggle: Developers tried to run Unsloth with multiple T4 GPUs on Kaggle but found only one GPU is enabled for inference, limiting scaling attempts. They pointed to this tweet about fine-tuning on Kaggle's T4 GPU, hoping to use Kaggle’s free hours more effectively.

- Others recommended paying for more robust hardware if concurrency is required, and they suggested that Kaggle might expand GPU provisioning in the future.

- Debunking Fine-Tuning Myths: Teams clarified that fine-tuning can actually introduce new knowledge, acting like retrieval-augmented generation, contrary to widespread assumptions. They linked the Unsloth doc on fine-tuning benefits to address these ongoing misconceptions.

- Some pointed out it can offload memory usage by embedding new data into the model, while others emphasized proper dataset selection for best results.

- Dynamic Quantization Quirk with Phi-4: Reports emerged that Phi-4 shows minimal performance changes even after dynamic quantization, closely paralleling standard 4-bit versions. Users referenced the Unsloth 4-bit Dynamic Quants collection to investigate any hidden gains.

- Some insisted that dynamic quantization should enhance accuracy, prompting further experimentation to confirm if the discrepancy is masked by test conditions.

- Grokking Gains Ground: A new video, Finally: Grokking Solved - It's Not What You Think, dives into the bizarre grokking phenomenon of delayed generalization. It fueled enthusiasm for understanding how overfitting morphs into sudden leaps in model capability.

- The shared paper Grokking at the Edge of Numerical Stability introduced the idea of Softmax Collapse, sparking debate on deeper implications for AI training.

- Security Conference Showcases for LLM: One user suggested security conferences as a more fitting venue for specialized LLM talks, referencing exploit detection use cases. This idea resonated with those who find standard ML events too broad for security-specific content.

- Others supported highlighting domain-centric approaches, pointing to a growing push for LLM research discussions in these specialized forums.

Stability.ai (Stable Diffusion) Discord

- Stable Diffusion XL Speeds & Specs: A user ran stabilityai/stable-diffusion-xl-base-1.0 on Colab, looking for built-in metrics like iterations per second, in contrast to how ComfyUI shows time-per-iteration data.

- They highlighted the possibility of up to 50 inference steps, pointing out that metrics remain elusive without specialized tools or custom logging.

- Fake Coin Causes Commotion: Community members encountered a fake cryptocurrency launch tied to Stability AI, calling it a confirmed scam in a tweet warning.

- They cautioned that compromised accounts can dupe unsuspecting investors, sharing personal stories of losses and urging everyone to avoid suspicious links.

- Sharing AI Images Goes Social: Users debated where to post AI-generated images, suggesting Civitai and other social media as main platforms for showcasing success and failure cases.

- Concerns about data quality arose when collecting image feedback, prompting discussion on filtering out spurious or low-effort content.

Interconnects (Nathan Lambert) Discord

- Xeno Shakes Up Agent Identity: At a Mixer on the 24th and a Hackathon on the 25th at Betaworks, NYC, $5k in prizes kicked off the new wave of agent identity projects from Xeno Grant.

- Winners can receive $10k per agent in $YOUSIM and $USDC, showing expanded interest in identity solutions among hackathon participants.

- Ndea Leans on Program Synthesis: François Chollet introduced Ndea to jumpstart deep learning-guided program synthesis, aiming for a fresh path toward genuine AI invention.

- The community sees it as an approach outside the usual LLM-scaling trend, with some praising it as a strong alternative to standard AGI pursuits.

- Cerebras Tames Chip Yields: At Cerebras, they claim to have cracked wafer-scale chip yields, producing devices 50x larger than usual.

- By reversing conventional yield logic, they build fault-tolerant designs that keep manufacturing costs in check.

- MATH DMCA Hits Hard: The MATH dataset faced a DMCA takedown, sparking worries around AoPS-protected math content.

- Some recommend stripping out AoPS segments to salvage partial usage, though concerns persist over broader dataset losses.

- MVoT Shows Reasoning in Pictures: The new Multimodal Visualization-of-Thought (MVoT) paper proposes adding visual steps to Chain-of-Thought prompting in MLLMs, uniting text with images to refine solutions.

- Authors suggest depicting mental images can improve complex reasoning flows, merging well with reinforcement learning techniques.

Nous Research AI Discord

- Claude's Quirky Persona & Fine-Tuning Feats: Members praised Claude for its 'cool co-worker vibe,' noted occasional refusals to answer, and exchanged tips on advanced fine-tuning with company knowledge plus classification tasks.

- They emphasized data diversity to enhance model accuracy, proposing tailored approaches for improved results.

- Dataset Debates & Nous Research's Private Path: Community questioned LLM dataset reliability, highlighting the push for better data curation and clarifying Nous Research operations via private equity and merch sales.

- They expressed interest in open-source synthetic data initiatives, mentioning collaborations with Microsoft but no formal government or academic ties.

- Gemini Outshines mini Models: Several users praised Gemini for accurate data extraction, claiming it outperforms 4o-mini and Llama-8B in pinpointing original content.

- They remained cautious about retrievability challenges, focusing on stable expansions as next steps.

- Grokking Tweaks & Optimizer Face-Off: Participants dissected grokking and numerical issues in Softmax, referencing this paper on Softmax Collapse and its impact on training.

- They weighed combining GrokAdamW and Ortho Grad from this GitHub repo, and mentioned Coconut from Facebook Research for continuous latent space reasoning.

- Agent Identity Hackathon Calls to Create: A spirited hackathon in NYC was announced, offering $5k for agent-identity prototypes and fostering imaginative AI projects.

- Creators hinted at fresh concepts and directed interested folks to this tweet for event details.

Stackblitz (Bolt.new) Discord

- Title Tinkering in Bolt: Following a Bolt update, users can now rename project titles with ease, as detailed in this official post.

- This rollout streamlines project organization and helps locate items in the list more efficiently, boosting user experience.

- GA4 Integration Glitch: A developer's React/Vite app hit an 'Unexpected token' error with GA4 API on Netlify, despite working fine locally.

- They verified credentials and environment variables but are looking for alternative solutions to bypass the integration roadblock.

- Firebase's Quick-Fill Trick: A user recommended creating a 'load demo data' page to seamlessly populate Firestore, preventing empty schema hassles.

- This approach was hailed as a basic yet effective method, particularly beneficial to those who might overlook initial dataset setup.

- Supabase Slip-ups and Snapshots: Some users struggled with Supabase integration errors and application crashes when storing data.

- They also discussed the chat history snapshot system that aims to preserve previous states for better context restoration.

- Token Tussles: High usage reports surfaced, with one instance claiming 4 million tokens per prompt and others questioning its validity.

- Suggestions for GitHub issue submissions arose, as some suspect a bug lurking behind Bolt's context mechanics.

Notebook LM Discord Discord

- QuPath Q-illuminates Tissue Data: A user reported that NotebookLM generated a functional groovy script for QuPath, gleaned from forum posts on digital pathology, slashing hours of manual coding.

- This success underscored NotebookLM's utility for specialized tasks, with one user calling it a welcome time-saver for 'hard-coded pathology workflows'.

- Worldbuilding Wows Writers: A user leveraged NotebookLM for creative expansions in worldbuilding, noting it clarifies underdeveloped lore and recovers overlooked ideas.

- They added a note like 'Wild predictions inbound!' to spark the AI’s imaginative output, fueling deeper fictional scenarios without much fuss.

- NotebookLM Plus: The Mysterious Migration: Confusion arose around NotebookLM Plus availability and transition timelines within different Google Workspace plans, especially for those on deprecated editions.

- Some continued paying for older add-ons while weighing possible plan upgrades in response to ambiguous announcements from the Google Workspace Blog.

- API APIs: Bulk Sync on the Horizon?: Users asked if NotebookLM offers an API or can sync Google Docs sources in bulk, with no official timeline provided.

- Community members stayed hopeful for announcements this year, referencing user requests in NotebookLM Help.

- YouTube Import Woes & Word Count Warnings: Multiple members struggled with importing YouTube links as valid sources, suspecting a missing feature rather than user error.

- They also discovered a 500,000-word limit per source and 50 total sources per notebook, forcing manual website scraping and other workarounds.

Cohere Discord

- Cohere Cranks Context to 128k: Cohere extended context length to 128k tokens, enabling around 42,000 words in a single conversation without resetting context. Participants referenced Cohere’s rate limit doc to understand how this expansion impacts broader model usage.

- They noted that the entire chat timeline can remain active, meaning longer discussions stay consistent without segmenting turns.

- Rerank v3.5 Raises Eyebrows: Some users reported that Cohere’s rerank-v3.5 delivers inconsistent outcomes unless restricted to the most recent user query, complicating multi-turn ranking efforts.

- They tried other services like Jina.ai with steadier results, prompting direct feedback to Cohere about the performance slump.

- Command R Gets Continuous Care: Members sought iterative enhancements to Command R and R+, hoping new data and fine-tuning would evolve the models rather than launching entirely new versions.

- A contributor highlighted retrieval-augmented generation (RAG) as a powerful method to introduce updated information into existing model architecture.

OpenRouter (Alex Atallah) Discord

- Mistral’s FIM Marvel: The newest Fill-In-The-Middle coding model from Mistral has arrived, boasting advanced capabilities beyond standard code completions. OpenRouterAI confirmed requests for it are exclusively handled on their Discord channel.

- Enthusiasts mention improved snippet context handling, with some expecting a strong showing in code-based tasks. Others pointed to OpenRouter’s Codestral-2501 page as evidence of serious coding potential.

- Minimax-01’s 4M Context Feat: Minimax-01, promoted as the first open-source LLM from the group, reportedly passed the Needle-In-A-Haystack test at a huge 4M context. Details surfaced on the Minimax page, with user references praising the broad context handling.

- Some find the 4M token claim bold, though supporters say they’ve seen no major performance trade-offs so far. Access also requires a request on the Discord server, showcasing a growing interest in bigger context ranges.

- DeepSeek Dramas: Latencies and Token Drops: Members flagged continuing DeepSeek API inconsistencies, reporting slow response times and unexpected errors across multiple providers. Many expressed frustration over token limits slipping from 64k to 10-15k without notice.

- Commenters pointed to the DeepSeek V3 Uptime and Availability page for partial explanations, while noting that first-token latency remains persistently high. Others worry these fluctuations sabotage trust in extended context usage.

- Provider Showdowns & Model Removal Rumors: A user raised concerns about lizpreciatior/lzlv-70b-fp16-hf disappearing, learning that no provider might host it anymore. Meanwhile, participants debated the performance gap between DeepSeek, TogetherAI, and NovitaAI, citing latency differences on the OpenRouter site.

- Some found DeepInfra more reliable, whereas others saw spikes across all providers. This sparked a broader conversation on how frequently providers rotate or remove model endpoints with minimal notice.

- Prompt Caching Q&A: Multiple users asked if OpenRouter supports prompt caching for models like Claude, referencing the documentation. They hoped caching would slash cost and boost throughput.

- A helpful pointer from Toven confirmed the feature is indeed available, with some devs praising it for stabilizing project budgets. The chat also shared further reading on request handling and stream cancellation.

OpenAI Discord

- Neural Feedback Fuels Personalized Learning: One user proposed a neural feedback loop system that guides individuals to adopt optimized thinking patterns for better cognitive performance, though no official release date was shared.

- Others viewed this as a fundamental shift in AI-assisted learning, even if no links or code references have surfaced.

- Anthropic Secures ISO 42001 for Responsible AI: Anthropic announced accreditation under the ISO/IEC 42001:2023 standard for responsible AI, emphasizing structured system governance.

- Users acknowledged the credibility of this standard but questioned Anthropic’s partnership with Anduril.

- AI Memory Shortfalls with Shared Images: Participants observed that AI often loses extended context after images are introduced, leading to repeated clarifications.

- One user suggested that the images drop out of short-term storage, causing the model to overlook earlier references.

- ChatGPT Tiers Spark Uneven Performance: Community members noted that ChatGPT appears limited for free users, especially with web searches.

- They pointed out that Plus subscribers gain more advanced capabilities, raising fairness issues for API users.

- GPT-4o Tasks Outshine Canvas Tools: Multiple users reported that the Canvas feature in the desktop version was replaced by a tasks interface, though a toolbox icon can still launch Canvas.

- They highlighted that GPT-4o tasks provide timed reminders for actions like language practice or news updates.

LM Studio Discord

- Fine-Tuning Frenzy & Public Domain Delights: One user is fine-tuning LLMs with public domain texts to enhance output quality, shifting efforts to Google Colab while exploring Python for the first time.

- They aim to shape prompts for better writing outcomes, focusing on new ways to utilize LLMs for creative tasks.

- Context Crunch & Memory Moves: Members noted the 'context is 90.5% full' warnings when long conversations overload the LLM's buffer, risking truncated output.

- They debated adjusting context length versus heavier memory footprints, highlighting a delicate balancing act for stable performance.

- GPU Speed Showdown: 2×4090 vs A6000: A 2x RTX 4090 setup was reported at 19.06 t/s, overshadowing an RTX A6000 48GB at 18.36 t/s, with one correction suggesting 19.27 t/s for the A6000.

- Enthusiasts also praised significantly lower power usage of the 2x RTX 4090 build, indicating gains in performance and efficiency.

- Parallelization Puzzles & Layer Distribution: Discussion explored splitting a model across multiple GPUs, placing half the layers on each card for simultaneous calculations.

- However, participants cited potential latency over PCIe and heavier synchronization as barriers to achieving a clear speed advantage.

- Snapshots Snafus: LLMs & Image Analysis: Some users struggled to get QVQ-72B and Qwen2-VL-7B-Instruct to interpret images correctly, facing initialization errors.

- They emphasized keeping runtime environments updated, noting that missing dependencies often break image processing attempts.

aider (Paul Gauthier) Discord

- DeepSeek V3 Drags, GPU Talk Heats Up: Multiple members reported slow or stuck runs with DeepSeek V3, prompting some to switch to Sonnet for better performance and share their frustrations in this HF thread.

- One user highlighted the RTX 4090 as theoretically capable for larger models, pointing to SillyTavern's LLM Model VRAM Calculator and raising questions about VRAM requirements.

- Aider Gains Kudos, Commits Stall: A user praised Aider's code editing but complained that no Git commits were generated, despite correct settings and cloned project usage.

- Others recommended architect mode to confirm changes before committing, referencing a PR to address these issues at #2877.

- Repo Maps Balloon, Agentic Tools Step In: A member noticed their repository-map grew from 2k to 8k lines, raising concerns about efficiency when handling more than 5 files at once.

- Users suggested agentic exploration tools like cursor's chat and windsurf for scanning codebases, praising Aider for final implementation steps.

- Repomix Packs Code, Cuts API Bulk: A user showcased Repomix, which repackages codebases into formats that are friendlier for LLM-powered tasks.

- They also noted synergy with Repopack for minimizing Aider's API calls, which could reduce token overhead for large projects.

- Clickbait Quips, o1-Preview Slogs: One user teased another's AI content style as borderline used-car sales, echoing community annoyance toward clickbait promotions.

- Others cited slowed responses and heavier token usage on o1-preview, pointing to performance dips that hamper real-time interactions.

Modular (Mojo 🔥) Discord

- Docs Font Goes Bolder: The docs font is now thicker for improved readability, which users cheered as much better in #general.

- People seem open to further tweaks, showing a willingness to keep refining the user experience.

- Mojo Drops the Lambda: Community confirmed Mojo currently lacks

lambdasyntax, but a roadmap note signals future plans.- Individuals suggested passing named functions as parameters until lambdas are officially supported.

- Zed and Mojo Team Up: Enthusiasts shared how to install Mojo in Zed Preview just like the stable version, with code-completion working after setup.

- Some hit minor snags when missing certain settings, but overall integration flowed well once everything was configured.

- SIMD Sparks Speed Snags: Participants warned about performance pitfalls with SIMD, referencing Ice Lake AVX-512 Downclocking.

- They urged checking assembly output to detect any register shuffling that might negate SIMD benefits on various CPUs.

- Recursive Types Test Mojo's Patience: Developers grappled with recursive types in Mojo, turning to pointers for tree-like structures.

- They linked GitHub issues for deeper details, citing continuing complexity in the language design.

Eleuther Discord

- Critical Tokens Spark Fervor: A new arXiv preprint introduced critical tokens for LLM reasoning, showing major accuracy boosts in GSM8K and MATH500 when these key tokens are carefully managed. Members also clarified that VinePPO does not actually require example Chain-of-Thought data, though offline RL comparisons remain hotly debated.

- They embraced the idea that selectively downweighting these tokens could push overall performance, with the community noting parallels to other implicit PRM findings.

- NanoGPT Shatters Speed Records: A record-breaking 3.17-minute training run on modded-nanoGPT was reported, incorporating a new token-dependent lm_head bias and multiple fused operations, as seen in this pull request.

- Further optimization ideas, like Long-Short Sliding Window Attention, were floated for pushing speeds and performance even higher.

- TruthfulQA Takes a Tumble: TurnTrout’s tweet revealed a 79% accuracy on multiple-choice TruthfulQA by exploiting weak spots with a few trivial rules, bypassing deeper model reasoning.

- This finding stirred debate across the community, spotlighting how benchmark flaws can undercut reliability in other datasets such as halueval.

- MATH Dataset DMCA Debacle: The Hendrycks MATH dataset went dark due to a DMCA takedown, as noted in this Hugging Face discussion, sparking legal and logistical concerns.

- Members traced the original questions to AOPS, reiterating that the puzzle-like content was attributed from the start, highlighting friction on dataset licensure.

- Anthropic & Pythia Circuits Exposed: Several references to Anthropic’s circuit analysis explored how sub-networks form at consistent training stages across different Pythia models, as discussed in this paper.

- Participants noted that these emergent structures do not strictly align with simpler dev-loss vs. compute plots, underscoring the nuanced evolution of internal architectures.

Latent Space Discord

- Cursor AI Scores Big Bucks: They raised a new Series B co-led by a16z to support the coding platform’s next phase, as shown in this announcement.

- Community chatter highlights Cursor AI as a key client for Anthropic, fueling talk on usage-based pricing.

- Transformer² Adapts Like an Octopus: The new paper from Sakana AI Labs introduces dynamic weight adjustments, bridging pre- and post-training.

- Enthusiasts compare it to how an octopus blends with its surroundings, highlighting self-improvement potential for specialized tasks.

- OpenBMB MiniCPM-o 2.6 Takes On Multimodality: The release of MiniCPM-o 2.6 showcases an 8B-parameter model spanning vision, speech, and language tasks on edge devices.

- Preliminary tests praise its bilingual speech performance and cross-platform integration, stirring optimism about real-world usage.

- Curator: Synthetic Data on Demand: The new Curator library offers an open-source approach to generating training and evaluation data for LLMs and RAG workflows.

- Engineers anticipate fillable gaps in post-training data pipelines, with more features planned for robust coverage.

- NVIDIA Cosmos Debuts at CES: At the LLM Paper Club, NVIDIA Cosmos was presented to highlight its capabilities following a CES launch.

- Attendees were urged to register and add the session to their calendar, preventing anyone from missing this new model’s reveal.

GPU MODE Discord

- Triton & Torch: Dancing Dependencies: A user found Triton depends on Torch, complicating a pure CUDA workflow and prompting questions about a cuBLAS equivalent (docs).

- Another user hit a ValueError from mismatched pointer types, concluding pointers in

tl.loadmust be float scalars.

- Another user hit a ValueError from mismatched pointer types, concluding pointers in

- RTX 50x TMA Whispers: Rumors persist that RTX 50x Blackwell cards may inherit TMA from Hopper, but no details are confirmed.

- Community members remain frustrated until the whitepaper drops, keeping TMA buzz alive.

- MiniMax-01 Rocks 4M-Token Context: The MiniMax-01 open-source models introduce Lightning Attention for handling up to 4M tokens with big performance leaps.

- APIs cost $0.2/million input tokens and $1.1/million output tokens, as detailed in their paper and news page.

- Thunder Compute: Storm of Cheap A100s: Thunder Compute debuted with A100 instances at $0.92/hr plus $20/month free, showcased on their website.

- Backed by Y Combinator alumni, they feature a CLI (

pip install tnr) for fast instance management.

- Backed by Y Combinator alumni, they feature a CLI (

- GPU Usage Tips and Debugging Tales: Engineers stressed weight decay for bfloat16 training (Fig 8) and discussed batching

.to(device)calls in Torch to cut CPU overhead.- They also tackled multi-GPU inference strategies, MPS kernel profiling quirks, and specialized GPU decorators for popcorn bot, referencing deviceQuery info.

LlamaIndex Discord

- RAG Rush with LlamaParse: Using LlamaParse, LlamaCloud, and AWS Bedrock, the group built a RAG application that focuses on parsing SEC documents efficiently.

- Their step-by-step guide outlines advanced indexing tactics to handle large docs while emphasizing the strong synergy between these platforms.

- Knowledge Graph Gains with LlamaIndex: Tomaz Bratanic of @neo4j presented an approach to boost knowledge graph accuracy using agentic strategies with LlamaIndex in his thorough post.

- He moved from a naive text2cypher model to a robust agentic workflow, improving performance with well-planned error handling.

- LlamaIndex & Vellum AI Link Up: The LlamaIndex team announced a partnership with Vellum AI, sharing use-case findings from their survey here.

- This collaboration aims to expand their user community and explore new strategies for RAG-powered solutions.

- XHTML to PDF Puzzle Solved with Chromium: One member noted Chromium excels at converting XHTML to PDF, outperforming libraries like pandoc, wkhtmltopdf, and weasyprint.

- They shared an example XHTML doc and an example HTML doc, highlighting promising rendering fidelity.

- Vector Database Crossroads at Scale: Users debated switching from Pinecone to either pgvector or Azure AI search to manage 20k documents with better cost efficiency.

- They referenced LlamaIndex's Vector Store Options to gauge integration with Azure and stressed the need for a strong production workflow.

OpenInterpreter Discord

- OpenInterpreter 1.0's Command Conundrum: OpenInterpreter 1.0 restricts direct code execution, shifting tasks to command-line usage and raising alarm about losing user-friendly features.

- Community members bemoaned the departure from immediate Python execution, stating the new approach "feels slower" and "requires more manual steps."

- Bora's Law Breaks Compute Conventions: A new working paper, Bora's Law: Intelligence Scales With Constraints, Not Compute, suggests exponential growth in intelligence driven by constraints instead of compute.

- Attendees stressed that this theory challenges large-scale modeling strategies like GPT-4, questioning the heavier reliance on raw hardware resources.

- Python Power Moves in OI: Enthusiasts urged the addition of Python convenience functions for streamlining tasks in OpenInterpreter.

- They argued these enhancements could "boost user efficiency" while preserving the platform's interactive style.

- AGI Approaches Under Fire: One corner of the community criticized OpenAI for overly focusing on brute-force compute, ignoring more subtle intelligence boosters.

- Members called for reevaluating AI development principles in light of creative theories like Bora's Law, highlighting the need to refine large model scaling.

LAION Discord

- DougDoug’s Dramatic Dive into AI Copyright: In this YouTube video, DougDoug shared a thorough explanation of AI copyright law, centering on potential intersections between tech and legal structures.

- This perspective sparked a lively exchange, with participants praising his attention to emerging legal blind spots and speculating on possible creator compensation models.

- Hyper-Explainable Networks Spark Royalties Rethink: A proposal for hyper-explainable networks introduced the idea of gauging training data’s influence on model outputs, potentially directing royalties to data providers.

- Opinions varied between excitement at the potential for data-driven compensation and skepticism about the overhead in implementing such a system.

- Inference-Time Credit Assignment Gains Ground: A related conversation around inference-time credit assignment floated the possibility of using it to trace each dataset chunk’s impact on a model’s results.

- While some see promise in recognizing data contributors, others point out the major complexity in quantifying these influences.

AI21 Labs (Jamba) Discord

- P2B Propositions Prompt a Crypto Clash: A representative from P2B offered services for fundraising, listing, community support, and liquidity management, hoping to engage AI21 in their crypto vision.

- They asked to share more details about these offerings, but the conversation shifted once AI21 Labs made its stance on crypto clear.

- AI21 Labs Locks Out Crypto Initiatives: AI21 Labs firmly refused to associate with crypto-based efforts, stating they will never pursue related projects.

- They also warned that repeated crypto references would trigger swift bans, underlining their no-tolerance position.

MLOps @Chipro Discord

- AI Agents Ascend in 2025: Sakshi & Satvik will host Build Your First AI Agent of 2025 on Thursday, Jan 16, 9 PM IST, featuring both code and no-code methods from Build Fast with AI and Lyzr AI.

- The workshop highlights predictions that AI Agents will transform varied industries by 2025, broadening access for newcomers and engineers alike.

- Budget Battles Over AI Adoption: A community voice emphasized cost as a critical factor in deciding whether to adopt new solutions or keep existing systems.

- Many remain cautious, indicating a preference to retain proven infrastructure over riskier, more expensive installations.

Nomic.ai (GPT4All) Discord

- Qwen 2.5 Fine-Tuning Curiosity: A user asked for Qwen 2.5 Coder Instruct (7B) fine-tuning details, wondering if it was released on Hugging Face and citing curiosity about larger models.

- They also sought success stories on proven models from others, emphasizing performance in real-world scenarios.

- Llama 3.2 Fumbles With Long Scripts: A user ran into errors analyzing a 45-page TV pilot script with Llama 3.2 3B, expecting it to handle the text without character limit trouble.

- They shared a comparison link showing distinctions in token capacities and recent releases for Llama 3.2 3B and Llama 3 8B Instruct.

DSPy Discord

- Push for Standardizing Ambient Agent Implementation: A user in #general asked about building an ambient agent with DSPy, seeking experiences and a standardized approach from anyone who has tried it.

- They highlighted the potential synergy of ambient agents with DSPy workflows, inviting collaborative input for a more structured solution.

- Growing Curiosity for DSPy Examples: Another inquiry emerged about specific DSPy examples for implementing ambient agents, emphasizing the community’s hunger for concrete code references.

- No direct examples were provided yet, but the community expressed eagerness for shared demos or open-source materials to bolster DSPy’s practical usage.

The tinygrad (George Hotz) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Agents (Berkeley MOOC) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Axolotl AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!