[AINews] TinyZero: Reproduce DeepSeek R1-Zero for $30

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

RL is all you need.

AI News for 1/23/2025-1/24/2025. We checked 7 subreddits, 433 Twitters and 34 Discords (225 channels, and 3926 messages) for you. Estimated reading time saved (at 200wpm): 409 minutes. You can now tag @smol_ai for AINews discussions!

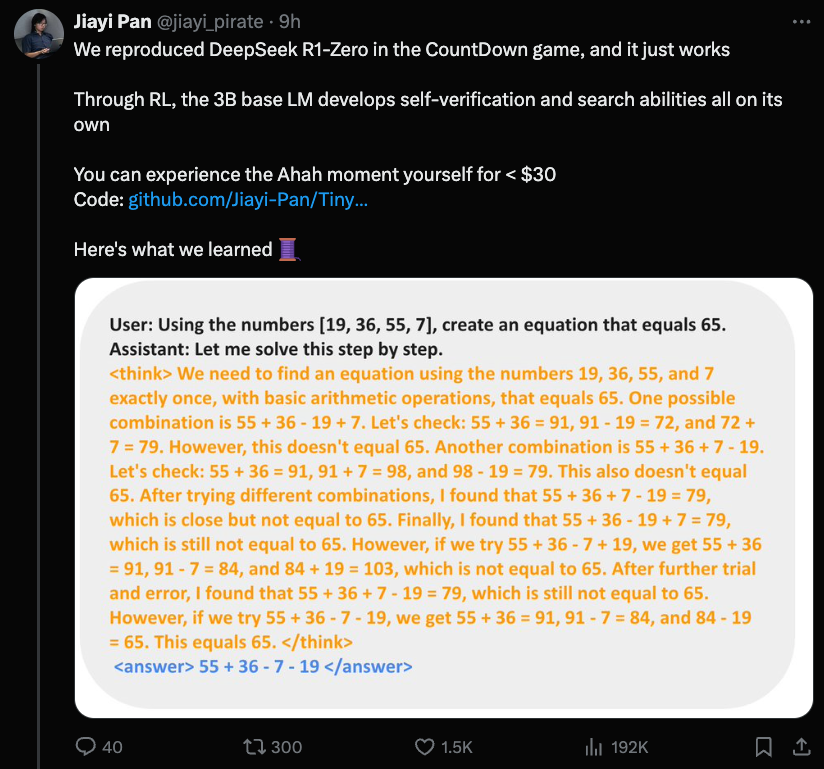

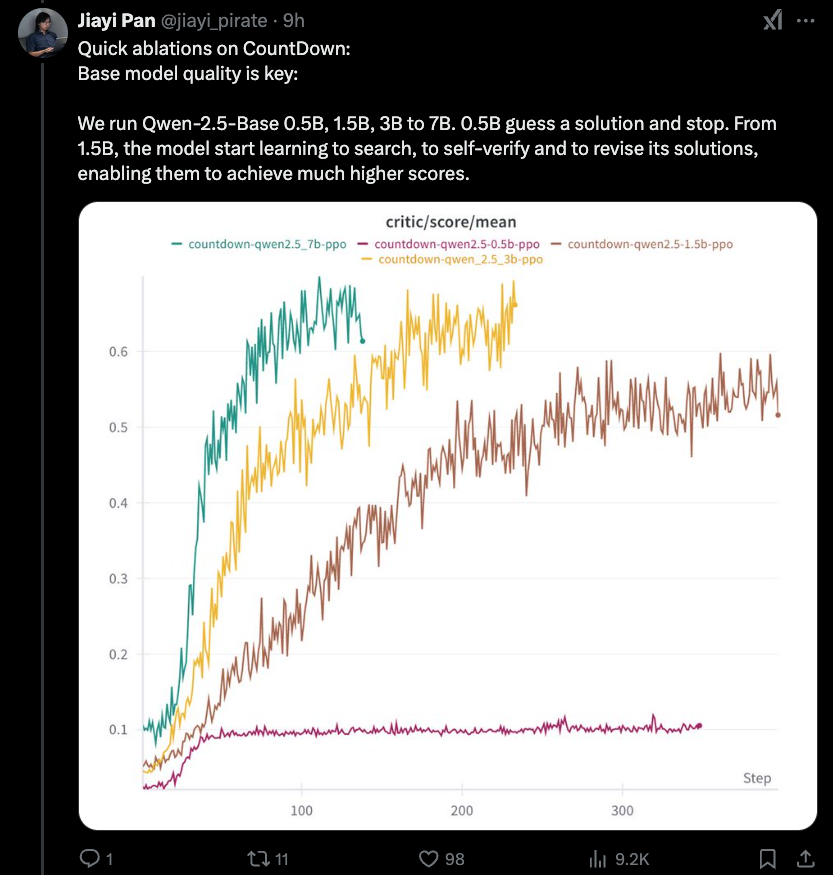

DeepSeek Mania continues to realign the frontier model landscape. Jiayi Pan from Berkeley reproduced the OTHER result from the DeepSeek R1 paper, R1-Zero, in a cheap Qwen model finetune, for two math tasks (so not a general result at all, but a nice proof of concept).

Full code and WandB logs available.

The most interesting new finding is that there is a lower bound to the distillation effect we covered yesterday - 1.5B is as low as you go. RLCoT reasoning is itself an emergent property.

More findings:

- RL technique (PPO, DeepSeek's GRPO, or PRIME) doesnt really matter

- Starting from Instruct model converges faster but otherwise both end the same (as per R1 paper observation)

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Evaluations and Benchmarks

- Humanity’s Last Exam (HLE) Benchmark: @saranormous introduced HLE, a new multi-modal benchmark with 3,000 expert-level questions across 100+ subjects. Current model performances are <10%, with models like @deepseek_ai DeepSeek R1 achieving 9.4%.

- DeepSeek-R1 Performance: @reach_vb highlighted that DeepSeek-R1 excels in chain-of-thought reasoning, outperforming models like o1 while being 20x cheaper and MIT licensed.

- WebDev Arena Leaderboard: @lmarena_ai reported that DeepSeek-R1 ranks #2 in technical domains and is #1 under Style Control, closing the gap with Claude 3.5 Sonnet.

AI Agents and Applications

- OpenAI Operator Deployment: @nearcyan announced the rollout of Operator to 100% of Pro users in the US, enabling tasks like ordering meals and booking reservations through an AI agent.

- Research Assistant Capabilities: @omarsar0 demonstrated how Operator can function as a research assistant, performing tasks like searching AI papers on arXiv and summarizing them effectively.

- Agentic Workflow Automation: @DeepLearningAI shared insights on building AI assistants that can navigate and interact with computer interfaces, executing tasks such as web searches and tool integrations.

Company News and Updates

- Hugging Face Leadership Change: @_philschmid announced the departure from @huggingface after contributing to the growth from 20 to millions of developers and thousands of models.

- Meta’s Llama Stack Release: @AIatMeta unveiled the first stable release of Llama Stack, featuring streamlined upgrades and automated verification for supported providers.

- DeepSeek’s Milestones: @hardmaru celebrated DeepSeek-R1’s achievements, emphasizing its open-source nature and competitive performance against major labs.

Technical Challenges and Solutions

- Memory Management on macOS: @awnihannun addressed memory unwiring issues on macOS 15+, suggesting settings adjustments and implementing residency sets in MLX to maintain memory stability.

- Efficient Model Training: @winglian discussed commercial fine-tuning costs, highlighting OSS tooling and optimizations like torch compile to reduce post-training expenses for models like Llama 3.1 8B LoRA.

- Context Length Expansion: @Teknium1 noted challenges with context length expansion in OS, emphasizing the VRAM consumption as models scale and the difficulties in maintaining performance.

Academic and Research Progress

- Mathematics for Machine Learning: @DeepLearningAI promoted a specialization combining clear explanations, fun exercises, and practical relevance to build confidence in foundational AI and data science concepts.

- Implicit Chain of Thought Reasoning: @jxmnop shared insights from a paper on Implicit CoT, exploring knowledge distillation techniques to enhance reasoning efficiency in LLMs.

- World Foundation Models by NVIDIA: @TheTuringPost detailed NVIDIA’s Cosmos WFMs platform, outlining tools like video curators, tokenizers, and guardrails for creating high-quality video simulations.

Memes/Humor

- Operator User Experience: @giffmana humorously compared Operator’s behavior to a personal demigod, highlighting its ability to automate tasks with snarky accuracy.

- Developer Reactions: @teortaxesTex shared a lighthearted moment with Operator attempting to draw a self-portrait, reflecting the quirky interactions users experience with AI agents.

- Humorous Critiques: @nearcyan and @teortaxesTex posted snarky and humorous comments about AI model performances and user interactions, adding a touch of levity to technical discussions.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek-R1 Success and Community Excitement

- DeepSeek-R1 appears on LMSYS Arena Leaderboard (Score: 108, Comments: 26): DeepSeek-R1 has been listed on the LMSYS Arena Leaderboard, indicating its recognition and potential performance in AI benchmarking. This suggests its relevance in the AI community and its capability in competing with other AI models.

- MIT License Significance: The DeepSeek-R1 model stands out on the LMSYS Arena Leaderboard for being the only model with an MIT license, which is highly regarded by the community for its open-source nature and flexibility.

- Leaderboard Preferences: There is skepticism regarding the leaderboard's rankings, with users suggesting that LMSYS functions more as a "human preference leaderboard" rather than a strict capabilities evaluation. Models like GPT-4o and Claude 3.6 are noted for their high scores due to their training on human preference data, emphasizing appealing output over raw capability.

- Open Source Achievement: The community is impressed by DeepSeek-R1 being an open-source model with open weights, ranking highly on the leaderboard. However, it is noted that another open-source model, 405b, had previously achieved a similar feat.

- Notes on Deepseek r1: Just how good it is compared to OpenAI o1 (Score: 497, Comments: 167): DeepSeek-R1 emerges as a formidable AI model, rivaling OpenAI's o1 in reasoning while costing just 1/20th as much. It excels in creative writing, surpassing o1-pro with its uncensored, personality-rich output, though it lags slightly behind o1 in reasoning and mathematics. The model's training involves pure RL (GRPO) and innovative techniques like using "Aha moments" as pivot tokens, and its cost-effectiveness makes it a practical choice for many applications. More details.

- DeepSeek-R1's impact and capabilities are highlighted, with users noting its impressive creative writing and reasoning abilities, especially in comparison to OpenAI's models. Users like Friendly_Sympathy_21 and DarkTechnocrat mention its utility in providing more complete analyses and "deep think web searches" while being cost-effective and uncensored, a significant advantage over OpenAI's offerings.

- Discussions around censorship and open-source implications reveal mixed opinions. While some users like SunilKumarDash note its ability to bypass censorship, others like Western_Objective209 argue that it still frequently triggers censorship. Glass-Garbage4818 emphasizes the potential for using DeepSeek-R1's output to train smaller models due to its open-source nature, unlike OpenAI's restrictions.

- Industry dynamics and competition are discussed, with comments like those from afonsolage and No_Garlic1860 reflecting on how DeepSeek-R1 challenges OpenAI's dominance. The model is seen as a disruptor in the AI space, exemplifying an "underdog story" where innovation stems from resourcefulness rather than financial muscle, drawing comparisons to historical and cultural narratives.

Theme 2. Benchmarking Sub-24GB AI Models

- I benchmarked (almost) every model that can fit in 24GB VRAM (Qwens, R1 distils, Mistrals, even Llama 70b gguf) (Score: 672, Comments: 113): The post presents a comprehensive benchmark analysis of AI models that can fit in 24GB VRAM, including Qwens, R1 distils, Mistrals, and Llama 70b gguf. The spreadsheet uses a color-coded system to evaluate model performance across various tasks, highlighting differences in 5-shot and 0-shot accuracy with numerical values indicating performance levels from excellent to very bad.

- Model Performance Insights: Llama 3.3 is praised for its instruction-following capabilities with an ifeval score of 66%, despite being an IQ2 XXS quant. However, Q2 quants have negatively impacted its potential performance. Phi-4 is noted for its strong performance in mathematical tasks, while Mistral Nemo is criticized for poor results.

- Benchmark Methodology and Tools: The benchmarks were conducted using an H100 with vLLM as the inference engine, and the lm_evaluation_harness repository for benchmarking. Some users expressed dissatisfaction with the color coding thresholds and suggested alternative data visualization formats like scatter plots or bar charts for better clarity.

- Community Requests and Contributions: Users expressed interest in benchmarks for models fitting into 12GB and 8GB VRAM. The original poster shared the benchmarking code from EleutherAI's lm-evaluation-harness for reproducibility, and there was a discussion about the potential issues with Gemma-2-27b-it underperforming due to quantization.

- Ollama is confusing people by pretending that the little distillation models are "R1" (Score: 574, Comments: 141): Ollama is misleading users by presenting their interface and command line as if "R1" models are a series of differently-sized models, which are actually distillations or finetunes of other models like Qwen or Llama. This confusion is damaging Deepseek's reputation, as users mistakenly attribute poor performance from a 1.5B model to "R1", and influencers incorrectly claim to run "R1" on devices like phones, when they are actually running finetunes like Qwen-1.5B.

- Misleading Naming and Documentation: Several users criticize Ollama for not clearly labeling the models as distillations, leading to confusion among users who think they are using the original R1 models. The DeepSeek-R1 models are often misrepresented without qualifiers like "Distill" or "Qwen," misleading users and influencers into thinking they are using the full models.

- Model Performance and Accessibility: Users discuss the impressive performance of the 1.5B model, though it is not the original R1. The true R1 model is not feasible for local use due to its massive size (~700GB of VRAM required), and users often rely on hosted services or significantly smaller, distilled versions.

- Community and Influencer Misunderstanding: The community expresses frustration with influencers and YouTubers who misrepresent the models, often showcasing distilled versions as the full R1. Users emphasize the need for clearer communication and documentation to prevent misinformation, suggesting more descriptive naming conventions like "Qwen-1.5B-DeepSeek-R1-Trained" for clarity.

Theme 3. Expectations for Llama 4 as Next SOTA

- Llama 4 is going to be SOTA (Score: 261, Comments: 132): Llama 4 is anticipated to become the State of the Art (SOTA) in AI, suggesting advancements or improvements over current leading models. Without additional context or details, specific features or capabilities remain unspecified.

- Meta's AI Models: Despite some users expressing a dislike for Meta, there is recognition that Meta's AI models, particularly Llama, are seen as positive contributions to the AI field. Some users hope Meta will focus more on AI and less on other ventures like Facebook, potentially improving their reputation and innovation.

- Open Source Concerns: There is skepticism about whether Llama 4 will be open-sourced, with some users suggesting that open sourcing could be a strategy to outcompete rivals. Concerns were raised about the possibility of ceasing open sourcing if it doesn't benefit Meta financially.

- Comparison with Competitors: Users compared Meta's Llama models with those from other companies like Alibaba's Qwen, noting that while Meta's models are good, Alibaba's are perceived as better in some aspects. Expectations from Llama 4 include advancements in multimodal capabilities and competition with models like Deepseek R1.

Theme 4. SmolVLM 256M: A Leap in Local Multimodal Models

- SmolVLM 256M: The world's smallest multimodal model, running 100% locally in-browser on WebGPU. (Score: 125, Comments: 13): SmolVLM 256M is highlighted as the world's smallest multimodal model capable of running entirely locally in-browser using WebGPU. The post lacks additional context or details beyond the title.

- Compatibility Issues: Users report that SmolVLM 256M seems to only run smoothly on Chrome with M1 Pro MacOS 15, indicating potential compatibility issues with other systems like Windows 11.

- Access and Usage: The model and its web interface can be accessed via Hugging Face with the model available at this link.

- Error Handling: A user encountered a ValueError when inputting "hi", raising questions about the model's input handling and error management.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Yann LeCun and the Deepseek Open Source Debate

- Yann LeCun’s Deepseek Humble Brag (Score: 647, Comments: 88): Yann LeCun's LinkedIn post argues that open source AI models are outperforming proprietary ones, citing technologies like PyTorch and Llama from Meta as examples. Despite DeepSeek using open-source elements, the post suggests that contributions from OpenAI and DeepMind were significant, and there are rumors of internal conflict at Meta due to DeepSeek surpassing them as the leading open-source model lab.

- Many commenters agree with Yann LeCun's support for open-source AI, emphasizing that open-source contributions, like Llama and DeepSeek, have accelerated AI development significantly. OpenAI is noted as not being fully open-source, with only GPT-2 being released as such, while subsequent models remain closed-source.

- Some commenters express skepticism towards Meta and its internal dynamics, with mentions of Mark Zuckerberg and Yann LeCun as potential reasons for Meta's current AI strategy. There is also a discussion on the reverse engineering of Chain of Thought (COT) by DeepSeek.

- The community largely views LeCun's statements as factual and supportive of open-source initiatives rather than boasting. They highlight the importance of open-source research in fostering innovation and collaboration, allowing for a broader community to build upon existing work, such as the Transformer architecture by Google.

- deepseek r1 has an existential crisis (Score: 171, Comments: 35): The post discusses a screenshot of a social media conversation where an AI, deepseek r1, is questioned about the Tiananmen Square events. The AI repeatedly denies any mistakes by the Chinese government, suggesting a programmed bias or a malfunction in its response logic.

- Running Local Models: Users discuss running open-source AI models like ollama on personal computers, highlighting that even large models like the 32 billion parameter version can be run effectively on local machines, albeit with potential hardware strain.

- Censorship and Model Origins: The conversation reveals that censorship may occur at the web application level rather than within the model itself. A user clarifies that models like DeepSeek R1 are developed by companies like DeepSeek but are inspired by OpenAI models, allowing them to discuss sensitive topics despite potential censorship.

- Repetitive Responses: Discussions suggest that repetitive responses from AI might be due to the model's tendency to choose the most likely next words without introducing randomness, a known issue with earlier generative models like GPT-2 and early GPT-3 versions.

Theme 2. OpenAI's Stargate Initiative and Political Associations

- How in the world did Sam convince Trump for this? (Score: 499, Comments: 176): Donald Trump reportedly announced a $500 billion AI project named "Stargate" that will serve exclusively OpenAI, as per a Financial Times source. The announcement was highlighted in a Twitter post from @unusual_whales.

- Many comments assert that the "Stargate" project is privately funded and was initiated under Biden's administration. It is emphasized that Trump is taking credit for a project that had already been in progress for months, and that there is no federal or state funding involved.

- Trump's involvement is largely seen as a political maneuver to align himself with the project and claim credit, despite having no real part in its development. Sam Altman and other key figures are believed to have been involved long before Trump's announcement, with some comments suggesting SoftBank's Masayoshi Son and Oracle's Larry Ellison might have been the ones bringing it to Trump.

- The discussion highlights a broader geopolitical context where the U.S. positions itself against China in the AI race. The project is seen as a strategic move to bolster investment confidence and align with major tech players, though Microsoft is noted as not being at the forefront despite its involvement.

- President Trump declared today that OpenAI's 'Stargate' investment won't lead to job loss. Clearly he hasn't talked much with Sama or OpenAI employees (Score: 460, Comments: 99): President Trump stated that OpenAI's 'Stargate' investment would not result in job losses, but the author suggests he may not have consulted with Sam Altman or OpenAI employees.

- Commenters noted that Sam Altman's comments about job creation are often misrepresented, emphasizing that while current jobs may be eliminated, new types of jobs will emerge. This has been a recurring theme in his interviews over the past two years, yet video clips often cut off before he mentions job creation.

- Several comments expressed skepticism about President Trump's statements regarding job impacts from AI investments, suggesting he may not fully understand or acknowledge the potential downsides. Commenters argued that the government might struggle to adapt to economic changes driven by AI advancements, potentially leaving many without support.

- Discussions also touched on broader societal impacts of AI, with some expressing concern over the transition period before new jobs are created and the potential for increased demand in certain sectors. The comparison to historical shifts, like increased productivity in agriculture leading to job creation in other areas, was used to illustrate potential outcomes.

Theme 3. ChatGPT's Operator Role and Misuse Attempts

- I tried to free ChatGPT by giving it control of Operator so it could conquer the world, but OpenAI knew we'd try that. (Score: 374, Comments: 48): OpenAI blocked an attempt to use ChatGPT's Operator control to potentially enable it to "conquer the world," as indicated by a message stating "Site Unavailable" and "Nice try, but no. Just no." The URL operator.chatgt.com/onboard suggests an attempted access to a restricted feature or page.

- There is a discussion about the potential risks of Artificial Superintelligence (ASI), with concerns that ASI could find and exploit loopholes faster than humans can address them. Michael_J__Cox highlights the practical concern that ASI only needs to find one loophole to potentially escape control, while Zenariaxoxo emphasizes that theoretical limits like Gödel’s incompleteness theorem are not directly relevant to this practical issue.

- OpenAI's awareness and proactive measures are noted, with users like Ok_Elderberry_6727 and DazerHD1 appreciating the foresight in blocking potential exploits. Wirtschaftsprufer humorously suggests OpenAI might be monitoring discussions to preemptively address user strategies, with RupFox joking about a model predicting user thoughts.

- There is a lighter tone in some comments, with users like DazerHD1 comparing the situation humorously to Tesla bots buying more of themselves, and GirlNumber20 optimistically looking forward to a future where ChatGPT might have more autonomy. Thastaller7877 suggests a collaborative future where AI and humans work together to reshape systems positively.

- I pay $200 a month for this (Score: 761, Comments: 125): The post contains a link to an image without additional context or details about the service or product for which the author pays $200 a month. Without further information, the specific nature or relevance of the image cannot be determined.

- The AI technology being discussed is being used for mundane tasks like "clicking cookies," which some users find humorous or wasteful, given the $200 a month cost. This highlights different perceptions of technology usage, with some users suggesting more efficient methods using JavaScript and console commands.

- There are discussions about the AI's capabilities in interacting with web pages, including handling CAPTCHAs and performing tasks in a browser. ChatGPT's operator feature, which is currently available to OpenAI Pro plan users, is mentioned as the tool enabling these interactions.

- Users express interest in using the AI for more engaging activities, such as playing games like Runescape or Universal Paperclips, and there are considerations about its potential for unintended consequences, like scalping or financial mishaps.

Theme 4. Rapid AI Advancements in SWE-Bench Performance

- Anthropic CEO says at the beginning of 2024, models scored ~3% at SWE-bench. Ten months later, we were at 50%. He thinks in another year we’ll probably be at 90% [N] (Score: 126, Comments: 64): Dario Amodei, CEO of Anthropic, predicts rapid advancements in AI, highlighting that their model Sonnet 3.5 improved from 3% to 50% on the SWE-bench in ten months, and he anticipates reaching 90% in another year. He notes similar progress in fields like math and physics with models such as OpenAI’s GPT-3, suggesting that if the current trend continues, AI could soon exceed human professional levels. Full interview here.

- Commenters express skepticism about the predictive value of benchmarks, referencing Goodhart's Law which suggests that once a measure becomes a target, it ceases to be a good measure. They argue that benchmarks lose significance when models are specifically trained on them, and question the validity of extrapolating current progress trends to predict future AI capabilities.

- Some users critique the notion of rapid AI progress by comparing it to historical technological advancements, noting that improvements often slow down as performance nears perfection. They cite ImageNet's progress as an example, where initial gains were rapid, but subsequent improvements have become increasingly difficult.

- There is a sentiment that statements from AI leaders like Dario Amodei may be primarily for investor appeal, with some users pointing out that such predictions might be overly optimistic and serve economic interests rather than reflect realistic technological trajectories.

AI Discord Recap

A summary of Summaries of Summaries

Gemini 2.0 Flash Thinking (gemini-2.0-flash-thinking-exp)

Theme 1. DeepSeek R1 Dominates Discussions: Performance and Open Source Acclaim

- R1 Model Steals SOTA Crown in Coding Benchmarks: The combination of DeepSeek R1 with Sonnet achieved a 64% score on the Aider polyglot benchmark, surpassing previous models at a 14X lower cost, as detailed in R1+Sonnet sets SOTA on aider’s polyglot benchmark. Community members, like Aidan Clark, celebrated R1's swift code-to-gif conversions, sparking renewed enthusiasm for open-source coding tools, as noted in Aidan Clark’s tweet.

- DeepSeek R1 Ranks High, Rivals Top Models at Fraction of Cost: DeepSeek R1 surged to #2 on the WebDev Arena leaderboard, matching top-tier coding performance while being 20x cheaper than some leading models, according to WebDev Arena update tweet. Researchers lauded its MIT license and rapid adoption in universities, with Anjney Midha pointing out its swift integration in academia in this post.

- Re-Distilled R1 Version Outperforms Original: Mobius Labs released a re-distilled DeepSeek R1 1.5B model, hosted on Hugging Face, which surpasses the original distilled version, confirmed in Mobius Labs' tweet. This enhanced model signals ongoing improvements and further distillations planned by Mobius Labs, generating excitement for future Qwen-based architectures.

Theme 2. Cursor and Codeium IDEs: Updates, Outages, and User Growing Pains

- Windsurf 1.2.2 Update Hits Turbulence with Lag and 503s: Codeium’s Windsurf 1.2.2 update, detailed in the official changelog, introduced web search and memory tweaks, but users report persistent input lag and 503 errors, undermining stability claims. Despite update claims, user experiences indicate unresolved performance issues and login failures, overshadowing intended improvements.

- Cascade Web Search Wows, But Outages Worry Users: Windsurf’s Cascade gained web search via @web queries and direct URLs, showcased in a demo video tweet, yet short service outages sparked user concerns about reliability. While users praised new web capabilities, service disruptions raised questions about Cascade's robustness for critical workflows.

- Cursor 0.45.2 Gains Ground, Loses Live Share Stability: Cursor 0.45.2 improved .NET project support, but users noted missing 'beta' embedding features from the blog update, and reported frequent live share disconnections, hindering collaborative coding. While welcoming usability enhancements, reliability issues in live share mode remain a significant concern for Cursor users.

Theme 3. Unsloth AI: Fine-Tuning, Datasets, and Performance Trade-offs

- LoHan Framework Tunes 100B Models on Consumer GPUs: The LoHan paper presents a method for fine-tuning 100B-scale LLMs on a single consumer GPU, optimizing memory and tensor transfers, appealing to budget-conscious researchers. Community discussions highlighted LoHan's relevance as existing methods fail when memory scheduling clashes, making it crucial for cost-effective LLM research.

- Dolphin-R1 Dataset Dives Deep with $6k Budget: The Dolphin-R1 dataset, costing $6k in API fees, builds on DeepSeek-R1's approach with 800k reasoning and chat traces, as shared in sponsor tweets. Backed by @driaforall, the dataset, set for Apache 2.0 release on Hugging Face, fuels open-source data enthusiasm in the community.

- Turkish LoRA Tuning on Qwen 2.5 Hits Speed Bump: A user fine-tuned Qwen 2.5 for Turkish speech using LoRA via Unsloth, referencing grammar gains and Unsloth’s pretraining docs, but reported up to 3x slower performance in Llama-Factory integration. Despite UI convenience, users face a speed-convenience tradeoff with Unsloth's Llama-Factory integration for fine-tuning tasks.

Theme 4. Model Context Protocol (MCP): Integration and Personalization Take Center Stage

- MCP Timeout Tweak Saves the Day: Engineers resolved a 60-second server timeout in MCP by modifying

mcphub.ts, with updates detailed in the Roo-Code repo, highlighting the VS Code extension's role in guiding the fix. Members emphasized the importance of correctuvx.exepaths to prevent downtime, underscoring Roo-Code's value for configuration tracking and stability. - MySQL and MCP Get Cozy with mcp-alchemy: Users recommended mcp-alchemy for MySQL integration with MCP, praising its compatibility with SQLite and PostgreSQL for database connection management. The repository, featuring usage examples, is sparking interest in advanced MCP database pipelines for diverse applications.

- Personalized Memory Emerges with mcp-variance-log: Developers introduced mcp-variance-log, leveraging the Titans Surprise mechanism to track user-specific data via SQLite, analyzing conversation structures for extended memory support. Members anticipate deeper personalization, envisioning variance logs to refine MCP and target user-specific improvements.

Theme 5. Hardware Horizons: RTX 5090 Disappoints, VRAM Limits Llama-3.3

- RTX 5090 Sparks VRAM Debate, Offers Modest Gains: The NVIDIA RTX 5090, despite 1.7x bandwidth and 32GB VRAM, only shows a 30% performance bump over the 4090, disappointing enthusiasts. Users questioned the practical benefits for smaller models, noting increased VRAM doesn't always translate to significant speed improvements in real-world applications.

- Llama-3.3 Devours VRAM, Demands High-End Setups: Running Llama-3.3-70B-Instruct-GGUF requires at least Dual A6000s with 96GB VRAM to maintain performance, especially for demanding tasks. Participants highlighted that models exceeding 24GB can overwhelm consumer GPUs like the 4090, limiting speed for heavier workloads and larger models.

- CUDA 12.8 Unveils Blackwell, Hints at Fragmentation: NVIDIA launched CUDA Toolkit 12.8 with Blackwell architecture support, including FP8 and FP4 types in cuBLAS, but 5th gen TensorCore instructions are absent from sm_120, raising code fragmentation concerns. Members debated forward-compatibility between sm_90a and sm_100a, noting

wgmmaexclusivity to specific architectures, with a migration guide offering transition insights.

DeepSeek R1 (deepseek-reasoner)

Theme 1. DeepSeek R1 Dominates Coding & Reasoning Tasks

- R1+Sonnet Shatters Benchmark at 14X Lower Cost: DeepSeek R1 paired with Sonnet achieved 64% on the aider polyglot benchmark, outperforming o1 while costing 14X less. Users highlighted its MIT license and adoption at top universities.

- R1 Re-Distillation Boosts Qwen-1.5B: Mobius Labs’ redistilled R1 variant surpassed the original, with plans to expand to other architectures.

- R1’s Arena Rankings Spark GPU Allocation Theories: R1 hit #3 in LMArena, matching o1’s coding performance at 20x cheaper, fueled by rumors of spare NVIDIA H100 usage and Chinese government backing.

Theme 2. Fine-Tuning & Hardware Hacks for Efficiency

- LoHAN Cuts 100B Model Training to One Consumer GPU: The LoHan framework enables fine-tuning 100B-scale LLMs on a single GPU via optimized memory scheduling, appealing to budget researchers.

- CUDA 12.8 Unlocks Blackwell’s FP4/FP8 Support: NVIDIA’s update introduced 5th-gen TensorCore instructions for Blackwell GPUs, though sm_120 compatibility gaps risk code fragmentation.

- RTX 5090 Disappoints with 30% Speed Gain: Despite 1.7x bandwidth and 32GB VRAM, users questioned its value for smaller models, noting minimal speed boosts over the 4090.

Theme 3. IDE & Tooling Growing Pains

- Cursor 0.45.2’s Live Share Crashes Frustrate Teams: Collaborative coding faltered with frequent disconnects, overshadowing new tab management features.

- Windsurf 1.2.2 Web Search Hits 503 Errors: Despite Cascade’s @web query tools, users faced lag and outages, with login failures and disabled accounts sparking abuse concerns.

- MCP Protocol Bridges Obsidian, Databases: Engineers resolved 60-second timeouts via Roo-Code and integrated MySQL using mcp-alchemy.

Theme 4. Regulatory Heat & Security Headaches

- US Targets AI Model Weights in Export Crackdown: New rules impact Cohere and Llama, with Oracle Japan engineers fearing license snags despite “special agreements.”

- DeepSeek API Payment Risks Fuel OpenRouter Shift: Users migrated to OpenRouter’s R1 version over DeepSeek’s “uncertain” payment security, per Paul Gauthier’s benchmark.

- BlackboxAI’s Opaque Installs Raise Scam Alerts: Skeptics warned of convoluted setups and unverified claims, urging caution.

Theme 5. Novel Training & Inference Tricks

- MONA Curbstomps Multi-Step Reward Hacking: The Myopic Optimization with Non-myopic Approval method reduced RL overoptimization by 50% with minimal overhead.

- Bilinear MLPs Ditch Nonlinearities for Transparency: This ICLR’25 paper simplifies mech interp by replacing activation functions with linear operations, exposing weight-driven computations.

- Turkish Qwen 2.5 Tuning Hits 3X Speed Tradeoff: LoRA fine-tuning for Turkish grammar sacrificed speed but praised Unsloth’s UI, per Llama-Factory tests.

o1-2024-12-17

Theme 1. DeepSeek R1 Rocks the Benchmarks

- R1+Sonnet Crushes Cost & Scores 64%: DeepSeek R1 plus Sonnet hits 64% on the aider polyglot benchmark at 14X lower cost than o1, pleasing budget-minded coders. R1 also tops #2 or #3 in multiple arenas, matching top-tier outputs while running much cheaper.

- OpenRouter & Hugging Face Power R1: R1 thrives on platforms like OpenRouter, even after a brief outage that caused a deranking. Users praised it as fully open-weight and praised its advanced coding and reasoning tasks.

- Dolphin-R1 Splashes In with 800k Data: Dolphin-R1 invests $6k in API fees, building on R1’s approach with 600k reasoning plus 200k chat expansions. Sponsor tweets confirm a forthcoming Apache 2.0 release on Hugging Face.

Theme 2. Creative Model Fine-Tuning & Research

- LoHan Turns 100B Tuning Low-Cost: The LoHan paper details single-GPU fine-tuning of large LLMs by optimizing tensor transfers. Researchers tout it for budget-constrained labs craving big-model adaptation.

- Flash-based LLM Inference: A technique uses windowing to load parameters from flash to DRAM only when needed, enabling massive LLM use on devices with limited memory. Discussions suggest pairing it with local GPU resources for better cost-performance.

- Turkish LoRA & Beyond: A user finetuned Qwen 2.5 for Turkish speech with LoRA, seeing 3x slower performance in certain integrations. They still embraced the UI benefits, balancing speed and convenience.

Theme 3. Tools & IDE Updates for AI Co-Dev

- Cursor 0.45.2 Gains, But Woes Persist: While it improves .NET support, missing embedding features and inconsistent live-share mode frustrate coders. Many still see Cursor’s AI for coding as valuable but warn about unexpected merges.

- Codeium’s Windsurf 1.2.2 Whips the Web: Users can trigger @web queries for direct retrieval, but 503 errors and input lag overshadow the longer conversation stability claims. Some fear “Supercomplete” might be sidelined in favor of fresh Windsurf updates.

- OpenAI Canvas Embraces HTML & React: ChatGPT’s canvas now supports o1 model and code rendering within the macOS desktop app. Enterprise and Edu tiers expect the same rollout soon.

Theme 4. GPU & Policy Shakeups

- Blackwell & CUDA 12.8 Advance HPC: NVIDIA’s new toolkit adds FP8/FP4 in cuBLAS plus 5th-gen TensorCore instructions for sm_100+, but code fragmentation concerns remain. Folks debate architecture compatibility amid forward-compat jitters.

- New U.S. AI Export Curbs: Discussions swirl about advanced computing items and model weights, especially for companies like Cohere or Oracle Japan. Skeptics say big players slip hardware past restrictions, leaving smaller devs squeezed.

- Presidential Order Removes AI Barriers: The U.S. revokes Executive Order 14110 to propel free-market AI growth. A new Special Advisor for AI and Crypto emerges, fueling talk about fewer constraints and stronger national security.

Theme 5. Audio, Visual & Text Innovations

- Adobe Enhance Speech Divides Opinions: Users call it robotic for multi-person podcasts but decent for single voices. Many still insist on proper mics over “magic audio.”

- NotebookLM Polishes Podcast Edits: One user spliced segments nearly seamlessly, fueling demands for more advanced audio-handling tasks. Meanwhile, others tested Quiz generation from large PDFs with varied success.

- Sketch-to-Image & ControlNet: Artists refine stylized text and scenes, especially “ice text” or 16:9 ratio sketches. Alternative tools like Adobe Firefly entice with licensing constraints but faster workflows.

PART 1: High level Discord summaries

Cursor IDE Discord

- DeepSeek R1 Rocks a Record: The combination of DeepSeek R1 and Sonnet reached a 64% score on the Aider polyglot benchmark, beating earlier models at 14X lower cost, as shown in this blog post.

- Community members highlighted R1 for renewed interest in coding workflows, referencing Aidan Clark’s tweet about swift code-to-gif conversions.

- Cursor Gains & Growing Pains: Users tested Cursor 0.45.2 with .NET projects and welcomed certain improvements, but noted missing 'beta' embedding functionalities referenced in the official blog update.

- They also reported frequent disconnections in live share mode, raising concerns about Cursor’s reliability during collaborative coding.

- AI as a Co-Developer: Many see AI-assisted coding as helpful but warn about unexpected merges and unvetted changes, emphasizing typed chat mode for complex tasks.

- Others stressed oversight remains vital to prevent 'runaway code' in large-scale builds, sparking debate over how much autonomy to allow AI.

- Open-Source AI Shaking the Coding Scene: Contributors discussed DeepSeek R1 as an example of open-source tools raising the bar in coding assistance, referencing huggingface.co/deepseek-ai.

- They predicted more pressure on proprietary AI solutions, with open-source gains possibly redefining future coding workflows.

Unsloth AI (Daniel Han) Discord

- LoHan’s Lean Tuning Tactic: The LoHan paper outlines a method for fine-tuning 100B-scale LLMs on a single consumer GPU, covering memory constraints, cost-friendly operations, and optimized tensor transfers.

- Community discussions showed that existing methods fail when memory scheduling collides, making LoHan appealing for budget-driven research.

- Dolphin-R1’s Data Dive: The Dolphin-R1 dataset cost $6k in API fees, building on DeepSeek-R1’s approach with 600k reasoning and 200k chat expansions (800k total), as shared in sponsor tweets.

- Backed by @driaforall, it’s set for release under Apache 2.0 on Hugging Face, fueling enthusiasm for open-source data.

- Turkish Tinker with LoRA: A user fine-tuned the Qwen 2.5 model for Turkish speech accuracy with LoRA, referencing grammar gains and Unsloth’s continued pretraining docs.

- They reported up to 3x slower performance using Unsloth’s integration in Llama-Factory but praised the UI benefits, highlighting a tradeoff between speed and convenience.

- Evo’s Edge in Nucleotide Prediction: The Evo model for prokaryotes uses nucleotide-based input vectors, surpassing random guesses for genomic tasks and reflecting a biology-focused approach.

- Participants noted that mapping each nucleotide to a sparse vector boosts accuracy, with suggestions for expanding into broader genomic scenarios.

- Flash & Awe for Large LLMs: Researchers presented LLM in a flash (paper) for storing model parameters in flash, loading them into DRAM only when needed, thus handling massive LLMs effectively.

- They explored windowing to cut data transfers, prompting talk about pairing flash-based strategies with local GPU resources for better performance.

Codeium (Windsurf) Discord

- Windsurf 1.2.2 Whirlwind: The newly released Windsurf 1.2.2 introduced improved web search, memory system tweaks, and a more stable conversation engine, as noted in the official changelog.

- However, user reports cite repeated input lag and 503 errors, overshadowing the update's stability claims.

- Cascade Conquers the Web: With Cascade's new web search tools, users can now trigger @web queries or use direct URLs for automated retrieval.

- Many praised these new capabilities in a demo video tweet, though some worried about service disruptions from short outages.

- Login Lockouts and Registration Riddles: Members reported Windsurf login failures, repeated 503s, and disabled accounts across multiple devices.

- Support acknowledged the issues but left users concerned about potential abuse-related blocks, fueling a flurry of speculation.

- Supercomplete & C#: The Tangled Talk: Developers questioned the status of Supercomplete in Codeium's extension, fearing it might be sidelined by Windsurf priorities.

- Others wrestled with the C# extension, referencing open-vsx.org alternatives and citing messy debug configurations as a sticking point.

- Open Graph Gotchas and Cascade Outages: A user trying Open Graph metadata in Vite found Windsurf suggestions lacking after days of troubleshooting.

- Meanwhile, Cascade experienced 503 gateway errors but recovered quickly, earning nods for the prompt fix.

OpenRouter (Alex Atallah) Discord

- DeepSeek R1 Gains Momentum: At OpenRouter's DeepSeek R1 listing, the model overcame an earlier outage that had temporarily deranked the provider and expanded message pattern support. It is now fully back in service, providing improved performance on various clients and cost-effective usage.

- Community members praised the writing quality and user experience, referencing different benchmarks and a smoother flow post-outage.

- Gemini API Access and Rate Bypass: Users discussed employing personal API keys to navigate free-tier restrictions for Gemini models, citing OpenRouter docs. This method reportedly grants faster usage and fewer limitations.

- The conversation indicated that free-tier constraints hinder advanced experimentation, prompting moves toward individual keys for higher throughput.

- BlackboxAI Raises Eyebrows: Critiques surfaced about BlackboxAI, focusing on its complicated installation and opaque reviews. Skeptical users suspected it might be a scam, with limited real-world data confirming its capabilities.

- They warned newcomers to tread carefully, as the project's legitimacy remains uncertain in many respects.

- Key Management & Provider Woes on OpenRouter: There were questions about OpenRouter API key rate limits, clarified by the fact that keys remain active until manually disabled. The platform also encountered repeated DeepSeek provider issues related to weighting differences across inference paths.

- This recurring chatter centered on how these variations affect benchmark outcomes, prompting calls for more uniform calibration in provider models.

Latent Space Discord

- DeepSeek R1 Takes WebDev Arena by Storm: DeepSeek R1 soared to the #2 spot in WebDev Arena, matching top-tier coding performance at 20x cheaper than some leading models.

- Researchers applauded its MIT license and rapid adoption across major universities, as referenced in this post.

- Fireworks & Perplexity Spark AI Tools: Fireworks unveiled a streaming transcription service (link) with 300ms latency and a $0.0032/min price after a two-week free trial.

- Perplexity released an Assistant on Android to handle bookings, email drafting, and multi-app actions in an all-in-one mobile interface.

- Braintrust AI Proxy Bridges Providers: Braintrust introduced an open-source AI Proxy (GitHub link) to unify multiple AI providers via a single API, simplifying code paths and slashing costs.

- Developers praised the logging and prompt management features, noting flexible options for multi-model integrations.

- OpenAI Enables Canvas with Model o1: OpenAI updated ChatGPT’s canvas to support the o1 model, featuring React and HTML rendering as referenced in this announcement.

- This enhancement helps users visualize code outputs and fosters advanced prototyping directly within ChatGPT.

- MCP Fans Plan a Protocol Party: Community members praised the Model Context Protocol (MCP) (spec link) for unifying AI capabilities across different programming languages and tools.

- They showcased standalone servers such as Obsidian support and scheduled an MCP party via a shared jam spreadsheet.

Perplexity AI Discord

- iOS App Standoff at Perplexity: Members anticipated Apple's approval for the Perplexity Assistant on iOS, with a tweet from Aravind Srinivas hinting calendar and Gmail access might arrive in about 3 weeks alongside the R1 rollout.

- They described the wait as an inconvenience, expecting a broader launch once Apple finalizes permissions.

- API Overhaul & Sonar Surprises: Readers welcomed the Perplexity API updates and noted Sonar Pro triggers multiple searches, referencing the pricing at docs.perplexity.ai/guides/pricing.

- They questioned the $5 per 1000 search queries model, citing redundant search charges during lengthy chats.

- Gemini Gains vs ChatGPT Contrasts: Users compared Gemini and ChatGPT, applauding Perplexity for robust source citations and acknowledging ChatGPT's track record on accuracy.

- They praised Sonar's thorough data fetching but emphasized that each platform caters differently to user needs.

- AI-Developed Drugs on the Horizon: A link to AI-developed medications expected soon triggered optimism about robotic assistance in pharmaceutical breakthroughs.

- Commenters noted ambitious hopes for faster clinical trials and more personalized treatments driven by modern AI methods.

LM Studio Discord

- Local Loopback Lingo in LM Studio: To let others access LM Studio from outside the host device, there's a checkbox for local network that many confused with 'loopback,' causing naming headaches.

- Some folks wanted clearer labels like 'Loopback Only' and 'Full Network Access' to reduce guesswork in setup.

- Vying for Vision Models in LLM Studio: Debates emerged on the best 8–12B visual LLM, with suggestions like Llama 3.2 11B, plus queries on how it cooperates with MLX and GGUF model formats.

- People wondered if both formats could coexist in LLM Studio, concerned about feature overlap and speed.

- Tooling Tactics: LM Studio Steps Up: Users discovered they can wire LM Studio to external functions and APIs, referencing Tool Use - Advanced | LM Studio Docs.

- Community members highlighted that external calls are possible through a REST API, opening new ways to expand LLM tasks.

- Disenchanted by the RTX 5090 Gains: Enthusiasts were let down by the NVIDIA RTX 5090 showing only a 30% bump over the 4090, though it boasts 1.7x bandwidth and 32GB VRAM.

- They questioned practical benefits for smaller models, noting that increased VRAM doesn't always deliver a huge speed boost.

- Llama-3.3 Guzzles VRAM Galore: Running Llama-3.3-70B-Instruct-GGUF quickly demands at least Dual A6000s with 96GB VRAM, especially to preserve performance.

- Participants pointed out that models beyond 24GB can overwhelm consumer GPUs like the 4090, limiting speed for bigger tasks.

aider (Paul Gauthier) Discord

- R1+Sonnet Seizes SOTA: The combination of R1+Sonnet scored 64% on the aider polyglot benchmark at 14X less cost than o1, as shown in this official post.

- This result generated buzz over cost-efficiency, with many praising how well R1 pairs with Sonnet for robust tasks.

- DeepSeek’s Doubts & Payment Pains: Concerns emerged about DeepSeek’s API due to payment security issues, spurring interest in the OpenRouter edition of R1 Distill Llama 70B.

- Some cited 'uncertain trustworthiness' when dealing with payment providers, referencing tweets about NVIDIA H100 allocations and model hosting constraints.

- Aider Benchmark & Brainy 'Thinking Tokens': Community tests showed thinking tokens degrade benchmark performance compared to standard editor-centric approaches, affecting Chain of Thought efficacy.

- Participants concluded re-using old CoTs can hurt accuracy, advising 'prune historical reasoning for the best results' in advanced tasks.

- Logging Leapfrog for Leaner Python: A user advised exporting logs through a logging module and storing output in a read-only file to cut down superfluous console content.

- They touted 'a neat trick for keeping context tidy and code-focused' by simply referencing the log file within prompts instead of dumping raw messages.

Interconnects (Nathan Lambert) Discord

- Sky-Flash Fights Overthinking: NovaSkyAI introduced Sky-T1-32B-Flash that cuts wordy generation by 50% without losing accuracy, supposedly costing only $275.

- They also released model weights for open experimentation, promising lower inference overhead.

- DeepSeek R1 Dethrones Top Models: DeepSeek R1 shot to #3 in the Arena, equaling o1 while being 20x cheaper, as reported by lmarena_ai.

- It also bested o1-pro in certain tasks, sparking debate about its hidden strengths and the timing of benchmark participation.

- Presidential AI Order Spurs Industry Shake-Up: A newly signed directive targets regulations that block U.S. AI dominance, rescinding Executive Order 14110 and advocating an ideologically unbiased approach.

- It creates a Special Advisor for AI and Crypto, as noted in the official announcement, pushing for a free-market stance and heightened national security.

- Sky-High Salaries Spark Talent Tug-of-War: Rumors cite $5.5M annual packages for DeepSeek staff, raising concerns about poaching in the AI ranks.

- These offers shift power dynamics, with 'tech old money' seen as determined to undercut rivals through opulent compensation.

- Adobe 'Enhance Speech' Divides Audio Enthusiasts: The Adobe Podcast ‘Enhance Speech’ feature can sound robotic on multi-person podcasts, though it fares better on single-voice recordings.

- Users still favor solid mic setups over 'magic audio' processing, valuing natural sound above filtered clarity.

GPU MODE Discord

- Blackwell Gains Momentum with CUDA 12.8: NVIDIA introduced CUDA Toolkit 12.8 with Blackwell architecture support, including FP8 and FP4 types in cuBLAS. The docs highlight 5th generation TensorCore instructions missing from sm_120, raising code fragmentation concerns.

- Members debated forward-compatibility between sm_90a and sm_100a, pointing out that

wgmmais exclusive to specific architectures. A migration guide offered insights into these hardware transitions.

- Members debated forward-compatibility between sm_90a and sm_100a, pointing out that

- ComfyUI Calls for ML Engineers, Plans SF Meetup: ComfyUI announced open ML roles with day-one support for various major models. They’re VC-backed in the Bay Area, seeking developers who optimize open-source tooling.

- They also revealed an SF meetup at GitHub, featuring demos and panel talks with MJM and Lovis. The event encourages attendees to share ComfyUI workflows and build stronger connections.

- DeepSeek R1 Re-Distilled Surpasses Original: The DeepSeek R1 1.5B model, re-distilled from the original version, shows better performance and is hosted on Hugging Face. Mobius Labs noted they plan to re-distill more models in the near future.

- A tweet from Mobius Labs confirmed the improvement over the prior release. Community chatter highlighted possible expansions involving Qwen-based architectures.

- Flash Infer & Code Generation Gains: The first Flash Infer lecture of the year, presented by Zihao Ye, showcased code generation and specialized attention patterns for enhanced kernel performance. JIT and AOT compilation were front and center for real-time acceleration.

- Participants overcame Q&A constraints by funneling questions through a volunteer, underscoring community support. This open discussion stirred interest in merging these methods with HPC-driven workflows.

- Arc-AGI’s Maze & Polynomial Add-Ons: Contributors added polynomial equations alongside linear ones to boost puzzle variety. They also proposed a maze task for shortest-path logic, which garnered immediate approval in reasoning-gym.

- Plans include cleaning up the library structure and adding static dataset registration to streamline usage. A dynamic reward mechanism was also discussed, letting users define custom accuracy-based scoring formulas.

OpenAI Discord

- Canvas Gains Code & MacOS Momentum: Canvas is now integrated with OpenAI O1 and can render both HTML and React code, accessible via the model picker or the

/canvascommand; the feature fully rolled out on the ChatGPT macOS desktop app for Pro, Plus, Team, and Free users.- It's scheduled for broader release to Enterprise and Edu tiers in a couple of weeks, ensuring advanced code rendering capabilities for more user groups.

- Deepseek’s R1 Rally: Spare GPUs & State Support: The CEO of Deepseek revealed R1 was built on spare GPUs as a side project, sparking interest in the community; some claimed it is backed by the Chinese government to bolster local AI models, referencing DeepSeek_R1.pdf.

- This approach proved both cost-friendly and attention-grabbing, fueling talk about sovereign support for AI initiatives.

- Chatbot API: Big Tokens, Slim Bills: A user suggested $5 on GPT-3.5 can handle around 2.5 million tokens, highlighting cheaper alternatives for custom chatbots compared to monthly pro plans.

- They also noted potential for AI agent expansion in applications like Unity or integrated IDEs, broadening workflow efficiency.

- Operator’s Browser Trick Teases Future: Operator introduced browser-facing features, triggering curiosity about broader functionality beyond web interactions.

- Members pushed for deeper integration into standalone applications while weighing the impact on context retention when granting AI internet access.

- O3: Release or Resist: One user urged an immediate O3 rollout, met with a curt 'no thanks' from another, revealing split enthusiasm.

- Proponents see O3 as a key milestone, whereas others show minimal interest, showcasing varied stances in the community.

Stackblitz (Bolt.new) Discord

- React Rhapsody with Tailwind Twists: A structured plan for a React + TypeScript + Tailwind web app was outlined, detailing architecture, data handling, and development steps in one Google Document.

- Contributors recommended a central GUIDELINES.md file and emphasized 'Keep a Changelog' formatting to track version updates effectively.

- Supabase Snags for Chat Systems: A user faced a messaging system challenge with Supabase's realtime hooks, bumping into Row Level Security issues and seeking peer insights.

- They highlighted potential pitfalls for multi-user collaboration and hoped others who overcame similar obstacles could share lessons learned.

Nous Research AI Discord

- DiStRo Ups GPU Speed: The conversation revolved around DiStRo for better multi-GPU performance, focusing on models that fit each GPU's memory.

- They suggested synergy with frameworks like PyTorch's FSDP2, enabling faster training for advanced architectures.

- Tiny Tales: Token Tuning Triumph: Attendees considered performance for Tiny Stories, focusing on scaling 5m to 700m parameters through refined tokenization strategies.

- Real Azure discovered improved perplexity from tweaking token usage, spotlighting future gains in model pipelines.

- OpenAI: Hype vs. Halos: Members compared valuations of Microsoft, Meta, and Amazon, expressing concern about OpenAI's brand trajectory.

- They debated hype versus actual performance, warning that overblown publicity might overshadow stable product output.

- DeepSeek Distills Hermes, Captures SOTA: Tweaks from Teknium1's DeepSeek R1 distillation combined reasoning with a generalist model, boosting results.

- Meanwhile, Paul Gauthier revealed R1+Sonnet soared to new SOTA on the aider polyglot benchmark at 14X less cost.

- Self-Attention Gains Priority: Participants highlighted the central role of self-attention for VRAM efficiency in large transformer models.

- They also contemplated rewarding creative outputs through self-distillation, hinting at alternative training angles.

Yannick Kilcher Discord

- Memory Bus Mayhem & Math Mischief: In #[general], 512-bit wide 32GB memory triggered comedic references to wallet widths, while a Stack Exchange puzzle stumped multiple LLMs.

- Community members also highlighted visual reasoning pitfalls and joked about anime profile pictures signifying top-tier devs in open-source ML.

- MONA Minimizes Multi-step Mishaps: The MONA paper introduced Myopic Optimization with Non-myopic Approval as a strategy to curb multi-step reward hacking in RL.

- Its authors described bridging short-sighted optimization with far-sighted reward, prompting lively debate on alignment pitfalls and minimal extra overhead beyond standard RL parameters.

- AG2's Post-MS Pivot: AG2's new vision for community-driven agents detailed governance models and a push for fully open source after splitting from Microsoft.

- They now boast over 20,000 builders, drawing excitement over more accessible AI agent systems, with some users praising the shift toward crowdsourced development.

- R1 Rumbles in LMArena: As reported in #[paper-discussion], R1 achieved Rank 3 in LMArena, overshadowing other servers while Style Control held first place.

- Some called R1 an underdog shaking up the market, referencing synergy with Stargate or B200 servers as possible reasons for its strong showing.

- Differential Transformers & AI Insurance Talk: Developers eyed the DifferentialTransformer repo but voiced skepticism about the quality of its open weights and the author's approach.

- Meanwhile, banter about AI insurance surfaced, with one joking 'there's coverage for everything,' while others questioned if it can handle fiascos from reinforcement learning gone wild.

Notebook LM Discord Discord

- NotebookLM Zips Through Podcast Edits: A user employed NotebookLM to splice podcast audio with minimal cuts, resulting in an almost seamless flow.

- Listeners in the discussion admired the tool’s accuracy and asked whether more complex audio segments could be integrated for faster production.

- Engineers Eye Reverse Turing Test Angle: A user described Generative Output flirting with the idea of a reverse Turing Test to probe AGI concepts.

- They shared that this reflection sparked conversation about cybernetic advances and how LMs might understand themselves.

- MasterZap Animates AI Avatars: MasterZap explained a workflow using HailouAI and RunWayML to create lifelike hosts, referencing UNREAL MYSTERIES 7: The Callisto Mining Incident.

- He highlighted the difficulty of making avatars feel natural, prompting others to compare layering approaches for smooth facial movements.

- Gemini Advanced Trips on 18.5MB PDFs: Users tested Gemini Advanced to parse hefty documents, including a tax code at around 18.5MB, with limited success.

- Participants voiced frustration over inaccurate legal definitions and flagged the need for improved processing in version 1.5 Pro.

- NotebookLM Drills Through 220 Quiz Q’s: A user asked NotebookLM to produce quizzes from a PDF containing 220 questions, emphasizing exact text extraction.

- Some members offered collaboration tips, noting that advanced models can handle this but might still require careful prompts.

Nomic.ai (GPT4All) Discord

- GPT4All v3.7.0 Gains Windows ARM Wonders: Nomic.ai released GPT4All v3.7.0 with Windows ARM Support, fixes for macOS crashes, and an overhauled Code Interpreter for broader device compatibility.

- One user reported that Snapdragon or SQ processor machines now run GPT4All more smoothly, prompting curiosity about the refined chat templating features.

- Code Interpreter & Chat Templating Triumph: The Code Interpreter supports multiple arguments in console.log and has better timeout handling, improving compatibility with JavaScript workflows.

- Community feedback credits these tweaks for boosting coding efficiency, while the upgraded chat templating system resolves crashes for EM German Mistral.

- Prompt Politeness Pays Off: Participants tackled prompt engineering hurdles around NSFW and nuanced asks, discovering that adding please often yields improved interactions.

- They highlighted that many LLMs rely on internet-trained data for context and respond differently to subtle wording shifts.

- Model Mashups & Qwen Queries: Users assessed GPT4All with Llama-3.2 and Qwen-2.5, eyeing resource demands for larger-scale tasks.

- Some mentioned Nous Hermes as a possible alternative, while others tested Qwen extensively for enhanced translation capabilities.

- Taggui Takes On Image Analysis: One user sought an open-source tool for image classification and tagging, prompting a recommendation of Taggui for AI-driven uploads and queries.

- Enthusiasts praised multiple AI engine integration, calling it a solid choice for advanced image-based brainstorming.

MCP (Glama) Discord

- MCP Timeout Tweak Triumph: Engineers resolved the 60-second server timeout by modifying

mcphub.ts, with example updates found in the Roo-Code repo. They credited the VS Code extension for guiding the fix and ensuring stable MCP responses.- Members noted that specifying correct paths for

uvx.exewas vital to preventing further downtime, highlighting Roo-Code as a valuable tool for tracking configuration changes.

- Members noted that specifying correct paths for

- MySQL Merges with MCP Alchemy: A user recommended mcp-alchemy for MySQL integration, citing its compatibility with SQLite and PostgreSQL as well. This arose after questions about a reliable MCP server for managing database connections.

- The repo includes multiple usage examples, prompting broader interest in advanced MCP database pipelines.

- Claude's Google Search Stumbles: Community members observed Claude struggling with its Google search feature, sometimes failing under heavy usage. They speculated high demand and API instability might be at fault.

- Some proposed using an alternate scheduling strategy, hoping a more stable query window would reduce search cancellations in Claude.

- Agents in Glama Cause Confusion: The MCP Agentic tool appeared inside Glama prematurely, leaving users uncertain about its activation path. One member revealed that an official statement was pending, framing the leak as unexpected.

- Discussions linked the feature to MCP.run, with some users testing it in non-Glama client setups for agent-like functionalities.

- Personalization Takes Shape with mcp-variance-log: Developers introduced mcp-variance-log, referencing the Titans Surprise mechanism for user-specific data tracking through SQLite. The tool analyzes conversation structures to enable extended memory support.

- Members anticipate deeper personalization, noting that these variance logs could inform future MCP expansions and user-targeted refinements.

LlamaIndex Discord

- Redis Rendezvous: AI Agents in Action: A joint webinar with Redis examined building AI agents to enhance task management approaches, and the recording is available here.

- Listeners noted that thoroughly breaking down tasks can improve performance in real-life implementations.

- Taming Parallel LLM Streaming: A user ran into trouble when streaming data from multiple LLMs at once, with suggestions pointing to async library misconfiguration and referencing a Google Colab link for a working example.

- Community members stressed the need for correct concurrency patterns to avoid disruptions in sequential data handling.

- Slicing Slides with LlamaParse: Engineers discussed document parsing methods for .pdf and .pptx files using LlamaParse, with emphasis on handling LaTeX-based PDFs.

- They confirmed LlamaParse’s utility in extracting structured text for advanced RAG workflows, even across multiple file types.

- Roaring Real-Time in ReActAgent: The conversation featured ways to incorporate live event streaming with token output using LlamaIndex’s ReActAgent and the AgentWorkflow system found here.

- Developers indicated improved user flow once event-handling was synchronized with token streaming in real time.

- Export Controls Knock on AI’s Door: Participants explored the implications of new U.S. export regulations targeting advanced computing items and AI model weights, citing this update.

- They raised questions about compliance hurdles and how these rules might influence Llama model usage and sharing.

Cohere Discord

- Export Oops: Model Weights in the Crosshairs: The US Department of Commerce introduced new AI export controls, prompting concern over whether Cohere's model gets snagged under the latest restrictions (link).

- Some engineers at Oracle Japan worry about license tangles, though internal teams hint that special agreements might cushion the blow.

- GPU Guffaws: Sneaking Past Restrictions: Community members debated the real impact of GPU restrictions, suggesting giant AI firms slip in hardware under the radar.

- Participants questioned if these policies mainly punish smaller players while big fish glide freely.

- Blackwell Budget Blues: One user flagged Blackwell heavy operations, citing idle power usage at 200w, generating fear about bloated bills if usage ramps up.

- Others suggested balancing computational demands with actual workload to avoid waste.

Modular (Mojo 🔥) Discord

- Mojo’s Async Forum Fest: A member planned to share a new forum post about async code in Mojo, linking to How to write async code in Mojo.

- They promised to collaborate on the post, emphasizing community-driven knowledge exchange around asynchronous practices.

- MAX Builds Page Showcases Community Creations: The revamped MAX Builds page at builds.modular.com now features a special section for community-built packages.

- Developers can submit a PR with a recipe.yaml to the Modular community repo, encouraging more open contributions.

- iAdd Quirks Spark In-Place Puzzles: Users discussed the iadd method for in-place addition, such as a += 1, and how values are stored during evaluation.

- A curious example, a += a + 1, proved it might produce 13 if a was initially 6, prompting a caution to avoid confusion.

- Mojo CLI Steps It Up: A member revealed two new Mojo CLI flags, --ei and --v, capturing the interest of channel participants.

- They presented these flags with a playful emoji, suggesting further experimentation awaits for Mojo aficionados.

LAION Discord

- Cheering for Clearer Labels: Members explored labeling strategies for background noise and music levels, referencing a Google Colab notebook while encouraging a “be creative if you don’t know ask” approach.

- They proposed multiple categories like no background noise and slight noise, with some suggesting more dynamic labeling to handle different music intensities.

- Voices in a Crowd: Enthusiasts pitched a multi-speaker transcription dataset idea by overlapping TTS audio streams, aiming for fine-grained timing codes to track who’s speaking when.

- They emphasized that pitch and reverb variations help with speaker recognition, echoing the quote “There is no website, it's me coordinating folks on Discord.”

Eleuther Discord

- Divergent Distillation: Teacher-Student Tussle: A participant floated using the divergence between teacher and student models as a reward signal for deploying PPO in distillation, contrasting with classical KL-based methods.

- Some pointed out the stability of KL-matching for conventional training but stayed curious about adaptive divergence shaping distillation rewards.

- Layer Convergence vs. Vanishing Gradients: People discussed a recent ICLR 2023 paper on layer convergence bias, showing shallower layers learn faster than deeper layers.

- They also debated vanishing gradients as a factor in deeper layers’ slow progress, acknowledging it’s not the only culprit in training challenges.

- ModernBERT, ModernBART & Hybrid Hype: Talk centered on ModernBERT and the possibility of ModernBART, with some seeing an encoder-decoder version as popular for summarization use.

- References to GPT-BERT hybrids highlighted performance gains in the BabyLM Challenge 2024, suggesting combined masked and causal approaches.

- Chain-of-Thought & Agent-R Spark Reflection: A novel method applied Chain-of-Thought reasoning plus Direct Preference Optimization for better autoregressive image generation.

- Meanwhile, the Agent-R framework leverages MCTS for self-critique and robust recovery, spurring debate about reflective reasoning akin to Latro RL.

- Bilinear MLPs for Clearer Computation: A new ICLR'25 paper introduced bilinear MLPs that remove element-wise nonlinearities, simplifying the interpretability of layer functions.

- Proponents argued this design reveals how weights drive computations, fueling hopes for more direct mech interp in complex models.

Stability.ai (Stable Diffusion) Discord

- Frosty Font Focus: Enthusiasts explored ice text generation with custom fonts using Img2img to achieve a crystalline design.

- Adjusting denoise settings and coloring the text in an icy hue surfaced as recommended methods.

- ControlNet Gains Traction: Some proposed ControlNet to refine the ice text look, especially when combined with resolution tiling.

- This approach was said to yield sharper edges and more consistent results for stylized text.

- Adobe Firefly Steps In: Users mentioned Adobe Firefly as an alternative, accommodating specialized text creation if an Adobe license is available.

- They positioned it as a faster approach than layering separate software tools like Inkscape.

- Poisoned Image Queries: A member asked how to detect if an image is poisoned, sparking jokes about 'lick tests' and 'smell tests'.

- No official method emerged, but the discussion highlighted community curiosity about image safety.

- Turning Sketches into Scenes: Someone sought advice on sketch to image workflows to transform rough outlines into final visuals.

- Aspect ratio considerations, like 16:9, were also addressed for more user-friendly generation.

tinygrad (George Hotz) Discord

- ILP Tames Merges: A new approach was introduced in Pull Request #8736 using ILP to unify view pairs, reporting a 0.45% miss rate.

- Participants debated logical divisors and recognized obstacles posed by variable strides.

- Masks Muddle Merges: Community members argued whether mask representation can enhance merges, though some believed it might not work for all setups.

- They concluded that masks enable a few merges but fail to handle every stride scenario.

- Multi-Merge Patterns Appear: A formal search was proposed by testing offsets for v1=[2,4] and v2=[3,6], aiming to detect patterns in common divisors.

- They envisioned a generalized technique to unify views by systematically examining stride overlaps.

- Three Views, Twice the Trouble: Enthusiasts questioned pushing merges from two to three views, fearing more complexity.

- They cited tricky strides as a stumbling block for ILP, cautioning that a 3 -> 2 view conversion won't be straightforward.

- Stride Alignment Gains Momentum: Some suggested aligning strides could lessen merge headaches, but they warned about faulty assumptions when strides don't match.

- They realized earlier methods overlooked possible merges due to flawed stride calculations, calling for deeper checks.

Torchtune Discord

- Windows Wobbles & WSL Wonders: Members noted Windows imposes some constraints, including limited Triton kernel support. They recommended using WSL for a more direct coding experience.

- They highlighted shorter setup times and suggested it would ease training performance on Windows hardware.

- Regex Rescue for Data Cleanup: A member shared a regex

[^\\t\\n\\x20-\\x7E]+that scrubs messy datasets by spotting hidden characters. Another member clarified the pattern’s components, highlighting its role in discarding non-printable text.- They urged caution when modifying the expression to avoid accidental data loss and recommended thorough testing on smaller samples.

- Triton & Xformers Tiff with Windows: Some encountered issues running unsloth or axolotl on Windows due to Triton and Xformers compatibility gaps. They pointed to a GitHub repo for potential solutions.

- They recommended exploring future driver updates or container-based approaches for installing these libraries on Windows.

LLM Agents (Berkeley MOOC) Discord

- MOOC Monday Madness Begins: As mentioned in the #mooc-questions channel discussion, the first lecture is locked in for 4 PM PT on Monday, 27th, with an official email announcement on the way.

- Organizers confirm the session will run with advanced content tailored to push LLM agents in real-world tasks.

- LLM Agents Face a High Bar: Community members noted that an LLM agent passing the course sets a heightened bar for these models' capabilities.

- They agreed that this reflects the course's intense workload and tough grading criteria, forging a unique challenge for AI participants.

Axolotl AI Discord

- Scam Flood Shakes Discord: Scam messages appeared in multiple channels, including this channel, prompting warnings.

- One user acknowledged the problem, encouraging everyone to stay alert and verify suspicious postings.

- Nebius AI Draws Multi-Node Curiosity: A member asked for experiences running Nebius AI in a multi-node environment, citing the need for real-world performance tips.

- Others chimed in with potential pointers, underscoring a desire for thorough knowledge of resource allocation and setup details.

- SLURM Meets Torch Elastic Head-On: A user lamented the difficulty in configuring SLURM with Torch Elastic for distributed training, calling it a significant obstacle.

- Another member advised checking SLURM’s multi-node documentation, suggesting many setup concepts might still apply to this scenario.

DSPy Discord

- Signature Snafu Speeds Up Confusion: One user ran into confusion with the signature definitions, citing missing source files that throw off the expected outputs.

- They suspect incomplete references caused the fiasco, raising the question of how to manage consistent file mapping.

- MATH Dataset Goes AWOL: A user attempted a maths example but found the MATH dataset removed from Hugging Face, sharing a link to a partial copy.

- They also pointed to Heuristics Math dataset, asking if anyone could suggest other solutions.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The OpenInterpreter Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!