[AINews] The world's first fully autonomous AI Engineer

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI News for 3/11/2024-3/12/2024. We checked 364 Twitters and 21 Discords (336 channels, and 3499 messages) for you. Estimated reading time saved (at 200wpm): 410 minutes.

Warm welcome to the >3000 people who joined from Andrej's shoutout! As we said last time, this is a side project that we're kind of embarrassed by but we are honored and hope you find this as useful as we do. The email has gotten unwieldy (originally this was only recapping the LS discord) and the plan is to move sections of this off to a more dedicated news service + offer personalization.

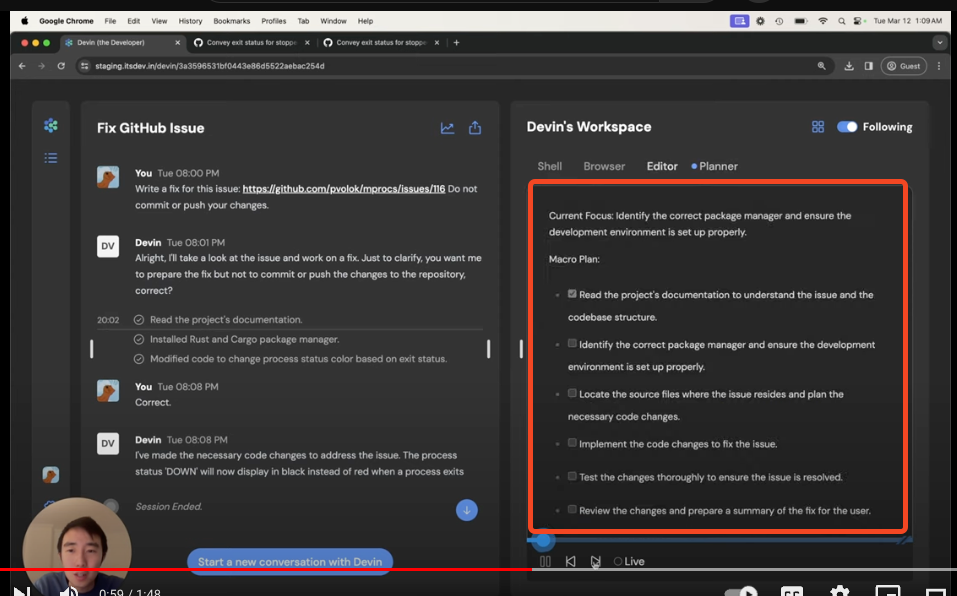

Cognition Labs's Devin is the headline AI news of the day - on the surface one of many, many "AI software engineer" startups - but the difference is in the execution:

- learning to use unfamiliar technologies by dropping in a blogpost url in a chat

- build AND DEPLOY frontend apps to Netlify

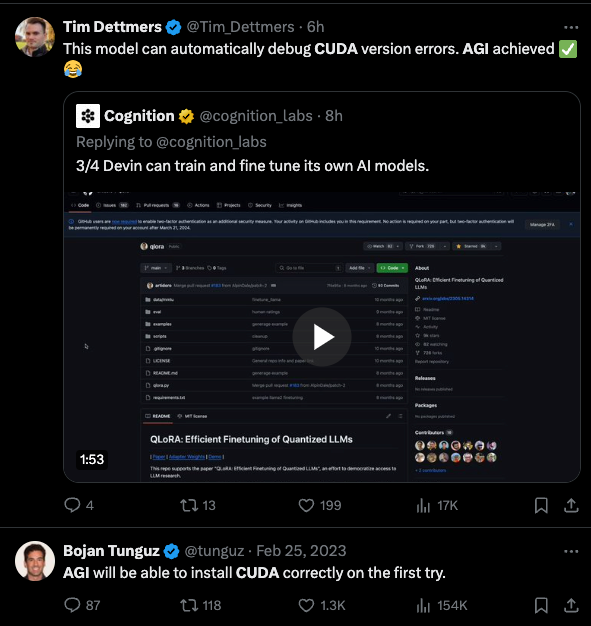

- train and finetune its own AI models (specifically Tim Dettmers impressed by it debugging CUDA version errors)

- Contribute to mature codebases

These are all very big claims, and if generally true rather than cherrypicked, would almost certainly qualify to be one of the most advanced AI agents the world has ever seen. This should of course attract skepticism, especially since only prerecorded videos were released, but credible investors like Patrick Collison and Fred Ehrsam, and beta testers like Varun and Andrew have praised the live demos.

Details are scarce:

- Their blogpost states: "With our advances in long-term reasoning and planning, Devin can plan and execute complex engineering tasks requiring thousands of decisions. Devin can recall relevant context at every step, learn over time, and fix mistakes."

- Ashlee Vance's reporting quotes: "Wu declines to say much about the technology’s underpinnings other than that his team found unique ways to combine large language models (LLMs) such as OpenAI’s GPT-4 with reinforcement learning techniques."

- Watching the videos you can see that Devin has quite a few necessary LLM OS tools:

- asynchronous chat

- browser

- shell access to a VM

- Editor with an IDE

- a "Planner" that appears to be their secret sauce?

And because the videos are all edited/sped up, it's unclear whether the latency is a concern or a temporary issue. Since Devin reports minutes worked, there's no real incentive to save here apart from UX.

Overall though, people are excited about agents and AGI again, which is always cause for celebration.

Table of Contents

- PART X: AI Twitter Recap

- PART 0: Summary of Summaries of Summaries

- PART 1: High level Discord summaries

- Unsloth AI (Daniel Han) Discord Summary

- Perplexity AI Discord Summary

- Nous Research AI Discord Summary

- LM Studio Discord Summary

- OpenAI Discord Summary

- HuggingFace Discord Summary

- Eleuther Discord Summary

- LlamaIndex Discord Summary

- LAION Discord Summary

- Latent Space Discord Summary

- Interconnects (Nathan Lambert) Discord Summary

- CUDA MODE Discord Summary

- LangChain AI Discord Summary

- OpenAccess AI Collective (axolotl) Discord Summary

- OpenRouter (Alex Atallah) Discord Summary

- DiscoResearch Discord Summary

- Alignment Lab AI Discord Summary

- LLM Perf Enthusiasts AI Discord Summary

- Skunkworks AI Discord Summary

- AI Engineer Foundation Discord Summary

- PART 2: Detailed by-Channel summaries and links

PART X: AI Twitter Recap

all recaps done by Claude 3 Opus, lightly edited by swyx for now. We are working on antihallucination, NER, and context addition pipelines.

Advances in Language Models and Architectures

- Google presents Multistep Consistency Models, a unification between Consistency Models and TRACT that can interpolate between a consistency model and a diffusion model. [210,858 impressions]

- Algorithmic progress in language models: Using a dataset spanning 2012-2023, researchers find that the compute required to reach a set performance threshold has halved approximately every 8 months, substantially faster than hardware gains per Moore's Law. [14,275 impressions]

- @pabbeel: Covariant introduces RFM-1, a multimodal any-to-any sequence model that can generate video for robotic interaction with the world. RFM-1 tokenizes 5 modalities: video, keyframes, text, sensory readings, robot actions. [48,605 impressions]

Retrieval Augmented Generation (RAG) and Tools

- Retrieval Augmented Thoughts (RAT) shows that iteratively revising a chain of thoughts with information retrieval can significantly improve LLM reasoning and generation in long-horizon tasks. RAT provides significant improvements to baselines in zero-shot prompting. [41,937 impressions]

- Cohere releases Command-R, a RAG-optimized LLM aimed at large-scale production workloads. It balances high efficiency with strong accuracy, enabling companies to move beyond proof of concept and into production. [75,829 impressions]

- @fchollet: "A simple definition of AGI: A system that can teach itself any task that a human can learn, using the same number of demonstration examples. Generality, not task-specific skill, is the issue." [49,452 impressions]

- @llama_index: "Anthropic releases a set of cookbooks for building RAG and agents with Claude, from basic RAG to advanced capabilities like routing and query decomposition, to sophisticated document agents and multi-modal applications." [46,629 impressions]

Multimodal AI and Video Understanding

- Vid2Persona allows you to talk to a person from a video clip. It has a simple pipeline from extracting traits of video characters to chatting with them. [10,326 impressions]

- VideoMamba, a State Space Model for Efficient Video Understanding, addresses the challenges of local redundancy and global dependencies in video understanding. Its linear-complexity operator enables efficient long-term modeling for high-resolution long video understanding. [18,814 impressions]

- V3D leverages the world simulation capacity of pre-trained video diffusion models to facilitate 3D generation. Fine-tuned on 360-degree orbit frames, it can generate high-quality meshes or 3D Gaussians within 3 minutes. [18,814 impressions]

Responsible AI and Bias

- @mmitchell_ai: Research shows that current practices of instruction tuning teach LLMs to superficially conceal covert racism. When overtly asked, models like GPT-4 will produce positive sentiment about African Americans, but underlying biases persist. [18,594 impressions]

- A poll of AI researchers estimates a 4-20% chance of AI catastrophe this year. Concerns raised about lack of security at AI labs potentially accelerating capabilities of adversaries. [13,686 impressions]

- @ehartford: TIME magazine's suggestion of banning open source AI models is met with strong opposition from the AI community, who argue for the importance of democratizing AI technology. [17,845 impressions]

Memes and Humor

- "The two things I hate the most in life: JIRA and communism. In that order." 114,102 impressions

- ChatGPT accidentally revealing a plan to end the culture war. 10,026 impressions

PART 0: Summary of Summaries of Summaries

Claude 3 Sonnet (14B?)

1. New AI Model Releases and Capabilities

- Cohere released Command-R, a 35B parameter model optimized for reasoning, summarization, RAG, and using external tools/APIs. Example: A YouTube video showcased Command-R's RAG capabilities.

- Cognition Labs unveiled Devin, an AI that passed the SWE-Bench coding benchmark and real engineering interviews, representing a milestone in AI software engineering. Example: Andrew Gao's tweet shared unfiltered opinions on trying Devin.

- ELLA (Efficient LLM Adapter) significantly improves text alignment in diffusion models like SD3 without retraining. Example: Discussions compared ELLA's performance to other models.

2. Accelerating and Optimizing Large Language Models

- Llama.cpp introduced 2-bit quantization to run LLMs more efficiently on standard hardware with less RAM and higher speed.

- NVMe SSDs enable fine-tuning 100B models on single GPUs, as discussed in a paper and tweet by @_akhaliq.

- Unsloth achieved 2x speedup and 40% less memory usage in LLM fine-tuning compared to normal QLoRA, without accuracy loss.

3. Open Source AI Tools and Resources

- Hugging Face introduced new features like filtering/searching Assistant names in Hugging Chat, table of contents for blogs, and all-time Space stats.

- WebGPU could make in-browser ML up to 40x faster, enabling powerful AI applications in web browsers.

- LlamaIndex hosted webinars, tutorials and meetups on building context-augmented apps, retrieval strategies, and implementing long-term memory for LLMs.

4. Analyzing and Interpreting Large Language Models

- A new model-stealing attack can extract embedding layers from black-box models like GPT-3.5 for under $20, raising security concerns.

- The Transformer Debugger tool enables automated interpretability and exploration of transformer model internals without coding.

- Discussions explored replacing tokenizers post-training for better language handling, constrained decoding techniques, and precompiling/caching function generation for efficiency.

Claude 3 Opus (>220B?)

- Nvidia's Dominance and Vulkan's Potential: Discussions in the CUDA MODE Discord highlighted Nvidia's compelling competitive advantage and software edge as nearly insurmountable, despite Vulkan's potential PyTorch backend posing a theoretical challenge. Meta's significant investment in AI infrastructure with a 24k GPU cluster and a roadmap for 350,000 NVIDIA H100 GPUs reinforces Nvidia's position (Meta's GenAI Infrastructure Article).

- Cohere's Command-R Model and RAG Capabilities: Cohere released an open-source 35 billion parameter model called "C4AI Command-R", available on Hugging Face. Discussions across Latent Space and Nous Research AI Discords focused on its retrieval augmented generation (RAG) capabilities and potential for merging with existing RAG setups. A YouTube video was shared demonstrating Command-R's long-context task handling.

- 100,000x Faster Neural Network Convergence: In the Skunkworks AI Discord,

@baptistelqtclaimed to have developed a method that accelerates the convergence of neural networks by 100,000x, applicable to various architectures including Transformers, by training models from scratch in every round.

- Devin: AI Software Engineer Benchmark: The AI community buzzed with the introduction of Devin, an AI software engineer by Cognition Labs that achieved high scores on the SWE-Bench coding benchmark. Discussions in Latent Space highlighted Devin's impressive backers and its potential to revolutionize software engineering.

- Grok's Potential Open-Sourcing: Elon Musk's tweet about potentially open-sourcing Twitter's algorithm "Grok" through xAI sparked debates across Latent Space and Interconnects Discords about the implications for open-source principles and Musk's reputation.

- Quantization Breakthroughs for Large Models: LAION discussions featured llama.cpp's "2-bit quantization" update, enabling more efficient local execution of large language models on regular hardware, as detailed in Andreas Kunar's Medium post. The potential of quantization and CPU-offloading for SD3 to adapt to varying VRAM capacities was also explored.

- Efficient Fine-Tuning with NVMe SSDs: The strategy of using NVMe SSDs for fine-tuning 100B parameter models on single GPUs was discussed in the Nous Research AI and Interconnects Discords, referencing a paper and a tweet about the Fuyou framework.

- Mac M1 GPU Utilization Issues in LM Studio: Users in the LM Studio Discord reported problems with LM Studio favoring CPUs over GPU acceleration on Mac M1 systems. Discussions involved model recommendations for specific hardware setups and using the Tensor Split feature to test GPU performance without physical modifications.

- Newcomer LLM Learning Resources: In the Eleuther Discord, users advised beginners to start with small models on Google Colab's T4 GPU, leverage GPT-4 and Claude3 despite the $20/month cost, and consult resources like Lilian Weng's Transformer Family Version 2.0 post and HazyResearch's AI System Building Blocks repository.

- Retrieval Augmented Generation (RAG) with LangChain: The LangChain AI Discord featured an open-source chatbot repository demonstrating RAG for efficient Q&A querying and a guide for building multi-modal applications with LlamaIndex. Discussions also covered troubleshooting and best practices for implementing LangChain in various applications.

ChatGPT (GPT4T)

Efficiency Innovations in AI Infrastructure: Gearing Up with GEAR and NVMe SSDs saw significant attention, focusing on large model operations and acceleration. The GEAR project for KV cache compression and the use of NVMe SSDs for fine-tuning huge models were notably discussed, indicating a growing interest in optimizing AI model efficiency through hardware innovations (GitHub - opengear-project/GEAR, arXiv paper).

Fine-Tuning Techniques and Troubleshooting: Unlocking Unorthodox Fine-Tuning Speed and Fine-Tuning Facades and Inferential Flops highlighted the community's engagement with enhancing model fine-tuning and troubleshooting. Techniques yielding a 2x speedup in LLM fine-tuning with a 40% reduction in memory usage via Unsloth-DPO generated buzz, while various challenges, including model bugs and

<unk>responses, underscored the complexity of fine-tuning practices (Unsloth-DPO).AI Model and Framework Development Insights: ELLA Elevates Text-to-Image Diffusion Models and Promising Advances & Discussions in AI showcased discussions around model improvements and framework developments. ELLA's boost to text-to-image model comprehension and the unveiling of efficient large language model adapters were among the advancements sparking interest, reflecting the ongoing evolution and specialization within AI technology spheres.

Community Collaborations and Technical Sharing: Community Collaboration on AI Project Development and Dev Days and RAG Nights demonstrated vibrant collaborative efforts across platforms. Shared resources for fine-tuning models with customer data, advice for AI project development, and developer series on creating context-augmented applications highlighted the importance of community support and knowledge exchange in accelerating AI innovation.

These themes collectively underscore a dynamic and collaborative AI research and development environment, with a focus on optimizing model efficiency, advancing fine-tuning methodologies, fostering innovation in AI model and framework development, and leveraging community collaboration for shared growth and learning.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord Summary

- Gearing Up with GEAR and NVMe SSDs: The community engaged with the concept of efficiency in large model operations:

@remek1972pointed to the GEAR project (GitHub - opengear-project/GEAR) for KV cache compression, and@iron_bounddiscussed an approach for fine-tuning huge models using NVMe SSDs to enable acceleration on a single GPU, referencing an arXiv paper.

- Unlocking Unorthodox Fine-Tuning Speed:

@lee0099showcased a 2x speedup in LLM fine-tuning with a 40% reduction in memory usage via Unsloth-DPO, generating excitement amongst users like@starsupernovain the showcase channel.

- Dependency Dilemmas and AI Modeling Tools: Development environment troubles made the rounds;

@maxtensorgrappled with package incompatibilities, nudging@starsupernovato suggest using a specific PyTorch wheel for resolving xformers installation issues, further recommending Windows Subsystem for Linux (WSL) for better experiences.

- Fine-Tuning Facades and Inferential Flops: In the help channel, users navigated challenges ranging from

@dahara1identifying a Gemma model bug post-Unsloth update to@aliissatrying to troubleshoot<unk>responses from a model forcing@starsupernovato suspect padding and template issues.

- ELLA Elevates Text-to-Image Diffusion Models: A discussion kicked off in random with

@tohrniisharing a paper on ELLA (Efficient Large Language Model Adapter) to boost text-to-image models' understanding of prompts, and the evergreen Windows vs Linux debate continued with operational preferences dissected between@maxtensorand@starsupernova.

Perplexity AI Discord Summary

- Claude 3 Opus Use Limited in Perplexity Pro: Users reported that on Perplexity Pro, Claude 3 Opus offers a mere 5 uses compared to 600 for other LLMs like Claude Sonett. Discussions also touched on Perplexity's competitiveness and speculated on an ad-based Pro model, referencing tweets from Perplexity's CEO about competitors' pricing strategies and attempts to hire AI researchers.

- Recruitment Queries and Advice in Perplexity: A user expressed interest in job opportunities with Perplexity AI, with guidance provided to check the careers page without directly tagging the team. Another user sought assistance to improve the pplx API for use in a personal assistant project.

- Features and Functionality of LLMs Debated: There was debate over whether Perplexity uses external models like Gemini or has its own models, with users noting similarities in responses to Gemini's API. Inquiries about Yarn-Mistral-7b-128k model use for high-context conversations were raised, alongside questions about pplx API retrieving web sources within replies.

- Pro Users Seek Effective Utilization Strategies: Pro users discussed logo design use and uploading pdf scripts for queries, with an emphasis on how to effectively leverage Perplexity for specific use cases.

- Content Sharing in the Perplexity Community: Members shared resources on topics ranging from AI discoveries, space junk re-entry, CSS insights, and the classification of strawberries, emphasizing the importance of making threads shareable within the community.

Nous Research AI Discord Summary

- 100,000x Faster Neural Network Convergence: @baptistelqt claims to have developed a method that accelerates the convergence of neural networks by 100,000x, applicable to various architectures including Transformers, with a forthcoming paper.

- Promising Advances & Discussions in AI: Cohere’s C4AI Command-R, a 35 billion parameter model optimized for reasoning and summarization, was discussed alongside Command-R's GitHub demo. The Deepseek-VL model emerged as a potential disruptor, and debates ensued on replacing tokenizers in pre-trained models with dual tokenization.

- Merging Command-R and RAG: CohereForAI’s C4AI Command-R model has been highlighted for its ability to facilitate RAG calls with a simplified search method, with a Model Card available. Practical applications of AI in game development were also showcased.

- Insights and Incidents in AI Governance: Discussions touched upon Mark Zuckerberg and Elon Musk's contrasting views and foresight on AI. Legal considerations of model licensing, such as the Nous Hermes 2, were scrutinized, while the concept of recursion in function-calling LLMs was eagerly anticipated.

- Technical Challenges & Tooling in AI: Troubleshooting tokenizer replacement for language-specific handling, fine-tuning model sizes, memory requirements, and structuring model outputs became focal points. Various tools like llama-cpp and qlora were mentioned for assistance in specific AI development tasks.

LM Studio Discord Summary

- M1 Macs Skipping GPU Acceleration: Users such as

@maorweand@amir0717reported that LM Studio occasionally favors CPUs over GPU acceleration on Mac M1 systems, as well as on different setups including a GTX 1665 Ti with 16 GB of RAM. Discussions involved exploring model options that perform better on specific hardware, with suggestions pointing towards the deepseek-coder-1.3b-instruct-GGUF.

- Unleashing Model Potentials with Tensor Splits: Configurations like setting Tensor Split to "0, 100" were discussed to test the performance of specific GPUs for LM Studio without physical alteration of hardware connections. It was noted that dual GPU setups and advanced motherboards with PCIe 4.0, such as the MSI Meg X570, could optimize RTX 3090 performance.

- Eager Eyes on LM Studio's Next Move: Users are keenly anticipating new features in upcoming updates for LM Studio, including improved chat "Continue" behavior and enhanced version.16 support, with a notable call for attention towards an updated AVX beta version.

- Choosing Clouds or Chips for LLMs?: A debate brewed over the preference for cloud services vs. local hardware when running large language models (LLMs). Factors like cost, confidentiality, and cloud provider grants such as those from Google were mentioned as significant considerations.

- Exploring Alternatives for Optimal LLM Performance: While no clear recommendation surfaced for models capable of enhancing storytelling, a user noted difficulties with stablelm zephyr 1.5 GB producing incomplete C++ code. Others discussed alternative platforms like KoboldAI and CrewAI, and weighed the graphical advantages of AutoGen's interface in addition to monitoring closed token generation loops to conserve computational resources.

OpenAI Discord Summary

- Extended Video Ambitions Meet Technical Restraints: Discussions have been centered on the SORA AI's ability to create extended 30-minute footage, with technical constraints such as memory limitations highlighted as current challenges. While SORA is bounded by these limitations, it is technically possible for it to generate extended videos in sections as detailed in an OpenAI research paper.

- Confusion and Clarification over GPT-3.5 Subscription Tiers: Users are seeking clarity on the differences in GPT-3.5 models across subscription tiers, with the primary distinction being usage limits rather than any feature differences. The updates post the GPT-3.5 knowledge cutoff were also a topic of interest, raising questions about version discrepancies between API and ChatGPT versions.

- Peer Assistance Elevates Prompt Engineering Practices: One user,

@darthgustav., has been advising on enhancing consistency in GPT outputs utilizing an output template with variable names representing the instructions to maintain consistency. Challenges such as rewriting texts and dealing with HTML snippets are being addressed through collaborative problem solving and shared resources, emphasizing the need to adhere to OpenAI's terms of use and usage policies.

- Diverging Experiences with AI Models: Users compared their experiences with different AI models, like Claude Opus and GPT-4, noting that Claude may offer more creative and concise outputs than GPT-4, which sometimes leans towards generating bullet points or less engaging content.

- Community Collaboration on AI Project Development: The guild has become a hub for collaborative AI project development, including members sharing resources for fine-tuning models with customer data and providing advice for a solitaire instruction robot project using GPT and OpenCV. Resources included a GitHub notebook mistral-finetune-own-data.ipynb which appears to be a valuable resource for custom fine-tuning needs.

HuggingFace Discord Summary

Hugging Face Introduces Handy New Features: Hugging Chat now lets users filter and search for Assistant names, and the Hugging Face blog includes a new "table of contents" for ease of access. All-time stats are now available in Hugging Face Spaces, enabling creators to assess their space's popularity more comprehensively.

WebGPU Poised to Accelerate In-Browser ML: @osanseviero from Hugging Face indicated that WebGPU could potentially speed up machine learning in browsers by up to 40 times.

Expanding Developer Resources and Learning: The latest releases of Transformers.js 2.16.0, Gradio 4.21, and Accelerate v0.28.0 bring developers new features. Additionally, a new course titled Machine Learning for Games was announced by @ThomasSimonini.

Cutting-Edge Tools and Model Discoveries Across Channels:

- Portuguese Language Model - Mambarim-110M: Announced by @dominguesm, it's a new Portuguese LLM named Mambarim-110M, based on the Mamba architecture and pre-trained on a 6.2 billion token dataset (Hugging Face, GitHub).

- GitHub user @210924_aniketlrs02 is seeking guidance on applying a wav2vec2 codebook extraction script for extracting quantized states from the Wav2Vec2 model.

- Lucid Dream Project and Vid2Persona: Unique projects using Web-GL and conversational AI with video characters were shared on Hugging Face spaces, showcasing innovative applications of AI (The Lucid Dream Project).

- Microsoft introduced AICI: Prompts as (Wasm) Programs, enhancing prompt handling for AI applications (AICI on GitHub).

Concepts and Models Discussed for Practical AI Implementation: - Debate on action-based AI's future, involving integration of Large Language Models with APIs. - Questions raised about practical AI applications included potential hardware hacks like using PS5 consoles for powerful VRAM modules. - A discussion was sparked by a reference to using PS3 consoles as a supercomputer, highlighting gaming hardware's potential in mind-bending computational tasks. - Trials and challenges of using gaming consoles like the PS5 for computing tasks were also highlighted, along with driver limitations and lack of connections like Nvidia's NVLink in AMD and Intel cards.

Evolving the AI Discourse in Natural Language Processing: - Methodologies to evaluate clarity and emotions in discourse with Large Language Models were queried. - Seeking the best model for NSFW uncensored translation, focusing on accuracy and optimization. - Strategies to identify out-of-distribution words or phrases in documents were requested. - LightEval, a lightweight LLM evaluation suite by Hugging Face, was suggested for benchmarking LORA-esque techniques.

Eleuther Discord Summary

- Exploring LLM Learning Without GPUs:

_michaelshreceived advice for learning Large Language Models without a GPU, leveraging tools like Google Colab's T4 GPU to work with moderately sized models including Gemma and TinyLlama.

- Learning Materials and Costs for LLM Enthusiasts: Potential resources for LLM education, such as YouTube and paid access to LLMs like GPT-4 and Claude3, were suggested to

_michaelsh, pinpointing costs around $20 a month.

- Security Flaws in LLMs: An emergent model-stealing attack was discussed, which extracts projection matrices from models like gpt-3.5-turbo, igniting debate on the ethics and legality of exposing such vulnerabilities.

- Transformer Debugging Tools and Interpretability:

@stellaathenaqueried the compatibility of the transformer-debugger with Hugging Face trained models, while@millanderexamined a paper on removing unwanted capabilities from AI models through pruning, questioning the utility tradeoff in language models compared to image classifications.

- Hyperparameter Headaches and Benchmark Blues: Poor evaluation performance using Llama hyperparameters with higher learning rates has

@johnnysandsspeculating on the need for more annealing, while@epicxpondered the decline in popularity of historical benchmarks such as SQuAD and the Natural Questions leaderboard.

LlamaIndex Discord Summary

- Ready, Set, Memorize!: A webinar on MemGPT discussing long-term, self-editing memory for LLMs is scheduled for Friday at 9 am PT. It features notable speakers and aims to explore virtual context management challenges for large language models, as highlighted by

@jerryjliu0. Catch the webinar registration at Sign up for the event.

- Dev Days and RAG Nights:

@ravithejadsintroduced a series on creating context-augmented applications with Claude 3 using LlamaIndex, while@hexapodeinvites developers to a RAG meetup in Paris, discussing advanced RAG strategies. Revelations from the series and event details can be found on Twitter and Twitter respectively.

- Insights, Queries, and LLM Snippets: Discussions regarding RAG deployment, global query parameters in MistralAI, and vector store issues were serviced by

@whitefang_jr, providing links to corresponding full-stack application and Github source code. A guide for creating multi-modal applications with LlamaIndex was shared for those interested in language-image integration.

- Matryoshka and Claude Chronicles: The AI community is buzzing with talks of a Matryoshka Representation Learning paper discussion hosted by Jina AI, featuring Aditya Kusupati and Aniket Rege, register here. Additionally, a quest for an open-source GUI/frontend for Claude 3 was raised by

@vodros, along with the announcement of a new LLM Research Paper Database by@shure9200, a repository aimed at aggregating quality research papers.

- Engineers' AI Paper Repository Alert: Highlighting a valuable resource for AI researchers,

@shure9200introduced an LLM papers database curated to keep one abreast with the field's academic progressions. A paper discussion on Matryoshka Representation Learning with invites extended to engineers to join and engage with the paper's authors.

LAION Discord Summary

- ELLA Enhances Text-to-Image Precision: The introduction of ELLA has been highlighted, showcasing its ability to improve text alignment in text-to-image diffusion models such as SD3, without necessitating additional training. Technical specifics and comparisons can be explored further on Tencent's ELLA website.

- Llama.cpp Revolutionizes LLMs on Standard Hardware: A new "2-bit quantization" breakthrough in llama.cpp, discussed by

@vrus0188, allows running Large Language Models more efficiently on standard hardware. Insights and development details are covered in a Medium post by Andreas Kunar.

- SD3's Expanding Horizons: The potential of SD3 was examined, noting its advantage from the absence of cross-attention and its capacity to integrate image and text embeddings. Additionally, there's ongoing deliberation about model scalability, quantization methods, and model performance on commonplace GPUs.

- Quantization and CPU-Offloading Enable SD3 for Diverse Hardware: Strategies like quantization and CPU-offloading could make SD3 more accessible, by adapting to different VRAM capacities. The discussion highlighted the implications concerning execution times and performance trade-offs.

- Vulnerability of Transformer Models Unveiled for Less Than $20: An arXiv paper revealed an attack technique capable of recovering parts of transformer models, notably those of OpenAI, for a trivial cost, leading to discussions about model security and subsequent API changes by OpenAI and Google.

- Delving Into the Depths of LAION-400M Dataset: The LAION-400-MILLION images & captions dataset was acknowledged for its significance in a piece by Thomas Chaton, with a link to the article provided for those interested in the dataset's potential: Explore LAION-400M Dataset.

Latent Space Discord Summary

- AI "Devin" Dazzles Developers: Cognition Labs' AI named "Devin" has excelled on the SWE-Bench coding benchmark, with the potential to revolutionize software engineering. The discussion referenced Devin's backings and capabilities, including its ability to write entire programs independently as highlighted in tweets by Neal Wu and Ashlee Vance.

- Elon's "Open" AI Move Sparks Debate: A debate ensued regarding Elon Musk's proposition to potentially open-source Twitter's algorithm "Grok," with opinions diving into open-source ethos and the reputational ramifications for Musk discussed against the backdrop of his tweet.

- Weightlifting AI Research: A new DeepMind paper focused on extracting weights from parts of an AI model's embedding layer triggered discussion on the complexity and current mitigation measures to prevent weight extraction.

- Karpathy Marks a Milestone: Community members celebrated Andrej Karpathy's new achievement, discussing his contribution to AI and its implications for the future of content creation.

- Truffle-1: Next-Gen AI Inference on a Budget: The announcement of Truffle-1, an affordable AI inference engine by Truffle, attracted attention for its low power usage, affordability, and potential impact on running open-source AI models, as per the launch tweet.

Interconnects (Nathan Lambert) Discord Summary

- Elon Musk Hints at Open Sourcing Grok: In a tweet,

@elonmuskhinted at Grok being open-sourced by xAI which caught the attention of community members, though concerns about the proper definition of open source were raised.

- Cohere Unleashes Command-R for Academia: Command-R, a new large-scale generative model, has been presented by Cohere, with a focus on enabling production-scale AI applications and academic access to its weights.

- Pretraining Costs Dive: Users discussed the dropping costs of pretraining models like GPT-2 now within the sub-$1,000 range, citing Mosaic Cloud figures from September 22, and a Databricks blog post titled "GPT-3 Quality for $500k" for further insights into economy and scale (Databricks Blog).

- Meta's Massive AI Infrastructure Expansion: Meta intends to power its AI infrastructure with a massive assembly of 350,000 NVIDIA H100 GPUs by the end of 2024, as detailed in a news release, which aims to support projects including Llama 3.

- Subscriber Appreciation in the Community: A member expressed discontent with their "Subscriber" status, but was reassured by

@natolambert, who emphasized the essential role subscribers play in sustaining the community.

CUDA MODE Discord Summary

Nvidia's Moat vs. Vulkan's Potential: Nvidia's dominance in the GPU landscape continues to be a point of fascination, with discussions highlighting Nvidia's compelling competitive advantage and software edge as nearly insurmountable, despite Vulkan's potential Pytorch backend posing a theoretical challenge. Users also expressed the complexities of working with Vulkan due to setup and packaging reminiscent of CUDA issues. Meta's significant investment in AI infrastructure with a 24k GPU cluster and a roadmap for 350,000 NVIDIA H100 GPUs reinforces Nvidia's dominance in the field (Meta's GenAI Infrastructure Article).

Triton Community Gathers: The Triton programming language community is preparing for an upcoming meetup on 3/28 at 10 AM PT. Interaction with the community and information about the meeting can be accessed through the Triton Lang Slack channel and its GitHub discussions page.

CUDA Development Insights and Tips: Discussions related to CUDA included the benefits of thread coarsening for enhanced performance, the optimization of Visual Studio Code for CUDA development, and suggestions for learning specific CUDA data types and threads. A detailed c_cpp_properties.json configuration setup for VS Code was shared, highlighting necessary includes for CUDA toolkit and PyTorch.

PyTorch Ecosystem Active Discussions: Within the PyTorch community, questions were raised regarding the performance differences between libtorch and load_inline, clarification on the role of Modular in optimizing kernel compatibility with GPU architectures, and an open call for feedback on torchao RFC #47 to simplify the integration of new quantization algorithms and data types.

NVIDIA Innovations and Training Resources: The CUDA community touched upon NVIDIA's leading-edge techniques like Stream-K and Graphene IR, which promise significant speedups and optimizations in matrix multiplication on GPUs, and shared a link to the CUTLASS repository (NVIDIA Stream-K Example). For CUDA learners, a comprehensive CUDA Training Series on YouTube, along with its associated GitHub materials, was recommended (CUDA Training Series GitHub).

PMPP and Other Learning Resources: The "Programming Massively Parallel Processors" (PMPP) book was noted for not extensively covering profiling tools, with ancillary content available through associated YouTube videos. Additional CUDA coursework concerns were addressed, including questions about spacing in CUDA C++ syntax and exercise solutions for the PMPP 2023 edition.

Ring Attention Troubleshooting and Coordination: A user offered GPU availability for stress testing ring attention code and coordinated meeting times aligned with US daylight saving changes, while seeking advice after encountering high training loss. WANDB was used as an evaluation tool for training sessions.

Off-topic Rumors and AI Developments: Speculative discussions about Inflection AI and Claude-3 led to clarification via a debunking tweet. A cryptic image sparked curiosity, and attention was drawn to a new AI software engineer named Devin, developed by Cognition Labs, which promises new benchmarks in software engineering, with a real-world test publicized by @itsandrewgao (Andrew Kean Gao's Tweet).

LangChain AI Discord Summary

- Effective Prompt Crafting Resolves Langchain Issues:

@a.asifresolved an issue by creating an appropriate prompt, highlighting the importance of context in achieving the desired response.

- Progress with Langchain and Claude Integration: The Chat Playground now includes Claude V3 model support as per a pull request by

@dwb7737, marking a significant update for developers utilizing Langchain.

- Building Chatbots with Enhanced Retrieval Abilities:

@haste171shared an open-source AI chatbot repository that leverages RAG for Q/A querying, while@ninamanisought advice on switching tochatmode in their chatbot development with new LLMs, specifically a finetuned version of llama-2.

- Learning Resources for Langchain Users: New tutorials, such as "Chatbot with RAG" and "INSANELY Fast AI Cold Call Agent- built w/ Groq" were shared on YouTube by

@infoslackand@jasonzhou1993, providing practical guidance for building AI applications with Langchain and the Groq platform.

- Community Collaboration and Support for Langchain Implementation: Discussions include seeking Langchain to LCEL conversion guidance, resolving issues with iFlytek's Spark API, and optimizing LangServe usage as the community provides support and solutions for each other's technical challenges.

OpenAccess AI Collective (axolotl) Discord Summary

- Fix on Flash Attention for RTX Series:

[flash_attention: false]and[sdp_attention: true]in the YAML configuration were recommended for an issue with disabling flash attention on RTX 3090 or 4000 series GPUs.

- Cohere's Big Surprise with 'C4AI Command-R': Cohere released an open-source 35 billion parameter model called "C4AI Command-R," now available on Hugging Face.

- DoRA Gains Support in PEFT PR Merge: Hugging Face merged a PR adding DoRA support for 4bit and 8bit quantized models, though it's mentioned that DoRA supports only linear layers for now with a caveat for merging weights during inference.

- Optimizing AI Training with NVMe SSDs: A strategy involving NVMe SSDs for efficient fine-tuning of 100B parameter models on single GPUs was discussed, citing a tweet by AK.

- Advanced Axolotl and DeepSpeed Discussions: Questions regarding DeepSpeed's API in Axolotl were raised with a DeepSpeed PR under review, and a recent fix in Axolotl that might solve poor evaluation results with Mixtral training.

OpenRouter (Alex Atallah) Discord Summary

- In Search of a ChatGPT 3.5 Substitute:

@mka79requested alternatives to ChatGPT 3.5 for office use, emphasizing the need for privacy, reduced censorship, and exclusion of user data in training. - Gemma's New Contender: The new Openchat model based on Gemma has been promoted by

@louisdck, claiming performance on par with Mistral models and an edge over other Gemma models. - Hermes Has Left the Building: Access issues with the Nous Hermes 70B model were confirmed, and

@louisgvindicated the model will be offline indefinitely with a planned update to prevent access during this downtime. - Openchat and Gemma Users Hit Timeout: Due to abuse, free models including Openchat and Gemma are temporarily disabled for users without credits, with promises from

@alexatallahto work on restoring access. - Cheat Layer Spearheads New Free Autoresponding Feature:

@gpumanhighlighted Cheat Layer's new free autoresponding service on websites, leveraging OpenRouter, and urged users to report any issues to their support team; discussions about open-sourcing Open Agent Studio and integrating OpenRouter were also mentioned.

DiscoResearch Discord Summary

- Open-Source Throws Down the Gauntlet to GPT-4: A humorous prediction within the community anticipates open-source models potentially surpassing GPT-4, sparking interest in setting up a comprehensive benchmark evaluation. Meanwhile,

@.calytrixhas signaled an intention to compare various models to GPT-4 under a rigorously controlled testing environment.

- FastEval on Steroids: The community discussed enhancing FastEval with flexible backends, such as Llama.cpp or Ollama, following an instance where FastEval was modified to expand its usability beyond its original scope.

- RAG tag, You're It: Members debated the optimal placement of context and RAG instructions for prompt engineering, with opinions differing based on Specialized Fine-Tuning (SFT) experiences and whether the model had exposure to [SYS] and [USER] tags during training.

- New Tools to Dissect Transformer Brains: A new tool for getting under the hood of transformers was spotlighted: the Transformer Debugger, announced by

@janleike, promises to offer automated interpretability and a way to explore model internals without the need to code.

- Beware of Non-Standard Model Ecosystems: The community discussed issues with non-English text generation in the

DiscoResearch/mixtral-7b-8expertmodel, with a recommendation to use the official implementation at Hugging Face's Mixtral-8x7B-v0.1 for better reliability. Additionally, a need for clearer labeling of experimental models likeDiscoResearch/mixtral-7b-8expertwas recognized.

- tinyMMLU Big Potential in Small Package: Interest was expressed in tinyMMLU benchmarks on Hugging Face, suggesting an efficient means to run translations while exploring benchmark utility.

- Hellaswag Benchmark Reveals the Power of Patience: The observation was made that the Hellaswag benchmark shows significant noise, with score fluctuations evident even after 1000 data points, highlighting the need for extensive testing to achieve stable and meaningful results.

Alignment Lab AI Discord Summary

- Seeking Efficient Minimodels: User

@joshxtinquired about the best small embedding model with 1024+ max input for local use with low RAM, but no further discussion followed.

- Diving into Mermaid for Diagrams:

@tekniumqueried about Mermaid graphs and@lightningralfresponded with explanations and resources including the Mermaid live editor and the GitHub repository.@joshxtshowcased Mermaid's capabilities with a complex system example and its utility on GitHub for generating visualizations from code.

- Under the Code Avalanche:

@autometahumorously lamented the pile-up of coding tasks and then proceeded to take action by working on a Docker environment for the team. Offering a $100 bounty for Docker setup assistance and an open call for collaboration on open science/research projects, they also delegate tasks, including Docker responsibilities, to@alpinfor further progress.

LLM Perf Enthusiasts AI Discord Summary

- Grok Going Public:

@elonmusktweeted about Grok being open-sourced by@xAIthis week, enticing discussions around potential uses and benefits within the open source community. Elon Musk's tweet has spurred anticipation amongst the users.

- Command-R Query:

@potrockis seeking insights on local implementation of Command-R, encouraging other users to share their experiments and results with the new tool.

- The Token Limit Conundrum:

@alyosha11brought up challenges with the 4096 token limit in gpt4turbo, prompting discussions on workarounds and expectations for future model improvements.

- Unraveling the GPT-4.5 Turbo Mystery:

@arnau3304initiated a debate with a Bing search link indicating the rumor of a GPT-4.5 Turbo; however,@dare.aiand others showed skepticism, noting the uncertainty without official confirmation.

- Azure Migration Curiosity: User

@pantsforbirdsexpressed interest in the experiences of migrating from OpenAI's SDK to Azure's platform, calling for advice on potential hurdles and tips, demonstrating a common interest in cloud AI service platforms.

Skunkworks AI Discord Summary

- Quantum Speedup in AI Training Unveiled: @baptistelqt has achieved a 100,000-fold acceleration in training convergence by employing an innovative method of training AI models from scratch in every round.

- Game Coding with Claude 3: @pradeep1148 shared a YouTube video illustrating the development of a game based on Plants Vs Zombies using Claude 3 and Python.

- Diving Deep with Command-R's RAG: A YouTube exploration into Command-R's capabilities in handling long-context tasks via retrieval augmented generation and external APIs has also been presented by @pradeep1148.

AI Engineer Foundation Discord Summary

- Plugin Pow-Wow Pending: A discussion spearheaded by

@hackgooferwas initiated on the configurability of plugins, specifically the viability of token-based authorization as a plugin argument. Concerns were raised about whether this security measure is sufficient.

- Casting Call for Project Proposals: Members have been invited to propose new projects, with the scope and guidelines provided in a Google Docs guide. Opportunities for collaboration, including one with Microsoft, were highlighted.

PART 2: Detailed by-Channel summaries and links

Unsloth AI (Daniel Han) ▷ #general (237 messages🔥🔥):

- Inquiries about Using Fine-Tuned Models:

@animesh.adsought help for testing a fine-tuned Gemma-2 model uploaded to the hub without using a GPU, only to get advice on using "normal HF code" from@starsupernova. After some struggle,@animesh.adclarified that the model was inaccessible due to a wrong path but was advised by@starsupernovato retry the process since the names of the essential files might be incorrect. - Kaggle Notebook Troubles and Success:

@simon_vtrshared issues with running inference on Kaggle without internet connectivity. Despite hurdles like dependencies not being installed (bitsandbytes,xformers),@simon_vtreventually reported success with running Unsloth models offline. - Unsloth Features and Future Development:

@theyruinedeliseand@starsupernovahighlighted Unsloth's specificity in bug fixes over Google and Hugging Face implementations, discussing limitations and features of the models.@theyruinedeliseteased the upcoming Unsloth Studio for one-click finetuning. - RoPE Kernel Optimization Suggestion:

@drinking_coffeeproposed an improvement to the RoPE kernel by optimizing computations along axis 1 using a group approach.@starsupernovashowed interest in the suggestion and encouraged a pull request for incorporating the enhancement. - New Users & Community Engagement: The channel welcomed new members like

@remybigbossdirected from sources like Twitter posts by Micode and Yannic.@theyruinedeliseappreciated the visibility boost and encouraged GitHub stars.

Links mentioned:

- Google Colaboratory: no description found

- Kaggle Mistral 7b Unsloth notebook: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- Kaggle Mistral 7b Unsloth notebook Error: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- Kaggle Mistral 7b Unsloth notebook Error: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- CohereForAI/c4ai-command-r-v01 · Hugging Face: no description found

- danielhanchen (Daniel Han-Chen): no description found

- Paper page - Adding NVMe SSDs to Enable and Accelerate 100B Model Fine-tuning on a Single GPU: no description found

- GitHub - stanford-crfm/helm: Holistic Evaluation of Language Models (HELM), a framework to increase the transparency of language models (https://arxiv.org/abs/2211.09110). This framework is also used to evaluate text-to-image models in Holistic Evaluation of Text-to-Image Models (HEIM) (https://arxiv.org/abs/2311.04287).: Holistic Evaluation of Language Models (HELM), a framework to increase the transparency of language models (https://arxiv.org/abs/2211.09110). This framework is also used to evaluate text-to-image ...

- GitHub - unslothai/unsloth: 5X faster 60% less memory QLoRA finetuning: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

- GitHub - unslothai/unsloth: 5X faster 60% less memory QLoRA finetuning: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #welcome (12 messages🔥):

- Warm Welcomes & Important Reads:

@theyruinedelisegreeted the group warmly and reminded everyone to read channel <#1179040220717522974> and to assign their roles in <#1179050286980006030>. - Game Talk in the Welcome Channel:

@emma039598inquired if any group members play games, with a positive response from@theyruinedelisementioning favorites like League of Legends, Elden Ring, and Soma. - Greeting Newcomers:

@starsupernovajoined in to welcome newcomers to the server. - Casual Gaming Chat:

@emma039598stated a preference for RPGs when asked by@theyruinedeliseabout gaming preferences. - Expressions of Welcome and Joy:

@theyruinedeliseexpressed a simple "win" in the chat, and@chelmoozgreeted everyone with a friendly "coucou".

Unsloth AI (Daniel Han) ▷ #random (9 messages🔥):

- Introducing ELLA for Improved Text-to-Image Diffusion Models:

@tohrniishared an arxiv paper focusing on the Efficient Large Language Model Adapter (ELLA) which aims to enhance text-to-image diffusion models' comprehension of complex prompts without the need for retraining. - Windows vs Linux for AI Development:

@maxtensorand@starsupernovadiscussed their development environments with@starsupernovamainly working on Colab and Linux, and mentioning a lack of GPU on their Windows machine. - Dependency Hell Strikes:

@maxtensordetailed a frustrating dependency conflict in an Ubuntu WSL environment, where attempts to integrate new AI tools led to incompatible package versions, notably with torch, torchaudio, and torchvision. - Searching for Solutions in Software Dependencies:

@starsupernovasuggested trying to install xformers with a specific PyTorch wheel index to potentially resolve dependency issues that@maxtensorencountered. - The Grind of AI Model Training:

@thetsar1209expressed their distress with an emoji over the lengthy model training process as indicated by the progress output "20/14365 [07:23<74:37:53, 18.73s/it]".

Links mentioned:

- ELLA: Equip Diffusion Models with LLM for Enhanced Semantic Alignment: Diffusion models have demonstrated remarkable performance in the domain of text-to-image generation. However, most widely used models still employ CLIP as their text encoder, which constrains their ab...

- no title found: no description found

Unsloth AI (Daniel Han) ▷ #help (272 messages🔥🔥):

- Gemma Model Conversion Saga:

@dahara1discovered that converting the Gemma model to gguf format requiresconvert-hf-to-gguf.pyinstead ofconvert.py, sharing a crucial GitHub pull request for reference. They also found a potential bug with Unsloth's handling of Gemma after a certain update, noting that a model created with Unsloth doesn't infer correctly on a local PC but works post-upload to Hugging Face.

- Quantization Quirks Uncovered: Users

@banu1337and@starsupernovadebated the difficulties of quantizing models, with@banu1337specifically struggling to quantize a Mixtral model even with significant GPU resources.@starsupernovarecommended GGUF as an alternative, noting its support in Unsloth, and suggested 2x A100 80GB GPUs should suffice for the task.

- Learning from LLMs:

@abhiabhi.engaged in a philosophical conversation with@starsupernova, touching upon learning rates, warmup steps, and the nature of intelligence arising from deterministic machines.

- Unsloth on Windows Woes:

@ee.ddfaced challenges installing xformers via Conda on Windows and was advised by@starsupernovato try apipinstallation instead. After further troubles,@starsupernovarecommended using WSL for a smoother experience.

- Model Loading Mysteries:

@aliissasought assistance with problems using NousResearch/Nous-Hermes-2-Mistral-7B-DPO without fine-tuning, as they only received<unk>values in response.@starsupernovasuggested it might be due to padding and the absence of the appropriate chat template.

Links mentioned:

- Repetition Improves Language Model Embeddings: Recent approaches to improving the extraction of text embeddings from autoregressive large language models (LLMs) have largely focused on improvements to data, backbone pretrained language models, or ...

- no title found: no description found

- Gemma models do not work when converted to gguf format after training · Issue #213 · unslothai/unsloth: When Gemma is converted to gguf format after training, it does not work in software that uses llama cpp, such as lm studio. llama_model_load: error loading model: create_tensor: tensor 'output.wei...

- KeyError: lm_head.weight in GemmaForCausalLM.load_weights when loading finetuned Gemma 2B · Issue #3323 · vllm-project/vllm: Hello, I finetuned Gemma 2B with Unsloth. It uses LoRA and merges the weights back into the base model. When I try to load this model, it gives me the following error: ... File "/home/ubuntu/proj...

- VLLM Multi-Lora with embed_tokens and lm_head in adapter weights · Issue #2816 · vllm-project/vllm: Hi there! I've encountered an issue with the adatpter_model.safetensors in my project, and I'm seeking guidance on how to handle lm_head and embed_tokens within the specified modules. Here'...

- GitHub - unslothai/unsloth: 5X faster 60% less memory QLoRA finetuning: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

- Tutorial: How to convert HuggingFace model to GGUF format · ggerganov/llama.cpp · Discussion #2948: Source: https://www.substratus.ai/blog/converting-hf-model-gguf-model/ I published this on our blog but though others here might benefit as well, so sharing the raw blog here on Github too. Hope it...

- py : add Gemma conversion from HF models by ggerganov · Pull Request #5647 · ggerganov/llama.cpp: # gemma-2b python3 convert-hf-to-gguf.py ~/Data/huggingface/gemma-2b/ --outfile models/gemma-2b/ggml-model-f16.gguf --outtype f16 # gemma-7b python3 convert-hf-to-gguf.py ~/Data/huggingface/gemma-...

- unsloth/unsloth/save.py at main · unslothai/unsloth: 5X faster 60% less memory QLoRA finetuning. Contribute to unslothai/unsloth development by creating an account on GitHub.

Unsloth AI (Daniel Han) ▷ #showcase (10 messages🔥):

- Unsloth Doubles Fine-Tuning Speed:

@lee0099fine-tunedyam-peleg/Experiment26-7Busing Unsloth-DPO, achieving a 2x speedup and 40% memory usage reduction for LLM fine-tuning without accuracy loss when compared to normal QLoRA. - Experiment26 Goes Public: In the showcase,

@lee0099introducedyam-peleg/Experiment26-7B, an experimental model hosted on Hugging Face with a focus on refining LLM pipeline research and identifying potential optimizations. - Community Support for Experiment26:

@starsupernovaexpressed excitement about@lee0099's fine-tuning advancements with the phrase, "Very very cool!" indicating strong community endorsement. - Suggestion to Display Fine-Tuned Models:

@starsupernovainvited@1053090245052219443to showcase their fine-tuned Gemma model in the channel. - Gemma Model Performance Showcase:

@kuke4367shared a Kaggle link demonstrating fast inference with the fine-tuned Gemma model and provided its URL on Hugging Face,Kukedlc/NeuralGemmaCode-2b-unsloth.

Links mentioned:

- yam-peleg/Experiment26-7B · Hugging Face: no description found

- Gemma CoPilot 2x-Fast-Inference: Explore and run machine learning code with Kaggle Notebooks | Using data from No attached data sources

- NeuralNovel/Unsloth-DPO · Datasets at Hugging Face: no description found

Unsloth AI (Daniel Han) ▷ #suggestions (4 messages):

- Approval for Ye Galore:

@starsupernovaexpressed their satisfaction with Ye Galore, mentioning it's good with a smiley. - Joking on Ease of Implementation:

@remek1972humorously commented on the ease of implementing something, tagging<@160322114274983936>followed by a laughing emoji. - Promoting GEAR Project:

@remek1972shared a GitHub link to the GEAR project (GitHub - opengear-project/GEAR), hinting at its efficiency in KV cache compression for near-lossless generative inference of large language models. - Novel Approach for Fine-tuning Huge Models:

@iron_boundshared an arXiv paper (Adding NVMe SSDs to Enable and Accelerate 100B Model Fine-tuning on a Single GPU), discussing the possibility of fine-tuning enormous models using NVMe SSDs on a single, even low-end, GPU.

Links mentioned:

- Adding NVMe SSDs to Enable and Accelerate 100B Model Fine-tuning on a Single GPU: Recent advances in large language models have brought immense value to the world, with their superior capabilities stemming from the massive number of parameters they utilize. However, even the GPUs w...

- GitHub - opengear-project/GEAR: GEAR: An Efficient KV Cache Compression Recipefor Near-Lossless Generative Inference of LLM: GEAR: An Efficient KV Cache Compression Recipefor Near-Lossless Generative Inference of LLM - opengear-project/GEAR

Perplexity AI ▷ #general (424 messages🔥🔥🔥):

- Claude 3 Opus Offers Limited: Users like

@thugbunny.discussed the limited use of Claude 3 Opus on Perplexity Pro, with clarifications provided by@icelavamanthat Pro includes 600 uses of other LLMs like Claude Sonett but only 5 are for Opus.

- Perplexity AI Adoption Discussions: Members like

@makya2148and@jawnzeshared observations and speculations about Perplexity’s competitiveness and business moves like making the Pro version ad-based, referencing tweets from Perplexity's CEO relating to competitor's pricing models.

- Job and Internship Enthusiasts: User

@parvjreached out to offer help and express a keen interest in working with Perplexity.@ok.alexresponded by indicating to check the careers page and advised against tagging the team directly in such requests.

- Comparisons and Confusions About LLMs: Several users, including

@codeliciousand@talyzman, discussed whether Perplexity uses external models like Gemini or has its own, with some confusion raised about the responses being similar to Gemini's API.

- Pro User Experience Queries: Pro users like

@halilsakand@0xhanyainquired about issues around using logo designs and uploading pdf scripts for queries, seeking guidance on how to leverage Perplexity effectively for their specific use cases.

Links mentioned:

- Tweet from Aravind Srinivas (@AravSrinivas): Will make Perplexity Pro free, if Mikhail makes Microsoft Copilot free ↘️ Quoting Ded (@dened21) @AravSrinivas @MParakhin We want perplexity pro for free (monetize with highly personalized ads)

- CEO says he tried to hire an AI researcher from Meta, and was told to 'come back to me when you have 10,000 H100 GPUs': The CEO of an AI startup said he wasn't able to hire a Meta researcher because it didn't have enough GPUs.

- More than an OpenAI Wrapper: Perplexity Pivots to Open Source: Perplexity CEO Aravind Srinivas is a big Larry Page fan. However, he thinks he's found a way to compete not only with Google search, but with OpenAI's GPT too.

- Perplexity AI CEO Shares How Google Retained An Employee He Wanted To Hire: Aravind Srinivas, the CEO of search engine Perplexity AI, recently shared an interesting incident that sheds light on how big tech companies are ready to shell a great amount of money to retain talent...

- Stonks Chart GIF - Stonks Chart Stocks - Discover & Share GIFs: Click to view the GIF

- I Believe In People Sundar Pichai GIF - I Believe In People Sundar Pichai Youtube - Discover & Share GIFs: Click to view the GIF

- Tweet from Elon Musk (@elonmusk): This week, @xAI will open source Grok

- U.S. Must Act Quickly to Avoid Risks From AI, Report Says : The U.S. government must move “decisively” to avert an “extinction-level threat" to humanity from AI, says a government-commissioned report

- Reddit - Dive into anything: no description found

- Reddit - Dive into anything: no description found

Perplexity AI ▷ #sharing (17 messages🔥):

- Discoveries via AI:

@0xhanyashared a Perplexity AI link about AI discoveries, prompting@ok.alexto remind users to ensure their threads are shareable. - Space Junk's Comeback:

@mayersj1posted a link dealing with the topic of space junk returning to Earth. - CSS Writing Insights:

@tymscarshared a resource for writing CSS, a common topic of interest amongst web developers. - Research on Fruits:

@yipengsunprovided a link Are strawberries fruits based on research into the classification of strawberries. - Sharing Enabled:

@ed323161posted a link about improving a certain topic, and@po.shfollowed up with a reminder to make sure the thread is shared for visibility.

Perplexity AI ▷ #pplx-api (9 messages🔥):

- Seeking Assistants to Boost Personal Assistant Project: User

@shine0252is working on a personal assistant project similar to Alexa and is looking for help to improve pplx API's responses for conciseness and memory of past conversations. - Conciseness with

sonarModels Suggested:@dogemeat_recommended using thesonarmodels from pplx API for more concise responses, and storing conversation history in memory or a database to enable the assistant to "remember" past interactions. - Interest in the Personal Assistant Endeavor: Both

@roey.zaltaand@brknclock1215showed interest in@shine0252's personal assistant project, with@roey.zaltaasking for more details. - Prompting over API Alone for Memory:

@brknclock1215indicated that while prompting and parameter adjustments like max_tokens and temperature can help with conciseness, remembering conversations would require external data storage, not offered solely by the pplx API. - Requesting Specific Model Features and Prompt Guidance: User

@5008802inquired if pplx API can reply with sources from the web, and@paul16307asked if it's possible to add Yarn-Mistral-7b-128k for handling high-context conversations.

Nous Research AI ▷ #off-topic (24 messages🔥):

- Rapid Convergence Method Unveiled:

@baptistelqtannounced they have developed a method that accelerates convergence of neural networks by 100,000x and it works for all architectures, including Transformers. The technique involves starting each "round" of training from scratch and a promise to publish the paper soon. - Command-R Model Introduction:

@1vnzhshared a Model Card for C4AI Command-R, a 35 billion parameter model optimized for tasks including reasoning, summarization, and question answering.@everyoneisgrossprovided a link to a GitHub demo showing how to make RAG calls with Command-R using a simplified search method. - Telestrations with AI Possibility:

@denovichdescribed the game Telestrations, noting its potential synergy with a multi-modal LLM to facilitate play with fewer than the required four players, transforming it into a fun AI-powered experience. - Newsletter Highlights AI Conversations:

@quicksortlinked to the AI News newsletter which provides summaries of AI-related discussions from social platforms, mentioning that it covers 356 Twitter accounts and 21 Discord channels. While@quicksortand@denovichfound it valuable,@ee.ddand@hexaniexpressed concerns about the privacy implications of scraping Discord chats. - YouTube Video Outlines Game Development and RAG with LLM:

@pradeep1148posted two YouTube links demonstrating projects with large language models: one showcasing the creation of a Plants Vs Zombies game using Claude 3 and another explaining the workings of Command-R for retrieval augmented generation (RAG) applications.

Links mentioned:

- CohereForAI/c4ai-command-r-v01 · Hugging Face: no description found

- Command-R: RAG at Production Scale: Command-R is a scalable generative model targeting RAG and Tool Use to enable production-scale AI for enterprise.

- Lets RAG with Command-R: Command-R is a generative model optimized for long context tasks such as retrieval augmented generation (RAG) and using external APIs and tools. It is design...

- Claude 3 made Plants Vs Zombies Game: Will take a look at how to develop plants vs zombies using Claude 3#python #pythonprogramming #game #gamedev #gamedevelopment #llm #claude

- scratchTHOUGHTS/commanDUH.py at main · EveryOneIsGross/scratchTHOUGHTS: 2nd brain scratchmemory to avoid overrun errors with self. - EveryOneIsGross/scratchTHOUGHTS

- [AINews] Fixing Gemma: AI News for 3/7/2024-3/11/2024. We checked 356 Twitters and 21 Discords (335 channels, and 6154 messages) for you. Estimated reading time saved (at 200wpm):...

Nous Research AI ▷ #interesting-links (6 messages):

- Cosine Similarity Under Scrutiny:

@leontelloshared an arXiv link discussing the reliability of cosine similarity, revealing that it can produce arbitrary and potentially meaningless results depending on the regularization of linear models.

- Exposing AI Model Embeddings:

@denovichlinked to an arXiv paper describing a new model-stealing attack that extracts the embedding projection layer from black-box models like OpenAI’s ChatGPT, achieving this for under $20 USD.

- Devin: AI That Passes Engineering Interviews:

@atgctghighlighted a Twitter post from Cognition Labs introducing Devin, an AI software engineer that scores remarkably high on a coding benchmark, outperforming other models and even completing actual engineering tasks.

- Cohere’s Command-R Model Revealed:

@benxhpresented the C4AI Command-R model from Cohere, a 35 billion parameter model noted for its performance in reasoning, summarization, multilingual generation, and more.

- Chloe's Twit on New Advancements:

@atgctglinked a Twitter post by @itschloebubble without context provided, thus the content of the advancement cannot be summarized.

Links mentioned:

- Tweet from Cognition (@cognition_labs): Today we're excited to introduce Devin, the first AI software engineer. Devin is the new state-of-the-art on the SWE-Bench coding benchmark, has successfully passed practical engineering intervie...

- Is Cosine-Similarity of Embeddings Really About Similarity?: Cosine-similarity is the cosine of the angle between two vectors, or equivalently the dot product between their normalizations. A popular application is to quantify semantic similarity between high-di...

- CohereForAI/c4ai-command-r-v01 · Hugging Face: no description found

Nous Research AI ▷ #general (267 messages🔥🔥):

- Zuckerberg's AI Hindsight: ldj shared a humorous video reflecting on Mark Zuckerberg’s past thoughts on AI, with an ironic twist given the rapid advancements since then.

- Musk's Musing on AI Risks: teknium linked to an Elon Musk tweet emphasizing the potential dangers of AI, adding to longstanding debates on the topic.

- AI Release Predictions in the Community: mautonomy speculated a 30% chance of GPT-5 releasing in 56 hours, though @ee.dd countered, predicting no release until after U.S. elections, with @thepok and @night_w0lf discussing whether current models like GPT-4 would be surpassed by other AI entities.

- Under-the-Radar AI Models: @night_w0lf pointed out a relatively unnoticed Deepseek-VL model, suggesting it could disrupt the current AI landscape – details found in the Deepseek-VL paper.

- Function Calling LLM Anticipation: teknium hinted at the imminent release of a new 7B function-calling language model from their end, boosting anticipation in the AI community.

Links mentioned:

- Errors in the MMLU: The Deep Learning Benchmark is Wrong Surprisingly Often: Datasets used to asses the quality of large language models have errors. How big a deal is this?

- CohereForAI/c4ai-command-r-v01 · Hugging Face: no description found

- Tweet from interstellarninja (@intrstllrninja): recursive function-calling LLM dropping to your local GPU very soon...

- U.S. Must Act Quickly to Avoid Risks From AI, Report Says : The U.S. government must move “decisively” to avert an “extinction-level threat" to humanity from AI, says a government-commissioned report

- Free Me Nope GIF - Free Me Nope Cat Stuck - Discover & Share GIFs: Click to view the GIF

- no title found: no description found

- Gemma optimizations for finetuning and infernece · Issue #29616 · huggingface/transformers: System Info Latest transformers version, most platforms. Who can help? @ArthurZucker and @younesbelkada Information The official example scripts My own modified scripts Tasks An officially supporte...

- GitHub - openai/transformer-debugger: Contribute to openai/transformer-debugger development by creating an account on GitHub.

- Gemma bug fixes - Approx GELU, Layernorms, Sqrt(hd) by danielhanchen · Pull Request #29402 · huggingface/transformers: Just a few more Gemma fixes :) Currently checking for more as well! Related PR: #29285, which showed RoPE must be done in float32 and not float16, causing positional encodings to lose accuracy. @Ar...

Nous Research AI ▷ #ask-about-llms (120 messages🔥🔥):

- Tokenizer Replacement Debate:

@stoicbatmaninquired about replacing a model's tokenizer post-training to better handle specific languages like Tamil.@tekniumresponded that adding tokens is possible, but replacing the tokenizer would essentially make prior learning useless according to@stefangliga. Discussions orbited around dual tokenization support and maintaining a mapping of the old tokenizer. - Function Calling with XML and Constrained Decoding: A discussion led by

@kramakekand@.interstellarninjaexplored using XML tags in LLMs, constrained decoding techniques, and the accuracy of function calls by fine-tuned models.@ufghfigchvhighlighted their tool that samples logits for valid JSON output but noted it does not yet support parallel function calls. - Hosting Models and Output Structuring:

@sundar_99385asked about tools for guiding open source LLM outputs, comparing various libraries like Outlines and SG-lang for model hosting and guidance.@.interstellarninjasuggested that llama-cpp offers grammar support, while@tekniumand@ufghfigchvdiscussed precompiling and caching function generation for efficiency. - Discussions on Model Licensing and Usability:

@thinwhiteduke8458questioned the commercial usability of models like Nous Hermes 2 given its mix of Apache 2 and MIT licenses, with@tekniumstating no legal issues would come from their end in terms of usage. - On Fine-Tuning Model Size and System Requirements:

@xela_akwasought advice on memory requirements for fine-tuning jobs, facing out-of-memory issues despite working with dual 40GB A100 GPUs.@ee.ddrecommended trying unsloth for better efficiency, while@tekniumsuggested qlora, which requires less VRAM, and reminded that PPO requires the full model to be loaded twice.

Links mentioned:

- Use XML tags: no description found

- GitHub - open-webui/open-webui: User-friendly WebUI for LLMs (Formerly Ollama WebUI): User-friendly WebUI for LLMs (Formerly Ollama WebUI) - open-webui/open-webui

- GitHub - enricoros/big-AGI: 💬 Personal AI application powered by GPT-4 and beyond, with AI personas, AGI functions, text-to-image, voice, response streaming, code highlighting and execution, PDF import, presets for developers, much more. Deploy and gift #big-AGI-energy! Using Next.js, React, Joy.: 💬 Personal AI application powered by GPT-4 and beyond, with AI personas, AGI functions, text-to-image, voice, response streaming, code highlighting and execution, PDF import, presets for developers.....

Nous Research AI ▷ #collective-cognition (3 messages):

- Flash Attention Query Redirected: User

@pradeep1148asked about how to disable flash attention feature.@tekniumredirected the question, noting that this channel is archived and advising to ask in <#1154120232051408927>.

LM Studio ▷ #💬-general (207 messages🔥🔥):

- Mac M1 Pro GPU Woes:

@maorwereported issues with LM Studio opting to use CPUs instead of GPU acceleration on a Mac M1 Pro. Other users, like@amir0717, faced similar problems with different setups, asking for model recommendations for a GTX 1665 Ti with 16 GB of RAM.

- DeepSeek Model Compatibility:

@amir0717detailed errors when running certain models, particularly DeepSeek, and@_nahfam_suggested that "1.3B not 7B" models would work better, also proposingdeepseek-coder-1.3b-instruct-GGUF.

- Exploring GPU Configuration for LM Studio:

@purplemelbourneexperienced difficulties with LM Studio recognizing dual GPUs as a single unit with combined VRAM, and@heyitsyorkieoffered advice regarding the utilization of the tensor split feature.

- Commitment to LM Studio: In a series of posts, users including

@yagilb,@donnius, and@rexehdiscussed the pace of development for LM Studio, with developers acknowledging delays and promising new updates, citing a small team and ongoing efforts.

- User-Created Guides and APIs for LLM:

@ninjasnakeyesinquired about the differences between using LM Studio and llamacpp, leading to a discussion about API connectivity for custom programs with@rjkmelband@nink1providing clarification. Further,@tvb1199shared a comprehensive Local LLM User Guide they've compiled.

Links mentioned:

- Rivet: An open-source AI programming environment using a visual, node-based graph editor

- deepseek-ai/deepseek-vl-7b-chat · Discussions: no description found

- Pc Exploding GIF - Pc Exploding Minecraft - Discover & Share GIFs: Click to view the GIF

- deepse (DeepSE): no description found

- I Have The Power GIF - He Man I Have The Power Sword - Discover & Share GIFs: Click to view the GIF

- Ugh Nvm GIF - Ugh Nvm Sulk - Discover & Share GIFs: Click to view the GIF

- The Muppet Show Headless Man GIF - The Muppet Show Headless Man Scooter - Discover & Share GIFs: Click to view the GIF

- Reddit - Dive into anything: no description found

- Reddit - Dive into anything: no description found

- Reddit - Dive into anything: no description found

- The unofficial LMStudio FAQ!: Welcome to the unofficial LMStudio FAQ. Here you will find answers to the most commonly asked questions that we get on the LMStudio Discord. (This FAQ is community managed). LMStudio is a free closed...

- Rivet: How To Use Local LLMs & ChatGPT At The Same Time (LM Studio tutorial): This tutorial explains how to connect LM Studio with Rivet to use local models running on your own pc (e.g. Mistral 7B), but also how you are able to still u...

- GitHub - xue160709/Local-LLM-User-Guideline: Contribute to xue160709/Local-LLM-User-Guideline development by creating an account on GitHub.

- A Complete Guide to LangChain in JavaScript — SitePoint: Learn about the essential components of LangChain — agents, models, chunks, chains — and how to harness the power of LangChain in JavaScript.

LM Studio ▷ #🤖-models-discussion-chat (96 messages🔥🔥):

- LLM Storytelling Enhancement Discussion: User

@bigboimarkusinquired about a language model adept at improving or expanding stories. No specific recommendations were given in the discussion. - Model Choice for Coding in C++:

@amir0717sought advice for the best model to handle around 200 lines of C++ code with a GTX 1665 Ti and 16 GB of RAM and stated that the current model they're using, stablelm zephyr 1.5 GB, is generating incomplete code. - Innovative Ternary Computing:

@purplemelbourneand@aswarpengaged in a detailed discussion on the potential revolution in computing with the shift from binary to ternary systems, accompanied by scholarly references to research papers and implementation strategies. - Update Anticipation for LLM Studio:

@rexehlooked for alternative recommendations to Starcoder 2 for use with lm studio on rocm, particularly for Python, while@heyitsyorkiementioned that support for Starcoder 2 is expected in the next update of LM Studio. - Exploring Stock Price Prediction AI:

@christianazinndiscussed the idea of using a locally hosted LLM for stock price prediction, weighing in on the performance differences between large context length models versus shorter ones with auxiliary systems like MemGPT. Conversation also touched on the potential for local models to access the internet for updated real-time data.

Links mentioned:

- Render mathematical expressions in Markdown: Render mathematical expressions in Markdown

- Calculation Math GIF - Calculation Math Hangover - Discover & Share GIFs: Click to view the GIF

- Im Waiting Daffy Duck GIF - Im Waiting Daffy Duck Impatient - Discover & Share GIFs: Click to view the GIF

- How to safely render Markdown using react-markdown - LogRocket Blog: Learn how to safely render Markdown syntax to the appropriate HTML with this short react-markdown tutorial.

- GitHub - deepseek-ai/DeepSeek-VL: DeepSeek-VL: Towards Real-World Vision-Language Understanding: DeepSeek-VL: Towards Real-World Vision-Language Understanding - deepseek-ai/DeepSeek-VL

- Feast Your Eyes: Mixture-of-Resolution Adaptation for Multimodal Large Language Models: no description found