[AINews] The DSPy Roadmap

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Systematic AI engineering is all you need.

AI News for 8/16/2024-8/19/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (254 channels, and 4515 messages) for you. Estimated reading time saved (at 200wpm): 489 minutes. You can now tag @smol_ai for AINews discussions!

Omar Khattab announced today that he will join Databricks for a year before starting his MIT professorship, but more importantly he set the stage for DSPy 2.5 and 3.0+:

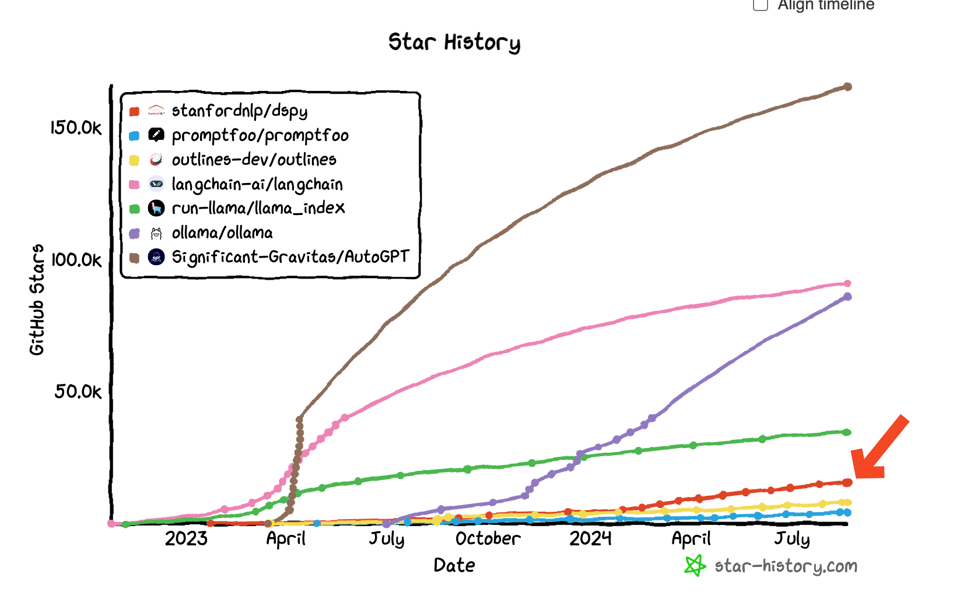

DSPy has objectively been a successful framework for declarative self-improving LLM pipelines, following the 2022 DSP paper and 2023 DSPy paper.

The main roadmap directions:

- Polish the 4 pieces of DSPy core: (1) LMs, (2) Signatures & Modules, (3) Optimizers, and (4) Assertions, so that they "just work" out of the box zero shot, off-the-shelf.

- In LMs they aim to reduce lines of code. In particular they call out that they will eliminate 6k LOC by adopting LiteLLM. However they will add functionality for "improved caching, saving/loading of LMs, support for streaming and async LM requests".

- In Signatures they are evolving the concept of "structured inputs" now that "structured outputs" are mainstream.

- In Finetuning: they aim to "bootstrap training data for several different modules in a program, train multiple models and handle model selection, and then load and plug in those models into the program's modules"

- Developing more accurate, lower-cost optimizers. Following the BootstrapFewShot -> BootstrapFinetune -> CA-OPRO -> MIPRO -> MIPROv2 and BetterTogether optimizers, more work will be done improving Quality, Cost, and Robustness.

- Building end-to-end tutorials. More docs!

- Shifting towards more interactive optimization & tracking. Help users "to observe in real time the process of optimization (e.g., scores, stack traces, successful & failed traces, and candidate prompts)."

Nothing mindblowing, but a great roadmap update from a very well managed open source framework.

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI and Robotics Developments

- Google's Gemini Updates: Google launched Gemini Live, a mobile conversational AI with voice capabilities and 10 voices, available to Gemini Advanced users on Android. They also introduced Pixel Buds Pro 2 with a custom Tensor A1 chip for Gemini functionality, enabling hands-free AI assistance.

- OpenAI Developments: OpenAI's updated ChatGPT-4o model reclaimed the top spot on LMSYS Arena, testing under the codename "anonymous-chatbot" for a week with over 11k votes.

- xAI's Grok-2: xAI released Grok-2, now available in beta for Premium X users. It can generate "unhinged" images with FLUX 1 and has achieved SOTA status in just over a year.

- Open-Source Models: Nous Research released Hermes 3, an open-source model available in 8B, 70B, and 405B parameter sizes, with the 405B model achieving SOTA relative to other open models.

- Robotics Advancements: Astribot teased their new humanoid, showcasing its impressive range of freedom in real-time without teleoperation. Apple is reportedly developing a tabletop robot with Siri voice commands, combining an iPad-like display with a robotic arm.

- AI Research Tools: Sakana AI introduced "The AI Scientist", claimed to be the world's first AI system capable of autonomously conducting scientific research, generating ideas, writing code, running experiments, and writing papers.

AI Model Performance and Techniques

- Vision Transformer (ViT) Performance: @giffmana wrote a blog post addressing concerns about ViT speed at high resolution, aspect ratio importance, and resolution requirements.

- RAG Improvements: New research on improving RAG for multi-hop queries using database filtering with LLM-extracted metadata showed promising results on the MultiHop-RAG benchmark. HybridRAG combines GraphRAG and VectorRAG, outperforming both individually on financial earnings call transcripts.

- Model Optimization: @cognitivecompai reported that GrokAdamW appears to be an improvement when training gemma-2-2b with the Dolphin 2.9.4 dataset.

- Small Model Techniques: @bindureddy encouraged iterating on small 2B models to make them more useful and invent new techniques that can be applied to larger models.

AI Applications and Tools

- LangChain Developments: A LangChain JS tutorial covered using LLM classifiers for dynamic prompt selection based on query type. An Agentic RAG walkthrough with Claude 3.5 Sonnet, MongoDB, and llama_index demonstrated building an agentic knowledge assistant over a pre-existing RAG pipeline.

- AI for Software Engineering: Cosine demo'd Genie, a fully autonomous AI software engineer that broke the high score for SWE-Bench at 30.08%. OpenAI and the authors of SWE-Bench redesigned and released 'SWE-bench Verified' to address issues in the original benchmark.

- Productivity Tools: @DrJimFan expressed a desire for an LLM to automatically filter, label, and reprioritize Gmail according to a prompt, highlighting the potential for AI in email management.

AI Ethics and Societal Impact

- AI Deception Debate: @polynoamial discussed the misconception of bluffing in poker as an example of AI deception, arguing that it's more about not revealing excess information rather than active deception.

- AI Reasoning Capabilities: @mbusigin argued that LLMs are already better than a significant number of humans at reasoning, as they don't rely on "gut" feelings and perform well on logical reasoning tests.

Memes and Humor

- @AravSrinivas joked: "Networking ~= Not actually working"

- @AravSrinivas shared a humorous image related to AI or tech (content not specified).

- @Teknium1 quipped about video generation techniques: "Why are almost every video gen just pan or zoom, you may as well use flux (1000x faster) and generate an image"


AI Reddit Recap

/r/LocalLlama Recap

Theme 1. XTC: New Sampler for Enhanced LLM Creativity

- Exclude Top Choices (XTC): A sampler that boosts creativity, breaks writing clichés, and inhibits non-verbatim repetition, from the creator of DRY (Score: 138, Comments: 64): The Exclude Top Choices (XTC) sampler, introduced in a GitHub pull request for text-generation-webui, aims to boost LLM creativity and break writing clichés with minimal impact on coherence. The creator reports that XTC produces novel turns of phrase and ideas, particularly enhancing roleplay and storywriting, and feels distinctly different from increasing temperature in language models.
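
The post above does not spell out the algorithm, so the following is only a minimal sketch of one plausible reading of "exclude top choices": on a random fraction of sampling steps, drop every token whose probability meets a threshold except the least likely of them, then renormalize. The function name `xtc_filter` and the parameters `threshold` and `probability` are illustrative assumptions, not the pull request's actual code.

```python
import random

def xtc_filter(probs, threshold=0.1, probability=0.5, rng=random):
    """Return a renormalized copy of `probs` with top choices excluded.

    probs: dict mapping token -> probability (assumed to sum to 1).
    threshold, probability: hypothetical knobs for this sketch.
    """
    # Only apply the filter on a random fraction of sampling steps.
    if rng.random() >= probability:
        return dict(probs)
    # Tokens at or above the threshold count as the "top choices".
    top = [tok for tok, p in probs.items() if p >= threshold]
    # With fewer than two top choices there is nothing to exclude.
    if len(top) < 2:
        return dict(probs)
    # Keep only the least likely top choice, plus everything below threshold.
    keep = min(top, key=lambda tok: probs[tok])
    kept = {tok: p for tok, p in probs.items() if tok == keep or p < threshold}
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}
```

Unlike raising temperature, which flattens the whole distribution, this kind of filter leaves the unlikely tail untouched and only removes the most predictable continuations, which fits the creator's report that it feels distinctly different from a temperature increase.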

Theme 2. Cost-Benefit Analysis of Personal GPUs for AI Development

- Honestly nothing much to do with one 4090 (Score: 84, Comments: 90): The author, who works in AI infrastructure and ML engineering, expresses disappointment with their 4090 GPU purchase for personal AI projects. They argue that for most use cases, cloud-based API services or enterprise GPU clusters are more practical and cost-effective than a single high-end consumer GPU for AI tasks, questioning the value of local GPU ownership for personal AI experimentation.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Advancements and Comparisons

- Flux LoRA Results: A user shared impressive results from training Flux LoRA models on Game of Thrones characters, achieving high-quality outputs with only 10 image datasets and 500-1000 training steps. The training required over 60GB of VRAM. Source

- Cartoon Character Comparison: A comparison of various AI models (DALL-E 3, Flux dev, Flux schnell, SD3 medium) generating cartoon characters eating watermelon. DALL-E 3 performed best overall, with Flux dev coming in second. The post highlighted DALL-E 3's use of complex LLM systems to split images into zones for detailed descriptions. Source

- Flux.1 Schnell Upscaling Tips: A user shared tips for improving face quality in Flux.1 Schnell outputs, recommending the use of 4xFaceUpDAT instead of 4x-UltraSharp for upscaling realistic images. The post also mentioned other upscaling models and techniques for enhancing image quality. Source

AI Company Strategies and Criticisms

- OpenAI's Business Practices: A user criticized OpenAI for running their company like a "tiny Ycombinator startup," citing practices such as waitlists, cryptic CEO tweets, and pre-launch hype videos. The post argued that these tactics are unsuitable for a company valued at nearly $100 billion and may confuse customers and enterprise users. Source

AI-Generated Content and Memes

- The Mist (Flux+Luma): A video post showcasing AI-generated content using Flux and Luma models, likely depicting a scene inspired by the movie "The Mist." Source

- Seems familiar somehow?: A meme post in the r/singularity subreddit, likely referencing AI-related content. Source

- Someone had to say it...: Another meme post in the r/StableDiffusion subreddit. Source

Future Technology and Research

- Self-driving Car Jailbreaking: A post speculating that people will attempt to jailbreak self-driving cars once they become widely available. Source

- Age Reversal Pill for Dogs: A study reporting promising results for an age reversal pill tested on dogs. However, the post lacked citations to peer-reviewed research and was criticized for being anecdotal. Source

AI Discord Recap

A summary of Summaries of Summaries by Claude 3.5 Sonnet

1. Hermes 3 Model Release and Performance

- Hermes 3 Matches Llama 3.1 on N8Bench: Hermes 3 scored identically to Llama 3.1 Instruct on the N8Bench benchmark, which measures a model's ability to reason and solve problems.

- This result is significant as Llama 3.1 Instruct is considered one of the most advanced language models available, highlighting Hermes 3's competitive performance.

- Hermes 3 405B Free Weekend on OpenRouter: OpenRouter announced that Hermes 3 405B is free for a limited time, offering a 128k context window, courtesy of Lambda Labs.

- Users can access this model at OpenRouter's Hermes 3 405B page, providing an opportunity to test and evaluate this large language model.

- Quantization Impact on 405B Models: @hyperbolic_labs warned that quantization can significantly degrade the performance of 405B models.

- They recommended reaching out to them for alternative solutions if performance is a concern, highlighting the trade-offs between model size reduction and maintaining performance quality.

2. LLM Inference Optimization Techniques

- INT8 Quantization for CPU Execution: A member inquired about the potential benefits of using INT8 quantization for faster CPU execution of small models, suggesting some CPUs might natively run INT8 without converting to FP32.

- This approach could potentially improve performance for CPU-based inference, especially for resource-constrained environments or edge devices.

- FP8 Training Advancements: Training a 1B FP8 model with 1st momentum in FP8 smoothly up to 48k steps resulted in a loss comparable to bfloat16 with a 0.08 offset.

- This demonstrates that FP8 training can be effective with 1st momentum, achieving similar results as bfloat16 training while potentially offering memory savings and performance improvements.

- Batching APIs for Open-Source Models: CuminAI introduced a solution for creating batching APIs for open-source models, similar to those recently launched by OpenAI and Google.

- While major companies' batching APIs lack processing guarantees and SLAs, CuminAI's approach aims to provide similar cost-saving benefits for open-source model deployments. A guide is available at their blog post.
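
As a rough illustration of the INT8 question raised earlier in this section, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It is not how any particular runtime implements it; the helper names (`quantize_int8`, `dequantize`, `int8_dot`) are made up for this example. The point is that the multiply-accumulate can stay in integer arithmetic, with a single floating-point rescale at the end.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats into the range [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Map quantized integers back to approximate float values."""
    return [x * scale for x in q]

def int8_dot(qa, scale_a, qb, scale_b):
    """Dot product with integer multiply-accumulate, rescaled once at the end."""
    acc = sum(x * y for x, y in zip(qa, qb))  # integer-only accumulation
    return acc * scale_a * scale_b
```

Whether this is actually faster depends on the CPU having native integer dot-product instructions and on the runtime using them, which is exactly what the member was asking about.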

3. Open Source AI Model Developments

- Falcon Mamba 7B Claims to Outperform Llama 3 8B: A YouTube video announced the release of Falcon Mamba 7B, claiming it outperforms Llama 3 8B.

- This development could have significant implications for the field of large language models, as Falcon Mamba 7B is a relatively new and promising model challenging established benchmarks.

- Ghost 8B Beta's Multilingual Prowess: Ghost 8B Beta, a newly released language model, now supports 16 languages including English, Vietnamese, Spanish, and Chinese, with two context options (8k and 128k).

- The model boasts improved capabilities in math, reasoning, and instruction-following, outperforming competitors like Llama 3.1 8B Instruct, GPT-3.5 Turbo, and Claude 3 Opus in AlpacaEval 2.0 winrate scores.

- VideoLLaMA 2-72B Release by Alibaba DAMO: Alibaba DAMO released VideoLLaMA 2-72B, a new video LLM available on HuggingFace with a demo on HuggingFace Spaces.

- The research paper is also available on HuggingFace, showcasing advancements in multimodal AI combining video understanding and language modeling.

4. AI Safety and Regulation Discussions

- Nancy Pelosi Opposes California AI Bill: Speaker Emerita Nancy Pelosi issued a statement opposing California Senate Bill 1047 on AI regulation.

- The full statement can be found on the House of Representatives website, highlighting ongoing debates about how to approach AI governance at the state level.

- Procreate Rejects Generative AI Integration: The CEO of Procreate made a clear statement that they will not be integrating generative AI into their products, a decision celebrated by many artists and users on social media.

- Some observers noted that this stance might change in the future, as it could potentially limit new feature development. This highlights the ongoing tension between traditional creative tools and the rapid advancement of AI in the creative industry.

- Gary Marcus Revisits AI Bubble Concerns: AI researcher Gary Marcus revisited his keynote from AGI-21 in a video titled "The AI Bubble: Will It Burst, and What Comes After?", noting that many issues he highlighted then are still relevant today despite significant AI advances.

- This discussion, available on YouTube, reflects ongoing debates about the sustainability and trajectory of current AI development trends and their potential societal impacts.

PART 1: High level Discord summaries

Stability.ai (Stable Diffusion) Discord

- Flux: The New King?: Members discussed Flux's potential to take over the image generation AI community, with new Loras and merges appearing daily.

- Some believe Stability AI needs to release something soon to compete, as Flux is becoming a dominant force in CivitAI and Hugging Face.

- Flux vs. SD3: A Race to the Top: There's a debate about whether Flux is fundamentally different from SD3, with both models using DiT architecture, rectified flow loss, and similar VAE sizes.

- The key difference is that Flux dev was distilled from a large model, a trick Stability AI could also pull. Some prefer non-distilled models, even if image quality is lower.

- Flux Training: Challenges and Opportunities: Members discussed the challenges of training Loras for Flux, noting that the training code hasn't been officially released yet.

- Some users are exploring methods for training Loras locally, while others recommend using Replicate's official Flux LoRA Trainer for faster and easier results.

- ComfyUI vs. Forge: A Battle of the UIs: Users discussed the performance differences between ComfyUI and Forge, with some finding Forge to be faster, especially for batch processing.

- The discussion touched on the impact of Gradio 4 updates on Forge and the potential for future improvements. Some users prefer the flexibility of ComfyUI, while others appreciate the optimization of Forge.

- GPU Recommendations for Stable Diffusion: Members shared their experiences with various GPUs and their performance for Stable Diffusion, with 16GB VRAM considered a minimum and 24GB being comfortable.

- The discussion touched on the importance of VRAM over CPU speed and the impact of RAM and other apps on performance. The consensus was to try different models and encoders to find the best fit for each system.

HuggingFace Discord

- Hermes 2.5 Outperforms Hermes 2: After adding code instruction examples, Hermes 2.5 appears to perform better than Hermes 2 in various benchmarks.

- Hermes 2 scored a 34.5 on the MMLU benchmark whereas Hermes 2.5 scored 52.3.

- Mistral Struggles Expanding Beyond 8k: Members stated that Mistral cannot be extended beyond 8k without continued pretraining and this is a known issue.

- They pointed to further work on mergekit and frankenMoE finetuning for the next frontiers in performance.

- Discussion on Model Merging Tactics: A member suggested applying the difference between UltraChat and base Mistral to Mistral-Yarn as a potential merging tactic.

- Others expressed skepticism, but this member remained optimistic, citing successful past attempts at what they termed "cursed model merging".

- Open Empathic Project Plea for Assistance: A member appealed for help in expanding the categories of the Open Empathic project, particularly at the lower end.

- They shared a YouTube video on the Open Empathic Launch & Tutorial that guides users to contribute their preferred movie scenes from YouTube videos, as well as a link to the OpenEmpathic project itself.

- FP8 Training with 1st Momentum Achieves Similar Loss: Training a 1B FP8 model with 1st momentum in FP8 smoothly up to 48k steps resulted in a loss comparable to bfloat16 with a 0.08 offset.

- This demonstrates that FP8 training can be effective with 1st momentum, achieving similar results as bfloat16 training.

Unsloth AI (Daniel Han) Discord

- Ghost 8B Beta (1608) released: Ghost 8B Beta (1608), a top-performing language model with unmatched multilingual support and cost efficiency, has been released.

- It boasts superior performance compared to Llama 3.1 8B Instruct, GPT-3.5 Turbo, Claude 3 Opus, GPT-4, and more in winrate scores.

- Ghost 8B Beta's Multilingual Prowess: Ghost 8B Beta now supports 16 languages, including English, Vietnamese, Spanish, Chinese, and more.

- It offers two context options (8k and 128k) and improved math, reasoning, and instruction-following capabilities for better task handling.

- Ghost 8B Beta Outperforms Competitors: Ghost 8B Beta outperforms models like Llama 3.1 8B Instruct, GPT 3.5 Turbo, Claude 3 Opus, Claude 3 Sonnet, GPT-4, and Mistral Large in AlpacaEval 2.0 winrate scores.

- This impressive performance highlights its superior knowledge capabilities and multilingual strength.

- Code Editing with LLMs: A new paper explores using Large Language Models (LLMs) for code editing based on user instructions.

- It introduces EditEval, a novel benchmark for evaluating code editing performance, and InstructCoder, a dataset for instruction-tuning LLMs for code editing, containing over 114,000 instruction-input-output triplets.

- Reasoning Gap in LLMs: A research paper proposes a framework to evaluate reasoning capabilities of LLMs using functional variants of benchmarks, specifically the MATH benchmark.

- It defines the "reasoning gap" as the difference in performance between solving a task posed as a coding question vs a natural language question, highlighting that LLMs often excel when tasks are presented as code.

Nous Research AI Discord

- Linear Transformers: A Match Made in Softmax Heaven: Nous Research has published research on a linear transformer variant that matches softmax, allowing for training at O(t) instead of O(t^2).

- The research, available here, explores this new variant and its implications for training efficiency.

- Falcon Mamba 7B Bests Llama 3 8B: A YouTube video announcing the release of Falcon Mamba 7B claims that it outperforms Llama 3 8B.

- This could have significant implications for the field of large language models, as Falcon Mamba 7B is a relatively new and promising model.

- Regex Debated as Chunking Technique: A user shared their thoughts on a regex-based text chunker, stating they would "scream" if they saw it in their codebase, due to the complexity of regex.

- Another user, however, countered by arguing that for a text chunker specifically, regex might be a "pretty solid option" since it provides "backtracking benefits" and allows for flexibility in chunking settings.

- Hermes 3: The Performance King of N8Bench?: Hermes 3 scored identically to Llama 3.1 Instruct on the N8Bench benchmark, which is a measure of a model's ability to reason and solve problems.

- This is a significant result, as Llama 3.1 Instruct is considered to be one of the most advanced language models available.

- Gemini Flash: The Future of RAG?: A user reports that they've moved some of their RAG tasks to Gemini Flash, noting that they've seen improvements in summary quality and reduced iteration requirements.

- They share a script they've been using to process raw, unstructured transcripts with Gemini Flash, available on GitHub at https://github.com/EveryOneIsGross/scratchTHOUGHTS/blob/main/unstruct2flashedTRANSCRIPT.py.
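
On the regex chunking debate earlier in this section: the chunker under discussion is not reproduced here, so the sketch below is a deliberately small stand-in showing what a readable regex-based chunker could look like. The pattern, the `chunk_text` name, and the `max_chars` setting are all assumptions for illustration.

```python
import re

# Split on whitespace that follows sentence-ending punctuation.
SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")

def chunk_text(text, max_chars=200):
    """Greedily pack whole sentences into chunks of roughly max_chars.

    A single sentence longer than max_chars is kept whole, not cut.
    """
    chunks, current = [], ""
    for sentence in SENTENCE_BOUNDARY.split(text.strip()):
        if not sentence:
            continue
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            # The next sentence would overflow, so close the current chunk.
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks
```

Keeping the regex to a single named boundary pattern, with the packing logic in ordinary code, is one way to get the flexibility the second user described without the unreadable pattern the first user objected to.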

Perplexity AI Discord

- Perplexity Pro is a Pain: Multiple users reported issues with the Perplexity Pro signup process, with users being unable to complete the signup without paying, despite receiving an offer for a free year.

- Users were advised to reach out to support@perplexity.ai for assistance with this issue.

- Obsidian Copilot Gets a Claude Boost: A user shared their experience using the Obsidian Copilot plugin with a Claude API key, finding it to be a solid choice in terms of performance.

- They stressed the importance of checking API billing settings before committing and also highlighted the need for Obsidian to have real-time web access.

- Perplexity's Image Generation Feature Struggles: Users discussed the shortcomings of Perplexity's image generation feature, which is currently only accessible for Pro users, requiring an AI prompt for image description.

- This was considered a 'weird' and 'bad' implementation by users, who highlighted the need for a more streamlined approach to image generation.

- Perplexity Search Encounters Hiccups: Several users reported issues with Perplexity's search quality, encountering problems with finding relevant links and receiving inaccurate results.

- These issues were attributed to possible bugs, prompt changes, or inference backend service updates.

- Perplexity Model Changes Leave Users Concerned: Discussions revolved around changes in Perplexity's models, with users expressing concerns about the potential decline in response quality and the increase in "I can't assist with that" errors.

- Other concerns included missing punctuation marks in API responses and the use of Wolfram Alpha for non-scientific queries.

OpenRouter (Alex Atallah) Discord

- Hermes 3 405B is free this weekend!: Hermes 3 405B is free for a limited time, with 128k context, courtesy of Lambda Labs.

- You can check it out at this link.

- GPT-4 extended is now on OpenRouter: You can now use GPT-4 extended output (alpha access) through OpenRouter.

- This is capped at 64k max tokens.

- Perplexity Huge is the largest online model on OpenRouter: Perplexity Huge launched 3 days ago and is the largest online model on OpenRouter.

- You can find more information at this link.

- A Week of Model Launches: This week saw 10 new model launches on OpenRouter, including GPT-4 extended, Perplexity Huge, Starcannon 12B, Lunaris 8B, Llama 405B Instruct bf16 and Hermes 3 405B.

- You can see the full list at this link.

- Quantization Degrades Performance: Quantization can massively degrade the performance of 405B models, according to @hyperbolic_labs.

- They recommend reaching out to them if you are concerned about performance, as they offer alternative solutions.

LM Studio Discord

- INT8 Quantization for Faster CPUs?: A member inquired about potential performance gains from using INT8 quantization for smaller models on CPUs.

- They suggested that some CPUs may natively support INT8 execution, bypassing conversion to FP32 and potentially improving performance.

- Llama.cpp Supports Mini-CPM-V2.6 & Nemotron/Minitron: A member confirmed that the latest llama.cpp version supports Mini-CPM-V2.6 and Nvidia's Nemotron/Minitron models.

- This update expands the range of models compatible with llama.cpp, enhancing its versatility for LLM enthusiasts.

- Importing Chats into LM Studio: A member sought guidance on importing chat logs from a JSON export into LM Studio.

- Another member clarified that chat data is stored in JSON files and provided instructions on accessing the relevant folder location.

- Vulkan Error: CPU Lacks AVX2 Support: A user encountered an error indicating their CPU lacks AVX2 support, preventing the use of certain features.

- A helpful member requested the CPU model to assist in diagnosing and resolving the issue.

- LLMs Interacting with Webpages: A Complex Challenge: A member discussed the possibility of enabling LLMs to interact with webpages, specifically seeking a 'vision' approach.

- While tools like Selenium and IDkit were mentioned, the general consensus is that this remains a challenging problem due to the diverse structure of webpages.

OpenAI Discord

- Claude Outperforms Chat-GPT on Code: A member stated that Claude tends to be better at code than Chat-GPT.

- The fact that 4o's API costs more than Claude makes no sense tbh.

- Livebench.ai: Yann LeCun's Open Source Benchmark: Livebench.ai is an open source benchmark created by Yann LeCun and others.

- The LMSys benchmark is probably the worst as of now.

- Claude Projects vs Chat-GPT Memory Feature: A member believes Claude Projects are more useful than Chat-GPT's memory feature.

- The member also stated that custom GPTs are more like projects, allowing for the use of your own endpoints.

- OpenAI is Winning the Attention Game: OpenAI is winning by controlling attention through releasing new models like GPT-4o.

- The member stated that people are talking about OpenAI's new models, even if they don't want to participate in the tech hype.

- GPT-4o is Now Worse than Claude and Mistral: Members have noticed that GPT-4o has become dumber lately and may be suffering from a type of Alzheimer's.

- Claude Sonnet is being praised for its superior performance and is becoming a preferred choice among members.

Latent Space Discord

- Topology's CLM: Learning Like Humans: Topology has released the Continuous Learning Model (CLM), a new model that remembers interactions, learns skills autonomously, and thinks in its free time, just like humans.

- This model can be tried out at http://topologychat.com.

- GPT5 Needs to Be 20x Bigger: Mikhail Parakhin tweeted that to get meaningful improvement in AI models, a new model should be at least 20x bigger than the current model.

- This would require 6 months of training and a new, 20x bigger datacenter, which takes about a year to build.

- Procreate Rejects Generative AI: The CEO of Procreate has stated that they will not be integrating generative AI into their products.

- While some artists and users on social media celebrated the news, others noted that it could mean fewer new features in the future, and that the stance could still change.

- DSPy: Not Quite Commercial Yet: There is no commercial company behind DSPy yet, although Omar is working on it.

- A member shared that they went to the Cursor office meetup, and while there was no alpha to share, they did say hi.

- DSPy Bridging the Gap: DSPy is designed to bridge the gap between prompting and finetuning, allowing users to avoid manual prompt tuning.

- The paper mentions that DSPy avoids prompt tuning, potentially making it easier to switch models, retune to data shifts, and more.

Cohere Discord

- Cohere Office Hours Kick-Off!: Join Cohere's Sr. Product Manager and DevRel for a casual session on product and content updates with best practices and Q&A on Prompt Tuning, Guided Generations API with Agents, and LLM University Tool Use Module.

- The event takes place today at 1 PM ET in the #stage channel and can be found at this link.

- Cohere Prompt Tuner: Optimized Prompting!: Learn about the Cohere Prompt Tuner, a powerful tool to optimize prompts and improve the accuracy of your LLM results.

- The blog post details how to utilize this tool and the associated features.

- Command-r-plus Not Working?: A user reported that command-r-plus in Sillytavern stopped working consistently when the context length reaches 4000 tokens.

- The user has been attempting to use the tool to enhance their workflow, but is facing this unexpected issue.

- API Key Partial Response Issues: A user reported experiencing issues with their API key returning only partial responses, even after trying different Wi-Fi routers and cellular data.

- The user is currently seeking a solution to this problem.

- Structured Outputs for Accurate JSON Generations: Structured Outputs, a recent update to Cohere's tools, delivers 80x faster and more accurate JSON generations than open-source implementations.

- This new feature improves the accuracy of JSON output and is discussed in this blog post.

Interconnects (Nathan Lambert) Discord

- Yi Tay Works on Chaos No Sleep Grind: The discussion touched on work styles of various AI organizations with one member suggesting that Yi Tay operates with a 'chaos no sleep grind' mentality.

- They referenced a tweet from Phil (@phill__1) suggesting that 01AI may be pulling out of non-Chinese markets: "what is going on with .@01AI_Yi? Are they pulling out of the non Chinese market?"

- Nancy Pelosi Opposes California AI Bill: Speaker Emerita Nancy Pelosi issued a statement opposing California Senate Bill 1047 on AI regulation.

- The statement was released on the House of Representatives website: Pelosi Statement in Opposition to California Senate Bill 1047.

- Zicheng Xu Laid Off From Allen-Zhu's Team: Zeyuan Allen-Zhu announced the unexpected layoff of Zicheng Xu, the author of the "Part 2.2" tutorial.

- Allen-Zhu strongly endorses Xu and provided his email address for potential collaborators or employers: zichengBxuB42@gmail.com (remove the capital 'B').

- Nous Hermes Discord Drama Over Evaluation Settings: A user mentioned a discussion in the Nous Discord regarding a user's perceived rudeness and misrepresentation of evaluation settings.

- The user mentioned that their evaluation details were in the SFT section of the paper, and admitted that it doesn't feel good to get things wrong but the core of the article is still valid.

- Meta Cooking (Model Harnessing) Creates Confusion: A user wondered what "meta cooking" is, suggesting a potential conflict or drama in the Nous Discord.

- The user mentioned finding contradictory information about evaluation settings, possibly due to the use of default LM Harness settings without clear documentation.

OpenAccess AI Collective (axolotl) Discord

- GrokAdamW Makes Axolotl Faster: GrokAdamW, a PyTorch optimizer that encourages fast grokking, was released and is working with Axolotl via the Transformers integration. GrokAdamW repository

- The optimizer is inspired by the GrokFast paper, which aims to accelerate generalization of a model under the grokking phenomenon. GrokFast paper

- Gemma 2b Training Hiccup: A user reported a consistent loss of 0.0 during training of a Gemma 2b model, with a nan gradient norm.

- The user recommended using eager attention instead of sdpa for training Gemma 2b models, which fixed the zero loss issue.

- Custom Loaders & Chat Templates in Axolotl: A user asked for clarification on using a Chat Template type in a .yml config file for Axolotl, specifically interested in specifying which loader to use, for example, ShareGPT.

- Another user suggested the user could specify which loader to use by providing a custom .yml file.

- Fine-Tuning with Axolotl: No Coding Required: A user clarified that fine-tuning with Axolotl generally does not require coding knowledge, but rather understanding how to format datasets and adapt existing examples.

- A user mentioned owning a powerful AI rig to run LLama 3.1 70b, but felt it was still lacking in some key areas and wanted to use their dataset of content for fine-tuning.

- LLaMa 3.1 8b Lora Detects Post-Hoc Reasoning: A user is training a LLaMa 3.1 8b Lora to detect post-hoc reasoning within a conversation, having spent three days curating a small dataset of fewer than 100 multi-turn conversations with around 30k tokens.

- The user employed Sonnet 3.5 to help generate examples but, despite careful prompt crafting, had to fix multiple things in each one: even when instructed not to create examples with post-hoc reasoning, the models still produced them, which the user attributed to their fine-tuning data.

LangChain AI Discord

- LangChain Caching Issues: A member was confused about why `.batch_as_completed()` wasn't sped up by caching, even though `.invoke()` and `.batch()` were near instant after caching.

- They observed that the cache was populated after the first run, but `.batch_as_completed()` didn't seem to utilize it.

- LLMs struggle with structured output: A member mentioned that local LLMs, like Llama 3.1, often had difficulty producing consistently structured output, specifically when it came to JSON parsing.

- They inquired about datasets specifically designed to train models for improved JSON parsing and structured output for tools and ReAct agents.

- Deleting files in a RAG chatbot: A member discussed how to implement a delete functionality for files in a RAG chatbot using MongoDB as a vector database.

- A response provided examples of using the `delete` method from the LangChain library for both MongoDB vector stores and OpenAIFiles, along with relevant documentation links.

- Hybrid Search Relevance Issues: A member encountered relevance issues with retrieved documents and generated answers in a RAG application using a hybrid search approach with BM25Retriever and vector similarity search.

- Suggestions included checking document quality, adjusting retriever configurations, evaluating the chain setup, and reviewing the prompt and LLM configuration.

- CursorLens is a new dashboard for Cursor users: CursorLens is an open-source dashboard for Cursor users that provides analytics on prompts and allows configuring models not available through Cursor itself.

- It was recently launched on ProductHunt: https://www.producthunt.com/posts/cursor-lens.
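For readers following the hybrid search item above: one common way to merge a keyword ranking (such as BM25) with a vector-similarity ranking is reciprocal rank fusion. The sketch below is a standard-library illustration only, not the member's actual pipeline. The function name, the document IDs, and the constant `k=60` are assumptions chosen for the example. Adjusting the weights is one concrete form of the "adjusting retriever configurations" suggestion.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, weights=None, k=60):
    """Fuse several ranked lists of document IDs into a single ranking.

    Each document scores weight / (k + rank) for every list it appears in,
    so documents ranked highly by several retrievers rise to the top.
    """
    if weights is None:
        weights = [1.0] * len(rankings)
    if len(weights) != len(rankings):
        raise ValueError("need exactly one weight per ranking")

    scores = defaultdict(float)
    for ranking, weight in zip(rankings, weights):
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += weight / (k + rank)

    # Highest fused score first; ties broken by ID so the output is deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword retriever and a vector retriever.
bm25_hits = ["doc_a", "doc_b", "doc_c"]
vector_hits = ["doc_c", "doc_a", "doc_d"]

fused_equal = reciprocal_rank_fusion([bm25_hits, vector_hits])
fused_vector_heavy = reciprocal_rank_fusion([bm25_hits, vector_hits], weights=[0.2, 0.8])
```

Comparing `fused_equal` with `fused_vector_heavy` on a handful of known queries is a cheap way to see whether relevance problems come from the fusion step or from the individual retrievers.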

OpenInterpreter Discord

- Orange Pi 5 Review: The New Affordable SBC: A user shared a YouTube video review of the Orange Pi 5, a new Arm-based SBC.

- The video emphasizes that the Orange Pi 5 is not to be confused with the Raspberry Pi 5.

- GPT-4o-mini Model woes: A Quick Fix: A user encountered trouble setting their model to GPT-4o-mini.

- Another user provided the solution: `interpreter --model gpt-4o-mini`.

- OpenInterpreter Settings Reset: A Revert Guide: A user sought a way to revert OpenInterpreter settings to default after experimentation.

- The solution involved using `interpreter --profiles` to view and edit profiles, and potentially uninstalling and reinstalling OpenInterpreter.

- OpenInterpreter API Integration: Building a Bridge: A user inquired about integrating OpenInterpreter into their existing AI core, sending requests and receiving outputs.

- The recommended solution involved using a Python script with a Flask server to handle communication between the AI core and OpenInterpreter.

- Local LLMs for Bash Commands: CodeStral and Llama 3.1: A member requested recommendations on local LLMs capable of handling bash commands.

- Another member suggested using CodeStral and Llama 3.1.
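The API integration item above describes a small HTTP bridge between an AI core and OpenInterpreter. The discussion recommended Flask; the sketch below swaps in Python's standard-library `http.server` so it runs without extra dependencies. Everything here is hypothetical scaffolding: the `prompt` field, the `handle_payload` and `make_server` names, and the `run` callback (which in a real setup would wrap whatever call OpenInterpreter's Python API exposes) are assumptions, not the solution posted in the channel.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Callable


def handle_payload(payload, run: Callable[[str], str]) -> dict:
    """Validate a request from the AI core and hand its prompt to `run`."""
    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return {"ok": False, "error": "missing 'prompt'"}
    return {"ok": True, "output": run(prompt)}


def make_server(run: Callable[[str], str], host="127.0.0.1", port=8000) -> HTTPServer:
    """Build (but do not start) an HTTP server that forwards POSTed prompts to `run`."""

    class BridgeHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            try:
                payload = json.loads(self.rfile.read(length) or b"{}")
            except json.JSONDecodeError:
                payload = None  # rejected by handle_payload below
            result = handle_payload(payload, run)
            body = json.dumps(result).encode("utf-8")
            self.send_response(200 if result["ok"] else 400)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

    return HTTPServer((host, port), BridgeHandler)


# Stand-in for the real OpenInterpreter call, used only to exercise the bridge.
def fake_run(prompt: str) -> str:
    return f"echo: {prompt}"


ok_response = handle_payload({"prompt": "list files"}, fake_run)
bad_response = handle_payload({}, fake_run)
```

To serve requests, call `make_server(run).serve_forever()` with a `run` function that forwards the prompt to OpenInterpreter and returns its output as a string.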

DSPy Discord

- LLMs Struggle with Reliability: Large Language Models (LLMs) are known for producing factually incorrect information, leading to "phantom" content that hinders their reliability.

- This issue is addressed by WeKnow-RAG, a system that integrates web search and Knowledge Graphs into a Retrieval-Augmented Generation (RAG) system to improve LLM accuracy and reliability.

- DSPy Unveils its Roadmap: The roadmap for DSPy 2.5 (expected in 1-2 weeks) and DSPy 3.0 (in a few months) has been released, outlining objectives, milestones, and community contributions.

- The roadmap is available on GitHub: DSPy Roadmap.

- Langgraph and Routequery Class Error: A user encountered an error with the `routequery` class in Langgraph.

- They sought guidance on integrating DSPy with a large toolset and shared a link to the Langgraph implementation: Adaptive RAG.

- Optimizing Expert-Engineered Prompts: A member questioned whether DSPy can optimize prompts that have already been manually engineered by experts.

- They inquired if DSPy effectively optimizes initial drafts and also improves established prompting systems.

- Colpali Fine-Tuning Discussion: A discussion centered around the finetuning of Colpali, a model requiring specialized expertise due to its domain-specific nature.

- The discussion highlighted the importance of understanding the data needed for effectively finetuning Colpali.

LAION Discord

- FLUX Dev Can Generate Grids: A user shared that FLUX Dev can generate 3x3 photo grids of the same (fictional) person.

- This could be useful for training LORAs to create consistent characters of all kinds of fictional people.

- Training LORAs for Specific Purposes: A user expressed interest in training LORAs for specific purposes like dabbing, middle finger, and 30s cartoon.

- They mentioned the possibility of converting their FLUX Dev LoRA into FP8 or using an FP8 LoRA trainer on Replicate.

- LLMs for Medical Assistance: Not Ready Yet: Several users expressed skepticism about using LLMs for medical assistance in their current state.

- They believe LLMs are not yet reliable enough for such critical applications.

- JPEG-LM: LLMs for Images & Videos?: A new research paper proposes modeling images and videos as compressed files using canonical codecs (e.g., JPEG, AVC/H.264) within an autoregressive LLM architecture.

- This approach eliminates the need for raw pixel value modeling or vector quantization, making the process more efficient and offering potential for future research.

- JPEG-LM vs. SIREN: A Battle of the Titans?: A user playfully claims to have outperformed the SIREN architecture from 2020 with a 33kB complex-valued neural network.

- While acknowledging that NVIDIA's Neural Graphics Primitives paper from 2022 significantly advanced the field, they highlight the importance of using MS-SSIM as a metric for image quality assessment, as opposed to just MSE and MAE.

LlamaIndex Discord

- Workflows Take Center Stage: Rajib Deb shared a video showcasing LlamaIndex's workflow capabilities, demonstrating decorators, types for control flow, event-driven process chaining, and custom events and steps for complex tasks.

- The video focuses on workflows, emphasizing their ability to build sophisticated applications with a more structured approach.

- Building Agentic RAG Assistants with Claude 3.5: Richmond Lake's tutorial shows how to build an agentic knowledge assistant with Claude 3.5, MongoDB, and LlamaIndex on top of a pre-existing RAG pipeline.

- This tutorial demonstrates using LlamaIndex for advanced RAG techniques, emphasizing tool selection, task decomposition, and event-driven methodologies.

- BeyondLLM Streamlines Advanced RAG Pipelines: BeyondLLM, developed by AIPlanetHub, provides abstractions on top of LlamaIndex, enabling users to build advanced RAG pipelines with features like evaluation, observability, and advanced RAG capabilities in just 5-7 lines of code.

- These advanced RAG features include query rewriting, vector search, and document summarization, simplifying the development of sophisticated RAG applications.

- Web Scrapers: A LlamaIndex Dilemma: A member asked for recommendations for web scrapers that work well with LlamaIndex, and another member recommended FireCrawl, sharing a YouTube video showing a more complex implementation of a LlamaIndex workflow.

- The conversation highlights the need for effective web scraping tools that seamlessly integrate with LlamaIndex, enabling efficient knowledge extraction and processing.

- Unveiling the Secrets of RouterQueryEngine and Agents: A member sought clarification on the difference between LlamaIndex's RouterQueryEngine and Agents, specifically in terms of routing and function calling.

- The discussion clarifies that the RouterQueryEngine acts like a hardcoded agent, while Agents offer greater flexibility and generality, highlighting the distinct capabilities of each approach.

LLM Finetuning (Hamel + Dan) Discord

- HF Spaces Limitations: A member had trouble hosting their own LLM using HF Spaces, as ZeroGPU doesn't support vLLM.

- The member was seeking an alternative solution, potentially involving Modal.

- Modal for LLM Hosting: Another member reported using Modal for hosting LLMs.

- However, they are currently transitioning to FastHTML and are looking for a setup guide.

- Jarvis Labs for Fine-tuning: One member shared their experience using Jarvis Labs exclusively for fine-tuning LLMs.

- This suggests that Jarvis Labs might offer a streamlined approach compared to other platforms.

Alignment Lab AI Discord

- OpenAI and Google Get Cheaper with Batching APIs: OpenAI and Google launched new batching APIs for some models, offering a 50% cost reduction compared to regular requests.

- However, these APIs currently lack processing guarantees, service level agreements (SLAs), and retries.

- CuminAI: Open-Source Batching APIs: CuminAI provides a solution for creating batching APIs for open-source models, similar to those offered by OpenAI.

- Check out their step-by-step guide on "How to Get a Batching API Like OpenAI for Open-Source Models" here.

- SLMs: The New Superheroes of AI?: CuminAI highlights the potential of Small Language Models (SLMs), arguing that "bigger isn't always better" in AI.

- While Large Language Models (LLMs) have dominated, SLMs offer a more cost-effective and efficient alternative, especially for tasks that don't require extensive computational power.
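To make the batching item above concrete: batch endpoints generally take a file of requests rather than one call per prompt. The sketch below builds such a file as JSON Lines in the shape OpenAI's Batch API documents (one request object per line with `custom_id`, `method`, `url`, and `body`). Treat the field names as something to confirm against the current docs. The helper names, the model string, and the prompts are illustrative assumptions, and `batch_cost` simply applies the 50% reduction quoted above.

```python
import json


def build_batch_lines(prompts, model="gpt-4o-mini", endpoint="/v1/chat/completions"):
    """Return one JSON string per prompt, ready to be written as a .jsonl file."""
    lines = []
    for i, prompt in enumerate(prompts):
        request = {
            "custom_id": f"request-{i}",  # used to match results back to inputs
            "method": "POST",
            "url": endpoint,
            "body": {
                "model": model,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request))
    return lines


def batch_cost(regular_cost, discount=0.5):
    """Cost of a job under a batch discount (0.5 means 50% off)."""
    return regular_cost * (1 - discount)


batch_lines = build_batch_lines(["Summarize document A", "Summarize document B"])
jsonl_payload = "\n".join(batch_lines)
discounted = batch_cost(10.0)
```

Because these APIs come without processing guarantees or retries, keeping a stable `custom_id` per request makes it easier to detect which items never came back and resubmit only those.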

Mozilla AI Discord

- Llamafile Boosts Performance & Adds New Features: Llamafile has released new features, including Speech to Text Commands, Image Generation, and a 3x Performance Boost for its HTTP server embeddings.

- The full update, written by Justine, details the performance improvements and new features.

- Mozilla AI Celebrates Community at Rise25: Mozilla AI is celebrating community members who are shaping a future where AI is responsible, trustworthy, inclusive, and centered around human dignity.

- Several members attended the event, including <@631210549170012166>, <@1046834222922465314>, <@200272755520700416>, and <@1083203408367984751>.

- ML Paper Talks: Agents & Transformers Deep Dive: Join a session hosted by <@718891366402490439> on Communicative Agents and Extended Mind Transformers.

- RSVP for the sessions: Communicative Agents with author <@878366123458977893>, and Extended Mind Transformers with author <@985920344856596490>.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email!

If you enjoyed AInews, please share with a friend! Thanks in advance!