[AINews] The AI Search Wars Have Begun — SearchGPT, Gemini Grounding, and more

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

One AI Searchbox is All You Need.

AI News for 10/30/2024-10/31/2024. We checked 7 subreddits, 433 Twitters and 32 Discords (231 channels, and 2468 messages) for you. Estimated reading time saved (at 200wpm): 264 minutes. You can now tag @smol_ai for AINews discussions!

Teased as SearchGPT in July, ChatGPT finally rolled out its search functionality today across all platforms, completely coincidentally coinciding with Gemini launching Search Grounding after an unfortunate delay. The launch includes a simple Chrome Extension that @sama is personally promoting on Twitter and on their Reddit AMA (don't bother) today:

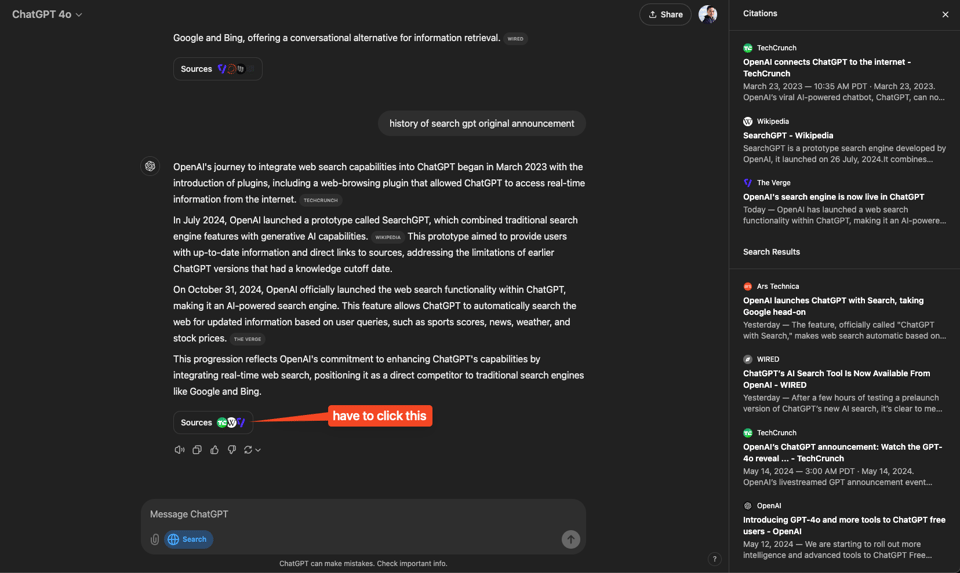

with a raft of weather, stocks, sports, news, and maps partners. Notably, you will never get a New York Times article via ChatGPT, because the NYT chose to sue OpenAI instead of partnering with it. Partners are presumably happy about the feature, but the citations come with a catch: you have to spend an additional click to see them at all, and most users will not.

ChatGPT search uses a "fine-tuned version of GPT-4o, post-trained using novel synthetic data generation techniques, including distilling outputs from OpenAI o1-preview"; however, it has already been found to hallucinate.

This latest salvo by a consumer AI player against the consumer search leader (Perplexity) mirrors a broader B2B trend of AI players (Dropbox Dash) challenging their category's search leader (Glean).

Sounds like a good time to bone up on AI search techniques, with today's AINews sponsor!

Brought to you by the RAG++ course: Go beyond basic RAG implementations and explore advanced strategies like hybrid search and advanced prompting to optimize performance, evaluation, and deployment. Learn from industry experts at Weights & Biases, Cohere, and Weaviate how to overcome common RAG challenges and build robust AI solutions, leveraging Cohere's platform with provided credits for participants.

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Developments and Benchmarks

- Claude 3.5 Sonnet Performance: @alexalbert__ announced that Claude 3.5 Sonnet achieved 49% on SWE-bench Verified, beating the previous SOTA of 45%. The model uses a minimal prompt structure, allowing flexibility in handling diverse coding challenges.

- SimpleQA Benchmark: @_jasonwei introduced SimpleQA, a new hallucination evaluation benchmark with 4,000 diverse fact-seeking questions. Current frontier models like Claude 3.5 Sonnet score below 50% accuracy on this challenging benchmark.

- Universal-2 Speech-to-Text Model: @svpino shared details about Universal-2, a next-generation Speech-To-Text model with 660M parameters. It shows significant improvements in recognizing proper nouns, alphanumeric accuracy, and text formatting.

- HOVER Neural Whole-Body Controller: @DrJimFan presented HOVER, a 1.5M-parameter neural network for controlling humanoid robots. Trained in NVIDIA Isaac simulation, it can be prompted for various high-level motion instructions and supports multiple input devices.

AI Tools and Applications

- AI Hedge Fund Team: @virattt built a hedge fund team of AI agents using LangChain and LangGraph, consisting of fundamental, technical, and sentiment analysts.

- NotebookLM and Illuminate: @GoogleDeepMind developed two AI tools for narrating articles, generating stories, and creating multi-speaker audio discussions.

- LongVU Video Language Model: @mervenoyann shared details about Meta's LongVU, a new video LM that can handle long videos by downsampling using DINOv2 and fusing features.

- AI Production Engineer: @svpino discussed an AI system by @resolveai that handles alerts, performs root cause analysis, and resolves incidents in production environments.

AI Research and Trends

- Vision Language Models (VLMs): @mervenoyann summarized trends in VLMs, including interleaved text-video-image models, multiple vision encoders, and zero-shot vision tasks.

- Speculative Knowledge Distillation (SKD): @_philschmid shared a new method from Google that addresses limitations of on-policy knowledge distillation by using both the teacher and the student during distillation.

- QTIP Quantization: @togethercompute introduced QTIP, a new quantization method achieving state-of-the-art quality and inference speed for LLMs.

- Trusted Execution Environments (TEEs): @rohanpaul_ai discussed the use of TEEs for privacy-preserving decentralized AI, addressing challenges in processing sensitive data across untrusted nodes.
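For readers new to SKD: per the paper's description, the student proposes tokens and the teacher replaces the ones it ranks poorly, so the training data stays close to what the student actually generates. The toy below is a simplified sketch, not Google's implementation. It uses fixed per-position distributions (a real model conditions each position on the tokens chosen so far), and the helper `speculative_kd_tokens` and its top-k acceptance rule are illustrative assumptions.

```python
import random

def speculative_kd_tokens(student_probs, teacher_probs, top_k, rng):
    """Hypothetical helper: build one SKD-style training sequence.

    student_probs / teacher_probs: one probability list per position over a
    shared vocabulary (fixed here; a real model conditions on the prefix).
    The student proposes a token; it is kept if it is in the teacher's top_k,
    otherwise the teacher samples a replacement from its own distribution.
    """
    tokens = []
    for s_dist, t_dist in zip(student_probs, teacher_probs):
        vocab = range(len(s_dist))
        proposal = rng.choices(vocab, weights=s_dist)[0]
        teacher_top = sorted(vocab, key=lambda i: t_dist[i], reverse=True)[:top_k]
        if proposal in teacher_top:
            tokens.append(proposal)  # teacher accepts the student's token
        else:
            tokens.append(rng.choices(vocab, weights=t_dist)[0])  # teacher replaces it
    return tokens

rng = random.Random(0)
print(speculative_kd_tokens([[0.1, 0.2, 0.7]] * 4, [[0.6, 0.3, 0.1]] * 4, top_k=2, rng=rng))
```

The teacher's per-token distributions over the resulting sequence would then serve as the distillation targets for the student.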

AI Industry News and Announcements

- OpenAI New Hire: @SebastienBubeck announced joining OpenAI, highlighting the company's focus on safe AGI development.

- Perplexity Supply Launch: @perplexity_ai introduced Perplexity Supply, offering quality goods designed for curious minds.

- GitHub Copilot Updates: @svpino noted that GitHub Copilot is rapidly releasing new features, likely in response to competition from Cursor.

- Meta's AI Investments: @nearcyan reported that Meta now spends $4B on VR and $6B on AI, with a 43% profit margin.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Apple Showcases LMStudio in MacBook Pro Ad: Local LLMs Go Mainstream

- MacBook Pro M4 Max; Up to 526 GB/s Memory Bandwidth. (Score: 195, Comments: 87): The new MacBook Pro M4 Max chips boast up to 526 GB/s memory bandwidth, significantly enhancing local AI performance. This substantial increase in memory bandwidth is expected to greatly improve the speed and efficiency of AI-related tasks, particularly for on-device machine learning and data processing operations.

- So Apple showed this screenshot in their new Macbook Pro commercial (Score: 726, Comments: 116): Apple's new MacBook Pro commercial features a screenshot of LMStudio, a popular desktop app for running large language models (LLMs) locally. This inclusion suggests Apple is acknowledging and potentially endorsing the growing trend of local AI adoption, highlighting the capability of their hardware to run sophisticated AI models locally.

- LMStudio gains mainstream recognition through Apple's commercial, with users praising its features and user-friendliness. Some debate its open-source status and comparison to alternatives like Kobold and Ollama.

- The AI community's growth is highlighted, with discussions about its size and impact. AMD also showcased LM Studio benchmarks, indicating broader industry adoption of local AI tools.

- Users speculate on the performance of new Apple M4 chips for running large language models, with expectations of running 70B+ models at 8+ tokens/sec. Current M2 Ultra chips reportedly achieve similar performance.
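A common rule of thumb explains why memory bandwidth matters so much here: single-stream decoding of a dense model is usually bandwidth-bound, because every generated token has to read all the weights once. The sketch below turns that into a ceiling estimate. It assumes the 526 GB/s figure from the post title and ignores KV-cache traffic and compute limits, so real throughput will be lower.

```python
def max_tokens_per_second(bandwidth_gb_s, params_billion, bits_per_weight):
    """Rough ceiling on decode speed for a dense, memory-bandwidth-bound LLM.

    Assumes each generated token reads every weight from memory once and
    ignores KV-cache traffic, activations, and compute limits.
    """
    weights_gb = params_billion * bits_per_weight / 8  # size of the weights in GB
    return bandwidth_gb_s / weights_gb

for bits in (4, 8):
    print(f"70B @ {bits}-bit: <= {max_tokens_per_second(526, 70, bits):.1f} tok/s")
```

For a 70B model this gives a ceiling of about 15 tok/s at 4-bit and about 7.5 tok/s at 8-bit, which is in the same range as the "8+ tokens/sec" expectation above.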

Theme 2. Meta's Llama 4: Training on 100k+ H100 GPUs for 2025 Release

- Summary: The big AI events of October (Score: 99, Comments: 20): October 2024 saw the release of several significant AI models, including Flux 1.1 Pro for image creation, Meta's Movie Gen for video generation, and Stable Diffusion 3.5 in three sizes as open source. Notable multimodal models introduced include Janus AI by DeepSeek-AI, Google DeepMind and MIT's Fluid text-to-image model with 10.5B parameters, and Anthropic's Claude 3.5 Sonnet New and Claude 3.5 Haiku, showcasing advancements in various AI capabilities.

- Flux 1.1 Pro generated discussion about open-source potential, with users speculating that it could become "invincible" if released openly. The conversation evolved into a debate about the limits of AI intelligence, particularly in language models versus image generation.

- The release of Stable Diffusion 3.5 was highlighted as a significant development for local, non-API-based image generation. Users expressed enthusiasm for this open-source model's accessibility.

- Discussion touched on the future of AI models, with predictions that standalone image models may soon be replaced by multimodal models integrating video capabilities. Some users speculated that AI could create entire comics "at the click of a button" within two years.

- Llama 4 Models are Training on a Cluster Bigger Than 100K H100’s: Launching early 2025 with new modalities, stronger reasoning & much faster (Score: 573, Comments: 157): Meta's Llama 4 models are reportedly training on a massive cluster exceeding 100,000 H100 GPUs, with plans for an early 2025 launch. According to a tweet and Meta's Q3 2024 earnings report, the new models are expected to feature new modalities, stronger reasoning capabilities, and significantly improved speed.

- Users expressed excitement about Llama 4's potential, with hopes it could match or surpass GPT-4/Turbo capabilities. Some speculated on model sizes, wishing for options from 9B to 123B parameters to suit various hardware configurations.

- Discussion centered on the massive 100,000 H100 GPU cluster used for training, with debates about power consumption (estimated 70 MW) and comparisons to industrial facilities. Some praised Meta's investment in open-source AI development.

- Comparisons were made between Llama and other models like Mistral and Nemotron, with users discussing relative performance and use cases. Some expressed hopes for improved usability and trainability in Llama 4 beyond benchmark scores.
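The thread's 70 MW estimate is consistent with simple arithmetic on GPU power alone, assuming roughly 700 W per GPU (the H100 SXM's maximum TDP). This is a back-of-the-envelope sketch; whole-facility draw would be higher once CPUs, networking, and cooling are included.

```python
def cluster_gpu_power_mw(num_gpus, watts_per_gpu):
    """GPU-only power draw in megawatts (no CPUs, networking, or cooling)."""
    return num_gpus * watts_per_gpu / 1_000_000

print(cluster_gpu_power_mw(100_000, 700))  # 70.0
```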

Theme 3. Local AI Alternatives Challenge Cloud APIs: Cortex and Whisper-Zero

- Cortex: Local AI API Platform - a journey to build a local alternative to OpenAI API (Score: 66, Comments: 29): Cortex, a local AI API platform, aims to provide an alternative to OpenAI API with multimodal support. The project focuses on creating a self-hosted solution that offers similar capabilities to OpenAI's API, including text generation, image generation, and speech-to-text functionality. Cortex is designed to give users more control over their data and AI models while providing a familiar interface for developers accustomed to working with OpenAI's API.

- Cortex differs from Ollama in its use of C++ (vs. Go) and storage of models in universal file formats. It aims for 1:1 equivalence to the OpenAI API spec, focusing on multimodality and stateful operations.

- The project is designed as a local alternative to the OpenAI API platform, with plans to support multimodal tasks and real-time capabilities. It will integrate with Ichigo, a local real-time voice AI, and push a forward fork of llama.cpp for multimodal speech support.

- Some users expressed skepticism, viewing Cortex as "another llama-cpp wrapper." The developers clarified that it goes beyond a simple wrapper, aiming to unify various engines and handle complex multimodal tasks across different hardware and AI models.

- How did whisper-zero manage to reduce whisper hallucinations? Any ideas? (Score: 72, Comments: 49): Whisper-Zero, a modified version of OpenAI's Whisper speech recognition model, claims to reduce hallucinations in speech recognition. The post author is seeking information on how Whisper-Zero achieved this improvement, particularly in handling silence and background noise, which were areas where the original Whisper model struggled with hallucinations.

- Whisper inherits issues from YouTube autocaptioning, including hallucinations like adding "[APPLAUSE]" during silence. Users report the model sometimes adds random sentences or gets "stuck" repeating words, especially during silent periods.

- The claim of "eliminates hallucinations" is questioned, with suggestions that noise reduction preprocessing might be used. Some users note that Large-V3 performs worse than Large-V2 for certain tasks, including accented speech recognition.

- Skepticism about the "hallucination-free" claim is expressed, with users pointing out that a 10-15% WER improvement doesn't equate to zero hallucinations. The pricing ($0.6/hour transcribed) is also criticized as expensive compared to free alternatives.
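Since the thread suspects preprocessing, here is one common mitigation for silence-induced hallucinations: don't feed long silent stretches to Whisper at all. This is a toy energy gate, not Whisper-Zero's (undisclosed) method. The frame size and RMS threshold are assumptions, and production pipelines typically use a trained voice-activity detector with padding around speech instead.

```python
import math

def drop_silent_frames(samples, frame_size, rms_threshold):
    """Keep only frames whose RMS energy exceeds rms_threshold.

    samples: mono audio as floats in [-1, 1]. This is a toy energy gate;
    it does not pad speech boundaries or adapt to background noise.
    """
    kept = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        rms = math.sqrt(sum(x * x for x in frame) / len(frame))
        if rms > rms_threshold:
            kept.extend(frame)
    return kept
```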

Theme 4. Optimizing LLM Inference: KV Cache Compression and New Models

- [R] Super simple KV Cache compression (Score: 39, Comments: 5): The researchers discovered a simple method to improve LLM inference efficiency by compressing the KV cache, as detailed in their paper "A Simple and Effective L2 Norm-Based Strategy for KV Cache Compression". Their approach leverages a strong correlation between the L2 norm of cached key embeddings and the attention scores those tokens receive (keys with a low L2 norm tend to receive high attention), so high-norm entries can be evicted without compromising performance.

- Introducing Starcannon-Unleashed-12B-v1.0 — When your favorite models had a baby! (Score: 41, Comments: 8): Starcannon-Unleashed-12B-v1.0 is a new merged model combining nothingiisreal/MN-12B-Starcannon-v3 and MarinaraSpaghetti/NemoMix-Unleashed-12B, available on HuggingFace. The model claims improved output quality and ability to handle longer context, and can be used with either ChatML or Mistral settings, running on koboldcpp-1.76 backend.
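The KV-cache idea above can be sketched in a few lines: score each cached token by the L2 norm of its key and keep the lowest-norm entries. This is a minimal single-head, single-layer sketch assuming plain Python lists; the helper name is hypothetical, and the paper's exact policy (which layers to compress, how many tokens to keep) may differ.

```python
import math

def compress_kv_cache(keys, values, keep):
    """Keep the `keep` cached entries whose key vectors have the smallest L2 norm.

    keys, values: per-token vectors for one head in one layer. Low-norm keys
    tend to receive high attention, so high-norm entries are evicted.
    Survivors stay in their original token order.
    """
    norms = [math.sqrt(sum(k * k for k in key)) for key in keys]
    ranked = sorted(range(len(keys)), key=lambda i: norms[i])
    survivors = sorted(ranked[:keep])
    return [keys[i] for i in survivors], [values[i] for i in survivors]
```

Because the score depends only on the keys, no attention weights need to be materialized to decide what to evict, which is what makes the approach cheap to bolt onto existing attention kernels.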

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Developments and Capabilities

- OpenAI's o1 model: Sam Altman announced that OpenAI's o series of reasoning models are "on a quite steep trajectory of improvement". Upcoming o1 features include function calling, developer messages, streaming, structured outputs, and image understanding. The full o1 model is still being worked on but will be released "soon".

- Google's AI code generation: AI now writes over 25% of code at Google, according to a report. This highlights the increasing role of AI in software development at major tech companies.

- Salesforce's xLAM-1b model: A 1-billion-parameter model that achieves 70% accuracy in function calling, surpassing GPT-3.5 despite its relatively small size.

- Phi-3 Mini update: Rubra AI released an updated Phi-3 Mini model with function calling capabilities, competitive with Mistral-7b v3.

AI Tools and Interfaces

- Invoke 5.3: A new release featuring a "Select Object" tool that allows users to pick out specific objects in an image and turn them into editable layers, useful for image editing workflows.

- Wonder Animation: A tool that can transform any video into a 3D animated scene with CG characters.

AI Ethics and Societal Impact

- AI alignment: Discussions about the challenges of aligning AI with human values and the potential implications of highly advanced AI systems.

- Mixed reality concepts: A video demonstrating potential applications of mixed reality technology, showcasing the intersection of AI and augmented reality.

AI Discord Recap

A summary of Summaries of Summaries by o1-mini

Theme 1. Turbocharge Your AI: Models Get a Speed Boost

- Meta's Llama 3.2 Turbocharged!: Meta releases quantized Llama 3.2 models, boosting inference speed by 2-4x and slashing model size by 56% using Quantization-Aware Training.

- SageAttention Outpaces FlashAttention: SageAttention achieves 2.1x and 2.7x performance gains over FlashAttention2 and xformers respectively, enhancing transformer efficiency.

- BitsAndBytes Native Quantization Launched: Hugging Face integrates native quantization support with bitsandbytes, introducing 8-bit and 4-bit options for streamlined model storage and performance.
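Part of how SageAttention stays accurate while quantizing Q and K to INT8 is, per its paper, "smoothing" K by subtracting the mean key across tokens. That step is free in exact arithmetic: subtracting the same vector μ from every key changes each logit q·k_j to q·k_j - q·μ, a constant per query, and softmax is invariant to constant shifts. The pure-Python check below only demonstrates that invariance; it does not quantize anything or model the actual kernel.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention_weights(q, keys):
    scale = 1 / math.sqrt(len(q))
    return softmax([dot(q, k) * scale for k in keys])

def smooth_keys(keys):
    """Subtract the per-channel mean over tokens (the K-smoothing step)."""
    n = len(keys)
    mean = [sum(k[c] for k in keys) / n for c in range(len(keys[0]))]
    return [[k[c] - mean[c] for c in range(len(k))] for k in keys]
```

After smoothing, the keys cluster around zero instead of sharing a large common offset, which leaves more of the INT8 range for the differences that actually matter.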

Theme 2. Fresh AI Models Hit the Scene

- SmolLM2 Takes Off with 11T Tokens: SmolLM2 family launches with models ranging from 135M to 1.7B parameters, trained on a massive 11 trillion tokens and fully open-sourced under Apache 2.0.

- Recraft V3 Dominates Design Language: Recraft V3 claims superiority in design language, outperforming rivals like Midjourney and OpenAI, pushing the boundaries of AI-generated creativity.

- Hermes 3 Flexes Against Llama 3.1: Hermes 3 excels with role-play dataset finetuning, maintaining strong personas via system prompts and proving superior to Llama 3.1 in conversational consistency.

Theme 3. Build Smart: Advanced AI Tooling and Frameworks

- HuggingFace Unveils Native Quantization: Integration of bitsandbytes library enables 8-bit and 4-bit quantization, enhancing model flexibility and performance within Hugging Face’s ecosystem.

- Aider Enhances Coding with Auto-Patches: Aider now auto-generates bug fixes and documentation, allowing developers to apply patches with one click, streamlining code reviews and boosting productivity.

- OpenInterpreter Adds Custom Profiles: Users can create customizable profiles in Open Interpreter via Python files, enabling tailored model selections and context adjustments for diverse applications.
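The core idea behind 8-bit weight quantization can be sketched with plain absmax scaling: divide by the largest magnitude so values map onto [-127, 127], then round. This is a simplified illustration, not bitsandbytes' code. Its LLM.int8() scheme adds finer-grained (vector-wise) scales and mixed-precision handling of outlier features, and its 4-bit path uses a different (NF4) code.

```python
def absmax_quantize(weights):
    """Symmetric int8 quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back to 1.0 for all-zero input
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.0, 1.0]
q, scale = absmax_quantize(weights)
print(q, dequantize(q, scale))
```

Each weight is then stored in one byte plus a shared scale, and the round-trip error per weight is at most half a quantization step (scale / 2).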

Theme 4. Deployment Dilemmas: Navigating AI Infrastructure

- Multi-GPU Fine-Tuning Coming Soon: Unsloth AI hints at launching multi-GPU fine-tuning by year’s end, focusing initially on vision models to enhance overall model support.

- Network Woes Under Investigation: OpenRouter tackles sporadic network connection issues between cloud providers causing 524 errors, with ongoing improvements showing promise.

- Docker Images for Unsloth Receive Feedback: Community testing and feedback on Unsloth’s Docker Image highlight the importance of user insights for optimizing container usability and performance.
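While OpenRouter investigates the root cause, clients typically absorb transient timeouts by retrying with exponential backoff (524 is a non-standard status Cloudflare uses when an origin server times out). This is a generic sketch, not OpenRouter's recommended policy. The `send` callable and the set of retryable statuses are assumptions, and retrying an LLM request may re-bill tokens if the first attempt actually completed upstream.

```python
import random
import time

RETRYABLE_STATUSES = {429, 502, 503, 524}  # assumed set; tune for your provider

def call_with_backoff(send, max_attempts=5, base_delay=0.5, sleep=time.sleep, rng=random.random):
    """Call send() until it returns a non-retryable status or attempts run out.

    send: hypothetical zero-arg callable returning (status_code, body).
    max_attempts must be >= 1. Waits use exponential backoff with full jitter.
    """
    for attempt in range(max_attempts):
        status, body = send()
        if status not in RETRYABLE_STATUSES:
            return status, body
        if attempt < max_attempts - 1:
            sleep(rng() * base_delay * (2 ** attempt))
    return status, body
```

Injecting `sleep` and `rng` keeps the policy testable without real waiting; full jitter spreads retries out so many clients hitting the same outage don't retry in lockstep.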

Theme 5. Search Smarter: AI Enhancements in Information Retrieval

- ChatGPT's Search Supercharged: OpenAI upgrades ChatGPT’s web search, enabling faster, more accurate answers with relevant links, significantly enhancing user experience.

- Perplexity AI Rolls Out Image Uploads: The ability to upload images in Perplexity AI is hailed as a major improvement, though users express concerns over missing functionalities post-update.

- WeKnow-RAG Combines Web and Knowledge Graphs: WeKnow-RAG integrates Web search and Knowledge Graphs into a Retrieval-Augmented Generation system, enhancing LLM response reliability and combating factual inaccuracies.
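When a RAG system pulls candidates from heterogeneous sources such as web search and a knowledge graph, their raw scores are rarely comparable. Reciprocal rank fusion (RRF) is one common, simple way to merge such rankings. Note that this sketch swaps in RRF as an example; WeKnow-RAG's own combination method may differ, and the document IDs below are hypothetical.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists of document IDs with reciprocal rank fusion.

    Each document scores the sum of 1 / (k + rank) over every list it appears
    in (ranks start at 1); k=60 is a commonly used default.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

web_hits = ["doc_a", "doc_b", "doc_c"]  # hypothetical web-search ranking
kg_hits = ["doc_c", "doc_a", "doc_d"]   # hypothetical knowledge-graph ranking
print(reciprocal_rank_fusion([web_hits, kg_hits]))
```

Because RRF only uses ranks, documents that both retrievers agree on (here doc_a and doc_c) rise to the top without any score calibration.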

PART 1: High level Discord summaries

HuggingFace Discord

- Llama 3.2 Models Turbocharged: Meta's new quantized versions of Llama 3.2 1B & 3B improve inference speed by 2-4x and reduce the model size by 56%, utilizing Quantization-Aware Training.

- Community discussions highlighted how this enhancement allows for quicker performance without compromising quality.

- Native Quantization Support Launched: Hugging Face has integrated native quantization support via the bitsandbytes library, enhancing model flexibility.

- The new features include 8-bit and 4-bit quantization, streamlining model storage and use with improved performance.

- Effective Strategies for Reading Research Papers: Members shared diverse objectives for reading papers, focusing on implementation versus staying updated, with one noting, "I don't think I have ever implemented something from a paper."

- A structured three-step reading method was discussed, noting its efficiency in grasping complex academic content.

- AI Tool Auto-generates Bug Fixes: An AI tool has been developed to autogenerate patches for bugs, allowing developers to apply fixes with a single click upon a PR submission.

- This tool not only enhances code quality but also saves time during code reviews by catching issues early.

- Troubleshooting SD3Transformer2DModel Import: A member faced issues importing SD3Transformer2DModel in VSCode while successfully importing another model, indicating possible module-specific complications.

- The community engaged in collaborative troubleshooting, demonstrating the group's commitment to problem-solving in technical contexts.

Nous Research AI Discord

- Flash-Attn Now Runs on A6000: A member successfully got flash-attn 2.6.3 working on CUDA 12.4 and PyTorch 2.5.0 with an A6000, resolving previous issues by building it manually.

- They noted difficulties with pip installs leading to linking errors, but the new setup appears promising.

- Perplexity Introduces New Supply Line: Perplexity launched Perplexity Supply, aiming to provide quality products for curious minds.

- This prompted discussions about competition with Nous, indicating a need to enhance their own offerings.

- The Future of AI Assistants: Discussion arose around AI assistants managing multiple tasks via a blend of local and cloud integrations.

- Members debated if local computing resources are sufficient for comprehensive AI functionality and usability.

- Hermes 3 Shines Against Llama 3: Hermes 3 excels thanks to finetuning on role-play datasets, staying truer than Llama 3.1 to personas set via system prompts.

- Users found ollama helpful for testing models, offering simple commands for customization.

- SmolLM2 Family Showcases Lightweight Capability: The SmolLM2 family, with sizes like 135M, 360M, and 1.7B parameters, is designed for on-device tasks while being lightweight.

- The 1.7B variant shows improvements in instruction following and reasoning compared to SmolLM1.

Unsloth AI (Daniel Han) Discord

- ETA for Multi-GPU Fine Tuning: Members are eager to know the arrival time for multi-GPU fine tuning, with indications it might be available 'soon (tm)' before year's end.

- Focus remains on enhancements related to vision models and overall model support.

- Debate on Quantization Techniques: Discussions revolve around the best Language Models for fine-tuning under 3 billion parameters, with suggestions like DeBERTa and Llama.

- Tradeoffs between potential quality loss and speed improvements in quantization were actively debated.

- Unsloth Framework Shows Promise: Members praise the Unsloth framework for its efficient fine-tuning capabilities, highlighting its user-friendly experience.

- Queries regarding its flexibility for advanced tasks like layer freezing yielded assurances that those features are supported.

- Memory Issues Running Inferences: A user flagged increasing GPU memory usage after multiple inference runs with 'unsloth/Meta-Llama-3.1-8B', raising alarms over memory accumulation.

- Efforts to clear memory using torch.cuda.empty_cache() didn't resolve the issue, suggesting deeper memory management concerns.

- Community Tests Unsloth Docker Image: A member shared a link to their Unsloth Docker Image for community feedback.

- Discussion emphasized the importance of community insights for improving Docker images and container usability.

Perplexity AI Discord

- Grok 2 Model Gets Mixed Feedback: Users expressed a mix of enjoyment and frustration over the new Grok 2 model, especially regarding its availability on the Perplexity iOS app for Pro users.

- Some remarked it lacks helpful personality traits, leading to varying user experiences.

- Perplexity Pro Subscription Issues Continue: Several users reported ongoing problems with Pro subscriptions, including unrecognized subscription statuses.

- Frustration arose over limited source outputs despite payments, with questions raised about the service's quality.

- Users Love Image Upload Features: The ability to upload images in Perplexity has been praised as a significant enhancement, improving user interactions.

- However, concerns remain about performance quality and missing functionalities after recent updates.

- Confusion Over Search Functions in Perplexity: Discussions reveal confusion about the clarity of the search function, with users noting its primary focus on titles.

- Frustrations were compounded by responses being rerouted to GPT without upfront developer communication.

- Users Draw Comparisons Between Perplexity and ChatGPT: Members compared Perplexity and ChatGPT, examining functionalities and perceived pros and cons.

- Overall, some suggested that ChatGPT may perform better in certain contexts, sparking questions about Perplexity's effectiveness.

OpenAI Discord

- Reddit AMA with OpenAI Executives: A Reddit AMA with Sam Altman, Kevin Weil, Srinivas Narayanan, and Mark Chen is set for 10:30 AM PT. Users can submit their questions for discussion.

- This event presents a direct line for the community to engage with OpenAI’s leadership.

- Revamped ChatGPT Search Feature: ChatGPT has upgraded its search capabilities, allowing for faster and more accurate answers with relevant links.

- This major improvement is expected to enhance user experience significantly.

- Insights on GPT-4 Training Frequency: Participants discussed that significant GPT-4 updates typically require 2-4 months for training and safety testing. Some members argued for more frequent minor updates based on user feedback.

- This divergence in opinion illustrates the varied perceptions regarding the product development cycle.

- Crafting a D&D DM GPT: An exciting project is underway to create a D&D DM GPT that enhances tabletop gaming experiences through AI integration.

- This initiative aims to create a more interactive storytelling mechanism within D&D sessions.

- Debating AI Generation Constraints: Discussions emerged around limiting AI generation to solely reflect the outcomes of user actions. Members emphasized a need for clarity on enabling interactive AI that aligns with user interactions.

- Further elaboration was sought on how best to define these limits to refine the model's context.

OpenRouter (Alex Atallah) Discord

- OpenAI Speech-to-Speech API Availability: Users are curious about the new OpenAI Speech-to-Speech API, but there is currently no estimated release date.

- This uncertainty has led to a lively discussion, as participants eagerly await specifics on its deployment.

- Claude 3.5's Concise Mode Sparks Debate: A heated debate emerged over Claude 3.5's new 'concise mode', with some users finding its responses overly restricted.

- Participants voiced mixed experiences, with many unable to discern significant differences in the API's functionality.

- Clarifying OpenRouter Credit Pricing: Users broke down the pricing for OpenRouter credits, noting it costs about $1 for roughly 0.95 credits after fees.

- Free models have a 200 requests per day limit, while paid usage rates differ based on model and demand.

- Gemini API Enhances Search with Google Grounding: The Gemini API now supports Google Search Grounding, integrating features similar to those found in Vertex AI.

- Users cautioned that pricing may be higher than expected, but they acknowledged its potential for enhancing tech-related queries.

- Network Connection Issues Under Investigation: Sporadic network connection issues between two cloud providers are under investigation, leading to 524 errors.

- Recent improvements seem promising, and the team aims to provide updates as further details about the request timeout issues emerge.

aider (Paul Gauthier) Discord

- Aider Reads Files Automatically: Aider now automatically reads the on-disk version of files at each command, allowing users to see the latest updates without manual additions. Extensions like Sengoku can further automate file management in the developer environment.

- This enhances interaction efficiency, making it easier for users to manage their coding resources.

- Anticipation for Haiku 3.5: Discussion buzzed around the expected release of Haiku 3.5, speculated to drop later this year but not imminently. A strong community sentiment suggests that a launch would generate significant excitement.

- The eagerness implies high standards for improvements in this version.

- Continue as a Promising AI Assistant: Users appreciate Continue, an AI code assistant for VS Code that rivals Cursor's autocomplete features. Its user-friendly interface is praised for enhancing coding efficiency through customizable workflows.

- This tool reinforces the trend towards more integrated development environments.

- Aider’s Analytics Feature: Aider introduced an analytics function that collects anonymous user data to improve overall usability. Encouraging users to opt in to analytics will help identify popular features and assist debugging efforts.

- User feedback can significantly shape future iterations of Aider.

- Aider and Ollama Performance Hiccups: Some users face performance issues when integrating Aider with Ollama, particularly with larger model sizes causing slow responses. There's a call for a robust setup to optimize seamless functionality.

- Challenges with performance highlight the critical need for improved compatibility and efficiency.

Eleuther Discord

- Open-sourced Value Heads Inquiry: Members expressed difficulty in finding open-sourced value heads, indicating a collective challenge in the community.

- This suggests an opportunity for collaboration and knowledge sharing among members looking for these resources.

- Universal Transformers underutilization: Despite their benefits, Universal Transformers (UTs) often require modifications like long skip connections to work well, which has left them underexplored.

- Complexities involving chaining and halting further hinder broader adoption, raising questions about their practical implementation.

- Deep Equilibrium Networks face skepticism: Deep Equilibrium Networks (DEQs) have potential but struggle with stability and training complexities, leading to doubts about their functionality.

- Concerns about fixed points in DEQs emphasize their challenges in achieving parameter efficiency compared to simpler models.

- Timestep Shifting promises optimization: New advancements in Stable Diffusion 3 around timestep shifting offer ways to optimize computations in model inference.

- Community efforts are reflected in shared code aimed at numerically solving timestep shifting for discrete schedules.

- Gradient Descent and Fixed Points Exploration: The need for adjusting step sizes in gradient descent emerged as crucial when exploring implications on fixed points in neural networks.

- Discussion pointed out challenges related to recurrent structures and their potential to manifest useful fixed points in applications.
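For context on the timestep-shifting item: SD3-style rectified-flow models remap a timestep t in [0, 1] (1 = pure noise) as shift·t / (1 + (shift - 1)·t), and the SD3 paper relates the shift to the ratio of image resolutions. The sketch below only applies this closed-form shift to a uniform discrete schedule; the community code mentioned above solves a related problem numerically and may work differently, and the helper names are hypothetical.

```python
def shift_timestep(t, shift):
    """Map t in [0, 1] to shift * t / (1 + (shift - 1) * t).

    shift > 1 spends more sampling steps at noisier timesteps; shift == 1
    leaves t unchanged; endpoints 0 and 1 are fixed points.
    """
    return shift * t / (1 + (shift - 1) * t)

def shifted_schedule(num_steps, shift):
    """Uniform schedule from 1 down to 1/num_steps, then shifted."""
    return [shift_timestep(1 - i / num_steps, shift) for i in range(num_steps)]

print(shifted_schedule(4, 3.0))
```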

Latent Space Discord

- Jasper AI Doubles Down on Enterprises: Jasper AI reported a doubling of enterprise revenue over the past year, now serving 850+ customers, including 20% of the Fortune 500. They launched innovations like the AI App Library and Marketing Workflow Automation to further aid marketing teams.

- This growth aligns with an increased focus on AI adoption within enterprise marketing, with many teams prioritizing adoption strategies as competitive tools.

- OpenAI's Search Just Got a Boost: OpenAI has enhanced ChatGPT's web search functionality, allowing for more accurate and timely responses for users. This update positions ChatGPT well against emerging competition in the evolving AI search landscape.

- Users have already begun noticing the difference, with reports highlighting improvements in information retrieval precision compared to previous iterations.

- ChatGPT and Perplexity Battle for Search Supremacy: Debates ensue over the search results quality from ChatGPT versus Perplexity, as both platforms upgraded their capabilities. Users noted ChatGPT's advantage in providing relevant information more effectively.

- This rivalry highlights the growing focus on user satisfaction in search engines, driving further innovation and enhancements across platforms.

- Rise of Groundbreaking AI Tools: Recraft V3 claims to excel in design language, outperforming rivals like Midjourney and OpenAI's offerings. In addition, SmolLM2, an open-source model, sports training on a massive 11 trillion tokens.

- These advancements reflect a competitive marathon in AI capabilities, pushing boundaries in design and natural language processing.

- Call for AI Regulations Grows Louder: Anthropic's recent blog advocates for targeted regulation of AI, emphasizing the need for timely legislative responses. Their comments contribute meaningfully to the discourse on AI governance and ethics.

- With rising concerns about the societal impact of AI, this piece sparks conversations about how regulations can shape the future landscape of technology.

LM Studio Discord

-

venvstacks streamlines Python installs: `venvstacks` simplifies shipping the Python-based Apple MLX engine without requiring separate installations. Available on PyPI via `$ pip install --user venvstacks`, the utility is open source and documented in a technical blog post.

- The integration supports the MLX engine within LM Studio, enhancing user experience.

- LM Studio celebrates Apple MLX support: The latest LM Studio 0.3.4 release brings support for Apple MLX, along with integrated downloadable Python environments detailed in a blog post.

- Members highlighted that venvstacks is pivotal for a seamless user experience with Python dependencies.

- M2 Ultra impresses with T/S performance: Users reported 8 to 12 tokens per second (T/S) on the M2 Ultra, with speculation that reaching 12 to 16 T/S would not make a meaningful difference. Rumors suggest upcoming M4 chips may rival NVIDIA's RTX 4090, stirring excitement.

- Community members are eagerly awaiting more performance benchmarks as they share their experiences.

- Mistral Large gains popularity: Satisfaction with Mistral Large continues, with users sharing its capabilities and effectiveness in generating coherent outputs.

- However, members noted that 36GB of unified memory limits how smoothly larger models can run.

- Understanding system prompts in API requests: A discussion surfaced on the significance of system prompts, clarifying that parameters in API payloads override UI settings. This offers flexibility, but it means settings must be applied consistently across requests.

- Members emphasized the importance of understanding this for optimizing interactions with the LM Studio APIs.
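To make the precedence point concrete, here is a minimal sketch of a chat request body for LM Studio's local server. It assumes the server's default address (`http://localhost:1234`) and its OpenAI-compatible `/v1/chat/completions` route. `build_payload` and the model identifier `"local-model"` are hypothetical placeholders. Because the system prompt and sampling parameters are carried inside the request, they are what the model sees for that call, whatever the UI is set to.

```python
import json

# Assumption: LM Studio's local server on its default port, exposing the
# OpenAI-compatible chat completions route.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"

def build_payload(model, user_msg, system_prompt=None, **params):
    """Build a chat-completions request body (hypothetical helper).

    Everything set here (system prompt, temperature, ...) is sent with the
    request, so it takes precedence over what is configured in the UI.
    """
    messages = []
    if system_prompt is not None:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_msg})
    payload = {"model": model, "messages": messages}
    payload.update(params)  # e.g. temperature, max_tokens
    return payload

payload = build_payload("local-model", "Summarize MLX in one line.",
                        system_prompt="You are terse.", temperature=0.2)
body = json.dumps(payload)
```

To actually send it, pass `body.encode()` as the `data` of a `urllib.request.Request` aimed at `LMSTUDIO_URL` with a `Content-Type: application/json` header. Routing every request through a single helper like this is a simple way to keep the system prompt consistent.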

GPU MODE Discord

-

Data Type Conversion in Tensors Explained: Discussion focused on tensor data types, especially f32, f16, and fp8, examining the implications of stochastic rounding in conversions.

- The exploration also covered how conversions map between raw bit representations and standard floating-point formats.

- Exploring the Shape of Int8 Tensor Core WMMA Instructions: A member noted that the shape of the int8 tensor core WMMA instruction, with M fixed at 16, is tied to how memory is handled in LLM workloads.

- This raised questions about implementations when M is small, indicating possible memory optimization strategies.

- Learning Triton and Visualization Fix Updates: A member expressed gratitude for a patch that restored visualization in their Triton learning process, helping them work through the Triton puzzles.

- Their return to Triton reflects renewed interest in this area, coupled with active involvement in discussions.

- ThunderKittens Library for User-Friendly CUDA Tools: ThunderKittens aims to create easily usable CUDA libraries, managing 95% of complexities while allowing users to engage with raw CUDA / PTX for the remaining 5%.

- The Mamba-2 kernel showcases its extensibility by integrating custom CUDA for complex tasks, highlighting the library's flexibility.

- Comments on Deep Learning Efficiency Guide: A member shared their guide on efficiency in deep learning, covering relevant papers, libraries, and techniques.

- Feedback included suggestions to add sections on writing numerically stable algorithms, reflecting the community's commitment to knowledge sharing.
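As an illustration of the stochastic rounding discussed above (not code from the discussion), here is a minimal pure-Python sketch that rounds an f32 value to bfloat16. It uses bf16 instead of f16 or fp8 because bf16 is simply the upper 16 bits of an f32, which keeps the trick to a few lines: add uniform random noise to the 16 bits that will be discarded, then truncate. A value rounds up with probability equal to how far it sits between its two bf16 neighbors, so the result is unbiased on average. NaN and infinity handling is deliberately omitted.

```python
import random
import struct

def f32_to_bits(x: float) -> int:
    # Reinterpret a float32 as its 32-bit unsigned integer pattern.
    return struct.unpack("<I", struct.pack("<f", x))[0]

def bits_to_f32(bits: int) -> float:
    # Reinterpret a 32-bit unsigned integer pattern as a float32.
    return struct.unpack("<f", struct.pack("<I", bits))[0]

def stochastic_round_bf16(x: float, rng: random.Random) -> float:
    """Stochastically round x (as float32) to one of its two neighboring
    bfloat16 values. NaN/inf are not handled."""
    bits = f32_to_bits(x)
    noise = rng.getrandbits(16)  # uniform in [0, 2**16)
    # Adding noise carries into bit 16 with probability (low 16 bits) / 2**16;
    # the mask then drops the 16 bits that bf16 cannot represent.
    rounded = (bits + noise) & 0xFFFF0000
    return bits_to_f32(rounded)

rng = random.Random(0)
x = 1.0 + 2**-9  # a quarter of the way from 1.0 to the next bf16 value
samples = [stochastic_round_bf16(x, rng) for _ in range(20_000)]
mean = sum(samples) / len(samples)
```

Every sample is either 1.0 or 1.0078125 (the next bf16 value), and roughly a quarter of them round up, so `mean` stays close to `x`. Round-to-nearest would always return 1.0 and lose the offset entirely, which is why stochastic rounding matters when many small updates are accumulated in low precision.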

Cohere Discord

-

Cohere API Frontend Options Lauded: Members discussed various Chat UI frontend options compatible with the Cohere API key, confirming that the Cohere Toolkit fits the bill.

- One user shared insights on building applications, noting that the toolkit supports rapid deployment.

- Chatbots Could Replace Browsers: A member shared R&D efforts focused on simulating ChatGPT's browsing process, aiming to analyze its output filtering mechanisms.

- This initiative ignited excitement and prompted further probing into how ChatGPT's algorithms differ from conventional SEO methods.

- Application Review Process Underway: The team reaffirmed that application acceptances are in progress, ensuring thorough scrutiny of each submission.

- They noted that concrete agent-building experience is a key factor in selection.

- Fine-tuning Issues Tackled: Team members are addressing fine-tuning issues with scheduled updates following a user's concerns about ongoing problems.

- Resolving these issues remains pivotal for further development, and upcoming testing is set to explore ChatGPT's browsing capabilities.

- Cohere-Python Installation Troubles Resolved: Issues related to installing the cohere-python package with `poetry` were raised, with members sharing experiences and seeking help.

- Resolution came soon after, leading to appreciation for collaborative troubleshooting within the community.

Interconnects (Nathan Lambert) Discord

-

Creative Writing Arena Debuts: A new category, Creative Writing Arena, focused on originality, garnered about 15% of votes in its debut. The rankings of key models shifted significantly, with ChatGPT-4o-Latest rising to #1.

- The introduction of this category highlights the shift towards enhancing artistic expression in AI-generated content.

- SmolLM2: The Open Source Wonder: The SmolLM2 model, featuring 1.7B parameters in its largest variant and trained on 11T tokens, is now fully open-source under Apache 2.0.

- The team aims to promote collaboration by releasing all datasets and training scripts, fostering community-driven innovation.

- Evaluating Models on ARC Gains Traction: Evaluating models on ARC is gaining popularity, reflecting improvements in evaluation standards within the community.

- Participants noted that these evaluations indicate strong base model performance and are becoming a mainstream approach.

- Llama 4 Training Brings Big Clusters: Llama 4 models are being trained on a cluster of more than 100K H100s, a major scale-up in training infrastructure. Job openings for researchers focusing on reasoning and code generation have also been announced via a job link.

- Mark Zuckerberg highlighted this training infrastructure during Meta's earnings call, underscoring the competitive push.

- Podcast Welcomes Scarf Profile Pic Guy: The scarf profile pic guy joins the podcast, causing a buzz among members, with one responding "Lfg!" This highlights the community's enthusiasm for notable guest appearances.

- NatoLambert reminisced about their history as one of the OG Discord friends, emphasizing the long-standing connections within this community.

Stability.ai (Stable Diffusion) Discord

-

Inpaint Tool Proves Useful: Users discussed the inpaint tool as a valuable method for correcting images and composing elements, making it easier to achieve desired results.

- Inpainting can be tricky, but it often becomes essential to finalize images, boosting user confidence in their abilities.

- Interest in Stable Diffusion Benchmarks: Members are curious about recent benchmarks for Stable Diffusion, particularly regarding performance on enterprise GPUs compared to personal 3090 setups.

- One user noted that using cloud services could potentially speed up the generation process.

- Discussion on Model Bias: Users observed a trend where the latest models often produce images with reddened noses, cheeks, and ears, prompting a debate over the underlying causes.

- Speculation centered on VAE issues and inadequate training data, especially from anime sources, as possible causes.

- Seeking Community Help for Projects: A user sought assistance for creating a promo video, prompting suggestions to post in related forums for more expertise.

- The responses highlighted a strong collaborative effort within the community to share knowledge and resources.

- Personal Preferences in Image Processing: A member shared their workflow preferences, noting they preferred to separate the img2img and upscale steps instead of relying on integrated solutions.

- This method allows for a more thoughtful refinement of images before finalizing them.

Modular (Mojo 🔥) Discord

-

Community Meeting Preview on November 12th: The next community meeting is set for November 12th, featuring insights from Evan's LLVM Developers' Meeting talk focusing on linear/non-destructible types in Mojo.

- Members can submit questions for the meeting through the Modular Community Q&A, with 1-2 spots open for community talks.

- Debate on C-style Macros: A discussion highlighted that introducing C-style macros could create confusion, advocating for custom decorators as a simpler alternative.

- Members stressed the importance of keeping Mojo simple while introducing decorator capabilities.

- Compile-Time SQL Query Validation: There’s potential for SQL query validation at compile time using decorators, although detailed DB schema validation might require more handling.

- Concerns were raised about the feasibility of verifying queries this way.

- Custom String Interpolators for Efficiency: Introducing custom string interpolators in Mojo, akin to those in Scala, could streamline syntax checks for SQL strings.

- Implementing this feature may avoid complications linked to traditional macros.

- Static MLIR Reflection vs Macros: A discussion around static MLIR reflection suggests it might surpass traditional macros in terms of type manipulation capabilities.

- Maintaining simplicity remains vital to avoid issues with language server protocols while utilizing this feature effectively.

DSPy Discord

-

Masters Thesis Graphic Shared: A member shared a graphic created for their Masters thesis, indicating its potential usefulness to others.

- Unfortunately, no additional details about the graphic were provided.

- Stepping Up CodeIt with GitHub: A GitHub Gist titled 'CodeIt Implementation: Self-Improving Language Models with Prioritized Hindsight Replay' was shared, containing a detailed implementation guide.

- This resource could be particularly valuable for those engaged in related research efforts.

- WeKnow-RAG Blends Web with Knowledge Graphs: WeKnow-RAG integrates Web search and Knowledge Graphs into a 'Retrieval-Augmented Generation (RAG)' system, enhancing LLM response reliability, as detailed in the arXiv paper.

- This innovative system addresses LLMs' propensity for generating factually incorrect content.

- XMC Project Explores In-Context Learning: xmc.dspy demonstrates effective In-Context Learning tactics for eXtreme Multi-Label Classification (XMC), operating efficiently with minimal examples, and more information is available at GitHub.

- This approach could significantly enhance the efficiency of classification tasks.

- DSPy Namesake Origin Story: The `dspy` name initially had to be worked around on PyPI, with the package installed via `pip install dspy-ai`. Thanks to community efforts, the clean `pip install dspy` was eventually achieved following a user-related request, as noted by Omar Khattab.

- This illustrates the importance of community engagement in project development.

OpenInterpreter Discord

-

Open Interpreter Profiles customization: Users can create new profiles in Open Interpreter via the guide, allowing for customization through Python files, including model selection and context window adjustments.

- Profiles enable multiple optimized variations, accessed using `interpreter --profiles`, enhancing user flexibility.

- Desktop Client updates and events: Updates for the desktop client were discussed, with the community's House Party positioned as the prime source for the latest announcements and beta access.

- Members highlighted that past attendees have gained early access, hinting at future developments.

- ChatGPT Search gets an upgrade: OpenAI revamped ChatGPT's web search capabilities, providing fast, timely answers with relevant links aimed at improving response accuracy.

- This advancement encourages a better user experience, making answers more contextually relevant.

- Meta's Robotics Innovations Announced: At Meta FAIR, three robotics advancements were unveiled, including Meta Sparsh, Meta Digit 360, and Meta Digit Plexus, elaborated in a post.

- These developments aim to boost the open source community's capabilities, showcasing advances in tactile sensing.

- Concerns Over Anthropic API Integration: Frustrations arose concerning the recent updates in version 0.4.x of Open Interpreter, affecting local execution and Anthropic API integration.

- Suggestions emerged to make Anthropic API integration optional to enhance community support for local models.


tinygrad (George Hotz) Discord

-

Skepticism about NPU Performance: Concerns persist regarding NPU performance in Microsoft laptops, with discussions hinting at alternatives like Qualcomm and Rockchip for better experiences.

- Members engaged in evaluating these alternatives alongside skepticism about current vendor offerings.

- Exporting Tinygrad Models Hits Buffer Issues: Members faced challenges exporting a Tinygrad model derived from ONNX, finding `BufferCopy` objects instead of `CompiledRunner` in the `jit_cache`.

- Suggestions were made to filter these out to avoid runtime issues when calling `compile_model()`.

- Reverse Engineering Hailo Op-Codes: One member sought tools like IDA for reverse engineering Hailo chip op-codes stored in .hef files, frustrated by the absence of a universal coding interface.

- They pondered over the option of exporting to ONNX versus directly reverse engineering.

- Tensor Assignment Confusion in Lazy.py: A member questioned why creating a disk tensor requires `Tensor.empty()` followed by `assign()`, expressing confusion over how this works.

- They also highlighted the use of `assign` for writing new key-values to the KV cache during inference, suggesting broader functionality.

- What’s the Deal with Assign Method?: Another discussion questioned whether it makes any difference to create new tensors rather than use the `assign` method when gradients aren't being tracked.

- Participants noted the need for clarity on the method's utility and behavioral distinctions.
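The `jit_cache` filtering suggestion from the export discussion can be sketched as follows. To keep the example self-contained, `CompiledRunner`, `BufferCopy`, and `ExecItem` here are hypothetical stand-ins named after the types mentioned in the discussion. They are not tinygrad's real classes, and `kernels_only` is an illustrative helper. In real code you would check against tinygrad's own runner types.

```python
from dataclasses import dataclass

# Hypothetical stand-ins named after the runner types from the discussion;
# these are NOT tinygrad's real classes.
@dataclass
class CompiledRunner:
    name: str

@dataclass
class BufferCopy:
    src: str
    dst: str

@dataclass
class ExecItem:
    prg: object  # the runner that executes this cache entry

def kernels_only(jit_cache):
    """Keep only entries whose runner is a compiled kernel, dropping copies."""
    return [ei for ei in jit_cache if isinstance(ei.prg, CompiledRunner)]

cache = [ExecItem(BufferCopy("disk", "gpu")),
         ExecItem(CompiledRunner("r_32_4")),   # hypothetical kernel names
         ExecItem(CompiledRunner("E_16"))]
kept = kernels_only(cache)
```

Filtering only removes the copy steps from what gets exported. Any data movement those `BufferCopy` entries performed still has to be handled some other way, so the exported model's runtime behavior should be checked afterward.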

LlamaIndex Discord

-

Automated Research Paper Reporter Takes Off: LlamaIndex is creating an automated research paper report generator that downloads papers from arXiv, processes them via LlamaParse, and indexes them in LlamaCloud, further easing report generation, as showcased in this tweet. More details are available in their blog post outlining this feature.

- Users eagerly anticipate this functionality's impact on paper-related workloads.

- OpenTelemetry Enhances LlamaIndex Experience: OpenTelemetry is now integrated with LlamaIndex, so traces can be logged directly into an observability platform, as detailed in this documentation. This integration strengthens telemetry strategies for developers navigating complex production environments, as highlighted in this tweet.

- This move simplifies monitoring metrics for intricate applications.

- LlamaParse Struggles with Schema Consistency: Members raised concerns that LlamaParse parses PDF documents into inconsistent schemas, which complicates imports into Milvus databases. Standardizing the parse output remains a priority for users managing multi-schema data.

- Uniformity in JSON outputs is crucial for smoother data handling and user experience.

- Call for Milvus Field Standardization: Users expressed worries about varied field structures in outputs from multiple documents, complicating imports into Milvus databases. They are exploring ways to achieve standardized parsing outputs.

- Lack of uniformity may hinder integration efforts across diverse datasets.

- Custom Retriever Queries Get a Boost: A discussion emerged on how to add extra meta information when querying a custom retriever beyond the basic query string. Users debated if creating a custom QueryFusionRetriever would be the solution to effectively manage this additional data.

- Optimizing retrieval strategies could enhance the efficiency of data queries.
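One common workaround for the inconsistent-schema problem raised above is to normalize every parsed record onto a single canonical field set before inserting it into Milvus. The following is a minimal version under stated assumptions: the canonical fields, their defaults, and the alias table are all hypothetical and would need to match your own collection. The sketch does not call LlamaParse or Milvus.

```python
# Hypothetical canonical schema: field name -> default value.
CANONICAL_FIELDS = {"title": "", "page": -1, "text": "", "source": ""}

# Hypothetical aliases seen across differently-parsed documents.
FIELD_ALIASES = {
    "heading": "title",
    "page_number": "page",
    "content": "text",
    "body": "text",
    "file_name": "source",
}

def normalize_record(raw: dict) -> dict:
    """Map one parsed record onto the canonical schema.

    Known aliases are renamed, missing fields get defaults, and unknown
    keys are dropped so every row has exactly the same columns.
    """
    record = dict(CANONICAL_FIELDS)
    for key, value in raw.items():
        target = FIELD_ALIASES.get(key, key)
        if target in record:
            record[target] = value
    return record

rows = [normalize_record(r) for r in [
    {"heading": "Intro", "page_number": 1, "content": "Background text."},
    {"title": "Methods", "page": 3, "body": "Setup details.", "extra": True},
]]
```

Dropping unknown keys keeps the rows uniform. If those keys matter, a reasonable alternative is to collect them into a single metadata field rather than discard them.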

LAION Discord

-

Searching for Nutritional Datasets: A member is on the hunt for a dataset rich in detailed nutritional information, including barcodes and dietary tags, due to the shortcomings of the OpenFoodFacts dataset.

- They aim to find a more structured dataset that meets their needs for developing food detection models.

- Frustration with Patch Artifacts: Members vent frustration over patch artifacts arising in autoregressive image generation, expressing a need for alternatives to vector quantization.

- Despite their disdain for Variational Autoencoders (VAEs), they feel forced to consider them due to the challenges faced in clean image generation.

- Discussion on Image Generation Alternatives: One member suggested that generating images without a VAE still relies on patches, which end up functioning much like a VAE.

- This sparked a broader conversation about the inherent challenges in image generation methods that don't lean on traditional approaches.

Gorilla LLM (Berkeley Function Calling) Discord

-

Parameter Type Errors Cause Confusion: A member reported experiencing parameter type errors, with the model returning a string instead of the expected integer during evaluation.

- This bug directly lowers the model's evaluation results, making it a significant concern within the community.

- How to Evaluate Custom Models: A query emerged on evaluating finetuned models on the Berkeley Function Calling leaderboard, particularly about processing single and parallel calls.

- Clarity on this topic is crucial for ensuring proper understanding of the evaluation methods available.

- Command Output Issues Spark Confusion: A member shared that running `bfcl evaluate` reported that no models were evaluated, raising questions about the command's efficacy.

- Guidance was given to check evaluation result locations, hinting at a lack of clarity in using the command properly.

- Correct Command Sequence Essential for Evaluation: It was clarified that before running the evaluation command, one must run `bfcl generate` followed by the model name to obtain responses.

- This detail is essential for participants to correctly follow the evaluation process.

- Model Name in Generate Command Confirmed: Members confirmed that `xxxx` in the generation command refers to the model name, emphasizing the importance of accurate command syntax.

- Consulting the setup instructions is vital for ensuring proper command execution.
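The string-instead-of-integer bug described above is the kind of mismatch a simple argument type check catches. The following is illustrative only and is not BFCL's actual checker. `type_errors` and the JSON-schema-style type names in `TYPE_MAP` are assumptions made for the example.

```python
# Hypothetical JSON-schema-style type names -> Python types.
TYPE_MAP = {"integer": int, "number": (int, float), "string": str,
            "boolean": bool, "array": list, "object": dict}

def type_errors(args: dict, param_types: dict) -> list:
    """Return a message for every argument whose type doesn't match."""
    errors = []
    for name, expected in param_types.items():
        if name not in args:
            continue  # missing-argument checks are a separate concern
        value = args[name]
        ok = isinstance(value, TYPE_MAP[expected])
        # bool is a subclass of int in Python, so reject it explicitly
        if expected in ("integer", "number") and isinstance(value, bool):
            ok = False
        if not ok:
            errors.append(f"{name}: expected {expected}, got {type(value).__name__}")
    return errors

# A model call that emitted "5" (a string) where an integer was expected:
print(type_errors({"count": "5", "unit": "km"},
                  {"count": "integer", "unit": "string"}))
# prints ['count: expected integer, got str']
```

The explicit `bool` rejection matters because `isinstance(True, int)` is `True` in Python. Without it, a model that answered `True` for an integer parameter would slip through.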

OpenAccess AI Collective (axolotl) Discord

-

SageAttention surpasses FlashAttention: The newly introduced SageAttention method significantly improves quantization for attention in transformer models, reaching OPS (operations per second) roughly 2.1x and 2.7x higher than FlashAttention2 and xformers, respectively, as noted in this research paper. It also reports better accuracy than FlashAttention3, suggesting potential for efficiently handling longer sequences.

- Moreover, the impact of SageAttention on future transformer model architectures could be considerable, filling a critical gap in performance optimization.

- Confusion over Axolotl Docker tags: Concerns were raised regarding the Docker image release strategy for `winglian/axolotl` and `winglian/axolotl-cloud`, particularly whether dynamic tags like `main-latest` are appropriate for stable production use. Users highlighted the need for clearer documentation of this release strategy, since tags of the form `main-YYYYMMDD` imply daily builds rather than stable versions.

- This discussion underlines the growing need for clarity in versioning as users seek reliable deployments for production environments.

- H100 compatibility on the horizon: A member reported that H100 compatibility is forthcoming, referring to a relevant GitHub pull request that highlights upcoming improvements in the bitsandbytes library. This compatibility update promises to enhance integration within existing AI workflows.

- Community members expressed anticipation regarding the performance boosts and new applications that this compatibility could introduce to their projects.

- bitsandbytes update discussion: The latest discussions centered around the implications of the anticipated H100 compatibility for the bitsandbytes library, with community members keen on sharing insights regarding its potential benefits. Enthusiasm for the update suggests a pivotal moment for innovation in their ongoing projects.

- As enhancements unfold, members examined possible performance upgrades and numerous applications that the new compatibility might yield.
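To show why SageAttention-style quantization can stay accurate, here is a simplified pure-Python sketch of two ideas associated with the method: smoothing K by subtracting its mean over tokens, and quantizing Q and K to INT8 before the QK^T product. This is an illustration under simplifying assumptions, not the paper's kernel. It uses per-vector rather than per-block scales and a single query, and it skips the P·V step. Smoothing is safe because subtracting the same vector from every key shifts all of a query's scores by the same constant, which softmax ignores. It also makes K's values smaller and easier to quantize.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def quantize_int8(vec):
    """Symmetric per-vector INT8 quantization: max |v| maps to 127."""
    scale = max(abs(v) for v in vec) / 127 or 1.0  # avoid a zero scale
    return [max(-127, min(127, round(v / scale))) for v in vec], scale

def int8_attention_weights(q, keys):
    """Attention weights for one query using smoothed, INT8-quantized Q and K."""
    dim = len(q)
    # Smooth K: subtract the per-channel mean key from every key.
    mean = [sum(k[c] for k in keys) / len(keys) for c in range(dim)]
    smoothed = [[k[c] - mean[c] for c in range(dim)] for k in keys]
    q_int, q_scale = quantize_int8(q)
    scores = []
    for k in smoothed:
        k_int, k_scale = quantize_int8(k)
        # Integer dot product, then dequantize with the product of the scales.
        scores.append(dot(q_int, k_int) * q_scale * k_scale / math.sqrt(dim))
    return softmax(scores)

q = [0.5, -1.0, 2.0]
keys = [[1.0, 0.2, -0.3], [0.4, 0.9, 1.1], [-0.7, 0.3, 0.8]]
weights = int8_attention_weights(q, keys)
```

Comparing `weights` against the full-precision `softmax([dot(q, k) / math.sqrt(3) for k in keys])` shows that the two agree closely for this small example, even though the scores were computed from 8-bit integers.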

LangChain AI Discord

-

Custom Model Creation is Key: A member emphasized that the only option available is to create fully custom models, directing others to the Hugging Face documentation for guidance.

- Members acknowledged the importance of utilizing these resources, noting that numerous examples can assist in the development process.

- Build Your Own Chat Application with Ollama: A member shared a LinkedIn post about building a chat application using Ollama, highlighting its flexibility.

- The post underlined the benefits of customization and control offered by Ollama, which are crucial for effective chat solutions.

- Discussion on Essential Chat Application Features: Members discussed critical features to integrate into chat applications, emphasizing security and enhanced user experience.

- They pointed out that incorporating features like real-time messaging can significantly improve user satisfaction.

Alignment Lab AI Discord

-

Steam Gift Card Share: A member shared a link to purchase a $50 Steam gift card available at steamcommunity.com. This might be of interest for engineers looking to game or utilize game engines for projects.

- The gift card could be a fun incentive or a tool for team-building activities, encouraging creativity within the engineering community.

- Steam Gift Promotion Repeat: Interestingly, the same $50 Steam gift card link was also shared in a different channel, emphasizing its availability again at steamcommunity.com.

- This duplication could indicate a strong interest among members to engage with gaming content or rewards.

LLM Agents (Berkeley MOOC) Discord

-

Interest Sparked in LLM Agents: Participants express interest in learning about LLM Agents through the Berkeley MOOC.

- evilspartan98 highlighted this opportunity to deepen understanding of agent-based models in language processing.

- Berkeley MOOC Engagement: The ongoing discussion in the Berkeley MOOC suggests growing interest among members in the future implications of LLM Agents.

- The collective engagement emphasizes a shared enthusiasm for exploring innovative frameworks and applications in the field.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!