[AINews] That GPT-4o Demo

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Omnimodel is all you need

AI News for 6/27/2024-6/28/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (417 channels, and 3655 messages) for you. Estimated reading time saved (at 200wpm): 354 minutes. You can now tag @smol_ai for AINews discussions!

Romain Huet's demo of GPT-4o using an unreleased version of ChatGPT Desktop made the rounds yesterday and was essentially the second-ever high profile demo of GPT-4o after the release (our coverage here), and in the absence of bigger news is our pick of headliner today:

The demo starts at the 7:15:50 mark on stream, and you should watch the whole thing.

Capabilities demonstrated:

- low latency voicegen

- instructions to moderate tone to a whisper (and even quieter whisper)

- interruptions

- Camera mode on ChatGPT Desktop - constantly streaming video to GPT4o

- When paired with voice understanding it eliminates the need for a Send or Upload button

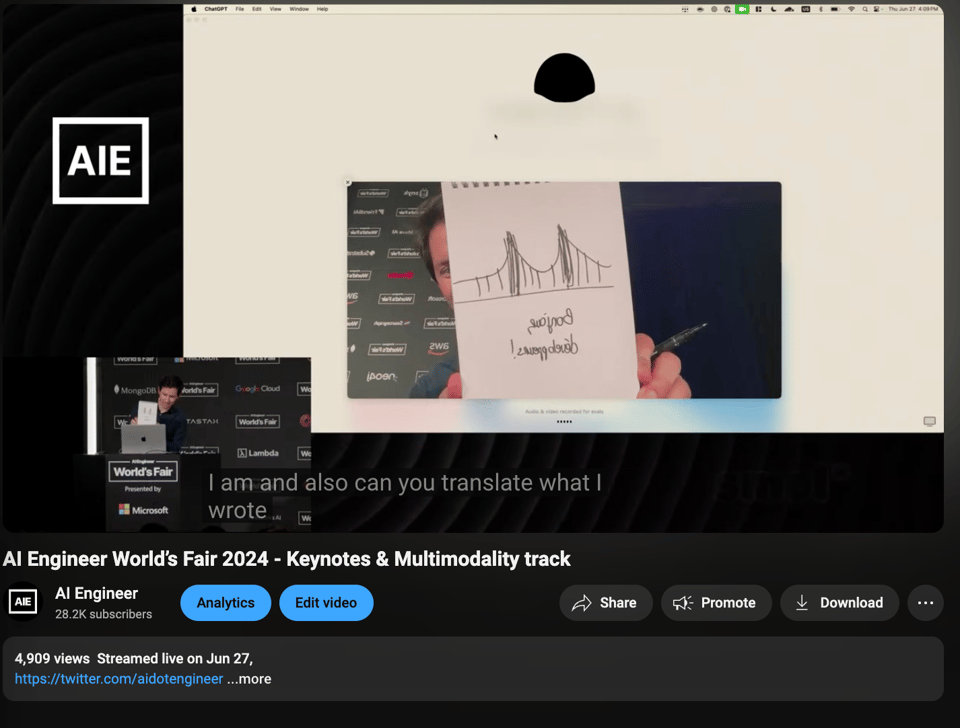

- Rapid OCR: Romain asks for a random page number, and presents the page of a book - and it reads the page basically instantly! Unfortunately the OCR failed a bit - it misread "Coca Cola" but conditions for the live demo weren't ideal.

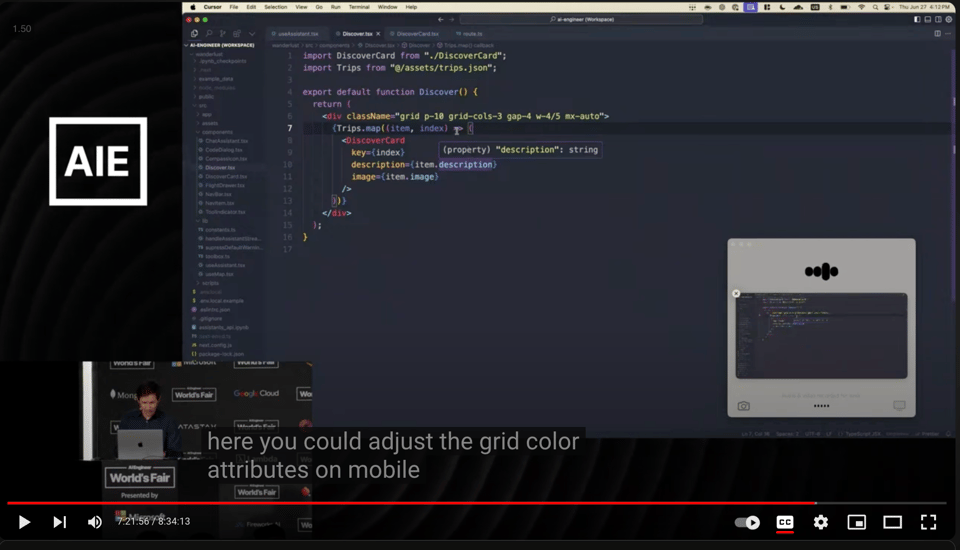

- Screen Sharing with ChatGPT: talking with ChatGPT to describe his programming problem and having it understand from visual context

- Reading Clipboard: copies the code, asks for a "one line overview" of the code (This functionality exists in ChatGPT Desktop today)

- Conversing with ChatGPT about Code: back and forth talking about Tailwind classnames in code, relying on vision (not clipboard)

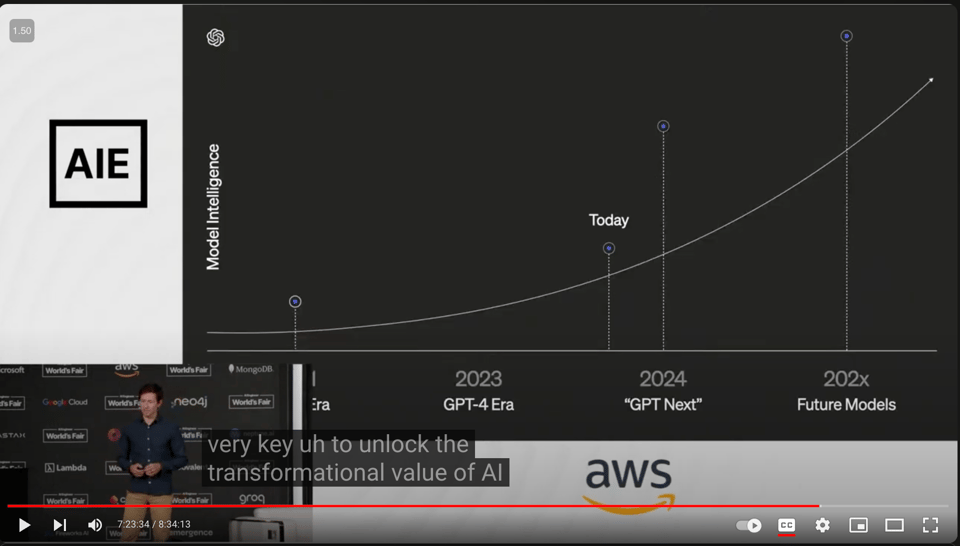

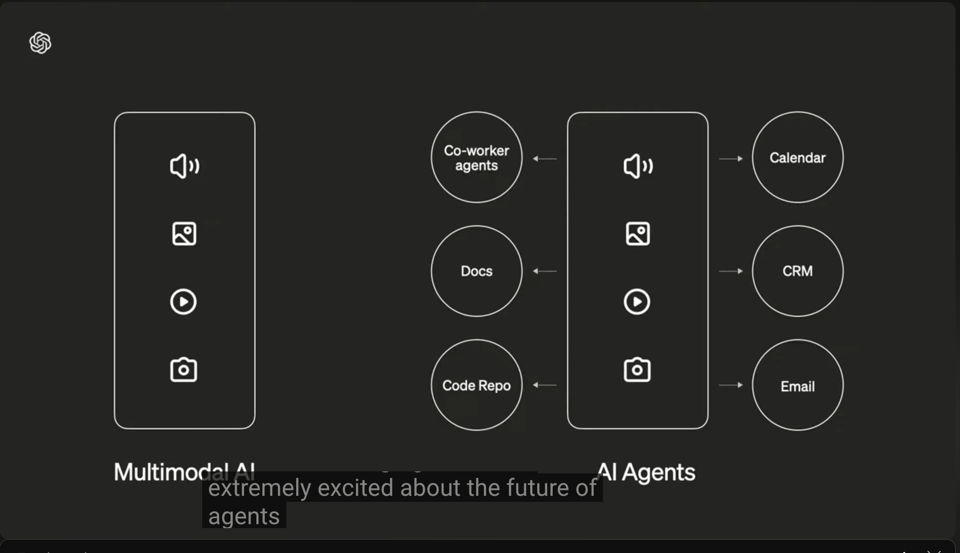

The rest of the talk discusses 4 "investment areas" of OpenAI:

- Textual intelligence (again using "GPT Next" instead of "GPT5"...)

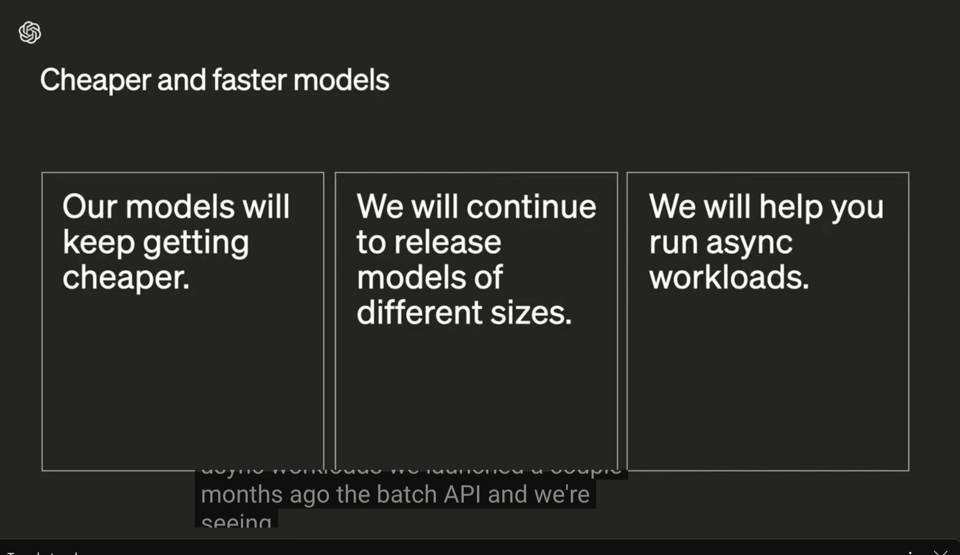

- Efficiency/Cost

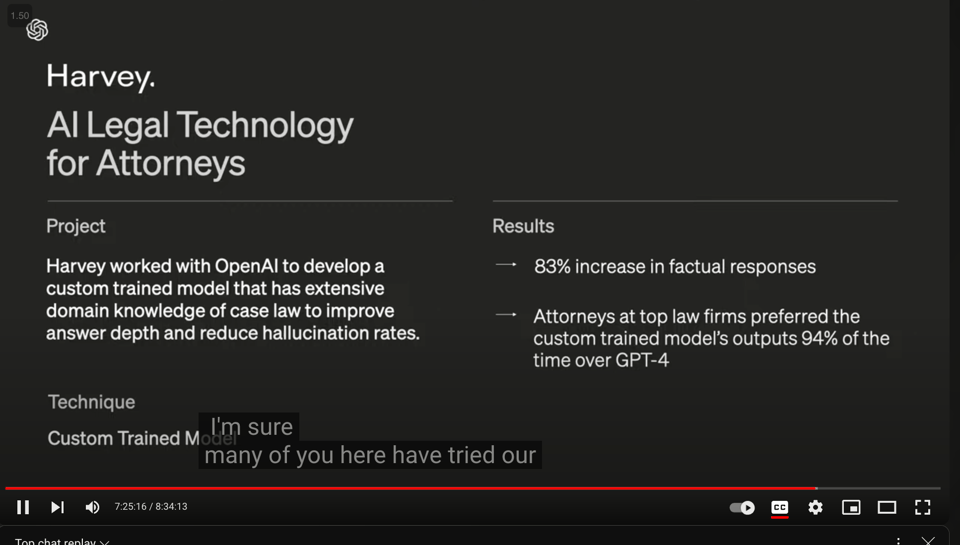

- Model Customization

- Multimodal Agents

, including a Sora and Voice Engine demo that you should really check out if you haven't seen it before.

, including a Sora and Voice Engine demo that you should really check out if you haven't seen it before.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Gemma 2 Release by Google DeepMind

- Model Sizes and Training: @GoogleDeepMind announced Gemma 2 in 9B and 27B parameter sizes, trained on 13T tokens (27B) and 8T tokens (9B). Uses SFT, Distillation, RLHF & Model Merging. Trained on Google TPUv5e.

- Performance: @GoogleDeepMind 9B delivers class-leading performance against other open models in its size category. 27B outperforms some models more than twice its size and is optimized to run efficiently on a single TPU host.

- Availability: @fchollet Gemma 2 is available on Kaggle and Hugging Face, written in Keras 3 and compatible with TensorFlow, JAX, and PyTorch.

- Safety: @GoogleDeepMind followed robust internal safety processes including filtering pre-training data, rigorous testing and evaluation to identify and mitigate potential biases and risks.

Meta LLM Compiler Release

- Capabilities: @AIatMeta announced Meta LLM Compiler, built on Meta Code Llama with additional code optimization and compiler capabilities. Can emulate the compiler, predict optimal passes for code size, and disassemble code.

- Availability: @AIatMeta LLM Compiler 7B & 13B models released under a permissive license for both research and commercial use on Hugging Face.

- Potential: @MParakhin LLMs replacing compilers could lead to near-perfectly optimized code, reversing decades of efficiency sliding. @clattner_llvm Mojo 🔥 is a culmination of the last 15 years of compiler research, MLIR, and many other lessons learned.

Perplexity Enterprise Pro Updates

- Reduced Pricing for Schools and Non-Profits: @perplexity_ai announced reduced pricing for Perplexity Enterprise Pro for any school, nonprofit, government agency, or not-for-profit.

- Importance: @perplexity_ai These organizations play a critical role in addressing societal issues and equipping children with education. Perplexity wants to ensure their technology is accessible to them.

LangChain Introduces LangGraph Cloud

- Capabilities: @LangChainAI announced LangGraph Cloud, infrastructure to run fault-tolerant LangGraph agents at scale. Handles large workloads, enables debugging and quick iteration, and provides integrated tracing & monitoring.

- Features: @hwchase17 LangGraph Studio is an IDE for testing, debugging, and sharing LangGraph applications. Builds on LangGraph v0.1 supporting diverse control flows.

Other Notable Updates and Discussions

- Gemini 1.5 Pro Updates: @GoogleDeepMind opened up access to 2 million token context window on Gemini 1.5 Pro for all developers. Context caching now available in Gemini API to reduce costs.

- Lucid Dream Experience: @karpathy shared a lucid dream experience, noting the incredibly detailed and high resolution graphics, comparing it to a Sora-like video+audio generative model.

- Anthropic Updates: @alexalbert__ Anthropic devs can now view API usage broken down by dollar amount, token count, and API keys in the new Usage and Cost tabs in Anthropic Console.

- Distillation Discussion: @giffmana and @jeremyphoward discussed the importance of distillation and the "curse of the capacity gap" in training smaller high-performing models.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Models and Architectures

- Gemma 2 models surpass Llama and Claude: In /r/LocalLLaMA, Google's Gemma 2 27B model beats Llama 3 70B in LMSYS benchmarks according to a technical report. The 9B variant also surpasses Claude 3 Haiku.

- Knowledge distillation for smaller models: Gemma 2 9B was trained using the 27B model as a teacher, an approach that could be the future for small/medium models, potentially using even larger models like Llama 400B as teachers.

- MatMul-free language modeling: A new paper introduces an approach that eliminates matrix multiplication from language models while maintaining strong performance. It reduces memory usage by 61% in training and 10x during inference, with custom FPGA hardware processing models at 13W.

- Sohu chip delivers massive performance: The specialized Sohu AI chip from Etched allegedly replaces 160 H100 GPUs, delivering 500,000 tokens/sec. It claims to be 10x faster and cheaper than Nvidia's next-gen Blackwell GPUs.

AI Applications and Use Cases

- AI-generated graphic novel: In /r/StableDiffusion, an author created a graphic novel 100% with Stable Diffusion, using SD1.5 iComix for characters, ControlNet for consistency, and Photoshop for layout. It's the first such novel published in Albanian.

- Website redesign with AI: A browser extension uses the OpenAI API to redesign websites based on a provided prompt, leveraging CSS variables. Experiments were done on shadcn.com and daisyui.com.

- Personalized AI assistant: An article on /r/LocalLLaMA details extending a personal Llama 3 8B model with WhatsApp and Obsidian data to create a personalized AI assistant.

Memes and Humor

- Disconnect in AI discussions: A meme video pokes fun at the disconnect between AI enthusiasts and the general public when discussing the technology.

- AI-generated movies: A humorous video imagines formulaic movies churned out by AI in the near future.

- Progress in AI-generated animations: A meme video of Will Smith eating spaghetti demonstrates AI's improving ability to generate realistic human animations, with only minor face and arm glitches remaining.

AI Discord Recap

A summary of Summaries of Summaries

1. Model Performance Optimization and Benchmarking

- Quantization techniques like AQLM and QuaRot aim to run large language models (LLMs) on individual GPUs while maintaining performance. Example: AQLM project with Llama-3-70b running on RTX3090.

- Efforts to boost transformer efficiency through methods like Dynamic Memory Compression (DMC), potentially improving throughput by up to 370% on H100 GPUs. Example: DMC paper by @p_nawrot.

- Discussions on optimizing CUDA operations like fusing element-wise operations, using Thrust library's

transformfor near-bandwidth-saturating performance. Example: Thrust documentation.

- Comparisons of model performance across benchmarks like AlignBench and MT-Bench, with DeepSeek-V2 surpassing GPT-4 in some areas. Example: DeepSeek-V2 announcement.

2. Fine-tuning Challenges and Prompt Engineering Strategies

- Difficulties in retaining fine-tuned data when converting Llama3 models to GGUF format, with a confirmed bug discussed.

- Importance of prompt design and usage of correct templates, including end-of-text tokens, for influencing model performance during fine-tuning and evaluation. Example: Axolotl prompters.py.

- Strategies for prompt engineering like splitting complex tasks into multiple prompts, investigating logit bias for more control. Example: OpenAI logit bias guide.

- Teaching LLMs to use

<RET>token for information retrieval when uncertain, improving performance on infrequent queries. Example: ArXiv paper.

3. Open-Source AI Developments and Collaborations

- Launch of StoryDiffusion, an open-source alternative to Sora with MIT license, though weights not released yet. Example: GitHub repo.

- Release of OpenDevin, an open-source autonomous AI engineer based on Devin by Cognition, with webinar and growing interest on GitHub.

- Calls for collaboration on open-source machine learning paper predicting IPO success, hosted at RicercaMente.

- Community efforts around LlamaIndex integration, with issues faced in Supabase Vectorstore and package imports after updates. Example: llama-hub documentation.

4. LLM Innovations and Training Insights

- Gemma 2 Impresses with Efficient Training: Google's Gemma 2 models, significantly smaller and trained on fewer tokens (9B model on 8T tokens), have outperformed competitors like Llama3 70B in benchmarks, thanks to innovations such as knowledge distillation and soft attention capping.

- Gemma-2's VRAM Efficiency Boosts QLoRA Finetuning: The new pre-quantized Gemma-2 4-bit models promise 4x faster downloads and reduced VRAM fragmentation, capitalizing on efficiency improvements in QLoRA finetuning.

- MCTSr Elevates Olympiad Problem-Solving: The MCT Self-Refine (MCTSr) algorithm integrates LLMs with Monte Carlo Tree Search, showing substantial success in tackling complex mathematical problems by systematically refining solutions.

- Adam-mini Optimizer's Memory Efficiency: Adam-mini optimizer achieves comparable or better performance than AdamW with up to 50% less memory usage by leveraging a simplified parameter partitioning approach.

5. Secure AI and Ethical Considerations

- Rabbit R1's Security Lapse Exposed on YouTube: A YouTube video titled "Rabbit R1 makes catastrophic rookie programming mistake" revealed hardcoded API keys in the Rabbit R1 codebase, compromising user data security.

- AI Usage Limit Warnings and Policy Compliance: Members highlighted the risks of pushing AI boundaries too far, cautioning that violating OpenAI's usage policies can result in account suspension or termination.

- Open-Source AI Debate: Intense discussions weighed the pros and cons of open-sourcing AI models, balancing potential misuse against democratization of access and the economic implications of restricted AI, considering both the benefits and the dangers.

6. Practical AI Integration and Community Feedback

- AI Video Generation with High VRAM Demands: Successful implementation of ExVideo generating impressive video results, albeit requiring substantial VRAM (43GB), demonstrates the continuous balance between AI capability and hardware limitations.

- Issues with Model Implementation Across Platforms: Integration issues with models like Gemma 2 on platforms such as LM Studio require manual fixes and the latest updates to ensure optimal performance.

- Challenges with RAG and API Limitations: Perplexity's RAG mechanism received criticism for inconsistent outputs, and limitations with models like Claude 3 Opus, showcasing struggles in context handling and API performance.

7. Datasets and Benchmarking Advancements

- REVEAL Dataset Benchmarks Verifiers: The REVEAL dataset benchmarks automatic verifiers of Chain-of-Thought reasoning, highlighting the difficulties in verifying logical correctness within open-domain QA settings.

- XTREME and SPPIQA Datasets for Robust Testing: Discussion on the XTREME and SPPIQA datasets focused on assessing multilingual models' robustness and multimodal question answering capabilities, respectively.

- Importance of Grounded Response Generators: The need for reliable models that provide grounded responses was highlighted with datasets like Glaive-RAG-v1, and considerations on scoring metrics for quality improvement.

8. Collaboration and Development Platforms

- Building Agent Services with LlamaIndex: Engineers can create vector indexes and transform them into query engines using resources shared in the LlamaIndex notebook, enhancing AI service deployment.

- Featherless.ai Offers Model Access Without GPU Setup: Featherless.ai launched a platform providing flat-rate access to over 450 models from Hugging Face, catering to community input on model prioritization and use cases.

- LangGraph Cloud Enhances AI Workflows: The introduction of LangGraph Cloud by LangChainAI promises robust, scalable workflows for AI agents, integrating monitoring and tracing for improved reliability.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- Gemma-2 Goes Lean and Keen: The new pre-quantized 4-bit versions of Gemma-2-27B and 9B are now available, boasting faster downloads and lesser VRAM fragmentation which are beneficial for QLoRA finetuning.

- The Great OS Debate for AI Development: Within the community, there's an active debate on the merits of using Windows vs. Linux for AI development, featuring concerns about peripheral compatibility on Linux and a general preference toward Linux despite perceived arbitrary constraints of Windows.

- Hugging Face's Evaluation System Under the Microscope: The community compared Hugging Face's evaluation system to a "popularity contest" and broached the notion of having premium paid evaluations, suggesting that an "evaluation should be allowed at any time if the user is willing to pay for it."

- Big Data, Big Headaches: Discussions around handling a 2.7TB Reddit dataset pointed out the immense resources needed for cleaning the data, which could inflate to "about 15 TB uncompressed... for meh data at best."

- AI Video Generation at the Edge of VRAM: The use of ExVideo for generating video content has been reported to deliver impressive results, yet it commands a formidable VRAM requirement of 43GB, emphasizing the constant balance between AI capabilities and resource availability.

HuggingFace Discord

- Gemma 2 Outshines Competition: Google's new Gemma 2 models have been integrated into the Transformers library, boasting advantages such as size efficiency—2.5x smaller than Llama3—and robust training on 13T tokens for the 27B model. Innovations like knowledge distillation and interleaving local and global attention layers aim for enhanced inference stability and memory reduction, with informative Gemma 2 details covered in a HuggingFace blog post.

- Deciphering New File System for Elixir: Elixir's FSS introduces file system abstraction with HTTP support and discusses non-extensibility concerns alongside HuggingFace's first open-source image-based retrieval system, making waves with a Pokémon dataset example and interest in further projects like visual learning models with CogVLM2 being spotlighted.

- AI Takes Flight with Multilingual App: U-C4N's multilingual real-time flight tracking application reveals the intersect of aviation and language, while a new 900M variant of PixArt aspires collaboration in the Spaces arena. Also, a fusion of AI and musical storytelling on platforms like Bandcamp breaks genre boundaries.

- Ready, Set, Gradio!: Gradio users face an action call to update to versions above 3.13 to avoid share link deactivation. Adherence will ensure continued access to Gradio's resources and is as simple as running

pip install --upgrade gradio.

- Machine Learning at Lightning Speed: A hyper-speed YouTube tutorial has engineered a 1-minute crash course on ten critical machine learning algorithms, fitting for those short on time but hungry for knowledge. Check this rapid lesson here.

LM Studio Discord

Gemma 2 Integration with Hiccups: The latest LM Studio 0.2.26 release adds support for Gemma 2 models, though some users report integration bugs and difficulties. To work around these issues, manual downloads and reinstallation of configs are suggested, with a note that some architectures, like ROCm, are still pending support.

Gemma-2's Confusing Capabilities: Discrepancies in the information about Gemma-2's context limit led to confusion, with conflicting reports of a 4k versus an 8k limit. Additionally, the support for storytelling model ZeusLabs/L3-Aethora-15B-V2 was recommended, and for models like Deepseek coder V2 Lite, users were advised to track GitHub pull requests for updates on support status.

Snapdragon Soars in LM Studio: Users praised the performance of Snapdragon X Elite systems for their compatibility with LM Studio, noting significant CPU/memory task efficiency compared to an i7 12700K, despite falling short compared to a 4090 GPU in specific tasks.

Threading the Needle for Multi-Agent Frameworks: Discussions on model efficacy suggested that a 0.5B model might comfortably proxy a user in a multi-agent framework; however, skepticism remains for such low-end models' capacity for coding tasks. For hardware enthusiasts, queries about the value of using dual video cards were answered positively.

Rift Over ROCm Compatibility and Gemma 2 Debuts: In the AMD ROCm tech-preview channel, queries about Gemma 2 model support for AMD GPUs were raised, pointing users to the newly released 0.2.26 ROCm "extension pack" for Windows described in GitHub instructions. Furthermore, Gemma 2's launch was met with both excitement and critique, with some users labeling it as "hot garbage" and others anxious for the promised improvements in coming updates.

OpenAI Discord

AI Usage Warnings: A discussion highlighted the risks of testing the limits of AI, leading to a clear warning: violating OpenAI's usage policies can result in account suspension or termination.

Open-source AI Debate: The engineering community debated the open-sourcing of AI models; the discussion contrasted the potential for misuse against the democratization of access, highlighting the economic implications of restricted access and the necessity of surveillance for public safety.

RLHF Training Puzzles Users: Conversations about Reinforcement Learning from Human Feedback (RLHF) revealed confusion regarding its occasional prompts and the opaque nature of how OpenAI handles public RLHF training.

AI Integration Triumphs and Woes: Experiences shared by members included issues with custom GPTs for specific tasks like medical question generation and successes in integrating AI models and APIs with other services for enhanced functionalities.

Prompt Engineering Insights: Members exchanged tips on prompt engineering, recommending simplicity and conciseness, with a foray into the use of "logit bias" for deeper prompt control and a brief touch on the quasi-deterministic nature of stochastic neural networks.

Stability.ai (Stable Diffusion) Discord

- Custom Style Datasets Raise Red Flags: Participants discussed the creation of datasets with a custom style, noting the potential risks of inadvertently generating NSFW content. The community underscored the complexity in monitoring image generation to avoid platform bans.

- Switching to Forge amid Automatic1111 Frustrations: Due to issues with Automatic1111, users are exploring alternatives like Forge, despite its own memory management challenges. For installation guidance, a Stable Diffusion Webui Forge Easy Installation video on YouTube has been shared.

- Cascade Channel Revival Requested: Many members voiced their desire to restore the Cascade channel due to its resourceful past discussions, sparking a debate on the guild's direction and possible focus shift towards SD3.

- Deep Dive into Model Training Specifics: Conversations about model training touched on the nuances of LoRa training and samplers such as 3m sde exponential, as well as VRAM constraints. The effectiveness and limitations of tools like ComfyUI, Forge, and Stable Swarm were also examined.

- Discord Community Calls for Transparency: A portion of the community expressed dissatisfaction with the deletion of channels and resources, impelling a discussion about the importance of transparent communication and preservation of user-generated content.

Latent Space Discord

- Scarlet AI Marches into Project Management: A preview of Scarlet AI for planning complex projects has been introduced; interested engineers can assess its features despite not being ready for prime time at Scarlet AI Preview.

- Character.AI Dials Into Voice: New Character Calls by Character.AI allows for AI interactions over phone calls, suitable for interview rehearsals and RPG scenarios. The feature is showcased on their mobile app demonstration.

- Meta Optimizes Compiler Code via LLM: Meta has launched a Large Language Model Compiler to improve compiler optimization tasks, with a deep dive into the details in their research publication.

- Infrastructure Innovations with LangGraph Cloud: LangChainAI introduced LangGraph Cloud, promising resilient, scalable workflows for AI agents, coupled with monitoring and tracing; more insights available in their announcement blog.

- Leadership Shift at Adept Amid Amazon Team-Up: News has surfaced regarding Adept refining their strategy along with several co-founders transitioning to Amazon's AGI team; learn more from the GeekWire article.

- OpenAI Demos Coming in Hot: The guild was notified of an imminent OpenAI demo, advising members to access the special OpenAI Demo channel without delay.

- GPT-4o Poised to Reinvent Coding on Desktop: The guild discussed the adoption of GPT-4o to aid in desktop coding, sharing configurations like

Open-Interpreterwhich could easily be integrated with local models.

- When Penguins Prefer Apples: The struggles of Linux users with streaming sparked a half-humorous, half-serious comparison with Mac advantages and brought to light Vesktop, a performance-boosting Discord app for Linux, found on GitHub.

- AI Community Leaks and Shares: There's chatter about potentially sensitive GPT definitions surfacing on platforms like GitHub; a nod to privacy concerns. Links to wear the scholarly hat were exchanged, illuminating CoALA frameworks and repositories for language agents which can be found at arXiv and on GitHub.

- Praise for Peer Presentations: Members showered appreciation on a peer for a well-prepared talk, highlighting the importance of quality presentations in the AI field.

Nous Research AI Discord

- LLMs' Instruction Pre-Training Edges Out: Incorporating 200M instruction-response pairs into pre-training large corpora boosted performance, allowing a modest Llama3-8B to hang with the big guns like Llama3-70B. Details on the efficient instruction synthesizer are in the Instruction Pre-Training paper, and the model is available on Hugging Face.

- MCTSr Fuses with LLMs for Olympian Math: Integrating Large Language Models with Monte Carlo Tree Search (MCTSr) led to notable success in solving mathematical Olympiad problems. The innards of this technique are spilled in a detailed study.

- Datasets Galore: SPPIQA, XTREME, UNcommonsense: A suite of datasets including SPPIQA for reasoning, XTREME for multilingual model assessment, and UNcommonsense for exploring scales of bizarre, were discussed across Nos Research AI channels.

- Hermes 2 Pro Launches with Function Boost: The Hermes 2 Pro 70B model was revealed, trumpeting improvements in function calls and structured JSON outputs, boasting scores of 90% and 84% in assessments. A scholarly read isn't offered, but you can explore the model at NousResearch's Hugging Face.

- Debating SB 1047's Grip on AI: Members heatedly debated whether Cali's SB 1047 legislation will stunt AI's growth spurts. A campaign rallying against the bill warns it could curb the risk-taking spirit essential for AI's blazing trail.

Eleuther Discord

- "Cursed" Complexity vs. Practical Performance: The yoco architecture's kv cache strategy sparked debate, with criticisms about its deviation from standard transformer practices and complexity. The discussion also covered the order of attention and feed-forward layers in models, as some proposed an efficiency gain from non-standard layer ordering, while others remained skeptical of the performance benefits.

- Scaling Beyond Conventional Wisdom: Discussions around scaling laws questioned the dominance of the Challax scaling model, with some participants proposing the term "scaling laws" to be provisional and better termed "scaling heuristics." References were made to papers such as "Parameter Counts in Machine Learning" to support viewpoints on different scaling models' effectiveness.

- Data Privacy Dilemma: Conversations surface privacy concerns in the privacy-preserving/federated learning context, where aggregate data exposes a wider attack space. The potential for AI agents implementing security behaviors was discussed, considering contextual behavior identification and proactive responses to privacy compromises.

- LLM Evaluation and Innovation: A new reasoning challenge dataset, MMLU-SR, was introduced and considered for addition to lm_eval, probing large language models' (LLMs) comprehension abilities through modified questions. Links to the dataset arXiv paper and a GitHub PR for the MedConceptsQA benchmark addition were shared.

- Instruction Tuning Potential in GPTNeoX: Queries on instruction tuning in GPTNeoX, specifically selectively backpropagating losses, led to a discussion that referenced an ongoing PR and a preprocessing script "preprocess_data_with_chat_template.py", signifying active development in tailored training workflows.

CUDA MODE Discord

- Triton Tribulations on Windows: Users have reported Triton installation issues on Windows when using

torch.compile, leading to "RuntimeError: Cannot find a working trilog installation." It's suggested that Triton may not be officially supported on Windows, posing a need for alternative installation methods.

- Tensor Tinkering with torch.compile: The author of Lovely Tensors faces breakage in

torch.compile()due toTensor.__repr__()being called on a FakeTensor. The community suggests leveraging torch.compiler fine-grain APIs to mitigate such issues. Meanwhile, an update to NCCL resolves a broadcast deadlock issue in older versions, as outlined in this pull request.

- Gearing up with CUDA Knowledge: Stephen Jones presents an in-depth overview of CUDA programming, covering wave quantization & single-wave kernels, parallelism, and optimization techniques like tiling for improved L2 cache performance.

- CUDA Curiosity and Cloud Query: Members share platforms like Vast.ai and Runpod.io for CUDA exploration on cloud GPUs, and recommend starting with

torch.compile, then moving to Triton or custom CUDA coding for Python to CUDA optimization.

- PMPP Publication Puzzle: A member highlights a physical copy of PMPP (4th edition) book missing several pages, inciting queries about similar experiences.

- torch/aten Ops Listing and Bug-Hunting: The torchao channel surfaces requests for a comprehensive list of required

torch/aten opsfor tensor subclasses such asFSDP, conversations about a recursion error with__torch_dispatch__, and a refactor PR for Int4Tensor. Additionally, there was a caution regarding GeForce GTX 1650's lack of native bfloat16 support.

- HuggingFace Hub Hubbub: The off-topic channel buzzes with chatter about the pros and cons of storing model architecture and preprocessing code directly on HuggingFace Hub. There's debate on the best model code and weight storage practices, with the Llama model cited as a case study in effective release strategy.

- Gemma 2 Grabs the Spotlight: The Gemma 2 models from Google, sporting 27B and 9B parameters, outshine competitors in benchmarks, with appreciation for openness and anticipation for a smaller 2.6B variant. Discussions also focused on architectural choices like approx GeGLU activations and the ReLU versus GELU debate, backed by scholarly research. Hardware challenges with FP8 support led to mentions of limitations in NVIDIA's libraries and Microsoft's work on FP8-LM. Yuchen's training insights suggest platform or dataset-specific issues when optimizing for H100 GPUs.

Perplexity AI Discord

- Perplexity Cuts Costs for a Cause: Perplexity introduces reduced pricing for its Enterprise Pro offering, targeting schools and nonprofits to aid in their societal and educational endeavors. Further information on eligibility and application can be found in their announcement.

- RAG Frustration and API Agitation: Within Perplexity's AI discussion, there's frustration over the erratic performance of the RAG(Relevance Aware Generation) mechanism and demand for access to larger models such as Gemma 2. Additionally, users are experiencing limitations with Claude 3 Opus, citing variable and restrictive usage caps.

- Security First, Fixes Pending: Security protocols were addressed, directing members to the Trust Center for information on data handling and PII management. Meanwhile, members suggested using "#context" for improved continuity handling ongoing context retention issues in interactions.

- Tinkering with Capabilities: The community's attention turned to exploring Android 14 enhancements, while raising issues with Minecraft's mechanics potentially misleading kids. An inquiry into filtering API results to receive recent information received guidance on using specific date formats.

- Tech Deep-Dives and Innovations Spotlighted: Shared content included insights on Android 14, criticisms of Linux performance, innovative uses of Robot Skin, and sustainable construction inspired by oysters. A notable share discussed criticisms of Minecraft's repair mechanics potentially leading to misconceptions.

Interconnects (Nathan Lambert) Discord

- Character.AI Pioneers Two-Way AI Voice Chats: Character.AI has launched Character Calls, enabling voice conversations with AI, though the experience is marred by a 5-second delay and less-than-fluid interaction. Meanwhile, industry chatter suggests Amazon's hiring of Adept's cofounders and technology licensing has left Adept diminished, amid unconfirmed claims of Adept having a toxic work environment.

- AI Agents Trail Behind the Hype Curve: Discussions draw parallels between AI agents' slow progress and the self-driving car industry, claiming that hype outpaces actual performance. The quality and sourcing of training data for AI agents, including an emerging focus on synthetic data, were highlighted as pivotal challenges.

- SnailBot News Episode Stirs Up Discussion: Excitement is brewing over SnailBot News' latest episode featuring Lina Khan; Natolambert teases interviews with notable figures like Ross Taylor and John Schulman. Ethical considerations around "Please don't train on our model outputs" data usage conditions were also brought into focus.

- Scaling Engulfs AI Discourse: Skepticism encircles the belief that scaling alone leads to AGI, as posited in AI Scaling Myths, coupled with discussions on the alleged limitations in high-quality data for LLM developers. Nathan Lambert urges critical examination of these views, referencing Substack discussions and recent advances in synthetic data.

- Varied Reflections on AI and Global Affairs: From Anthropic CEO's affection for Final Fantasy underscoring AI leaders' human sides to debates over AI crises being potentially more complex than pandemics, guild members engage in diverse conversations. Some talk even considers how an intelligence explosion could reshape political structures, reflecting on the far-reaching implications of AI development.

LlamaIndex Discord

LlamaIndex Powers Agent Services: Engineers explored building agentic RAG services with LlamaIndex, discussing the process of creating vector indexes and transforming them into query engines. Detailed steps and examples can be found in a recently shared notebook.

Jina's Reranking Revolution: The LlamaIndex community is abuzz about Jina's newest reranker, hailed as their most effective to date. Details behind the excitement are available here.

Node Weight Puzzle in Vector Retrievers: AI practitioners are troubleshooting LlamaIndex's embedding challenges, deliberating on factors such as the parts of nodes to embed and the mismatch of models contributing to suboptimal outcomes from vector retrievers. A consensus implies creating simple test cases for effective debugging.

Entity Linking Through Edges: Enhancing entity relationship detection is generating debate, focused on adding edges informed by embedding logic. Anticipation surrounds a potential collaborative know-how piece with Neo4j, expected to shed light on advanced entity resolution techniques.

Issues Surface with Claude and OpenAI Keys: Discussions emerge about needing fixes for Claude's empty responses linked to Bedrock's token limitation and an IndexError in specific cases, as well as a curious environment behavior where code-set OpenAI keys seem overridden. Engineers also probe optimizations for batch and parallel index loading, aiming to accelerate large file handling.

OpenRouter (Alex Atallah) Discord

Gemma's Multilingual Punch: While Gemma 2 officially supports only English, users report excellent multilingual capabilities, with specific inquiries about its performance in Korean.

Model Migration Madness: Gemma 2.9B models, with free and standard variants, are storming the scene as per the announcement, accompanied by price cuts across popular models, including a 10% drop for Dolphin Mixtral and 20% for OpenChat.

OpenRouter, Open Issues: OpenRouter's tight-lipped moderation contrasts with platforms like AWS; meanwhile, users confront the lack of Opus availability without enterprise support and battle Status 400 errors from disobedient APIs of Gemini models.

Passphrase Puzzles and API Allocutions Solved: Engineers share wisdom on seamless GitHub authentication using ssh-add -A, and discuss watching Simon Willison's overview on LLM APIs for enlightenment, with resources found on YouTube and his blog.

AI Affinity Adjustments: Embrace daun.ai’s advice to set the default model to 'auto' for steady results or live life on the edge with 'flavor of the week' fallbacks, ensuring continued productivity across tasks.

LAION Discord

- Gege AI Serenades with New Voices: The music creation tool, Gege AI, can mimic any singer's voice from a small audio sample, inciting humorous comments about the potential for disrupting the music industry and speculations about RIAA's reaction.

- User Frustration with Gege AI and GPT-4 Models: Users reported difficulties in registering for Gege AI with quips about social credit, while others expressed disappointment with the performance of GPT-4 and 4O models, suggesting they can be too literal and less suited for programming tasks than earlier versions like GPT-3.5.

- Adam-mini Optimizer Cuts Memory Waste: The Adam-mini optimizer offers performance comparable to, or better than, AdamW, while requiring 45-50% less memory by partitioning parameters and assigning a single learning rate per block, according to a recent paper highlighted in discussions.

- Skepticism Meets Ambition with Gemma 27B: While the new Gemma 27B model has reportedly shown some promising performance enhancements, members remained cautious due to a high confidence interval, questioning its overall advantage over previous iterations.

- Shifting to Claude for a Smoother Ride: Given issues with OpenAI's models, some members have opted for Claude for its superior artifacts feature and better integration with the Hugging Face libraries, reporting a smoother experience compared to GPT-4 models.

LangChain AI Discord

- Bedrock Befuddles Engineers: Engineers shared challenges in integrating csv_agent and pandas_dataframe_agent with Bedrock, as well as errors encountered while working with Sonnet 3.5 model and Bedrock using

ChatPromptTemplate.fromMessages, indicating possible compatibility issues.

- Launch of LangGraph with Human-in-the-Loop Woes: The introduction of LangGraph's human-in-the-loop capabilities, notably "Interrupt" and "Authorize", was marred by deserialization errors during the resumption of execution post-human approvals, as discussed in the LangChain Blog.

- Refinement of JSONL Editing Tools and RAG with Matryoshka Embeddings: Community members have circulated a tool for editing JSONL datasets (uncensored.com/jsonl) and shared insights on building RAG with Matryoshka Embeddings to enhance retrieval speed and memory efficiency, complete with a Colab tutorial.

- Dappier Creates AI Content Monetization Opportunity: The Dappier platform, featured in TechCrunch, provides a marketplace for creators to license content for AI training through a RAG API, signaling a new revenue stream for proprietary data holders.

- Testcontainers Python SDK Boosts Ollama: The Testcontainers Python SDK now supports Ollama, enhancing the ease of running and testing Large Language Models (LLMs) via Ollama, available in version 4.7.0, along with example usage (pull request #618).

Modular (Mojo 🔥) Discord

- Mix-Up Between Mojolicious and Mojo Resolved: Confusion ensued when a user asked for a Mojo code example and received a Perl-based Mojolicious sample instead; but it was clarified that the request was for info on Modular's AI development language Mojo, admired for its Python-like enhanced abilities and C-like robustness.

- Caught in the REPL Web: An anomaly was reported concerning the Mojo REPL, which connects silently and then closes without warning, prompting a discussion to possibly open a GitHub issue to identify and resolve this mysterious connectivity conundrum.

- Nightly Notes: New Compiler and Graph API Slices: Modular's latest nightly release '2024.6.2805' features a new compiler with LSP behavior tweaks and advises using

modular update nightly/mojo; Developers also need to note the addition of "integer literal slices across dimensions", with advice to document requests for new features via issues for traceability.

- SDK Telemetry Tips and MAX Comes Back: Guidance was shared on disabling telemetry in the Mojo SDK, with a helpful FAQ link provided; The MAX nightly builds are operational again, welcoming trials of the Llama3 GUI Chatbot and feedback via the given Discord link.

- Meeting Markers and Community Collaterals: The community is gearing up for the next Mojo Community meeting, scheduled for an unspecified local time with details accessible via Zoom and Google Docs; Plus, a warm nod to holiday celebrants in Canada and the U.S. was shared. Meanwhile, keeping informed through the Modverse Weekly - Issue 38 is a click away at Modular.com.

Torchtune Discord

- Community Models Get Green Light: The Torchtune team has expressed interest in community-contributed models, encouraging members to share their own implementations and enhance the library's versatility.

- Debugging Diskourse's Debacles: A puzzling issue in Torchtune's text completions was tracked to end-of-sentence (EOS) tokens being erroneously inserted by the dataset, as detailed in a GitHub discussion.

- Finding Favor in PreferenceDataset: For reinforcement learning applications, the PreferenceDataset emerged as the favorable choice over the text completion dataset, better aligning with the rewarding of "preferred" input-response pairs.

- Pretraining Pax: Clarification in discussions shed light on pretraining mechanics, specifically that it involves whole documents for token prediction, steering away from fragmented input-output pair handling.

- EOS Tokens: To Add or Not to Add?: The community debated and concluded positively on introducing an add_eos flag to the text completion datasets within Torchtune, resolving some issues in policy-proximal optimization implementations.

LLM Finetuning (Hamel + Dan) Discord

Next-Gen Data Science IDE Alert: Engineers discussed Positron, a future-forward data science IDE which was shared in the #general channel, suggesting its potential relevance for the community.

Summarization Obstacle Course: A technical query was observed about generating structured summaries from patient records, with an emphasis on avoiding hallucinations using Llama models; the community is tapped for strategies in prompt engineering and fine-tuning.

LLAMA Drama: Deployment of LLAMA to Streamlit is causing errors not seen in the local environment, as discussed in the #🟩-modal channel; another member resolved a FileNotFoundError for Tinyllama by adjusting the dataset path.

Credits Where Credits Are Due: Multiple members have reported issues regarding missing credits for various applications, including requests in the #fireworks and #openai channels, stressing the need for resolution involving identifiers like kishore-pv-reddy-ddc589 and organization ID org-NBiOyOKBCHTZBTdXBIyjNRy5.

Link Lifelines and Predibase Puzzles: In the #freddy-gradio channel a broken link was fixed swiftly, and a question was raised in the #predibase channel about the expiration of Predibase credits, however, it remains unanswered.

OpenInterpreter Discord

- Secure Yet Open: Open Interpreter Tackles Security: Open Interpreter's security measures, such as requiring user confirmation before code execution and sandboxing using Docker, were discussed, emphasizing the importance of community input for project safety.

- Speed vs. Skill: Code Models in the Arena: Engineers compared various code models, recognizing Codestral for superior performance, while DeepSeek Coder offers faster runtimes but at approximately 70% effectiveness. DeepSeek Coder-v2-lite stood out for its rapid execution and coding efficiency, potentially outclassing Qwen-1.5b.

- Resource Efficiency Query: SMOL Model in Quantized Form: Due to RAM constraints, there was an inquiry about running a SMOL multi-modal model in a quantized format, spotlighting the adaptive challenge for AI systems in limited-resource settings.

- API Keys Exposed: Rabbit R1's Security Oversight: A significant security oversight was exposed in a YouTube video, where Rabbit R1 was found to have hardcoded API keys in its codebase, a critical threat to user data security.

- Modifying OpenInterpreter for Local Runs: An AI engineer outlined the process for running OpenInterpreter locally using non-OpenAI providers, detailing the adjustments in a GitHub issue comment. Concerns were raised over additional API-related costs, on top of subscription fees.

tinygrad (George Hotz) Discord

tinygrad gets new porting perks: A new port that supports finetuning has been completed, signaling advancements for the tinygrad project.

FPGA triumphs in the humanoid robot arena: An 8-month-long project has yielded energy-efficient humanoid robots using FPGA-based systems, which is deemed more cost-effective compared to the current GPU-based systems that drain battery life with extensive power consumption.

Shapetracker's zero-cost reshape revolution: The Shapetracker in tinygrad allows for tensor reshaping without altering the underlying memory data, which was detailed in a Shapetracker explanation, and discussed by members considering its optimizations over traditional memory strides.

Old meets new in model storage: In tinygrad, weights are handled by safetensors and compute by pickle, according to George Hotz, indicating the current methodology for model storage.

Curiosity about Shapetracker's lineage: Participants pondered if the concept behind Shapetracker was an original creation or if it drew inspiration from existing deep learning compilers, while admiring its capability to optimize without data copies.

Cohere Discord

- Internship Inquiries Ignite Network: A student intersects their academic focus on LLMs and Reinforcement little reflectiong with a real-world application by seeking DMs from Cohere employees about the company's work culture and projects. Engagement on the platform signals a consensus about the benefits of exhibiting a robust public project portfolio when vying for internships in the AI field.

- Feature Requests for Cohere: Cohere users demonstrated curiosity about potential new features, prompting a call for suggestions that could enhance the platform's offerings.

- Automation Aspirations in AI Blogging: Discussions arose around setting up AI-powered automations for blogging and social media content generation, directing the inquiry towards specialized assistance channels.

- AI Agent Achievement Announced: A member showcased an AI project called Data Analyst Agent, built using Cohere and Langchain, and promoted the creation with a LinkedIn post.

OpenAccess AI Collective (axolotl) Discord

- Gemma2 Gets Sample Packing via Pull Request: A GitHub pull request was submitted to integrate Gemma2 with sample packing. It's pending due to a required fix from Hugging Face, detailed within the PR.

- 27b Model Fails to Impress: Despite the increase in size, the 27b model is performing poorly in benchmarks when compared to the 9b model, indicating there may be scaling or architecture inefficiencies.

AI Stack Devs (Yoko Li) Discord

- Featherless.ai Introduces Flat-Rate Model Access: Featherless.ai has launched a platform offering access to over 450+ models from Hugging Face at competitive rates, with the basic tier starting at $10/month and no need for GPU setup or download.

- Subscription Scale-Up: For $10 per month, the Feather Basic plan from Featherless.ai allows access up to 15B models, while the Feather Premium plan at $25 per month allows up to 72B models, adding benefits like private and anonymous usage.

- Community Influence on Model Rollouts: Featherless.ai is calling for community input on model prioritization for the platform, highlighting current popularity with AI persona local apps and specialized tasks like language finetuning and SQL model usage.

Datasette - LLM (@SimonW) Discord

- Curiosity for Chatbot Elo Evolution: A user requested an extended timeline of chatbot elo ratings data beyond the provided six-week JSON dataset, expressing interest in the chatbot arena's evolving competitive landscape.

- Observing the Elo Race: From a start date of May 19th, there's a noted trend of the "pack" inching closer among leading chatbots in elo ratings, indicating a tight competitive field.

MLOps @Chipro Discord

- Feature Stores Step into the Spotlight: An informative webinar titled "Building an Enterprise-Scale Feature Store with Featureform and Databricks" will be held on July 23rd at 8 A.M. PT. Simba Khadder will tackle the intricacies of feature engineering, utilization of Databricks, and the roadmap for handling data at scale, capped with a Q&A session. Sign up to deep dive into feature stores.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!