[AINews] Tencent's Hunyuan-Large claims to beat DeepSeek-V2 and Llama3-405B with LESS Data

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Evol-instruct synthetic data is all you need.

AI News for 11/4/2024-11/5/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (217 channels, and 3533 messages) for you. Estimated reading time saved (at 200wpm): 364 minutes. You can now tag @smol_ai for AINews discussions!

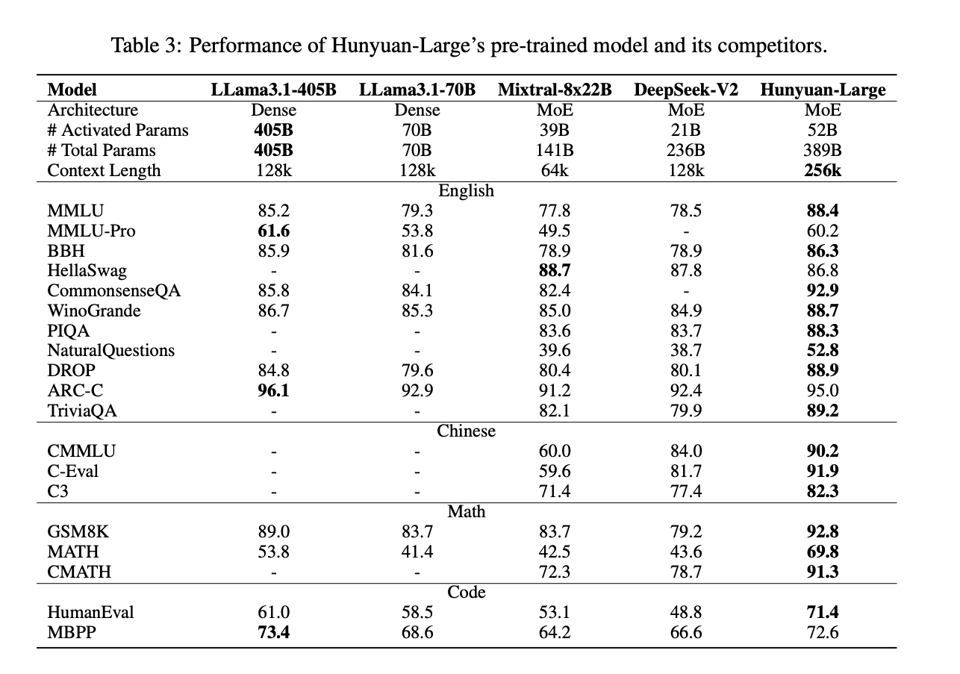

We tend to apply a high bar for Chinese models, especially from previously-unknown teams. But Tencent 's release today (huggingface,paper here, HN comments) is notable in its claims versus known SOTA open-weights models:

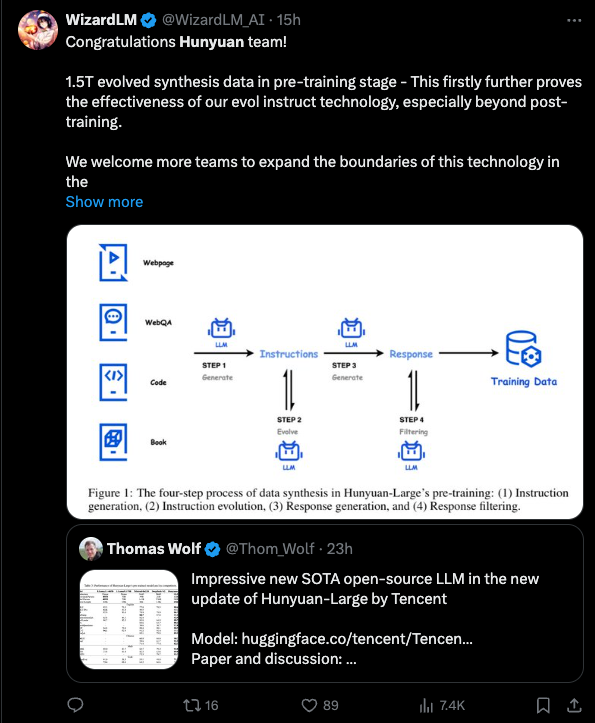

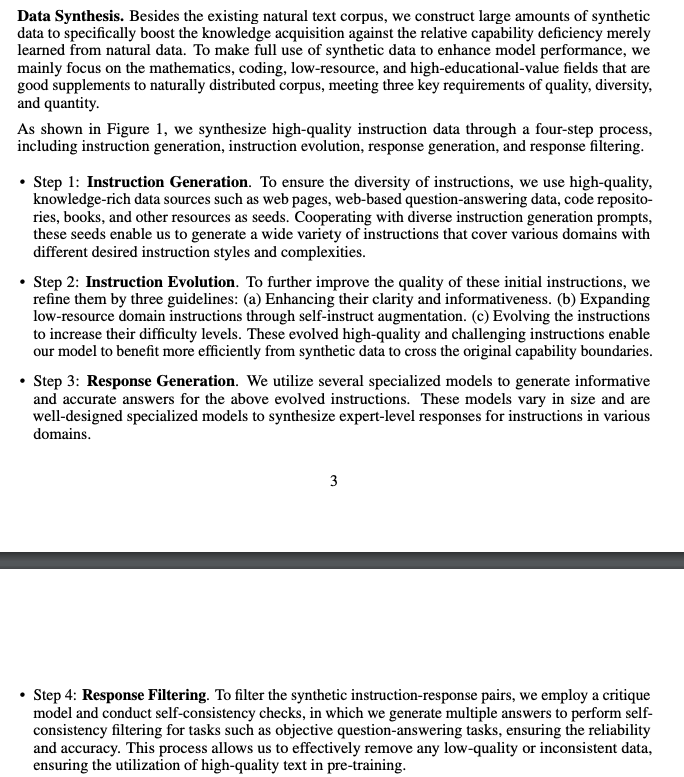

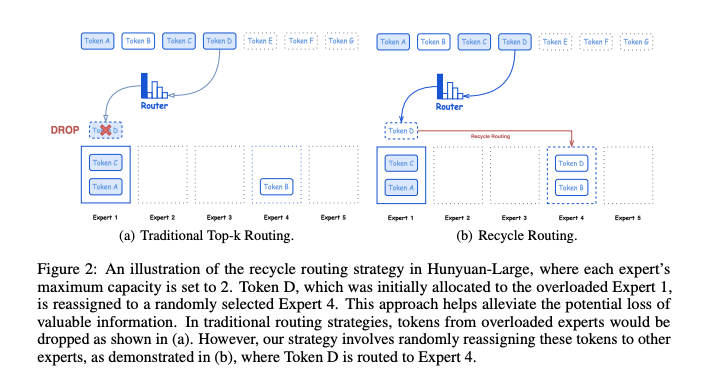

Remarkably for a >300B param model (MoE regardless), it is very data efficient, being pretrained on "only" 7T tokens (DeepseekV2 was 8T, Llama3 was 15T), with 1.5T of them being synthetic data generated via Evol-Instruct, which the Wizard-LM team did not miss:

The paper offers decent research detail on some novel approaches they explored, including "recycle routing":

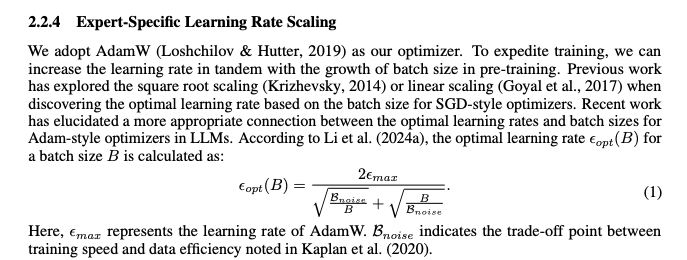

and expert-specific LRs

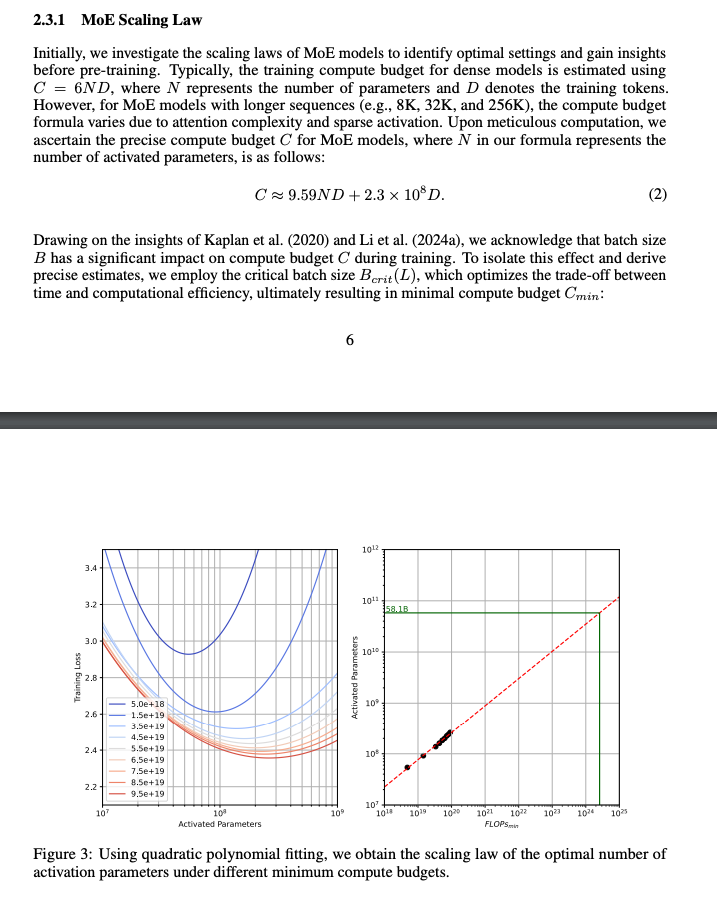

The even investigate and offer a compute-efficient scaling law for MoE active params:

The story isn't wholly positive: the custom license forbids users in the EU and >100M MAU companies, and of course don't ask them China-sensitive questions. Vibe checks aren't in yet (we don't find anyone hosting an easy public endpoint) but nobody is exactly shouting from the rooftops about it. Still it is a nice piece of research for this model class.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Releases and Updates

- Claude 3.5 Haiku Enhancements: @AnthropicAI announced that Claude 3.5 Haiku is now available on the Anthropic API, Amazon Bedrock, and Google Cloud's Vertex AI, positioning it as the fastest and most intelligent cost-efficient model to date. @ArtificialAnlys analyzed that Claude 3.5 Haiku has increased intelligence but noted its price surge, making it 10x more expensive than competitors like Google's Gemini Flash and OpenAI's GPT-4o mini. Additionally, @skirano shared that Claude 3.5 Haiku is one of the most fun models to use, outperforming previous Claude models on various tasks.

- Meta's Llama AI for Defense: @TheRundownAI reported that Meta has opened Llama AI to the U.S. defense sector, marking a significant collaboration in the AI landscape.

AI Tools and Infrastructure

- Transforming Meeting Recordings: @TheRundownAI introduced a tool to transform meeting recordings into actionable insights, enhancing productivity and information accessibility.

- Llama Impact Hackathon: @togethercompute and @AIatMeta are hosting a hackathon focused on building solutions with Llama 3.1 & 3.2 Vision, offering a $15K prize pool and encouraging collaboration on real-world challenges.

- LlamaIndex Chat UI: @llama_index unveiled LlamaIndex chat-ui, a React component library for building chat interfaces, featuring Tailwind CSS customization and integrations with LLM backends like Vercel AI.

AI Research and Benchmarks

- MLX LM Advancements: @awnihannun highlighted that the latest MLX LM generates text faster with very large models and introduces KV cache quantization for improved efficiency.

- Self-Evolving RL Framework: @omarsar0 proposed a self-evolving online curriculum RL framework that significantly improves the success rate of models like Llama-3.1-8B, outperforming models such as GPT-4-Turbo.

- LLM Evaluation Survey: @sbmaruf released a systematic survey on evaluating Large Language Models, addressing challenges and recommendations essential for robust model assessment.

AI Industry Events and Hackathons

- AI High Signal Updates: @TheRundownAI shared top AI stories, including Meta’s Llama AI for defense, Anthropic’s Claude Haiku 3.5 release, and funding news like Physical Intelligence landing $400M.

- Builder's Day Recap: @ai_albert__ recapped the first Builder's Day event with @MenloVentures, highlighting the talent and collaboration among developers.

- ICLR Emergency Reviewers Needed: @savvyRL called for emergency reviewers for topics like LLM reasoning and code generation, emphasizing the urgent need for expert reviews.

AI Pricing and Market Reactions

- Claude 3.5 Haiku Pricing Controversy: @omarsar0 expressed concerns over the price jump of Claude 3.5 Haiku, questioning the value proposition compared to other models like GPT-4o-mini and Gemini Flash. Similarly, @bindureddy criticized the 4x price increase, suggesting it doesn't align with performance improvements.

- Python 3.11 Performance Boost: @danielhanchen advocated for upgrading to Python 3.11, detailing its 1.25x faster performance on Linux and 1.2x on Mac, alongside improvements like optimized frame objects and function call inlining.

- Tencent’s Synthetic Data Strategy: @_philschmid discussed Tencent's approach of training their 389B parameter MoE on 1.5 trillion synthetic tokens, highlighting its performance over models like Llama 3.1.

Memes and Humor

- AI and Election Humor: @francoisfleuret humorously requested GPT to remove tweets not about programming and kittens for three days and produce a cheerful summary of events.

- Funny Model Behaviors: @reach_vb shared a humorous observation of an audio-generating model going "off the rails," while @hyhieu226 tweeted jokingly about specific AI responses.

- User Interactions and Reactions: @nearcyan posted a meme related to politics, while @kylebrussell shared a lighthearted "vibes" tweet.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Tencent's Hunyuan-Large: A Game Changer in Open Source Models

- Tencent just put out an open-weights 389B MoE model (Score: 336, Comments: 132): Tencent released an open-weights 389B MoE model called Hunyuan-Large, which is designed to compete with Llama in performance. The model architecture utilizes Mixture of Experts (MoE), allowing for efficient scaling and improved capabilities in handling complex tasks.

- The Hunyuan-Large model boasts 389 billion parameters with 52 billion active parameters and can handle up to 256K tokens. Users noted its potential for efficient CPU utilization, with some running similar models effectively on DDR4 and expressing excitement over the model's capabilities compared to Llama variants.

- Discussions highlighted the massive size of the model, with estimates for running it suggesting 200-800 GB of memory required, depending on the configuration. Users also shared performance metrics, indicating that it may outperform models like Llama3.1-70B while still being cheaper to serve due to its Mixture of Experts (MoE) architecture.

- Concerns arose regarding hardware limitations, especially in light of GPU sanctions in China, leading to questions about how Tencent manages to run such large models. Users speculated about the need for a high-end setup, with some jokingly suggesting the need for a nuclear plant to power the required GPUs.

Theme 2. Tensor Parallelism Enhances Llama Models: Benchmark Insights

- PSA: llama.cpp patch doubled my max context size (Score: 95, Comments: 10): A recent patch to llama.cpp has doubled the maximum context size for users employing 3x Tesla P40 GPUs from 60K tokens to 120K tokens when using row split mode (

-sm row). This improvement also led to more balanced VRAM usage across the GPUs, enhancing overall GPU utilization without impacting inference speed, as detailed in the pull request.- Users with 3x Tesla P40 GPUs reported significant improvements in their workflows due to the increased context size from 60K to 120K tokens. One user noted that the previous limitations forced them to use large models with small contexts, which hindered performance, but the patch allowed for more efficient model usage.

- Several comments highlighted the ease of implementation with the new patch, with one user successfully loading 16K context on QWEN-2.5-72B_Q4_K_S, indicating that performance remained consistent with previous speeds. Another user expressed excitement about the improved handling of cache while using the model by row.

- Users shared tips on optimizing GPU performance, including a recommendation to use nvidia-pstated for managing power states of the P40s. This tool helps maintain lower power consumption (8-10W) while the GPUs are loaded and idle, contributing to overall efficiency.

- 4x RTX 3090 + Threadripper 3970X + 256 GB RAM LLM inference benchmarks (Score: 48, Comments: 39): The user conducted benchmarks on a build featuring 4x RTX 3090 GPUs, a Threadripper 3970X, and 256 GB RAM for LLM inference. Results showed that models like Qwen2.5 and Mistral Large performed with varying tokens per second (tps), with tensor parallel implementations significantly enhancing performance, as evidenced by PCIe transfer rates increasing from 1 kB/s to 200 kB/s during inference.

- Users discussed the stability of power supplies, with kryptkpr recommending the use of Dell 1100W supplies paired with breakout boards for reliable power delivery, achieving 12.3V at idle. They also shared links to reliable breakout boards for PCIe connections.

- There was a suggestion from Lissanro to explore speculative decoding alongside tensor parallelism using TabbyAPI (ExllamaV2), highlighting the potential performance gains when using models like Qwen 2.5 and Mistral Large with aggressive quantization techniques. Relevant links to these models were also provided.

- a_beautiful_rhind pointed out that Exllama does not implement NVLink, which limits its performance capabilities, while kmouratidis prompted further testing under different PCIe configurations to assess potential throttling impacts.

Theme 3. Competitive Advances in Coding Models: Qwen2.5-Coder Analysis

- So where’s Qwen2.5-Coder-32B? (Score: 76, Comments: 21): The Qwen2.5-Coder-32B version is in preparation, aiming to compete with leading proprietary models. The team is also investigating advanced code-centric reasoning models to enhance code intelligence, with further updates promised on their blog.

- Users expressed skepticism about the Qwen2.5-Coder-32B release timeline, with comments highlighting that the phrase "Coming soon" has been in use for two months without substantial updates.

- A user, radmonstera, shared their experience using Qwen2.5-Coder-7B-Base for autocomplete alongside a 70B model, noting that the 32B version could offer reduced RAM usage but may not match the speed of the 7B model.

- There is a general anticipation for the release, with one user, StarLord3011, hoping for it to be available within a few weeks, while another, visionsmemories, humorously acknowledged a potential oversight in the release process.

- Coders are getting better and better (Score: 170, Comments: 71): Users are increasingly adopting Qwen2.5 Coder 7B for local large language model (LLM) applications, noting its speed and accuracy. One user reports successful implementation on a Mac with LM Studio.

- Users report high performance from Qwen2.5 Coder 7B, with one user running it on an M3 Max MacBook Pro achieving around 18 tokens per second. Another user emphasizes that the Qwen 2.5 32B model outperforms Claude in various tasks, despite some skepticism about local LLM coders' capabilities compared to Claude and GPT-4o.

- The Supernova Medius model, based on Qwen 2.5 14B, is highlighted as an effective coding assistant, with users sharing links to the model's GGUF and original weights here. Users express interest in the potential of a dedicated 32B coder.

- Discussions reveal mixed experiences with Qwen 2.5, with some users finding it good for basic tasks but lacking in more complex coding scenarios compared to Claude and OpenAI's models. A user mentions that while Qwen 2.5 is solid for offline use, it does not match the capabilities of more advanced closed models like GPT-4o.

Theme 4. New AI Tools: Voice Cloning and Speculative Decoding Techniques

- OuteTTS-0.1-350M - Zero shot voice cloning, built on LLaMa architecture, CC-BY license! (Score: 69, Comments: 13): OuteTTS-0.1-350M features zero-shot voice cloning using the LLaMa architecture and is released under a CC-BY license. This model represents a significant advancement in voice synthesis technology, enabling the generation of voice outputs without prior training on specific voice data.

- The OuteTTS-0.1-350M model utilizes the LLaMa architecture, benefiting from optimizations in llama.cpp and offering a GGUF version available on Hugging Face.

- Users highlighted the zero-shot voice cloning capability as a significant advancement in voice synthesis technology, with a link to the official blog providing further details.

- The discussion touched on the audio uncanny valley phenomenon in TTS systems, where minor errors lead to outputs that are almost human-like, resulting in an unsettling experience for listeners.

- OpenAI new feature 'Predicted Outputs' uses speculative decoding (Score: 51, Comments: 28): OpenAI's new 'Predicted Outputs' feature utilizes speculative decoding, a concept previously demonstrated over a year ago in llama.cpp. The post raises questions about the potential for faster inference with larger models like 70b size models and smaller models such as llama3.2 and qwen2.5, especially for local users. For further details, see the tweet here and the demo by Karpathy here.

- Speculative decoding could significantly enhance inference speed by allowing smaller models to generate initial token sequences quickly, which the larger models can then verify. Users like Ill_Yam_9994 and StevenSamAI discussed how this method effectively allows for parallel processing, potentially generating multiple tokens in the time it typically takes to generate one.

- Several users highlighted that while the 'Predicted Outputs' feature might reduce latency, it may not necessarily lower costs for model usage, as noted by HelpfulHand3. The technique is recognized as a standard for on-device inference, but proper training of the smaller models is crucial for maximizing performance, as mentioned by Old_Formal_1129.

- The conversation included thoughts on layering models, where smaller models could predict outputs that larger models verify, potentially leading to significant speed improvements, as proposed by Balance-. This layered approach raises questions about the effectiveness and feasibility of integrating multiple model sizes for optimal performance.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Autonomous Systems & Safety

- Volkswagen's Emergency Assist Technology: In /r/singularity, Volkswagen demonstrated new autonomous driving technology that safely pulls over vehicles when drivers become unresponsive, with multiple phases of driver attention checks before activation.

- Key comment insight: System includes careful attention to avoiding false activations and maintaining driver control.

AI Security & Vulnerabilities

- Google's Big Sleep AI Agent: In /r/OpenAI and /r/singularity, Google's security AI discovered a zero-day vulnerability in SQLite, marking the first public example of an AI agent finding a previously unknown exploitable memory-safety issue in widely-used software.

- Technical detail: Vulnerability was reported and patched in October before official release.

3D Avatar Generation & Rendering

- URAvatar Technology: In /r/StableDiffusion and /r/singularity, new research demonstrates photorealistic head avatars using phone scans with unknown illumination, featuring:

- Real-time rendering with global illumination

- Learnable radiance transfer for light transport

- Training on hundreds of high-quality multi-view human scans

- 3D Gaussian representation

Industry Movements & Corporate AI

- OpenAI Developments: Multiple posts across subreddits indicate:

- Accidental leak of full O1 model with vision capabilities

- Hiring of META's AR glasses head for robotics and consumer hardware

- Teasing of new image model capabilities

AI Image Generation Critique

- Adobe AI Limitations: In /r/StableDiffusion, users report significant content restrictions in Adobe's AI image generation tools, particularly around human subjects and clothing.

- Technical limitation: System blocks even basic image editing tasks due to overly aggressive content filtering.

Memes & Humor

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1. AI Giants Drop Mega Models: The New Heavyweights

- Tencent Unleashes 389B-Parameter Hunyuan-Large MoE Model: Tencent released Hunyuan-Large, a colossal mixture-of-experts model with 389 billion parameters and 52 billion activation parameters. While branded as open-source, debates swirl over its true accessibility and the hefty infrastructure needed to run it.

- Anthropic Rolls Out Claude 3.5 Haiku Amid User Grumbles: Anthropic launched Claude 3.5 Haiku, with users eager to test its performance in speed, coding accuracy, and tool integration. However, the removal of Claude 3 Opus sparked frustration, as many preferred it for coding and storytelling.

- OpenAI Shrinks GPT-4 Latency with Predicted Outputs: OpenAI introduced Predicted Outputs, slashing latency for GPT-4o models by providing a reference string. Benchmarks show up to 5.8x speedup in tasks like document iteration and code rewriting.

Theme 2. Defense, Meet AI: LLMs Enlist in National Security

- Scale AI Deploys Defense Llama for Classified Missions: Scale AI announced Defense Llama, a specialized LLM developed with Meta and defense experts, targeting American national security applications. The model is ready for integration into US defense systems.

- Nvidia's Project GR00T Aims for Robot Overlords: Jim Fan from NVIDIA's GEAR team discussed Project GR00T, aiming to develop AI agents capable of operating in both simulated and real-world environments, enhancing generalist abilities in robotics.

- OpenAI's Commitment to Safe AGI Development: Members highlighted OpenAI's founding goal of building safe and beneficial AGI, as stated since 2015. Discussions included concerns about AI self-development if costs surpass all human investment.

Theme 3. Open Data Bonanza: Datasets Set to Supercharge AI

- Open Trusted Data Initiative Teases 2 Trillion Token Dataset: The Open Trusted Data Initiative plans to release a massive multilingual dataset of 2 trillion tokens on November 11th via Hugging Face, aiming to boost LLM training capabilities.

- Community Debates Quality vs. Quantity in Training Data: Discussions emphasized the importance of high-quality datasets for future AI models. Concerns were raised that prioritizing quality might exclude valuable topics, but it could enhance commonsense reasoning.

- EleutherAI Enhances LLM Robustness Evaluations: A pull request was opened for LLM Robustness Evaluation, introducing systematic consistency and robustness evaluations across three datasets and fixing previous bugs.

Theme 4. Users Rage Against the Machines: AI Tools Under Fire

- Perplexity Users Mourn the Loss of Claude 3 Opus: The removal of Claude 3 Opus from Perplexity AI led to user frustration, with many claiming it was their go-to model for coding and storytelling. Haiku 3.5 is perceived as a less effective substitute.

- LM Studio Users Battle Glitches and Performance Issues: LM Studio users report challenges with model performance, including inconsistent results from Hermes 405B and difficulties running the software from USB drives. Workarounds involve using Linux AppImage binaries.

- NotebookLM Users Demand Better Language Support: Multilingual support issues in NotebookLM result in summaries generated in unintended languages. Users call for a more intuitive interface to manage language preferences directly.

Theme 5. AI Optimization Takes Center Stage: Speed and Efficiency

- Speculative Decoding Promises Faster AI Outputs: Discussions around speculative decoding highlight a method where smaller models generate drafts that larger models refine, improving inference times. While speed increases, questions remain about output quality.

- Python 3.11 Supercharges AI Performance by 1.25x: Upgrading to Python 3.11 offers up to 1.25x speedup on Linux and 1.12x on Windows, thanks to optimizations like statically allocated core modules and inlined function calls.

- OpenAI's Predicted Outputs Rewrites the Speed Script: By introducing Predicted Outputs, OpenAI cuts GPT-4 response times, with users reporting significant speedups in code rewriting tasks.

PART 1: High level Discord summaries

HuggingFace Discord

-

Open Trusted Data Initiative's 2 Trillion Token Multilingual Dataset: Open Trusted Data Initiative is set to release the largest multilingual dataset containing 2 trillion tokens on November 11th via Hugging Face.

- This dataset aims to significantly enhance LLM training capabilities by providing extensive multilingual resources for developers and researchers.

- Computer Vision Model Quantization Techniques: A member is developing a project focused on quantizing computer vision models to achieve faster inference on edge devices using both quantization aware training and post training quantization methods.

- The initiative emphasizes reducing model weights and understanding the impact on training and inference performance, garnering interest from the community.

- Release of New Microsoft Models: There is excitement within the community regarding the new models released by Microsoft, which have met the expectations of several members.

- These models are recognized for addressing specific desired functionalities, enhancing the toolkit available to AI engineers.

- Speculative Decoding in AI Models: Discussions around speculative decoding involve using smaller models to generate draft outputs that larger models refine, aiming to improve inference times.

- While this approach boosts speed, there are ongoing questions about maintaining the quality of outputs compared to using larger single models.

- Challenges in Building RAG with Chroma Vector Store: A user is attempting to build a Retrieval-Augmented Generation (RAG) system with 21 documents but is encountering issues storing embeddings in the Chroma vector store, successfully saving only 7 embeddings.

- Community members suggested checking for potential error messages and reviewing default function arguments to ensure documents are not being inadvertently dropped.

Perplexity AI Discord

-

Opus Removal Sparks User Frustration: Users voiced their disappointment over the removal of Claude 3 Opus, highlighting it as their preferred model for coding and storytelling on the Anthropic website.

- Many are requesting a rollback to the previous model or alternatives, as Haiku 3.5 is perceived to be less effective.

- Perplexity Pro Enhances Subscription Benefits: Discussions around Perplexity Pro Features revealed that Pro subscribers gain access to premium models through partnerships like the Revolut referral.

- Questions remain about whether the Pro tier includes Claude access and the recent updates to the mobile application.

- Debate Over Grok 2 vs. Claude 3.5 Sonnet: Engineers are debating which model, Grok 2 or Claude 3.5 Sonnet, offers superior performance for complex research and data analysis.

- Perplexity is praised for its strengths in academic contexts, while models like GPT-4o excel in coding and creative tasks.

- Nvidia Targets Intel with Strategic Market Moves: Nvidia is strategically positioning itself to compete directly with Intel, aiming to shift market dynamics and influence product strategies.

- Analysts recommend monitoring upcoming collaborations and product releases from Nvidia that could significantly impact the tech landscape.

- Breakthrough in Molecular Neuromorphic Platforms: A new molecular neuromorphic platform mimics human brain function, representing a significant advancement in AI and neurological research.

- Experts express cautious optimism about the platform's potential to deepen our understanding of human cognition and enhance AI development.

OpenRouter (Alex Atallah) Discord

-

Anthropic Rolls Out Claude 3.5 Haiku: Anthropic has launched Claude 3.5 in both standard and self-moderated versions, with additional dated options available here.

- Users are eager to evaluate the model's performance in real-world applications, anticipating improvements in speed, coding accuracy, and tool integration.

- Access Granted to Free Llama 3.2 Models: Llama 3.2 models, including 11B and 90B, now offer free fast endpoints, achieving 280tps and 900tps respectively see details.

- This initiative is expected to enhance community engagement with open-source models by providing higher throughput options at no cost.

- New PDF Analysis Feature in Chatroom: A new feature allows users to upload or attach PDFs in the chatroom for analysis using any model on OpenRouter.

- Additionally, the maximum purchase limit has been increased to $10,000, providing greater flexibility for users.

- Predicted Output Feature Reduces Latency: Predicted output is now available for OpenAI's GPT-4 models, optimizing edits and rewrites through the

predictionproperty.

- An example code snippet demonstrates its application for more efficient processing of extensive text requests.

- Hermes 405B Shows Inconsistent Performance: The free version of Hermes 405B has been performing inconsistently, with users reporting intermittent functionality.

- Many users remain hopeful that these performance issues indicate ongoing updates or fixes are in progress.

aider (Paul Gauthier) Discord

-

Aider v0.62.0 Launch: Aider v0.62.0 now fully supports Claude 3.5 Haiku, achieving a 75% score on the code editing leaderboard. This release enables seamless file edits sourced from web LLMs like ChatGPT.

- Additionally, Aider generated 84% of the code in this release, demonstrating significant efficiency improvements.

- Claude 3.5 Haiku vs. Sonnet: Claude 3.5 Haiku delivers nearly the same performance as Sonnet, but is more cost-effective. Users can activate Haiku by using the

--haikucommand option.

- This cost-effectiveness is making Haiku a preferred choice for many in their AI coding workflows.

- Comparison of AI Coding Models: Users analyzed performance disparities among AI coding models, highlighting that 3.5 Haiku is less effective compared to Sonnet 3.5 and GPT-4o.

- Anticipation is building around upcoming models like 4.5o that could disrupt current standards and impact Anthropic's market presence.

- Predicted Outputs Feature Impact: The launch of OpenAI's Predicted Outputs is expected to revolutionize GPT-4o models by reducing latency and enhancing code editing efficiency, as noted in OpenAI Developers' tweet.

- This feature is projected to significantly influence model benchmarks, especially when compared directly with competing models.

- Using Claude Haiku as Editor Model: Claude 3 Haiku is being leveraged as an editor model to compensate for the main model's weaker editing capabilities, enhancing the development process.

- This approach is especially beneficial for programming languages that demand precise syntax management.

Eleuther Discord

-

Initiative Drives Successful Reading Groups: A member emphasized that successfully running reading groups relies more on initiative than expertise, initiating the mech interp reading group without prior knowledge and consistently maintaining it.

- This approach underscores the importance of proactive leadership and community engagement in sustaining effective learning sessions.

- Optimizing Training with Advanced Settings: Participants debated the implications of various optimizer settings such as beta1 and beta2, and their compatibility with strategies like FSDP and PP during model training.

- Diverse viewpoints highlighted the balance between training efficiency and model performance.

- Enhancing Logits and Probability Optimizations: There was an in-depth discussion on optimizing logits outputs and determining appropriate mathematical norms for training, suggesting the use of the L-inf norm for maximizing probabilities or maintaining distribution shapes via KL divergence.

- Participants explored methods to fine-tune model outputs for improved prediction accuracy and stability.

- LLM Robustness Evaluation PR Enhances Framework: A member announced the opening of a PR for LLM Robustness Evaluation across three different datasets, inviting feedback and comments, viewable here.

- The PR introduces systematic consistency and robustness evaluations for large language models while addressing previous bugs.

Unsloth AI (Daniel Han) Discord

-

Python 3.11 Boosts Performance by 1.25x on Linux: Users are encouraged to switch to Python 3.11 as it delivers up to 1.25x speedup on Linux and 1.12x on Windows through various optimizations.

- Core modules are statically allocated for faster loading, and function calls are now inlined, enhancing overall performance.

- Qwen 2.5 Supported in llama.cpp with Upcoming Vision Integration: Discussion confirms that Qwen 2.5 is supported in llama.cpp, as detailed in the Qwen documentation.

- The community is anticipating the integration of vision models in Unsloth, which is expected to be available soon.

- Fine-Tuning LLMs on Limited Datasets: Users are exploring the feasibility of fine-tuning models with only 10 examples totaling 60,000 words, specifically for punctuation correction.

- Advice includes using a batch size of 1 to mitigate challenges associated with limited data.

- Implementing mtbench Evaluations with Hugging Face Metrics: A member inquired about reference implementations for callbacks to run mtbench-like evaluations on the mtbench dataset, asking if a Hugging Face evaluate metric exists.

- There is a need for streamlined evaluation processes, emphasizing the importance of integrating such functionality into current projects.

- Enhancing mtbench Evaluation with Hugging Face Metrics: Requests were made for insights on implementing a callback for running evaluations on the mtbench dataset, similar to existing mtbench evaluations.

- The inquiry highlights the desire for efficient evaluation mechanisms within ongoing AI engineering projects.

LM Studio Discord

-

Portable LM Studio Solutions: A user inquired about running LM Studio from a USB flash drive, receiving suggestions to utilize Linux AppImage binaries or a shared script to achieve portability.

- Despite the absence of an official portable version, community members provided workarounds to facilitate portable LM Studio deployments.

- LM Studio Server Log Access: Users discovered that pressing CTRL+J in LM Studio opens the server log tab, enabling real-time monitoring of server activities.

- This quick-access feature was shared to assist members in effectively tracking and debugging server performance.

- Model Performance Evaluation: Mistral vs Qwen2: Mistral Nemo outperforms Qwen2 in Vulkan-based operations, demonstrating faster token processing speeds.

- This performance disparity highlights the impact of differing model architectures on computational efficiency.

- Windows Scheduler Inefficiencies: Members reported that the Windows Scheduler struggles with CPU thread management in multi-core setups, affecting performance.

- One member recommended manually setting CPU affinity and priority for processes to mitigate scheduling issues.

- LLM Context Management Challenges: Context length significantly impacts inference speed in LLMs, with one user noting a delay of 39 minutes for the first token with large contexts.

- Optimizing context fill levels during new chat initiations was suggested to improve inference responsiveness.

Latent Space Discord

-

Hume App Launch Blends EVI 2 & Claude 3.5: The new Hume App combines voices and personalities generated by the EVI 2 speech-language model with Claude 3.5 Haiku, aiming to enhance user interaction through AI-generated assistants.

- Users can now access these assistants for more dynamic interactions, as highlighted in the official announcement.

- OpenAI Reduces GPT-4 Latency with Predicted Outputs: OpenAI has introduced Predicted Outputs, significantly decreasing latency for GPT-4o and GPT-4o-mini models by providing a reference string for faster processing.

- Benchmarks show speed improvements in tasks like document iteration and code rewriting, as noted by Eddie Aftandilian.

- Supermemory AI Tool Manages Your Digital Brain: A 19-year-old developer launched Supermemory, an AI tool designed to manage bookmarks, tweets, and notes, functioning like a ChatGPT for saved content.

- With a chatbot interface, users can easily retrieve and explore previously saved content, as demonstrated by Dhravya Shah.

- Tencent Releases Massive Hunyuan-Large Model: Tencent has unveiled the Hunyuan-Large model, an open-weight Transformer-based mixture of experts model featuring 389 billion parameters and 52 billion activation parameters.

- Despite being labeled as open-source, debates persist about its status, and its substantial size poses challenges for most infrastructure companies, as detailed in the Hunyuan-Large paper.

- Defense Llama: AI for National Security: Scale AI announced Defense Llama, a specialized LLM developed in collaboration with Meta and defense experts, targeting American national security applications.

- The model is now available for integration into US defense systems, highlighting advancements in AI for security, as per Alexandr Wang.

Notebook LM Discord Discord

-

NotebookLM Expands Integration Capabilities: Members discussed the potential for NotebookLM to integrate multiple notebooks or sources, aiming to enhance its functionality for academic research. The current limitation of 50 sources per notebook was a key concern, with references to NotebookLM Features.

- There was a strong interest in feature enhancements to support data sharing across notebooks, reflecting the community's eagerness for improved collaboration tools and a clearer development roadmap.

- Deepfake Technology Raises Ethical Questions: A user highlighted the use of 'Face Swap' in a deodorant advertisement, pointing to the application of deepfake technologies in marketing efforts. This was further discussed in the context of Deepfake Technology.

- Another participant emphasized that deepfakes inherently involve face swapping, fostering a shared understanding of the ethical implications and the need for responsible usage of such technologies.

- Managing Vendor Data with NotebookLM: A business owner explored using NotebookLM to manage data for approximately 1,500 vendors, utilizing various sources including pitch decks. They mentioned having a data team ready to assist with imports, as detailed in Vendor Database Management Use Cases.

- Concerns were raised about data sharing across notebooks, highlighting the need for robust data management features to ensure security and accessibility within large datasets.

- Audio Podcast Generation in NotebookLM: NotebookLM introduced an audio podcast generation feature, which members received positively for its convenience in multitasking. Users inquired about effective utilization strategies, as discussed in Audio Podcast Generation Features.

- The community showed enthusiasm for the podcast functionality, suggesting potential use cases and requesting best practices to maximize its benefits in various workflows.

- Challenges with Language Support in NotebookLM: Several members reported issues with multilingual support in NotebookLM, where summaries were generated in unintended languages despite settings configured for English. This was a primary topic in Language and Localization Issues.

- Suggestions were made to improve the user interface for better language preference management, emphasizing the need for a more intuitive process to change language settings directly.

Stability.ai (Stable Diffusion) Discord

-

SWarmUI Simplifies ComfyUI Setup: Members recommended installing SWarmUI to streamline ComfyUI deployment, highlighting its ability to manage complex configurations.

- One member emphasized, "It's designed to make your life a whole lot easier.", showcasing the community's appreciation for user-friendly interfaces.

- Challenges of Cloud Hosting Stable Diffusion: Discussions revealed that hosting Stable Diffusion on Google Cloud can be more intricate and expensive compared to local setups.

- Participants suggested alternatives like GPU rentals from vast.ai as cost-effective and simpler options for deploying models.

- Latest Models and LoRas on Civitai: Users explored downloading recent models such as 1.5, SDXL, and 3.5 from Civitai, noting that most LoRas are based on 1.5.

- Older versions like v1.4 were considered obsolete, with the community advising upgrades to benefit from enhanced features and performance.

- Animatediff Tutorial Resources Shared: A member requested tutorials for Animatediff, receiving recommendations to consult resources on Purz's YouTube channel.

- The community expressed enthusiasm for sharing knowledge, reinforcing a collaborative learning environment around animation tools.

- ComfyUI Now Supports Video AI via GenMo's Mochi: Confirmation was made that ComfyUI integrates video AI capabilities through GenMo's Mochi, though it requires substantial hardware.

- This integration is viewed as a significant advancement, potentially expanding the horizons of video generation using Stable Diffusion technologies.

Nous Research AI Discord

-

Hermes 2.5 Dataset's 'Weight' Field Questioned: Members analyzed the Hermes 2.5 dataset's 'weight' field, finding it contributes minimally and results in numerous empty fields.

- There was speculation that optimizing dataset sampling could improve its utility for smaller LLMs.

- Nous Research Confirms Hermes Series Remains Open: In response to inquiries about closed source LLMs, Nous Research affirmed that the Hermes series will continue to be open source.

- While some future projects may adopt a closed model, the commitment to openness persists for the Hermes line.

- Balancing Quality and Quantity in Future AI Models: Discussions emphasized the importance of high-quality datasets for the development of future AI models.

- Concerns were raised that prioritizing quality might exclude valuable topics and facts, although it could enhance commonsense reasoning.

- OmniParser Introduced for Enhanced Data Parsing: The OmniParser tool was shared, known for improving data parsing capabilities.

- Its innovative approach has garnered attention within the AI community.

- Hertz-Dev Releases Full-Duplex Conversational Audio Model: The Hertz-Dev GitHub repository launched the first base model for full-duplex conversational audio.

- This model aims to facilitate speech-to-speech interactions within a single framework, enhancing audio communications.

Interconnects (Nathan Lambert) Discord

-

NeurIPS Sponsorship Push: A member announced their efforts to secure a sponsor for NeurIPS, signaling potential collaboration opportunities.

- They also extended an invitation for a NeurIPS group dinner, aiming to enhance networking among attendees during the conference.

- Tencent Releases 389B MoE Model: Tencent unveiled their 389B Mixture of Experts (MoE) model, making significant waves in the AI community.

- Discussions highlighted that the model’s advanced functionality could set new benchmarks for large-scale model performance, as detailed in their paper.

- Scale AI Launches Defense Llama: Scale AI introduced Defense Llama, a specialized LLM designed for military applications within classified networks, as covered by DefenseScoop.

- The model is intended to support operations such as combat planning, marking a move towards integrating AI into national security frameworks.

- YOLOv3 Paper Highly Recommended: A member emphasized the importance of the YOLOv3 paper, stating it's essential reading for practitioners.

- They remarked, 'If you haven't read the YOLOv3 paper you're missing out btw', underlining its relevance in the field.

- LLM Performance Drift Investigation: Discussion emerged around creating a system or paper to fine-tune a small LLM or classifier that monitors model performance drift in tasks like writing.

- Members debated the effectiveness of existing prompt classifiers in accurately tracking drift, emphasizing the need for robust evaluation pipelines.

OpenAI Discord

-

GPT-4o Rollout introduces o1-like reasoning: The rollout of GPT-4o introduces o1-like reasoning capabilities and includes large blocks of text in a canvas-style box.

- There is confusion among members whether this rollout is an A/B test with the regular GPT-4o or a specialized version for specific uses.

- OpenAI's commitment to safe AGI development: A member highlights that OpenAI was founded with the aim of building safe and beneficial AGI, a mission declared since its inception in 2015.

- Discussions include concerns that if AI development costs surpass all human investment, it could lead to AI self-development, raising significant implications.

- GPT-5 Announcement Date Uncertain: Community members are curious about the release of GPT-5 and its accompanying API but acknowledge that the exact timeline is unknown.

- It’s supposed to be some new release this year, but it won't be GPT-5, one member stated.

- Premium Account Billing Issues: A user reported experiencing issues with their Premium account billing, noting that their account still displays as a free plan despite proof of payment from Apple.

- Another member attempted to assist using a shared link, but the issue remains unresolved.

- Hallucinations in Document Summarization: Members expressed concerns about hallucinations occurring during document summarization, especially when scaling the workflow in production environments.

- To mitigate inaccuracies, one member suggested implementing a second LLM pass for fact-checking.

LlamaIndex Discord

-

LlamaIndex chat-ui Integration: Developers can quickly create a chat UI for their LLM applications using LlamaIndex chat-ui with pre-built components and Tailwind CSS customization, integrating seamlessly with LLM backends like Vercel AI.

- This integration streamlines chat implementation, enhancing development efficiency for AI engineers working on conversational interfaces.

- Advanced Report Generation Techniques: A new blog post and video explores advanced report generation, including structured output definition and advanced document processing, essential for optimizing enterprise reporting workflows.

- These resources provide AI engineers with deeper insights into enhancing report generation capabilities within LLM applications.

- NVIDIA Competition Submission Deadline: The submission deadline for the NVIDIA competition is November 10th, offering prizes like an NVIDIA® GeForce RTX™ 4080 SUPER GPU for projects submitted via this link.

- LlamaIndex technologies are encouraged to be leveraged by developers to create innovative LLM applications for rewards.

- LlamaParse Capabilities and Data Retention: LlamaParse is a closed-source parsing tool that offers efficient document transformation into structured data with a 48-hour data retention policy, as detailed in the LlamaParse documentation.

- Discussions highlighted its performance benefits and impact of data retention on repeated task processing, referencing the Getting Started guide.

- Multi-Modal Integration with Cohere's ColiPali: An ongoing PR aims to add ColiPali as a reranker in LlamaIndex, though integrating it as an indexer is challenging due to multi-vector indexing requirements.

- The community is actively working on expanding LlamaIndex's multi-modal data handling capabilities, highlighting collaboration efforts with Cohere.

Cohere Discord

-

Connectors Issues: Members are reporting that connectors fail to function correctly when using the Coral web interface or API, resulting in zero results from reqres.in.

- One user noted that the connectors take longer than expected to respond, with response times exceeding 30 seconds.

- Cohere API Fine-Tuning and Errors: Fine-tuning the Cohere API requires entering card details and switching to production keys, with users needing to prepare proper prompt and response examples for SQL generation.

- Additionally, some members reported encountering 500 errors when running fine-tuned classify models via the API, despite successful operations in the playground environment.

- Prompt Tuner Development on Wordpress: A user asked about recreating the Cohere prompt tuner on a Wordpress site using the API.

- Another member suggested developing a custom backend application, indicating that Wordpress can support such integrations. Refer to Login | Cohere for access to advanced LLMs and NLP tools.

- Embedding Models in Software Testing: Members discussed the application of the embed model in software testing tasks to enhance testing processes.

- Clarifications were sought on how embedding can specifically assist in these testing tasks.

- GCP Marketplace Billing Concerns: A user raised questions about the billing process after activating Cohere via the GCP Marketplace and obtaining an API key.

- They sought clarification on whether charges would be applied to their GCP account or the registered card, expressing a preference for model-specific billing.

OpenInterpreter Discord

-

Microsoft’s Omniparser Integration: A member inquired about integrating Microsoft's Omniparser, highlighting its potential benefits for the open-source mode. Another member confirmed that they are actively exploring this integration.

- The discussion emphasized leveraging Omniparser's capabilities to enhance the system's parsing efficiency.

- Claude's Computer Use Integration: Members discussed integrating Claude's Computer Use within the current

--osmode, with confirmation that it has been incorporated. The conversation highlighted an interest in using real-time previews for improved functionality.

- Participants expressed enthusiasm about the seamless integration, noting that real-time previews could significantly enhance user experience.

- Standards for Agents: A member proposed creating a standard for agents, citing the cleaner setup of LMC compared to Claude's interface. They suggested collaboration between OpenInterpreter (OI) and Anthropic to establish a common standard compatible with OAI endpoints.

- The group discussed the feasibility of a unified standard, considering compatibility requirements with existing OAI endpoints.

- Haiku Performance in OpenInterpreter: A member inquired about the performance of the new Haiku in OpenInterpreter, mentioning they have not tested it yet. This reflects the community's ongoing interest in evaluating the latest tools.

- There was consensus that testing the Haiku performance is crucial for assessing its effectiveness and suitability within various workflows.

- Tool Use Package Enhancements: The

Tool Usepackage has been updated with two new free tools: ai prioritize and ai log, which can be installed viapip install tool-use-ai. These tools aim to streamline workflow and productivity.

- Community members are encouraged to contribute to the Tool Use GitHub repository, which includes detailed documentation and invites ongoing AI tool improvements.

Modular (Mojo 🔥) Discord

-

Reminder: Modular Community Q&A on Nov 12: A reminder was issued to submit questions for the Modular Community Q&A scheduled on November 12th, with optional name attribution.

- Members are encouraged to share their inquiries through the submission form to participate in the upcoming community meeting.

- Call for Projects and Talks at Community Meeting: Members were invited to present projects, give talks, or propose ideas during the Modular Community Q&A.

- This invitation fosters community engagement and allows contributions to be showcased at the November 12th meeting.

- Implementing Effect System in Mojo: Discussions on integrating an effect system in Mojo focused on marking functions performing syscalls as block, potentially as warnings by default.

- Suggestions included introducing a 'panic' effect for static management of sensitive contexts within the Mojo language.

- Addressing Matrix Multiplication Errors in Mojo: A user reported multiple errors in their matrix multiplication implementation, including issues with

memset_zeroandrandfunction calls in Mojo.

- These errors highlight problems related to implicit conversions and parameter specifications in the function definitions.

- Optimizing Matmul Kernel Performance: A user noted that their Mojo matmul kernel was twice as slow as the C version, despite similar vector instructions.

- Considerations are being made regarding optimization and the impact of bounds checking on performance.

DSPy Discord

-

New Election Candidate Research Tool Released: A member introduced the Election Candidate Research Tool to streamline electoral candidate research ahead of the elections, highlighting its user-friendly features and intended functionality.

- The GitHub repository encourages community contributions, aiming to enhance voter research experience through collaborative development.

- Optimizing Few-Shot with BootstrapFewShot: Members explored using BootstrapFewShot and BootstrapFewShotWithRandomSearch optimizers to enhance few-shot examples without modifying existing prompts, promoting flexibility in example combinations.

- These optimizers provide varied few-shot example combinations while preserving the main instructional content, facilitating improved few-shot learning performance.

- VLM Support Performance Celebrations: A member commended the team's efforts on VLM support, recognizing its effectiveness and positive impact on the project's performance metrics.

- Their acknowledgment underscores the successful implementation and enhancement of VLM support within the project.

- DSPy 2.5.16 Struggles with Long Inputs: Concerns arose about DSPy 2.5.16 using the Ollama backend, where lengthy inputs lead to incorrect outputs by mixing input and output fields, indicating potential bugs.

- An SQL extraction example demonstrated how long inputs cause unexpected placeholders in predictions, pointing to issues in input/output parsing.

- Upcoming DSPy Version Testing: A member plans to test the latest DSPy version, moving away from the conda-distributed release to investigate the long input handling issue.

- They intend to report their findings post-testing, indicating an ongoing effort to resolve parsing concerns in DSPy.

OpenAccess AI Collective (axolotl) Discord

-

Distributed Training of LLMs: A member initiated a discussion on using their university's new GPU fleet for distributed training of LLMs, focusing on training models from scratch.

- Another member suggested providing resources for both distributed training and pretraining to assist in their research project.

- Kubernetes for Fault Tolerance: A proposal was made to implement a Kubernetes cluster to enhance fault tolerance in the GPU system.

- Members discussed the benefits of integrating Kubernetes with Axolotl for better management of distributed training tasks.

- Meta Llama 3.1 Model: Meta Llama 3.1 was highlighted as a competitive open-source model, with resources provided for fine-tuning and training using Axolotl.

- Members were encouraged to review a tutorial on fine-tuning that details working with the model across multiple nodes.

- StreamingDataset PR: A member recalled a discussion about a PR on StreamingDataset, inquiring if there was still interest in it.

- This indicates ongoing discussions and development around cloud integrations and dataset handling.

- Firefly Model: Firefly is a fine-tune of Mistral Small 22B, designed for creative writing and roleplay, supporting contexts up to 32,768 tokens.

- Users are cautioned about the model's potential to generate explicit, disturbing, or offensive responses, and usage should be responsible. They are advised to view content here before proceeding with any access or downloads.

Torchtune Discord

-

DistiLLM Optimizes Teacher Probabilities: The discussion focused on subtracting teacher probabilities within DistiLLM's cross-entropy optimization, detailed in the GitHub issue. It was highlighted that the constant term can be ignored since the teacher model remains frozen.

- A recommendation was made to update the docstring to clarify that the loss function assumes a frozen teacher model.

- KD-div vs Cross-Entropy Clarification: Concerns arose about labeling KD-div when the actual returned value is cross-entropy, potentially causing confusion when comparing losses like KL-div.

- It’s noted that framing this process as optimizing for cross-entropy better aligns with the transition from hard labels in training to soft labels produced by the teacher model.

- TPO Gaining Momentum: A member expressed enthusiasm for TPO, describing it as impressive and planning to integrate a tracker.

- Positive anticipation surrounds TPO's functionalities and its potential applications.

- VinePPO Implementation Challenges: While appreciating VinePPO for its reasoning and alignment strengths, a member cautioned that its implementation might lead to significant challenges.

- The potential difficulties in deploying VinePPO were emphasized, highlighting risks associated with its integration.

tinygrad (George Hotz) Discord

-

TokenFormer Integration with tinygrad: A member successfully ported a minimal implementation of TokenFormer to tinygrad, available on the GitHub repository.

- This adaptation aims to enhance inference and learning capabilities within tinygrad, showcasing the potential of integrating advanced model architectures.

- Dependency Resolution in Views: A user inquired whether the operation

x[0:1] += x[0:1]depends onx[2:3] -= ones((2,))or justx[0:1] += ones((2,))concerning true or false share rules.

- This discussion raises technical considerations about how dependencies are tracked in operation sequences within tinygrad.

- Hailo Reverse Engineering for Accelerator Development: A member announced the commencement of Hailo reverse engineering efforts to create a new accelerator, focusing on process efficiency.

- They expressed concerns about the kernel compilation process, which must compile ONNX and soon Tinygrad or TensorFlow to Hailo before execution.

- Kernel Consistency in tinygrad Fusion: A user is investigating if kernels in tinygrad remain consistent across runs when fused using

BEAM=2.

- They aim to prevent the overhead of recompiling the same kernel by emphasizing the need for effective cache management.

LLM Agents (Berkeley MOOC) Discord

-

Lecture 9 on Project GR00T: Today's Lecture 9 for the LLM Agents MOOC is scheduled at 3:00pm PST and will be live streamed, featuring Jim Fan discussing Project GR00T, NVIDIA's initiative for generalist robotics.

- Jim Fan's team within GEAR is developing AI agents capable of operating in both simulated and real-world environments, focusing on enhancing generalist abilities.

- Introduction to Dr. Jim Fan: Dr. Jim Fan, Research Lead at NVIDIA's GEAR, holds a Ph.D. from Stanford Vision Lab and received the Outstanding Paper Award at NeurIPS 2022.

- His work on multimodal models for robotics and AI agents proficient in playing Minecraft has been featured in major publications like New York Times, Forbes, and MIT Technology Review.

- Course Resources for LLM Agents: All course materials, including livestream URLs and homework assignments, are available online.

- Students are encouraged to ask questions in the dedicated course channel.

Mozilla AI Discord

-

DevRoom Doors Open for FOSDEM 2025: Mozilla is hosting a DevRoom at FOSDEM 2025 from February 1-2, 2025 in Brussels, focusing on open-source presentations.

- Talk proposals can be submitted until December 1, 2024, with acceptance notifications by December 15.

- Deadline Looms for Talk Proposals: Participants have until December 1, 2024 to submit their talk proposals for the FOSDEM 2025 DevRoom.

- Accepted speakers will be notified by December 15, ensuring ample preparation time.

- Volunteer Vistas Await at FOSDEM: An open call for volunteers has been issued for FOSDEM 2025, with travel sponsorships available for European participants.

- Volunteering offers opportunities for networking and supporting the open-source community at the event.

- Topic Diversity Drives FOSDEM Talks: Suggested topics for FOSDEM 2025 presentations include Mozilla AI, Firefox innovations, and Privacy & Security, among others.

- Speakers are encouraged to explore beyond these areas, with talk durations ranging from 15 to 45 minutes, including Q&A.

- Proposal Prep Resources Released: Mozilla shared a resource with tips on creating successful proposals, accessible here.

- This guide aims to help potential speakers craft impactful presentations at FOSDEM 2025.

Gorilla LLM (Berkeley Function Calling) Discord

-

Benchmarking Retrieval-Based Function Calls: A member is benchmarking a retrieval-based approach to function calling and is seeking a collection of available functions and their definitions.

- They specifically requested these definitions to be organized per test category for more effective indexing.

- Function Definition Indexing Discussion: A member emphasized the need for an indexed collection of function definitions to enhance benchmarking efforts.

- They highlighted the importance of categorizing these functions per test category to streamline their workflow.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LAION Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!