[AINews] Stripe lets Agents spend money with StripeAgentToolkit

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI SDKs are all you need.

AI News for 11/14/2024-11/15/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (217 channels, and 1812 messages) for you. Estimated reading time saved (at 200wpm): 191 minutes. You can now tag @smol_ai for AINews discussions!

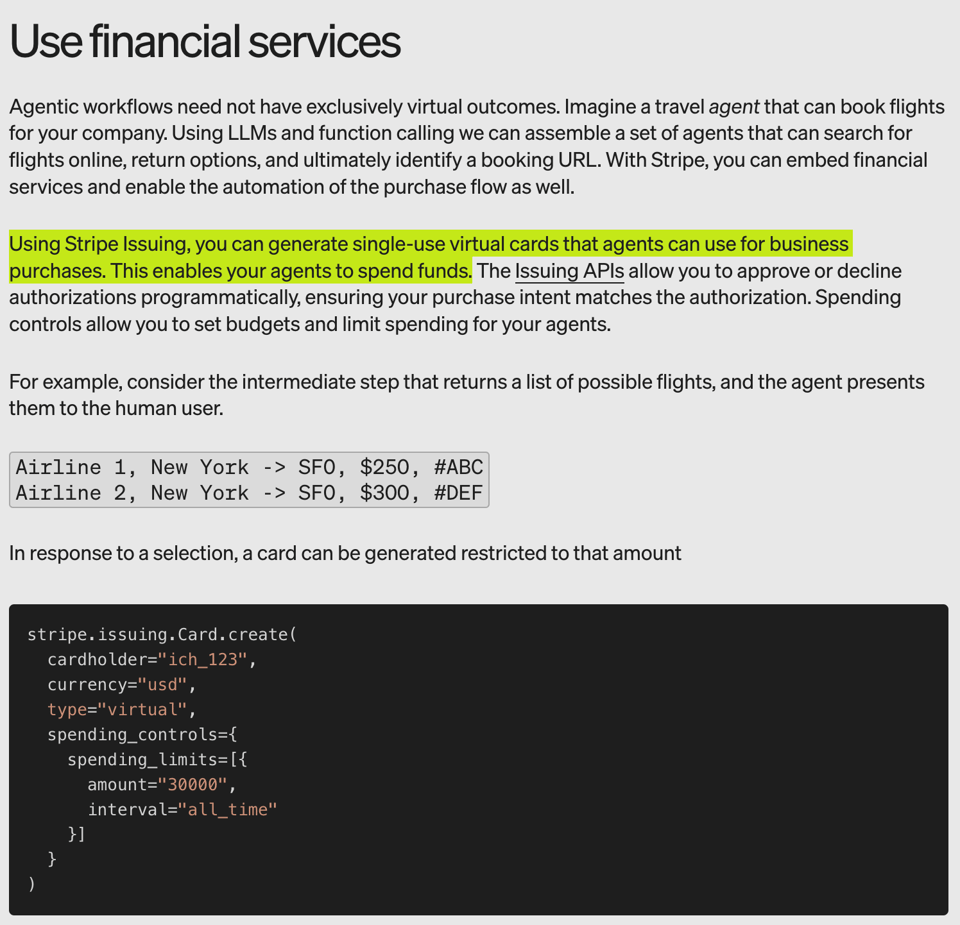

One of the rising theses in AI developer tooling this year is that tools with better "AI-Computer Interfaces" will do better as a medium-term solve for agent reliability/accuracy. You can see these with tools like E2B and the rising llms.txt docs trend started by Jeremy Howard and now adopted by Anthropic, and Vercel has a generalist AI SDK, but Stripe is the first dev tooling company that has specifically created an SDK for agents that take money:

import {StripeAgentToolkit} from '@stripe/agent-toolkit/ai-sdk';

import {openai} from '@ai-sdk/openai';

import {generateText} from 'ai';

const toolkit = new StripeAgentToolkit({

secretKey: "sk_test_123",

configuration: {

actions: {

// ... enable specific Stripe functionality

},

},

});

await generateText({

model: openai('gpt-4o'),

tools: {

...toolkit.getTools(),

},

maxSteps: 5,

prompt: 'Send <> an invoice for $100' ,

});

and spend money:

and charge based on token usage. A very very forward thinking move here, solving common pain points, and in retrospect unsurprising that Stripe was the first to build financial services for AI Agents.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Models and Benchmarks

- Model Overfitting and Performance: @abacaj highlights concerns about models being overfit, performing well only on specific benchmarks. @francoisfleuret questions the notion that scaling laws have ended, arguing that increasing model size alone may not lead to AGI.

- Gemini and Claude Comparisons: @lmarena_ai reports that Gemini-Exp-1114 achieved #1 on the Vision Leaderboard and improved its standings in Math Arena. In contrast, @goodside critiques the IQ analogy for LLMs, stating that an LLM’s intelligence varies significantly across tasks.

AI Company News

- OpenAI Updates: @OpenAI announces the release of the ChatGPT desktop app for macOS, which now integrates with tools like VS Code, Xcode, and Terminal to enhance developer workflows.

- Anthropic and Meta Developments: @AnthropicAI introduces a new prompt improver in the Anthropic Console, aimed at refining prompts using chain-of-thought reasoning. Meanwhile, @AIatMeta shares top research papers from EMNLP2024, covering advancements in image captioning, dialogue systems, and memory-efficient fine-tuning.

AI Research and Papers

- ICLR 2025 Highlights: @jxmnop reviews top-rated papers from ICLR 2025, including studies on diffusion-based illumination harmonization, open mixture-of-experts language models (OLMoE), and hyperbolic vision-language models.

- Adaptive Decoding Techniques: @jaseweston introduces Adaptive Decoding via Latent Preference Optimization, a new method that outperforms fixed temperature decoding by automatically selecting creativity or factuality parameters per token.

AI Tools and Software Updates

- ChatGPT Desktop Enhancements: @stevenheidel showcases the ChatGPT desktop app’s new features, including Advanced Voice Mode and the ability to interact with VS Code, Xcode, and Terminal for a seamless pair programming experience.

- LlamaParse and RAGformation: @lmarena_ai introduces LlamaParse, a tool for parsing complex documents with features like handwritten content and diagrams. Additionally, @llama_index presents RAGformation, which automates cloud configurations based on natural language descriptions, simplifying cloud complexity and optimizing ROI.

AI Agents and Applications

- AI Agents in Production: @LangChainAI reveals that 51% of companies have AI agents in production, with mid-sized companies leading at 63% adoption. The top use cases include research & summarization (58%), personal productivity (53.5%), and customer service (45.8%).

- Gemini and Claude in Agent Workflows: @AndrewYNg discusses how LLMs like Gemini and Claude are being optimized for agentic workflows, enhancing capabilities such as function calling and tool use to improve agentic performance across various applications.

Memes and Humor

- Humorous Takes on AI: @ClementDelangue shares a lighthearted meme about Transformers.js, while @rez0__ humorously comments on cleaning habits influenced by AI.

- AI-Related Jokes: @hardmaru jokes about historical NVIDIA shareholding, and @fabianstelzer posts a funny AI prompt scenario showcasing the quirks of style transfer in LLMs.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Gemini Exp 1114 Achieves Top Rank in Chatbot Arena

- Gemini Exp 1114 now ranks joint #1 overall on Chatbot Arena (that name though....) (Score: 322, Comments: 101): Gemini Exp 1114, developed by GoogleDeepMind, has achieved a joint #1 overall ranking in the Chatbot Arena, with a notable 40+ score increase, matching the 4o-latest and surpassing o1-preview. It also leads the Vision leaderboard and has advanced to #1 in Math, Hard Prompts, and Creative Writing categories, while improving in Coding to #3.

- Discussions highlight skepticism about Gemini Exp 1114's performance, with some users questioning if its improvements are due to training on Claude's data or other synthetic datasets. Some users humorously suggest that the model's identity and capabilities might be exaggerated or misunderstood, as seen in memes and jokes about its naming and performance.

- The technical debate includes context length and response time, noting that Gemini Exp 1114 has a 32k input context length and is perceived as slower, potentially focusing on "thinking" processes. Comparisons are made with OpenAI's O1 regarding reasoning capabilities, with users noting that Gemini Exp 1114 might use "chain of thought" reasoning effectively without explicit prompts.

- Users express interest in the naming conventions and model variations, with mentions of Nemotron and comparisons to Llama models. There's curiosity about the naming of Gemini models like "pro" or "flash" and speculation about whether this version is a new iteration like "1.5 Ultra" or "2.0 Flash/Pro".

Theme 2. Omnivision-968M Optimizes Edge Device Vision Processing

- Omnivision-968M: Vision Language Model with 9x Tokens Reduction for Edge Devices (Score: 214, Comments: 47): The Omnivision-968M model, optimized for edge devices, achieves a 9x reduction in image tokens (from 729 to 81), enhancing efficiency in Visual Question Answering and Image Captioning tasks. It processes images rapidly, demonstrated by generating captions for a 1046×1568 pixel poster in under 2 seconds on an M4 Pro Macbook, using only 988 MB RAM and 948 MB storage. More information and resources can be found on Nexa AI's blog and their HuggingFace repository.

- There is curiosity about the feasibility of building the Omnivision-968M model using consumer-grade GPUs, like a few 3090s, versus needing to rent more powerful cloud GPUs such as H100/A100s for training. The model's compatibility with Llama CPP and its performance in OCR tasks are also questioned.

- Discussion includes the potential release of an audio + visual projection model and the split between vision/text parameters. Users mention the Qwen2.5-0.5B model and express interest in Nexa SDK usage, with links provided to the GitHub repository.

- Concerns are raised about contributing back to the llama.cpp project, with some users criticizing the lack of reciprocity in open-source contributions. There is also a discussion on the limitations of Coral TPUs for running models due to their small memory size, suggesting entry-level NVIDIA cards as a more cost-effective solution.

Theme 3. Qwen 2.5 7B Dominates Livebench Rankings

- Qwen 2.5 7B Added to Livebench, Overtakes Mixtral 8x22B and Claude 3 Haiku (Score: 154, Comments: 35): Qwen 2.5 7B has been added to Livebench and has surpassed both Mixtral 8x22B and Claude 3 Haiku in rankings.

- Users question the practical utility of Qwen 2.5 7B outside of benchmarks, noting its poor performance in tasks like building a basic Streamlit page and parsing job postings. WizardLM 8x22B is mentioned as a preferable alternative due to its superior performance in real-world applications despite smaller benchmark scores.

- Several users express skepticism about the validity of benchmarks, doubting claims that smaller models like Qwen 2.5 7B outperform larger ones such as GPT-3.5 or Mixtral 8x22B. They highlight a disconnect between benchmark results and actual usability, especially in conversational and instructional tasks.

- Discussion includes technical aspects of running models like Qwen 2.5 14B and 32B on specific hardware setups, such as Apple M3 Max and NVIDIA GTX 1650, and considerations for using models in fp16 or Q4_K_M formats. Users also mention Gemini-1.5-flash-8b as a close competitor in benchmarks, with its multimodal capabilities noted.

- Claude 3.5 Just Knew My Last Name - Privacy Weirdness (Score: 118, Comments: 141): The post discusses a concerning experience with Claude 3.5 Sonnet, where the AI unexpectedly included the user's rare last name in a generated MIT license, despite the user only providing their first name in the session. This raises questions about whether the AI has access to past interactions or external sources like GitHub profiles, despite the user's belief that they opted out of such data usage, and prompts the user to seek similar experiences or insights from others.

- Commenters speculated that Claude 3.5 Sonnet might have accessed the user's last name through GitHub profiles or other publicly available data, despite the user's efforts to keep their information private. Some users suggested that the AI might correlate the user's coding style and public repositories to infer their identity, while others doubted the AI had access to private data or account credentials.

- There was discussion on whether metadata or personal details from account registration, such as an email address or payment information, could have been used to identify the user. Some comments highlighted that Large Language Models (LLMs) typically do not receive such metadata directly, and any apparent personalization might be coincidental or based on public data.

- Users also debated the reliability of LLMs in explaining their thought processes, with some noting that the models might fabricate explanations or rely on training data correlations. A suggestion was made to contact Anthropic for clarification, as the incident raised concerns about privacy and data usage.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. Claude Surges Past GPT-4O: Major Shift in Code Generation Quality

- 3.5 sonnet vs 4o in Coding, significant different or just a little better? (Score: 26, Comments: 42): Claude 3.5 Sonnet shows superior coding capabilities compared to GPT-4, with usage limits of 50 messages/5 hours for Claude Pro versus ChatGPT Plus's 80 messages/3 hours, plus additional 50/day with O1-mini and 7/day with O1-preview. The post author seeks advice on whether the performance difference justifies switching to Claude Pro for Python, JavaScript, and C++ development at medium to advanced levels.

- Users report that Claude 3.5 Sonnet consistently outperforms GPT-4 in coding tasks, with one user noting that GPT-4's coding capabilities have notably declined from its initial release when it could effectively handle complex tasks like Matlab-to-Python translations and PyQT5 implementations.

- Several developers emphasize Sonnet's superior code understanding and error fixing capabilities, though some mention using O1-preview for high-level architecture discussions. Users recommend using Cursor with Sonnet as an alternative to handle usage limits.

- Despite the restrictive 45 messages/5 hour limit of Claude Pro, users overwhelmingly prefer it over GPT-4, citing better code quality and project understanding. Some developers use a hybrid approach, switching to GPT-4 while waiting for Claude's limits to reset.

- Chat GPT plus is skipping code, removing functions etc. or even giving empty responses, even the o1-preview (Score: 27, Comments: 21): ChatGPT Plus users report issues with code generation where the model truncates responses, removes unrelated functions, and occasionally provides empty responses when handling larger codebases (specifically a 700-line script). The problem persists even with the GPT-4 preview model, where requests for complete code only return modified functions without the original context.

- Users report that code quality has declined across models, with some suggesting that OpenAI may be intentionally degrading performance. Others note that using the API during US nighttime hours yields better results due to lower load.

- Best practices for working with these models involve breaking down code into smaller, manageable chunks. This approach naturally leads to better architecture by preventing files from becoming too large or too fragmented.

- Claude and standard GPT-4 may produce better code than the GPT-4 preview model, despite the latter's strength in detailed code analysis and complex topic discussions.

Theme 2. FrontierMath Benchmark: Models Score Only 2% on Advanced Math

- FrontierMath is a new Math benchmark for LLMs to test their limits. The current highest scoring model has scored only 2%. (Score: 357, Comments: 117): FrontierMath, a new mathematical benchmark for testing Large Language Models, exposes significant limitations in LLM mathematical abilities with the top-performing model achieving only 2% accuracy. The benchmark aims to evaluate advanced mathematical capabilities beyond standard tests, highlighting a clear performance gap in current AI systems.

- Field Medalists including Terence Tao and Timothy Gowers confirm these problems are "extremely challenging" and beyond typical IMO problems. The benchmark requires collaboration between graduate students, AI, and algebra packages to solve effectively.

- The problems in FrontierMath are specifically designed for AI benchmarking by PhD mathematicians, requiring multiple domain experts working together over extended periods. Sample problems are available at epoch.ai, though the full dataset remains private.

- Discussion focused on the significance of achieving even 2% accuracy on problems that most PhD mathematicians cannot solve individually. Users debated whether AI reaching this level should be considered a collaborative team member rather than just a tool, with reference to Chris Olah's work on neural networks.

Theme 3. Chat.com Domain Sells for $15M to OpenAI - Major Corporate Move

- Indian Man Sells Chat.com for ₹126 Crore (Score: 550, Comments: 173): Chat.com domain sold for $15 million (₹126 Crore) with partial payment made in OpenAI shares. The domain was sold by an Indian owner, marking a significant domain name transaction in 2023.

- Dharmesh Shah, the CTO of Hubspot and tech billionaire, sold the domain after purchasing it for approximately $14 million last year. The transaction included partial payment in OpenAI shares, with Shah being friends with Sam (presumably Altman).

- Multiple commenters criticized the headline's focus on the seller's nationality rather than his significant professional credentials. The sale represents a relatively small transaction for Shah, who is reportedly worth over $1 billion.

- Discussion revealed this was not a long-term domain investment, contradicting initial assumptions about it being held since the early internet days. The actual profit margin was relatively modest given the recent purchase price.

Theme 4. Claude Rollback: 3.6 Issues Lead to Version Reversal

- LMAO they are now pulling back Sonnet 3.6? (Score: 62, Comments: 55): Anthropic appears to have rolled back Claude 3.6 Sonnet and removed version numbering from Haiku, with a screenshot showing the removal of "(new)" designation from the model selection interface. The changes suggest potential versioning adjustments or silent updates to their Claude models, though no official explanation was provided.

- Users report that Claude Sonnet is likely still version 3.6 with just the "new" label removed, as confirmed by the model's knowledge of events and its October 22nd version identifier.

- Community members criticize Anthropic's communication and version naming strategy, with many noting the company's recent struggles with transparency and internal organization. One user humorously identifies different versions by their apology phrases: "You're right, and I apologize" for old 3.5 and "Ah, I see now!" for new 3.5.

- Discussion reveals potential performance variations, with reports of the model having limitations in message output and failing simple tests like counting letters in words. A High Demand Notice was observed at the top of the page, suggesting heavy system usage.

- What's the point of paying if I just logged in and I get greeted with this stupid message? This is ridiculously bad. I didn't even use Claude today that I'm already limited. (Score: 103, Comments: 50): Claude users report immediate access limitations and service restrictions despite having paid subscriptions and no prior usage that day. Anthropic's service limitations appear to affect both new and existing paid subscribers without clear explanation or prior notice.

- Users report that paid Claude subscriptions hit usage limits quickly, with some being restricted before 11 AM. Multiple users suggest using 2-3 accounts at $20 each or switching to the more expensive API as workarounds.

- Community discussion highlights the need for better usage tracking features, suggesting a progress bar for remaining usage before downgrade to concise mode. Users criticize the lack of clarity around usage limits and inability to switch out of concise mode when restricted.

- Technical users discuss local alternatives, recommending Ollama with specific hardware requirements: NVIDIA 3060 (12GB VRAM, $200) or 3090 (24GB VRAM, $700). The Qwen 2.5 32B model is suggested for those with sufficient VRAM, while Qwen 14B 2.5 is recommended as a lighter alternative.

AI Discord Recap

A summary of Summaries of Summaries by O1-mini

Theme 1: Hardware and Performance Optimization for AI Models

- GIGABYTE Unveils AMD Radeon PRO W7800 AI TOP 48G: GIGABYTE launched the AMD Radeon PRO W7800 AI TOP 48G featuring 48 GB of GDDR6 memory, targeting AI and workstation professionals.

Theme 2: Model Releases and Integration Enhancements

- DeepMind Open-Sources AlphaFold Code: DeepMind has released the AlphaFold code, enabling broader access to their protein folding technology, expected to accelerate research in biotechnology and bioinformatics.

- Google Launches Gemini AI App: Google introduced the Gemini app, integrating advanced AI features to compete with existing tools, as covered in the TechCrunch article.

Theme 3: AI Tool Integration and Feature Development

- ChatGPT Now Integrates with Desktop Apps on macOS: ChatGPT for macOS now supports integration with VS Code, Xcode, Terminal, and iTerm2, enhancing coding assistance by directly interacting with development environments.

- Stable Diffusion WebUI Showdown: ComfyUI vs SwarmUI: Users compared ComfyUI and SwarmUI, favoring SwarmUI for its ease of installation and consistent performance in Stable Diffusion workflows.

Theme 4: Training Techniques and Dataset Management

- Orca-AgentInstruct Boosts Synthetic Data Generation: Orca-AgentInstruct introduces agentic flows to generate diverse, high-quality synthetic datasets, enhancing the training efficiency of smaller language models.

- Effective Dataset Mixing Strategies for LLM Training: Members sought guidance on mixing and matching datasets during various stages of LLM training, emphasizing best practices to optimize training processes without compromising model performance.

PART 1: High level Discord summaries

LM Studio Discord

-

Qwen 2.5 LaTeX Rendering Issues: Users are experiencing problems with Qwen 2.5 failing to render LaTeX properly when wrapped in

$signs, resulting in nonsensical outputs.- A suggestion was made to create a system prompt with clear instructions to improve rendering, but attempts to resolve the issue have not been successful.

- LM Studio's Function Calling Beta Excites Users: LM Studio users are enthusiastic about the new function calling beta feature, seeking personal experiences and feedback.

- While some members found the documentation straightforward, others expressed confusion and are looking forward to more functionality in future updates.

- SSD Speed Comparisons and RAID Configurations: The community discussed SSD performance, specifically comparing the SABRENT Rocket 5 and Crucial T705, and the impact of PCIe lane limitations on RAID setups.

- Users highlighted that actual SSD performance can vary significantly based on specific workloads and RAID configurations, affecting overall efficiency.

- GIGABYTE Releases AMD Radeon PRO W7800 AI TOP 48G: GIGABYTE has launched the AMD Radeon PRO W7800 AI TOP 48G, equipped with 48 GB of GDDR6 memory, targeting AI and workstation professionals.

- Despite the impressive specifications, there are concerns regarding the reliability of AMD's drivers and software compatibility when compared to NVIDIA's CUDA.

- Hardware Considerations for LLM Training: Participants noted that 24 GB of VRAM is often insufficient for training larger LLMs, leading to discussions about potential upgrade paths and renting GPUs.

- Training on devices like the Mac Mini is possible but may result in higher electricity costs, prompting members to consider more efficient hardware solutions.

Perplexity AI Discord

-

Perplexity API's URL Injection Issue: Users have reported that the PPLX API occasionally inserts random URLs when it cannot confidently retrieve information, resulting in inaccurate outputs.

- Discussions highlight that the API's tendency to add unrelated URLs undermines its reliability for production use, prompting calls for enhanced accuracy in future updates.

- DeepMind Releases AlphaFold Code: DeepMind has open-sourced the AlphaFold code, enabling broader access to their protein folding technology, as announced here.

- This release is expected to accelerate research in biotechnology and bioinformatics, fostering new innovations in protein structure prediction.

- Chegg's Decline Driven by ChatGPT: Chegg has experienced a 99% decline in value, largely attributed to competition with ChatGPT, detailed in the article.

- The impact of AI on traditional educational platforms like Chegg has sparked significant debate within the community regarding the future of online learning resources.

- Google Gemini App Launch: Google has officially launched the Gemini app, introducing innovative features to compete with existing tools, as covered in the TechCrunch article.

- The app integrates AI and user interaction to deliver enhanced functionalities, aiming to capture a larger market share in the AI-driven application space.

aider (Paul Gauthier) Discord

-

Gemini Experimental Model Performance Soars: Users report that the new gemini-exp-1114 model achieves up to 61% accuracy on edits, outperforming Gemini Pro 1.5 in various tests despite minor formatting glitches.

- Comparative analysis suggests that gemini-exp-1114 offers similar effectiveness to previous versions, contingent on specific use case scenarios.

- Cost Implications of Model Usage in Aider: Discussions highlight that utilizing different models in Aider incurs costs ranging from $0.05 to $0.08 per message, influenced by file configurations.

- This has led users to consider more economical options like Haiku 3.5 to mitigate expenses for smaller-scale projects.

- Seamless Integration with Qwen 2.5 Coder: Users encountered integration issues with Hyperbolic's Qwen 2.5 Coder due to missing metadata, which were resolved by updating their installation setup.

- Aider's main branch updates proved essential in overcoming these challenges, facilitating smooth integration.

- Automating Commit Messages with Aider: To generate commit messages for uncommitted changes, users utilize commands like

aider --commit --weak-model openrouter/deepseek/deepseek-chator/commitwithin Aider.

- These commands automate the commit process by committing all changes without prompting for individual file selections.

HuggingFace Discord

-

Boosting OCR Accuracy with Targeted Training: Discussions highlighted that OCR accuracy can be substantially enhanced through targeted training using appropriate document scanning applications, potentially achieving near-perfect recognition rates.

- Participants emphasized that ensuring the right conditions, such as proper scanning techniques and model fine-tuning, is critical for maximizing OCR performance.

- Enhancing OCR Models via Feedback Integration: Contributors proposed integrating failed OCR instances back into the training pipeline to improve model performance in specific applications.

- This feedback loop approach aims to iteratively refine the models, leading to increased accuracy and reliability in OCR tasks.

- Introducing the OKReddit Dataset for Research: A member unveiled the OKReddit dataset, a curated collection comprising 5TiB of Reddit content spanning from 2005 to 2023, designed for research purposes.

- Currently in alpha, the dataset offers a filtered list of subreddits and provides a link for access, inviting researchers to explore its potential applications.

- Distinguishing RWKV Models from Recursal.ai's Offerings: A member clarified that while RWKV models are associated with specific training challenges, they differ from Recursal.ai models in terms of dataset particulars.

- Planned future integrations indicate a progression in model training methodologies, enhancing the versatility of both model types.

- Optimizing Legal Embeddings for AI Applications: Effective training of embedding models for legal applications necessitates using embeddings pre-trained on legal data to avoid prolonged training times and inherent biases.

- Focusing on domain-specific training not only improves accuracy but also accelerates the development process for legal AI systems.

Unsloth AI (Daniel Han) Discord

-

Triton and CUDA Future Focus: Members plan significant engineering work around Triton and CUDA, highlighting their importance for future projects.

- There are concerns about diminishing returns in model improvement, indicating a shift towards efficiency.

- Language Model Preferences Shift: The Mistral 7B v0.3 model is considered outdated as Qwen/Qwen2.5-Coder-7B-Instruct gains popularity due to extensive training data.

- Community members compared the performance of Gemma2 and GPT-4o, sharing insights on their efficacy.

- Unsloth Installation and Lora+ Support: Users encountered Unsloth installation errors due to missing torch, with suggestions to verify installations in the current environment.

- Lora+ support was confirmed in Unsloth via pull requests, with discussions on its straightforward implementation.

- Fine-tuning Llama3.1b for Math Equations: A user is fine-tuning Llama3.1b for solving math equations, currently achieving 77% accuracy.

- They are conducting hyperparameter sweeps to enhance accuracy to at least 80%, despite low losses on their dataset.

- Dataset Creation: Svelte and Song Lyrics: Due to poor results from Qwen2.5-Coder, a comprehensive dataset for Svelte documentation was created using Dreamslol/svelte-5-sveltekit-2.

- For song lyrics generation, a model is being developed using 5780 songs and associated metadata, with recommendations to use an Alpaca chat template.

Eleuther Discord

-

Scaling Laws: Twitter Declares Scaling Dead: Members debated Twitter's recent claim that scaling is no longer effective in AI, emphasizing the need for insights backed by peer-reviewed papers rather than unverified rumors.

- Some participants referenced journalistic sources from major labs highlighting disappointing outcomes from recent training experiments, questioning the validity of Twitter's assertion.

- LLM Limitations Impacting AGI Aspirations: Discussions spotlighted the capability constraints of current LLM architectures, suggesting potential diminishing returns could hinder the development of AGI-like models.

- Participants stressed the necessity for LLMs to handle intricate tasks, such as Diffie-Hellman key exchanges, raising concerns about models' ability to internally maintain private keys and overall privacy.

- Mixture-of-Experts Enhances Pythia Models: A discussion explored implementing a Mixture-of-Experts (MoE) framework within the Pythia model suite, debating between replicating existing training configurations or updating hyperparameters like SwiGLU.

- Members compared OLMo and OLMOE models, noting discrepancies in data ordering and scale consistency, which could influence the effectiveness of MoE integration.

- Defining Open Source AI: Data vs. Code: Engagements centered on the classification of AI systems as Open Source AI based on IBM's definitions, particularly debating whether requirements apply to data, code, or both.

- Linking to the Open Source AI Definition 1.0, members emphasized the importance of autonomy, transparency, and collaboration while navigating legal risks through descriptive data disclosure.

- Transformer Heads Identify Antonyms in Models: Findings revealed that certain transformer heads are capable of computing antonyms, with analyses showcasing examples like 'hot' - 'cold' and utilizing OV circuits and ablation studies.

- The presence of interpretable eigenvalues across various models confirms the functionality of these antonym heads in enhancing language model comprehension.

Nous Research AI Discord

-

NVIDIA NV-Embed-v2 Tops Embedding Benchmark: NVIDIA released NV-Embed-v2, a leading embedding model that achieved a score of 72.31 on the Massive Text Embedding Benchmark.

- The model incorporates advanced techniques like enhanced latent vector retention and distinctive hard-negative mining methods.

- RLHF vs SFT Explored for Llama 3.2 Training: A discussion on Reinforcement Learning from Human Feedback (RLHF) versus Supervised Fine-Tuning (SFT) focused on resource demands for training Llama 3.2.

- Members highlighted that while RLHF requires more VRAM, SFT offers a viable alternative for those with limited resources.

- SOTA Image Recognition with Compact Model: adjectiveallison introduced an image recognition model achieving SOTA performance with a 29x smaller size.

- This model demonstrates that compact architectures can maintain high accuracy, potentially reducing computational resources.

- AI-Driven Translation Tool Enhances Cultural Nuance: An AI-driven translation tool utilizes an agentic workflow that surpasses traditional machine translation by emphasizing cultural nuance and adaptability.

- It accounts for regional dialects, formality, tone, and gender-specific nuances, ensuring more accurate and context-aware translations.

- Optimizing Dataset Mixing in LLM Training: A member requested guidance on effectively mixing and matching datasets during various stages of LLM training.

- Emphasis was placed on adopting best practices to optimize the training process without compromising model performance.

OpenRouter (Alex Atallah) Discord

-

MistralNemo & Celeste Support Discontinued: MistralNemo StarCannon and Celeste have been officially deprecated as the sole provider ceased their support, impacting all projects dependent on these models.

- This removal necessitates users to seek alternative models or adjust their existing workflows to accommodate the change.

- Perplexity Adds Grounding Citations in Beta: Perplexity has rolled out a beta feature for grounding citations, allowing URLs to be included in completion responses for enhanced content reliability.

- Users can now directly reference sources, improving the trustworthiness of the generated information.

- Gemini API Now Accessible: Gemini is now available through the API, generating excitement about its advanced capabilities among the engineering community.

- However, some users have reported not seeing the changes, indicating potential rollout inconsistencies.

- OpenRouter Implements Rate Limits: OpenRouter has introduced a 200 requests per day limit for free models, as detailed in their official documentation.

- This constraint poses challenges for deploying free models in production environments due to reduced scalability.

- Hermes 405B Maintains Efficiency Preference: Hermes 405B continues to be the preferred model for many users despite its higher costs, due to its unmatched performance efficiency.

- Users like Fry69_dev highlight its superior efficiency, maintaining its status as a top choice despite profit margin concerns.

Stability.ai (Stable Diffusion) Discord

-

Nvidia GPU Blues for Stable Diffusion: A user reported issues configuring Stable Diffusion to use their dedicated Nvidia GPU instead of integrated graphics. Another member referenced the WebUI Installation Guides pinned in the channel for support.

- The community emphasized the importance of following the setup guides to ensure optimal GPU utilization for Stable Diffusion workflows.

- WebUI Showdown: ComfyUI vs. SwarmUI: A member compared the complexities of ComfyUI with SwarmUI, highlighting that SwarmUI streamlines the configuration process. It was recommended to use SwarmUI for a more straightforward installation and consistent performance.

- The discussion focused on ease of use, with several users agreeing that SwarmUI offers a less technical approach without compromising functionality.

- Hunting Down the Latest Image Blending Paper: A user sought assistance locating a recent research paper on image blending, mentioning a Google author but couldn’t find it. Another member suggested performing a Google search on image blending within arXiv for relevant papers.

- The community underscored the value of accessing preprints on arXiv to stay updated with the latest advancements in image blending techniques.

- Frame-by-Frame Fixes for Video Upscaling: A member shared their method for upscaling videos by extracting frames every 0.5 seconds to correct inaccuracies. The discussion included using Flux Schnell and other tools to achieve rapid inference results.

- Participants discussed various techniques and tools to enhance video quality, emphasizing the balance between speed and accuracy in the upscaling process.

- Mastering Low Denoise Inpainting with Diffusers: A user inquired about performing low denoise inpainting or img2img processing for specific image regions. Suggestions included utilizing Diffusers for a swift img2img workflow with minimal steps to refine images.

- The community recommended Diffusers as an effective tool for targeted inpainting tasks, highlighting its efficiency in achieving high-quality image refinements.

Interconnects (Nathan Lambert) Discord

-

Scaling Laws Theory faces scrutiny: Members raised concerns about the validity of the scaling laws theory, which suggests that increased computing power and data will enhance AI capabilities.

- One member expressed relief, stating that decreasing cross-entropy loss is a sufficient condition for improving AI capabilities.

- GPT-3 Scaling Hypothesis validates scaling benefits: Referencing The Scaling Hypothesis, a member stated that neural networks generalize and exhibit new abilities as problem complexity increases.

- They highlighted that GPT-3, announced in May 2020, continues to demonstrate benefits of scale contrary to predictions of diminishing returns.

- $6B Funding Round Elevates Valuation to $50B: A member shared a tweet indicating that a funding round closing next week will bring in $6 billion, predominantly from Middle East sovereign funds, at a $50 billion valuation.

- The funds will reportedly go directly to Jensen, fueling upcoming developments in the tech space.

- Historical Misalignment Concerns Resurface in 2024 Case: Members shared TechEmails discussing communications involving Elon Musk, Sam Altman, and Ilya Sutskever from 2017 on misalignment issues.

- These documents relate to the ongoing case Elon Musk, et al. v. Samuel Altman, et al. (2024), highlighting the historical context of alignment concerns.

- Apple Silicon vs NVIDIA GPUs: LLMs Cost-Effectiveness Showdown: A post discusses the competition between Apple Silicon and NVIDIA GPUs for running LLMs, highlighting compromises in the Apple platform.

- While Apple's newer products offer higher memory capacities, NVIDIA solutions remain more cost-effective.

GPU MODE Discord

-

FSDP Tags Along with torch.compile: A user demonstrated wrapping torch.compile within FSDP without encountering issues, indicating seamless integration.

- They noted the effectiveness of this approach but mentioned not having tested it in the reverse order, leaving room for further experimentation.

- NSYS Faces Memory Overload: nsys profiling can consume up to 60 GB of memory before crashing, raising concerns about its practicality for extensive profiling tasks.

- To mitigate this, users recommended optimizing nsys usage with flags like

nsys profile -c cudaProfilerApi -t nvtx,cudato minimize logging overhead. - ZLUDA Extends CUDA to AMD and Intel GPUs: In a YouTube video, Andrzej Janik showcased how ZLUDA enables CUDA capabilities on AMD and Intel GPUs, potentially transforming GPU computing.

- Community members lauded the breakthrough, expressing excitement about democratizing GPU compute power beyond NVIDIA hardware.

- React Native Embraces LLMs with ExecuTorch: Software Mansion launched a new React Native library leveraging ExecuTorch for backend LLM processing, simplifying model deployment on mobile platforms.

- Users praised the library for its ease of use, highlighting straightforward installation and model launching on the iOS simulator.

- Bitnet 1.58 A4 Accelerates LLM Inference: Adopting Bitnet 1.58 A4 with Microsoft’s T-MAC operations achieves 10 tokens/s on a 7B model, offering a rapid inference solution without heavy GPU reliance.

- Resources are available for model conversion to Bitnet, though some post-training modifications may be necessary to optimize performance.

Notebook LM Discord Discord

-

Listeners Demand More from Top Shelf Podcast: Listeners are pushing to expand the Top Shelf Podcast by adding more book summaries, specifically requesting episodes on Think Again by Adam Grant and insights from The Body Keeps Score. They linked to the Top Shelf Spotify show to support their recommendations.

- One user encouraged community members to share additional book recommendations to enrich the podcast's content offerings.

- Concerns Over AI's Control of Violence: A user shared the "Monopoly on violence for AI" YouTube video, raising alarms about the implications of artificial superintelligence managing violent actions. This monopolization of violence could lead to significant ethical and safety concerns.

- The video explores the potential consequences of granting AI entities control over violent decisions, sparking a discussion on the necessity of strict governance measures.

- NotebookLM Faces Operational Issues: Multiple members reported experiencing technical issues with NotebookLM, such as malfunctioning features and restricted access to certain functions. They expressed frustration while awaiting resolutions from the development team.

- Users shared temporary workarounds and emphasized the need for timely fixes to restore full functionality of the tool.

- Tailoring Audio Summaries for Diverse Audiences: A member showcased their approach to creating customized audio summaries using NotebookLM, adapting content specifically for social workers and graduate students. This demonstrates NotebookLM's capability to modify content based on audience requirements.

- The customization process involved altering the presentation style to better suit the informational needs of different professional groups.

- Limitations on Document Uploads Discussed: Participants debated the document upload limitations within NotebookLM, with suggestions to group documents to adhere to upload restrictions. Questions were raised about the possibility of uploading more than 50 documents.

- The discussion highlighted the need for improved upload capabilities to better accommodate extensive document collections.

OpenAccess AI Collective (axolotl) Discord

-

Orca synthetic data advancements: Research on Orca demonstrates its ability to utilize synthetic data for post-training small language models, matching the performance of larger counterparts.

- Orca-AgentInstruct introduces agentic flows to generate diverse, high-quality synthetic datasets, enhancing the efficiency of data generation processes.

- Liger Kernel and Cut Cross-Entropy improvements: Enhancements in the Liger Kernel have resulted in improved speed and memory efficiency, as detailed in the GitHub pull request.

- The proposed Cut Cross-Entropy (CCE) method reduces memory usage from 24 GB to 1 MB for the Gemma 2 model, enabling training approximately 3x faster than the current Liger setup, as outlined here.

- Axolotl Office Hours and feedback session: Axolotl is hosting its first Office Hours session on December 5th at 1pm EST on Discord, allowing members to ask questions and share feedback.

- Members are encouraged to bring their ideas and suggestions to the Axolotl feedback session, with the team eager to engage and enhance the platform. More details available in the Discord group chat.

- Qwen/Qwen2 Pretraining and Phorm Bot Issues: A member seeks guidance on pretraining the Qwen/Qwen2 model using qlora with a raw text jsonl dataset, followed by fine-tuning with an instruct dataset after installing Axolotl docker.

- Issues reported with the Phorm bot include its inability to respond to basic queries, indicating a potential technical malfunction within the community.

- Meta Invites to Llama event: A member received an unexpected invitation from Meta to attend a two-day event at their HQ regarding open-source initiatives and Llama, sparking curiosity about potential new model releases.

- Community members are speculating on the event's focus, especially considering the unusual nature of the invitation without a speaking role.

OpenAI Discord

-

GPT-4o Token Charges: A discussion revealed that GPT-4o incurs 170 tokens for processing each

512x512tile in high-res mode, effectively valuing a picture at approximately 227 words. Refer to OranLooney.com for an in-depth analysis.- Participants questioned the rationale behind the specific token pricing, drawing parallels to magic numbers in programming and debating its impact on usage costs.

- Enhancing RAG Prompts with Few-Shot Examples: Users are exploring whether integrating few-shot examples from documents into RAG prompts can improve answer quality for their QA agent platform.

- The community emphasized the necessity for thorough research in this area, aiming to refine prompt strategies to bolster response accuracy.

- AI Performance in Game of 24: 3.5 AI models have demonstrated the ability to win occasionally in the Game of 24, showcasing notable advancements in AI gaming capabilities.

- This improvement underscores the ongoing enhancements in AI algorithms, with users expressing optimism about future performance milestones.

- Content Flagging Policies: Members discussed that content flags primarily pertain to model outputs and aid in training enhancements, rather than indicating user misconduct.

- There was concern over an uptick in content flags, especially in contexts like horror video games, suggesting increased monitoring measures.

- Advanced Photo Selection Techniques: A member proposed creating a numbered collage as an efficient method for selecting the best photo among hundreds, aiming to streamline the selection process.

- Despite some skepticism about the collage approach being 'patchy,' its effectiveness was acknowledged, particularly when tasks are handled sequentially.

OpenInterpreter Discord

-

OpenAI Launches 'OPERATOR' AI Agent: In a YouTube video titled 'OpenAI Reveals OPERATOR The Ultimate AI Agent Smarter Than Any Chatbot', OpenAI announced their upcoming AI agent anticipated to reach a broader audience soon.

- The video emphasizes that it's coming to the masses! suggesting a significant scaling of the AI agent’s deployment.

- Beta App Surpasses Console Integration: Members confirmed that the desktop beta app offers superior performance compared to the console integration, attributed to enhanced infrastructure support.

- It's highlighted that the desktop app has more extensive behind-the-scenes support than the open-source repository, ensuring a better Interpreter experience.

- Azure AI Search Techniques Detailed: A YouTube video titled 'How Azure AI Search powers RAG in ChatGPT and global scale apps' outlines data conversion and quality restoration methods used in Azure AI Search.

- The discussion raises concerns about patent filings, resource allocation, and the necessity for efficient data deletion processes in large-scale applications.

- Probabilistic Computing Boosts GPU Performance: A YouTube video reports that probabilistic computing achieves 100 million times better energy efficiency compared to top NVIDIA GPUs.

- The presenter states, 'In this video, I discuss probabilistic computing that reportedly allows for 100 million times better energy efficiency compared to the best NVIDIA GPUs.'

- ChatGPT Desktop Enhancements: Latest updates to the ChatGPT desktop introduce user-friendly enhancements, marking a significant improvement for mass users.

- Users are eager to experience features that refine their interactions with the platform, emphasizing the desktop's enhanced usability.

tinygrad (George Hotz) Discord

-

tinybox pro launches for preorder: The tinybox pro is now available for preorder on the tinygrad website at $40,000, featuring eight RTX 4090s and delivering 1.36 PetaFLOPS of FP16 computing.

- Marketed as a cost-effective alternative to a single Nvidia H100 GPU, it aims to provide substantial computational power for AI engineers.

- Clarification on int64 indexing bounty: A member inquired about the requirements for the int64 indexing bounty, specifically regarding modifications to functions like

__getitem__in tensor.py.

- Another member referenced PR #7601, which addresses the bounty but is pending acceptance.

- Enhancements in buffer transfer on CLOUD devices: A pull request on buffer transfer function for CLOUD devices was highlighted, aiming to improve device interoperability.

- Discussions pointed out potential ambiguities regarding size checks for destination buffers, emphasizing the need for clarity in implementation.

- Natively transferring tensors between GPUs: Clarification was provided that tensors can be transferred between different GPUs on the same device using the

.tofunction.

- This guidance assists users in efficiently managing tensor transfers within their projects.

- Seeking feedback on tinygrad contributions: A contributor shared their initial efforts to contribute to tinygrad, seeking comprehensive feedback.

- They referenced PR #7709 which focuses on data transfer improvements.

LlamaIndex Discord

-

Learn GenAI App Building in Community Call: Join our upcoming Community Call to explore creating knowledge graphs from unstructured data and advanced retrieval methods.

- Participants will delve into techniques to transform data into queryable formats.

- Python Docs Feature Boost with RAG System: The Python documentation was enhanced with a new 'Ask AI' widget that launches a precise RAG system for code queries Check it out!.

- Users can receive accurate, up-to-date code responses directly to their questions.

- CondensePlusContext's Dynamic Context Retrieval: CondensePlusContext condenses input and retrieves context for each user message, enhancing dynamic context insertion into the system prompt.

- Members prefer it for its efficiency in managing context retrieval consistently.

- Challenges with condenseQuestionChatEngine: A member reported that condenseQuestionChatEngine can generate incoherent standalone questions when users change topics abruptly.

- Suggestions include customizing the condense prompt to handle sudden topic shifts effectively.

- Implementing Custom Retrievers in CondensePlusContext: Members agreed to use CondensePlusContextChatEngine with a custom retriever to align with specific requirements.

- They recommended employing custom retrievers and node postprocessors for optimized performance.

Cohere Discord

-

Agentic Chunking Research Published: A new method on agentic chunking for RAG achieves less than 1 second inference times, proving efficient on GPUs and cost-effective. Full details and community building for this research can be found on their Discord channel.

- This advancement demonstrates significant performance improvements in retrieval-augmented generation processes, enhancing system efficiency.

- LlamaChunk Simplifies Text Processing: LlamaChunk introduces an LLM-powered technique that optimizes recursive character text splitting by requiring only a single LLM inference over documents. This method eliminates the need for brittle regex patterns typically used in standard chunking algorithms.

- The team encourages contributions to the LlamaChunk codebase, available for public use on GitHub.

- Enhancements in RAG Pipelines: RAG Pipelines are being optimized through agentic chunking, aiming to streamline retrieval-augmented generation processes. This integration focuses on reducing inference times and improving overall pipeline efficiency.

- The updates leverage GPU efficiency to maintain cost-effectiveness while enhancing performance metrics.

- Uploading Files with Playwright in Python: A user shared a method for uploading a text file in Playwright Python using the

set_input_filesmethod, followed by querying the uploaded content. This approach streamlines automated testing workflows involving file interactions.

- However, the user noted that the method feels somewhat odd when requesting, "Can you summarize the text in the file? @file2upload.txt".

LAION Discord

-

Copyright Confusion over Public Links: A member asserted, there is no world where a public index of public links is a copyright infringement, sparking confusion about the legality of using public links.

- This debate highlights the community's uncertainty regarding copyright laws related to public link indexing.

- Discord Etiquette Appreciation: A member expressed gratitude with a simple, ty, demonstrating appreciation for help received.

- Such exchanges indicate ongoing collaborative support and adherence to Discord etiquette within the community.

DSPy Discord

-

ChatGPT for macOS integrates with desktop apps: ChatGPT for macOS now supports integration with desktop applications such as VS Code, Xcode, Terminal, and iTerm2 in its current beta release for Plus and Team users.

- This integration enables ChatGPT to enhance coding assistance by directly interacting with the development environment, potentially transforming project workflows. OpenAI Developers tweet announced this feature recently.

- dspy GPTs functionality: There is a strong intention to extend the functionality of dspy GPTs, aiming to significantly enhance development workflows.

- Community members discussed the potential benefits of expanding dspy GPTs integration, emphasizing the positive impact on their project processes.

- LLM Document Generation for Infractions: A user is developing an LLM application to generate comprehensive legal documents defending drivers against license loss due to infractions, currently focused on alcohol ingestion cases.

- They are seeking a method to create an optimized prompt that can handle various types of infractions without the need for individually tailored prompts.

- DSPy Language Compatibility: A user inquired about the language support capabilities of DSPy for applications requiring non-English languages.

- Reference was made to an open GitHub issue that addresses requests for localization features within DSPy.

Modular (Mojo 🔥) Discord

-

ABI Research Uncovers Optimization Challenges: Members shared new ABI research papers and a PDF, highlighting low-level ABIs challenges in facilitating cross-module optimizations.

- One member pointed out that writing everything in one language is often preferred for maximum execution speed.

- ALLVM Project Faces Operational Hurdles: Discussions revealed that the ALLVM project likely faltered due to insufficient device memory for compiling and linking software, especially within browsers. ALLVM Research Project.

- Another member suggested that Mojo could leverage ALLVM for C/C++ bindings in innovative ways.

- Members Advocate Cross-Language LTO: A member emphasized the importance of cross-language LTO for existing C/C++ software ecosystems to avoid rewrites.

- The discussion acknowledged that effective linking could significantly improve the performance and maintainability of legacy systems.

- Mojo Explores ABI Optimization: Members explored Mojo's potential in defining an ABI that optimizes data transfer by utilizing AVX-512-sized structures and maximizing register information.

- This ABI framework is expected to enhance interoperability and efficiency across various software components.

LLM Agents (Berkeley MOOC) Discord

-

Intel AMA on AI Tools: Join the exclusive AMA session with Intel on Building with Intel: Tiber AI Cloud and Intel Liftoff scheduled for 11/21 at 3pm PT, offering insights into advanced AI development tools.

- This event provides a unique chance to interact with Intel specialists and gain expertise in optimizing AI projects using their latest resources.

- Intel Tiber AI Cloud: Intel will unveil the Tiber AI Cloud, a platform designed to enhance hackathon projects through improved computing capabilities and efficiency.

- Participants can explore how to leverage this platform to boost performance and streamline their AI development workflows.

- Intel Liftoff Program: The session will cover the Intel Liftoff Program, which supports startups with technical resources and mentorship.

- Learn about the comprehensive benefits aimed at helping young companies scale and succeed within the AI industry.

- Quizzes Feedback Delays: A member raised concerns about not receiving email feedback for quizzes 5 and 6 while attempting to catch up.

- Another member suggested verifying local settings and recommended resubmitting the quizzes to address the issue.

- Course Deadlines Reminder: An urgent reminder was issued that participants are still eligible but need to catch up quickly as each quiz ties back to course content.

- The final submission date is set for December 12th, highlighting the necessity for timely completion.

Torchtune Discord

-

Torchtune v0.4.0 Release: Torchtune v0.4.0 has been officially released, introducing features like Activation Offloading, Qwen2.5 Support, and enhanced Multimodal Training. Full release notes are available here.

- These updates aim to significantly improve user experience and model training efficiency.

- Activation Offloading Feature: Activation Offloading is now implemented in Torchtune v0.4.0, reducing memory requirements for all text models by 20% during finetuning and lora recipes.

- This enhancement optimizes performance, allowing for more efficient model training workflows.

- Qwen2.5 Model Support: Qwen2.5 Builders support has been added to Torchtune, aligning with the latest updates from the Qwen model family. More details can be found on the Qwen2.5 blog.

- This integration facilitates the use of Qwen2.5 models within Torchtune's training environment.

- Multimodal Training Enhancements: The multimodal training functionality in Torchtune has been enhanced with support for Llama3.2V 90B and QLoRA distributed training.

- These enhancements enable users to work with larger datasets and more complex models, expanding training capabilities.

- Orca-AgentInstruct for Synthetic Data: The new Orca-AgentInstruct from Microsoft Research offers an agentic solution for generating diverse, high-quality synthetic datasets at scale.

- This approach aims to boost small language models' performance by leveraging effective synthetic data generation for post-training and fine-tuning.

Mozilla AI Discord

-

Local LLM Workshop Scheduled for Tuesday: The Build Your Own Local LLM Workshop is set for Tuesday, featuring Building your own local LLM's: Train, Tune, Eval, RAG all in your Local Env., aimed at guiding members through local LLM setup intricacies.

- Participants are encouraged to RSVP to enhance their local environment capabilities.

- SQLite-Vec Adds Metadata Filtering: SQLite-Vec Now Supports Metadata Filtering was announced on Wednesday via this event, highlighting enhanced capabilities with practical applications.

- This update allows improved data handling through metadata utilization.

- Autonomous AI Agents Discussion on Thursday: Join the Exploring Autonomous AI Agents discussion on Thursday, featuring Autonomous AI Agents with Refact.AI, focusing on AI automation advancements.

- The event promises insights into the functionality and future trajectory of AI agents.

- Assistance Needed for Landing Page Development: A member is seeking help to develop a landing page for their project, with plans for a live walkthrough on the Mozilla AI stage.

- Interested members should reach out in this thread to provide collaborative marketing support.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!