[AINews] 1 TRILLION token context, real time, on device?

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

SSMs are all you need.

AI News for 5/28/2024-5/29/2024. We checked 7 subreddits, 384 Twitters and 29 Discords (389 channels, and 5432 messages) for you. Estimated reading time saved (at 200wpm): 553 minutes.

Our prior candidates for today's headline story:

- Happy 4th birthday to GPT3!

- Hello Codestral. Weights released under a Mistral noncommercial license, and with decent evals, 80 languages but scant further details.

- Schedule Free optimizers are here! We reported on these 2 months ago and the paper is now released - jury is weighing in but things look good so far - this could be a gamechanging paper in learning rate optimization if it scales.

- Scale AI launches their own elo-style Eval Leaderboards, with Private, Continuously Updated, Domain Expert Evals on Coding, Math, Instruction Following, and Multilinguality (Spanish), following their similar work on GSM1k.

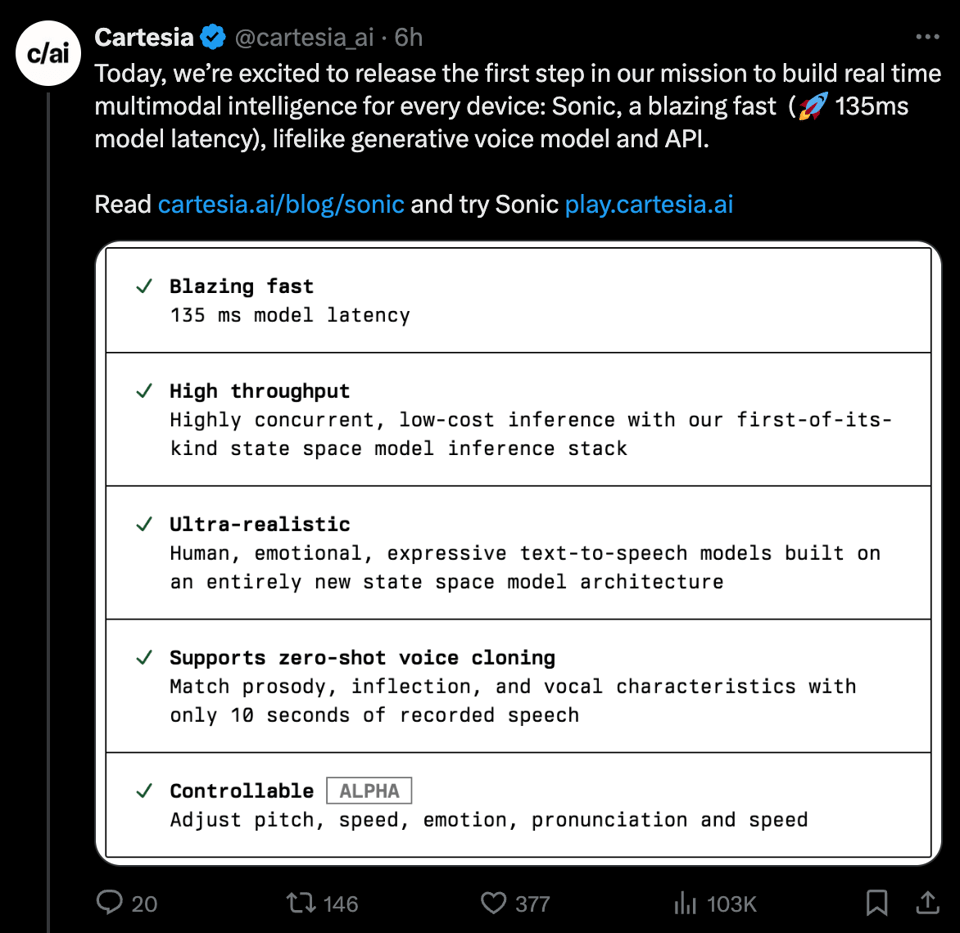

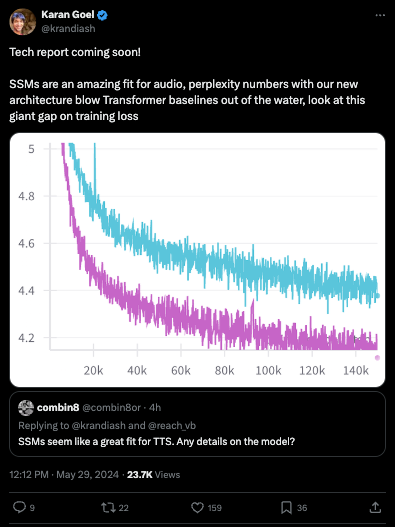

But today we give the W to Cartesia, the State Space Models startup founded by the other Mamba coauthor who launched their rumored low latency voice model today, handily beating its Transformer equivalent (20% lower perplexity, 2x lower word error, 1 point higher NISQA quality):

evidenced by a yawning gap in loss charts:

This is the most recent in a growing crop of usable state space models, and the launch post discusses the vision unlocked by extremely efficient realtime models:

Not even the best models can continuously process and reason over a year-long stream of audio, video and text: 1B text tokens, 10B audio tokens and 1T video tokens —let alone do this on-device. Shouldn't everyone have access to cheap intelligence that doesn't require marshaling a data center?

as well as a preview of what super fast on-device TTS looks like.

It is highly encouraging to see usable SSMs in the wild now, feasibly challenging SOTA (we haven't yet seen any comparisons with ElevenLabs et al, but spot checks on the Cartesia Playground were very convincing to our ears as experienced ElevenLabs users).

But comparing SSMs with current SOTA misses the sheer ambition of what is mentioned in the quoted text above: what would you do differently if you KNEW that we may soon have models can that continuously process and reason over text/audio/video with a TRILLION token "context window"? On device?

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Yann LeCun and Elon Musk Debate on AI Research and Engineering

- Importance of publishing research: @ylecun argued that for research to qualify as science, it must be published with sufficient details to be reproducible, emphasizing the importance of peer review and sharing scientific information for technological progress.

- Engineering feats based on published science: Some argued that Elon Musk and companies like SpaceX are advancing technology through engineering without always publishing papers. @ylecun countered that these engineering feats are largely based on published scientific breakthroughs.

- Distinctions between science and engineering: The discussion sparked a debate on the differences and complementary nature of science and engineering. @ylecun clarified the distinctions in topics, methodologies, publications, and impact between the two fields.

Advancements in Large Language Models (LLMs) and AI Capabilities

- Strong performance of Gemini 1.5 models: @lmsysorg reported that Gemini 1.5 Pro/Advanced rank #2 on their leaderboard, nearly reaching GPT-4, while Gemini 1.5 Flash ranks #9, outperforming Llama-3-70b and GPT-4-0125.

- Release of Codestral-22B code model: @GuillaumeLample announced the release of Codestral-22B, trained on 80+ programming languages, outperforming previous code models and available via API.

- Veo model for video generation from images: @GoogleDeepMind introduced Veo, which can create video clips from a single reference image while following text prompt instructions.

- SEAL Leaderboards for frontier model evaluation: @alexandr_wang launched private expert evaluations of frontier models, focusing on non-exploitable and continuously updated benchmarks.

- Scaling insights 4 years after GPT-3: @alexandr_wang reflected on progress since the GPT-3 paper, noting that the next 4 years will be about exponentially scaling compute and data, representing some of the largest infrastructure projects of our time.

Research Papers and Techniques

- Schedule-Free averaging for training Transformers: @aaron_defazio and collaborators published a paper introducing Schedule-Free averaging for training Transformers, showing strong results compared to standard learning rate schedules.

- VeLoRA for memory-efficient LLM training: A new paper proposed VeLoRA, a memory-efficient algorithm for fine-tuning and pre-training LLMs using rank-1 sub-token projections. (https://twitter.com/_akhaliq/status/1795651536497864831)

- Performance gap between online and offline alignment algorithms: A Google paper investigated why online RL algorithms for aligning LLMs outperform offline algorithms, concluding that on-policy sampling plays a pivotal role. (https://twitter.com/rohanpaul_ai/status/1795432640050340215)

- Transformers learning arithmetic with special embeddings: @tomgoldsteincs showed that Transformers can learn arithmetic like addition and multiplication by using special positional embeddings.

Memes and Humor

- @svpino joked about the entertainment value of a particular comment thread.

- @Teknium1 humorously suggested that OpenAI's moves this week can only be saved by releasing "waifus".

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Model Development

- Gemini 1.5 Pro outperforms most GPT-4 instances: In the LMSYS Chatbot Arena Leaderboard, Gemini 1.5 Pro outcompetes all GPT-4 instances except 4o. This highlights the rapid progress of open-source AI models.

- Abliterated-v3 models released: Uncensored versions of Phi models, including Phi-3-mini-128k and Phi-3-vision-128k, have been made available, expanding access to powerful AI capabilities.

- Llama3 8B Vision Model matches GPT-4: A new multimodal model, Llama3 8B Vision Model, has been released that is on par with GPT4V & GPT4o in visual understanding.

- Gemini Flash and updated Gemini 1.5 Pro added to leaderboard: The LMSYS Chatbot Arena Leaderboard has been updated with Gemini Flash and an improved version of Gemini 1.5 Pro, showcasing ongoing iterations.

AI Safety & Ethics

- Public concern over AI ethics: A poll reveals that more than half of Americans believe AI companies aren't considering ethics sufficiently when developing the technology, and nearly 90% favor government regulations. This underscores growing public unease about responsible AI development.

AI Tools & Applications

- HuggingChat adds tool support: HuggingChat now integrates tools for PDF parsing, image generation, web search, and more, expanding its capabilities as an AI assistant.

- CopilotKit v0.9.0 released: An open-source framework for building in-app AI agents, CopilotKit v0.9.0 supports GPT-4o, native voice, and Gemini integration, enabling easier development of AI-powered applications.

- WebLLM Chat enables in-browser model inference: WebLLM Chat allows running open-source LLMs like Llama, Mistral, Hermes, Gemma, RedPajama, Phi and TinyLlama locally in a web browser, making model access more convenient.

- LMDeploy v0.4.2 supports vision-language models: The latest version of LMDeploy enables 4-bit quantization and deployment of VL models such as llava, internvl, internlm-xcomposer2, qwen-vl, deepseek-vl, minigemini and yi-vl, facilitating efficient multimodal AI development.

AI Hardware

- Running Llama3 70B on modded 2080ti GPUs: By modding 2x2080ti GPUs to 22GB VRAM each, the Llama3 70B model can be run on this setup, demonstrating creative solutions for large model inference.

- 4x GTX Titan X Pascal 12GB setup for Llama3: With 48GB total VRAM from 4 GTX Titan X Pascal 12GB GPUs, Llama3 70B can be run using Q3KM quantization, showing the potential of older hardware.

- SambaNova's Samba-1 Turbo runs Llama-3 8B: SambaNova showcased their Samba-1 Turbo AI hardware running the Llama-3 8B model, highlighting specialized solutions for efficient inference.

AI Drama & Controversy

- Sam Altman's past controversies: It was revealed that Sam Altman was fired from Y Combinator and people at his startup Loopt asked the board to fire him due to his chaotic and deceptive behavior (image), shedding light on the OpenAI CEO's history.

- Yann LeCun and Elon Musk exchange: In a public discussion, Elon Musk had a weak rebuttal to Yann LeCun's scientific record, highlighting tensions between AI pioneers.

Memes & Humor

AI Discord Recap

A summary of Summaries of Summaries

-

LLM Performance and Practical Applications:

- Gemini 1.5 Pro/Advanced models from Google impressed with top leaderboard positions, outperforming models like Llama-3-70b, while Codestral 22B from MistralAI supports 80+ programming languages targeting AI engineers.

- Mistral AI's new Codestral model, an open-weight model under a non-commercial license, encouraged discussions about the balance between open-source accessibility and commercial viability. Codestral, trained in over 80 programming languages, sparked excitement over its potential to streamline coding tasks.

- Launches like the SEAL Leaderboards by Scale AI were noted for setting new standards in AI evaluations, though concerns about evaluator bias due to provider affiliations were raised.

- SWE-agent by Princeton stirred interest for its superior performance and open-source nature, and Llama3-V gathered attention for challenging GPT4-V despite being a smaller model.

- Retrieval-Augmented Generation (RAG) models are evolving with tools like PropertyGraphIndex for constructing rich knowledge graphs, while Iderkity supports translation tasks efficiently.

-

Fine-Tuning, Prompt Engineering, and Model Optimization:

- Engineers discussed Gradient Accumulation and DPO training methods, emphasizing the role of

ref_modelin maintaining consistency during fine-tuning, and tackled quantization libraries for efficient use across different systems.

- Techniques to solve prompt engineering challenges like handling "RateLimit" errors using try/except structures and fine-tuning models for specific domains were shared, underscoring practical solutions (example).

- Members debated the use of transformers versus MLPs, highlighting findings that MLPs may handle certain tasks better, and discussed model-specific issues like context length and optimizer configurations in ongoing fine-tuning efforts.

- Engineers discussed Gradient Accumulation and DPO training methods, emphasizing the role of

-

Open-Source Contributions and AI Community Collaboration:

- OpenAccess AI Collective tackled spam issues, proposed updates for gradient checkpointing in Unsloth, and saw community-led initiatives on fine-tuning LLMs for image and video content comprehension.

- LlamaIndex contributed to open-source by merging into the Neo4j ecosystem, focusing on integrating tools like PropertyGraphIndex for robust knowledge graph solutions.

- Discussions emphasized community efforts around Llama3 model training and collaborative issues submitted on GitHub for libraries like axolotl and torchao indicating ongoing developments and shared problem resolutions.

-

Model Deployment and Infrastructure Issues:

- Engineers grappled with Google Colab disconnections, Docker setup for deployment issues, and the performance benefits of using Triton kernels on NVIDIA A6000 GPUs.

- Lighting AI Studio was recommended for free GPU hours, while discussions on split GPU resources for large model productivity and tackling hardware bottlenecks highlighted user challenges.

- ROC and NVIDIA compatibility setbacks were discussed, with practical suggestions to overcome them, like seeking deals on 7900 XT for expanded VRAM setups to support larger models and transitions from macOS x86 to M1.

-

Challenges, Reactions, and Innovations in AI:

- Helen Toner's revelations on OpenAI’s management sparked debates about transparency, raising concerns about internal politics and ethical AI development (Podcast link).

- Elon Musk's xAI securing $6 billion in funding triggered discussions on the implications for AI competitiveness and infrastructure investment, while community members debated model pricing strategies and their potential impact on long-term investments in technologies.

- Cohere API sparked discussions around effective use for grounded generation and ensuring force-citation display, showing active community engagement in leveraging new models for practical use cases.

PART 1: High level Discord summaries

Perplexity AI Discord

- Web Scraping Wisdom: Discussions highlighted methods for efficient web content extraction, including Python requests, Playwright, and notably, Gemini 1.5 Flash for JavaScript-heavy sites.

- Perplexity API Woes and Wins: Engineers expressed concerns over inconsistency between Perplexity's API responses and its web app's accuracy, pondering different model choices, such as llama-3-sonar-small-32k-online, to potentially boost performance.

- Building a Rival to Rival Perplexity: A detailed project was proposed that mirrors Perplexity's multi-model querying, facing challenges related to scaling and backend development.

- Go with the Flow: Deep-dives into Go programming language showcased its effectiveness, particularly for web scraping applications, emphasizing its scalability and concurrency advantages.

- Advantage Analysis: Users shared Perplexity AI search links covering potentially AI-generated content, a clarification of a query's sensibility, and a comprehensive evaluation of pros and cons.

HuggingFace Discord

- BERT's Token Limit Has Users Seeking Solutions: A user is evaluating methods for handling documents that exceed token limits in models like BERT (512 tokens) and decoder-based models (1,024 tokens). They aim to bypass document slicing and positional embedding tweaks, without resorting to costly new pretraining.

- Diffusers Celebrate with GPT-2 Sentiment Success: The Hugging Face community hails the second anniversary of the Diffusers project, alongside a new FineTuned GPT-2 model for sentiment analysis that achieved a 0.9680 accuracy and F1 score. The model is tailored for Amazon reviews and is available on Hugging Face.

- Reading Group Eager for C4AI's Insights: A new paper reading group is queued up, with eagerness to include presentations from the C4AI community, focusing on debunking misinformation in low-resource languages. The next event is found here.

- Image Processing Queries Guide Users to Resources: Discussions cover the best practices for handling large images with models like YOLO and newer alternatives like convNext and DINOv2. A Github repository for image processing tutorials in Hugging Face was highlighted (Transformers-Tutorials).

- Medical Imaging Seeks AI Assist: Community members exchange thoughts on creating a self-supervised learning framework for analyzing unlabeled MRI and CT scans. The discussion includes leveraging features extracted using pre-trained models for class-specific segmentation tasks.

Unsloth AI (Daniel Han) Discord

- Lightning Strikes with L4: Users recommended Lightning AI Studio due to its "20-ish monthly free hours" and enhanced performance with L4 over Colab's T4 GPUs. A potential collaboration with Lightning AI to benefit the community was proposed.

- Performance Puzzles with Phi3 and Llama3: Discussions revealed mixed reactions to the Phi3 models, with

phi-3-mediumconsidered less impressive than llama3-8b by some. A user highlighted Phi3's inferior performance beyond 2048 tokens context length compared to Llama3.

- Stirring Model Deployment Conversations: The community exchanged ideas on utilizing Runpods and Docker for deploying models, with some members encountering issues with service providers. While no specific Dockerfiles were provided, a server search for them was recommended.

- Colab Premia Not Meeting Expectations: Google Colab's Premium service faced criticism due to continued disconnection issues. Members proposed moving to other platforms like Kaggle and Lightning AI as viable free alternatives.

- Unsloth Gets Hands-On In Local Development: Embarking on supervised fine-tuning with Unsloth, users discussed running models locally, particularly in VSCode for tasks like resume point generation. Links to Colab notebooks and GitHub resources for unsupervised finetuning with Unsloth were shared, such as this finetuning guide and a Colab example.

LLM Finetuning (Hamel + Dan) Discord

Fine-Tuning Frustrations and Marketplace Musings: Engineers discussed fine-tuning challenges, with concerns over Google's Gemini 1.5 API price hike and difficulties serving fine-tuned models in production. A channel dedicated to LLM-related job opportunities was proposed, and the need for robust JSON/Parquet file handling tools was highlighted.

Ins and Outs of Technical Workshops: Participants exchanged insights on LLM fine-tuning strategies, with emphasis on personalized sales emails and legal document summarization. The practicality of multi-agent LLM collaboration and the optimization of prompts for Stable Diffusion were debated.

Exploring the AI Ecosystem: The community delved into a variety of AI topics, revealing Braintrust as a handy tool for evaluating non-deterministic systems and the O'Reilly Radar insights on the complexities of building with LLMs. Discussions also highlighted the potential of Autoevals for SQL query evaluations.

Toolshed for LLM Work: Engineers tackled practical issues like Modal's opaque failures and Axolotl preprocessing GPU support problems. Queries around using shared storage on Jarvislabs and insights into model quantization on Wing Axolotl were shared, with useful resources and tips sprinkled throughout the discussions.

Code, Craft, and Communities: The community vibe flourished with talk of LLM evaluator models, the desirability of Gradio's UI over Streamlit, and the convening of meet-ups from San Diego to NYC. The vibrant exchanges covered technical ground but also nurtured the social fabric of the AI engineering realm.

CUDA MODE Discord

GPGPU Programming Embraces lighting.ai: Engineers discussed lighting.ai as a commendable option for GPGPU programming, especially for those lacking access to NVIDIA hardware commonly used for CUDA and SYCL development.

Easing Triton Development: Developers found triton_util, a utility package simplifying Triton kernel writing, useful for abstracting repetitive tasks, promoting a more intuitive experience. Performance leaps using Triton on NVIDIA A6000 GPUs were observed, while tackling bugs became a focus when dealing with large tensors above 65GB.

Nightly Torch Supports Python 3.12: The PyTorch community highlighted torch.compile issues on Python 3.12, with nightly builds providing some resolutions. Meanwhile, the deprecation of macOS x86 builds in Torch 2.3 sparked discussions about transitioning to the M1 chips or Linux.

Tom Yeh Enhances AI Fundamentals: Prof Tom Yeh is gaining traction by sharing hand calculation exercises on AI concepts. His series comprises Dot Product, Matrix Multiplication, Linear Layer, and Activation workbooks.

Quantum Leaps in Quantization: Engineers are actively discussing and improving quantization processes with libraries like bitsandbytes and fbgemm_gpu, as well as participating in competitions such as NeurIPS. Efforts on Llama2-7B and the FP6-LLM repository updates were shared alongside appreciating the torchao community's supportive nature.

CUDA Debugging Skills Enhanced: A single inquiry about debugging SYCL code was shared, highlighting the need for tools to improve kernel code analysis and possibly stepping into the debugging process.

Turbocharge Development with bitnet PRs:

Various technical issues were addressed in the bitnet channel, including ImportError challenges related to mismatches between PyTorch/dev versions and CUDA, and compilation woes on university servers resolved via a gcc 12.1 upgrade. Collaborative PR work on bit packing and CI improvements were discussed, with resources provided for bit-level operations and error resolution (BitBlas on GitHub, ao GitHub issue).

Social and Techno Tales of Berlin and Seattle: Conversations in off-topic contrasted the social and weather landscapes of Seattle and Berlin. Berlin was touted for its techno scene and startup friendliness, moderated by its own share of gloomy weather.

Tokenizer Tales and Training Talk: An extensive dialog on self-implementing tokenizers and dataset handling ensued, considering compression and cloud storage options. Large-scale training on H100 GPUs remains cost-prohibitive, while granular discussions on GPU specs informed model optimization. Training experiments continue apace, with one resembling GPT-3's strength.

Nous Research AI Discord

Playing with Big Contexts: An engineer suggested training a Large Language Model (LLM) with an extremely long context window with the notion that with sufficient context, an LLM can predict better even with a smaller dataset.

The Unbiased Evaluation Dilemma: Concerns were raised about Scale’s involvement with both supplying data for and evaluating machine learning models, highlighting a potential conflict of interest that could influence the impartiality of model assessments.

Understanding RAG Beyond the Basics: Technical discussions elucidated the complexities of Retrieal-Augmented Generation (RAG) systems, stressing that it's not just a vector similarity match but involves a suite of other processes like re-ranking and full-text searches, as highlighted by discussions and resources like RAGAS.

Doubled Prices and Doubled Concerns: Google's decision to increase the price for Gemini 1.5 Flash output sparked a heated debate, with engineers calling out the unsustainable pricing strategy and questioning the reliability of the API’s cost structure.

Gradient Accumulation Scrutiny: A topic arose around avoiding gradient accumulation in model training, with engineers referring to Google's tuning playbook for insights, while also discussing the concept of ref_model in DPO training as per Hugging Face's documentation.

LM Studio Discord

- Open Source or Not? LM Studio's Dichotomy: LM Studio's main application is confirmed to be closed source, while tools like LMS Client (CLI) and lmstudio.js (new SDK) are open source. Models within LM Studio cannot access local PC files directly.

- Translation Model Buzz: The Aya Japanese to English model was recommended for translation tasks, while Codestral, supporting 80+ programming languages, sparked discussions of integration into LM Studio.

- GPU Selection and Performance Discussions: Debates emerged over the benefits of multi-GPU setups versus single powerful GPUs, specifically questioning the value of Nvidia stock and practicality of modded GPUs. A Goldensun3ds user upgraded to 44GB VRAM, showcasing the setup advantage.

- Server Mode Slows Down the Show: Users noted that chat mode achieves faster results than server mode with identical presets, raising concerns on GPU utilization and the need for GPU selection for server mode operations.

- AMD GPU Users Face ROCm Roadblocks: Version problems with LM Studio and Radeon GPUs were noted, including unsuccessful attempts to use iGPUs and multi-GPU configurations in ROCm mode. Offers on 7900 XT were shared as possible solutions for expanding VRAM.

- A Single AI for Double Duty?: The feasibility of a model performing both moderation and Q&A was questioned, with suggestions pointing towards using two separate models or leveraging server mode for better context handling.

- Codestral Availability Announced: Mistral's new 22B coding model, Codestral, has been released, targeting users with larger GPUs seeking a powerful coding companion. It's available for download on Hugging Face.

Modular (Mojo 🔥) Discord

Mojo Gets a Memory Lane: A blog post illuminated Mojo's approach to memory management with ownership as a central focus, advocating a safe yet high-performance programming model. Chris Lattner's video was highlighted as a resource for digging deeper into the ownership concept within Mojo's compiler systems. Read more about it in their blog entry.

Alignment Ascendancy: Engineers have stressed the importance of 64-byte alignment in tables to utilize the full potency of AVX512 instructions and enhance caching efficiency. They also highlighted the necessity of alignment to prompt the prefetcher's optimal performance and the issues of false sharing in multithreaded contexts.

Optional Dilemmas and Dict Puzzles in Mojo: In the nightly branch conversations, the use of Optional with the ref API sparked extensive discussion, with participants considering Rust's ? operator as a constructive comparison. A related GitHub issue also focused on a bug with InlineArray failing to invoke destructors of its elements.

The Prose of Proposals and Compilations: The merits of naming conventions within auto-dereferenced references were rigorously debated, with the idea floated to rename Reference to TrackedPointer and Pointer to UntrackedPointer. Additionally, the latest nightly Mojo compiler release 2024.5.2912 brought updates like async function borrow restrictions with a comprehensive changelog available.

AI Expands Horizons in Open-World Gaming: An assertion was raised that open-world games could reach new pinnacles if AI could craft worlds dynamically from a wide range of online models, responding to user interactions. This idea suggests a significant opportunity for AI's role in gaming advancements.

Eleuther Discord

- A Helping Hand for AI Newbies: Newcomers to EleutherAI, including a soon-to-graduate CS student, were provided beginner-friendly research topics with resources like a GitHub gist. Platforms for basic AI question-and-answer sessions were noted as lacking, stimulating a conversation about the accessibility of AI knowledge for beginners.

- Premature Paper Publication Puzzles Peers: A paper capturing the community's interest for making bold claims without the support of experiments sparked discussion. Questions were raised around its acceptance on arXiv, contrasting with the acknowledgment of Yann LeCun's impactful guidance and his featured lecture that highlighted differences between engineering and fundamental sciences.

- MLP versus Transformer – The Turning Tide: Debate heated up over recent findings that MLPs can rival Transformers in in-context learning. While intrigued by the MLPs' potential, skepticism abounded about optimizations and general usability, with members referencing resources such as MLPs Learn In-Context and discussions reflecting back on the "Bitter Lesson" in AI architecture's evolution.

- AMD Traceback Trips on Memory Calculation: A member's traceback error while attempting to calculate max memory on an AMD system led them to share the issue via a GitHub Gist, whereas another member sought advice on concurrent queries with "lm-evaluation-harness" and logits-based testing.

- Scaling Discussions Swing to MLPs' Favor: Conversations revealed that optimization tricks might mask underperformance while spotlighting an observation that scaling and adaptability could outshine MLPs' structural deficits. Links shared included an empirical study comparing CNN, Transformer, and MLP networks and an investigation into scaling MLPs.

OpenAI Discord

- Free Users, Rejoice with New Features!: Free users of ChatGPT now enjoy additional capabilities, including browse, vision, data analysis, file uploads, and access to various GPTs.

- ImaGen3 Stirring Mixed Emotions: Discussion swirled around the upcoming release of Google's ImaGen3, marked by skepticism concerning media manipulation and trust. Meanwhile, Google also faced flak for accuracy blunders in historical image generation.

- GPT-4's Memory Issues Need a Fix: Engineers bemoaned GPT-4's intermittent amnesia, expressing a desire for a more transparent memory mechanism and suggesting a backup button for long-term memory preservation.

- RAM Rising: Users call for Optimization: Concerns over excessive RAM consumption spiked, especially when using ChatGPT on browsers like Brave; alternative solutions suggested include using Safari or the desktop app to run smoother sessions.

- Central Hub for Shared Prompts: For those seeking a repository of "amazing prompts," direct your attention to the specific channel designated for this purpose within the Discord community.

Interconnects (Nathan Lambert) Discord

- Codestral Enters the Coding Arena: Codestral, a new 22B model from Mistral fluent in over 80 programming languages, has launched and is accessible on HuggingFace during an 8-week beta period. Meanwhile, Scale AI's introduction of a private data-based LLM leaderboard has sparked discussions about potential biases in model evaluation due to the company's revenue model and its reliance on consistent crowd workers.

- Price Hike Halts Cheers for Gemini 1.5 Flash: A sudden price bump for Google's Gemini 1.5 Flash's output—from $0.53/1M to $1.05/1M—right after its lauded release stirred debate over the API's stability and trustworthiness.

- Awkward Boardroom Tango at OpenAI: The OpenAI board was caught off-guard learning about ChatGPT’s launch on Twitter, according to revelations from ex-board member Helen Toner. This incident illuminated broader issues of transparency at OpenAI, which were compounded by a lack of explicit reasoning behind Sam Altman’s firing, with the board citing "not consistently candid communications."

- Toner's Tattle and OpenAI's Opacity Dominate Discussions: Toner's allegations of frequent dishonesty under Sam Altman's leadership at OpenAI sparked debates on the timing of her disclosures, with speculation about legal constraints and acknowledgement that internal politics and pressure likely shaped the board's narrative.

- DL Community's Knowledge Fest: Popularity is surging for intellectual exchanges like forming a "mini journal club" and appreciation for Cohere's educational video series, while TalkRL podcast is touted as undervalued. Although there's mixed reception for Schulman's pragmatic take on AI safety in Dwarkesh's podcast episode, the proposed transformative hierarchical model to mitigate AI misbehaviors, as highlighted in Andrew Carr’s tweet, is sparking interest.

- Frustration Over FMTI's File Fiasco: There's discontent among the community due to the FMTI GitHub repository opting for CSV over markdown, obstructing easy access to paper scores for engineers.

- SnailBot Ships Soon: Anticipation builds for the SnailBot News update, teased via tagging, with Nate Lambert also stirring curiosity about upcoming stickers.

Stability.ai (Stable Diffusion) Discord

- Colab and Kaggle Speed Up Image Creation: Engineers recommend using Kaggle or Colab for faster image generation with Stable Diffusion; one reports that it takes 1.5 to 2 minutes per image with 16GB VRAM on Colab.

- Tips for Training SDXL LoRA Models: Technical enthusiasts discuss training Stable Diffusion XL LoRA models, emphasizing that 2-3 epochs yield good results and suggesting that conciseness in trigger words improves training effectiveness.

- Navigating ComfyUI Model Paths and API Integration: Community members are troubleshooting ComfyUI configuration for multiple model directories and discussing the integration of ADetailer within the local Stable Diffusion API.

- HUG and Stability AI Course Offerings: There's chatter about the HUG and Stability AI partnership offering a creative AI course, with sessions recorded for later access—a completed feedback form will refund participants' deposits.

- 3D Model Generation Still Incubating: Conversations turn to AI's role in creating 3D models suitable for printing, with members agreeing on the unfulfilled potential of current AI to generate these models.

LlamaIndex Discord

- Graphing the LLM Knowledge Landscape: LlamaIndex announces PropertyGraphIndex, a collaboration with Neo4j, allowing richer building of LLM-backed knowledge graphs. With tools for graph extraction and querying, it provides for custom extractors and joint vector/graph search functions—users can refer to the PropertyGraphIndex documentation for guidelines.

- Optimizing the Knowledge Retrieval: Discussions focused on optimizing RAG models by experimenting with text chunk sizes and referencing the SemanticDocumentParser for generating quality chunks. There were also strategies shared for maximizing the potential of vector stores, such as the mentioned

QueryFusionRetriever, and best practices for non-English embeddings, citing resources like asafaya/bert-base-arabic.

- Innovating in the Codestral Era: LlamaIndex supports the new Codestral model from MistralAI, covering 80+ programming languages and enhancing with tools like Ollama for local runs. Additionally, the FinTextQA dataset is offering an extensive set of question-answer pairs for financial document-based querying.

- Storage and Customization with Document Stores: The community discussed managing document nodes and stores in LlamaIndex, touching on the capabilities of

docstore.persist(), and utilization of different document backends, with references made to Document Stores - LlamaIndex. The engagement also mentioned Simple Fusion Retriever as a solution for managing vector store indexes.

- Querying Beyond Boundaries: The announced Property Graph Index underlines LlamaIndex’s commitment to expand the querying capabilities within knowledge graphs, integrating features to work with labels and properties for nodes and relationships. The LlamaIndex blog sheds light on these advances and their potential impact on the AI engineering field.

Latent Space Discord

- Gemini 1.5 Proves Its Metal: Gemini 1.5 Pro/Advanced now holds second place, edging near GPT-4o, with Gemini 1.5 Flash in ninth, surpassing models like Llama-3-70b, as per results shared on LMSysOrg's Twitter.

- SWE-agent Stirs Up Interest: Princeton's SWE-agent has sparked excitement with its claim of superior performance and open-source status, with details available on Gergely Orosz's Twitter and the SWE-agent GitHub.

- Llama3-V Steps into the Ring: The new open-source Llama3-V model competes with GPT4-V despite its smaller size, grabbing attention detailed on Sidd Rsh's Twitter.

- Tales from the Trenches with LLMs: Insights and experiences from a year of working with LLMs are explored in the article titled "What We Learned from a Year of Building with LLMs," focusing on the evolution and challenges in building AI products.

- SCALE Sets LLM Benchmarking Standard with SEAL Leaderboards: Scale's SEAL Leaderboards have been launched for robust LLM evaluations with shoutouts from industry figures like Alexandr Wang and Andrej Karpathy.

- Reserve Your Virtual Seat at Latent Space: A technical event to explore AI Agent Architectures and Kolmogorov Arnold Networks has been announced for today, with registration available here.

OpenRouter (Alex Atallah) Discord

- Temporary OpenAI Downtime Resolved: OpenAI faced a temporary service interruption, but normal operations resumed following a quick fix with Alex Atallah indicating Azure services remained operational throughout the incident.

- Say Goodbye to Cinematika: Due to low usage, the Cinematika model is set to be deprecated; users have been advised to switch to an alternative model promptly.

- Funding Cap Frustration Fixed: After OpenAI models became inaccessible due to an unexpected spending limit breach, a resolution was implemented and normal service restored, combined with the rollout of additional safeguards.

- GPT-4o Context Capacity Confirmed: Amid misunderstandings about token limitations, Alex Atallah stated that GPT-4o maintains a 128k token context limit and a separate output token cap of 4096.

- Concerns Over GPT-4o Image Prompt Performance: A user's slow processing experience with

openai/gpt-4ousingimage-urlinput hints at possible performance bottlenecks, which might require further investigation and optimization.

LAION Discord

- AI Influencers on the Spotlight: Helen Toner's comment about discovering ChatGPT on Twitter launched dialogues while Yann LeCun's research activities post his VP role at Facebook piqued interest, signaling the continued influence of AI leaders in shaping community opinions. In contrast, Elon Musk's revelation of AI models only when they've lost their competitive edge prompted discussions regarding the strategy of open-source models in AI development.

- Mistral's License Leverages Open Weights: Amidst the talks, Mistral AI's licensing strategy was noted for its blend of open weights under a non-commercial umbrella, emphasizing the complex landscape of AI model sharing and commercialization.

- Model Generation Complications: Difficulty arises when using seemingly straightforward prompts such as 'a woman reading a book' in model generation, with users reporting adverse effects in synthetic caption creation, hinting at persistent challenges in the field of generative AI.

- Discourse on Discriminator Effectiveness: The community dissected research material, particularly noting Dinov2's use as a discriminator, yet indicating a preference for a modified pretrained UNet, recalling a strategy akin to Kandinsky's, where a halved UNet improved performance, shedding light on evolving discriminator techniques in AI research.

- Community Skepticism Towards Rating Incentives: A discussion on the Horde AI community's incentivized system for rating SD images raised doubts, as it was mentioned that such programs could potentially degrade the quality of data, highlighting a common tension between community engagement and data integrity.

LangChain AI Discord

- Trouble Finding LangChain v2.0 Agents Resolved: Users initially struggled with locating agents within LangChain v2.0, with discussions proceeding to successful location and implementation of said agents.

- Insights on AI and Creativity Spark Conversations: A conversation was ignited by a tweet suggesting AI move beyond repetition towards genuine creativity, prompting technical discussions on the potential of AI in creative domains.

- Solving 'RateLimit' Errors in LangChain: The community shared solutions for handling "RateLimit" errors in LangChain applications, advocating the use of Python's try/except structures for robust error management.

- Optimizing Conversational Data Retrieval: Members faced challenges with ConversationalRetrievalChain when handling multiple vector stores, seeking advice on effectively merging data for complete content retrieval.

- Practical Illustration of Persistent Chat Capabilities: A guild member tested langserve's persistent chat history feature, following an example from the repository and inquiring about incorporating "chat_history" into the FastAPI request body, which is documented here.

Educational content on routing logic in agent flows using LangChain was disseminated via a YouTube tutorial, assisting community members in enhancing their automated agents' decision-making pathways.

OpenInterpreter Discord

- Customization is King in Training Workflows: Engineers expressed an interest in personalized training workflows, with discussions centered on enhancing Open Interpreter for individual use cases, suggesting a significant need for customization in AI tooling.

- Users Share Open Interpreter Applications: Various use cases for Open Interpreter sparked discussions, with members exchanging ideas on how to leverage its features for different technical applications.

- Hunting for Open-source Alternatives: Dialogue among engineers highlighted ongoing explorations for alternatives to Rewind, with Rem and Cohere API mentioned as noteworthy options for working with the vector DB.

- Rewind's Connectivity Gets a Nod: One user vouched for Rewind's efficiency dubbing it as a "life hack" despite its shortcomings in hiding sensitive data, reflecting a generally positive reception among technical users.

- Eliminating Confirmation Steps in OI: Addressing efficiency, a member provided a solution for running Open Interpreter without confirmation steps using the

--auto_runfeature, as detailed in the official documentation.

- Trouble with the M5 Screen: A user reported issues with their M5 showing a white screen post-flash, sparking troubleshooting discussions that included suggestions to change Arduino studio settings to include a full memory erase during flashing.

- Unspecified YouTube Link: A solitary link to a YouTube video was shared by a member without context, possibly missing an opportunity for discussion or the chance to provide valuable insights.

OpenAccess AI Collective (axolotl) Discord

"Not Safe for Work" Spam Cleanup: Moderators in the OpenAccess AI Collective (axolotl) swiftly responded to an alert regarding NSFW Discord invite links being spammed across channels, with the spam promptly addressed.

Quest for Multi-Media Model Mastery: An inquiry about how to fine-tune large language models (LLMs) like LLava models for image and video comprehension was posed in the general channel, yet it remains unanswered.

Gradient Checkpointing for MoE: A member of the axolotl-dev channel proposed an update to Unsloth's gradient checkpointing to support MoE architecture, with a pull request (PR) upcoming after verification.

Bug Hunt for Bin Packing: A development update pointed to an improved bin packing algorithm, but highlighted an issue where training stalled post-evaluation, likely linked to the new sampler's missing _len_est implementation.

Sampler Reversion Pulls Interest: A code regression was indicated by sharing a PR to revert multipack batch sampler changes due to flawed loss calculations, indicating the importance of precise metric evaluation in model training.

Cohere Discord

Rethinking PDF Finetuning with RAG: A member proposed Retrieval Augmented Generation (RAG) as a smarter alternative to traditional JSONL finetuning for handling PDFs, claiming it can eliminate the finetuning step entirely.

API-Specific Grounded Generation Insights: API documentation was cited to show how to use the response.citations feature within the grounded generation framework, and an accompanying Hugging Face link was provided as a reference.

Local R+ Innovation with Forced Citations: An engineer shared a hands-on achievement in integrating a RAG pipeline with forced citation display within a local Command R+ setup, demonstrating a reliable way to maintain source attributions.

Cohere's Discord Bot Usage Underlines Segmented Discussions: Enthusiasm around a Discord bot powered by Cohere sparked a reminder to keep project talk within its dedicated channel to maintain order and focus within the community discussions.

Channel Etiquette Encourages Project Segregation: Recognition for a community-built Discord bot was followed by guidance to move detailed discussions to a specified project channel, ensuring adherence to the guild's organizational norms.

tinygrad (George Hotz) Discord

xAI Secures a Whopping $6 Billion: Elon Musk's xAI has successfully raised $6 billion, with notable investors such as Andreessen Horowitz and Sequoia Capital. The funds are aimed at market introduction of initial products, expansive infrastructure development, and advancing research and development of future technologies.

Skepticism Cast on Unnamed Analytical Tools: A guild member expressed skepticism about certain analytical tools, considering them to have "negligible usefulness," although they did not specify which tools were under scrutiny.

New Language Bend Gains Attention: The Bend programming language was acclaimed for its ability to "automatically multi-thread without any code," a feature that complements tinygrad's lazy execution strategy, as shown in a Fireship video.

tinybox Power Supply Query: A question arose about the power supply requirements for tinybox, inquiring whether it utilizes "two consumer power supplies or two server power supplies with a power distribution board," but no resolution was provided.

Link Spotlight: An article from The Verge on xAI’s funding notably asks what portion of that capital will be allocated to acquiring GPUs, a key concern for AI Engineers regarding compute infrastructure.

DiscoResearch Discord

- Goliath Needs Training Wheels: Before additional pretraining, Goliath experienced notable performance dips, prompting a collaborative analysis and response among community members.

- Economical Replication of GPT-2 Milestone: Engineers discussed achieving GPT-2 (124M) replication in C for just $20 on GitHub, noting a HellaSwag accuracy of 29.9, which surpasses GPT-2's original 29.4 score.

- Codestral-22B: Multi-Lingual Monolith: Mistral AI revealed Codestral-22B, a behemoth trained on 80+ programming languages and claimed as more proficient than predecessors, per Guillaume Lample's announcement.

- Calling All Contributors for Open GPT-4-Omni: LAION AI is rallying the community for open development on GPT-4-Omni with a blog post highlighting datasets and tutorials, accessible here.

Mozilla AI Discord

Windows Woes with Llamafile: An engineer encountered an issue while compiling llamafile on Windows, pointing out a problem with cosmoc++ where the build fails due to executables not launching without a .exe suffix. Despite the system reporting a missing file, the engineer confirmed its presence in the directory .cosmocc/3.3.8/bin, and faced the same issue using cosmo bash.

Datasette - LLM (@SimonW) Discord

- RAG to the Rescue for LLM Hallucinations: An engineer suggested using Retrieval Augmented Generation (RAG) to tackle the issue of hallucinations when Language Models (LLMs) answer documentation queries. They proposed an extension to the

llmcommand to recursively create embeddings for a given URL, harnessing document datasets and embedding storage for improved accuracy.

MLOps @Chipro Discord

A Peek Into the Technical Exchange: A user briefly mentioned finding a paper relevant to their interests, thanking another for sharing, and expressed intent to review it. However, no details about the paper's content, title, or field of study were provided.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI Stack Devs (Yoko Li) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!