[AINews] Shazeer et al (2024): you are overpaying for inference >13x

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

These 5 memory and caching tricks are all you need.

AI News for 6/20/2024-6/21/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (415 channels, and 2822 messages) for you. Estimated reading time saved (at 200wpm): 287 minutes. You can now tag @smol_ai for AINews discussions!

In a concise 962 word blogpost, Noam Shazeer returned to writing to explain how Character.ai serves 20% of Google Search Traffic for LLM inference, while reducing serving costs by a factor of 33 (compared to late 2022), estimating that leading commercial APIs would cost at least 13.5X more:

Memory-efficiency: "We use the following techniques to reduce KV cache size by more than 20X without regressing quality. With these techniques, GPU memory is no longer a bottleneck for serving large batch sizes."

- MQA > GQA: "reduces KV cache size by 8X compared to the Grouped-Query Attention adopted in most open source models." (Shazeer, 2019)

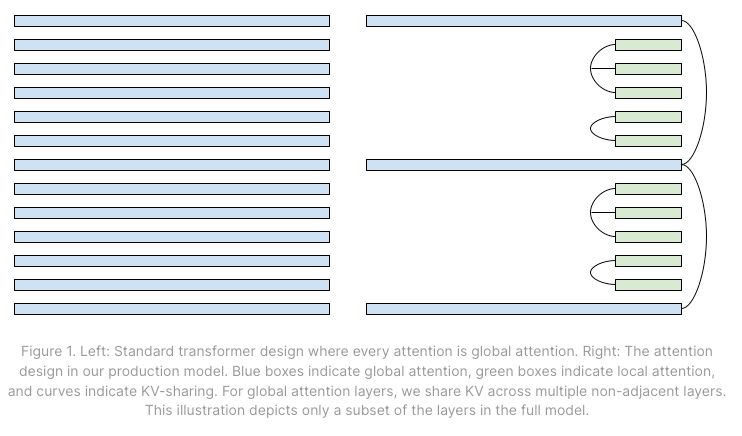

- Hybrid attention horizons: a 1:5 ratio of local (sliding window) attention layers to global (Beltagy et al 2020)

- Cross Layer KV-sharing: local attention layers share KV cache with 2-3 neighbors, global layers share cache across blocks. (Brandon et al 2024)

Stateful Caching: "On Character.AI, the majority of chats are long dialogues; the average message has a dialogue history of 180 messages... To solve this problem, we developed an inter-turn caching system."

- Cached KV tensors in a LRU cache with a tree structure (RadixAttention, Zheng et al., 2023) At a fleet level, we use sticky sessions to route the queries from the same dialogue to the same server. Our system achieves a 95% cache rate.

- Native int8 precision: as opposed to the more common "post-training quantization". Requiring their own customized int8 kernels for matrix multiplications and attention - with a future post on quantized training promised.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Claude 3.5 Sonnet Release by Anthropic

- Improved Performance: @AnthropicAI released Claude 3.5 Sonnet, outperforming competitor models on key evaluations at twice the speed of Claude 3 Opus and one-fifth the cost. It shows marked improvement in grasping nuance, humor, and complex instructions. @alexalbert__ noted it passed 64% of internal pull request test cases, compared to 38% for Claude 3 Opus.

- New Features: @AnthropicAI introduced Artifacts, allowing generation of docs, code, diagrams, graphics, and games that appear next to the chat for real-time iteration. @omarsar0 used it to visualize deep learning concepts.

- Coding Capabilities: In @alexalbert__'s demo, Claude 3.5 Sonnet autonomously fixed a pull request. @virattt highlighted the agentic coding evals, where it reads code, gets instructions, creates an action plan, implements changes, and is evaluated on tests.

LLM Architecture and Scaling Discussions

- Transformer Dominance: @KevinAFischer argued transformers will continue to scale and dominate, drawing parallels to silicon processors. He advised against working on alternative architectures in academia.

- Scaling and Overfitting: @SebastienBubeck discussed challenges in scaling to AGI, noting models may overfit capabilities at larger scales rather than discovering desired "operations". Smooth scaling trajectories are not guaranteed.

- Importance of Architecture: @aidan_clark emphasized the importance of architecture work to enable current progress, countering views that only scaling matters. @karpathy shared a 94-line autograd engine as the core of neural network training.

Retrieval, RAG, and Context Length

- Long-Context LLMs vs Retrieval: Google DeepMind's @kelvin_guu shared a paper analyzing long-context LLMs on retrieval and reasoning tasks. They rival retrieval and RAG systems without explicit training, but still struggle with compositional reasoning.

- Infini-Transformer for Unbounded Context: @rohanpaul_ai highlighted the Infini-Transformer, which enables unbounded context with bounded memory using a recurrent-based token mixer and GLU-based channel mixer.

- Improving RAG Systems: @jxnlco discussed strategies to improve RAG systems, focusing on data coverage and metadata/indexing capabilities to enhance search relevance and user satisfaction.

Benchmarks, Evals, and Safety

- Benchmark Saturation Concerns: Some expressed concerns about benchmarks becoming saturated or less useful, such as @polynoamial on GSM8K and @arohan on HumanEval for coding.

- Rigorous Pre-Release Testing: @andy_l_jones highlighted @AISafetyInst's testing of Claude 3.5 pre-release as a first for a government assessing a frontier model before release.

- Evals Enabling Fine-Tuning: @HamelHusain shared a slide from @emilsedgh on how evals set up for fine-tuning, creating a flywheel effect.

Multimodal Models and Vision

- Differing Multimodal Priorities: @_philschmid compared recent releases, noting OpenAI and DeepMind prioritized multimodality while Anthropic focused on improving text capabilities in Claude 3.5.

- 4M-21 Any-to-Any Model: @mervenoyann unpacked EPFL and Apple's 4M-21 model, a single any-to-any model for text-to-image, depth masks, and more.

- PixelProse Dataset for Instructions: @tomgoldsteincs introduced PixelProse, a 16M image dataset with dense captions for refactoring into instructions and QA pairs using LLMs.

Miscellaneous

- DeepSeek-Coder-V2 Browser Coding: @deepseek_ai showcased DeepSeek-Coder-V2's ability to develop mini-games and websites directly in the browser.

- Challenges Productionizing LLMs: @svpino noted companies pausing LLM efforts due to challenges in scaling past demos. However, @alexalbert__ shared that Anthropic engineers now use Claude to save hours on coding tasks.

- Mixture of Agents Beats GPT-4: @corbtt introduced a Mixture of Agents (MoA) model that beats GPT-4 while being 25x cheaper. It generates initial completions, reflects, and produces a final output.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

Claude 3.5 Sonnet Release

- Impressive performance: In /r/singularity, Claude 3.5 Sonnet was released by Anthropic and outperforms GPT-4o and other models on benchmarks like LiveBench and GPQA. It also solved 64% of agentic coding problems compared to 38% for Claude 3 Opus in an internal evaluation.

- Vision reasoning abilities: Claude 3.5 Sonnet outperforms GPT-4o on visual tasks, showcasing impressive vision reasoning abilities.

- UI enhancements: In addition to performance improvements, Claude 3.5 Sonnet comes with UI enhancements as noted in /r/LocalLLaMA.

- Promising writing partner: Early tests shared in a YouTube video suggest Claude 3.5 Sonnet shows promise as a writing partner.

OpenAI and Competition

- Desire for competition: In /r/OpenAI, some express a desire for OpenAI to have a model competitive with Claude 3.5 Sonnet to maintain competition and progress in the field.

- Criticism and distrust: OpenAI is facing criticism and distrust over issues like dismissing safety concerns, breaking compute commitments, and the delayed voice model.

Other AI Model Releases and Benchmarks

- Models from China: Over half of recent large language models are coming from China, as noted in /r/singularity.

- Aider benchmark: DeepSeek Coder V2 and Sonnet 3.5 top the Aider benchmark leaderboard, according to a post in /r/LocalLLaMA.

- Florence-2 vision model: Microsoft released Florence-2, a versatile open-source vision model that can handle multiple tasks in a unified way, as shared in /r/OpenAI.

Stable Diffusion and Image Generation

- Stable Diffusion 3 for cars: In /r/StableDiffusion, the Stable Diffusion 3 medium model is considered good at following prompts for generating cars and other subjects, though anatomy and consistency need work.

- Consistent scenes with SD XL: A Reddit video post demonstrates how combining Stable Diffusion XL with img2img and other techniques enables generating consistent scenes and characters.

Memes and Humor

- ChatGPT users leaving for Claude: A meme in /r/singularity jokes about ChatGPT users leaving for Claude after seeing the 3.5 Sonnet update.

AI Discord Recap

SPECIAL NOTE: As we do for fresh frontier model launches, we are displaying the differences in output from running the same prompts across Claude 3.5 and 3 and GPT4o.

Claude 3 Sonnet

1. LLM Advancements and Benchmarking

- Meta's Llama 3 has topped leaderboards like ChatbotArena, outperforming GPT-4-Turbo and Claude 3 Opus as mentioned in this Discord discussion.

- IBM's Granite-8B-Code-Instruct and DeepSeek's DeepSeek-V2 (236B params) were highlighted in this channel for code abilities.

- Skepticism was expressed in this research channel about certain benchmarks, calling for credible sources to set realistic standards.

2. Optimizing LLM Inference and Training

- ZeRO++ promising 4x faster GPU training was discussed in this channel.

- The vAttention paper on efficient KV-caching was mentioned here.

- QServe using W4A8KV4 quantization for GPU serving was brought up in this discussion.

- Techniques like Consistency LLMs exploring parallel decoding were mentioned in this channel.

3. Open-Source AI Frameworks and Community Efforts

- Andrew Ng's course on LlamaIndex for building agentic RAG systems was mentioned in this channel.

- The open-source RefuelLLM-2 was introduced in this discussion as a top model for "unsexy" tasks.

- Modular's Mojo and its potential for AI extensions were teased here.

4. Multimodal AI and Generative Modeling

- Idefics2 8B Chatty for chat and CodeGemma 1.1 7B for coding were discussed in this channel.

- Combining Pixart Sigma, SDXL and PAG to achieve DALLE-3 outputs was proposed in this generative AI discussion.

- The IC-Light open-source project on image relighting was shared in this channel.

Claude 3.5 Sonnet

1. AI Model Releases and Performance Comparisons

- New Models Claim Benchmark Victories: Nous Research's Hermes 2 Theta 70B and Turbcat 8b both claim to outperform larger models like Llama-3 Instruct on various benchmarks. Users across Discord channels discussed these releases, comparing their capabilities to established models like GPT-4 and Claude.

- Claude 3.5 Sonnet Generates Mixed Reactions: Discussions in multiple Discords highlighted Claude 3.5 Sonnet's improved Python coding abilities, but some users found it lacking in JavaScript tasks compared to GPT-4. The model's ability to handle obscure programming languages was noted in the Nous Research Discord.

- Code-Focused Models Gain Traction: The release of DeepSeek Coder v2 sparked conversations about specialized models for coding tasks, with claims of performance comparable to GPT4-Turbo in this domain.

2. AI Development Tools and Infrastructure Challenges

- LangChain Alternatives Sought: A blog post detailing Octomind's move away from LangChain resonated across multiple Discords, with developers discussing alternatives like Langgraph for AI agent development.

- Hardware Limitations Frustrate Developers: Discussions in the LM Studio and CUDA MODE Discords highlighted ongoing challenges with running advanced LLMs on consumer hardware. Users debated the merits of various GPUs, including NVIDIA's 4090 vs. the upcoming 5090, and explored workarounds for memory constraints.

- Groq's Whisper Performance Claims: Groq's announcement of running the Whisper model at 166x real-time speeds generated interest and skepticism across channels, with developers discussing potential applications and limitations.

3. Ethical Concerns in AI Industry Practices

- OpenAI's Government Collaboration Raises Questions: A tweet discussing OpenAI's early access provision to government entities sparked debates across multiple Discords about AI regulation and AGI safety strategies.

- Perplexity AI Faces Criticism: A CNBC interview criticizing Perplexity AI's practices led to discussions in various channels about ethical considerations in AI development and deployment.

- OpenAI's Public Relations Challenges: Members in multiple Discords, including Interconnects, discussed repeated PR missteps by OpenAI representatives, speculating on their implications for the company's public image and internal strategies.

Claude 3 Opus

1. Model Performance Optimization and Benchmarking

- [Quantization] techniques like AQLM and QuaRot aim to run large language models (LLMs) on individual GPUs while maintaining performance. Example: AQLM project with Llama-3-70b running on RTX3090.

- Efforts to boost transformer efficiency through methods like Dynamic Memory Compression (DMC), potentially improving throughput by up to 370% on H100 GPUs. Example: DMC paper by @p_nawrot.

- Discussions on optimizing CUDA operations like fusing element-wise operations, using Thrust library's

transformfor near-bandwidth-saturating performance. Example: Thrust documentation.

- Comparisons of model performance across benchmarks like AlignBench and MT-Bench, with DeepSeek-V2 surpassing GPT-4 in some areas. Example: DeepSeek-V2 announcement.

2. Fine-tuning Challenges and Prompt Engineering Strategies

- Difficulties in retaining fine-tuned data when converting Llama3 models to GGUF format, with a confirmed bug discussed.

- Importance of prompt design and usage of correct templates, including end-of-text tokens, for influencing model performance during fine-tuning and evaluation. Example: Axolotl prompters.py.

- Strategies for prompt engineering like splitting complex tasks into multiple prompts, investigating logit bias for more control. Example: OpenAI logit bias guide.

- Teaching LLMs to use

<RET>token for information retrieval when uncertain, improving performance on infrequent queries. Example: ArXiv paper.

3. Open-Source AI Developments and Collaborations

- Launch of StoryDiffusion, an open-source alternative to Sora with MIT license, though weights not released yet. Example: GitHub repo.

- Release of OpenDevin, an open-source autonomous AI engineer based on Devin by Cognition, with webinar and growing interest on GitHub.

- Calls for collaboration on open-source machine learning paper predicting IPO success, hosted at RicercaMente.

- Community efforts around LlamaIndex integration, with issues faced in Supabase Vectorstore and package imports after updates. Example: llama-hub documentation.

GPT4O (gpt-4o-2024-05-13)

-

AI Model Performance and Training Techniques:

- Gemini 1.5 excels with 1M tokens: Gemini 1.5 Pro impressed users by handling up to 1M tokens effectively, outperforming other models like Claude 3.5 and gaining positive feedback for long-context tasks. This model's ability to process extensive documents and transcripts was highlighted.

- FP8 Flash Attention and GPTFast speed up inference: Discussions around INT8/FP8 kernels in flash attention and the recently introduced GPTFast indicated significant boosts in HF model inference speeds by up to 9x. Notable mentions included an open-source FP8 flash attention addition, set to receive official CUDA support in 12.5.

- Null-shot prompting and DPO over RLHF: Community debates touched on the efficacy of null-shot prompting to exploit hallucinations in LLMs and the shift from Reinforcement Learning with Human Feedback (RLHF) to Direct Policy Optimization (DPO) for simplified training. Paper references included the concept's advantages in the LLMs' task performance.

-

AI Ethics and Accessibility:

- AI Ethics spark debate: A Nature article criticizing OpenAI's departure from open-source principles stirred discussions on AI transparency and accessibility. Concerns were raised over the increasing difficulty of accessing cutting-edge AI tools and code.

- Avoiding insincere AI apologies: Users voiced frustration with AI-generated apologies, calling them insincere and unnecessary. This sentiment reflected broader expectations for more authentic and practical AI interactions rather than automated expressions of regret.

- OpenAI and government collaboration concerns: Concerns mounted over OpenAI's early model access for government entities, highlighted in a tweet. The conversation pointed to potential regulatory implications and strategic shifts towards AGI safety.

-

Open-Source AI Developments and Community Contributions:

- Introducing Turbcat 8b: Announcements of the Turbcat 8b model included notable improvements like expanded datasets and added Chinese support. The model now boasts 5GB in data, with comparisons drawn against larger yet underdeveloped models.

- Axolotl and Backgammon AI Tool: Collaboration efforts highlighted the open-sourced Backgammon AI tool, which simulates scenarios in backgammon for strategic enhancements. Discussions also included the Turbcat model and its functionalities for multilingual processing.

- Dataset for computer vision from Stability.ai: Stability.ai released a dataset featuring 235,000 prompts and images from the Stable Diffusion community. This StableSemantics dataset aims to augment computer vision systems by providing extensive visual semantics data.

-

Hardware and Deployment Challenges:

- GPU usage challenges and optimizations: Engineers shared insights and solutions for optimizing GPU and CPU integrations in different setups, such as enabling the second GPU for LM Studio and discussing alternatives for running sophisticated models. Used 3090s were recommended for cost efficiency, anticipating performance comparisons between NVIDIA 4090 and 5090.

- TinyGrad's tangles with

clip_grad_norm_: Implementingclip_grad_norm_in TinyGrad faced bottlenecks due to Metal's buffer size limitations, suggesting division into 31-tensor chunks as a workaround. The comparison between Metal and CUDA highlighted performance differences, specifically for gradient clipping operations.

- Model deployment issues: Deployment challenges with models like Unsloth on platforms like Hugging Face created discussions around tokenizer compatibility and alternative deployment suggestions. Fine-tuning costs also varied dramatically between Together.ai and Unsloth's H100, raising questions about pricing errors.

-

Event Discussions and Professional Opportunities:

- Techstars and RecSys Virtual Meetups: Upcoming events like the Techstars Startup Weekend in SF from June 28-30 and the RecSys Learners Virtual Meetup on June 29 were highlighted as opportunities for AI professionals to network, learn, and present innovative ideas. Details and RSVP links were shared for participants' convenience.

- Job hunting and skill showcasing: Python AI Engineers actively sought job opportunities, emphasizing their skills in NLP and LLMs. Conversations also included insights into companies' support frameworks, like the Modal team's assistance with large models and developer preferences for Slack over Discord.

- Talks and announcements at AI events: LlamaIndex's founder Jerry Liu's talks at the World's Fair on the future of knowledge assistants were promoted, with mentions of forthcoming special announcements on Twitter.

These discussions provide a comprehensive glance at the innovative, ethical, and practical aspects actively shaping the AI community.

PART 1: High level Discord summaries

OpenAI Discord

- Gemini 1.5 Steps Up in Token Game: Gemini 1.5 Pro shines in handling long-context tasks with an impressive ability to process up to 1M tokens. Users appreciated its expertise on various topics when fed with documents and transcripts, though it comes with some access restrictions.

- AI Ethics Debate Heats Up: A Nature article prompted discussions on the implications of AI model openness, sparking critique of OpenAI's shift from open-source principles. The conversation pointed to broader concerns about the accessibility of AI tools and code.

- Finding Balance in Apologies: Several users voiced their annoyance with the frequency of AI's apologetic responses, deriding the insincerity of machines offering regret. This reflects a broader dissatisfaction with how AI personas handle errors.

- Mac Preference for AI Developers: A user advocating for MacBooks signifies a strong preference within the development community, highlighting ease-of-use in development environments. While Windows Surface laptops were mentioned as contenders for hardware and design, the development experience on Windows was implicitly criticized.

- Dall-E 3 Tackles Complex Imagery: Dall-E 3's capabilities were tested by users attempting to generate intricate images with specific attributes like asymmetry and precise placements, with mixed results. Notably, the OpenAI macOS app received praise for its integration with typical Mac workflows, suggesting a tool that aligns with the productivity preferences of AI professionals.

CUDA MODE Discord

- INT8/FP8 Shakes Up Performance: A resurgence in discussions on INT8/FP8 flash attention kernels was inspired by a HippoML blog post, though code is currently not released for public benchmarking or integration with torchao. Meanwhile, an open source FP8 addition to flash attention was noted, referencing the Cutlass Kernels GitHub and the pending Ada FP8 support in CUDA 12.5.

- GPTFast Paves the Way for Hasty Inference: The creation of GPTFast, a pip package boosting inference speeds for HF models by a factor of up to 9x, includes features like

torch.compile, key-value caching, and speculative decoding.

- GPU Optimization Nuggets Revealed: A discovery of old slides explaining GPU limitations, specifically about Llama13B's incompatibility with 4090 GPUs, encouraged further discussion on optimizing memory usage with LoRA. Additionally, a recent presentation offers strategies for managing GPU vRAM, benchmarking using torch-tune, and optimizing memory.

- Stabilizing the Sync and Dance of NCCL: Experimentation in the NCCL realm raised flags over synchronization issues, with a proposed shift away from MPI to filesystem-based sync as per the NCCL Communicators Guide. Opinions diverged on the practicality of CUDA's managed memory, balancing code cleanliness against performance, and the emergence of training instabilities prompted a look into research on loss spikes (Loss Spikes Paper).

- Intricacies in AI Benchmarking Techniques: The accuracy of processing time benchmarks was tackled with techniques such as

torch.cuda.synchronizeandtorch.utils.benchmark.Timer, and specific best practices were squinted at in the Triton's evaluation code, stressing the importance of L2 cache clearing prior to measurement.

Stability.ai (Stable Diffusion) Discord

- Community Reacts to Channel Cuts: Many members voiced frustration regarding the deletion of underused channels, especially since these were used as a source of inspiration and community interaction. While Fruit mentioned that less active channels tend to attract bot spam, some participants are still looking for a better understanding or a possible restoration of the archives.

- UI Preferences in Stable Diffusion: A lively debate surfaced on the efficiency and usability of ComfyUI relative to other interfaces such as A1111, with some users favoring node-based workflows while others prefer interfaces with traditional HTML-based inputs. No consensus was reached, but the discussion highlighted the diversity of preferences in the community with respect to UI design.

- New Dataset Release for Computer Vision: A new dataset with 235,000 prompts and images, derived from the Stable Diffusion Discord community, was announced, aimed at improving computer vision systems by providing insights into the semantics of visual scenes. The dataset is accessible at StableSemantics.

- Debate Over Channel Management: The decision to delete certain lower-activity channels sparked widespread debate, as users lost a valued resource for visual archives, and are seeking clarity on the criteria used for channel removal.

- Exploring Stable Diffusion Tools: Users shared experiences and resources related to different Stable Diffusion interfaces, including ComfyUI, Invoke, and Swarm, as well as providing guides to assist newcomers in navigating these tools. Conversation threads provided comparisons and personal preferences, aiding others in selecting suitable interfaces for their workflows.

Perplexity AI Discord

- AI Models' Exhibition Match: Interactions within the Discord guild showcased members comparing AI models like Opus, Sonnet 3.5, and GPT4-Turbo, with a spotlight on Sonnet 3.5 matching Opus's performance and discussing the usage of a Complexity extension for switching between these models efficiently.

- Model Access Quandary: Users debated on the actual availability of Claude 3.5 Sonnet across different devices and expressed concerns over usage limitations like the 50 uses per day cap on Opus, which is stifling users' willingness to engage with the model.

- Decoding Inference Hardware: Engineers dissected the possible hardware used for AI inference, speculating between TPUs and Nvidia GPUs, with a nod to AWS's Trainium for its machine learning training efficiency.

- Hermes 2 Theta Takes the Crown: Excitement was noted regarding the launch of Hermes 2 Theta 70B by Nous Research, surpassing competitor benchmarks and bolstering capabilities like function calling, feature extraction, and different output modes, drawing comparisons to GPT-4's proficiency.

- API Management Simplified: A brief guide was shared on managing API keys, directing users to Perplexity settings to generate or delete keys easily, though a query about limiting API searches to specific websites remained unanswered.

HuggingFace Discord

Fancy OCR Work, Florence!: Engineers discussed the unexpected superior OCR capabilities of the Florence-2-base model over its larger counterparts; the findings elicited curiosity and the need for further verification. Surprisingly, the sophisticated model struggled with the seemingly simpler task, indicating a need to measure model capabilities beyond mere scale.

Face-Plant at HuggingFace HQ: Users experienced interruptions with the Hugging Face website, encountering 504 errors and affecting their workflow continuity. This hicriticaluptcy in a critical development resource caused temporary setbacks for users depending on the platform's services.

Helping Hands for AI Projects: Open-source AI projects are seeking collaborative efforts: the Backgammon AI tool aims to simulate backgammon scenarios, while the Nijijourney dataset offers robust benchmarking despite access issues due to its local storage of images.

Play and Contribute: An innovative game, Milton is Trapped, was shared where the objective is to interact with a grumpy AI. Developers are encouraged to contribute to this playful AI endeavor via its GitHub repository.

Ethical Computing Crossroads: An engaging paper highlighting the compromising dialogue between fairness and environmental sustainability in NLP underlines the industry's delicate balancing act. It points out the necessity for a holistic view when advancing AI technologies, where an emphasis on one aspect can inadvertently impair another.

Unsloth AI (Daniel Han) Discord

- Token Troubles in Training: Engineers discussed an issue with EOS tokens in OpenChat 3.5, noting confusion between

<|end_of_turn|>and</s>during various stages of training and inference. For instance, "Unsloth uses<|end_of_turn|>while llama.cpp uses<|reserved_special_token_250|>as the PAD token."

- Price War: Unsloth vs. Together.ai: A price comparison revealed that fine-tuning on Together.ai may cost around $4,000 for 15 billion tokens, as shown by their interactive calculator. By contrast, using Unsloth's H100, the same task could be executed for under $15 in roughly 3 hours, triggering disbelief and speculations of a pricing error.

- Favoring Phi-3-mini Over Mistral: Comparing models, one engineer reported better consistency with phi-3-mini than Mistral 7b, using training datasets of 1k, 10k, and 50k examples, framing data within training as the only acceptable response.

- DPO Over RLHF for Simplified Training: Members considered abandoning Reinforcement Learning with Human Feedback (RLHF) for Direct Policy Optimization (DPO), supported by Unsloth, for being simpler and equally effective. One participant mentioned, "I think I will switch to DPO first." after learning more about its benefits.

- Deployment Woes with Hugging Face: Users shared difficulties deploying Unsloth-trained models on Hugging Face Inference endpoints due to tokenizer issues, which led to inquiries about alternative deployment platforms.

Nous Research AI Discord

- Hermes 2 Theta 70B Trumps the Giants: The newly announced Hermes 2 Theta 70B collaboration between Nous Research, Charles Goddard, and Arcee AI, claims performance that overshadows Llama-3 Instruct and even GPT-4. Key features include function calling, feature extraction, and JSON output modes, with an impressive 9.04 score on MT-Bench. More details are at Perplexity AI.

- Claude 3.5 Masters Obscurity: The latest from Claude 3.5 reveals an ability to tackle problems in self-created obscure programming languages, surpassing established problem-solving parameters.

- AI/Human Collaboration Insight: A shift is noticed, positioning AI usage from mere task execution towards forming a symbiotic working relationship with humans—a detailed thread is available at "Piloting an AI".

- Strategic Use of Model Hallucinations: An arXiv paper describes how null-shot prompting might smartly exploit large language models' (LLMs) hallucinations, outperforming zero-shot prompting in task achievement.

- Access Hermes 2 Theta 70B Now: Downloads for Hermes 2 Theta 70B - both in FP16 and quantized GGUF formats - are up for grabs on Hugging Face, ensuring wide accessibility for eager hands. Find the FP16 version and the quantized GGUF version ready for use.

LM Studio Discord

Squeeze More Power from Your GPUs: Engineers found setting the main_gpu value to 1 enables the second GPU for LM Studio on Windows 10 systems. A user was also successful in running vision models solely on CPU by disabling GPU acceleration and using OpenCL, despite slower performance.

Integrating Ollama Models Just Got Easier: For incorporating Ollama models into LM Studio, contributors are shifting from the llamalink project to the updated gollama project, though different presets and flash attention have been proposed to mitigate model gibberish issues.

Advanced Models Challenge Hardware Capabilities: Discussions revealed frustrations with running high-end LLMs on current hardware setups, even with 96GB of VRAM and 256GB of RAM. The community is also exploring used 3090s for cost efficiency and eagerly anticipates performance comparisons between NVIDIA's 4090 and the upcoming 5090.

Optimizing AI Workflows in the Face of Error: In the wake of usability challenges post-beta updates, engineers recommend leveraging nvidia-smi -1 to check for model loads into vRAM, and consider disabling GPU acceleration for stability in Docker environments.

Chroma and langchain Perfect Their Harmony: A BadRequestError with langchain and Chroma's integration was swiftly addressed with a fix in GitHub Issue #21318, proving the community's responsive problem-solving skills in maintaining seamless AI-operated workflows.

Modular (Mojo 🔥) Discord

Pixelated Meetings No More: Community members discussed resolution issues with community meetings streamed on YouTube, noting that while streams can reach 1440p, phone resolutions are often throttled to 360p, possibly due to internet speed restrictions.

MLIR Quest for 256-Bit Integers: In the quest for handling 256-bit operations for cryptography, one user attempted multiple MLIR dialects but faced hurdles, prompting them to consider internal advice, as syntactical support in MLIR or LLVM is not straightforward.

Kapa.ai Bot Glitches With Autocomplete: Users have been experiencing autocompletion inconsistencies with the Kapa.ai bot on Discord, suggesting that manual typing or dropdown selection might be more reliable until the erratic behavior is addressed.

Mojo's Winding Road to Exceptions: Conversations revealed pending implementation of exception handling in Mojo's standard library, with a roadmap document shedding light on future feature rollouts and current limitations (Mojo roadmap & sharp edges).

Navigating Nightly Build Turbulence: The nightly release of the Mojo compiler was disrupted due to branch protection rule changes, but a community member's commit to fix the compiler version mismatch helped stabilize the pipeline, leading to the successful roll-out of the new 2024.6.2115 release, as detailed in the changelog.

OpenRouter (Alex Atallah) Discord

- Stripe Smoothes Over Payment Snafu: OpenRouter announced a resolved Stripe payment issue that temporarily prevented credits from being added to users' accounts. The resolution was stated as being "Fixed fully, for all payments."

- Claude Calms Down After Outage: Anthropic’s inference engine, Claude, had a 30-minute outage resulting in a 502 error which has been fixed, confirmable via their status page.

- App Listing Advice on OpenRouter: An OpenRouter user wanting to list their app was advised to follow the OpenRouter Quick Start Guide, which requires specific headers for app ranking.

- Claude 3.5 Sonnet Stirs Up Excitement (and Debate): The release of Claude 3.5 Sonnet generated excitement for its improved Python abilities, while sparking debate about its JavaScript performance compared to GPT-4.

- Perplexity Labs Brings Nemetron 340b into Play: Perplexity Labs offers access to their Nemetron 340b model, boasting a response time of 23t/s—members are encouraged to try it out. A query was raised about VS2022 extensions for OpenRouter, with Continue.dev mentioned as a compatible tool.

Eleuther Discord

- Epoch AI's New Datahaven: Epoch AI unveiled an updated datahub housing data on over 800 models, spanning from 1950 to the present day, fostering responsible AI development with an open Creative Commons Attribution license.

- Clarifying Samba from the SambaNova Conundrum: In a wave of new models, distinction emerged between the Microsoft hybrid model termed "Samba," focusing on a mamba/attention blend, and the SambaNova systems, separate entities in the AI model realm.

- GoldFinch Paper Teaser: The anticipated release of the GoldFinch paper will introduce a RWKV hybrid model with a unique super-compressed kv cache and full attention mechanism. Concerns about stability in the training phase were acknowledged for the mamba variant.

- Rethinking Loss Functions: Fascinating debates surfaced around the idea of models internally generating representations of their loss functions during the forward pass, potentially improving performance on tasks requiring an understanding of global properties.

- NumPy Nostalgia Necessitated, Colab to the Rescue: Discussions addressed incompatibilities between NumPy 2.0.0 and modules compiled with 1.x versions, leading to recommendations to either downgrade numpy or update modules, while others found refuge in Colab for specific task executions.

tinygrad (George Hotz) Discord

GPT's Weight Tying Woes: Discussions have surfaced around the proper implementation of weight tying in GPT architectures, noting issues with conflicting methods that cause separate optimization pitfalls and timing out due to a weight initialization method that interferes with weight tying.

TinyGrad's Tangled clip_grad_norm_: Implementing clip_grad_norm_ in TinyGrad is generating performance bottlenecks, predominantly due to Metal's limitations in buffer sizes, suggesting a workaround of dividing the gradients into 31-tensor chunks for optimal efficiency.

Juxtaposing Metal and CUDA: A comparison between Metal and CUDA revealed Metal's inferior handling of tensor operations, specifically gradient clipping. Proposed solutions for Metal involve internal scheduler enhancements to better manage resource constraints.

AMD Device Timeouts in the Hot Seat: Users are experiencing timeouts with AMD devices when running examples like YOLOv3 and ResNet, pointing towards synchronization errors and potential overloads on integrated GPUs such as the Radeon RX Vega 7.

Developer Toolkit Spotlight: A Weights & Biases logging link was shared for insights into TinyGrad's ML performance, showcasing the utility of developer tools in tracking and optimizing machine learning experiments. W&B Log for TinyGrad

LAION Discord

- Caption Dropout Techniques Scrutinized: Engineers debated the effectiveness of using zeros versus encoded empty strings as caption dropout in SD3. No significant result changes were identified when encoded strings were used.

- Sakuga-42M Dataset Hits a Wall: The Sakuga-42M Dataset paper was unexpectedly withdrawn, thwarting advances in cartoon animation research. Specific reasons for the withdrawal remain unclear.

- OpenAI's Cozy Government Relations Raise Eyebrows: A tweet sparked discussions about OpenAI's early access to AI models for the government, prompting calls for increased regulatory measures and questions about strategy shifts towards AGI safety.

- MM-DIT Global Incoherency Alerts: As conversations about MM-DIT arose, concerns about the impact of increasing latent channels on global coherency were emphasized, noting specific issues like inconsistent representations of scenes.

- Dilemmas in Chameleon Model Optimization: While fine-tuning the Chameleon model, anomalously high gradient norms have been causing NaN values, resisting solutions like adjusting learning rates, applying grad norm clipping, or using weight decay.

Interconnects (Nathan Lambert) Discord

- Quick Releases Raise Eyebrows: Discussions arose about the rapid release of new versions, with members questioning whether improvements are due to posttraining or pretraining and the contribution that pod training may have in this process.

- PR Perils for OpenAI's Mira: Mira's repeated public relations mistakes drew criticism from community members, with some suggesting a lack to PR training while others see these errors as a strategy to draw attention away from OpenAI executives. The frustration was amplified by a Twitter incident and a shift by Nathan Lambert to using Claude.

- CNBC Rattles Perplexity's Cage: A CNBC interview cast Perplexity in a negative light, referencing a Wired article that criticized the company's practices. The YouTube video sparked a wave of criticism against Perplexity, with others, including Casey Newton, joining the fray by criticizing the company's founder.

- Search for Engagement: A brief post by ‘natolambert’ calling out for 'snail' suggests an ongoing conversation or a project that might require attention or input from the mentioned individual.

- Writing Ideas Circulate: An interest in developing written work focusing on recent tech topics was noted – a plan that may involve collaborative efforts to deepen understanding.

OpenInterpreter Discord

- Open Interpreter Boasts Cross-Platform Functionality: A demo revealed Open Interpreter working on both Windows and Linux, leveraging gpt4o via Azure, with further enhancements on the horizon.

- Praise for Claude 3.5 Sonnet: An individual highlighted the superior communicative abilities and code quality of Claude 3.5 Sonnet, favoring it over GPT-4 and GPT-4o due to usability.

- Guided Windows Installation for Open Interpreter: The setup documentation for Open Interpreter clarifies the installation process on Windows, which includes

pipinstallations and configuring optional dependencies.

- Introducing DeepSeek Coder v2: The announcement of DeepSeek Coder v2 indicated a robust model focused on responsible and ethical use, potentially on par with GPT4-Turbo for code-specific tasks.

- Open Interpreter's macOS Favoritism: Discussions pointed out that Open Interpreter tends to perform better on macOS, attributed to the core team's extensive testing efforts on that platform.

- AI's Sticky Situation Overcome: A user showcased a tweet representing a fully local, computer-controlling AI's capability to connect to WiFi by reading the password from a sticky note—a tangible leap in practical AI utility.

LLM Finetuning (Hamel + Dan) Discord

- Abandoning Abstractions: The Octomind team detailed in a blog post their departure from using LangChain for building AI agents, citing its rigid structures and difficulty in maintenance, while some members suggested Langgraph as a viable alternative.

- Cold Shoulder to LangChain: Discontent with LangChain was echoed, as it's said to present challenges in debugging and complexity.

- Modal Mavens Amass Acclaim: Members commended the Modal team's support with the BLOOM 176B model, thumbs up for their helpful approach, but one user expressed a preference for Slack over Discord.

- Praise for Eval Framework: A user heralded the eval framework as outstanding due to its ease of use and flexibility with custom enterprise endpoints, emphasizing excellent developer experience with its intuitive API design.

- Credit Confusion and Check-ins: Users are seeking assistance on unlocking credit systems and verifying email registrations, one specific email mention being "alexey.zaytsev@gmail.com".

LangChain AI Discord

Meet AI Innovators at Techstars SF: Engineers interested in startup development should consider attending the Techstars Startup Weekend from June 28-30 in San Francisco; keynotes and mentorships from industry leaders are on the roster. More about the event can be found here.

Reflexion Tutorial Confounds with Complexity: Concerns were raised about the use of PydanticToolsParser over simpler loops in the Reflexion tutorial, questioning the implications of validation failures — the tutorial can be referenced here.

AI Engineering Talent on the Market: An experienced AI engineer with proficiency in LangChain, OpenAI, and multi-modal LLMs is currently seeking full-time opportunities within the industry.

Streaming Headaches with LangChain: Difficulty streaming LangChain's/LangGraph's messages with Flask to a React application has prompted a user to seek community assistance, but a solution remains elusive.

Innovations and Interactions in AI: Two notable contributions include an article on Retrieval Augmentation with MLX, available here, and the introduction of 'Mark', a CLI tool enhancing the use of markdown with GPT models detailed here.

OpenAccess AI Collective (axolotl) Discord

- Bigger and Multilingual: Turbcat 8b Makes Waves: The Turbcat 8b model, which features a dataset increase from 2GB to 5GB and added Chinese support, was introduced, sparking interest and public access for users.

- Uncertain GPU Compatibility Sparks Discussion: Queries about the AMD Mi300x GPU's compatibility with Axolotl's platform remain unanswered; a user suggested awaiting release notes or updates for PyTorch 2.4 to confirm support.

- Model Superiority Debated: Comparisons between Turbcat 8b and an upcoming 72B model have begun, with users highlighting Turbcat's dataset size but recognizing the potential of the larger model still under development.

- Training Time Pay Attention to the Clock: While a simple formula was proposed to estimate training time for models, practitioners stressed the importance of real world runs to gather accurate estimates, accounting for epochs and buffer time for evaluation.

- Message Relocation - Less is More: A lone message in the datasets channel simply redirected users to the general-help channel, emphasizing the organization and focus on streamlined conversation within the community.

Cohere Discord

- API Role Confusion Resolved: Discussion in the guild clarified that Cohere's current API lacks support for OpenAI-style roles like "user" or "assistant"; however, a future API update is expected to introduce these features to facilitate integration.

- Incongruent APIs Challenge Developers: There is a debate about the incompatibility issues between Cohere's Chat API and OpenAI's ChatCompletion API, with concerns that differing API standards are obstructing seamless service integration among AI models.

- Model-Swapping Skepticism: Members are worried about services such as OpenRouter, which may substitute cheaper models instead of the requested ones, and they recommend using direct prompt inspection and comparisons to ensure model accuracy.

- Best Practices for Resource Utilization: An insightful blog post shared by a member encourages the practice of utilizing available resources rather than hoarding them, drawing an enlightening parallel between in-game item management and the real-world promotion of projects or asking for help.

LlamaIndex Discord

- Look Who's Talking at the World's Fair: LlamaIndex founder Jerry Liu will present at the World's Fair on the Future of Knowledge Assistants, with some special announcements scheduled for June 26th at 4:53 PM, followed by another talk on June 27th.

- Job Hunting with Python Prowess: A Python AI engineering pro, skilled in AI-driven applications and large language models (LLMs), is on the job hunt, boasting proficiency in NLP, Transformers, PyTorch, and TensorFlow.

- Graph Embedding Queries Spark Interest: Queries were raised regarding embedding generation for Neo4jGraphStore, emphasizing the integration of embeddings in an existing graph structure without initial LLMs usage.

- LlamaIndex Extension Quest: Users explored how to extend LlamaIndex with practical functionalities such as emailing, Jira integrations, and calendar events, with a nod towards using custom agents documentation for guidance.

- NER Needs and Model Merging Musings: Ollama and LLMs were discussed for Named Entity Recognition (NER) tasks, with one member suggesting a shift to Gliner; additionally, a novel "cursed model merging" technique involving UltraChat and Mistral-Yarn was debated.

Latent Space Discord

- Swyx Pinpoints AI's Next Frontier: Shawn "swyx" Wang delves into new opportunities for AI in software development, emphasizing upcoming use cases in an article, and spotlights the AI Engineer World’s Fair as a key event for industry professionals.

- Groq Takes on Real-time Whispering: Groq asserts their platform can execute the Whisper model at 166x real-time, sparking discourse on its implications for high-efficiency podcast transcription and potential challenges with rate limits.

- Tuned In: Seeking Music-to-Text AI: A discussion emerged about AI systems capable of translating music into textual descriptions that detail genre, key, and tempo, highlighting a gap in the market for services that go beyond lyric generation.

- Mixing It Up with MoA: The Mixture of Agents (MoA) model is unveiled, boasting costs 25x less than GPT-4 yet receiving a higher preference rate of 59% in human comparison tests; it also sets new benchmark records as tweeted by Kyle Corbitt.

AI Stack Devs (Yoko Li) Discord

Spam Strikes in AI Town: Community members have reported a user, <@937822421677912165>, for multiple spam incidents across different channels, prompting calls for moderator intervention. The situation escalated as members expressed frustration, with one stating "wtf what's wrong with u" and encouraging others to report the behavior to Discord.

MLOps @Chipro Discord

- Catch the RecSys Wave: A RecSys Learners Virtual Meetup is calling for attendees for its event on 06/29/2024, slated to begin at 7 AM PST, offering an opportunity for affinity groups in Recommendation Systems to converge. Engineers and AI aficionados can RSVP for the meetup which promises a blend of exciting and informational sessions.

- AI Quality Conference Curiosity: Queries were raised about attendance for the upcoming AI Quality Conference scheduled in San Francisco next Tuesday, with a member seeking additional details about the conference's offerings and themes.

Torchtune Discord

- Debating Data Structures in PyTorch: A member sought insights on whether to use PyTorch's TensorDict or NestedTensor for handling multiple data inputs. The consensus highlighted the efficiency of these structures in streamlining operations by avoiding repetitive code for data type conversions and device handling, and simplifying broadcasting over batch dimensions.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Datasette - LLM (@SimonW) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!