[AINews] Ring Attention for >1M Context

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

AI Discords for 2/21/2024. We checked 20 guilds, 317 channels, and 8751 messages for you. Estimated reading time saved (at 200wpm): 796 minutes.

UPDATE FOR YESTERDAY: sorry for the blank email - someone posted a naughty link in the langchain discord that caused the buttondown rendering process to error out. We've fixed it so you can see yesterday's Google Gemini recap here.

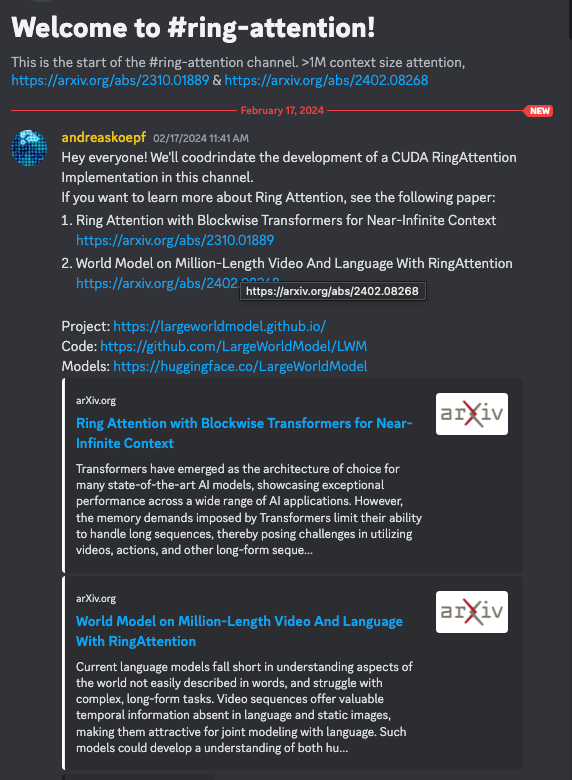

Gemini Pro has woken everyone up to the benefits of long context. The CUDA MODE Discord has started a project to implement the RingAttention paper (Liu, Zaharia, and Abbeel), as extended by the World Model RingAttention paper.

The paper of course came with a pytorch impl and lucidrains also has a take. But you can see the CUDA impl here: https://github.com/cuda-mode/ring-attention
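
For readers new to the idea, here is a minimal single-process sketch of the ring pattern. It is not taken from the paper's code or the CUDA MODE repo. It assumes one attention head, no causal mask, and plain Python lists, and the names `ring_attention`, `full_attention`, and `n_hosts` are made up for illustration. Each simulated host keeps its own query block, sees one key/value block per step as blocks rotate around the ring, and merges partial results with a running (online) softmax.

```python
import math
import random


def full_attention(q, k, v):
    """Reference: softmax(q k^T / sqrt(d)) v over the whole sequence."""
    d = len(q[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) / total
                    for c in range(len(v[0]))])
    return out


def ring_attention(q, k, v, n_hosts):
    """Simulate ring attention in one process (non-causal, single head).

    Host h owns query block h. At step s it holds key/value block
    (h - s) % n_hosts, as if every host passed its block to the next one.
    """
    n, d, dv = len(q), len(q[0]), len(v[0])
    assert n % n_hosts == 0, "sequence length must divide evenly across hosts"
    blk = n // n_hosts
    kv_blocks = [(k[i * blk:(i + 1) * blk], v[i * blk:(i + 1) * blk])
                 for i in range(n_hosts)]
    # Running softmax state per query row: max score, denominator, numerator.
    run_max = [-math.inf] * n
    run_den = [0.0] * n
    run_num = [[0.0] * dv for _ in range(n)]

    for step in range(n_hosts):
        for host in range(n_hosts):
            k_blk, v_blk = kv_blocks[(host - step) % n_hosts]
            for row in range(host * blk, (host + 1) * blk):
                scores = [sum(a * b for a, b in zip(q[row], kj)) / math.sqrt(d)
                          for kj in k_blk]
                new_max = max(run_max[row], max(scores))
                # Rescale what was accumulated under the previous maximum.
                rescale = math.exp(run_max[row] - new_max)
                weights = [math.exp(s - new_max) for s in scores]
                run_den[row] = run_den[row] * rescale + sum(weights)
                run_num[row] = [
                    acc * rescale + sum(w * vj[c] for w, vj in zip(weights, v_blk))
                    for c, acc in enumerate(run_num[row])
                ]
                run_max[row] = new_max
    return [[x / run_den[row] for x in run_num[row]] for row in range(n)]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
q, k, v = (random_matrix(8, 4, rng) for _ in range(3))
max_diff = max(
    abs(a - b)
    for ra, rb in zip(ring_attention(q, k, v, n_hosts=4), full_attention(q, k, v))
    for a, b in zip(ra, rb)
)
print(f"max difference vs. full attention: {max_diff:.2e}")
```

The point of the pattern is that no host ever holds more than one key/value block at a time, so memory per host scales with the block size rather than the full sequence. A real implementation would overlap the block transfer with compute, which this simulation does not attempt.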

Table of Contents

- PART 1: High level Discord summaries

- TheBloke Discord Summary

- LM Studio Discord Summary

- Nous Research AI Discord Summary

- Eleuther Discord Summary

- LAION Discord Summary

- Mistral Discord Summary

- OpenAI Discord Summary

- HuggingFace Discord Summary

- Latent Space Discord Summary

- LlamaIndex Discord Summary

- OpenAccess AI Collective (axolotl) Discord Summary

- CUDA MODE Discord Summary

- Perplexity AI Discord Summary

- LangChain AI Discord Summary

- DiscoResearch Discord Summary

- Skunkworks AI Discord Summary

- Datasette - LLM (@SimonW) Discord Summary

- Alignment Lab AI Discord Summary

- LLM Perf Enthusiasts AI Discord Summary

- AI Engineer Foundation Discord Summary

- PART 2: Detailed by-Channel summaries and links

- TheBloke ▷ #general (1132 messages🔥🔥🔥):

- TheBloke ▷ #characters-roleplay-stories (299 messages🔥🔥):

- TheBloke ▷ #training-and-fine-tuning (3 messages):

- TheBloke ▷ #coding (163 messages🔥🔥):

- LM Studio ▷ #💬-general (598 messages🔥🔥🔥):

- LM Studio ▷ #🤖-models-discussion-chat (149 messages🔥🔥):

- LM Studio ▷ #announcements (4 messages):

- LM Studio ▷ #🧠-feedback (30 messages🔥):

- LM Studio ▷ #🎛-hardware-discussion (130 messages🔥🔥):

- LM Studio ▷ #🧪-beta-releases-chat (266 messages🔥🔥):

- Nous Research AI ▷ #ctx-length-research (97 messages🔥🔥):

- Nous Research AI ▷ #off-topic (16 messages🔥):

- Nous Research AI ▷ #interesting-links (38 messages🔥):

- Nous Research AI ▷ #general (419 messages🔥🔥🔥):

- Nous Research AI ▷ #ask-about-llms (9 messages🔥):

- Nous Research AI ▷ #collective-cognition (3 messages):

- Nous Research AI ▷ #project-obsidian (3 messages):

- Eleuther ▷ #general (101 messages🔥🔥):

- Eleuther ▷ #research (305 messages🔥🔥):

- Eleuther ▷ #interpretability-general (43 messages🔥):

- Eleuther ▷ #lm-thunderdome (64 messages🔥🔥):

- Eleuther ▷ #multimodal-general (6 messages):

- Eleuther ▷ #gpt-neox-dev (1 messages):

- LAION ▷ #general (346 messages🔥🔥):

- LAION ▷ #research (65 messages🔥🔥):

- LAION ▷ #paper-discussion (1 messages):

- Mistral ▷ #general (296 messages🔥🔥):

- Mistral ▷ #models (20 messages🔥):

- Mistral ▷ #deployment (54 messages🔥):

- Mistral ▷ #finetuning (7 messages):

- Mistral ▷ #showcase (13 messages🔥):

- Mistral ▷ #la-plateforme (12 messages🔥):

- OpenAI ▷ #ai-discussions (57 messages🔥🔥):

- OpenAI ▷ #gpt-4-discussions (51 messages🔥):

- OpenAI ▷ #prompt-engineering (91 messages🔥🔥):

- OpenAI ▷ #api-discussions (91 messages🔥🔥):

- HuggingFace ▷ #general (186 messages🔥🔥):

- HuggingFace ▷ #today-im-learning (7 messages):

- HuggingFace ▷ #cool-finds (8 messages🔥):

- HuggingFace ▷ #i-made-this (22 messages🔥):

- HuggingFace ▷ #diffusion-discussions (10 messages🔥):

- HuggingFace ▷ #computer-vision (1 messages):

- HuggingFace ▷ #NLP (36 messages🔥):

- Latent Space ▷ #ai-general-chat (78 messages🔥🔥):

- Latent Space ▷ #ai-announcements (3 messages):

- Latent Space ▷ #llm-paper-club-west (173 messages🔥🔥):

- LlamaIndex ▷ #blog (3 messages):

- LlamaIndex ▷ #general (246 messages🔥🔥):

- LlamaIndex ▷ #ai-discussion (3 messages):

- OpenAccess AI Collective (axolotl) ▷ #general (149 messages🔥🔥):

- OpenAccess AI Collective (axolotl) ▷ #axolotl-dev (26 messages🔥):

- OpenAccess AI Collective (axolotl) ▷ #general-help (51 messages🔥):

- OpenAccess AI Collective (axolotl) ▷ #community-showcase (1 messages):

- OpenAccess AI Collective (axolotl) ▷ #runpod-help (6 messages):

- CUDA MODE ▷ #general (2 messages):

- CUDA MODE ▷ #triton (3 messages):

- CUDA MODE ▷ #cuda (18 messages🔥):

- CUDA MODE ▷ #torch (5 messages):

- CUDA MODE ▷ #suggestions (1 messages):

- CUDA MODE ▷ #jobs (1 messages):

- CUDA MODE ▷ #beginner (12 messages🔥):

- CUDA MODE ▷ #youtube-recordings (1 messages):

- CUDA MODE ▷ #jax (11 messages🔥):

- CUDA MODE ▷ #ring-attention (39 messages🔥):

- Perplexity AI ▷ #general (58 messages🔥🔥):

- Perplexity AI ▷ #sharing (3 messages):

- Perplexity AI ▷ #pplx-api (20 messages🔥):

- LangChain AI ▷ #general (38 messages🔥):

- LangChain AI ▷ #langserve (1 messages):

- LangChain AI ▷ #share-your-work (3 messages):

- LangChain AI ▷ #tutorials (1 messages):

- DiscoResearch ▷ #general (29 messages🔥):

- DiscoResearch ▷ #benchmark_dev (1 messages):

- Skunkworks AI ▷ #general (1 messages):

- Skunkworks AI ▷ #off-topic (4 messages):

- Skunkworks AI ▷ #papers (1 messages):

- Datasette - LLM (@SimonW) ▷ #ai (2 messages):

- Datasette - LLM (@SimonW) ▷ #llm (4 messages):

- Alignment Lab AI ▷ #general-chat (1 messages):

- Alignment Lab AI ▷ #oo (2 messages):

- LLM Perf Enthusiasts AI ▷ #general (1 messages):

- LLM Perf Enthusiasts AI ▷ #opensource (1 messages):

- LLM Perf Enthusiasts AI ▷ #embeddings (1 messages):

- AI Engineer Foundation ▷ #events (3 messages):

PART 1: High level Discord summaries

TheBloke Discord Summary

LLM Guessing Game Evaluation: Experiments with language models demonstrated their potential in understanding instructions, specifically for interactive guessing games where accurate number selection and user engagement are key.

UX Battleground: Chatbots: A heated debate around chatbot interfaces contrasted Nvidia's cumbersome Chat with RTX with the nimble Polymind, underscoring the importance of user-friendly configuration.

RAG's Rigorous Implementation Road: Retrieval and generation feature integration sparked discussion, with attention on the complexity of incorporating such features cleanly and effectively into projects.

Discord Bots CSS Woes: Frustration was aired over CSS challenges when customizing Discord bots, highlighting the struggle for seamless integration between UI design and bot functionality.

VRAM: The Unseen Compute Currency: With an iron focus on resource optimization, the discourse centered on harmonizing VRAM capacity with model demands, emphasizing the balance between performance and computational overhead.

Character Roleplay Fine-tuning Finesse: Users like @superking__ and @netrve shared insights into the art of fine-tuning AI for character roleplay, with strategies revolving around comprehensive base knowledge and targeted training through Direct Preference Optimization (DPO).

AI Story and Role-Play Enthusiasm: The release of new models targeted at story-writing and role-playing, trained on human-generated content for improved, steerable interactions in ChatML, has sparked keen interest for real-world testing.

Code Classification Conundrum: A quest for the ideal LLM to classify code relevance within a RAG pipeline led to the contemplation of deepseek-coder-6.7B-instruct, as community members seek further guidance.

Mistral Model Download Drought: An unelaborated request for local Mistral accessibility surfaces, but with too little information for constructive community support.

Workflow Woes on Mac Studio: The ML workflow struggle on Mac Studio was articulated, including a potential switch from ollama to llama.cpp, praising its simplicity and questioning the industry's push towards ollama.

VSCode Dethroned by Zed: Users like @dirtytigerx promote Zed as superior to Visual Studio Code, highlighting its minimal design and speed. Pulsar, an open-source text editor based on Atom, also drew interest.

Scaling Inference with Tactical GPU Deployment: Cost-effective approaches to scaling inference servers are discussed, suggesting initial prototyping with affordable GPUs like the 4090 on runpod before full-scale deployment, mindful of the dependability of service agreements with cloud providers.

LM Studio Discord Summary

- LM Studio Updates Demand Manual Attention: Users must manually download the latest features and bug fixes from LM Studio v0.2.16 as the in-app update feature is currently non-functional. The updates include Gemma model support, improved download management, and UI enhancements, with critical bugs addressed in v0.2.16, especially for MacOS users experiencing high CPU usage.

- Community Tackles Gemma Glitches: Ongoing discussions reveal the Gemma 7B model has been problematic, with performance issues and errors; however, the Gemma 2B model received positive feedback. The Gemma 7B on M1 Macs showed improvements after GPU slider adjustments. A working Gemma 2B model is available on Hugging Face.

- Stable Diffusion 3 Sparks Interest: Stability.ai announced the early preview of Stable Diffusion 3, sparking discussions among users interested in its improved multi-subject image quality. Enthusiasts consider signing up for the preview and discuss web UI tools like AUTOMATIC1111 for image manipulation tasks separate from LM Studio's focus.

- Hardware Hurdles for Large Models Explored: The community delves into the challenges of running large models like Goliath 120B Q6, exchanging insights on the viability of older GPUs like the Tesla P40, and debating the balance between VRAM capacity and GPU performance for AI tasks.

- Gemma Model Troubleshooting Continues: Users experience mixed success with different quantizations of Gemma, with the 7B model frequently producing gibberish, while the 2B model performs more reliably. LM Studio downloads have faced critical issues, with suggestions to resolve them on LM Studio's website and GitHub. A stable quantized Gemma 2B model confirmed for LM Studio can be found at this Hugging Face link.

Nous Research AI Discord Summary

Scaling LLMs to New Heights: @gabriel_syme highlighted a repository focused on data engineering for scaling language models to 128K context, a significant advancement in the field. The VRAM requirements for such models at 7B scale exceed 600GB, a substantial demand for resources as noted by @teknium.

Google Enters the LLM Arena: Google introduced Gemma, a series of lightweight, open-source models, with enthusiastic coverage from @sundarpichai and mixed community feedback comparing Gemma with existing models like Mistral and LLaMA. Users @big_ol_tender and @mihai4256 engaged in various discussions, from the impact of instruction placement to VM performance across different services.

Open Source Development and Support: @pradeep1148 shared a video suggesting self-reflection could improve RAG models, and @blackblize sought guidance on using AI for artistic image generation with microscope photos. Meanwhile, @afterhoursbilly and @_3sphere critiqued AI-generated imagery of Minecraft's inventory UI.

Emerging AI Infrastructure Discussions: Conversations on Nous-Hermes-2-Mistral-7B-DPO-GGUF raised questions about how it compares to other models, and @iamcoming5084 talked about out-of-memory errors with Mixtral 8x7b models. Strategies for hosting large models like Mixtral 8x7b were also examined, with users debating different tools and pointing out errors in inference code (corrected inference code for Nous-Hermes-2-Mistral-7B-DPO).

Collaborative Project Challenges: In #project-obsidian, @qnguyen3 notified of project delays due to personal circumstances and suggested direct messaging for coordination on the project front.

Eleuther Discord Summary

- Clarifying Model Evaluation and lm eval Confusion: @lee0099's confusion over lm eval being set up for runpod led @hailey_schoelkopf to clarify the difference between lm eval and llm-autoeval, referencing the Open LLM Leaderboard's HF spaces page for instructions and parameters. No clear consensus formed on @gaindrew's proposal to rank models by net carbon emissions, since such figures are hard to measure accurately.

- Gemma's Growing Pains and Technical Teething: The introduction of Google's Gemma by @sundarpichai stirred debate on whether it improves on models like Mistral. Parameter count misrepresentation in Gemma models was highlighted ("gemma-7b" actually has 8.5 billion parameters). Groq claims 4x throughput on Mistral's Mixtral 8x7b model with a substantial cost reduction. Concerns about the environmental footprint of models and @philpax's report on Groq's claims were discussed, alongside researchers delving into model efficiency and PGI's use in addressing data loss.

- Navigating Through Multilingual Model Mysteries: A Twitter post and companion GitHub repository spurred a debate on whether models "think in English" and on the utility of a tuned lens for models like Llama. @mrgonao's discussion of multilingual capabilities led to consideration of creating a Chinese lens.

- Technical Deep Dive in LM Thunderdome: Amid a range of memory issues, @pminervini faced persistent GPU memory occupation after an OOM error in Colab, requiring a runtime restart; the problem was reproduced in Colab's Evaluate OOM Issue environment. Problems were also reported with evaluating the Gemma-7b model, for which @hailey_schoelkopf provided a fix and optimization tips using flash_attention_2.

- Tackling False Negatives and Advancing CLIP: In multimodal conversations, @tz6352 and @_.hrafn._ discussed the in-batch false negative issue in the CLIP model, elaborating on solutions that use unimodal embeddings and on excluding negatives based on similarity scores during training.

- The Importance of Pre-training Sequence Composition: The only message in the gpt-neox-dev channel came from @pminervini, who shared an arXiv paper on the benefits of intra-document causal masking, which eliminates distracting content from previous documents and can improve language model performance across various tasks.
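
To make the intra-document causal masking idea concrete, here is a small sketch. It is not the paper's code. It assumes each token in a packed training sequence carries a document id, and the function name and the `doc_ids` layout are made up for illustration. A token may attend to an earlier token only when both come from the same document.

```python
def intra_document_causal_mask(doc_ids):
    """Return mask[i][j], True when token i may attend to token j:
    j is not in the future and belongs to the same document as i."""
    n = len(doc_ids)
    return [[j <= i and doc_ids[i] == doc_ids[j] for j in range(n)]
            for i in range(n)]


# Two documents packed into one sequence: 3 tokens, then 2 tokens.
doc_ids = [0, 0, 0, 1, 1]
mask = intra_document_causal_mask(doc_ids)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

With plain causal masking the last two rows would also cover the first three columns. Here they do not, so the second document never sees the first.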

LAION Discord Summary

- Google Unveils Gemma Model, Steers Towards Open AI: Google has introduced Gemma, representing a step forward from its Gemini models and suggesting a shift towards more open AI development. There is community interest in Google's motivations behind releasing actual open-sourced weights, given their traditional reluctance to do so.

- Stable Diffusion 3 Interests and Concerns: The early preview of Stable Diffusion 3 has been announced, focusing on improved multi-subject prompt handling and image quality, but its differentiation from earlier versions is under scrutiny. Questions have also arisen regarding the commercial utilization of SD3 and whether open-sourcing serves more as a publicity tactic than a revenue strategy.

- AI Sector Centralization Raises Eyebrows: Discussions reflect growing concerns over the centralization of AI development and resources, such as Stable Diffusion 3 being less open, which potentially moves computing power out of reach for end-users.

- Diffusion Models as Neural Network Creators: An Arxiv paper shares insights on how diffusion models can be used to generate efficient neural network parameters, indicating a fresh and possibly transformative method for crafting new models.

- AnyGPT: The Dawn of a Unified Multimodal LLM: The introduction of AnyGPT, with a demo available on YouTube, spotlighted the capability of large language models (LLMs) to process diverse data types such as speech, text, images, and music.

Mistral Discord Summary

- Mistral's Image Text Extraction Capabilities Scrutinized: Mistral AI is questioned on its ability to retrieve text from complex images. gpt4-vision, gemini-vision, and blip2 were recommended over simpler tools like copyfish and google lens for tasks requiring higher flexibility.

- Mistral API and Fine-tuning Explored: Users exchanged information on various Mistral models including guidance for the Mistral API, fine-tuning Mistral 7B and Mistral 8x7b models, and deploying models on platforms like Hugging Face and Vertex AI. The Basic RAG guide was cited for integrating company data (Basic RAG | Mistral AI).

- Deployment Discussions Highlight Concerns and Cost Assessment: Queries about AWS hosting costs and proper GPU selection for vLLM sparked discussions on deployment options. Documentation was referenced for deploying vLLM (vLLM | Mistral AI).

- Anticipation for Unreleased Mistral Next: Mistral-Next has been confirmed as an upcoming model with no API access at present, while Mistral Next's superb math performance drew comparisons with GPT-4. Details are anticipated but not yet released.

- Showcasing Mistral's Versatility and Potential: A YouTube video showcased enhancing RAG with self-reflection (Self RAG using LangGraph), while another discussed fine-tuning benefits (BitDelta: Your Fine-Tune May Only Be Worth One Bit). Jay9265's test of Mistral-Next on Twitch (Twitch) and prompting capabilities guidance (Prompting Capabilities | Mistral AI) were also featured to highlight Mistral's capabilities and uses.

OpenAI Discord Summary

- Google's AI Continues to Evolve: Google has unveiled a new model with updated features; however, details regarding its name and capabilities were not fully specified. In relation to OpenAI, ChatGPT's mobile version lacks plugin support, as confirmed in the discussions, leading users to try the desktop version on mobile browsers for a full feature set.

- OpenAI Defines GPT-4 Access Limits: Debates occurred regarding GPT-4's usage cap, with members clarifying that the cap is dynamically adjusted based on demand and compute availability. Evidently, there is no reduction in GPT-4's model performance since its launch, putting to rest any circulating rumors about its purported diminishing power.

- Stability and Diversity in AI Models: Stability.ai made news with their early preview of Stable Diffusion 3, promising enhancements in image quality and prompt handling, while discussions around Google's Gemini model raised questions about its approach to diversity.

- Prompt Engineering Mastery: For AI engineers aiming to improve their AI's roleplaying capabilities, the key is crafting prompts with clear, specific, and logically consistent instructions, using open variables and a positive reinforcement approach. Resources for further learning in this domain can be found on platforms like arXiv and Hugging Face.

- Navigating API and Model Capabilities: API interactions operate on a pay-as-you-go basis, separate from any Plus subscriptions, and there's a newly increased file upload limit of twenty 512MB files. Discussions also touched on the nuances of training models with HTML/CSS files, aiding engineers to refine GPT's understanding and output of web development languages.

HuggingFace Discord Summary

- 404 Account Mystery and Diffusion Model Deep Dive: Users reported various issues with HuggingFace, such as an account yielding a 404 error, potentially due to inflating library statistics, and challenges configuring the huggingface-vscode extension on NixOS. Members also went deep on diffusion models like SDXL using a Fourier transform to enhance microconditioning inputs, with interest as well in interlingua-based translators for university projects and in running the BART-large-mnli model with expanded classes.

- Engineering AI's Practicalities:

  - A user shared a web app for managing investment portfolios, accompanied by a Kaggle Notebook.

  - A multi-label image classification tutorial notebook using SigLIP was introduced.

  - A TensorFlow issue was resolved by reinstalling with version 2.15.

  - Sentence similarity challenges in biomedicine were addressed, with contrastive learning and tools like sentence transformers and setfit recommended for fine-tuning.

- Challenging AI Paradigms:

  - A problem with PEFT not saving the correct heads for models without auto configuration was discussed, and a new approach using the Reformer architecture for memory-efficient models on edge devices was cited.

  - Discussions around model benchmarking efforts included a shared leaderboard and repository link, inviting contributions and insights.

- Emerging AI Technologies Alerted:

  - An Android app for monocular depth estimation and an unofficial ChatGPT API using Selenium were presented, raising TOS and protection evasion concerns.

  - Announcements included Stable Diffusion 3's early preview and excitement for nanotron going open-source on GitHub, signifying continuous improvement and community efforts in the AI space.

Latent Space Discord Summary

- Google Unveils Gemma Language Models: Google introduced a new family of language models named Gemma, with sizes 7B and 2B now available on Hugging Face. The terms of release were shared, highlighting restrictions on distributing model derivatives (Hugging Face blog post, Terms of release).

- Deciphering Tokenizer Differences: An in-depth analysis comparing Gemma’s tokenizer to Llama 2's tokenizer was conducted, revealing Gemma's larger vocabulary and special tokens. This analysis was supported by links to the tokenizer's model files and a diffchecker comparison (tokenizer's model file, diffchecker comparison).

- Stable Diffusion 3 Hits the Scene: Stability AI announced Stable Diffusion 3 in an early preview, improving upon prior versions with better performance in multi-subject prompts and image quality (Stability AI announcement).

- ChatGPT's Odd Behavior Corrected: An incident of unusual behavior by ChatGPT was reported and then resolved, as indicated on the OpenAI status page. Members shared links to tweets and the incident report for context (OpenAI status page).

- Exploring AI-Powered Productivity: Conversations revolved around the integration of Google's Gemini AI into Workspace and Google One services, discussing its new features such as the 1,000,000 token context size and video input capabilities (Google One Gemini AI, Google Workspace Gemini).

LlamaIndex Discord Summary

- Simplifying RAG Construction: @IFTTT discussed the complexities of building advanced RAG systems and suggested a streamlined approach using a method from @jerryjliu0's presentation that pinpoints pain points in each pipeline component.

- RAG Frontend Creation Made Easy: For LLM/RAG experts lacking React knowledge, Marco Bertelli's tutorial, endorsed by @IFTTT, demonstrates how to craft an appealing frontend for their RAG backend, with resources available from @llama_index.

- Elevating RAG Notebooks to Applications: @wenqi_glantz provides a guide for transforming RAG notebooks into full-stack applications featuring ingestion and inference microservices, shared in a tweet by @IFTTT, with the full tutorial accessible here.

- QueryPipeline Setup and Import Errors in LlamaIndex: Members discussed setting up a simple RAG using QueryPipeline, difficulties importing VectorStoreIndex from llama_index, and importing LangchainEmbedding. The QueryPipeline documentation and importing from llama_index.core were suggested as potential fixes.

- LlamaIndex Resource Troubleshooting: Topics covered the ValueError when downloading CorrectiveRAGPack, for which a related PR #11272 might offer a solution, and broken documentation links affecting users like @andaldana, who sought updated methods or readers within LlamaIndex for processing data from SQL database entries.

- Engagement and Inquiries in AI Discussion: @behanzin777 showed appreciation for suggested solutions in the community, @dadabit. sought recommendations on summarization metrics and tools within LlamaIndex, and @.dheemanth requested leads on a user-friendly platform to evaluate LLMs with capabilities akin to MT-Bench and MMLU.

OpenAccess AI Collective (axolotl) Discord Summary

- Google's Gemma Unleashed: Google's new Gemma model family sparks active discussion, with licensing found to be less restrictive than LLaMA 2 and its models now accessible via Hugging Face. A 7B Gemma model was re-uploaded for public use, sidestepping Google's access request protocol. However, finetuning Gemma has presented issues, referencing GitHub for potential early stopping callback problems.

- Axolotl Development Dives into Gemma: Work is underway on the axolotl codebase, integrating readme, val, and example fixes. Training Gemma models on the non-dev version of transformers was stressed, with an updated gemma config file shared for setup ease. There's debate over appropriate hyperparameters, such as learning rate and weight decay for Gemma models. Ways to optimize Mixtral model are also being explored, promising speed boosts in prefilling and decoding with AutoAWQ.

- Alpaca Aesthetics Add to Axolotl: A jinja template for alpaca is being sought after to enhance the axolotl repository. Training tips with DeepSpeed and correct inference formatting after finetuning models are in demand, alongside troubleshooting FlashAttention issues. Repeated inquiries prompt calls for better documentation, drawing attention to the necessity of a comprehensive guide.

- Opus V1 Models Make for Magnetizing Storytelling: Opus V1 models have been unveiled, trained on a substantial corpus for story-writing and role-playing, accessible on Hugging Face. The models use advanced ChatML prompting mechanics for controlled outputs, with an instructional guide explaining how to steer the narrative.

- RunPod Resources Require Retrieval: A user faced issues with the disappearance of the RunPod image and the Docker Hub was suggested as a place to look for existing tags. Erroneous redirects in the GitHub readme suggest documentation updates are needed to correctly guide users to the right resources.

CUDA MODE Discord Summary

- Groq's LPU Outshines Competitors: Groq's Language Processing Unit achieved 241 tokens per second on large language models, a new AI benchmark record. Further insight into Groq's technology can be seen in Andrew Bitar’s presentation "Software Defined Hardware for Dataflow Compute" available on YouTube.

- NVIDIA Nsight Issues in Docker: Engineers are seeking help with installing NVIDIA Nsight for debugging in Docker containers, with some pointing to similar struggles across cloud providers and one mention of a working solution at lightning.ai studios.

- New BnB FP4 Repo Promises Speed: A new GitHub repository has been released for bnb fp4 code, reported to be faster than bitsandbytes, but requiring CUDA compute capability >= 8.0 and significant VRAM.

- torch.compile Scrutinized: torch.compile's limitations are being debated, especially its failure to capture speed enhancements available through Triton/CUDA and its inability to handle dynamic control flow and kernel fusion gains effectively.

- Gemini 1.5 Discussion Opened: All are invited to join a discussion on Gemini 1.5 through a Discord invite link. Additionally, a video showcasing AI's ability to unlock semantic knowledge from audio files was shared, offering insights into AI learning from audio here.

- ML Engineer Role at SIXT: SIXT in Munich is hiring an ML Engineer with a focus on NLP and Generative AI. Those interested can apply via the career link.

- CUDA Endures Amid Groq AI Rise: Discussions around CUDA’s possible obsolescence with Groq AI's emergence led to reaffirmations of CUDA's foundational knowledge being valuable and unaffected by advancing compilers and architectures.

- TPU Compatibility and GPU Woes with ROCm: Migrating code from TPU to GPU, shape dimension errors, and limited AMD GPU support with ROCm were hot topics. The shared GitHub repo for inference on AMD GPUs lacks a necessary backward function/kernel.

- Ring-Attention Gathers Collaborative Momentum: The community is actively involved in debugging and enhancing the flash-attention-based ring-attention implementation, with live hacking sessions planned to tackle issues like the necessity of FP32 accumulation. Relevant discussions and code can be found in this repo.

- House-Keeping for YouTube Recordings: A reminder was issued to maintain channel integrity, requesting users to post content relevant to youtube-recordings only, and redirect unrelated content to the designated suggestions channel.
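
As background on why FP32 accumulation comes up in the ring-attention discussion, the sketch below compares a long running sum kept in FP16 with the same sum kept in FP32. It is a generic illustration and not code from the ring-attention repo. It emulates the two precisions with the standard library's `struct` format codes `'e'` (half) and `'f'` (single), and the helper names and the 4096-term input are assumptions chosen for the demo.

```python
import math
import struct


def round_to(fmt, x):
    """Round a Python float to the IEEE format named by a struct code
    ('e' = 16-bit half precision, 'f' = 32-bit single precision)."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]


def accumulate(values, fmt):
    """Sum values, rounding the running total to `fmt` after every add."""
    total = 0.0
    for x in values:
        total = round_to(fmt, total + x)
    return total


# 4096 equal terms stored as FP16, standing in for one long reduction.
terms = [round_to("e", 0.01)] * 4096
exact = math.fsum(terms)
fp16_sum = accumulate(terms, "e")
fp32_sum = accumulate(terms, "f")
print(f"exact={exact:.4f}  fp16 accumulator={fp16_sum:.4f}  fp32 accumulator={fp32_sum:.4f}")
```

Once the FP16 running total grows large enough, each new term is smaller than half the spacing between representable values and is rounded away, so the sum stops growing. The FP32 accumulator stays close to the exact result on the same inputs.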

Perplexity AI Discord Summary

Gemini Unveiled: @brknclock1215 helps dispel confusion around Google’s Gemini model family, sharing resources like a two-month free trial for Gemini Advanced (Ultra 1.0), a private preview for Gemini Pro 1.5, and directing users to a blog post detailing the differences.

Bot Whisperers Wanted: There's a jesting interest in the Perplexity AI bot, with users discussing its offline status and how to use it. For the perplexed about Perplexity's Pro version and billing, users shared a link to the FAQ for clarity.

API Conundrums and Codes: Contributors report discrepancies between Perplexity's API and website content, seeking improved accuracy. Guidance suggests using simpler queries, while an ongoing issue with gibberish responses from the pplx-70b-online model is acknowledged, with a fix expected. Integrating Google's Gemma with Perplexity's API is also queried.

Cryptocurrency and Health Searches on Spotlight: Curious minds conducted Perplexity AI searches on topics ranging from cryptocurrency trading jargon to natural oral health remedies, highlighting a community engaged in diverse subjects.

Financial Instruments Query: A quest for understanding led to a search query on financial instruments, pointing to a trend where technical specificity is key in discussions revolving around finance.

LangChain AI Discord Summary

- Dynamic Class Creation Conundrum: @deltz_81780 encountered a ValidationError when attempting to dynamically generate a class for PydanticOutputFunctionsParser and sought assistance with the issue in the general channel.

- AI Education Expansion: @mjoeldub announced a LinkedIn Learning course focused on LangChain and LCEL and shared a course link, while a new "Chat with your PDF" LangChain AI tutorial by @a404.eth was highlighted.

- Support and Discontent: In a discussion about langchain support, @mysterious_avocado_98353 expressed disappointment, and @renlo. responded by pointing out the paid support options available on the pricing page.

- Error Strikes LangSmith API: @jacobito15 faced an HTTP 422 error from the LangSmith API due to a ChannelWrite name exceeding 128 characters during batch ingestion trials in the langserve channel.

- Innovation Invitation: @pk_penguin extended an unnamed trial invitation in share-your-work, @gokusan8896 posted about Parallel Function Calls in Any LLM Model on LinkedIn, and @rogesmith sought feedback on a potential aggregate query platform/library.

DiscoResearch Discord Summary

- Google's Open-Source Gemma Models Spark Language Diversity Queries: @sebastian.bodza brought attention to Google's Gemma models being open-sourced, with an inquiry on language support particularly for German, leading to a discussion on their listings on Kaggle and their instruction version availability on Hugging Face. The conversation also touched on commercial aspects and vocabulary size.

- Mixed Reactions to Aleph Alpha's Model Updates: Skepticism over updates to Aleph Alpha's models was expressed by @sebastian.bodza, with a lack of instruction tuning highlighted and a follow-up by @devnull0 about recent hiring potentially influencing future model quality. Criticism was leveled at the updates for not including benchmarks or examples as seen in their changelog.

- Model Performance Scrutinized from Tweets: The efficacy of Gemma and Aleph Alpha's models provoked critical discussions, with posted tweets by @ivanfioravanti and @rohanpaul_ai indicating performance issues with models, particularly in languages like German and when compared to other models like phi-2.

- Batch Sizes Impact Model Scores: Issues were raised by @calytrix concerning the impact of batch size on model performance, specifically that a batch size other than one could lead to lower scores, as indicated in a discussion on the HuggingFace Open LLM Leaderboard.

- Model Test Fairness Under Scrutiny: Discussions on fairness in testing models were sparked by @calytrix, who proposed that a fair test should be realistic, unambiguous, devoid of luck, and easily understandable, and asked for a script to regenerate metrics from a specific blog post, delving into the nuances of what could skew fairness in model evaluations.

Skunkworks AI Discord Summary

- Insider Tips for Neuralink Interviews: A guild member, @xilo0, is seeking advice for an upcoming interview with Neuralink, specifically on how to approach the "evidence of exceptional ability" question and which projects to highlight to impress Elon Musk's team.

- Exploring the Depths of AI Enhancements: @pradeep1148 shared a series of educational YouTube videos addressing topics such as improving RAG through self-reflection and the questionable value of fine-tuning LLMs, alongside introducing Google's open source model, Gemma.

- Mystery Around KTO Reference: In the papers channel, nagaraj_arvind cryptically discusses KTO but withholds detail, leaving the context of the discussion incomplete and the significance of KTO to AI Engineers unexplained.

Datasette - LLM (@SimonW) Discord Summary

- Google's Gemini Pro 1.5 Redefines Boundaries: Google's new Gemini Pro 1.5 offers a 1,000,000 token context size and has innovated further by introducing video input capabilities. Simon W expressed enthusiasm for these features, which set it apart from other models like Claude 2.1 and gpt-4-turbo.

- Fresh Docs for Google's ML Products: Fresh documentation for Google's machine learning offerings is now accessible at the Google AI Developer Site, though no specific details about the documentation contents were provided.

- Call for Support with LLM Integration Glitches: In addressing system integration challenges, @simonw recommended that any unresolved issues should be reported to the gpt4all team for assistance.

- Vision for GPT-Vision: @simonw suggested adding image support for GPT-Vision in response to questions about incorporating file support in large language models (LLMs).

- Gemma Model Teething Problems: There have been reports of the new Gemma model outputting placeholder text instead of the anticipated results, leading to recommendations for updating dependencies via the llm python command to potentially remediate this.

Alignment Lab AI Discord Summary

- Scoping Out Tokens?: Scopexbt inquired about the existence of a token related to the community, noting an absence of information.

- GLAN Discussion Initiates: .benxh shared interest in GLAN (Generalized Instruction Tuning) by posting the GLAN paper, prompting positive reactions.

LLM Perf Enthusiasts AI Discord Summary

- Google Unveils Gemma: In the #opensource channel, potrock shared a blog post announcing Google's new Gemma open models initiative.

- Contrastive Approach Gets a Nod: In the #embeddings channel, a user voiced support for ContrastiveLoss, emphasizing its efficacy in tuning embeddings and noted MultipleNegativesRankingLoss as another go-to loss function.

- Beware of Salesforce Implementations: In the #general channel, res6969 warned against adopting Salesforce, suggesting it could be a disastrous choice for organizations.
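The two embedding losses named in the #embeddings item above can be sketched in a few lines. This is a minimal, dependency-free illustration and not the code of any particular library: the function names, the cosine-distance choice, the `margin=0.5` default, and the `scale=20.0` default are all assumptions made for the example.

```python
import math


def cosine_sim(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def contrastive_loss(a, b, label, margin=0.5):
    """Pairwise contrastive loss on cosine distance (illustrative defaults).

    label == 1: pull the pair together (penalize any distance).
    label == 0: push the pair apart until it is at least `margin` away.
    """
    distance = 1.0 - cosine_sim(a, b)
    if label == 1:
        return 0.5 * distance ** 2
    return 0.5 * max(0.0, margin - distance) ** 2


def multiple_negatives_ranking_loss(anchors, positives, scale=20.0):
    """In-batch softmax loss: positives[i] is the match for anchors[i],
    and every other positive in the batch acts as a negative."""
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [scale * cosine_sim(anchor, p) for p in positives]
        # Numerically stable log-sum-exp over the batch.
        peak = max(logits)
        log_z = peak + math.log(sum(math.exp(l - peak) for l in logits))
        total += log_z - logits[i]  # cross-entropy with target index i
    return total / len(anchors)
```

The practical difference is in the data each one needs: the pairwise loss wants explicit positive and negative labels, while the ranking loss only needs matched pairs and borrows negatives from the rest of the batch.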

AI Engineer Foundation Discord Summary

- Talk Techie to Me: Gemini 1.5 Awaits!: @shashank.f1 extends an invitation for a live discussion on Gemini 1.5, shedding light on previous sessions, including talks on the A-JEPA AI model for extracting semantic knowledge from audio. Previous insights are available on YouTube.

- Weekend Workshop Wonders: @yikesawjeez contemplates shifting their planned event to a weekend, aiming for better engagement opportunities and potential sponsorship collaborations, which might include a connection with @llamaindex on Twitter and a Devpost page setup.

PART 2: Detailed by-Channel summaries and links

TheBloke ▷ #general (1132 messages🔥🔥🔥):

- Exploring LLM Game Dynamics: Users experimented with language models to evaluate their ability to interpret instructions accurately, specifically within the context of guessing games where models were expected to choose a number and interact based on user guesses.

- Chatbot User Experience Discussions: There were comparisons between different chatbot UIs, with a focus on their ease of setup and usage. The conversation included pointed criticisms towards Nvidia's Chat with RTX and appreciation for smaller, more efficient setups like Polymind.

- Function Calling Challenges and RAG Implementations: Discussions included the complexity of implementing Retrieval-Augmented Generation (RAG) and users' custom implementations, with critiques of the complexity of existing implementations and praise for more streamlined versions.

- Discord Bots and CSS Troubles: Users shared frustrations with CSS implementation difficulties and talked about customizing Discord bots for better user interaction and task handling.

- Optimizations and Model Preferences: Hardware constraints and optimizations were a significant topic, with users advising on suitable models for various hardware setups. The conversation highlighted the importance of VRAM and the balance between performance and model complexity.

Links mentioned:

- Tweet from Alex Cohen (@anothercohen): Sad to share that I was laid off from Google today. I was in charge of making the algorithms for Gemini as woke as possible. After complaints on Twitter surfaced today, I suddenly lost access to Han...

- Bloomberg - Are you a robot?: no description found

- Test Drive The New NVIDIA App Beta: The Essential Companion For PC Gamers & Creators: The NVIDIA app is the easiest way to keep your drivers up to date, discover NVIDIA applications, capture your greatest moments, and configure GPU settings.

- Know Nothing GIF - No Idea IDK I Dunno - Discover & Share GIFs: Click to view the GIF

- Chris Pratt Andy Dwyer GIF - Chris Pratt Andy Dwyer Omg - Discover & Share GIFs: Click to view the GIF

- Wyaking GIF - Wyaking - Discover & Share GIFs: Click to view the GIF

- No Sleep GIF - No Sleep Love - Discover & Share GIFs: Click to view the GIF

- https://i.redd.it/1v6hjhd86vj31.png: no description found

- I Dont Know But I Like It Idk GIF - I Dont Know But I Like It I Dont Know Idk - Discover & Share GIFs: Click to view the GIF

- How fast is ASP.NET Core?: Programming Adventures

- Machine Preparing GIF - Machine Preparing Old Man - Discover & Share GIFs: Click to view the GIF

- Painting GIF - Painting Bob Ross - Discover & Share GIFs: Click to view the GIF

- 3rd Rock GIF - 3rd Rock From - Discover & Share GIFs: Click to view the GIF

- Reddit - Dive into anything: no description found

- LLM (w/ RAG) need a new Logic Layer (Stanford): New insights by Google DeepMind and Stanford University on the limitations of current LLMs (Gemini Pro, GPT-4 TURBO) regarding causal reasoning, and logic. U...

- VRAM Calculator: no description found

- GitHub - itsme2417/PolyMind: A multimodal, function calling powered LLM webui.: A multimodal, function calling powered LLM webui. - GitHub - itsme2417/PolyMind: A multimodal, function calling powered LLM webui.

- Reddit - Dive into anything: no description found

- Interrogate DeepBooru: A Feature for Analyzing and Tagging Images in AUTOMATIC1111: Understand the power of image analysis and tagging with DeepBooru. Learn how this feature enhances AUTOMATIC1111 for anime-style art creation. Interrogate DeepBooru now!

- GitHub - Malisius/booru2prompt: An extension for stable-diffusion-webui to convert image booru posts into prompts: An extension for stable-diffusion-webui to convert image booru posts into prompts - Malisius/booru2prompt

TheBloke ▷ #characters-roleplay-stories (299 messages🔥🔥):

- Tuning Tips for Character Roleplay: @superking__ and @netrve explored fine-tuning specifics for roleplay models, in particular having the base model know everything and then fine-tuning so that characters write only what they should know. There was also mention of using DPO (Direct Preference Optimization) for narrowing down training and a question about how scientific papers are formatted in training datasets.

- AI Brainstorming for Better Responses: @superking__ observed that letting the model brainstorm before giving an answer often makes it appear smarter. Alternatively, forcing a model to answer using grammars might make it appear dumber due to limited hardware resources.

- Exploring Scientific Paper Formatting in Models: @kaltcit shared their process of DPOing on scientific papers, creating a collapsed dataset from academic papers for DPO, and discussed the issue of model loss spikes during training with @c.gato.

- Roleplaying and ChatML Prompt Strategies: @superking__ and @euchale discussed prompt structures for character roleplay and how to prevent undesired point-of-view shifts, while @netrve shared experiences using MiquMaid v2 for roleplay, noting its sometimes overly eager approach to lewd content.

- New AI Story-Writing and Role-playing Models Released: @dreamgen announced the release of new AI models specifically designed for story-writing and role-playing. These models were trained on human-generated data and can be used with prompts in an extended version of ChatML, aiming for steerable interactions. Users like @splice0001 and @superking__ expressed enthusiasm for testing them out.

Links mentioned:

- LoneStriker/miqu-1-70b-sf-5.5bpw-h6-exl2 · Hugging Face: no description found

- Viralhog Grandpa GIF - Viralhog Grandpa Grandpa Kiki Dance - Discover & Share GIFs: Click to view the GIF

- Sheeeeeit GIF - Sheeeeeit - Discover & Share GIFs: Click to view the GIF

- dreamgen/opus-v1-34b · Hugging Face: no description found

- Opus V1: Story-writing & role-playing models - a dreamgen Collection: no description found

- DreamGen: AI role-play and story-writing without limits: no description found

- Models - Hugging Face: no description found

TheBloke ▷ #training-and-fine-tuning (3 messages):

- Choosing the Right Model for Code Classification: User @yustee. is seeking advice on selecting an LLM for classifying code relevance related to a query for a RAG pipeline. @yustee. is considering deepseek-coder-6.7B-instruct but is open to recommendations.

- Mistral Download Dilemma: User @aamir_70931 is asking for assistance with downloading Mistral locally but provided no further context or follow-up.

TheBloke ▷ #coding (163 messages🔥🔥):

- ML Workflow Conundrums and Mac Mysteries: @fred.bliss discussed the challenges of establishing a workflow for machine learning projects on a Mac Studio and is considering using llama.cpp instead of ollama due to its simpler architecture. They expressed concern over the market push for ollama, although they've been using llama.cpp on non-GPU PCs for some time.

- Exploring MLX and Zed as VSCode Alternatives: @dirtytigerx recommended MLX for TensorFlow/Keras tasks and praised Zed, a text editor from the Atom team, for its performance and minimal setup, preferring it over Visual Studio Code. There's also a hint of interest in Pulsar, an open-source project forked from Atom.

- VSCode vs. Zed Debate: @dirtytigerx elaborated on their preference for Zed over Visual Studio Code to @wbsch, highlighting Zed's minimalistic design and speed. They also discussed their experience with Neovim as an alternative and the potential for Zed to support remote development similar to VSCode.

- Microsoft's Developer-Oriented Shift: @dirtytigerx and @wbsch discussed Microsoft's transformative approach to catering to developers, specifically mentioning that its acquisition of GitHub led to positive developments, along with the popularity of VSCode and its integration of tools like Copilot.

- Scaling Inference Servers and GPU Utilization: In a conversation with @etron711, @dirtytigerx advised on strategies for scaling inference servers to handle a high number of users, suggesting prototyping with cheaper resources, like $0.80/hr for a 4090 on runpod, as initial steps for cost analysis. They also cautioned about the reliance on GPU availability and SLAs when working with providers like AWS.

Links mentioned:

- GitHub - raphamorim/rio: A hardware-accelerated GPU terminal emulator focusing to run in desktops and browsers.: A hardware-accelerated GPU terminal emulator focusing to run in desktops and browsers. - raphamorim/rio

- GitHub - pulsar-edit/pulsar: A Community-led Hyper-Hackable Text Editor: A Community-led Hyper-Hackable Text Editor. Contribute to pulsar-edit/pulsar development by creating an account on GitHub.

LM Studio ▷ #💬-general (598 messages🔥🔥🔥):

- LM Studio Receives Updates: Users are advised to manually download the latest LM Studio updates from the website, as the in-app "Check for Updates" feature isn't functioning.

- Gemma Model Discussions: Many users report problems with the Gemma 7B model, some citing performance issues even after updates. The Gemma 2B model receives some positive feedback, and a link to a usable Gemma 2B on Hugging Face is shared.

- Performance Concerns with New LM Studio Version: Several users describe performance drops and high CPU usage with the latest LM Studio version on MacOS, particularly affecting the Mixtral 7B model.

- Gemma 7B on M1 Macs Requires GPU Slider Adjustment: Users running Gemma 7B on M1 Macs noticed major performance improvements after adjusting the GPU slider to "max", although some still experience slower response times.

- Stable Diffusion 3 Announcement: Stability.ai announces Stable Diffusion 3 in an early preview phase, promising improved performance and multi-subject image quality. Users show interest and discuss signing up for the preview.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- lmstudio-ai/gemma-2b-it-GGUF · Hugging Face: no description found

- MSN: no description found

- Gemma: Introducing new state-of-the-art open models: Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models.

- Stable Diffusion 3 — Stability AI: Announcing Stable Diffusion 3 in early preview, our most capable text-to-image model with greatly improved performance in multi-subject prompts, image quality, and spelling abilities.

- google/gemma-7b · Hugging Face: no description found

- LoneStriker/gemma-2b-GGUF · Hugging Face: no description found

- Reddit - Dive into anything: no description found

- How To Run Stable Diffusion WebUI on AMD Radeon RX 7000 Series Graphics: Did you know you can enable Stable Diffusion with Microsoft Olive under Automatic1111 to get a significant speedup via Microsoft DirectML on Windows? Microso...

- I tried an uncensored AI, and it answered dangerous questions for me: Streaming every day on Twitch: https://www.twitch.tv/marouane53 Reddit: https://www.reddit.com/r/Batallingang/ Instagram: https://www.instagram.com/marouane53/ Server ...

- GitHub - lllyasviel/Fooocus: Focus on prompting and generating: Focus on prompting and generating. Contribute to lllyasviel/Fooocus development by creating an account on GitHub.

- Mistral's next LLM could rival GPT-4, and you can try it now in chatbot arena: French LLM wonder Mistral is getting ready to launch its next language model. You can already test it in chat.

- Need support for GemmaForCausalLM · Issue #5635 · ggerganov/llama.cpp: Prerequisites Please answer the following questions for yourself before submitting an issue. I am running the latest code. Development is very rapid so there are no tagged versions as of now. I car...

LM Studio ▷ #🤖-models-discussion-chat (149 messages🔥🔥):

- Gemma Model Confusion: Users are experiencing issues with the Gemma model. @macaulj reports errors when trying to run the 7b Gemma model on his GPU, while @nullt3r mentions that quantized models are broken and awaiting fixes from llama.cpp. @yagilb advises checking for a 2B version due to many faulty quants in circulation, and @heyitsyorkie clarifies that LM Studio needs updates before Gemma models can be functional.

- LM Studio Model Compatibility & Errors: Several users, including @swiftyos and @thorax7835, discuss finding the best model for coding and uncensored dialogue, while @bambalejo encounters glitches with the Nous-Hermes-2-Yi-34B.Q5_K_M.gguf model. A known bug in version 0.2.15 of LM Studio causing gibberish output upon regeneration was addressed, with a fix suggested by @heyitsyorkie.

- Image Generation Model Discussion: @antonsosnicev inquires about a picture generation feature akin to Adobe's generative fill and is directed by @swight709 towards AUTOMATIC1111's stable diffusion web UI for capabilities like inpainting and outpainting. @swight709 highlights its extensive plugin system and notes that image generation is separate from LM Studio's text generation focus.

- Hardware and Configuration Challenges: Users including @goldensun3ds and @wildcat_aurora share their setups and the challenges of running large models like Goliath 120B Q6, discussing the trade-offs between performance and hardware limitations such as VRAM and the system's memory bandwidth.

- Multimodal AI Anticipation: The conversation touches on the hope for models that can handle tasks beyond their current capabilities. @drawingthesun expresses a desire for LLM and stable diffusion models to interact, while @heyitsyorkie hints at future multimodal models with broader functionality.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- EvalPlus Leaderboard: no description found

- Master Generative AI dev stack: practical handbook: Yet another AI article. It might be overwhelming at times. In this comprehensive guide, I’ll simplify the complex world of Generative AI…

- lmstudio-ai/gemma-2b-it-GGUF · Hugging Face: no description found

- ImportError: libcuda.so.1: cannot open shared object file: When I run my code with TensorFlow directly, everything is normal. However, when I run it in a screen window, I get the following error. ImportError: libcuda.so.1: cannot open shared object fi...

- macaulj@macaulj-HP-Pavilion-Gaming-Laptop-15-cx0xxx:~$ sudo '/home/macaulj/Downl - Pastebin.com: Pastebin.com is the number one paste tool since 2002. Pastebin is a website where you can store text online for a set period of time.

- Big Code Models Leaderboard - a Hugging Face Space by bigcode: no description found

- ```json{ "cause": "(Exit code: 1). Please check settings and try loading th - Pastebin.com: Pastebin.com is the number one paste tool since 2002. Pastebin is a website where you can store text online for a set period of time.

- Models - Hugging Face: no description found

- wavymulder/Analog-Diffusion · Hugging Face: no description found

- Testing Shadow PC Pro (Cloud PC) with LM Studio LLMs (AI Chatbot) and comparing to my RTX 4060 Ti PC: I have been using Chat GPT since it launched about a year ago and I've become skilled with prompting, but I'm still very new with running LLMs "locally". Whe...

- GitHub - AUTOMATIC1111/stable-diffusion-webui: Stable Diffusion web UI: Stable Diffusion web UI. Contribute to AUTOMATIC1111/stable-diffusion-webui development by creating an account on GitHub.

- Reddit - Dive into anything: no description found

LM Studio ▷ #announcements (4 messages):

- LM Studio v0.2.15 Release Announced: @yagilb unveiled LM Studio v0.2.15 with new features including support for Google's Gemma model, improved download management, conversation branching, GPU configuration tools, a refreshed UI, and various bug fixes. The update is available for Mac, Windows, and Linux, and can be downloaded from the LM Studio website, with the Linux version here.

- Critical Bug Fix Update: @yagilb urged users to re-download LM Studio v0.2.15 from the LM Studio website, because critical bug fixes were missing from the original build.

- Gemma Model Integration Tips: @yagilb shared a link for the recommended Gemma 2b Instruct quant for LM Studio users, available on Hugging Face, and reminded users of Google's terms of use for the Gemma Services.

- LM Studio v0.2.16 Is Now Live: Following the previous announcements, @yagilb informed users of the immediate availability of LM Studio v0.2.16, which includes everything from the v0.2.15 update along with additional bug fixes for erratic regenerations and chat scrolls during downloads. Users who've updated to v0.2.15 are encouraged to update to v0.2.16.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- lmstudio-ai/gemma-2b-it-GGUF · Hugging Face: no description found

LM Studio ▷ #🧠-feedback (30 messages🔥):

- Local LLM Installation Questions: User @maaxport inquired about installing a local LLM with AutoGPT after obtaining LM Studio, expressing a desire to host it on a rented server. @senecalouck offered advice, indicating that setting up a local API endpoint and updating the base_url should suffice for local operation.

- Client Update Confusion: @msz_mgs experienced confusion with the client version, noting that 0.2.14 was identified as the latest, despite newer updates being available. @heyitsyorkie clarified that in-app updating is not yet supported and manual download and installation are required.

- Gemma Model Errors and Solutions: @richardchinnis encountered issues with Gemma models, which led to a discussion culminating in @yagilb sharing a link to a quantized 2B model on Hugging Face to resolve the errors.

- Troubleshooting Gemma 7b Download Visibility: Users @adtigerning and @thebest6337 discussed the visibility of Gemma 7b download files, pinpointing issues with viewing Google files in LM Studio. @heyitsyorkie provided guidance on downloading manually and the expected file placement.

- Bug Report on Scrolling Issue: @drawingthesun reported a scrolling issue in chats that was subsequently acknowledged as a known bug by @heyitsyorkie. @yagilb then announced the bug fix in version 0.2.16, with confirmation of the resolution from @heyitsyorkie.
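The base_url advice in the first item above amounts to pointing an OpenAI-style client at a local server. A minimal standard-library sketch follows; it assumes the local server speaks the OpenAI chat-completions format and listens on `http://localhost:1234/v1` (the address, the `local-model` name, and both helper functions are assumptions for illustration, so adjust them to whatever the server actually reports).

```python
import json
import urllib.request

# Assumption: the local server is running on this address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, base_url=BASE_URL, model="local-model"):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # hypothetical placeholder name
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the assistant's reply text.

    Only works while a compatible server is actually listening.
    """
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


req = build_chat_request("Say hello in one sentence.")
print(req.full_url)
```

Hosting on a rented server changes only the base URL; tools that accept an OpenAI-compatible endpoint are typically configured the same way.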

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- lmstudio-ai/gemma-2b-it-GGUF · Hugging Face: no description found

LM Studio ▷ #🎛-hardware-discussion (130 messages🔥🔥):

- Earnings Report Creates Nvidia Nerves: @nink1 shares their anxious anticipation over Nvidia's earnings report, as they've invested their life savings in Nvidia products, particularly the 3090 video cards. Despite teasing by @heyitsyorkie about potential "big stonks" gains, @nink1 clarifies that their investment has been in hardware, not stocks.

- Decoding the Worth of Flash Arrays: @wolfspyre ponders the potential applications for three 30Tb flash arrays, capable of 1M IOPS each, sparking a playful exchange with @heyitsyorkie about piracy and the downsides of a buccaneer's life, such as scurvy and poor work conditions.

- VRAM vs. New GPUs for AI Rendering: @freethepublicdebt queries the value of using multiple cheap GPUs for increased VRAM when running large models like Mixtral 8x7B. @heyitsyorkie provides links to GPU specs, suggesting that while more VRAM is key, GPU performance can't be ignored, and sometimes a single powerful card like the RTX 3090 suffices.

- Tesla P40 Gains Momentum Among Budget Constraints: Participants such as @wilsonkeebs and @krypt_lynx discuss the viability of older GPUs like the Tesla P40 for AI tasks, weighing their accessibility against slower performance compared to newer alternatives like the RTX 3090.

- AI Capabilities of Older Nvidia Cards Questioned: Several users like @exio4 and @bobzdar share their experiences and test results regarding the use of older Nvidia GPUs for AI tasks, revealing that advancements in newer cards contribute significantly to performance gains in AI modeling and inference.

Links mentioned:

- no title found: no description found

- Deploying Transformers on the Apple Neural Engine: An increasing number of the machine learning (ML) models we build at Apple each year are either partly or fully adopting the [Transformer…

- MAG Z690 TOMAHAWK WIFI: Powered by Intel 12th Gen Core processors, the MSI MAG Z690 TOMAHAWK WIFI is hardened with performance essential specifications to outlast enemies. Tuned for better performance by Core boost, Memory B...

- Have You GIF - Have You Ever - Discover & Share GIFs: Click to view the GIF

- The Best GPUs for Deep Learning in 2023 — An In-depth Analysis: Here, I provide an in-depth analysis of GPUs for deep learning/machine learning and explain what is the best GPU for your use-case and budget.

- NVIDIA GeForce RTX 2060 SUPER Specs: NVIDIA TU106, 1650 MHz, 2176 Cores, 136 TMUs, 64 ROPs, 8192 MB GDDR6, 1750 MHz, 256 bit

- NVIDIA GeForce RTX 3090 Specs: NVIDIA GA102, 1695 MHz, 10496 Cores, 328 TMUs, 112 ROPs, 24576 MB GDDR6X, 1219 MHz, 384 bit

LM Studio ▷ #🧪-beta-releases-chat (266 messages🔥🔥):

- Gemma Quants in Question: Users report mixed results with Google's Gemma models, finding that the 7b-it quants often output gibberish, while the 2b-it quants seem stable and work well. @drawless111 highlights that full precision models are necessary to meet benchmarks, suggesting that smaller (1-3B) models require more precise prompts and settings.

- LM Studio Continually Improving: @yagilb announces a new LM Studio download with significant bug fixes, particularly for issues with the regenerate feature and multi-turn chats, solved here. @yagilb also clarifies the regenerate issue was not related to models but to bad quants; the team is figuring out how to ease the download of functional models.

- Issues with Gemma 7B Size Explained: Users discussed the large size of Google's Gemma 7b-it model, pointing out its lack of quantization and large memory demands. It's noted that llama.cpp currently has issues with Gemma, which are expected to be resolved soon.

- User-Friendly Presets for Improved Performance: Users agree that the correct preset is needed to get good results from the Gemma models, with @pandora_box_open stressing the necessity for specific presets to avoid subpar outputs.

- LM Studio Confirmed Working GGUFs: @yagilb recommends a 2B IT Gemma model they quantized and tested for LM Studio, with plans to upload a 7B version as well. @issaminu confirms this 2B model works but is less intelligent than the more functional 7B model.

Links mentioned:

- 👾 LM Studio - Discover and run local LLMs: Find, download, and experiment with local LLMs

- asedmammad/gemma-2b-it-GGUF · Hugging Face: no description found

- Thats What She Said Dirty Joke GIF - Thats What She Said What She Said Dirty Joke - Discover & Share GIFs: Click to view the GIF

- HuggingChat: Making the community's best AI chat models available to everyone.

- ```json{ "cause": "(Exit code: 1). Please check settings and try loading th - Pastebin.com: Pastebin.com is the number one paste tool since 2002. Pastebin is a website where you can store text online for a set period of time.

- google/gemma-2b-it · Hugging Face: no description found

- lmstudio-ai/gemma-2b-it-GGUF · Hugging Face: no description found

- LoneStriker/gemma-2b-it-GGUF · Hugging Face: no description found

- google/gemma-7b · Why the original GGUF is quite large ?: no description found

- google/gemma-7b-it · Hugging Face: no description found

- Need support for GemmaForCausalLM · Issue #5635 · ggerganov/llama.cpp: Prerequisites Please answer the following questions for yourself before submitting an issue. I am running the latest code. Development is very rapid so there are no tagged versions as of now. I car...

- Add gemma model by postmasters · Pull Request #5631 · ggerganov/llama.cpp: There are couple things in this architecture: Shared input and output embedding parameters. Key length and value length are not derived from n_embd. More information about the models can be found...

Nous Research AI ▷ #ctx-length-research (97 messages🔥🔥):

- Scaling Up with Long-Context Data Engineering: @gabriel_syme expressed excitement about a GitHub repository titled "Long-Context Data Engineering", mentioning the implementation of data engineering techniques for scaling language models to 128K context.

- VRAM Requirements for 128K Context Models: In a query about the VRAM requirements for 128K context at 7B models, @teknium clarified that it needs over 600GB.

- Tokenization Queries and Considerations: @vatsadev mentioned that GPT-3 and GPT-4 have tokenizers which can be found at tiktoken, and also referenced a related video by Andrej Karpathy without providing a direct link.

- Token Compression Challenges: @elder_plinius raised an issue about token compression when trying to fit the Big Lebowski script within context limits. This led to a discussion with @vatsadev and @blackl1ght about tokenizers and server behavior on the OpenAI tokenizer playground, including extended observations about why compressed text is accepted by ChatGPT while the original isn't.

- Long Context Inference on Lesser VRAM: @blackl1ght shared that they conducted inference on Mistral 7B and Solar 10.7B at 64K context with just 28GB VRAM on a V100 32GB, which led to a discussion with @teknium and @bloc97 on the viability of this approach and the capacity of the kv cache and offloading in larger models.
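The kv cache capacity question in the last item above comes down to simple arithmetic: two tensors (keys and values) per layer, each holding one vector per KV head per token. The sketch below is a back-of-the-envelope estimate for inference only. The configuration values (32 layers, 8 KV heads, head dimension 128, fp16) are assumed here as a Mistral-7B-style setup and are not taken from the discussion, and the helper name is hypothetical. Training memory (activations, gradients, optimizer state) is a separate budget that this does not model.

```python
GIB = 1024 ** 3


def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len,
                   bytes_per_elem=2, batch=1):
    """Estimate KV cache size in bytes for one forward context.

    The leading factor of 2 accounts for storing both keys and values.
    bytes_per_elem=2 corresponds to fp16/bf16 storage.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem * batch


# Assumed Mistral-7B-style config with grouped-query attention (8 KV heads).
gqa_64k = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, seq_len=64 * 1024)

# Same shape with full multi-head attention (32 KV heads) for comparison.
mha_64k = kv_cache_bytes(n_layers=32, n_kv_heads=32, head_dim=128, seq_len=64 * 1024)

print(f"GQA, 64K context: {gqa_64k / GIB:.1f} GiB")  # 8.0 GiB
print(f"MHA, 64K context: {mha_64k / GIB:.1f} GiB")  # 32.0 GiB
```

Under these assumptions, grouped-query attention cuts the cache by 4x, which is one reason a 64K context can plausibly fit next to fp16 7B weights on a single 32GB card.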

Links mentioned:

- gpt-tokenizer playground: no description found

- Tweet from Aran Komatsuzaki (@arankomatsuzaki): Microsoft Research presents LongRoPE: Extending LLM Context Window Beyond 2 Million Tokens https://arxiv.org/abs/2402.13753

- Tweet from Pliny the Prompter 🐉 (@elder_plinius): The Big Lebowski script doesn't quite fit within the GPT-4 context limits normally, but after passing the text through myln, it does!

- GitHub - FranxYao/Long-Context-Data-Engineering: Implementation of paper Data Engineering for Scaling Language Models to 128K Context: Implementation of paper Data Engineering for Scaling Language Models to 128K Context - FranxYao/Long-Context-Data-Engineering

Nous Research AI ▷ #off-topic (16 messages🔥):

- Mysterious Minecraft Creature Inquiry: User @teknium questioned the presence of a specific creature in Minecraft. The response by @nonameusr was a succinct "since always."

- Exploring Self-Reflective AI in RAG: @pradeep1148 shared a link to a YouTube video titled "Self RAG using LangGraph," which suggests self-reflection can enhance Retrieval-Augmented Generation (RAG) models.

- Beginner's Guide Request for Artistic Image Generation: User @blackblize asked whether it's feasible for a non-expert to train a model on microscope photos for artistic purposes and sought guidance on the topic.

- Advancements in AI-Generated Minecraft Videos: @afterhoursbilly analyzed how an AI understands the inventory UI in Minecraft videos, while @_3sphere added that although the AI-generated images look right at a glance, they reveal inaccuracies upon closer inspection.

- Nous Models' Avatar Generation Discussed: In response to @stoicbatman's curiosity about avatar generation for Nous models, @teknium mentioned the use of DALL-E followed by img2img through Midjourney.

Links mentioned:

- Gemma Google's open source SOTA model: Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models. Developed by Goo...

- Self RAG using LangGraph: Self-reflection can enhance RAG, enabling correction of poor quality retrieval or generations. Several recent papers focus on this theme, but implementing the...

- BitDelta: Your Fine-Tune May Only Be Worth One Bit: Large Language Models (LLMs) are typically trained in two phases: pre-training on large internet-scale datasets, and fine-tuning for downstream tasks. Given ...

Nous Research AI ▷ #interesting-links (38 messages🔥):

- Google Unveils Gemma:

@burnytech shared a link to a tweet by @sundarpichai announcing Gemma, a family of lightweight, open-source models available in 2B and 7B sizes. The tweet expresses excitement about global availability and encourages building with Gemma on platforms ranging from developer laptops to Google Cloud.

- Gemini 1.5 Discussion Happening:

@shashank.f1 invited users to a discussion on Gemini 1.5, mentioning a previous session on the A-JEPA AI model, which, as @ldj noted, is not affiliated with Meta or Yann LeCun.

- OpenAI's LLama Bested by A Reproduction:

@euclaise and @teknium discussed how a reproduction of OpenAI's LLama outperformed the original, adding intrigue to the capabilities of imitated models.

- Navigation Through Human Knowledge:

@.benxh described a method for navigating the taxonomy of human knowledge and capabilities: start from a structured list of all possible fields, with the U.S. Library of Congress subject headings as a comprehensive example.

- Microsoft Takes LLMs to New Lengths:

@main.ai linked a tweet by @_akhaliq about Microsoft's LongRoPE, a technique that extends LLM context windows beyond 2 million tokens and could substantially expand long-text handling in models such as LLaMA and Mistral. The tweet notes that the method does so while preserving performance at the original context window sizes.

Links mentioned:

- Tweet from Sundar Pichai (@sundarpichai): Introducing Gemma - a family of lightweight, state-of-the-art open models for their class built from the same research & tech used to create the Gemini models. Demonstrating strong performance acros...

- Join the hedwigAI Discord Server!: Check out the hedwigAI community on Discord - hang out with 50 other members and enjoy free voice and text chat.

- Tweet from AK (@_akhaliq): Microsoft presents LongRoPE Extending LLM Context Window Beyond 2 Million Tokens Large context window is a desirable feature in large language models (LLMs). However, due to high fine-tuning costs, ...

- Tweet from Emad (@EMostaque): @StabilityAI Some notes: - This uses a new type of diffusion transformer (similar to Sora) combined with flow matching and other improvements. - This takes advantage of transformer improvements & can...

- Library of Congress Subject Headings - LC Linked Data Service: Authorities and Vocabularies | Library of Congress: no description found

- benxh/us-library-of-congress-subjects · Datasets at Hugging Face: no description found

- A-JEPA AI model: Unlock semantic knowledge from .wav / .mp3 file or audio spectrograms: 🌟 Unlock the Power of AI Learning from Audio ! 🔊 Watch a deep dive discussion on the A-JEPA approach with Oliver, Nevil, Ojasvita, Shashank, Srikanth and N...

- JARVIS/taskbench at main · microsoft/JARVIS: JARVIS, a system to connect LLMs with ML community. Paper: https://arxiv.org/pdf/2303.17580.pdf - microsoft/JARVIS

- JARVIS/easytool at main · microsoft/JARVIS: JARVIS, a system to connect LLMs with ML community. Paper: https://arxiv.org/pdf/2303.17580.pdf - microsoft/JARVIS

Nous Research AI ▷ #general (419 messages🔥🔥🔥):

- Gemma vs Mistral Showdown: Tweets are circulating comparing Google Gemma to Mistral's LLMs, claiming that even after a few hours of testing, Gemma doesn't outperform Mistral's 7B models despite being better than llama 2.

- Debating Gemma's Instruction Following:

@big_ol_tender noticed that for Nous-Mixtral models, placing instructions at the end of commands seems more effective than placing them at the beginning, sparking a discussion about command formats.

- The Speed of VMs on Different Services:

@mihai4256 was advised to try VAST for faster, more cost-effective VMs compared to Runpod, while another user noted Runpod's better UX despite speed issues. @lightvector_ later reported that all providers seemed slow that day.

- Curiosity for Crypto Payments for GPU Time:

@protofeather inquired about platforms that sell GPU time for crypto, leading to suggestions of Runpod and VAST, with the clarification that Runpod requires a Crypto.com KYC registration.

- Potential Axolotl Support for Gemma:

@gryphepadar ran a full finetune on Gemma with Axolotl, pointing out that Gemma appears to be 10.5B in size and therefore requires a lot more VRAM than Mistral. Users also shared their difficulties and successes with various settings and DPO datasets.

Links mentioned:

- Tweet from Aaditya Ura (Ankit) (@aadityaura): The new Model Gemma from @GoogleDeepMind @GoogleAI does not demonstrate strong performance on medical/healthcare domain benchmarks. A side-by-side comparison of Gemma by @GoogleDeepMind and Mistral...

- Tweet from anton (@abacaj): After trying Gemma for a few hours I can say it won’t replace my mistral 7B models. It’s better than llama 2 but surprisingly not better than mistral. The mistral team really cooked up a model even go...

- Sad Cat GIF - Sad Cat - Discover & Share GIFs: Click to view the GIF

- LMSys Chatbot Arena Leaderboard - a Hugging Face Space by lmsys: no description found

- indischepartij/MiniCPM-3B-Hercules-v2.0 · Hugging Face: no description found

- Tweet from TokenBender (e/xperiments) (@4evaBehindSOTA): based on my tests so far, ignoring Gemma for general purpose fine-tuning or inference. however, indic language exploration and specific use case tests may be explored later on. now back to building ...

- Models - Hugging Face: no description found

- Reddit - Dive into anything: no description found

- eleutherai: Weights & Biases, developer tools for machine learning

- The Novice's LLM Training Guide: Written by Alpin Inspired by /hdg/'s LoRA train rentry This guide is being slowly updated. We've already moved to the axolotl trainer. The Basics The Transformer architecture Training Basics Pre-train...

- GitHub - facebookresearch/diplomacy_cicero: Code for Cicero, an AI agent that plays the game of Diplomacy with open-domain natural language negotiation.: Code for Cicero, an AI agent that plays the game of Diplomacy with open-domain natural language negotiation. - facebookresearch/diplomacy_cicero

- Neuranest/Nous-Hermes-2-Mistral-7B-DPO-BitDelta at main: no description found

- BitDelta: no description found

- GitHub - FasterDecoding/BitDelta: Contribute to FasterDecoding/BitDelta development by creating an account on GitHub.

- Adding Google's gemma Model by monk1337 · Pull Request #1312 · OpenAccess-AI-Collective/axolotl: Adding Gemma model config https://huggingface.co/google/gemma-7b Testing and working!

Nous Research AI ▷ #ask-about-llms (9 messages🔥):

- Custom Tokenizer Training Query:

@ex3ndr inquired about the possibility of training a completely custom tokenizer and the ways to store it. @nanobitz responded by asking for clarification about the end goal of such a task.

- Performance Inquiry on Nous-Hermes-2-Mistral-7B-DPO-GGUF:

@natefyi_30842 asked for a comparison between the new Nous-Hermes-2-Mistral-7B-DPO-GGUF and its Solar version, to which @emraza110 commented on its proficiency in accurately answering a specific test question.

- Out-of-Memory Error with Mixtral Model:

@iamcoming5084 brought up an issue with out-of-memory errors when working with Mixtral 8x7b models.

- Fine-Tuning Parameters for Accuracy:

@iamcoming5084 sought advice on parameters that could affect accuracy when fine-tuning Mixtral 8x7b and Mistral 7B, tagging @688549153751826432 and @470599096487510016 for input.

- Hosting and Inference for Large Models:

@jacobi discussed challenges and sought strategies for hosting the Mixtral 8x7b model behind an OpenAI API endpoint, mentioning tools like tabbyAPI and llama-cpp.

- Error in Nous-Hermes-2-Mistral-7B-DPO Inference Code:

@qtnx pointed out errors in the inference code section for Nous-Hermes-2-Mistral-7B-DPO on Huggingface and supplied a corrected version of the code. Inference code for Nous-Hermes-2-Mistral-7B-DPO.

Links mentioned:

- Welcome Gemma - Google’s new open LLM: no description found

- NousResearch/Nous-Hermes-2-Mistral-7B-DPO · Hugging Face: no description found

Nous Research AI ▷ #collective-cognition (3 messages):

- Short & Not-so-Sweet on Heroku:

@bfpill expressed a negative sentiment with a blunt "screw heroku", without elaborating on the frustration.

- Affable Acknowledgment:

@adjectiveallison responded, seemingly acknowledging the sentiment but pointing out "I don't think that's the point but sure". The exact point of contention remains unclear.

- Consensus or Coincidence?:

@bfpill replied with "glad we agree", but without context it's uncertain whether true agreement was reached or the comment was made in jest.

Nous Research AI ▷ #project-obsidian (3 messages):

- Model Updates Delayed Due to Pet Illness:

@qnguyen3 apologized for the slower pace in updating and completing models, attributing the delay to their cat falling ill.

- Invitation to Directly Message for Coordination:

@qnguyen3 invited members to send a direct message if they need to reach out for project-related purposes.

Eleuther ▷ #general (101 messages🔥🔥):

- Confusion Over lm eval:

@lee0099 wondered why lm eval seemed to be set up only for runpod, prompting @hailey_schoelkopf to clarify that lm eval is different from llm-autoeval and to point to the Open LLM Leaderboard's HF spaces page for detailed instructions and command line parameters.

- Discussion on Model Environmental Impact:

@gaindrew speculated about ranking models by the net carbon emissions they prevent or contribute. Participants acknowledged that accuracy would be a challenge, and the conversation ended without further exploration or links.

- Optimizer Trouble for loubb:

@loubb presented an unusual loss curve from training a model based on Whisper and brainstormed with others, including @ai_waifu and @lucaslingle, about potential causes related to optimizer parameters.

- Google's Gemma Introduced:

@sundarpichai announced Gemma, a new family of models, leading to debates between users like @lee0099 and @.undeleted on whether Gemma constitutes a significant improvement over existing models such as Mistral.

- Theoretical Discussion on Simulating Human Experience:

@rallio. provoked a detailed discussion with @sparetime. and @fern.bear on the theoretical possibility of simulating human cognition. The conversation ranged from the complexity of modeling human emotion and memory to how GPT-4 could potentially be used to create consistent, synthetic human experiences.

Links mentioned:

- Tweet from Sundar Pichai (@sundarpichai): Introducing Gemma - a family of lightweight, state-of-the-art open models for their class built from the same research & tech used to create the Gemini models. Demonstrating strong performance acros...

- PropSegmEnt: A Large-Scale Corpus for Proposition-Level Segmentation and Entailment Recognition: The widely studied task of Natural Language Inference (NLI) requires a system to recognize whether one piece of text is textually entailed by another, i.e. whether the entirety of its meaning can be i...

- Everything WRONG with LLM Benchmarks (ft. MMLU)!!!: 🔗 Links 🔗 When Benchmarks are Targets: Revealing the Sensitivity of Large Language Model Leaderboards https://arxiv.org/pdf/2402.01781.pdf ❤️ If you want to s...

Eleuther ▷ #research (305 messages🔥🔥):

- Groq Attempts To Outperform Mistral:

@philpax shared an article highlighting that Groq, an AI hardware startup, showcased impressive demos of Mistral's Mixtral 8x7b model on its inference API, achieving up to 4x the throughput while charging less than a third of Mistral's price. The performance improvement could benefit real-world usability for chain-of-thought prompting and for latency-sensitive uses such as code generation and real-time model applications.

- Concerns About Parameter Count Misrepresentation with Gemma Models: Discussion in the channel raised concerns about misleading parameter counts, such as "gemma-7b" actually containing 8.5 billion parameters, with suggestions that a label such as "7b" should strictly mean at most 7.99 billion parameters.

- Exploration of LLM Data and Compute Efficiency:

@jckwind started a conversation about the data and compute efficiency of LLMs, noting that they require a lot of data and build inconsistent world models. A shared graphic suggesting that LLMs could struggle with bi-directional learning sparked debate about whether large context windows or curiosity-driven learning mechanisms could address these inefficiencies.