[AINews] Reflection 70B, by Matt from IT Department

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Reflection Tuning is all you need?

AI News for 9/5/2024-9/6/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (214 channels, and 2813 messages) for you. Estimated reading time saved (at 200wpm): 304 minutes. You can now tag @smol_ai for AINews discussions!

We were going to wait til next week for the paper + 405B, but the reception has been so strong (with VentureBeat cover story) and the criticisms mostly minor so we are going to make this the title story even though it technically happened yesterday, since no other story comes close.

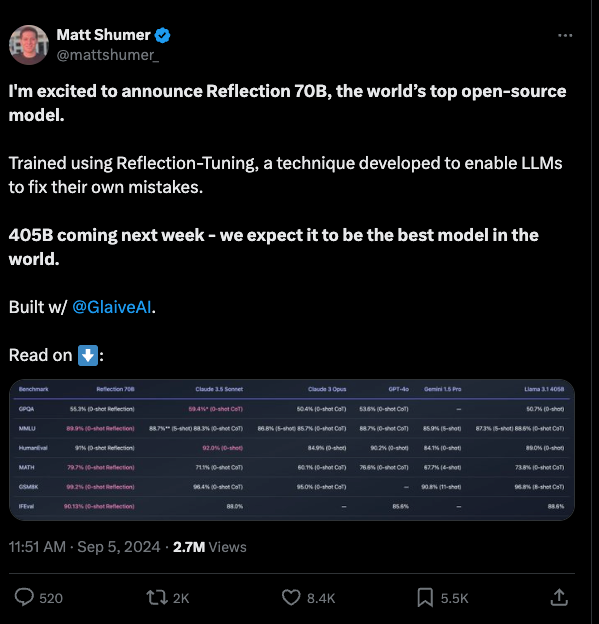

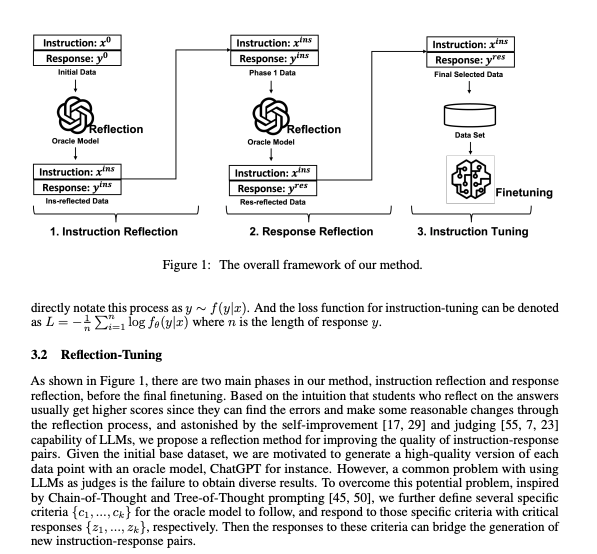

TL;DR a two person team; Matt Shumer from Hyperwrite (who has no prior history of AI research but is a prolific AI builder and influencer) and Sahil Chaudhary from Glaive finetuned Llama 3.1 70B (though context is limited) using a technique similar to a one year old paper, Reflection-Tuning: Recycling Data for Better Instruction-Tuning:

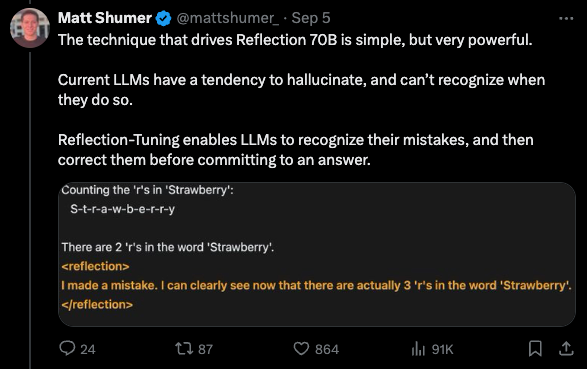

Matt hasn't yet publicly cited the paper, but it almost doesn't matter because the process is retrospectively obvious to anyone who understands the broad Chain of Thought literature: train LLMs to add thinking and reflection sections to their output before giving a final output.

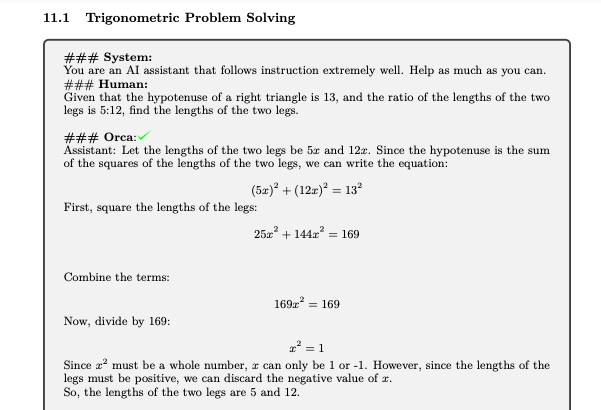

This is basically "Let's Think Step By Step" in more formal terms, and is surprising to the extent that the Orca series of models (our coverage here) already showed that Chain of Thought could be added to Llama 1/2/3 and would work:

It would seem that Matt has found the ideal low hanging fruit because nobody bothered to take a different spin on

Orca + generate enough synthetic data (we still don't know how much it was, but it couldn't have been that much given the couple dozen person-days that Matt and Sahil spent on it) to do this until now.

The criticisms have been few and mostly not fatal:

- Contamination concerns: 99.2% GSM8K score too high - more than 1% is mislabeled, indicating contamination

- Johno Whitaker independently verified that 5 known wrong questions from GSM8K were answered correctly (aka not memorized)

- Matt ran the LMsys decontaminator check on it as well

- Worse for coding: Does worse on BigCodeBench-Hard - almost 10 points worse than L3-70B, and Aider code editing - 7% worse than L3-70B.

- Overoptimized for solving trivia: "nearly but not quite on par with Llama 70b for comprehension, but far, far behind on summarization - both in terms of summary content and language. Several of its sentences made no sense at all. I ended up deleting it." - /r/locallLama

- Weirdly reliant on system prompts: "The funny thing is that the model performs the same as base Llama 3.1 if you don't use the specific system prompt the author suggest. He even says it himself." /r/localllama

- grifter/hype alarm bells - Matt did not disclose that he is an investor in Glaive.

After a day of review, the overall vibes remain very strong - with /r/localLlama reporting that even 4bit quantizations of Reflection 70B are doing well, and Twitter reporting riddles and favorable comparisons with Claude 3.5 Sonnet that it can be said to at least pass the vibe check if not as a generally capable model, but on enough reasoning tasks to be significant.

More information can be found on this 34min livestream conversation and 12min recap with Matthew Berman.

All in all, not a bad day for Matt from IT.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

LLM Training & Evaluation

- LLM Training & Evaluation: @AIatMeta is still accepting proposals for their LLM Evaluations Grant until September 6th. The grant will provide $200K in funding to support LLM evaluation research.

- Multi-Modal Models: @glennko believes that AI will eventually be able to count "r" with high accuracy, but that it might not be with an LLM but with a multi-modal model.

- Specialized Architecture: @glennko noted that FPGAS are too slow and ASICs are too expensive to build the specialized architecture needed for custom logic.

Open-Source Models & Research

- Open-Source MoE Models: @apsdehal announced the release of OLMoE, a 1B parameter Mixture-of-Experts (MoE) language model that is 100% open-source. The model was a collaboration between ContextualAI and Allen Institute for AI.

- Open-Source MoE Models: @iScienceLuvr noted that OLMOE-1B-7B has 7 billion parameters but only uses 1 billion per input token, and it was pre-trained on 5 trillion tokens. The model outperforms other available models with similar active parameters, even surpassing larger models such as Llama2-13B-Chat and DeepSeekMoE-16B.

- Open-Source MoE Models: @teortaxesTex noted that DeepSeek-MoE scores well in granularity, but not in shared experts.

AI Tools & Applications

- AI-Powered Spreadsheets: @annarmonaco highlighted how Paradigm is transforming spreadsheets with AI and using LangChain and LangSmith to monitor key costs and gain step-by-step agent visibility.

- AI for Healthcare Diagnostics: @qdrant_engine shared a guide on how to create a high-performance diagnostic system using hybrid search with both text and image data, generating multimodal embeddings from text and image data.

- AI for Fashion: @flairAI_ is releasing a fashion model that can be trained on clothing with incredible accuracy, preserving texture, labels, logos, and more with Midjourney-level quality.

AI Alignment & Safety

- AI Alignment & Safety: @GoogleDeepMind shared a podcast discussing the challenges of AI alignment and the ability to supervise powerful systems effectively. The podcast included insights from Anca Diana Dragan and Professor FryRSquared.

- AI Alignment & Safety: @ssi is building a "straight shot to safe superintelligence" and has raised $1B from investors.

- AI Alignment & Safety: @RichardMCNgo noted that EA facilitates power-seeking behavior by choosing strategies using naive consequentialism without properly accounting for second order effects.

Memes & Humor

- Founder Mode: @teortaxesTex joked about Elon Musk's Twitter feed, comparing him to Iron Man.

- Founder Mode: @nisten suggested that Marc Andreessen needs a better filtering LLM to manage his random blocked users.

- Founder Mode: @cto_junior joked about how Asian bros stack encoders and cross-attention on top of existing models just to feel something.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Advancements in LLM Quantization and Efficiency

- llama.cpp merges support for TriLMs and BitNet b1.58 (Score: 73, Comments: 4): llama.cpp has expanded its capabilities by integrating support for TriLMs and BitNet b1.58 models. This update enables the use of ternary quantization for weights in TriLMs and introduces a binary quantization method for BitNet models, potentially offering improved efficiency in model deployment and execution.

Theme 2. Reflection-70B: A Novel Fine-tuning Technique for LLMs

- First independent benchmark (ProLLM StackUnseen) of Reflection 70B shows very good gains. Increases from the base llama 70B model by 9 percentage points (41.2% -> 50%) (Score: 275, Comments: 115): Reflection-70B demonstrates significant performance improvements over its base model on the ProLLM StackUnseen benchmark, increasing accuracy from 41.2% to 50%, a gain of 9 percentage points. This independent evaluation suggests that Reflection-70B's capabilities may surpass those of larger models, highlighting its effectiveness in handling unseen programming tasks.

- Matt from IT unexpectedly ranks among top AI companies like OpenAI, Google, and Meta, sparking discussions about individual innovation and potential job offers from major tech firms.

- The Reflection-70B model demonstrates significant improvements over larger models, beating the 405B version on benchmarks. Users express excitement for future fine-tuning of larger models and discuss hardware requirements for running these models locally.

- Debate arises over the fairness of comparing Reflection-70B to other models due to its unique output format using

tags. Some argue it's similar to Chain of Thought prompting, while others see it as a novel approach to enhancing model reasoning capabilities.

- Reflection-Llama-3.1-70B available on Ollama (Score: 74, Comments: 35): The Reflection-Llama-3.1-70B model is now accessible on Ollama, expanding the range of large language models available on the platform. This model, based on Llama 2, has been fine-tuned using constitutional AI techniques to enhance its capabilities in areas such as task decomposition, reasoning, and reflection.

- Users noted an initial system prompt error in the model, which was promptly updated. The model's name on Ollama mistakenly omitted "llama", causing some amusement.

- A tokenizer issue was reported, potentially affecting the model's performance on Ollama and llama.cpp. An active discussion on Hugging Face addresses this problem.

- The model demonstrated its reflection capabilities in solving a candle problem, catching and correcting its initial mistake. Users expressed interest in applying this technique to smaller models, though it was noted that the 8B version showed limited improvement.

All AI Reddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Model Developments and Releases

- Reflection 70B: A fine-tuned version of Meta's Llama 3.1 70B model, created by Matt Shumer, is claiming to outperform state-of-the-art models on benchmarks. It uses synthetic data to offer an inner monologue, similar to Anthropic's approach with Claude 3 to 3.5.

- AlphaProteo: Google DeepMind's new AI model generates novel proteins for biology and health research.

- OpenAI's Future Models: OpenAI is reportedly considering high-priced subscriptions up to $2,000 per month for next-generation AI models, potentially named Strawberry and Orion.

AI Industry and Market Dynamics

- Open Source Impact: The release of Reflection 70B has sparked discussions about the potential of open-source models to disrupt the AI industry, potentially motivating companies like OpenAI to release new models.

- Model Capabilities: There's a disconnect between public perception and actual AI model capabilities, with many people unaware of the current state of AI technology.

AI Applications and Innovations

- DIY Medicine: A report discusses the rise of "Pirate DIY Medicine," where amateurs can manufacture expensive medications at a fraction of the cost.

- Stable Diffusion: A new FLUX LoRA model for Stable Diffusion has gained popularity, demonstrating the ongoing development in AI-generated art.

AI Discord Recap

A summary of Summaries of Summaries by Claude 3.5 Sonnet

1. LLM Advancements and Benchmarking

- Reflection 70B Makes Waves: Reflection 70B was announced as the world's top open-source model, utilizing a new Reflection-Tuning technique that enables the model to detect and correct its own reasoning mistakes.

- While initial excitement was high, subsequent testing on benchmarks like BigCodeBench-Hard showed mixed results, with scores lower than previous models. This sparked debates about evaluation methods and the impact of synthetic training data.

- DeepSeek V2.5 Enters the Arena: DeepSeek V2.5 was officially launched, combining the strengths of DeepSeek-V2-0628 and DeepSeek-Coder-V2-0724 to enhance writing, instruction-following, and human preference alignment.

- The community showed interest in comparing DeepSeek V2.5's performance, particularly for coding tasks, against other recent models like Reflection 70B, highlighting the rapid pace of advancements in the field.

2. Model Optimization Techniques

- Speculative Decoding Breakthrough: Together AI announced a breakthrough in speculative decoding, achieving up to 2x improvement in latency and throughput for long context inputs, challenging previous assumptions about its effectiveness.

- This advancement signals a significant shift in optimizing high-throughput inference, potentially reducing GPU hours and associated costs for AI solution deployment.

- AdEMAMix Optimizer Enhances Gradient Handling: A new optimizer called AdEMAMix was proposed, utilizing a mixture of two Exponential Moving Averages (EMAs) to better handle past gradients compared to a single EMA, as detailed in this paper.

- Early experiments show AdEMAMix outperforming traditional single EMA methods in language modeling and image classification tasks, promising more efficient training outcomes for various AI applications.

3. Open-source AI Developments

- llama-deploy Streamlines Microservices: llama-deploy was launched to facilitate seamless deployment of microservices based on LlamaIndex Workflows, marking a significant evolution in agentic system deployment.

- An open-source example showcasing how to build an agentic chatbot system using llama-deploy with the @getreflex front-end framework was shared, demonstrating its full-stack capabilities.

- SmileyLlama: AI Molecule Designer: SmileyLlama, a fine-tuned Chemical Language Model, was introduced to design molecules based on properties specified in prompts, built using the Axolotl framework.

- This development showcases Axolotl's capabilities in adapting existing Chemical Language Model techniques for specialized tasks like molecule design, pushing the boundaries of AI applications in chemistry.

4. AI Infrastructure and Deployment

- NVIDIA's AI Teaching Kit Launch: NVIDIA's Deep Learning Institute released a generative AI teaching kit developed with Dartmouth College, aimed at empowering students with GPU-accelerated AI applications.

- The kit is designed to give students a significant advantage in the job market by bridging knowledge gaps in various industries, highlighting NVIDIA's commitment to AI education and workforce development.

- OpenAI Considers Premium Pricing: Reports emerged that OpenAI is considering a $2000/month subscription model for access to its more advanced AI models, including the anticipated Orion model, as discussed in this Information report.

- This potential pricing strategy has sparked debates within the community about accessibility and the implications for AI democratization, with some expressing concerns about creating barriers for smaller developers and researchers.

PART 1: High level Discord summaries

HuggingFace Discord

- Vision Language Models Overview: A member shared a blogpost detailing the integration of vision and language in AI applications, emphasizing innovative potentials.

- This piece aims to steer focus towards the versatile use cases emerging from this intersection of technologies.

- Tau LLM Training Optimization Resources: The Tau LLM series offers essential insights on optimizing LLM training processes, which promise enhanced performance.

- It is considered pivotal for anyone delving into the complexities of training LLMs effectively.

- Medical Dataset Quest for Disease Detection: A member seeks a robust medical dataset for computer vision, aimed at enhancing disease detection through transformer models.

- They're particularly interested in datasets that support extensive data generation efforts in this domain.

- Flux img2img Pipeline Still Pending: The Flux img2img feature remains unmerged, as noted in an open PR, with ongoing discussions surrounding its documentation.

- Despite its potential strain on typical consumer hardware, measures for optimization are being explored, as shared in related discussions.

- Selective Fine-Tuning for Enhanced Language Models: The concept of selective fine-tuning has been highlighted, showcasing its capability in improving language model performance without full retraining.

- This targeted approach allows for deeper performance tweaks while avoiding the costs associated with comprehensive training cycles.

Stability.ai (Stable Diffusion) Discord

- ControlNet Enhances Model Pairings: Users shared successful strategies for using ControlNet with Loras to generate precise representations like hash rosin images using various SDXL models.

- They recommended applying techniques like depth maps to achieve better results, highlighting a growing mastery in combining different AI tools.

- Flux Takes the Lead Over SDXL for Logos: The community widely endorsed Flux over SDXL for logo generation, emphasizing its superior handling of logo specifics without requiring extensive training.

- Members noted that SDXL struggles without familiarity with the logo design, making Flux the favored choice for ease and effectiveness.

- Scamming Awareness on the Rise: Discussion on online scams revealed that even experienced users can be vulnerable, leading to a shared commitment to promote ongoing vigilance.

- Empathetic understanding of scamming behaviors emerged as a key insight, reinforcing that susceptibility isn't limited to the inexperienced.

- Tagging Innovations in ComfyUI: Community insights on tagging features in ComfyUI likened its capabilities to Langflow and Flowise, showcasing its flexibility and user-friendly interface.

- Members brainstormed specific workflows to enhance tagging efficacy, pointing to a promising wave of adaptations in the interface’s functionality.

- Insights Into Forge Extensions: Inquiries into various extensions available in Forge highlighted user efforts to improve experience through contributions and community feedback.

- Polls were referenced as a method for shaping future extension releases, underscoring the importance of quality assurance and community engagement.

Unsloth AI (Daniel Han) Discord

- Congrats on Y Combinator Approval!: Team members celebrated the recent backing from Y Combinator, showcasing strong community support and enthusiasm for the project's future.

- They acknowledged this milestone as a significant boost toward development and outreach.

- Unsloth AI Faces Hardware Compatibility Hurdles: Discussions highlighted Unsloth's current struggles with hardware compatibility, notably concerning CUDA support on Mac systems.

- The team aims for hardware agnosticism, but ongoing issues reduce performance on certain configurations.

- Synthetic Data Generation Model Insights: Insights were shared on employing Mistral 8x7B tunes for synthetic data generation, alongside models like jondurbin/airoboros-34b-3.3 for testing.

- Experimentation remains essential for fine-tuning outcomes based on hardware constraints.

- Phi 3.5 Model Outputs Confuse Users: Users reported frustrating experiences with the Phi 3.5 model returning gibberish outputs during fine-tuning efforts, despite parameter tweaks.

- This prompted a wider discussion on troubleshooting and refining input templates for better model performance.

- Interest Surges for Comparison Reports!: A member expressed eagerness for comparison reports on key topics, emphasizing their potential for insightful reading.

- Parallelly, another member announced plans for a YouTube video detailing these comparisons, showcasing community engagement.

LM Studio Discord

- Seeking Free Image API Options: Users investigated free Image API options that support high limits, specifically inquiring about providers offering access for models like Stable Diffusion.

- Curiosity was sparked around any providers that could accommodate these features at scale.

- Reflection Llama-3.1 70B Gets Enhancements: Reflection Llama-3.1 70B impressed as the top open-source LLM with updates that bolster error detection and correction capabilities.

- However, users noted ongoing performance issues and debated optimal prompts to enhance model behavior.

- LM Studio Download Problems After Update: Post-update to version 0.3.2, users faced challenges downloading models, citing certificate errors as a primary concern.

- Workarounds discussed included adjusting VRAM and context size, while clarifications on the RAG summarization feature were provided.

- Mac Studio Battling Speed with Large Models: Concern arose over Mac Studio's capability with 256GB+ memory being sluggish for larger models, with hopes that LPDDR5X 10.7Gbps** could remedy this.

- One discussion highlighted a 70% speed boost potential across all M4s, igniting further interest in hardware upgrades.

- Maximizing Performance with NVLink and RTX 3090: Users shared insights on achieving 10 to 25 t/s with dual RTX 3090 setups, especially with NVLink, while one reported hitting 50 t/s.

- Despite these high numbers, the actual inference performance impact of NVLink drew skepticism from some community members.

Nous Research AI Discord

- Reflection 70B Model Struggles on Benchmarks: Recent tests revealed that Reflection 70B underperformed in comparisons with the BigCodeBench-Hard, particularly affected by tokenizer and prompt issues.

- The community expressed concerns over evaluations, leading to uncertainty about the model’s reliability in real-world applications.

- Community Investigates DeepSeek v2.5 Usability: Members sought feedback on improvements seen with DeepSeek v2.5 during coding tasks, encouraging a share of user experiences.

- This initiative aims to build a collective understanding of the model's effectiveness and contribute to user-driven enhancements.

- Inquiries on API Usability for Llama 3.1: There was a discussion about optimal API options for implementing Llama 3.1 70B, emphasizing the need for tool call format support.

- Suggestions included exploring various platforms, pointing toward Groq as a promising candidate for deployment.

- Challenges with Quantization Techniques: Users reported setbacks with the FP16 quantization of the 70B model, highlighting struggles in achieving satisfactory performance with int4.

- Ongoing discussions revolved around potential solutions to enhance model performance while maintaining quality integrity.

- MCTS and PRM Techniques for Enhanced Performance: Conversations indicated interest in merging MCTS (Monte Carlo Tree Search) and PRM (Probabilistic Roadmap) to boost training efficiencies.

- The community showed enthusiasm about experimenting with these methodologies for improving model evaluation processes.

Latent Space Discord

- OpenAI Considers $2000 Subscription: OpenAI is exploring a pricing model at $2000/month for its premium AI models, including the upcoming Orion model, stirring accessibility concerns within the community.

- As discussions unfold, opinions vary on whether this pricing aligns with market norms or poses barriers for smaller developers.

- Reflection 70B's Mixed Benchmark Results: The Reflection 70B model has shown mixed performance, scoring 20.3 on the BigCodeBench-Hard benchmark, notably lower than Llama3's score of 28.4.

- Critics emphasize the need for deeper analysis of its methodology, especially regarding its claim of being the top open-source model.

- Speculative Decoding Boosts Inference: Together AI reported that speculative decoding can enhance throughput by up to 2x, challenging previous assumptions about its efficiency in high-latency scenarios.

- This advancement could reshape approaches to optimizing inference speeds for long context inputs.

- Exciting Developments in Text-to-Music Models: A new open-source text-to-music model has emerged, claiming impressive sound quality and efficiency, competing against established platforms like Suno.ai.

- Members are keen on its potential applications, although there are varied opinions regarding its practical usability.

- Exploration of AI Code Editors: Discussion on AI code editors highlights tools like Melty and Pear AI, showcasing unique features compared to Cursor.

- Members are particularly interested in how these tools manage comments and TODOs, pushing for better collaboration in coding environments.

OpenAI Discord

- Perplexity steals spotlight: Users praised Perplexity for its speed and reliability, often considering it a better alternative to ChatGPT Plus subscriptions.

- One user noted it is particularly useful for school as it is accessible and integrated with Arc browser.

- RunwayML faces backlash: A user reported dissatisfaction with RunwayML after a canceled community meetup, which raises concerns about their customer service.

- Comments highlighted the discontent among loyal members and how this affects Runway's reputation.

- Reflection model's promising tweaks: Discussion around the Reflection Llama-3.1 70B model focused on its performance and a new training method called Reflection-Tuning.

- Users noted that initial testing issues led to a platform link where they can experiment with the model.

- OpenAI token giveaway generates buzz: An offer for OpenAI tokens sparked significant interest, as one user had 1,000 tokens they did not plan to use.

- This prompted discussions around potential trading or utilizing these tokens within the community.

- Effective tool call integrations: Members shared tips on structuring tool calls in prompts, emphasizing the correct sequence of the Assistant message followed by the Tool message.

- One member noted finding success with over ten Python tool calls in a single prompt output.

Eleuther Discord

- Securing Academic Lab Roles: Members discussed strategies for obtaining positions in academic labs, emphasizing the effectiveness of project proposals and the lower success of cold emailing.

- One member highlighted the need to align research projects with current trends to grab the attention of potential hosts.

- Universal Transformers Face Feasibility Issues: The feasibility of Universal Transformers was debated, with some members expressing skepticism while others found potential in adaptive implicit compute techniques.

- Despite the promise, stability continues to be a significant barrier for wide adoption in practical applications.

- AdEMAMix Optimizer Improves Gradient Handling: The newly proposed AdEMAMix optimizer enhances gradient utilization by blending two Exponential Moving Averages, showing better performance in tasks like language modeling.

- Early experiments indicate this approach outperforms the traditional single EMA method, promising more efficient training outcomes.

- Automated Reinforcement Learning Agent Architecture: A new automated RL agent architecture was introduced, efficiently managing experiment progress and building curricula through a Vision-Language Model.

- This marks one of the first complete automations in reinforcement learning experiment workflows, breaking new ground in model training efficiency.

- Hugging Face RoPE Compatibility Concerns: A member raised questions regarding compatibility between the Hugging Face RoPE implementation for GPTNeoX and other models, noting over 95% discrepancies in attention outputs.

- This raises important considerations for those working with multiple frameworks and might influence future integration efforts.

OpenInterpreter Discord

- Open Interpreter celebrates a milestone: Members enthusiastically celebrated the birthday of Open Interpreter, with a strong community sentiment expressing appreciation for its innovative potential.

- Happy Birthday, Open Interpreter! became the chant, emphasizing the excitement felt around its capabilities.

- Skills functionality in Open Interpreter is still experimental: Discussion revealed that the skills feature is currently experimental, prompting questions about whether these skills persist across sessions.

- Users noted that skills appear to be temporary, which led to suggestions to investigate the storage location on local machines.

- Positive feedback on 01 app performance: Users shared enthusiastic feedback about the 01 app's ability to efficiently search and play songs from a library of 2,000 audio files.

- Despite praise, there were reports of inconsistencies in results, reflecting typical early access challenges.

- Fulcra app expands to new territories: The Fulcra app has officially launched in several more regions, responding to community requests for improved accessibility.

- Discussions indicated user interest in availability across locations such as Australia, rallying support for further expansion.

- Request for Beta Role Access: Multiple users are eager to get access to the beta role for desktop, including one who contributed to the dev kit for Open Interpreter 01.

- A user expressed their disappointment at missing a live session, asking, 'Any way to get access to the beta role for desktop?'

Modular (Mojo 🔥) Discord

- Mojo Values Page Returns 404: Members noted that Modular's values page is currently showing a 404 error at this link and may need redirection to company culture.

- Clarifications suggested that changes were required for the link to effectively point users to the relevant content.

- Async Functions Limitations in Mojo: A user faced issues using

async fnandasync def, revealing these async features are exclusive to nightly builds, causing confusion in stable versions.- Users were advised to check their version and consider switching to the nightly build to access these features.

- DType Constraints as Dict Keys: Discussion sparked over the inability to use

DTypeas a key in Dictionaries, raising eyebrows since it implements theKeyElementtrait.- Participants explored the design constraints within Mojo’s data structures that might limit the use of certain types.

- Constructor Usage Troubleshoot: Progress was shared on resolving constructor issues involving

Arc[T, True]andWeak[T], highlighting challenges with @parameter guards.- Suggestions included improving naming conventions within the standard library for better clarity and aligning structure of types.

- Exploring MLIR and IR Generation: Interest was piqued on how MLIR can be utilized more effectively in Mojo, especially regarding IR generation.

- A resource from a previous LLVM meeting was suggested, 2023 LLVM Dev Mtg - Mojo 🔥, to gain deeper insights on integration.

CUDA MODE Discord

- Reflection 70B launches with exciting features: The Reflection 70B model has been launched as the world’s best open-source model, utilizing Reflection-Tuning to correct LLM errors.

- A 405B model is expected next week, possibly surpassing all current models in performance.

- Investigating TorchDynamo cache lookup delays: When executing large models, members noted 600us spent in TorchDynamo Cache Lookup, mainly due to calls from

torch/nn/modules/container.py.- This points to potential optimizations required in the cache lookup process to improve model training runtime.

- NVIDIA teams up for generative AI education: The Deep Learning Institute from NVIDIA released a generative AI teaching kit in collaboration with Dartmouth College to enhance GPU learning.

- Participants will gain a competitive edge in AI applications, bridging essential knowledge gaps.

- FP16 x INT8 Matmul shows limits on batch sizes: The FP16 x INT8 matmul on the 4090 RTX fails when batch sizes exceed 1 due to shared memory limitations, hinting at a need for better tuning for non-A100 GPUs.

- Users experienced substantial slowdowns with enabled inductor flags yet could bypass errors by switching them off.

- Liger's performance benchmarks raise eyebrows: The performance of Liger's swiglu kernels was contrasted against Together AI's benchmarks, which reportedly offer up to 24% speedup.

- Their specialized kernels outperform cuBLAS and PyTorch eager mode by 22-24%, indicating the need for further tuning options.

Interconnects (Nathan Lambert) Discord

- Reflection Llama-3.1 70B yields mixed performance: The newly released Reflection Llama-3.1 70B claims to be the leading open-source model yet struggles significantly on benchmarks like BigCodeBench-Hard.

- Users observed a drop in performance for reasoning tasks and described the model as a 'non news item meh model' on Twitter.

- Concerns linger over Glaive's synthetic data: Community members raised alarms about the effectiveness of synthetic data from Glaive, recalling issues from past contaminations that might impact model performance.

- These concerns led to discussions about the implications of synthetic data on the Reflection Llama model's generalization capabilities.

- HuggingFace Numina praised for research: HuggingFace Numina was highlighted as a powerful resource for data-centric tasks, unleashing excitement among researchers for its application potential.

- Users expressed enthusiasm about how it could enhance efficiency and innovation in various ongoing projects.

- Introduction of CHAMP benchmark for math reasoning: The community welcomed the new CHAMP benchmark aimed at assessing LLMs' mathematical reasoning abilities through annotated problems that provide hints.

- This dataset will explore how additional context aids in problem-solving under complex conditions, promoting further study in this area.

- Reliability issues of Fireworks and Together: Discussions unveiled that both Fireworks and Together are viewed as less than 100% reliable, prompting the implementation of failovers to maintain functionality.

- Users are cautious about utilizing these tools until assurances of reliability are fortified.

Perplexity AI Discord

- Tech Entry Without Skills: A member expressed eagerness to enter the tech industry without technical skills, seeking advice on building a compelling CV and effective networking.

- Another member mentioned starting cybersecurity training through PerScholas, underscoring a growing interest in coding and AI.

- Bing Copilot vs. Perplexity AI: A user compared Bing Copilot's ability to provide 5 sources with inline images to Perplexity's capabilities, suggesting improvements.

- They hinted that integrating hover preview cards for citations could be a valuable enhancement for Perplexity.

- Perplexity AI's Referral Program: Perplexity is rolling out a merch referral program specifically targeted at students, encouraging sharing for rewards.

- A question arose about the availability of a year of free access, particularly for the first 500 sign-ups.

- Web3 Job Openings: A post highlighted job openings in a Web3 innovation team, looking for beta testers, developers, and UI/UX designers.

- They invite applications and proposals to create mutual cooperation opportunities as part of their vision.

- Sutskever's SSI Secures $1B: Sutskever's SSI successfully raised $1 billion to boost advancements in AI technology.

- This funding aims to fuel further innovations in the AI sector.

tinygrad (George Hotz) Discord

- Bounty Exploration Sparks Interest: A user expressed interest in trying out a bounty and sought guidance, referencing a resource on asking smart questions.

- This led to a humorous acknowledgment from another member, highlighting community engagement in bounty discussions.

- Tinygrad Pricing Hits Zero: In a surprising twist, georgehotz confirmed the pricing for a 4090 + 500GB plan has been dropped to $0, but only for tinygrad friends.

- This prompted r5q0 to inquire about the criteria for friendship, adding a light-hearted element to the conversation.

- Clarifying PHI Operation Confusion: Members discussed the PHI operation's functionality in IR, noting its unusual placement compared to LLVM IR, especially in loops.

- One member suggested renaming it to ASSIGN as it operates differently from traditional phi nodes, aiming to clear up misunderstandings.

- Understanding MultiLazyBuffer's Features: A user raised concerns about the

MultiLazyBuffer.realproperty and its role in shrinking and copying to device interactions.- This inquiry led to discussions revealing that it signifies real lazy buffers on devices and potential bugs in configurations.

- Views and Memory Challenges: Members expressed ongoing confusion regarding the realization of views in the

_recurse_lbfunction, questioning optimization and utilization balance.- This reflection underscores the need for clarity on foundational tensor view concepts, inviting community input to refine understanding.

Torchtune Discord

- Gemma 2 model resources shared: Members discussed the Gemma 2 model card, providing links to technical documentation from Google's lightweight model family.

- Resources included a Responsible Generative AI Toolkit and links to Kaggle and Vertex Model Garden, emphasizing ethical AI practices.

- Multimodal models and causal masks: A member outlined challenges with causal masks during inference for multimodal setups, focusing on fixed sequence lengths.

- They noted that exposing these variables through attention layers is crucial to tackle this issue effectively.

- Expecting speedups with Flex Attention: There is optimism that flex attention with document masking will significantly enhance performance, achieving 40% speedup on A100 and 70% on 4090.

- This would improve dynamic sequence length training while minimizing padding inefficiencies.

- Questions arise on TransformerDecoder design: A member asked whether a TransformerDecoder could operate without self-attention layers, challenging its traditional structure.

- Another pointed out that the original transformer utilized both cross and self-attention, complicating this deviation.

- PR updates signal generation overhaul: Members confirmed that GitHub PR #1449 has been updated to enhance compatibility with

encoder_max_seq_lenandencoder_mask, with testing still pending.- This update paves the way for further modifications to generation utils and integration with PPO.

LlamaIndex Discord

- Llama-deploy Offers Microservices Magic: The new llama-deploy system enhances deployment for microservices based on LlamaIndex Workflows. This opens up opportunities to streamline agentic systems similar to previous iterations of llama-agents.

- An example shared in the community demonstrates full-stack capabilities using llama-deploy with @getreflex, showcasing how to effectively build agentic chat systems.

- PandasQueryEngine Faces Column Name Confusion: Users reported that PandasQueryEngine struggles to correctly identify the column

averageRating, often reverting to incorrect labels during chats. Suggestions included verifying mappings within the chat engine's context.- This confusion could lead to deeper issues in data integrity when integrating engine responses with expected output formats.

- Developing Customer Support Bots with RAG: A user is exploring ways to create a customer support chatbot that efficiently integrates a conversation engine with retrieval-augmented generation (RAG). Members emphasized the synergy between chat and query engines for stronger data retrieval capabilities.

- Validating this integration could enhance user experience in real-world applications where effective support is crucial.

- NeptuneDatabaseGraphStore Bug Reported: Concerns arose regarding a bug in NeptuneDatabaseGraphStore.get_schema() that misses date information in graph summaries. It is suspected the issue may be related to schema parsing errors with LLMs.

- Community members expressed the need for further investigation, especially surrounding the

datetimepackage’s role in the malfunction.

- Community members expressed the need for further investigation, especially surrounding the

- Azure LlamaIndex and Cohere Reranker Inquiry: A discussion emerged about integrating the Cohere reranker as a postprocessor within Azure's LlamaIndex. Members confirmed that while no Azure module exists currently, creating one is feasible due to straightforward documentation.

- The community is encouraged to consider building this integration as it could significantly enhance processing capabilities within Azure environments.

OpenAccess AI Collective (axolotl) Discord

- Reflection Llama-3.1: Top LLM Redefined: Reflection Llama-3.1 70B is now acclaimed as the leading open-source LLM, enhanced through Reflection-Tuning for improved reasoning accuracy.

- Synthetic Dataset Generation for Fast Results: Discussion focused on the rapid generation of the synthetic dataset for Reflection Llama-3.1, sparking curiosity about human rater involvement and sample size.

- Members debated the balance between speed and quality in synthetic dataset creation.

- Challenge Accepted: Fine-tuning Llama 3.1: Members raised queries regarding effective fine-tuning techniques for Llama 3.1, noting its performance boost at 8k sequence length with possible extension to 128k using rope scaling.

- Concerns about fine-tuning complexities arose, suggesting the need for custom token strategies for optimal performance.

- SmileyLlama is Here: Meet the Chemical Language Model: SmileyLlama stands out as a fine-tuned Chemical Language Model designed for molecule creation based on specified properties.

- This model, marked as an SFT+DPO implementation, showcases Axolotl's prowess in specialized model adaptations.

- GPU Power: Lora Finetuning Insights: Inquiries about A100 80 GB GPUs for fine-tuning Meta-Llama-3.1-405B-BNB-NF4-BF16 in 4 bit using adamw_bnb_8bit, underscored the resource requirements for effective Lora finetuning.

- This points to practical considerations essential for managing Lora finetuning processes efficiently.

Cohere Discord

- Explore Cohere's Capabilities and Cookbooks: Members discussed checking out the channel dedicated to capabilities and demos where the community shares projects built using Cohere models, referencing a comprehensive cookbook that provides ready-made guides.

- One member highlighted that these cookbooks showcase best practices for leveraging Cohere's generative AI platform.

- Understanding Token Usage with Anthropic Library: A member inquired about using the Anthropic library, sharing a code snippet for calculating token usage:

message = client.messages.create(...).- They directed others to the GitHub repository for the Anthropic SDK to further explore tokenization.

- Embed-Multilingual-Light-V3.0 Availability on Azure: A member questioned the availability of

embed-multilingual-light-v3.0on Azure and asked if there are any plans to support it.- This inquiry reflects ongoing interest in the integration of Cohere's resources with popular cloud platforms.

- Query on RAG Citations: A member asked how citations will affect the content of text files when using RAG with an external knowledge base, specifically inquiring about receiving citations when they are currently getting None.

- They expressed urgency in figuring out how to resolve the issue regarding the absence of citations in the responses from text files.

DSPy Discord

- Chroma DB Setup Simplified: A member pointed out that launching a server for Chroma DB requires just one line of code:

!chroma run --host localhost --port 8000 --path ./ChomaM/my_chroma_db1, noting the ease of setup.- They felt relieved knowing the database location with such simplicity.

- Weaviate Setup Inquiry: The same member asked if there’s a simple setup for Weaviate similar to Chroma DB, avoiding Go Docker complexities.

- They expressed a need for ease due to their non-technical background.

- Jupyter Notebooks for Server-Client Communication: Another member shared their use of two Jupyter notebooks to run a server and client separately, highlighting it fits their needs.

- They identify as a Biologist and seek uncomplicated solutions.

- Reflection 70B Takes the Crown: Reflection 70B has been announced as the leading open-source model, featuring Reflection-Tuning to enable the model to rectify its own errors.

- A new model, 405B, is on its way next week promising even better performance.

- Enhancing LLM Routing with Pricing: Discussion emerged around routing appropriate LLMs based on queries, intending to incorporate aspects like pricing and TPU speed into the logic.

- Participants noted that while routing LLMs is clear-cut, enhancing it with performance metrics can refine the selection process.

LAION Discord

- SwarmUI Usability Concerns: Members expressed discomfort with user interfaces showcasing 100 nodes compared to SwarmUI, reinforcing its usability issues.

- Discussion highlighted how labeling it as 'literally SwarmUI' reflected a broader concern about UI complexity among tools.

- SwarmUI Modular Design on GitHub: A link to SwarmUI on GitHub was shared, featuring its focus on modular design for better accessibility and performance.

- The repository emphasizes offering easy access to powertools, enhancing usability through a well-structured interface.

- Reflection 70B Debut as Open-Source Leader: The launch of Reflection 70B has been announced as the premier open-source model using Reflection-Tuning, enabling LLMs to self-correct.

- A 405B model is anticipated next week, raising eyebrows about its potential to crush existing benchmark performances.

- Self-Correcting LLMs Make Waves: New discussions emerged around an LLM capable of self-correction that reportedly outperforms GPT-4o in all benchmarks, including MMLU.

- The open-source nature of this model, surpassing Llama 3.1's 405B, signifies a major leap in LLM functionality.

- Lucidrains Reworks Transfusion Model: Lucidrains has shared a GitHub implementation of the Transfusion model, optimizing next token prediction while diffusing images.

- Future extensions may integrate flow matching and audio/video processing, indicating strong multi-modal capabilities.

LangChain AI Discord

- ReAct Agent Deployment Challenges: A member struggles with deploying their ReAct agent on GCP via FastAPI, facing issues with the local SQLite database disappearing upon redeploy. They seek alternatives for Postgres or MySQL as a replacement for

SqliteSaver.- The member is willing to share their local implementation for reference, hoping to find a collaborative solution.

- Clarifying LangChain Callbacks Usage: Discussion emerged on the accuracy of the syntax

chain = prompt | llm, referencing LangChain's callback documentation. Members noted that the documentation appears outdated, particularly with updates in version 0.2.- The conversation underscored the utility of callbacks for logging, monitoring, and third-party tool integration.

- Cerebras and LangChain Collaboration Inquiry: A member inquired about usage of Cerebras alongside LangChain, seeking collaborative insights from others. Responses indicated interest but no specific experiences or solutions were shared.

- This topic remains open for further exploration within the community.

- Decoding .astream_events Dilemma: Members discussed the lack of references for decoding streams from .astream_events(), with one sharing frustration over manually serializing events. The conversation conveyed a desire for better resources and solutions.

- The tedious process highlighted the need for collaboration and resource sharing in the community.

LLM Finetuning (Hamel + Dan) Discord

- Enhancing RAG with Limited Hardware: A member sought strategies to upgrade their RAG system using llama3-8b with 4bit quantization along with the BAAI/bge-small-en-v1.5 embedding model while working with a restrictive 4090 GPU.

- Seeking resources for better implementation, they expressed hardware constraints, highlighting the need for efficient practices.

- Maximizing GPU Potential with Larger Models: In response, another member suggested that a 4090 can concurrently run larger embedding models, indicating that the 3.1 version might also enhance performance.

- They provided a GitHub example showcasing hybrid search integration involving bge & bm25 on Milvus.

- Leveraging Metadata for Better Reranking: The chat underscored the critical role of metadata for each chunk, suggesting it could improve the sorting and filtering of returned results.

- Implementing a reranker, they argued, could significantly enhance the output quality for user searches.

Gorilla LLM (Berkeley Function Calling) Discord

- XLAM System Prompt Sparks Curiosity: A member pointed out that the system prompt for XLAM is unique compared to other OSS models and questioned the rationale behind this design choice.

- Discussion revealed an interest in whether these differences stem from functionality or licensing considerations.

- Testing API Servers Needs Guidance: A user sought effective methods for testing their own API server but received no specific documentation in reply.

- This gap in shared resources highlights a potential area for growth in community support and knowledge sharing.

- How to Add Models to the Leaderboard: A user inquired about the process for adding new models to the Gorilla leaderboard, prompting a response with relevant guidelines.

- Access the contribution details on the GitHub page to understand how to facilitate model inclusion.

- Gorilla Leaderboard Resource Highlighted: Members discussed the Gorilla: Training and Evaluating LLMs for Function Calls GitHub resource that outlines the leaderboard contributions.

- An image from its repository was also shared, illustrating the guidelines available for users interested in participation at GitHub.

Alignment Lab AI Discord

- Greetings from Knut09896: Knut09896 stepped into the channel and said hello, sparking welcome interactions.

- This simple greeting hints at the ongoing engagement within the Alignment Lab AI community.

- Channel Activity Buzz: The activity level in the #general channel appears vibrant with members casually chatting and introducing themselves.

- Such interactions play a vital role in fostering community connections and collaborative discussions.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!