[AINews] Qwen 2 beats Llama 3 (and we don't know how)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Another model release with no dataset details.

AI News for 6/5/2024-6/6/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (408 channels, and 2450 messages) for you. Estimated reading time saved (at 200wpm): 304 minutes.

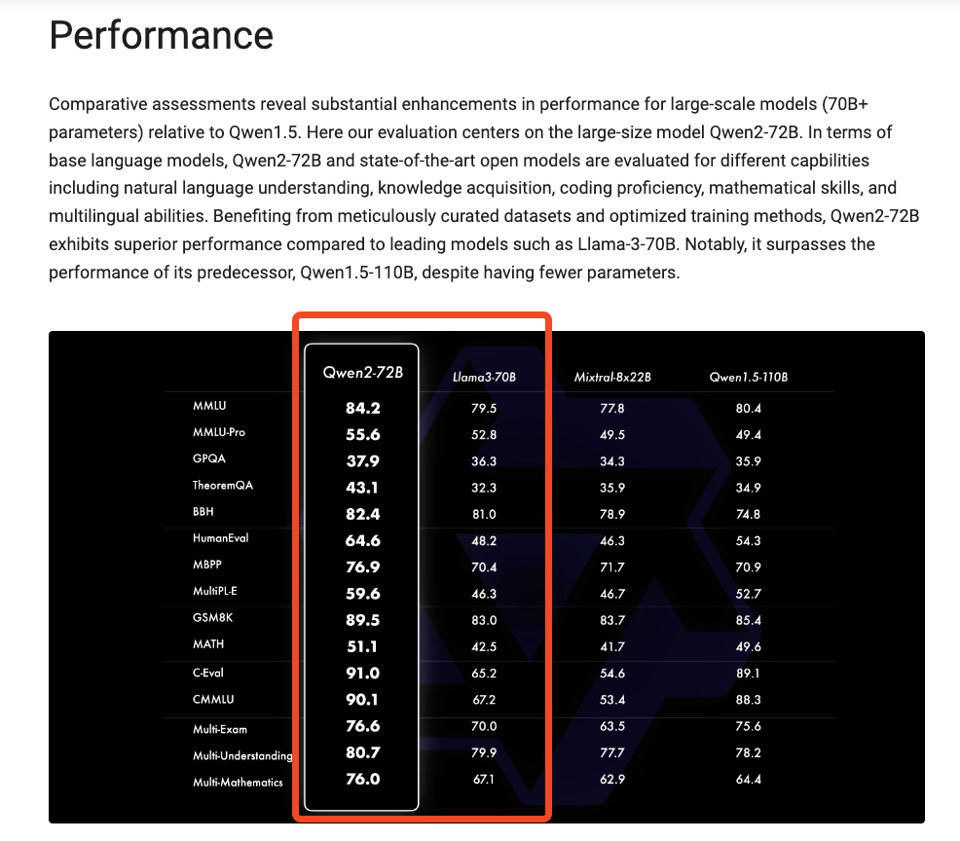

With Qwen 2 being Apache 2.0, Alibaba is now claiming to universally beat Llama 3 for the open models crown:

There are zero details on dataset so it's hard to get any idea of how they pulled this off, but they do drop some hints on post-training:

Our post-training phase is designed with the principle of scalable training with minimal human annotation.

Specifically, we investigate how to obtain high-quality, reliable, diverse and creative demonstration data and preference data with various automated alignment strategies, such as

- rejection sampling for math,

- execution feedback for coding and

- instruction-following, back-translation for creative writing,

- scalable oversight for role-play, etc.

These collective efforts have significantly boosted the capabilities and intelligence of our models, as illustrated in the following table.

They also published a post on Generalizing an LLM from 8k to 1M Context using Qwen-Agent.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3 Opus, best of 4 runs. We are working on clustering and flow engineering with Haiku.

Qwen2 Open-Source LLM Release

- Qwen2 models released: @huybery announced the release of Qwen2 models in 5 sizes (0.5B, 1.5B, 7B, 57B-14B MoE, 72B) as Base & Instruct versions. Models are multilingual in 29 languages and achieve SOTA performance on academic and chat benchmarks. Released under Apache 2.0 except 72B.

- Performance highlights: @_philschmid noted Qwen2-72B achieved MMLU 82.3, IFEval 77.6, MT-Bench 9.12, HumanEval 86.0. Qwen2-7B achieved MMLU 70.5, MT-Bench 8.41, HumanEval 79.9. On MMLU-PRO, Qwen2 scored 64.4, outperforming Llama 3's 56.2.

- Multilingual capabilities: @huybery highlighted Qwen2-7B-Instruct's strong multilingual performance. The models are trained in 29 languages including European, Middle East and Asian languages according to @_philschmid.

Groq's Inference Speed on Large LLMs

- Llama-3 70B Tokens/s: @JonathanRoss321 reported Groq achieved 40,792 tokens/s input rate on Llama-3 70B using FP16 multiply and FP32 accumulate over the full 7989 token context length.

- Llama 70B in 200ms: @awnihannun put the achievement in perspective, noting Groq ran Llama 70B on ~4 Wikipedia articles in 200 milliseconds, which is about the blink of an eye, with 16-bit precision and 32-bit accumulation (lossless).

Sparse Autoencoder Training Methods for GPT-4 Interpretability

- Improved SAE training: @gdb shared a paper on improved methods for training sparse autoencoders (SAEs) at scale to interpret GPT-4's neural activity.

- New training stack and metrics: @nabla_theta introduced a SOTA training stack for SAEs and trained a 16M latent SAE on GPT-4 to demonstrate scaling. They also proposed new SAE metrics beyond MSE/L0 loss.

- Scaling laws and metrics: @nabla_theta found clean scaling laws with autoencoder latent count, sparsity, and compute. Larger subject models have shallower scaling law exponents. Metrics like downstream loss, probe loss, ablation sparsity and explainability were explored.

Meta's No Language Left Behind (NLLB) Model

- NLLB model details: @AIatMeta announced the NLLB model, published in Nature, which can deliver high-quality translations directly between 200 languages, including low-resource ones.

- Significance of the work: @ylecun noted NLLB's ability to provide high-quality translation between 200 languages in any direction, with sparse training data, and for many low-resource languages.

Pika AI's Series B Funding

- $80M Series B: @demi_guo_ announced Pika AI's $80M Series B led by Spark Capital. Guo expressed gratitude to investors and team members.

- Hiring and future plans: @demi_guo_ reflected on the past year's progress and teased updates coming later in the year. Pika AI is looking for talent across research, engineering, product, design and ops (link).

Other Noteworthy Developments

- Anthropic's elections integrity efforts: @AnthropicAI published details on their processes for testing and mitigating elections-related risks. They also shared samples of the evaluations used to test their models.

- Cohere's startup program launch: @cohere launched a startup program to support early-stage companies solving real-world business challenges with AI. Participants get discounted access, technical support and marketing exposure. Cohere also released a library of cookbooks for enterprise-grade frontier models for applications like agents, RAG and semantic search (link).

- Prometheus-2 for RAG evaluation: @llama_index introduced Prometheus-2, an open-source LLM for evaluating RAG applications as an alternative to GPT-4. It can process direct assessment, pairwise ranking and custom criteria.

- LangChain x Groq integration: @LangChainAI announced an upcoming webinar on building LLM agent apps with LangChain and Groq's integration.

- Databutton AI engineer platform: @svpino shared that Databutton launched an AI software engineer platform to help build applications with React frontends and Python backends based on a business idea.

- Microsoft's Copilot+ PCs: @DeepLearningAI reported on Microsoft's launch of Copilot+ PCs with AI-first specs featuring generative models and search capabilities, with the first machines using Qualcomm Snapdragon chips.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

LLM Developments and Applications

- Open-source RAG application: In /r/LocalLLaMA, user open-sourced LARS, a RAG-centric LLM application that generates responses with detailed citations from documents. It supports various file formats, has conversation memory, and allows customization of LLMs and embeddings.

- Favorite open-source LLMs: In /r/LocalLLaMA, users shared their go-to open-source LLMs for different use cases, including Command-R for RAG on small-medium document sets, LLAVA 34b v1.6 for vision tasks, Llama3-gradient-70b for complex questions on large corpora, and more.

- AI business models: In /r/LocalLLaMA, a user questioned how much original work vs leveraging existing LLM APIs new AI businesses are actually doing, as many seem to just wrap OpenAI's API for specific domains.

- Querying many small files with LLMs: In /r/LocalLLaMA, a user sought advice on feeding 200+ small wiki files into an LLM while preserving relationships between them, as embeddings and RAG have been hit-or-miss. Proper LLM training or LoRA are being considered.

- Desktop app for local LLM API: In /r/LocalLLaMA, a user created a desktop app to interact with LMStudio's API server on their local machine and is gauging interest in releasing it for free.

- Educational platform for LLMs: In /r/LocalLLaMA, Open Engineer was announced, a free educational resource covering topics like LLM fine-tuning, quantization, embeddings and more to make LLMs accessible to software engineers.

- LLM assistant for database operations: In /r/LocalLLaMA, a user shared their experience integrating an LLM assistant into software for database CRUD and order validation, considering using the surrounding system to supplement the LLM with product lookups for more robust order processing.

AI Developments and Concerns

- Speculation on superintelligence development: In /r/singularity, a user speculated that major AI labs, chip companies and government agencies are likely already coordinating on superintelligence development behind the scenes, similar to the Manhattan Project, and that the US government is probably forecasting and preparing for the implications.

- Questions around UBI implementation: In /r/singularity, a user expressed frustration at the lack of concrete plans or frameworks for implementing UBI despite it being seen as a solution to AI-driven job displacement, raising questions about funding, population growth impacts, and more that need to be addressed.

- Risks of open-source AGI misuse: In /r/singularity, a user asked how the open-source community will prevent powerful open-source AGI from being misused by bad actors to cause harm, given the lack of safeguards, arguing the "it's the same as googling it" counterargument is oversimplified.

- Controlling ASI: In /r/singularity, a user questioned the belief that ASI could be controlled by humans or militaries given its superior intelligence, with commenters agreeing it's unlikely and attempts at domination are misguided.

AI Assistants and Interfaces

- Chat mode as voice data harvesting: In /r/singularity, a user speculated OpenAI's focus on chat mode is a strategic move to collect high-quality, natural voice data to overcome the limitations of text data for AI training, as voice represents a continuous stream of consciousness closer to human thought processes.

- Unusual ChatGPT use cases: In /r/OpenAI, users shared unusual things they use ChatGPT for, including generating overly dramatic plant watering reminders, converting cooking instructions from oven to air fryer, and mapping shopping list items to store aisles.

- Need for an "AI shell" protocol: In /r/OpenAI, a user envisioned the need for a standardized "AI shell" protocol to allow AI agents to easily interface with and control various devices, similar to SSH or RDP, as existing protocols may not be sufficient.

AI Content Generation

- Evolving views on AI music: In /r/singularity, a user shared their evolving perspective on AI music, seeing it as well-suited for generic YouTube background music due to aggressive restrictions on mainstream music, and asked for others' thoughts.

- AI-generated post-rock playlist: In /r/singularity, a user shared a 30-minute AI-generated post-rock playlist on YouTube featuring immersive tracks made with UDIO, receiving positive feedback about forgetting it's AI music.

- AI in VFX project: In /r/singularity, a user shared a VFX project incorporating AI to create urban aesthetics inspired by ad displays, using procedural systems, image compositing, and layered AnimateDiffs, with CG elements processed individually and integrated.

AI Discord Recap

A summary of Summaries of Summaries

-

LLM and Model Performance Innovations:

- Qwen2 Attracts Significant Attention with models ranging from 0.5B to 7B parameters, appreciated for their ease of use and rapid iteration capabilities, supporting innovative applications with 128K token contexts.

- Stable Audio Open 1.0 Generates Interest leveraging components like autoencoders and diffusion models, as detailed on Hugging Face, raising community engagement in custom audio generation workflows.

- ESPNet Competitive Benchmarks Shared for Efficient Transformer Inference: Discussions around the newly released ESPNet showed promising transformer efficiency, pointing towards enhanced throughput on high-end GPUs (H100), as documented in the ESPNet Paper.

- Seq1F1B Promotes Efficient Long-Sequence Training: The pipeline scheduling method introduces significant memory savings and performance gains for LLMs, as per the arxiv publication.

-

Fine-tuning and Prompt Engineering Challenges:

- Model Fine-tuning Innovations: Fine-tuning discussions highlight the use of gradient accumulation to manage memory constraints, and custom pipelines such as using

FastLanguageModel.for_inferencefor Alpaca-style prompts, as demonstrated in a Google Colab notebook.

- Chatbot Query Generation Issues: Debugging Cypher queries using Mistral 7B emphasized the importance of systematic evaluation and iterative tuning methods in successful model training.

- Adapter Integration Pitfalls: Critical challenges with integrating trained adapters pointed to a need for more efficient adapter loading techniques to maintain performance, supported by practical coding experiences.

- Model Fine-tuning Innovations: Fine-tuning discussions highlight the use of gradient accumulation to manage memory constraints, and custom pipelines such as using

-

Open-Source AI Developments and Collaborations:

- Prometheus-2 Evaluates RAG Apps: Prometheus-2 offers an open-source alternative to GPT-4 for evaluating RAG applications, valued for its affordability and transparency, detailed on LlamaIndex.

- Launch of OpenDevin sparks collaboration interest, featuring a robust AI system for autonomous engineering developed by Cognition, with documentation available via webinar and GitHub.

- Gradient Accumulation Strategies Improve Training: Discussions on Unsloth AI emphasized using gradient accumulation to handle memory constraints effectively, reducing training times highlighted by shared YouTube tutorials.

- Mojo Rising as a Backend Framework: Developers shared positive experiences using Mojo for HTTP server development, depicting its advantages in static typing and compile-time computation features on GitHub.

-

Deployment, Inference, and API Integrations:

- Perplexity Pro Enhances Search Abilities: The recent update added step-by-step search processes via an intent system, enabling more agentic execution, as discussed within the community around Perplexity Labs.

- Discussion on Modal's Deployment and Privacy: Queries about using Modal for LLM deployments included concerns about its fine-tuning stack and privacy policies, with additional support provided through Modal Labs documentation.

- OpenRouter Technical Insights and Limits: Users explored technical specifications and capabilities, including assistant message prefill support and handling function calls through Instructor tool.

-

AI Community Discussions and Events:

- Stable Diffusion 3 Speculation: Community buzz surrounds the anticipated release, with speculation about features and timelines, as detailed in various Reddit threads.

- Human Feedback Foundation Event on June 11: Upcoming discussions on integrating human feedback into AI, featuring speakers from Stanford and OpenAI with recordings available on their YouTube channel.

- Qwen2 Model Launches with Overwhelming Support: Garnering excitement for its multilingual capabilities and enhanced benchmarks, the release on platforms like Hugging Face highlights its practical evaluations.

- Call for JSON Schema Support in Mozilla AI: Requests for JSON schema inclusion in the next version to ease application development were prominently noted in community channels.

- Keynote on Robotics AI and Foundation Models: Investment interests in "ChatGPT for Robotics" amid foundation model companies underscore the strategic alignment detailed in Newcomer's article.

PART 1: High level Discord summaries

LLM Finetuning (Hamel + Dan) Discord

- Web Scraping Tool Talk: Engineers swapped notes on OSS scraping platforms like Beautiful Soup and Scrapy, with a nod to Trafilatura for its proficiency in extracting dynamic content from tricky sources like job postings and SEC filings, citing its documentation.

- New UI Kid on the Block: Google's new Mesop is stirring conversations for its potential in crafting UIs for internal tools, stepping into the domain of Gradio and Streamlit, despite lacking advanced authentication - curiosity piqued with a glance at Mesop's homepage.

- Query Crafting Challenges: Engineers debugged generating Cypher queries with Mistral 7B, emphasizing the importance of systematic evals, test-driven development, and the iterative process—a testament to the nitty-gritty of model fine-tuning.

- Diving into Deployment: Questions swirled about Modal's usage, including its fine-tuning stack complexity and privacy policy—a reference to Modal Labs query for policy seekers, and a nod to their Dreambooth example for practical enlightenment.

- CUDA Compatibility Quirks: The compatibility quirks between CUDA versions took the spotlight as engineers faced issues installing the

xformersmodule—a pointer to Jarvis Labs documentation on updating CUDA came to the rescue.

OpenAI Discord

- GPT-3's Programming Puzzle: While GPT models like GPT-3 are adept at assisting with programming, limitations become apparent with highly specific and complex questions, signaling a push against their current capabilities.

- Logical Loophes with Math Equations: Even simple logical tasks, such as correcting a math equation, can stumble GPT models, revealing gaps in basic logical reasoning.

- Eagerly Awaiting GPT-4o's Special Features: Discussions anticipate GPT-4o updates, with voice and real-time vision features to be initially available to ChatGPT Plus users in weeks, and wider access later, as suggested by OpenAI's official update.

- DALL-E's Text Troubles: Users are sharing workarounds for DALL-E's struggles with generating logos that contain precise text, including prompts to iteratively refine text accuracy and a useful custom GPT prompt.

- 7b and Vision Model Synergy: An integration success story where the 7b model harmonizes well with the llava-v1.6-mistral-7b vision model, expanding possibilities for model collaboration.

Unsloth AI (Daniel Han) Discord

Gradient Accumulation to the Rescue: Engineers agreed that gradient accumulation can alleviate memory constraints and improve training times, but warned of potential pitfalls with larger batch sizes due to unexpected memory allocation behaviors.

Tackling Inferential Velocity with Alpacas: An engineer shared a code snippet leveraging FastLanguageModel.for_inference to utilize Alpaca-style prompts for sequence generation in LLMs, which sparked interest alongside discussions about a shared Google Colab notebook.

Adapter Merging Mayday: Challenges with integrating trained adapters causing significant dips in performance led to calls for more efficient adapter loading techniques to maintain training efficiency.

Qwen2 Models Catch Engineers' Eyes: Excitement bubbles over the release of Qwen2 models, with engineers keen on the smaller-sized models ranging from 0.5B to 7B for their ease of use and faster iteration capabilities.

Quest for Solutions in the Help Depot: Conversations in the help channel emphasized a need for a VRAM-saving lora-adapter file conversion process, quick intel on a bug potentially slowing down inference, strategies for mitigating GPU memory overloads, and clarifications on running gguf models and implementing a RAG system, referenced to Mistral documentation.

Stability.ai (Stable Diffusion) Discord

- Stable Audio Open 1.0 Hits the Stage: Stable Audio Open 1.0 garners community interest for its innovative components, including the autoencoder and diffusion model, with members experimenting with tacotron2, musicgen, and audiogen within custom audio generation workflows.

- Size Matters in Stable Diffusion: Users recommend generating larger resolution images in Stable Diffusion before downsizing as an effective workaround for the random color issue plaguing small resolution (160x90) image outputs.

- ControlNet Sketches Success: ControlNet emerges as a preferred solution among members for converting sketches to realistic images without retaining unwanted colors, providing better control over the final composition and image details.

- CivitAI Content Quality Control Called Into Question: A surge in non-relevant content on CivitAI has led to calls for enhanced filtering capabilities to better curate quality models and maintain user experience.

- Stable Diffusion 3 Awaits Clarification: Despite rampant speculation within the community, the release date and details surrounding Stable Diffusion 3 remain nebulous, with some members referencing a Reddit post for tentative answers.

LM Studio Discord

- Taming the RAM Beast in LM Studio: Users discussed strategies to limit RAM usage in LM Studio, suggesting approaches like loading and unloading models during use; a detailed method can be found in the llamacpp documentation. While not a built-in feature in LM Studio, such tactics are employed to enable models to utilize RAM only when active despite efficiency losses.

- Nomic Embed Models Step into the Limelight: The discussion elevated Nomic Embed Text models to multimodal status, thanks to nomic-embed-vision-v1.5, setting them apart with impressive benchmark performances. The AI community within the server also noted Q6 quant models of Llama-3 as a balance of quality and performance.

- Jostling for the Perfect Configuration: A user raised an issue about model settings resetting when initiating new chats, and discovered that retaining old chats could be a simple fix. The conversation also touched on

use_mlockand--no-mmapsettings in LM Studio, affecting stability during 8B model operations, emphasizing operating system-dependent subtleties.

- Unlocking the Potential of Hardware Synergy for AI: Engineers entered into a hearty debate on Nvidia’s proprietary drivers versus AMD’s open-source approach, highlighting implications for systems administration and security. Additionally, there was excitement about the promises of new Qualcomm chips and caution against judging ARM CPUs solely by synthetic benchmarks.

- Updates and Upgrades Stir Excitement: The Higgs LLAMA model garnered praise for its intelligence at a 70B scale, with anticipation building around an upcoming LMStudio update to incorporate relevant llamacpp adjustments. Another user is considering a massive RAM upgrade in anticipation of the much-discussed LLAMA 3 405B model, reflecting the intertwining interests between hardware capabilities and AI model evolution.

HuggingFace Discord

Moderation is Key: The community debated moderation strategies in response to reports of inappropriate behavior. Professionalism in handling such issues is crucial.

Gradio API Challenges: Integrating Gradio with React Native and Node.js raised questions within the community. It's built with Svelte, so users were directed to investigate Gradio's API compatibility.

Text with Stability: Discussion around Stable Diffusion models for text generation pointed members towards solutions like AnyText and TextDiffuser-2 from Microsoft for robust output.

When Compute Goes Peer-to-Peer: The conversation turned to peer-to-peer compute for distributed machine learning, with tools like Petals and experiences with privacy-conscious local swarms offering promising avenues.

Human Feedback in AI: The Human Feedback Foundation is making strides in incorporating human feedback into AI, with an event on June 11th and a trove of educational sessions on their YouTube channel.

Small Datasets, Big Challenges: In computer vision discussions, dealing with small datasets and unrepresentative validation sets was a pressing concern. Solutions include using diverse training data and maybe even transformers despite their longer training times.

Swin Transformer Tests: There was a query about applying the Swin Transformer to CIFar datasets, highlighting the community's interest in experimenting with contemporary models in various scenarios.

Deterministic Models Turn Down the Heat: A single message highlighted lowering temperature settings to 0.1 to achieve more deterministic model behavior, prompting reflection on model tuning approaches.

Sample Input Snafus: Confusion over text embeddings and proper structuring of sample inputs for models like text-enc 1 and text-enc 2 surfaced, along with a discussion on the challenges posed by added kwargs in a dictionary format.

Re-parameterising with Results: A member successfully re-parameterised Segmind's ssd-1b into a v-prediction/zsnr refiner model and lauded it as a new favorite, hinting at a possible trend toward 1B mixture of experts models.

A Helping Hand for Projects: In a stretch of community aid, members offered personal assistance through DMs for addressing dataset questions, adding to the guild's collaborative environment.

Eleuther Discord

KAN Skepticism Expressed: Kolmogorov-Arnold Networks (KANs) were deemed less efficient than traditional neural networks by guild members, with concerns about their scalability and interpretability. However, there's interest in more efficient implementations of KANs, such as those using ReLU, evidenced by a shared ReLU-KAN architecture paper.

Expanding the Data Curation Toolbox: Participants debated the utility of influence functions in data quality evaluation, with the LESS algorithm (LESS algorithm) being mentioned as a potentially more scalable alternative for selecting high-quality training data.

Breakthroughs in Efficient Model Training: Innovations in model training were widely shared, including Nvidia's new open weights available on GitHub, the exploration of MatMul-free models (arXiv) for increased efficiency, and Seq1F1B's promise for more memory-efficient long-sequence training (arXiv).

Quantization Technique May Boost LLM Performance: The novel QJL method presents a promising avenue for large language models by compressing KV cache requirements through a quantization process (arXiv).

Brain-Data Speech Decoding Adventure: A guild member reported experimenting with Whisper tiny.en embeddings and brain implant data to decode speech, requesting peer suggestions to optimize the model by adjusting layers and loss functions while facing the constraint of a single GPU for training.

Perplexity AI Discord

- Perplexity Pro Gets Smarter: Perplexity Pro has upgraded to show its search process step-by-step, employing an intent system for a more agentic-like execution, approximately a week ago.

- File Format Frustrations: Users are experiencing difficulties with Perplexity's ability to read PDFs, with success varying based on the PDF's content layout ranging from heavily styled to plain text.

- Sticker Shock at Dev Costs: The community reacted with humor and disbelief to a member's request for building a text-to-video MVP on a shoestring budget of $100, highlighting the disconnect between expectations and market rates for developers.

- Haiku Feature Haunts No More: The removal of Haiku and select features from Perplexity labs sparked discussions, leading members to speculate on cost-saving measures and express their discontent due to the impact on their workflow.

- Curious about OpenChat Expansion: An inquiry was raised regarding the potential addition of an "openchat/openchat-3.6-8b-20240522" model to Perplexity, alongside current models like Mistral and Llama 3.

CUDA MODE Discord

- Discovering Past Technical Sessions: Recordings of past CUDA MODE technical events can be accessed on the CUDA MODE YouTube channel.

- Debugging Tensor Memory in PyTorch: A code snippet using

storage().data_ptr()was shared to test if two PyTorch tensors share the same memory, stirring discussion on checking memory overlap. A member requested assistance in locating the source code for a PyTorch C++ function, specifically at::_weight_int4pack_mm.

- Extension on AI Models and Techniques: Conversations hinge on methodologies like MoRA improving upon LoRA, and DPO versus PPO with respect to RLHF. Separate mentions went to CoPE for positional encodings and S3D accelerating inference, all found detailed in AI Unplugged.

- Torch Innovation Sparks: Discussions ignited around torch.compile boosting KAN performance to rival MLPs, with insights shared from Thomas Ahle's tweet, practical experiences, and the GitHub repository for KANs and MLPs.

- MLIR Sets Sights on ARM: An MLIR meeting covered creating an ARM SME Dialect, offering insights into ARM's Scalable Matrix Extension via a YouTube video. Hints pointing to potential Triton ARM support are discussed, with references to 'arm_neon' dialect for NEON operations in the MLIR documentation.

Interconnects (Nathan Lambert) Discord

- The Quest for the ChatGPT of Robotics: Investors are on the lookout for startups that can be the "ChatGPT for robotics," prioritizing AI over hardware. Excitement is building around niche foundation model companies as detailed in this article.

- Qwen2 Attracts Attention: Qwen2's launch has generated interest with models supporting up to 128K tokens in multilingual tasks, but users report gaps in recent event knowledge and general accuracy.

- Dragonfly Unfurls Its Wings in Multi-modal AI: Together.ai's Dragonfly brings advances in visual reasoning, particularly in medical imaging, demonstrating integration of text and visual inputs in model development.

- AI Community Takes a Critical View: From discussions around influential lab employees criticizing smaller players to a shared tweet highlighting the risk of AI labs inadvertently sharing advances with the CCP not the American research community, and The Verge article on Humane AI's safety issue, the community remains vigilant.

- Reinforcement Learning Paper Stirring Interest: Sharing of the “Self-Improving Robust Preference Optimization” (SRPO) paper as announced in this tweet indicates a focus on training LLMs using robust and self-improving RLHF methods. Nathan Lambert plans to dedicate time to discuss such cutting-edge papers.

Modular (Mojo 🔥) Discord

- Mojo Rising: Discussions in various channels have centered on the advantages of using Mojo for backend server development, with examples like lightbug_http demonstrating its use in crafting HTTP servers. The Mojo roadmap was shared, indicating future core programming features, as active comparison to Python sparked debates on performance merits due to Mojo's static typing and compile-time computation.

- Keeping Python Safe: In teaching Python to newcomers, it's essential to avoid potential pitfalls ("footguns") to help learners transition to more complex languages, like C++.

- Model Performance Frontiers Explored: Discussions indicated that extending models like Mistral beyond certain limits requires ongoing pretraining, with suggestions to apply the difference between UltraChat and base Mistral to Mistral-Yarn as a merging strategy, though practicality and skepticism were voiced.

- Community Coding Contributions Cloaked with Humor: Expressions like "licking the cookie" were employed humorously to discuss encouraging open-source contributions, and members playfully reflected on the complexities of technical talks and coding challenges, likening a simple request for quicksort implementation to a noble quest.

- Nightly Builds Yield Illumination and Frustration: Nightly builds were examined with a spotlight on the usage of immutable auto-deref for list iterators and the introduction of features like

String.formatin the latest compiler release2024.6.616. However, network hiccups and the unpredictable nature ofalgorithm.parallelizefor aparallel_sortfunction were sources of frustration, as seen from GitHub discussions and shared troubleshooting on workflow timing and network issues.

Latent Space Discord

- Cohere Cashes In Big Time: Cohere has secured a jaw-dropping $450 million in funding with significant contributions from tech titans like NVidia and Salesforce, despite not boasting a high revenue last year, as per a Reuters report.

- IBM's Granite Gains Ground: IBM's Granite models are being hailed for their transparency, particularly on data usage for training—prompting debates on whether they surpass OpenAI in the enterprise domain with insights from Talha Khan.

- Databricks Dominates Forrester’s AI Rankings: In the latest report by Forrester on AI foundation models, Databricks has been recognized as a leader, emphasizing the tailored needs of enterprises and suggesting benchmark scores aren't everything. The report is highlighted in Databricks' announcement blog and is accessible for free here.

- Qwen 2 Trumps Llama 3: The new Qwen 2 model, with impressive 128K context window capabilities, shows superior performance to Llama 3 in code and math tasks, announced in a recent tweet.

- New Avenues for AI Web Interaction and Assistance: Browserbase celebrates a $6.5 million seed fund aimed at enabling AI applications to navigate the web, shared by founders Nat & Dan, while Nox introduces an AI assistant designed to make the user experience feel invincible, with early sign-ups here.

LlamaIndex Discord

Prometheus-2 Pitches for RAG App Judging: Prometheus-2 is presented as an open-source alternative to GPT-4 for evaluating RAG applications, sparking interest due to concerns about transparency and affordability.

LlamaParse Pioneers Knowledge Graph Construction: A posted notebook demonstrates how LlamaParse can execute first-class parsing to develop knowledge graphs, paired with a RAG pipeline for node retrieval.

Configuration Overload in LlamaIndex: AI engineers are expressing difficulty with the complexity of configuring LlamaIndex for querying JSON data and are seeking guidance, as well as discussing issues with Text2SQL queries not balancing structured and unstructured data retrieval.

Exploring LLM Options for Resource-Limited Scenarios: Discussions on alternative setups for those with hardware limitations veer towards smaller models like Microsoft Phi-3 and experimenting with platforms like Google Colab for heavier models.

Scoring Filters Gain Customizable Edges: Engineers are discussing the capability of LlamaIndex to filter results by customizable thresholds and performance score, indicating a need for fine-tuned precision in search results.

Cohere Discord

- Startup Perks for Early Adopters: Cohere introduces a startup program aiming to equip Series B or earlier stage startups with discounts on AI models, expert support, and marketing clout to spur innovation in AI tech applications.

- Refining the Chatbot Experience: Upcoming changes to Cohere's Chat API on June 10th will bring a new default multi-step tool behavior and a

force_single_stepoption for simplicity's sake, all documented for ease of adoption in the API spec enhancements.

- Hot Temperatures for AI Samplers: OpenRouter stands out by allowing the temperature setting to exceed 1 for AI response samplers, contrasting with Cohere trial's 1.0 upper limit, opening discussions on flexibility in response variation and quality.

- Developing Smart Group Chatbots: Suggestions arose regarding the deployment of AI chatbots in group scenarios like business meetings, analyzing Rhea's advantageous multi-user context handling and potential precision concerns for personalized responses among numerous users.

- Cohere Networking & Demos: Community members welcomed new participant Toby Morning, exchanging LinkedIn profiles (LinkedIn Profile) for broader connections and showcasing enthusiasm for upcoming demonstrations of the Coral AGI system's prowess in multi-user settings.

Nous Research AI Discord

Qwen2 Leaps Ahead: The launch of the Qwen2 models marks a significant evolution from Qwen1.5, now featuring support for 128K token context lengths, 27 additional languages, and pretrained as well as instruction-tuned models in various sizes. They are available on platforms like GitHub, Hugging Face, and ModelScope, along with a dedicated Discord server.

Map Event Prediction Discussion: A user inquired about predicting true versus false event points on a map with temporal data, leading to a conversation about relevant commands and techniques, although specific methods were not provided.

Update on Mistral API and Model Storage: Mistral's introduction of a fine-tuning API and associated costs sparked discussion, with a focus on practical implications for development and experimentation. The API, including pricing details, is explained in their fine-tuning documentation.

Mobile Text Input Gets a Makeover: WorldSim Console updated their mobile platform, resolving bugs related to text input, improving text input reliability, and offering new features such as enhanced copy/paste and cosmetic customization options.

Music Exploration in Off-Topic: One member shared links to explore "Wakanda music", though this might have limited technical relevance for the engineer audience. Among the shared links were music videos like DG812 - In Your Eyes and MitiS & Ray Volpe - Don't Look Down.

OpenRouter (Alex Atallah) Discord

Server Management Made Easy with Pilot: The Pilot bot is revolutionizing how Discord servers are managed by offering features such as "Ask Pilot" for intelligent server insights, "Catch Me Up" for message summarization, and weekly "Health Check" reports on server activity. It's free to use and improves community growth and engagement, accessible through their website.

AI Competitors in Role-Playing Realm: The WizardLM 8x22b model is currently gaining popularity in the role-playing community, nevertheless Dolphin 8x22 emerges as a potential rival, awaiting user tests to compare their effectiveness.

Gemini Flash Sparks Image Output Curiosity: Inquiries about whether Gemini Flash can render images spurred clarification that while no Large Language Model (LLM) presently offers direct image outputs, they can theoretically use base64 or call external services like Stable Diffusion for image generation.

Tool Tips for Handling Function Calls: For handling specific function calls and formatting, Instructor is recommended as a powerful tool, facilitating automated command execution and improving user workflows.

Technical Discussions Amidst Model Enthusiasm: A member's query regarding prefill support in OpenRouter led to a confirmation that it's possible, particularly with the usage of reverse proxies; meanwhile, excitement is building around GLM-4 due to its support for the Korean language, hinting at the model's potential in multilingual applications.

MLOps @Chipro Discord

- Human Feedback Spurs AI Improvement: The upcoming Human Feedback Foundation event on June 11th is set to address the role of human feedback in enhancing AI applications across healthcare and civic domains; interested parties can register via Eventbrite. Additionally, recordings of past events with speakers from UofT, Stanford, and OpenAI are available at the Human Feedback Foundation YouTube Channel.

- Azure Event Attracts AI Enthusiasts: "Unleash the Power of RAG in Azure" is a highly subscribed Microsoft event happening in Toronto, as mentioned by an attendee seeking fellow participants; more details can be found on the Microsoft Reactor page.

- Tackling Messy Data: Engineers discussed strategies for dealing with high-cardinality categorical columns, including the use of aggregate/grouping, manual feature engineering, string matching, and edit distance techniques, all with the goal of refining inputs for better regression outcomes.

- Merging Data and Clustering Techniques: There's a shared perspective that combining spell correction with feature clustering might streamline the challenges posed by high-cardinality categorical data, with an emphasis on treating such issues as core data modeling problems.

- Practical Approaches to Feature Engineering: Conversations pivoted towards pragmatic approaches like breaking down complex problems (e.g., isolating brand and item elements) and incorporating moving averages as part of the simplification technique for price prediction. Appreciation was expressed for the multifaceted solutions discussed, including regex for feature extraction.

OpenAccess AI Collective (axolotl) Discord

Data Feast for AI Enthusiasts: Engineers lauded the accessibility of 15T datasets, humorously noting the conundrum of abundance in data but scarcity in computing resources and funding.

GPU Banter Amidst Hardware Discussions: The suitability of 4090s for pretraining massive datasets sparked a facetious exchange, jesting about the limitations of consumer GPUs for such demanding tasks.

Finetuning Fun with GLM and Qwen2: The community shared tips and configurations for finetuning GLM 4 9b and Qwen2 models, noting that Qwen2's similarity to Mistral simplifies the process.

Quest for Reliable Checkpointing: The use of Hugging Face's TrainingArguments and EarlyStoppingCallback featured in talks about checkpoint strategies, specifically for capturing both the most recent and best performing states based on eval_loss.

Error Hunting in AI Code: Troubleshooting the "returned non-zero exit status 1" error prompted members to suggest pinpointing the failing command, scrutinizing stdout and stderr, and checking for permission or environment variable issues.

LAION Discord

- Catchy Naming Conundrum: In the guild, clarity was sought on the 1B parameter zsnr/vpred refiner; it's critiqued that the model is actually a 1.3B, not a 1B model, sparking a light-hearted jab at the need for more accurate catchy names.

- Vega's Parameter Predicament: Discussions on the Vega model highlighted its swift processing prowess but raised concerns about its insufficient parameter size potentially limiting coherent output generation.

- Elrich Logos Dataset Remains a Mystery: A member queried about the availability of the Elrich logos dataset but did not receive any conclusive information or response regarding access.

- The Dawn of Qwen2: The launch of Qwen2 has been announced, introducing substantial improvements over Qwen1.5 across multiple fronts including language support, context length, and benchmark performance. Qwen2 is now available in different sizes and supports up to 128K tokens with resources spread across GitHub, Hugging Face, ModelScope, and demo.

LangChain AI Discord

- Knowledge Graph Construction Security Measures: A tutorial on constructing knowledge graphs from text was shared, emphasizing the importance of data validation for security before inclusion in a graph database.

- LangChain Tech Nuggets: Confusion about the necessity of tools decorator prompted discussions for clarity, while a request for understanding token consumption tracking methods was observed among users. Additionally, a question arose about the creation of RAG diagrams as seen in a LangChain's FreeCodeCamp tutorial video.

- Flow Control and Search Automation Resources: A LangGraph conditional edges YouTube video was highlighted for its utility in flow control in flow engineering, and a new project termed search-result-scraper-markdown was shared for fetching search results and converting them to Markdown.

- Cross-Framework Agent Collaboration: Users expressed interest in frameworks that enable collaboration among agents built with different tools, incorporating LangChain, MetaGPT, AutoGPT, and even agents from platforms such as coze.com, highlighting the potential of interoperability in the AI space.

- Calls for GUI and Course File Guidance: There was a user query about finding a specific "helper.py" file from the AI Agents LangGraph course, pointing towards a need for better resource discovery methods within technical courses such as those offered on the DLAI course page.

tinygrad (George Hotz) Discord

- Tinygrad Preps for Prime Time: George Hotz highlighted the need for updates in tinygrad before the 1.0 release, with pending PRs expected to resolve the current gaps.

- Unraveling Tensor Puzzles with UOps.CONST: AI engineers examined the role of UOps.CONST in tinygrad, which serves as address offsets in computational processes for determining index values during tensor addition.

- Decoding Complex Code: In response to confusion over a snippet of code, it was clarified that intricate conditions are often needed to efficiently manage tensor shapes and strides within the row-major data layout constraints.

- Indexing Woes Solved by Dynamic Kernel: A discussion on tensor indexing in tinygrad revealed that kernel generation is essential due to the architecture's reliance on static memory access, with the kernel in question enabling operations like Tensor[Tensor].

- Masking with Arange for Getitem Operations: The similarity between the kernel used for indexing operations and an arange kernel was noted, which facilitates the creation of a mask during getitem functions for dynamic tensor indexing.

OpenInterpreter Discord

Need for Speed with Graphics: Members are seeking advice on executing graphics output with interpreter.computer.run, specifically for visualizations like those produced by matplotlib without success thus far.

OS Mode Mayhem: Conversations highlighted troubles in getting --os mode to operate correctly with local models from LM Studio, including issues with local LLAVA models not starting screen recording.

Vision Quest on M1 Mac: Engineers expressed frustration about hardware constraints on vision models for M1 Mac, indicating a strong interest in free and accessible AI solutions, given the high costs associated with OpenAI's offerings.

Integration Anticipation for Rabbit R1: Excitement is brewing over integrating Rabbit R1 with OpenInterpreter, particularly the upcoming webhook feature, to enable practical actions.

Bash Model Request Open: A call for suggestions for an open model suitable for handling bash commands has yet to be answered, leaving an open gap for potential recommendations.

AI Stack Devs (Yoko Li) Discord

Curiosity for AI Town's Development Status: Members in AI Stack Devs sought an update on the project, with one expressing interest in progress, while another apologized for not contributing yet due to a lack of time.

Tileset Troubles in AI Town: An engineering challenge surfaced around parsing spritesheets for AI Town, with a proposal to use the provided level editor or Tiled, supported by conversion scripts from the community.

Learning to Un-Censor LLMs: A member shared insights from a Hugging Face blog post on abliteration, which uncensors LLMs, featuring instruct versions of the third generation of Llama models. They followed up by inquiring about applying this technique to OpenAI models.

Unanswered OpenAI Implementation Query: Despite sharing the study on abliteration, a call for knowledge on how to implement the technique with OpenAI models went unanswered in the thread.

For a deeper dive: - Parsing challenges and methods: (not provided) - Blog post on abliteration: Uncensor any LLM with abliteration.

Datasette - LLM (@SimonW) Discord

- The Truncated Text Mystery: When working with embeddings using

llm, a member discovered that input text exceeding the model's context length doesn't necessarily trigger an error and might result in truncated input. The actual behavior varies by model and underscores a need for clearer documentation on how each model handles excessive input length.

- Embedding Jobs: To Resume or Not to Resume: A query was raised regarding the functionality of

embed-multiin resuming large embedding jobs without reprocessing completed parts. This highlights the need for features that can manage partial completions within embedding processes.

- Documentation Desire for Embedding Behaviors: The response from @SimonW pointing to a lack of clarity in model behavior documentation directed at whether inputs are truncated or produce errors, indicates a larger call from users for comprehensive and accessible documentation on these AI systems.

- Guesswork in Model Truncation: In absence of error messages, it was posited by @SimonW that the large text inputs leading to unexpected embedding results are likely being truncated, a behavior that should be explicitly verified within specific model documentation.

- Efficiency in Large Embedding Tasks: The discussion around whether the

embed-multican identify and skip previously processed data in rerunning large jobs showcases a concern for efficiency and the need for intelligent job handling in long-running AI processes.

Torchtune Discord

Megatron's Checkpoint Conundrum: Engineers enquired about Megatron's compatibility with fine-tuning libraries, noting its unique checkpoint format. It was agreed that converting Megatron checkpoints to Hugging Face format and utilizing Torchtune for fine-tuning was the best course of action.

Mozilla AI Discord

- Call for JSON Schema Integration Heats Up: An AI Engineer proposed the inclusion of a JSON schema in the upcoming software version to streamline application development, acknowledging some bugs but underscoring the ease it brings to building applications. No details on a timeline or potential implementation challenges were provided.

YAIG (a16z Infra) Discord

- Weekend Audio Learning on AI Infrastructure: A link to a weekend listening session was shared by a member, featuring a discussion on AI infrastructure, which could be of interest to AI engineers looking to stay abreigned of the latest trends and challenges in the field. The content is accessible on YouTube.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!