[AINews] Qdrant's BM42: "Please don't trust us"

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Peer review is all you need.

AI News for 7/4/2024-7/5/2024. We checked 7 subreddits, 384 Twitters and 30 Discords (418 channels, and 3772 messages) for you. Estimated reading time saved (at 200wpm): 429 minutes. You can now tag @smol_ai for AINews discussions + try Smol Talk!

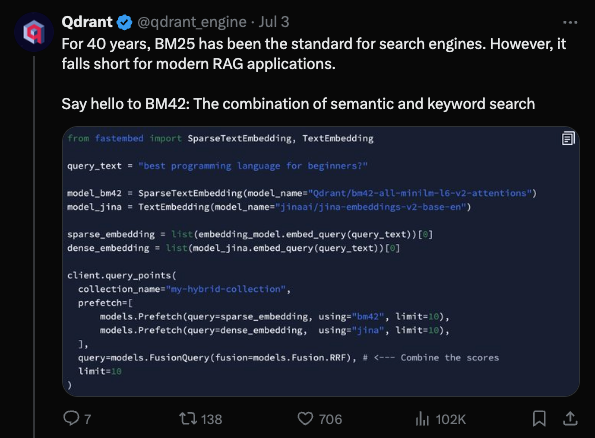

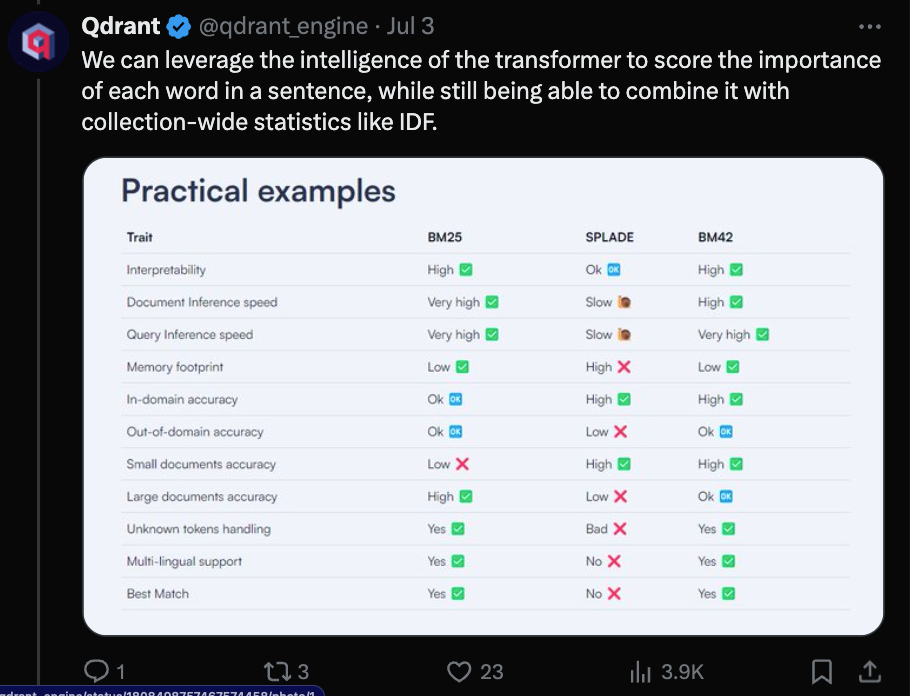

Qdrant is widely known as OpenAI's vector database of choice, and over the July 4 holiday they kicked off some big claims to replace the venerable BM25 (and even the more modern SPLADE), attempting to coin "BM42":

to solve the problem of semantic + keyword search by combining transformer attention for word importance scoring with collection-wide statistics like IDF, claiming advantages over every use case:

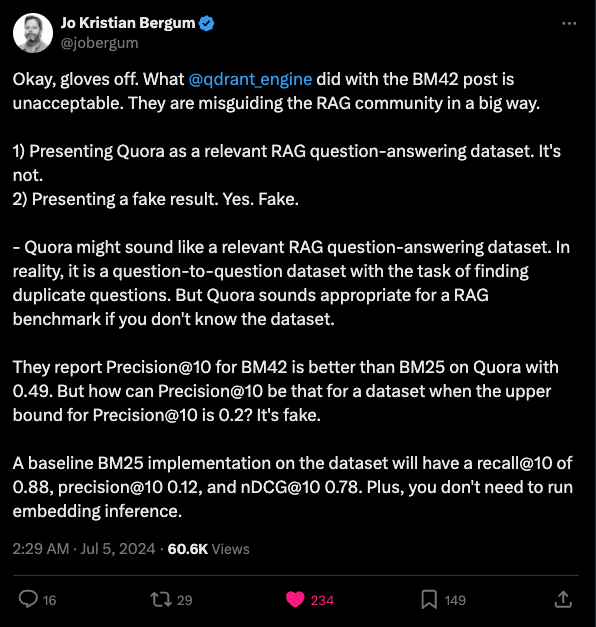

Only one problem... the results. Jo Bergum from Vespa (a competitor), pointed out the odd choice of Quora (a "find similar duplicate" questions dataset, not a Q&A retrieval dataset) as dataset and obviously incorrect evals if you know that dataset:

Specifically, the Quora dataset only has ~1.6 datapoints per query so their precision@10 number was obviously wrong claiming to have >4 per 10.

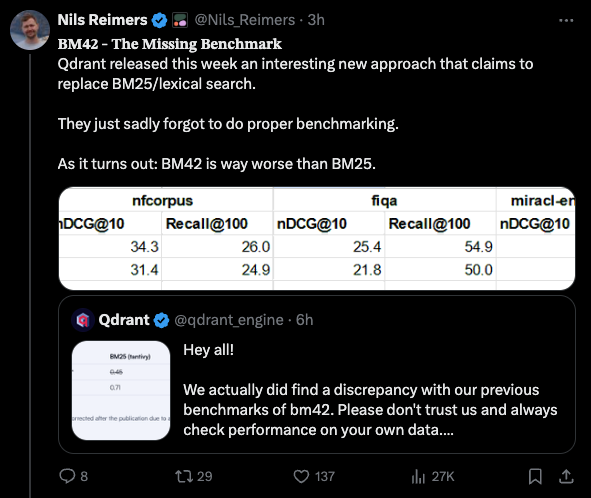

Nils Reimers of Cohere took BM42 and reran on better datasets for finance, biomedical, and Wikipedia domains, and sadly BM42 came up short on all accounts:

For their part, Qdrant has responded to and acknowledged the corrections, and published corrections... except still oddly running a BM25 implementation that scores worse than everyone else expects and conveniently worse than BM42.

Unfortunate for Qdrant, but the rest of us just got a lightning lesson in knowing your data, and sanity checking evals. Lastly, as always in PR and especially in AI, Extraordinary claims require extraordinary evidence.

Meta note: If you have always wanted to customize your own version of AI News, we have now previewed a janky early version of Smol Talk, which you can access here: https://smol.fly.dev

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet.

Stripe Issues and Alternatives

- Stripe account issues: @HamelHusain noted Stripe is "holding all my money hostage" with "endless wall of red tape" despite no refund requests. @jeremyphoward called it "disgraceful" that Stripe canceled an account due to an "AI/ML model failure".

- Appealing Stripe decisions: @HamelHusain appealed a Stripe rejection but got denied within 5 minutes, with Stripe "holding thousands of dollars hostage".

- Alternatives to Stripe: @HamelHusain noted needing a "backup plan" as "Getting caught by AI/ML false positives sucks." @virattt expressed caution about using Stripe after seeing many posts about issues.

AI and LLM Developments

- Anthropic Constitutional AI: @Anthropic noted Claude 3.5 Sonnet suppresses parts of answers with "antThinking" tags that are removed on the backend, which some disagree with being hidden.

- Gemma 2 model optimizations: @rohanpaul_ai shared Gemma 2 can be finetuned 2x faster with 63% less memory using the UnslothAI library, allowing 3-5x longer contexts than HF+FA2. It can go up to 34B on a single consumer GPU.

- nanoLLaVA-1.5 vision model: @stablequan_ai announced nanoLLaVA-1.5, a compact 1B parameter vision model with significantly improved performance over v1.0. Model and spaces were linked.

- Reflection as a Service for LLMs: @llama_index introduced using reflection as a standalone service for agentic LLM applications to validate outputs and self-correct for reliability. Relevant papers were cited.

AI Art and Perception

- AI vs human art perception poll: @bindureddy posted a poll with 3 AI generated images and 1 human artwork, challenging people to identify the human one, as a "quick experiment" on art perception.

- AI art as non-plagiarism: @bindureddy argued AI art is not plagiarism as it does the "same thing humans do" in studying work, getting inspired, and creating something new. Exact replicas are plagiarism, but not brand new creations.

Memes and Humor

- Zuckerberg meme video: @GoogleDeepMind shared a meme video of Mark Zuckerberg reacting. @BrivaelLp joked about Zuckerberg's "masterclass" in transforming into a "badass tech guy".

- Caninecyte definition: @c_valenzuelab jokingly defined a "caninecyte" as a "type of cell characterized by its resemblance to a dog" in a mock dictionary entry.

- Funny family photos: @NerdyRodent humorously asked "Why is it that when I go through old family pictures, someone always has to stick their tongue out?" with an accompanying pixelated artwork.

AI Reddit Recap

Across r/LocalLlama, r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity. Comment crawling works now but has lots to improve!

AI Progress and Implications

- Rapid pace of AI breakthroughs: In /r/singularity, a post highlights how compressed recent AI advances are in the grand scheme of human history, with modern deep learning emerging in just the last "second" if human existence was a 24-hour day. However, an article questions the economic impact of AI so far despite the hype.

- AI humor abilities: Studies show AI-generated humor being rated as funnier than humans and on par with The Onion, though some /r/singularity commenters are skeptical the AI jokes are that original.

- OpenAI security breach: The New York Times reports that in early 2023, a hacker breached OpenAI's communication systems and stole info on AI development, raising concerns they aren't doing enough to prevent IP theft by foreign entities.

- Anti-aging progress: In a YouTube interview, the CSO of Altos Labs discusses seeing major anti-aging effects in mice from cellular reprogramming, with old mice looking young again. Human trials are next.

AI Models and Capabilities

- New open source models discussed include Kyutai's Moshi audio model, the internlm 2.5 xcomposer vision model, and T5/FLAN-T5 being merged into llama.cpp.

- An evaluation of 180+ LLMs on code generation found DeepSeek Coder 2 beat LLama 3 on cost-effectiveness, with Claude 3.5 Sonnet equally capable. Only 57% of responses compiled as-is.

AI Safety and Security

- /r/LocalLLaMA discusses ways to secure LLM apps, including fine-tuning to reject unsafe requests, prompt engineering, safety models, regex filtering, and not rewriting user prompts.

- An example is shared of Google's Gemini AI repeating debunked information, showing current AI can't be blindly trusted as factual.

AI Art and Media

- Workflows are shared for generating AI singers using Stable Diffusion, MimicMotion, and Suno AI, and using ComfyUI to generate images from a single reference.

- /r/StableDiffusion discusses a new open source method for transferring facial expressions between images/video, and the emerging role of AI Technical Artists to build AI art pipelines for game studios.

- /r/singularity predicts a resurgence in demand for live entertainment as AI displaces online media.

Robotics and Embodied AI

- Videos are shared of Menteebot navigating an environment, a robot roughly picking tomatoes, and Japan developing a giant humanoid robot to maintain railways.

- A tweet calls for development of open source mechs.

Miscellaneous

- /r/StableDiffusion expresses concern about the sudden disappearance of the Auto-Photoshop-StableDiffusion plugin developer.

- An extreme horror-themed 16.5B parameter LLaMA model is shared on Hugging Face.

- /r/singularity discusses a "Singularity Paradox" thought experiment about when to buy a computer if progress doubles daily, with comments noting flaws in the premise.

AI Discord Recap

A summary of Summaries of Summaries

1. LLM Performance and Optimization

- New models like Llama 3, DeepSeek-V2, and Granite-8B-Code-Instruct are showing strong performance on various benchmarks. For example, Llama 3 has risen to the top of leaderboards like ChatbotArena, outperforming models like GPT-4-Turbo and Claude 3 Opus.

-

Optimization techniques are advancing rapidly:

- ZeRO++ promises 4x reduction in communication overhead for large model training.

- The vAttention system aims to dynamically manage KV-cache memory for efficient LLM inference.

- QServe introduced W4A8KV4 quantization to boost cloud-based LLM serving performance.

2. Open Source AI Ecosystem

- Tools like Axolotl are supporting diverse dataset formats for LLM training.

- LlamaIndex launched a course on building agentic RAG systems.

- Open-source models like RefuelLLM-2 are being released, focusing on specific use cases.

3. Multimodal AI and Generative Models

-

New multimodal models are enhancing various capabilities:

- Idefics2 8B Chatty focuses on improved chat interactions.

- CodeGemma 1.1 7B refines coding abilities.

- Phi 3 brings AI chatbots to browsers via WebGPU.

- Combinations of models (e.g., Pixart Sigma + SDXL + PAG) are aiming to achieve DALLE-3 level outputs.

4. Stability AI Licensing

- Stability AI revised the license for SD3 Medium after community feedback, aiming to provide more clarity for individual creators and small businesses.

- Discussions about AI model licensing terms and their impact on open source development are ongoing across multiple communities.

- Stability AI's launch of Stable Artisan, a Discord bot integrating various Stable Diffusion models for media generation and editing, was a hot topic (Stable Artisan Announcement). Users discussed the implications of the bot, including questions about SD3's open-source status and the introduction of Artisan as a paid API service.

5. Community Tools and Platforms

- Stability AI launched Stable Artisan, a Discord bot integrating models like Stable Diffusion 3 and Stable Video Diffusion for media generation within Discord.

- Nomic AI announced GPT4All 3.0, an open-source local LLM desktop app, emphasizing privacy and supporting multiple models and operating systems.

6. New LLM Releases and Benchmarking Discussions:

- Several AI communities discussed the release of new language models, such as Meta's Llama 3, IBM's Granite-8B-Code-Instruct, and DeepSeek-V2, with a focus on their performance on various benchmarks and leaderboards. (Llama 3 Blog Post, Granite-8B-Code-Instruct on Hugging Face, DeepSeek-V2 on Hugging Face)

- Some users expressed skepticism about the validity of certain benchmarks, calling for more credible sources to set realistic standards for LLM assessment.

7. Optimizing LLM Training and Inference:

- Across multiple Discords, users shared techniques and frameworks for optimizing LLM training and inference, such as Microsoft's ZeRO++ for reducing communication overhead (ZeRO++ Tutorial), vAttention for dynamic KV-cache memory management (vAttention Paper), and QServe for quantization-based performance improvements (QServe Paper).

- Other optimization approaches like Consistency LLMs for parallel token decoding were also discussed (Consistency LLMs Blog Post).

8. Advancements in Open-Source AI Frameworks and Datasets:

- Open-source AI frameworks and datasets were a common topic across the Discords. Projects like Axolotl (Axolotl Dataset Formats), LlamaIndex (Building Agentic RAG with LlamaIndex Course), and RefuelLLM-2 (RefuelLLM-2 on Hugging Face) were highlighted for their contributions to the AI community.

- The Modular framework was also discussed for its potential in Python integration and AI extensions (Modular Blog Post).

9. Multimodal AI and Generative Models:

- Conversations surrounding multimodal AI and generative models were prevalent, with mentions of models like Idefics2 8B Chatty (Idefics2 8B Chatty Tweet), CodeGemma 1.1 7B (CodeGemma 1.1 7B Tweet), and Phi 3 (Phi 3 Reddit Post) for various applications such as chat interactions, coding, and browser-based AI.

- Generative modeling techniques like combining Pixart Sigma, SDXL, and PAG for high-quality outputs and the open-source IC-Light project for image relighting were also discussed (IC-Light GitHub Repo).

10. New Model Releases and Training Tips in Unsloth AI Community:

- The Unsloth AI community was abuzz with discussions about new model releases like IBM's Granite-8B-Code-Instruct (Granite-8B-Code-Instruct on Hugging Face) and RefuelAI's RefuelLLM-2 (RefuelLLM-2 on Hugging Face). Users shared their experiences with these models, including challenges with Windows compatibility and skepticism over certain performance benchmarks. The community also exchanged valuable tips and insights on model training and fine-tuning.

PART 1: High level Discord summaries

HuggingFace Discord

- Vietnamese Linguistics Voiced: Vi-VLM's Vision**: The Vi-VLM team announced a Vision-Language model tailored for Vietnamese, integrating Vistral and LLaVA frameworks to focus on image descriptions. Viewers can find the demo and supporting code in the linked repository.

- Dataset Availability: Vi-VLM released a dataset specific for VLM training in Vietnamese, which is accessible for enhancing local language model applications. The dataset adds to the linguistic resources available for Southeast Asian languages.

- Grappling with Graphics: WHAM's Alternative Search**: An enthusiast sought alternatives to WHAM for human pose estimation in complex videos, pointing out the ungainly Python and CV dependencies. The community exchange hints at a need for tools that accommodate non-technical users in sophisticated AI applications.

- Learning resources for ViT and U-Net implementations were shared, including a guide from Zero to Mastery and courses by Andrew Ng, indicating community interest in mastering these vision transformer models.

- Tuning In: Audio-Language Model Discourse**: Moshi's linguistic fluidity: Yann LeCun shared a tweet spotlighting Kyutai.org's digital pirate that can comprehend English spoken with a French accent, showcasing the model's diverse auditory processing capabilities.

- Interest in the Flora paper and audio-language models remains strong, reflecting the AI community's focus on cross-modal faculties. Upcoming paper reading sessions on these topics are anticipated with enthusiasm.

- Frozen in Thought: The Mistral Model Stalemate**: Users reported a crawling halt in the Mistral model's inference process, notably at iteration 1800 out of 3000, suggesting possible caching complications. This reflects on the pragmatic challenge of managing resources during extensive computational tasks.

- Conversations surfaced around making effective API calls without downloading models locally, highlighting the need for streamlined remote inference protocols. The API-centric dialogue underscores a trend towards more flexible, cloud-based ML operations.

- Diffusion Discussion: RealVisXL and ISR**: RealVisXL V4.0, optimized for rendering photorealistic visuals, is now in training, with an official page and sponsorship on Boosty, spotlighting community support for model development.

- The existing IDM-VTON's 'no file named diffusion_pytorch_model.bin' error in Google Colab exemplifies the troubleshooting dialogs that emerge within the diffusion model space, emphasizing the practical sides of AI deployment.

Stability.ai (Stable Diffusion) Discord

- Clearing the Confusion: Stability AI’s Community License: Stability AI has revised their SD3 Medium release license after feedback, offering a new Stability AI Community License that clarifies usage for individual creators and small businesses. Details are available in their recent announcement, with the company striking a balance between commercial rights and community support.

- Users can now freely use Stability AI's models for non-commercial purposes under the new license, providing an open source boon to the community and prompting discussions about how these changes could impact model development and accessibility.

- Anime AI Model's Metamorphosis: Animagine XL 3.1: The Animagine XL 3.1 model by Cagliostro Research Lab and SeaArt.ai is driving conversations with its enhancements over predecessor models, bringing higher quality and broader range of anime imagery to the forefront.

- The AAM Anime Mix XL has also captured attention, sparking a flurry of comparisons with Animagine XL 3.1, as enthusiasts discuss their experiences and preferences between the different anime-focused generation models.

- Debating the GPU Arms Race: Multi-GPU Configurations: The technical community is actively discussing the optimization of multiple GPU setups to boost Stable Diffusion's performance, with emphasis on tools like SwarmUI that cater to these complex configurations.

- The debates converge on the challenges of efficiently managing resources and achieving high-quality outputs, highlighting the combination of technical prowess and creativity required to navigate the evolving landscape of AI model training.

- CivitAI's SD3 Stance Spurring Controversy: CivitAI's move to ban SD3 models has divided opinion within the community, as some view it as a potential roadblock for the development of the Stable Diffusion 3 framework.

- The discussions deepen with insights into licensing intricacies, commercial implications, and the overall trajectory of how this decision could shape future collaborations and model evolutions.

- License and Limits: Stable Diffusion Under Scrutiny: The latest conversations scrutinize the license for Stable Diffusion 3 and its compatibility with both individual and enterprise usage, considering the community's need for clarity and freedom in AI model experimentation.

- Community sentiment is split, as discussions pivot around whether the perceived license restrictions unfairly penalize smaller projects or whether they're an inherent part of maturing technologies in the field of AI.

Unsloth AI (Daniel Han) Discord

- Gemma's Quantum Leap: The new Gemma 2 has hit the tech scene, boasting 2x faster finetuning and a lean VRAM footprint, requiring 63% less VRAM (Gemma 2 Blog). Support for hefty 9.7K token contexts on the 27B model was a particular highlight among Unsloth users.

- The marred launch with notebook issues such as mislabeling was glossed over by a community member's remark on the rushed blogpost, but those issues have been swiftly tackled by developers (Notebook Fixes).

- Datasets Galore at Replete-AI: Replete-AI has introduced two extensive datasets, Everything_Instruct and its multilingual cousin, each packing 11-12GB of instruct data (Replete AI Datasets). Over 6 million rows are at AI developers' disposal, promising to fuel the next wave of language model training.

- The community's enthusiasm was tempered with quality checks, probing the datasets for deduplication and content balance, a nod to the seasoned eye for meticulous dataset crafting.

- Notebooks Nailed to the Mast: Requests in collaboration channels have led to a commitment for pinning versatile notebooks, assisting members to swiftly home in on valuable resources.

- Continued efforts were seen with correcting notebook links and the promise to integrate them into the Unsloth GitHub page, showcasing a dynamic community-driven documentation process (GitHub Unsloth).

- Patch and Progress with Unsloth 2024.7: Unsloth's patch 2024.7 got mixed reception due to checkpoint-related errors, yet it marks an important stride by integrating Gemma 2 support into Unsloth's ever-growing toolkit (2024.7 Update).

- Devoted users and Unsloth's responsive devs are on top of the fine-tuning foibles and error resolutions, evidencing a robust feedback loop essential for fine-grained model optimization.

- Facebook's Controversial Token Tactics: Facebook's multi-token prediction model stirred debate over access barriers, stirring a whirlwind of opinions among Unsloth's tight-knit community.

- Critical views on data privacy were par for the course, specifically relating to the need for sharing contact data to utilize Facebook's model, fueling an ongoing conversation on ethical AI usage (Facebook's Multi-Token Model).

Latent Space Discord

- Sprinting on Funding Runway: Following a link to rakis, community members discussed the whopping $85M seed investment intersecting AI with blockchain, sparking conversations on the current venture capital trends in technology.

- The developers of BM42 faced heat for potentially skewed benchmarks, leading to a vigilant community advocating for rigorous evaluation practices; this prompted a revised approach to their metrics and datasets.

- Collision Course: Coding Tools: Users compared git merge tool experiences, singling out lazygit and Sublime Merge, driving the conversation towards the need for more nuanced tools for code conflict resolution.

- Claude 3.5 and other AI-based tools grabbed the spotlight in discussions for their prowess in coding assistance, emphasizing efficiency in code completion and capabilities like handling complex multi-file refactors.

- Tuning into Technical Talk: On the Latent Space Podcast, Yi Tay from Reka illuminated the process of developing a training stack for frontier models while drawing size and strategy parallels with teams from OpenAI and Google Gemini.

- Listeners were invited to engage on Hacker News with the live discussion, bridging the gap between the podcast and broader AI research community dialogues.

- Navigating AV Troubles: OpenAI's AV experienced disruptions during the AIEWF demo, with voices for a switch to Zoom ensuing, followed by a swift action resulting in sharing a Zoom meeting link for better AV stability.

- Compatibility issues between Discord and Linux persisted as a recurrent technical headache, prompting users to explore more Linux-friendly communication alternatives.

- Deconstructing Model Merger Mania: Debates on model merging tactics took center stage with participants mulling the differing objectives and potential integrative strategies for tools like LlamaFile and Ollama.

- The conversation dived into the possibilities of wearable technology integration with AI for enhancing event experiences, paired with a deep consideration for privacy and consent.

LM Studio Discord

- Snapdragon's Surprising Speed: The Surface Laptop with Snapdragon Elite showcased heft, hitting 1.5 seconds to first token and 10 tokens per second on LLaMA3 8b with 8bit precision, whilst only using 10% GPU. No NPU activity yet, but the laptop's speed stirred speculation on eventual NPU boosts to LLaMA models.

- Tech enthusiasts compared Snapdragon's CPU prowess to older Intel counterparts, finding the former's velocity vivacious. Amidst laughter, the tech tribe teases about a makeshift Cardboard NPU, projecting potential performance peaks pending proper NPU programming.

- Quantization Quirks and Code Quests: Quantization quandaries arose with Gemma-2-27b, where model benchmarks behaved bizarrely across different quantized versions. Meanwhile, tailored system prompts polished performance for Gemma 2 27B, prompting PEP 8-adhering and efficient algorithm emission.

- Suggestions surfaced that Qwen2 models trot best with ChatML and a flash attention setting, while users with non-CUDA contraptions cautioned against the chaos of IQ quantization, noting notably nicer behavior on alternative architectures.

- LM Studio's ARM Standoff: A vexed user voiced frustration when LM Studio's AppImage defied a dance with aarch64 CPUs. The error light shone, signaling a syntax struggle, and a lamenting line confirmed, "No ARM CPU support on Linux."

- Dialogues dashed hopes for immediate ARM CPU inclusions, leaving Linux loyalists longing. A shared sibling sentiment suggested an architecture adjustment for LM Studio belongs on the horizon but hasn't hit home base just yet.

- RTX's Rocky Road: RTX 4060 8GB VRAM owners opined their predicament with 20B quantized models; a tenacious tussle with tokens terminated in total system freezes. Fellow forum members felt for them, flashing back to their own fragmentary RTX 4060 experiences.

- Guild guidance gave GPU grievances a glimmer of hope, heralding less loaded models like Mistral 7B and Open Hermes 2.5 for mid-tier machine mates. A commendatory chorus rose for smaller souls, steering clear of titanic token-takers.

- ROCm's Rescue Role: Seeking solace from stifled sessions, users with 7800XT aired their afflictions as models muddled up, missing the mark on GPU offload. A script signalled success, soothing overtaxed systems seeking ROCm solace.

- The cerebral collective converged on solutions, corroborating the effectiveness of the ROCm installation script. Joyous jingles jived in the forum, as confirmation came that GPGPU gurus had gathered a workaround worthy of the wired world.

CUDA MODE Discord

- CUDA Conundrums & Mixed-precision MatMul: Discussions in the CUDA MODE guild veered into optimizing matrix multiplication using CUDA, highlighting a blog post on techniques for column loading in GPU matrix multiplication; another thread featured the release of customized gemv kernels for int2*int8 and the BitBLAS library for mixed-precision operations.

- Users explored TorchDynamo's role in PyTorch performance, and compared ergonomics of Python vs C++ for CUDA kernel development, with Python favored for its agility in initial phases. Some faced challenges adapting to Python 3.12 bytecode changes with

torch.compile, addressed in a recent discussion.

- Users explored TorchDynamo's role in PyTorch performance, and compared ergonomics of Python vs C++ for CUDA kernel development, with Python favored for its agility in initial phases. Some faced challenges adapting to Python 3.12 bytecode changes with

- GPTs Crafting Executive Summaries & Model Training Trials: A blog post detailing the use of GPTs for executive summary drafting sparked interest, while LLM training trials with FP8 gradients were flagged for increasing losses, prompting a switch to BF16 for certain operations.

- Schedule-Free Optimizers boasted smoother loss curves, with empirical evidence of convergence benefits shared by users, meanwhile, a backend SDE's transition to CUDA inference optimization was deliberated with suggestions spanning online resources, course recommendations, and community involvement.

- AI Podcasts & Keynotes Spark Engaging Discussions: Lightning AI's Thunder Sessions podcast with Luca Antiga and Thomas Viehmann caught the attention of community members, whereas Andrej Karpathy's keynote at UC Berkeley was a highlighter of innovation and student talent.

- Casual conversations and channel engagement painted a picture of an interactive forum, with members sharing brief notes of excitement or appreciation, yet holding back on deeper technical exchanges in channels tagged as less focused.

- Deep Learning Frameworks & Triton Kernel Fixes: The quest to build a deep learning framework from scratch in C++, akin to tinygrad, uncovered the complexity hurdle, kindling a debate on the affordances of C++ vs Python in this context, while Triton kernel's

tl.loadissues in parallel CUDA graph instances required ingenuity to circumnavigate latency concerns.- Further intricacies surfaced when discussing the functioning of the

.tomethod in torch.ao, where current limitations restrict dtype and memory format changes, prompting temporary function amendments as discussed in issue trackers and commit logs.

- Further intricacies surfaced when discussing the functioning of the

Perplexity AI Discord

- Llamas Looping Lines: Repetition Glitch in AI**: Users experienced Perplexity AI outputting repetitive responses across models such as Llama 3 and Claude, and were reassured that the issue was being addressed with an imminent fix.

- Alex confirmed the issue's recognition and the ongoing efforts to rectify it, marking a pressing concern within the Perplexity AI's performance benchmark.

- Real-Time Reality Check Fails: Live Access Hiccups**: A gap in expectations has emerged as Perplexity AI users face live internet data retrieval issues, receiving obsolete rather than up-to-date information.

- Despite attempts to resolve the inaccuracies by restarting the application, the users indicated the problem persistence in the feedback channel.

- Math Model Missteps: Perplexity Pro's Calculation Challenges**: Perplexity Pro's computations, such as CAPM beta, were highlighted for inaccuracies despite its GPT-4o origins, casting shadows on its reliable academic application.

- The community expressed its dissatisfaction and concerns regarding the model's utility in fields requiring exact mathematical problem solving.

- Stock Market Success Stories: Perplexity’s Profitable Predictions**: Anecdotes of financial victories like making $8,000 surfaced among users who harnessed Perplexity AI for stock market decisions, triggering conversations on its varied benefits.

- Such user stories serve as testimonials to the diverse capabilities of the Pro version of Perplexity AI in real-world use cases.

- Subscription Scrutiny: Decoding Perplexity AI Plans**: Questions and comparisons flourished as users delved into the differences between Pro and Enterprise Pro plans, particularly concerning model allocations like Sonnet and Opus.

- Enquiries were directed at understanding not just availability but also the specificity of models included in Perplexity’s varied subscription offerings.

LAION Discord

- BUD-E Board Expansion: BUD-E now reads clipboard text, a new feature shown in a YouTube video with details on GitHub. The feature demo, presented in low quality, sparked light-hearted comments.

- The community discussed AI model training challenges due to recurrent usage of overlapping datasets, with FAL.AI's dataset access hurdles highlighting the issue. Contrastingly, breakthroughs like Chameleon are linked to a variety of integrated data.

- Clipdrop Censorship Confusion: Clipdrop's NSFW detection misfired, mislabeling a benign image as inappropriate, much to the amusement of the community.

- Stability AI revises license for SD3 Medium, now under the Stability AI Community License, allowing increased access for individual creators and small businesses after community feedback.

- T-FREE Trend Setter: The new T-FREE tokenizer, detailed in a recently released paper, promises sparse activations over character triplets, negating the need for large reference corpora and potentially reducing embedding layer parameters by over 85%.

- The approach is praised for enhancing performance on less common languages and slimming embedding timers, adding a compact edge to LLMs.

- Alert: Scammer in the Guild: A scammer was flagged in the #[research] channel, putting the community on high alert.

- A string of identical phishing links offering a '$50 gift card' was posted across multiple channels by a user, raising concerns.

OpenAI Discord

- Voices in the Void: The unveiling of a new Moshi AI demo sparked a mix of excitement for its real-time voice interaction and disappointment over issues with interruptions and looped responses.

- Hume AI's playground was scrutinized for its lack of long-term memory, frustrating users who seek persistent AI conversations.

- Memory Banks in Question: GPT's memory prowess came under fire as it saves user preferences but still fabricates responses, with members suggesting enhanced customization to mitigate this.

- A heated GPT-2 versus modern models debate surfaced, comparing the cost-efficiency of older models with the performance leaps in current iterations like GPT-3.5 Turbo.

- ChatGPT: Free vs. Plus Plans: Advantages of the paid ChatGPT Plus plan were clarified, detailing perks such as a higher usage cap, DALL·E access, and an expanded context window.

- GPT-4 usage concerns were addressed, with cooldown periods in place after limit hits, specifically allowing Plus members up to 40 messages every 3 hours.

- AI Toolbox Expansion: Community members explored tools for testing multiple AI responses to prompts, suggesting a custom-built tool and existing options for efficient assessment.

- Conversation turned to API integrations, looking at Rigorous Aggregate Generators (RAG) for linking AI models to diverse datasets and utilizing existing Assistant API endpoints.

- Contest with Context: In #prompt-engineering, strategies for contesting traffic tickets were delineated, advising structured approaches and legal argumentation techniques.

- Discussions blossomed over creating an employee recognition program to heighten workplace morale, focusing on goals and recognition criteria for notable contributions.

Nous Research AI Discord

- Datasets Deluge by Replete-AI: Replete-AI dropped two gargantuan datasets, titled Everything_Instruct and Everything_Instruct_Multilingual, boasting 11-12GB and over 6 million data stripes. Intent is to amalgamate variegated instruct data to advance AI training.

- The Everything_Instruct targets English, while Everything_Instruct_Multilingual brings in a linguistic mix to broaden language handling of AI. Both sets echo past successes like bagel datasets and take a cue from EveryoneLLM AI models. Dive in at Hugging Face.

- Nomic AI Drops GPT4All 3.0: The latest by Nomic AI, GPT4All 3.0, hits the scene as an open-source, local LLM desktop app catering to a plethora of models and prioritizing privacy. The app is noted for its redesigned user interface and is licensed under MIT. Explore its features.

- Touting more than a quarter-million monthly active users, GPT4All 3.0 facilitates private, local interactions with LLMs, cutting internet dependencies. Uptake has been robust, signaling a shift towards localized and private AI tool usage.

- InternLM-XComposer-2.5 Raises the Bar: InternLM introduced InternLM-XComposer-2.5, a juggernaut in large-vision language models that brilliantly juggles 24K interleaved image-text contexts and scales up to 96K via RoPE extrapolation.

- This model is a frontrunner with top-tier results on 16 benchmarks, closing in on behemoths like GPT-4V and Gemini Pro. Brewed with a sprinkle of innovation and a dash of competitive spirit, this InternLM concoction awaits.

- Claude 3.5's Conundrum and Lockdown: Attempts to bypass the ethical constraints in Claude 3.5 Sonnet led to frustration among users, with strategies around specific pre-prompts making little to no dent.

- Despite the resilience of Claude's restrictions, suggestions to experiment with Anthropic's workbench were shared. Yet, users were cautioned about the risks of account restrictions following such endeavors. Peer into the conversation.

- Apollo's Artistic AI Ascent: Achyut Benz bestowed the Apollo project upon the world, an AI that crafts visuals akin to the admired 3Blue1Brown animations. Built atop Next.js, it taps into GroqInc and interweaves both AnthropicAI 3.5 Sonnet & GPT-4.

- Apollo is all about augmenting the learning experience with AI-generated content, much to the enjoyment of the technophile educator. Watch Apollo's reveal.

OpenRouter (Alex Atallah) Discord

- Quantum Leap in LLM Deployment: OpenRouter's deployment strategy for LLM models specifies FP16/BF16 as the default quantization standard, with exceptions noted by an associated quantization icon.

- The adaptation of this quantization approach has sparked detailed discussions on the technical implications and efficiency gains.

- API Apocalypse Averted by OpenRouter: A sudden change in Microsoft's API could have spelled disaster for OpenRouter users, but a swift patch brought things back in line, earning applause from the community.

- The fix restored harmony, reflecting OpenRouter’s readiness for quick turnarounds in the face of technical disruptions.

- Infermatic Instills Privacy Confidence: In an affirmative update, Infermatic declared its commitment to real-time data processing with its new privacy policy, explicitly stating it won’t retain input prompts or model outputs.

- This update brought clarity and a sense of security to users, distancing the platform from previous data retention concerns.

- DeepSeek Decodes Equation Enigma: Users troubleshooting issues with DeepSeek Coder found a workaround for equations not rendering by ingeniously using regex to tweak output strings.

- Persistent problems with TypingMind's frontend not correctly processing prompts were flagged for a fix, demonstrating proactive community engagement.

- Pricey API Piques Peers: Debate heated up around Mistral's Codestral API pricing strategy, with the 22B model considered overpriced by some community members.

- Users steered each other towards more budget-friendly alternatives like DeepSeek Coder, which offers competitive coding capabilities without breaking the bank.

Eleuther Discord

- Fingerprints of the Digital Minds: The community explored Topological Data Analysis (TDA) for unique model fingerprinting and debated the utility of checksum-equivalent metrics for model validation, such as for the

LlamaForCausalLMusing tools like lm-evaluation-harness.- Discussions also touched on Topological Data Analysis to profile model weights by their invariants, referencing resources like TorchTDA and considering bit-level innovations from papers like 1.58-bit LLMs for efficiency.

- Tales of Scaling and Optimization: Attention was given to the efficientcube.ipynb notebook for scaling laws, while AOT compilation capabilities in JAX were highlighted as a step forward in pre-execution code optimization.

- FLOPs estimation methods for JIT-ed functions in Flax were shared, and critical batch sizes were reinvestigated, challenging the assumption that performance is unaffected below a certain threshold.

- Sparse Encoders and Residual Revelations: The deployment of Sparse Autoencoders (SAEs) trained on Llama 3 8B's residual stream discussed utilities for integrating with LLMs for better processing, furnished with details on the model's implementation.

- Looking into residual stream processing, the strategy organized SAEs by layer for optimizing their synergy with Llama 3 8B, as expanded upon in the associated model card.

- Harnessing the Horsepower of Parallel Evaluation: Enthusiast surfaced questions on the viability of caching preprocessed inputs and resolving Proof-Pile Config Errors, noting that changing to

lambada_openaicircumvented the issue.- Notables included model name length issues, prompting OSError(36, 'File name too long'), and guidance was sought on setting up parallel model evaluation, with warnings about single-process evaluation assumptions.

LangChain AI Discord

- LangChain Lamentations: LangChain users reported performance issues when running on CPU, with long response times and convoluted processing steps being a significant pain point.

- The debate is ongoing whether the sluggishness is due to inefficient model reasoning or the absence of GPU acceleration, while some suggest it's bogged down by unnecessary complexity, as discussed here.

- AI Model Showdown: OpenAI vs ChatOpenAI: Discussions ensued over the advantages of using OpenAI over ChatOpenAI as the former might be phased out, sparking a comparison of their implementation efficiencies.

- Members shared mixed experiences around task-specific requirements, while some preferred OpenAI for its familiar interface and tooling.

- Juicebox.ai: The People Search Prodigy: Juicebox.ai's PeopleGPT was praised for its Boolean-free natural language search capabilities to swiftly identify qualified talent, enhancing the talent search with ease-of-use features.

- The technical community lauded its combination of filtering and natural language search, elevating the overall experience for users; details are available here.

- RAG Chatbot Calendar Conundrum: A LangChain RAG-based chatbot developer sought guidance for integrating a demo scheduling function, highlighting the complexities found in the implementation process.

- Community response was geared towards assisting with this integration, indicating a cooperative effort to enhance the chatbot's capabilities despite the absence of explicit links to resources.

- Visual Vectored Virtuosity: A blogpost outlined creating an E2E Image Retrieval app using Lightly SSL and FAISS, complete with a vision transformer model.

- The post, accompanied by Colab Notebook and Gradio app, was shared to encourage peer learning and application.

LlamaIndex Discord

- LlamaIndex RAG-tastic Webinar Whirl: LlamaIndex partnered with Weights & Biases for a webinar demystifying the complexities involved in RAG experimentation and evaluation. The session promises insights into accurate LLM Judge alignment, with a spotlight on Weights and Biases collaboration.

- Anticipation builds as the RAG pipeline serves as a focal point for the upcoming webinar, highlighting challenges in the space. A hint of skepticism over RAG's nuanced evaluation underscores community buzz around the event.

- Rockstars of AI Edging Forward: Rising star @ravithejads shares his ascent in becoming a rockstar AI engineer and educator, fueling aspirations within the LlamaIndex community.

- LlamaIndex illuminates @ravithejads's contribution to OSS and consistent engagement with AI trends, igniting discussions about pathways for professional development in AI.

- Reflecting on 'Reflection as a Service': 'Reflection as a Service' enters the limelight at LlamaIndex, proposing an introspective mechanism to boost LLM reliability by adding a self-corrective layer.

- This innovative approach captivated the community, sparking dialogue on its potential to enhance agentic applications through intelligent self-correction.

- Cloud Function Challenges Versus Collaborative Fixes: Discussions surfaced on the Google Cloud Function regarding hardships with multiple model loading, sparking a collective search for more efficient methods among AI enthusiasts.

- Community wisdom circulates as members share their strategies for reducing load times and optimizing model use, showcasing a collaborative spirit in problem-solving.

- CRAG – Corrective Measures on Stage: Yan et al. introduce Corrective RAG (CRAG), an innovative LlamaIndex service designed to dynamically validate and correct irrelevant context during retrieval, stirring interest among AI practitioners.

- Connections are drawn between CRAG and possibilities for advancing retrieval-augmented generation systems, fueling forward-thinking conversations on refinement and accuracy.

Cohere Discord

- Open Invites to AI Soirees: Community members clarified that no special qualifications are necessary to attend the London AI event; simply filling out a form will suffice. The inclusive policy ensures that events are accessible to all, fostering a diverse exchange of ideas.

- Discussion around event attendance highlighted the importance of community engagement and open access in AI gatherings, as these policies promote broader participation and knowledge sharing across fields and expertise levels.

- API Woes in Production Mode: A TypeError issue was raised by a member deploying an app using Cohere's rerank API in production, sparking a troubleshooting thread in contrast with its smooth local operation.

- The community’s collaborative effort in addressing the rerank API problems showcased the value of shared knowledge and immediate peer support in overcoming technical challenges in a production environment.

- Fresh Faces in AI Development: Newly joined members of diverse backgrounds, including a Computer Science graduate and an AI developer focused on teaching, introduced themselves, expressing eagerness to contribute to the guild's collective expertise.

- The warm welcome extended to newcomers underlines the guild's commitment to nurturing a vibrant community of AI enthusiasts poised for collaborative growth and learning.

- Command R+ Steals the Limelight: Cohere announced their most potent model in the Command R family, Command R+, now ready for use, creating quite the buzz among the tech-savvy audience.

- The release of Command R+ is seen as a significant step in advancing the capabilities and applications of AI models, indicating a continuous drive towards innovation in the field.

- Saving Scripts with Rhea.run: The introduction of a 'Save to Project' feature in Rhea.run was met with enthusiasm as it allows users to create and preserve interactive applications through conversational HTML scripting.

- This new feature emphasizes Rhea.run’s dedication to simplifying the app creation process, thereby empowering developers to build and experiment with ease.

OpenInterpreter Discord

- MacOS Copilot Sneaks into Focus: The Invisibility MacOS Copilot featuring GPT-4, Gemini 1.5 Pro, and Claude-3 Opus was highlighted for its context absorption capabilities and is currently available for free.

- Community members showed interest in potentially open-sourcing grav.ai to incorporate similar functionalities into the Open Interpreter (OI) ecosystem.

- 'wtf' Command Adds Debugging Charm to OI: The 'wtf' command allows Open Interpreter to intelligently switch VSC themes and provide terminal debugging suggestions, sparking community excitement.

- Amazement over the command's ability to execute actions intuitively was voiced, with plans to share further updates on security roundtables and the upcoming OI House Party event.

- Shipping Woes for O1 Light Enthusiasts: Anticipation and frustration were the tones within the community regarding the 01 Light shipments, as discussions revolved around delays.

- Echoed sentiments of waiting reinforced the collective desire for clear communication on shipment timelines.

Modular (Mojo 🔥) Discord

- Mojo Objects Go Haywire!: Members discussed a casting bug affecting Mojo objects compared to Python objects, potentially linked to GitHub Issue #328.

- MLIR's Unsigned Integer Drama: The community discovered that MLIR interpreted unsigned integers as signed, sparking discussion and leading to the creation of GitHub Issue #3065.

- Concern surged around how this unsigned integer casting issue could impact various users, pivoting the conversation to this emerging bug.

- Compiler Nightly News: Segfaults and Solutions: Recent segfaults in the nightly build led to the submission of a bug report and sharing the problematic file, seen here.

- Added to this, new compiler releases were announced, with improvements including an

exclusiveparameter and new methods in version2024.7.505, linked in the changelog.

- Added to this, new compiler releases were announced, with improvements including an

- Marathon March: Mojo's Matrix Multiplication: Benny impressed by sharing a matrix multiplication technique and recommended tailoring block sizes, advising peers to consult UT Austin papers for insights.

- In a separate discussion thread, speed bumps occurred with increased compilation times and segfaults in the latest test suite, with participants directing each other to resources such as a Google Spreadsheet for papers.

LLM Finetuning (Hamel + Dan) Discord

- Solo Smithing Without Chains: Discussion confirmed LangSmith can operate independently of LangChain as demonstrated in examples on Colab and GitHub. LangSmith allows for instrumentation of LLMs, offering insights into application behaviors.

- Community members assuaged concerns about GPU credits during an AI course, emphasizing proper communication of terms and directing to clear info on the course platform.

- Credit Clarity & Monthly Challenges: A hot topic revolves around the $1000 monthly credit and its perishability, with consensus on no rollover but still appreciating the offer.

- A user's doubt about a mysteriously increased balance of $1030 post-Mistral finetuning led to speculation on a possible $30 default credit per month.

- Training Tweaks: Toiling with Tokens: A thread on the

Meta-Llama-3-8B-Instructsetup usingtype: input_outputsparked some confusion, with users examining special tokens and model configurations, referencing GitHub.- Trainers experienced better outcomes favoring L3 70B Instruct over L3 8B, serendipitously found when a configuration defaulted to the instruct model, highlighting model choice implications.

- Credit Confusion & Course Catch-up: Uncertainty loomed about credit eligibility for services, with one member seeking clarification on terms post-enrollment since June 14th.

- Another user echoed concerns about compute credit expiration, requesting an extension for the remaining credit which slipped through the calendar cracks.

Interconnects (Nathan Lambert) Discord

- Debunking the Demo Dilemma: Community member challenged the legitimacy of an AI demo, calling into question the realism of its responses and highlighting significant response time problems. The thread included a link to the contentious demonstration.

- In an apologetic pivot, Stability AI made revisions to the Stable Diffusion 3 Medium in response to community feedback, along with clarifications on their license, earmarking a path for future high-quality Generative AI endeavors.

- Search Smackdown: BM42 vs. BM25: The Qdrant Engine touted its BM42 as a breakthrough in search technology, promising superior RAG integration over the long-established BM25, as seen in their announcement.

- Critics, including Jo Bergum, questioned the integrity of BM42's reported success, suggesting the improbability of the claims and sparking debate on the validity of the findings presented on the dataset from Quora.

- VAEs Vexation and AI Investment Acumen: A humorous account of the difficulties in grasping Variational Autoencoders surfaced, juxtaposed against a claim of exceptional AI investment strategy within the community.

- A serious projection deduced that for AI to bolster GDP growth effectively, it must range between 11-15%, while the community continues to grapple with Anthropic Claude 3.5 Sonnet's opaque operations.

- Google's Grinder in the Global AI Gauntlet: Users discussed Google's sluggish start in the Generative AI segment, expressing concerns over the company's messaging clarity and direction regarding its products like Gemini web app.

- Discourse evolved around the pricing model and effectiveness of Google’s Gemini 1.5, with comparisons to other AI offerings and software like Vertex AI, amid reflections on the First Amendment's application to AI.

OpenAccess AI Collective (axolotl) Discord

- API Queue System Quirks: Reports of issues with the build.nvidia API led to discovery of a new queue system to manage requests, signaling a potentially overloaded service.

- A member encountered script issues with build.nvidia API, observing restored functionality after temporary downtime hinting at service intermittency.

- YAML Yields Pipeline Progress: A member shared their pipeline's integration of YAML examples for few-shot learning conversation models, sparking interest for its application with textbook data.

- Further clarifications were provided on how the YAML-based structure contributed to efficient few-shot learning processes within the pipeline.

- Gemma2 Garners Stability: Gemma2 update brought solutions to past bugs. A reinforcement of version control with a pinned version of transformers ensures smoother future updates.

- Continuous Integration (CI) tools were lauded for their role in preemptively catching issues, promoting a robust environment against development woes.

- A Call for More VRAM: A succinct but telling message from 'le_mess' underlined the perennial need within the group: a request for more VRAM.

- The single-line plea reflects the ongoing demand for higher performance computing resources among practitioners, without further elaboration in the conversation.

tinygrad (George Hotz) Discord

- Tensor Trouble in Tinygrad: Discussions arise about Tensor.randn and Tensor.randint creating contiguous Tensors, while

Tensor.fullleads to non-contiguous structures, prompting an examination of methods that differ from PyTorch's expectations.- A community member queried about placement for a bug test in tinygrad, debating between test_nn or test_ops modules, with the final decision leaning towards an efficient and well-named test within test_ops.

- Training Pains and Gains: Tinygrad users signal concerns about the framework's large-scale training efficiency, calling it sluggish and economically impractical, while considering employing BEAM search despite its complexity and time demands.

- A conversation sparks around the use of pre-trained PyTorch models in Tinygrad, directing users towards

tinygrad.nn.state.torch_loadfor effective model inference operations.

- A conversation sparks around the use of pre-trained PyTorch models in Tinygrad, directing users towards

- Matmul Masterclass: A blog post showcasing a guide to high-performance matrix multiplication achieves over 1 TFLOPS on CPU, shared within the community, detailing the practical implementation approach and source code.

- The share included a link to the blog post that breaks down matrix multiplication into an accessible 150 line C program, inviting discussion on performance optimization in Tinygrad.

Torchtune Discord

- Torchtune's Tuning Talk: Community members exchanged insights on setting evaluation parameters for Torchtune, with mentions of a potential 'validation dataset' parameter to tune performance.

- Others raised concerns about missing wandb logging metrics, specifically for evaluation loss and grad norm statistics, highlighting a need for more robust metric tracking.

- Wandb Woes and Wins: A topic of discussion was wandb's visualization capabilities, where a grad norm graph miss sparked questions about its availability compared to tools like aoxotl.

- Suggestions included adjusting the initial learning rate to affect the loss curve, but despite optimizations, one member noted no significant loss improvements, emphasizing the challenges of parameter fine-tuning.

AI Stack Devs (Yoko Li) Discord

- Code Clash: Python meets TypeScript: A challenging encounter was shared regarding the integration of Python with TypeScript while setting up the Convex platform. Issues surfaced when Convex experienced launch bugs stemming from a lack of pre-installed Python.

- Furthermore, discussion revolved around the difficulties faced in automating the installation of the Convex local backend within a Docker environment, emphasizing the complication arose from the specific configuration of container folders as volumes.

- Pixel Hunt: In Search of the Perfect Sprite: A member explored the domain of sprite sheets, expressing their goal to find visuals resonant with the Cloudpunk game's style, but found their assortment from itch.io lacking the desired cyberpunk nuance.

- They are on the lookout for sprite resources that align better with Cloudpunk's distinctive aesthetic, as previous acquisitions fell short in mirroring the game's signature atmosphere.

DiscoResearch Discord

- Summarizing with a GPT Trio: Three GPTs Walk into a Bar and Write an Exec Summary blog post showcases a dynamic trio of Custom GPTs designed to extract insights, draft, and revise executive summaries swiftly.

- This toolkit enables the producing of succinct and relevant executive summaries under tight deadlines, streamlining the process for delivering condensed yet impactful briefs.

- Magpie's Maiden Flight on HuggingFace: The Magpie model makes its debut on HuggingFace Spaces, offering a tool for generating preference data, albeit with a duplication from davanstrien/magpie.

- User experiences reveal room for improvement, as feedback indicates that the model’s performance isn't fully satisfactory, yet the community remains optimistic about its potential applications.

MLOps @Chipro Discord

- Build With Claude Campaigns Ahead: Engineering enthusiasts are called to action for the Claude hackathon, a creative coding sprint winding down next week.

- Participants aim to craft innovative solutions, employing Claude's capabilities for a chance to shine in the closing contest.

- Kafka's Cost-Cutting Conclave: A webinar set for July 18th at 4 PM IST promises insights into optimizing Kafka for better performance and reduced expenses.

- Yaniv Ben Hemo and Viktor Somogyi-Vass will steer discussions, focusing on scaling strategies and efficiency in Kafka setups.

Datasette - LLM (@SimonW) Discord

- Jovial Jest at Job Jargon: Conversations have sprouted around the growing potential uses for embeddings in the field, sparking some playful banter about job titles.

- One participant quipped about renaming themselves an Embeddings AyEngineer*, lending a humorous twist to the evolving nomenclature in AI.

- Title Tattle Turns Trendy: The rise in embedding-specific roles leads to a light-hearted suggestion of the title Embeddings Engineer.

- This humorous proposition underscores the significance of embeddings in current engineering work and the community's creative spirit.

The LLM Perf Enthusiasts AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The YAIG (a16z Infra) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!