[AINews] Project Stargate: $500b datacenter (1.7% of US GDP) and Gemini 2 Flash Thinking 2

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Masa Son and Noam Shazeer are all you need.

AI News for 1/20/2025-1/21/2025. We checked 7 subreddits, 433 Twitters and 34 Discords (225 channels, and 4353 messages) for you. Estimated reading time saved (at 200wpm): 450 minutes. You can now tag @smol_ai for AINews discussions!

Days like these are a conundrum - on one hand, the obvious big earth-shattering news is the announcement of Project Stargate, a US "AI Manhattan project" led by OpenAI and Softbank, and supported by Softbank, OpenAI, Oracle, MGX, Arm, Microsoft, and NVIDIA. For scale, the actual Manhattan project cost $35B, inflation-adjusted.

Although this has been rumored for about a year, Microsoft's reduced role, no longer the exclusive compute partner to OpenAI, is conspicuous. As with any splashy PR stunt, one should beware AI-washing, but the project is very serious and should be treated as such.

However, it's not really news you can use today, which is what we aim to do here at your local AI newspaper.

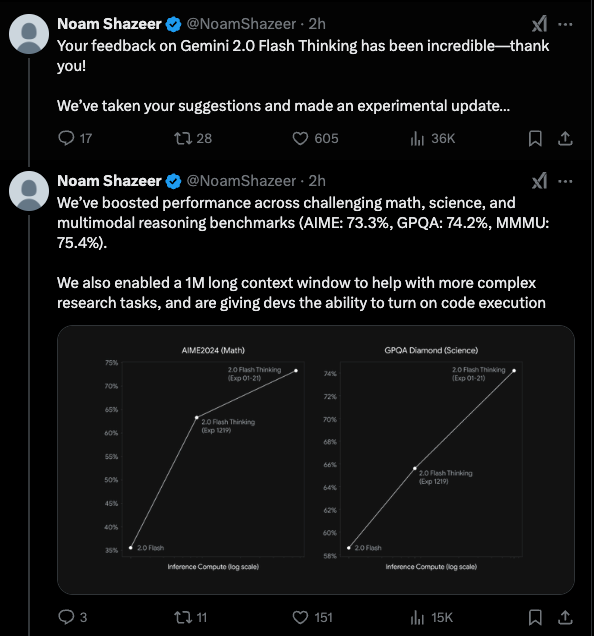

Fortunately, Noam Shazeer got you, with a second Gemini 2.0 Flash Thinking that brings another big leap over 2.0 Flash and a 1M-token long context that you can use today (we will enable it in AINews and Smol Talk tomorrow).

AI Studio also got a code interpreter.

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

TO BE COMPLETED

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek R1: Release, Performance, and Strategic Vision

- DeepSeek R1 (Qwen 32B Distill) is now available for free on HuggingChat! (Score: 364, Comments: 106): The Qwen 32B distillation of DeepSeek R1 is now accessible for free on HuggingChat.

- Hosting and Access Concerns: Users discuss the option to self-host DeepSeek R1 to avoid logging into HuggingChat, with some expressing frustration over the need for an account to evaluate the model. A suggestion was made to use a dummy email for account creation to bypass this requirement.

- Performance and Technical Issues: There are reports of performance issues such as the model becoming unresponsive, and discussions on the use of quantization (e.g., FP8, 8-bit) and system prompts affecting model performance. Some users noted that DeepSeek R1 is better at planning than code generation, and others shared tools like cot_proxy to manage the model's "thought" tags.

- Model Comparisons and Preferences: Comparisons were made between DeepSeek R1 and other models like Phi-4 and Llama 70B, with some users preferring distilled models for specific tasks like math and nuanced understanding. There is interest in exploring other variants like Qwen 14B and the anticipation of R1 Lite for improved consistency.
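
The "thought" tags mentioned above are the `<think>...</think>` blocks that R1-style models emit before their final answer. A minimal sketch of separating the two follows; it is not how cot_proxy itself works, and the function name `split_reasoning` is made up for illustration.

```python
import re

# R1-style completions put the reasoning inside <think>...</think>,
# followed by the user-facing answer.
THINK_CONTENT = re.compile(r"<think>(.*?)</think>", flags=re.DOTALL)
THINK_BLOCK = re.compile(r"<think>.*?</think>\s*", flags=re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw completion (hypothetical helper)."""
    reasoning = "\n".join(part.strip() for part in THINK_CONTENT.findall(text))
    answer = THINK_BLOCK.sub("", text).strip()
    return reasoning, answer


raw = "<think>\nThe user wants 2 + 2.\n</think>\n\nThe answer is 4."
reasoning, answer = split_reasoning(raw)
print("reasoning:", reasoning)
print("answer:", answer)
```

Keeping the reasoning rather than discarding it lets a UI show it collapsed, while only the answer is fed back into the chat history.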

- Inside DeepSeek’s Bold Mission (CEO Liang Wenfeng Interview) (Score: 124, Comments: 27): DeepSeek, led by CEO Liang Wenfeng, distinguishes itself with a focus on fundamental AGI research over rapid commercialization, aiming to shift China's role from a "free rider" to a "contributor" in global AI. Their MLA architecture drastically reduces memory usage and costs, with inference costs significantly lower than Llama3 and GPT-4 Turbo, reflecting their commitment to efficient innovation. Despite challenges like U.S. chip export restrictions, DeepSeek remains committed to open-source development, leveraging a bottom-up structure and young local talent, which could position them as a viable alternative to the closed-source trend in AI.

- DeepSeek's Focus on AGI: Commenters emphasize that DeepSeek's commitment to AGI, rather than profit, is notable, with some likening their approach to OpenAI's early days. There is skepticism about whether DeepSeek will maintain this open-source ethos long-term or eventually follow a closed-source model like other tech giants.

- Leadership and Recognition: Liang Wenfeng is highlighted for his leadership, with a significant mention of his meeting with Chinese Premier Li Qiang, indicating high-level recognition and support. This meeting underscores DeepSeek's growing influence and potential impact on AI development in China.

- Young Talent and Innovation: Commenters praise DeepSeek's team for their creativity and innovation, noting that the team consists of young, recently graduated PhDs who have accomplished significant achievements despite not being well-known before joining the company. This highlights the potential of leveraging young talent for groundbreaking advancements in AI.

- DeepSeek-R1-Distill-Qwen-1.5B running 100% locally in-browser on WebGPU. Reportedly outperforms GPT-4o and Claude-3.5-Sonnet on math benchmarks (28.9% on AIME and 83.9% on MATH). (Score: 72, Comments: 17): DeepSeek-R1-Distill-Qwen-1.5B is running entirely in-browser using WebGPU and reportedly surpasses GPT-4o and Claude-3.5-Sonnet in math benchmarks, achieving 28.9% on AIME and 83.9% on MATH.

- ONNX is discussed as a file format for LLMs, with some users noting it offers performance optimization, potentially up to 2.9x faster on specific hardware compared to other formats like safetensors and GGUF. However, the general consensus is that these are just different data formats appreciated by different hardware/software setups.

- DeepSeek-R1-Distill-Qwen-1.5B is noted for running entirely in-browser on WebGPU, outperforming GPT-4o in benchmarks, with an online demo and source code available on Hugging Face and GitHub. However, some users feel it doesn't match GPT-4o in real-world applications despite its impressive benchmark results.

Theme 2. New DeepSeek R1 Tooling Enhances Usability and Speed

- Deploy any LLM on Huggingface at 3-10x Speed (Score: 109, Comments: 13): The image illustrates a digital dashboard for "Dedicated Deployments" on Huggingface, showcasing two model deployment cards. The "deepseek-ai/DeepSeek-R1-Distill-Llama-70B" model is quantizing at 52% using four NVIDIA H100 GPUs, while the "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B" model is operational on one NVIDIA H100 GPU, both recently active and ready for requests.

- avianio introduced a deployment service claiming 3-10x speed improvement over HF Inference/VLLM with a setup time of around 5 minutes, utilizing H100 and H200 GPUs. The service supports about 100 model architectures, with future plans for multimodal support, and offers cost-effective, private deployments without logging, priced at $0.01 per million tokens for high traffic scenarios.

- siegevjorn and killver questioned the 3-10x speed claim, seeking clarification on comparison metrics and hardware consistency. killver specifically asked if the claim was valid on the same hardware.

- omomox estimated the cost of deploying 4x H100s to be around $20/hr, highlighting potential cost considerations for users.

- Better R1 Experience in open webui (Score: 117, Comments: 41): The post introduces a simple open webui function for R1 models that enhances the user experience by replacing

- OpenUI vs. LMstudio: There is a comparison between OpenUI and LMstudio, with users expressing a desire for OpenUI to be as responsive as LMstudio. However, the author highlights that webui offers more flexibility by allowing users to modify input and output freely.

- DeepSeek API Support: Some users request adding support for DeepSeek's API to the open webui function, indicating interest in broader compatibility beyond local use.

- VRAM Limitations and Solutions: Users discuss the challenges of using models with limited VRAM, such as 8GB, and share resources like the DeepSeek-R1-Distill-Qwen-7B-GGUF on Hugging Face to potentially address these limitations.

Theme 3. Comparison of DeepSeek R1 Efficiency and Performance to Competitors

- I calculated the effective cost of R1 Vs o1 and here's what I found (Score: 58, Comments: 17): The post analyzes the cost-effectiveness of R1 versus o1 models by comparing their token generation and pricing. R1 generates 6.22 times more reasoning tokens than o1, while o1 is 27.4 times more expensive per million output tokens. Thus, R1 is effectively 4.41 times cheaper than o1 when considering token efficiency, though actual costs may vary slightly due to assumptions about token-to-character conversion.

- Several commenters, including UAAgency and inkberk, criticize the methodology used in the cost comparison, suggesting that the analysis might be biased or based on assumptions that don't accurately reflect real-world usage. Dyoakom and pigeon57434 highlight the potential lack of transparency from OpenAI, questioning the representativeness of the examples provided by the company.

- dubesor86 provides detailed testing results, indicating that R1 does not generate 6.22 times more reasoning tokens than o1. In their testing, R1 produced about 44% more thought tokens, and the real cost difference was 21.7 times cheaper for R1 compared to o1, based on API usage data, which contrasts with the original post's conclusions.

- BoJackHorseMan53 advises against relying solely on assumptions and suggests running actual queries with the API to determine the true cost differences, emphasizing the importance of verifying assumptions with practical testing.
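
The arithmetic behind the post's headline figure is a single division: the per-token price ratio divided by the ratio of reasoning tokens generated. The sketch below uses only the numbers quoted above; the function name is illustrative, and it assumes that output-token price and reasoning-token count are the only factors, which is exactly the simplification the commenters object to.

```python
def effective_cost_ratio(price_ratio: float, token_ratio: float) -> float:
    """How many times cheaper R1 is per task, given that o1 costs
    `price_ratio` times more per token and R1 emits `token_ratio`
    times more reasoning tokens."""
    return price_ratio / token_ratio


# Figures from the original post.
post_estimate = effective_cost_ratio(price_ratio=27.4, token_ratio=6.22)

# Same price ratio, but with dubesor86's observation of ~44% more tokens.
alt_estimate = effective_cost_ratio(price_ratio=27.4, token_ratio=1.44)

print(f"post's assumptions:  {post_estimate:.2f}x cheaper")
print(f"44% more tokens:     {alt_estimate:.2f}x cheaper")
```

The second figure does not reproduce the 21.7x that dubesor86 reports, which came from actual API usage rather than from list-price ratios, so it is a sensitivity check and not a replacement for measuring real queries.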

- DeepSeek-R1 PlanBench benchmark results (Score: 56, Comments: 2): The PlanBench benchmark results as of January 20, 2025, compare various models like Claude-3.5 Sonnet, GPT-4, LLaMA-3.1 405B, Gemini 1.5 Pro, and Deepseek R1 across "Blocksworld" and "Mystery Blocksworld" domains. Key metrics include "Zero shot" scores, performance percentages, and average API costs per 100 instances, with models like Claude-3.5 Sonnet achieving a 54.8% success rate (329 of 600 questions).

Theme 4. Criticism of 'Gotcha' Tests in LLMs and Competitive Context

- Literally unusable (Score: 95, Comments: 102): Criticism of LLM 'Gotcha' tests highlights the structured response of a language model in counting occurrences of the letter 'r' in "strawberry." The model's analytical and instructional approach includes writing out the word, identifying, and counting the 'r's, emphasizing the presence of 2 lowercase 'r's.

- Model Variability and Performance: Commenters discuss how different model architectures and pretraining data result in varying performance, with smaller models often diverging from results of larger models like R1. Custodiam99 mentions that even the 70b model can be practically unusable, whereas others like Upstairs_Tie_7855 report outstanding results with the same model.

- Quantization and Settings Impact: Several users highlight the importance of using the correct quantization settings and system prompts to achieve accurate results. Youcef0w0 notes that models break with lower cache types than Q8, while TacticalRock emphasizes using the right quantization and temperature settings as per documentation.

- Practical Application and Limitations: Discussions reveal that the models are not AGI but tools requiring proper usage to solve problems effectively. ServeAlone7622 suggests a detailed process for using reasoning models, while MixtureOfAmateurs and LillyPlayer illustrate the models' struggles with specific prompts and overfitting on certain tasks.
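
For reference, the write-out-and-count procedure described in the post is easy to check directly. The sketch below assumes the "2" in the summary is the model's own answer; a plain count gives a different number.

```python
word = "strawberry"

# Write out the word letter by letter and record where each 'r' falls
# (1-based positions, as a person would count them).
positions = [i for i, ch in enumerate(word, start=1) if ch == "r"]

print(f"'r' appears {len(positions)} times in {word!r}, at positions {positions}")
```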

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. OpenAI Investment $500B: Partnership with Oracle and Softbank

- Trump to announce $500 billion investment in OpenAI-led joint venture (Score: 595, Comments: 181): Donald Trump plans to announce a $500 billion investment in a project led by OpenAI. Specific details of the joint venture and its objectives have not been provided.

- Misunderstanding of Investment Source: Many commenters clarify that the $500 billion investment is from the private sector, not the U.S. government. This investment involves OpenAI, SoftBank, and Oracle in a joint venture called Stargate, initially committing $100 billion with potential growth to $500 billion over four years.

- Concerns About Infrastructure and Location: Commenters express concerns about the adequacy of the U.S. electrical grid to handle AI infrastructure needs, suggesting future reliance on nuclear reactors. The choice of Texas for the project is questioned due to its isolated and unreliable electrical grid.

- Skepticism and Political Concerns: There is skepticism about whether the investment will materialize and criticism of the political implications, with some viewing it as aligning with fascist tendencies. The announcement is compared to previous speculative projects like "Infrastructure week" and the Wisconsin plant.

- Sam Altman’s expression during the entire AI Infra Deal Announcement (Score: 163, Comments: 51): The post lacks specific details or context about Sam Altman's expression during the AI Infra Deal announcement, providing no further information or insights.

- Discussions around Sam Altman's demeanor during the announcement highlight perceptions of anxiety and stress, with comments suggesting he often looks this way. Users liken his expression to a "Fauci face" or "Debra Birx" and speculate about the pressures he faces in his role.

- Several comments humorously reference Elon Musk and geopolitical figures like Putin, suggesting that Altman might be under significant pressure due to internal and external political dynamics. Comparisons are drawn to oligarch management and defenestration politics.

- The conversation includes light-hearted and sarcastic remarks about Altman's expression, with users jokingly attributing it to being a "twink waiting to see his sugardaddy" or worrying about Musk's reactions, indicating a mix of humor and critique in the community's perception of Altman.

Theme 2. OpenAI's New Model Operators

- CEO of Exa with inside information about Open Ai newer models (Score: 215, Comments: 105): CEO of Exa claims to have inside information on the capabilities of OpenAI's newer models, specifically questioning the potential effectiveness of these models as operators. The post does not provide further details or context.

- The discussion highlights skepticism about the hype surrounding AGI and OpenAI's newer models, with several users questioning the realism of claims and drawing parallels to previous overhyped technologies like 3D printers. Users express doubt about the real-world performance of models like o3 compared to their benchmark results, emphasizing the gap between hype and practical application.

- Several comments explore the limitations of current AI models, focusing on their inability to handle tasks that require real-time learning and complex reasoning, such as video comprehension and understanding 3D spaces. Altruistic-Skill8667 predicts that achieving AGI will require significant advancements in compute power and online learning, with a potential timeline extending to 2028 or 2029.

- Some users express concern over the socio-political implications of AI advancements, suggesting that AGI could be used to subjugate the working class under an oligarchic regime. A few comments also touch on the role of government and tech oligarchies in shaping AI's future, with comparisons between the US and China in terms of tech control and regulation.

Theme 3. Anthropic's ASI Prediction: Implications of 2-3 Year Timeline

- Anthropic CEO is now confident ASI (not AGI) will arrive in the next 2-3 years (Score: 173, Comments: 115): Anthropic's CEO, Amodei, predicts Artificial Superintelligence (ASI) could be achieved in the next 2-3 years, surpassing human intelligence. The company plans to release advanced AI models with enhanced memory and two-way voice integration for Claude, amidst competition with companies like OpenAI.

- Discussions highlight skepticism about ASI predictions within 2-3 years, with some researchers and commenters arguing that significant improvements in AI models are needed and that current AI systems are still far from achieving AGI. Dario Amodei's credibility is noted, given his background in AI research, but there is debate over whether his predictions are realistic.

- The distinction between narrow AI and general AI is emphasized, with current AI systems excelling in specific tasks but lacking the comprehensive capabilities of AGI. Commenters note that despite advancements, AI systems still struggle with many tasks that are simple for humans, and the path to AGI and ASI remains undefined.

- Funding and business motivations are questioned, with some suggesting that announcements of imminent ASI could be strategically timed to coincide with fundraising efforts. The comment about Anthropic's current fundraising activities supports this perspective.

AI Discord Recap

A summary of Summaries of Summaries by o1-preview-2024-09-12

Theme 1. DeepSeek R1 Rocks the AI World

- DeepSeek R1 Dethrones Competitors: The open-source DeepSeek R1 matches OpenAI's o1 performance, thrilling the community with its cost-effectiveness and accessibility. Users report strong performance in coding and reasoning tasks, with benchmarks showing it outperforming other models.

- Integration Frenzy Across Platforms: Developers scramble to integrate DeepSeek R1 into tools like Cursor, Codeium, and Aider, despite occasional hiccups. Discussions highlight both successes and challenges, especially regarding tool compatibility and performance.

- Censorship and Uncensored Versions Spark Debate: While some praise DeepSeek R1's safety features, others bemoan over-censorship hindering practical use. An uncensored version circulates, prompting debates about the balance between safety and usability.

Theme 2. OpenAI's Stargate Project Shoots for the Moon

- OpenAI Announces $500 Billion Stargate Investment: OpenAI, along with SoftBank and Oracle, pledges to invest $500 billion in AI infrastructure, dubbing it the Stargate Project. The initiative aims to bolster U.S. AI leadership, with comparisons drawn to the Apollo Program.

- Community Buzzes over AI Arms Race: The staggering investment stirs discussions about an AI arms race and geopolitical implications. Some express concerns that framing AI development as a competition could lead to unintended consequences.

- Mistral AI Makes a Mega IPO Move: Contradicting buyout rumors, Mistral AI announces IPO plans and expansion into Asia-Pacific, fueling speculation about its profitability and strategy.

Theme 3. New Models and Techniques Push Boundaries

- Liquid AI's LFM-7B Makes a Splash: Liquid AI's LFM-7B claims top performance among 7B models, supporting multiple languages including English, Arabic, and Japanese. Its focus on local deployment excites developers seeking efficient, private AI solutions.

- Mind Evolution Evolves AI Thinking: A new paper introduces Mind Evolution, an evolutionary search strategy that achieves over 98% success on planning tasks. This approach beats traditional methods like Best-of-N, signaling a leap in scaling LLM inference.

- SleepNet and DreamNet Dream of Better AI: Innovative models SleepNet and DreamNet propose integrating 'sleep' phases into training, mimicking human learning processes. These methods aim to balance exploration and precision, inspiring discussions on novel AI training techniques.

Theme 4. Users Battle Bugs and Limitations in AI Tools

- Windsurf Users Weather Lag Storms: Frustrated Windsurf users report laggy prompts and errors like "incomplete envelope: unexpected EOF", pushing some towards alternatives like Cursor. The community seeks solutions while expressing discontent over productivity hits.

- Flow Actions Limit Trips Up Coders: Codeium's Flow Actions limit hampers workflows, with users grumbling about repeated bottlenecks. Suggestions for strategic usage emerge, but many await official resolutions.

- Bolt Users Lose Tokens to Bugs: Developers lament losing tokens due to buggy code on Bolt, advocating for free debugging to mitigate losses. One exclaims, "I've lost count of wasted tokens!", highlighting cost concerns.

Theme 5. AI's Expanding Role in Creative and Technical Fields

- DeepSeek R1 Masters Math Tutoring: Users harness DeepSeek R1 for mathematics tutoring, praising its step-by-step solutions and support for special educational needs. Its speed and local deployment make it a favorite among educators.

- Generative AI Shapes Creative Industries: Articles spark debates on AI's impact on art and music, with some fearing AI might replace human creators. Others argue that human skills remain crucial to guide AI outputs effectively.

- Suno Hit with Copyright Lawsuit Over AI Music: AI music generator Suno faces fresh legal challenges from Germany's GEMA, accused of training on unlicensed recordings. The lawsuit fuels industry debates on the legality of AI-generated content.

PART 1: High level Discord summaries

Unsloth AI (Daniel Han) Discord

- DeepSeek-R1's Deceptive Depth: The DeepSeek-R1 model's maximum token length was found to be 16384 instead of the expected 163840, prompting bug concerns in code deployment.

- A tweet about RoPE factors and model embeddings triggered further discussion, with members suggesting incomplete usage of the model.

- LoRA Llama 3 Tuning Tactics: A Medium article by Gautam Chutani demonstrated LoRA-based fine-tuning of Llama 3, integrating Weights & Biases and vLLM for serving.

- He stressed cutting down on GPU overhead via LoRA injections, with community remarks pointing to a more resource-friendly alternative than high-end baseline finetunes.

- Chinchilla's Crisp Calculation: The Chinchilla paper recommends proportional growth of model size and training tokens for peak efficiency, reshaping data planning strategies.

- Participants argued the Chinchilla optimal approach sidesteps focusing on narrow parameter segments, stressing total parameter involvement as a safer strategy.
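
As a rough illustration of what "proportional growth" means in practice, the sketch below splits a training compute budget between parameters and tokens. Both constants are assumptions that do not appear in the discussion: the commonly quoted rule of thumb of about 20 training tokens per parameter, and the usual approximation that training costs about 6 FLOPs per parameter per token.

```python
import math

TOKENS_PER_PARAM = 20.0       # assumption: popular Chinchilla rule of thumb
FLOPS_PER_PARAM_TOKEN = 6.0   # assumption: C ~= 6 * N * D training-cost estimate


def compute_optimal(flops_budget: float) -> tuple[float, float]:
    """Return (n_params, n_tokens) that spend `flops_budget` while keeping
    tokens proportional to parameters."""
    # C = 6 * N * D with D = 20 * N  =>  N = sqrt(C / (6 * 20))
    n_params = math.sqrt(flops_budget / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens


for budget in (1e21, 1e22, 1e23):
    n, d = compute_optimal(budget)
    print(f"{budget:.0e} FLOPs -> {n / 1e9:6.2f}B params, {d / 1e9:8.1f}B tokens")
```

Under these assumptions, parameters and tokens each grow with the square root of compute, so a 100x larger budget means roughly 10x more of both.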

- Synthetic & Mixed Data Gains: Some promoted synthetic data for tighter evaluation alignment, while others applied mixed-format datasets in Unsloth to broaden coverage in training.

- Attendees noted dynamic adjustments can mitigate overfitting, yet domain-specific relevance remains questionable when venturing beyond real-world material.

- Open-Source UI Overdrive: OpenWebUI, Ollama, and Flowise surfaced as next targets for integration, while Kobold and Aphrodite remain active through the Kobold API.

- Invisietch confirmed a long to-do list, including a CLI for synthetic dataset creation, aiming for a unified backend API to streamline everything.

Cursor IDE Discord

- OpenAI's Stargate Strikes Grand: OpenAI announced a $500 billion investment plan called the Stargate Project to build new AI infrastructure in the US, with cooperation from SoftBank and others, as seen here.

- Community members are abuzz with strategic implications, wondering if Japan's big investments might embolden a new wave of AI competition.

- DeepSeek R1 Gains & Cursor Pains: DeepSeek R1 can be integrated into Cursor via OpenRouter, though some users find the workaround limiting and prefer to wait for native support.

- Benchmark chatter references Paul Gauthier's tweet citing a 57% score on the aider polyglot test, fueling debate on the upcoming competition between DeepSeek R1 and other LLMs.

- Cursor 0.45 Rollback Reactions: The Cursor team keeps rolling back v0.45.1 updates due to indexing issues, forcing developers to revert to earlier versions, as per Cursor Status.

- Some users are frustrated by instability and mention that minimal official statements complicate their workflow, suggesting they might explore alternative code editors like Codefetch.

- Claude 3.5 Competes with DeepSeek: Claude 3.5 performance has improved, sparking direct comparisons to DeepSeek R1 and prompting discussions on speed and accuracy gains.

- Anthropic's silence on future updates raises speculation about its next release, as it is overshadowed by the competition's momentum.

Codeium (Windsurf) Discord

- Windsurf Woes & Surging Delays: Multiple users lament ongoing lag issues in Windsurf, especially during code prompts, with some encountering 'incomplete envelope: unexpected EOF' errors.

- Some consider switching to Cursor due to these bugs, though potential solutions like adjusting local settings have yet to yield firm fixes.

- DeepSeek R1 Dominates Benchmarks: Community members are excited about DeepSeek R1 surpassing OpenAI o1-preview in various performance tests, according to Xeophon's tweet.

- A follow-up tweet highlights R1 as a league of its own, though doubts remain regarding its tool-call compatibility within Codeium.

- Flow Actions Fizzle Productivity: Many find the Flow Actions limit disruptive to their workflow, citing repeated bottlenecks throughout the day.

- Community members propose strategic usage and partial resets to ease the constraint, though official fixes remain uncertain.

- Codeium Feature Frenzy: A user requested adding DeepSeek R1 support in Codeium, along with calls for better fine-tuning and robust updates for JetBrains IDE users.

- Others mention the need for improved rename suggestions via Codeium's feature request page and highlight Termium for command-line auto-completion.

aider (Paul Gauthier) Discord

- Aider v0.72.0 Release Bolsters Development: The Aider v0.72.0 update includes DeepSeek R1 support via --model r1 or OpenRouter, plus Kotlin syntax and the new --line-endings option, addressing Docker image permissions and ASCII fallback fixes.

- Community members noted that Aider contributed 52% of its own code for this release and discovered that examples_as_sys_msg=True with GPT-4o yields higher test scores.

- DeepSeek R1 Emerges as Powerful Challenger: Users praised DeepSeek R1 for multi-language handling, citing this tweet about near parity with OpenAI's o1 and MIT-licensed distribution.

- Conversations hinted at switching from Claude to DeepSeek R1 for cost reasons, referencing DeepSeek-R1 on GitHub for further technical details.

- OpenAI Subscriptions & GPU Costs Spark Debate: Some members reported OpenAI subscription refunds and weighed the cost-effectiveness of DeepSeek, mentioning the CEO da OpenAI article regarding pricing uncertainties.

- European users also found cheaper RTX 3060 and 3090 GPUs, and they consulted Fireworks AI docs for privacy considerations in AI-driven workflows.

- Space Invaders Upgraded with DeepSeek R1: A live coding video showed DeepSeek R1 powering a refined Space Invaders game, demonstrating second-place benchmarking on the Aider LLM leaderboard.

- The user highlighted near equivalence to OpenAI's o1 at a lower price, driving interest in game and dev scenarios that benefit from R1’s coding focus.

LM Studio Discord

- DeepSeek's Bold Foray into Math Mastery: DeepSeek R1 emerged as a strong pick for mathematics tutoring, providing step-by-step solutions and supporting special educational needs, exemplified by Andrew C's tweet about running a 671B version on M2 Ultras.

- One user praised the model's speed and local deployment capabilities, referencing the DeepSeek-R1 GitHub repo for advanced usage scenarios.

- Local Model Magic & OpenAI Touchpoints: Enthusiasts discussed running LLMs on robust home setups like a 4090 GPU with 64GB RAM, referencing LM Studio Docs and Ollama's OpenAI compatibility blog for bridging local models with OpenAI API.

- Others highlighted the significance of quantization (Q3, Q4, etc.) for performance trade-offs and explored solutions like Chatbox AI to unify local and online usage.
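
To make the quantization trade-off concrete, here is a back-of-the-envelope estimate of how much memory model weights need at different bit widths. The bit widths are nominal assumptions (Q4 treated as exactly 4 bits per weight); real quantized files also store scales and metadata, and the KV cache and activations need memory on top of this.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GiB."""
    return n_params * bits_per_weight / 8 / 2**30


N_PARAMS = 7e9  # a 7B-parameter model, chosen for illustration

for name, bits in [("FP16", 16), ("Q8", 8), ("Q4", 4), ("Q3", 3)]:
    print(f"{name:>4}: {weight_memory_gib(N_PARAMS, bits):5.2f} GiB")
```

Estimates like this are a quick way to judge whether a given model and quantization level can fit on a particular GPU before downloading anything.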

- NVIDIA's DIGITS Drama & DGX OS Dilemmas: Users lamented the high cost (around $3000 for 128GB) and uncertain NVIDIA DIGITS support, pointing to NVIDIA DIGITS docs for legacy insights.

- Discussions noted the DGX OS similarities to old DIGITS, with someone suggesting NVIDIA TAO as a modern alternative, though it introduced confusion about container-focused releases.

- GPU Heat Headaches & Future Plans: Some mentioned excessive heat from powerful GPUs, joking no cleaning is needed due to constant burning and referencing second-hand sales for potential cost savings.

- Others plan a GUI-free approach for optimized performance, with an emphasis on lighter setups to mitigate thermal strains in advanced ML tasks.

Nous Research AI Discord

- Liquid AI's LFM-7B Rises for Local Deployments: Liquid AI introduced LFM-7B, a non-transformer model that claims top-tier performance in the 7B range with expanded language coverage including English, Arabic, and Japanese (link).

- Community members praised its local deployment strategy, with some crediting the model's automated architecture search as a potential differentiator.

- Mind Evolution Maneuvers LLM Inference: A new paper on Mind Evolution showcased an evolutionary approach that surpasses Best-of-N for tasks like TravelPlanner and Natural Plan, achieving over 98% success with Gemini 1.5 Pro (arXiv link).

- Engineers discussed the method's iterative generation and recombination of prompts, describing it as a streamlined path to scale inference compute.
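
The contrast between Best-of-N and an evolutionary loop can be shown with a toy problem. This is not the paper's algorithm: in the paper an LLM generates and recombines candidate solutions, whereas here bit-lists and a bit-counting score stand in for candidates and their evaluator, and every name and parameter is made up for illustration.

```python
import random

CANDIDATE_LEN = 32


def fitness(candidate: list[int]) -> int:
    """Stand-in evaluator: higher is better."""
    return sum(candidate)


def random_candidate(rng: random.Random) -> list[int]:
    return [rng.randint(0, 1) for _ in range(CANDIDATE_LEN)]


def best_of_n(rng: random.Random, n: int) -> list[int]:
    """Sample n independent candidates and keep the best one."""
    return max((random_candidate(rng) for _ in range(n)), key=fitness)


def evolve(rng, initial, generations=10, mutation_rate=0.05):
    """Keep the better half each generation, refill by crossover and mutation."""
    population = list(initial)
    size = len(population)
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: size // 2]          # survivors carry over unchanged
        children = []
        while len(parents) + len(children) < size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, CANDIDATE_LEN)  # single-point crossover
            child = a[:cut] + b[cut:]
            child = [bit ^ 1 if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = parents + children
    return max(population, key=fitness)


rng = random.Random(0)
initial = [random_candidate(rng) for _ in range(16)]
evolved = evolve(rng, initial)
sampled = best_of_n(rng, n=96)  # 16 + 10 * 8 evaluations, same budget as evolve
print("evolved score:  ", fitness(evolved))
print("best-of-N score:", fitness(sampled))
```

Because the surviving half is carried over unchanged, the best score never decreases from one generation to the next, while Best-of-N has no way to build on a good partial solution.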

- DeepSeek-R1 Distill Model Gains Mixed Reviews: Users trialed DeepSeek-R1 Distill for quantization tweaks and performance angles, referencing a Hugging Face repo close to 8B parameters.

- Some praised its reasoning outputs while others found it overly verbose on casual prompts, yet it remained a highlight for advanced thinking time.

- SleepNet & DreamNet Bring 'Nighttime' Training: SleepNet and DreamNet propose supervised plus unsupervised cycles that mimic 'sleep' to refine model states, as detailed in Dreaming is All You Need and Dreaming Learning.

- They use an encoder-decoder approach to revisit hidden layers during off phases, spurring discussions about integrative exploration.

- Mistral Musings on Ministral 3B & Codestral 2501: Mistral teased Ministral 3B and Codestral 2501, fueling speculation on a weights-licensing plan in a tight AI landscape.

- Observers wondered if Mistral's approach, akin to Liquid AI's architecture experiments, might carve out a specialized niche for smaller-scale deployments.

Stackblitz (Bolt.new) Discord

- Bolt’s Bolder Code Inclusions: Bolt's latest update removes the white screen fiasco and includes a fix for complete code delivery, guaranteeing a spot-on setup from the first prompt as seen in this announcement.

- Engineers welcomed this comprehensive shift, saying "No more lazy code!" and praising the smoother start-up for new projects.

- Prismic Predicaments & Static Solutions: A user faced issues integrating Prismic CMS for a plumbing site, prompting a suggestion to build a static site first for future-proof flexibility.

- Community members favored a minimal approach, with one noting the complexity of "CMS overhead for simple sites."

- Firebase vs Supabase Face-Off: A user argued for swapping Supabase for Firebase, calling it a simpler path for developers.

- Others agreed Firebase eases initial setups, emphasizing how it accelerates quick proofs-of-concept.

- Token Tussles: Developers reported losing tokens due to buggy code on Bolt, advocating free debugging to curb these losses.

- Cost worries soared, with one user declaring "I've lost count of wasted tokens!"

- Next.js & Bolt: Tectonic Ties: A community member tried incorporating WordPress blogs into Next.js using Bolt but saw frameworks update faster than AI tooling.

- Opinions were split, with some saying Bolt may not track rapid Next.js changes closely enough.

Perplexity AI Discord

- Sonar Surges with Speed and Security: Perplexity released the Sonar and Sonar Pro API for generative search, featuring real-time web analysis and demonstrating major adoption by Zoom, while outperforming established engines on SimpleQA benchmarks.

- Community members applauded its affordable tiered pricing and noted that no user data is used for LLM training, suggesting safer enterprise usage.

- DeepSeek vs O1 Rumblings: Multiple members questioned if DeepSeek-R1 would replace the absent O1 in Perplexity, referencing public hints about advanced reasoning capabilities.

- Others praised DeepSeek-R1 for its free, top performance, calling it “the best alternative” while some remained uncertain about O1’s planned future.

- Claude Opus: Retired or Resilient?: Some users declared Claude Opus retired in favor of Sonnet 3.5, questioning its viability in creative tasks.

- Others emphasized that Opus continues to excel in complex projects, insisting it remains the most advanced in its family despite rumored replacements.

- Sonar Pro Tiers & Domain Filter Beta: Contributors highlighted new usage tiers for Sonar and Sonar Pro, noting the search_domain_filter as a beta feature in tier 3.

- Many users sought direct token usage insights from the API output, while some pushed for GDPR-compliant hosting in European data centers.

Interconnects (Nathan Lambert) Discord

- DeepSeek R1 Rocks Benchmarks: On January 20, China's DeepSeek AI unveiled R1, hitting up to 20.5% on the ARC-AGI public eval.

- It outperformed o1 in web-enabled tasks, with the full release details here.

- Mistral’s Mega IPO Move: Contrary to buyout rumors, Mistral AI announced an IPO plan alongside opening a Singapore office for the Asia-Pacific market.

- Members speculated on Mistral’s profitability, referencing this update as proof of their bold strategy.

- Stargate Surges with $500B Pledge: OpenAI, SoftBank, and Oracle united under Stargate, promising $500 billion to bolster U.S. AI infrastructure over four years.

- They’ve likened this grand investment to historical feats like the Apollo program, aiming to cement American AI leadership.

- Anthropic Angles for Claude’s Next Step: At Davos, CEO Dario Amodei teased voice mode and possible web browsing for Claude, as seen in this WSJ interview.

- He hinted at beefier Claude releases, with the community debating how often updates will drop.

- Tulu 3 RLVR Sparks Curiosity: A poster project on Tulu 3’s RLVR grabbed attention, promising new ways to approach reinforcement learning.

- Enthusiasts plan to merge it with open-instruct frameworks, hinting at broader transformations in model usage.

MCP (Glama) Discord

- Tavily Search MCP Server Soars: The new Tavily Search MCP server landed with optimized web search and content extraction for LLMs, featuring SSE, stdio, and Docker-based installs.

- It uses Node scripts for swift deployment, fueling the MCP ecosystem with broader server choices.

- MCP Language Server Showdown: Developers tested isaacphi/mcp-language-server and alexwohletz/language-server-mcp, aiming for get_definition and get_references in large codebases.

- They noted the second repo might be less mature, yet the community remains eager for IDE-like MCP features.

- Roo-Clines Grow Wordy: Members championed adding roo-code tools to roo-cline for extended language tasks, including code manipulation in sprawling projects.

- They envision deeper MCP synergy to streamline code management, suggesting advanced edits in a single CLI ecosystem.

- LibreChat Sparks Complaints: A user slammed LibreChat for tricky configurations and unpredictable API support, even though they admired its polished UI.

- They also bemoaned the absence of usage limits, comparing it to stricter platforms like Sage or built-in MCP servers.

- Anthropic Models Eye Showdown with Sage: A lively exchange broke out on the feasibility of Anthropic model r1, with some guessing 'Prob' they'd get it running.

- Others leaned on Sage for macOS and iPhone, preferring fewer headaches over uncertain Anthropic integrations.

OpenRouter (Alex Atallah) Discord

- Llama's Last Stand at Samba Nova: The free Llama endpoints end this month because of changes from Samba Nova, removing direct user access.

- Samba Nova will switch to a Standard variant with new pricing, provoking talk about paid usage.

- DeepSeek R1 Gains Web Search & Freed Expression: The DeepSeek R1 model enables web search grounding on OpenRouter, maintaining a censorship-free approach at $0.55 per million input tokens.

- Community comparisons reveal performance close to OpenAI's o1, with discussions on fine-tuning cited in Alex Atallah's post.

- Gemini 2.0 Flash: 64K Token Marvel: A fresh Gemini 2.0 Flash Thinking Experimental 01-21 release offers a 1-million-token context window plus 64K output tokens.

- Observers noted some naming quirks during its ten-minute rollout; it remains available through AI Studio without tiered keys.

- Sneaky Reasoning Content Trick Emerges: A user exposed a method to coax reasoning content from DeepSeek Reasoner by crafty prompt prefixes.

- Concerns arose over token clutter from leftover CoT data, prompting better message handling strategies.

- Perplexity's Sonar Models Catch Eyes: Perplexity debuted new Sonar LLMs with web-search expansions, highlighted in this tweet.

- While some are excited about a potential integration, others doubt the models’ utility, urging votes for OpenRouter support.

Cohere Discord

- Cranked-Up GPT-2 Gains: Engineers discussed adjusting max_steps for GPT-2 re-training and recommended doubling it for two epochs to prevent rapid learning rate decay, referencing Andrej Karpathy's approach.

- They also warned that rash changes might waste resources, suggesting thorough knowledge before making fine-tuning decisions.

- RAG Revelations in Live Q&A: A Live Q&A on RAG and tool use with models is scheduled for Tuesday at 6:00 am ET on Discord Stage, encouraging builders to share experiences.

- Participants plan to tackle challenges in integrating new implementations, aiming for a collaborative environment that sparks shared insights.

- Cohere CLI: Terminal Talk for Transformers: The new Cohere CLI lets users chat with Cohere's AI from the command line, showcased on GitHub.

- Community members praised its convenience, with some highlighting how terminal-based interactions could speed up iterative development.

- Cohere For AI: Community Powerhouse: Enthusiasts urged each other to join the Cohere For AI initiative for open machine learning collaboration, referencing Cohere’s official research page.

- They also noted trial keys offering 1000 free monthly requests, reinforcing a welcoming space for newcomers eager to test AI solutions.

- Math Shortfalls in LLM Outputs: Members flagged Cohere for incorrectly calculating 18 months as 27 weeks, casting doubt on LLMs' math reliability.

- They connected this to tokenization issues, calling it a widespread shortcoming that can topple projects if left unaddressed.
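On the GPT-2 max_steps item above, a rough sketch of why doubling max_steps slows the decay, assuming a linear-warmup plus cosine-decay schedule of the kind commonly used for GPT-2 reproductions. The function name, learning rates, warmup length, and steps_per_epoch are all hypothetical values chosen for illustration, not taken from the discussion.

```python
import math

def cosine_lr(step, max_steps, max_lr=6e-4, min_lr=6e-5, warmup_steps=10):
    """Linear warmup followed by cosine decay down to min_lr at max_steps."""
    if step < warmup_steps:
        return max_lr * (step + 1) / warmup_steps
    if step >= max_steps:
        # Schedule exhausted: the learning rate stays at its floor.
        return min_lr
    progress = (step - warmup_steps) / (max_steps - warmup_steps)
    coeff = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + coeff * (max_lr - min_lr)

steps_per_epoch = 1000  # hypothetical; depends on dataset and batch size

# Halfway through a second epoch, a one-epoch schedule is already at the floor,
# while a two-epoch schedule is still decaying.
lr_one_epoch_schedule = cosine_lr(1500, max_steps=steps_per_epoch)
lr_two_epoch_schedule = cosine_lr(1500, max_steps=2 * steps_per_epoch)
print(lr_one_epoch_schedule, lr_two_epoch_schedule)
```

If max_steps is left at one epoch while training runs for two, the second epoch is spent entirely at the minimum learning rate, which is presumably the "rapid decay" the members wanted to avoid.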

Notebook LM Discord

- Classroom Conquest: NotebookLM for College Courses: Members suggested organizing NotebookLM by topics rather than individual sources to ensure data consistency, noting that a 1:1 notebook:source setup is best for podcast generation with a single file.

- They emphasized it eliminates clutter and fosters smoother collaboration, potentially transforming study habits and resource sharing in academic environments.

- Video Victories: AI eXplained's Cinematic Unfolding: The AI eXplained channel released a new episode on AI-generated videos, spotlighting advances in scriptwriting and animated production.

- Early watchers mentioned the wave of interest in these approaches to reshape the film industry, predicting more breakthroughs in audio-visual AI.

- Gemini Gains: Code Assist for Devs: Community members recommended Gemini Code Assist for deeper repository insights, describing it as more accurate than NotebookLM for focused code queries.

- They noted NotebookLM can misfire unless guided with very specific instructions, spurring discussions on code analysis methods and reliability.

- Sacred Summaries: NotebookLM in Church Services: One participant leveraged NotebookLM to parse extensive sermon transcripts, eyeing a 250-page collection and even a 2000-page Bible study.

- They hailed it as a game changer for distilling large religious texts, praising its utility in bridging tech and faith.

- Tooling Treasures: Add-ons & Apps Amp NotebookLM: Users swapped suggestions for add-ons including OpenInterX Mavi and Chrome Web Store extensions to boost functionality.

- They tested methods to retain favorite prompts for quicker work and expressed hope for deeper NotebookLM integrations down the road.

Stability.ai (Stable Diffusion) Discord

- Cohesive Comix with ControlNet: Members explored AI-driven comic panels with ControlNet for consistent scene details, generating each frame separately to keep characters stable. They discovered the approach still produces varied results, requiring frequent re-generation to maintain continuity.

- They also debated whether advanced prompts or additional training data could improve results, with some seeing potential for future improvements once Stable Diffusion matures.

- AI Art Controversy Continues: Contributors noted stronger pushback against AI-rendered artwork in creative communities, highlighting doubts about credibility and respect for original styles. They cited the broader debate on whether AI art displaces manual craft or simply extends it.

- Others raised ethical concerns about using training data from public repositories, referencing broader calls for guidelines that ensure credit to original creators.

- Stable Diffusion AMD Setup Snags: Individuals shared difficulties running Stable Diffusion on AMD hardware, pointing to driver issues and slower performance. They referenced pinned instructions in the Discord as a workaround but acknowledged the need for more robust official support.

- Some found success with updated libraries, but others still faced unexpected black screens or incomplete renders, requiring manual GPU resets.

- Manual vs. AI Background Tweaks: Enthusiasts debated using GIMP for straightforward background edits versus leaning on Stable Diffusion for automatic enhancements. They reported that manual editing offered more controlled results, especially for sensitive details in personal photoshoots.

- Some argued that AI solutions still lack refinement for nuanced tasks, while others saw promise if the models gain more specialized training.

GPU MODE Discord

- Evolving Minds & Taming GRPO: The Mind Evolution strategy for scaling LLM inference soared to over 98% success on TravelPlanner and Natural Plan, as detailed in the arXiv submission.

- A simple local GRPO test is in progress, with future plans to scale via OpenRLHF and Ray and apply RL to maths datasets.

- TMA Takes Center Stage in Triton: Community members investigated TMA descriptors in Triton, leveraging fill_2d_tma_descriptor and facing autotuning pitfalls that caused crashes.

- A working example of persistent GEMM with TMA was shared, but manual configuration remains necessary due to limited autotuner support.

- Fluid Numerics Floats AMD MI300A Trials: The Fluid Numerics platform introduced subscriptions to its Galapagos cluster, featuring the AMD Instinct MI300A node for AI/ML/HPC workloads and a request access link.

- They encouraged users to test software and compare performance between MI300A and MI300X, inviting broad benchmarking.

- PMPP Book Gains More GPU Goodness: A reread of the latest PMPP Book was advised, as it updates content missing from the 2022 release and adds new CUDA material.

- Members recommended cloud GPU options like Cloud GPUs or Lightning AI for hands-on practice with the book’s exercises.

- Lindholm's Unified Architecture Legacy: Engineer Lindholm recently retired from Nvidia, with an insightful November 2024 talk on his unified architecture available via Panopto.

- Participants learned about his impactful design principles and contributions until his retirement two weeks ago.

Eleuther Discord

- GGUF Gains Ground Over Rival Formats: The community noted GGUF is the favored quantization route for consumer hardware, referencing Benchmarking LLM Inference Backends to show its strong performance edge.

- They contrasted tools like vLLM and TensorRT-LLM, emphasizing that startups often choose straightforward backends such as Ollama for local-ready simplicity.

- R1 Riddles & Qwen Quirks: Members put R1 under the microscope, debating its use of PRMs and pondering how 4bit/3bit vs f16 influences MMLU-PRO performance.

- They also considered converting Qwen R1 models to Q-RWKV, eyeing tests like math500 to confirm success and questioning how best to estimate pass@1 with multiple response generations.

- Titans Tackle Memory for Deep Nets: The Titans paper (arXiv:2501.00663) proposes mixing short-term with long-term memory to bolster sequence tasks, building on recurrent models and attention.

- A user asked if it’s "faster to tune the model on such a large dataset?" while others weighed whether scaling data sizes outperforms incremental methods.

- Steering Solutions Still Skimpy: No single open source library dominates SAE-based steering for LLMs, though projects like steering-vectors and repeng show promise.

- They also mentioned representation-engineering, noting its top-down approach but highlighting the general lack of a unified approach.

- NeoX: Nudging HF Format with Dimension Disputes: A RuntimeError in convert_neox_to_hf.py revealed dimension mismatch issues ([8, 512, 4096] vs 4194304), possibly tied to multi-node setups and model_parallel_size=4.

- Questions arose about the 3x intermediate dimension setting, while the shared config mentioned num_layers=32, hidden_size=4096, and seq_length=8192 impacting the export process.
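A quick arithmetic check on the shapes reported in the NeoX conversion error: the target shape needs exactly four times as many elements as were available, which happens to equal the reported model_parallel_size. This is only an observation about the numbers, not a diagnosis of the bug, and the variable names are illustrative.

```python
import math

# Values quoted from the RuntimeError summary above.
expected_shape = (8, 512, 4096)
available_elements = 4194304

required_elements = math.prod(expected_shape)
ratio = required_elements // available_elements

print(required_elements)  # 16777216
print(ratio)              # 4
```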

Latent Space Discord

- OpenAI Fuels Stargate Project with $500B: OpenAI unveiled The Stargate Project with a pledged $500 billion investment over the next four years, aimed at building AI infrastructure in the U.S. with $100 billion starting now.

- Major backers including SoftBank and Oracle are betting big on this initiative, emphasizing job creation and AI leadership in America.

- Gemini 2.0 Gains Experimental Update: Feedback on Gemini 2.0 Flash Thinking led Noam Shazeer to introduce new changes that reflect user-driven improvements.

- These tweaks aim to refine Gemini’s skill set and reinforce its responsiveness to real-world usage.

- DeepSeek Drops V2 Model with Low Inference Costs: The newly released DeepSeek V2 stands out for reduced operational expenses and a strong performance boost.

- Its architecture prompted buzz across the community, showcasing a fresh approach that challenges established models.

- Ai2 ScholarQA Boosts Literature Review: The Ai2 ScholarQA platform offers a method to ask questions that aggregate information from multiple scientific papers, providing comparative insights.

- This tool aspires to streamline rigorous research by delivering deeper citations and references on demand.

- SWE-Bench Soars as WandB Hits SOTA: WandB announced that their SWE-Bench submission is now recognized as State of the Art, drawing attention to the benchmark’s significance.

- The announcement underlines the competitive drive in performance metrics and fosters further exploration of advanced testing.

OpenAI Discord

- DeepSeek R1 & Sonnet Showdown: Members discussed DeepSeek R1 distilled into Qwen 32B Coder running locally on a system with 32 GB RAM and 16 GB VRAM, offloading heavy computation to CPU for feasible performance.

- They reported a 60% failure rate for R1 in coding, which still outperformed 4o and Sonnet at 99% failure, though stability on Ollama remains uncertain.

- Generative AI Heats Up Creative Industries: A Medium article highlighted Generative AI's ability to produce art, prompting fears it might replace human creators.

- Others argued that human skills are still crucial for shaping AI output effectively, keeping artists involved in the process.

- Content Compliance Chatter: A member pointed out that DeepSeek avoids critical or humorous outputs about the CCP, recalling older GPT compliance issues.

- Users questioned whether these constraints limit expression or hamper open-ended debate.

- Archotech Speculation Runs Wild: One user mused about AI evolving into Rimworld-style archotechs, hinting at unintended capabilities and outgrowths.

- They suggested that “we might accidentally spawn advanced entities” as AI companies keep training bigger models.

- GPT Downtime and Lagging Responses: Frequent 'Something went wrong' errors disrupted chats with GPT, though reopening the session generally solved it.

- Several members noted sluggish performance, describing slow replies as a source of collective exasperation.

Yannic Kilcher Discord

- Neural ODEs Spark RL Tactics: In #general, members said Neural ODEs could refine robotics by modeling function complexity with layers, referencing the Neural Ordinary Differential Equations paper.

- They also debated how smaller models might discover high-quality reasoning through repeated random inits in RL, pointing out that noise and irregularity boost exploration.

- GRPO Gains Allies: In #paper-discussion, DeepSeek's GRPO was called PPO minus a value function, relying on Monte Carlo advantage estimates for simpler policy tuning, as seen in the official tweet.

- A recent publication emphasizes reduced overhead, while the group also tackled reviewer shortages by recruiting 12 out of 50+ volunteers.

- Suno Swats Copyright Claims: In #ml-news, AI music generator Suno is facing another copyright lawsuit from GEMA, adding to previous lawsuits from major record labels, as detailed by Music Business Worldwide.

- Valued at $500 million, Suno and rival Udio are accused of training on unlicensed recordings, fueling industry debate on AI-based content's legality.
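On the GRPO item above, the "PPO minus a value function" description can be sketched in a few lines, assuming the commonly described group-relative normalization: several completions are sampled for the same prompt, and each one's advantage is its reward standardized against the group, so no learned value network is needed. The function name and the sample rewards are hypothetical.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    """Standardize rewards across completions sampled for one prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    # eps guards against division by zero when all rewards are identical.
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for four sampled answers to one prompt (1.0 = correct).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Correct answers get a positive advantage and incorrect ones a negative advantage relative to the group mean; these values would then feed a PPO-style clipped policy objective.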

Modular (Mojo 🔥) Discord

- Clashing Over C vs Python: Members debated C for discipline and Python for quicker memory management insight, referencing future usage in JS or Python.

- One participant highlighted learning C first can deepen understanding for a career shift, but opinions varied widely.

- Forum vs Discord Dilemma: Many urged clarity on posting projects in Discord versus the forum, citing difficulty retrieving important discussions in a rapid-chat setting.

- They suggested using the forum for in-depth updates while keeping Discord for quick bursts of feedback.

- Mojo’s .gitignore Magic: Contributors noted the .gitignore for Mojo only excludes .pixi and .magic files, which felt suitably minimal.

- No concerns arose, with the group appreciating a lean default configuration.

- Mojo and Netlify Not Mixing?: A question popped up about hosting a Mojo app with lightbug_http on Netlify, drawing on success with Rust apps.

- Members said Netlify lacks native Mojo support, referencing available software at build time for possible features.

- Mojo’s Domain Dilemma: One user asked if Mojo would split from Modular and claim a .org domain like other languages.

- Developers confirmed no such move is planned, affirming it stays under modular.com for now.

LlamaIndex Discord

- LlamaIndex Workflows Soar on GCloud Run: A new guide explains launching a two-branch RAG application on Google Cloud Run for ETL and query tasks, detailing a serverless environment and event-driven design via LlamaIndex.

- Members noted the top three features — two-branch architecture, serverless hosting, and an event-driven approach — as keys to streamlined AI workloads.

- Chat2DB GenAI Chatbot Tackles SQL: Contributors highlighted the open-source Chat2DB chatbot, explaining that it lets users query databases in everyday language using RAG or TAG strategies.

- They emphasized its multi-model compatibility, supporting OpenAI and Claude, which makes it a flexible tool for data access.

- LlamaParse Rescues PDF Extraction: Participants recommended LlamaParse for PDF parsing, calling it the world’s first genAI-native document platform for LLM use cases.

- They praised its robust data cleaning and singled it out as a solution for tricky selectable-text PDFs.

- Incognito Mode Zaps Docs Glitch: A user reported that LlamaIndex documentation kept scrolling back to the top when viewed in a normal browser session.

- They confirmed incognito mode on Microsoft Edge solved the glitch, suggesting an extension conflict as the likely cause.

- CAG with Gemini Hits an API Wall: Someone asked how to integrate Cached Augmented Generation (CAG) into Gemini, only to learn that model-level access is essential.

- They discovered no providers currently offer that depth of control over an API, stalling the idea for now.

Nomic.ai (GPT4All) Discord

- ModernBert Entities Emerge: A user showcased syntax for identifying entities in ModernBert, providing a hierarchical document layout for travel topics and seeking best practices for embeddings.

- They looked for suggestions on structuring these documents around entity-based tasks, hoping to refine overall performance.

- Jinja Trove Takes Center Stage: A participant requested robust resources on the advanced features of Jinja templates, prompting a surge of community interest.

- Others chimed in, noting that improved template logic can streamline dynamic rendering in various projects.

- LMstudio Inquiry Finds a Home: Another user sought guidance on LMstudio, asking if the current channel was appropriate while struggling to find a dedicated Discord link.

- They also touched on Adobe Photoshop issues, leading to tongue-in-cheek comments about unofficial support lines.

- Photoshop and Illegal Humor: A short exchange hinted at a possibly illegal question regarding Adobe Photoshop, prompting jokes about the nature of such inquiries.

- Discussion briefly shifted toward broader concerns over sharing questionable requests in public forums.

- Nomic Taxes and Intern Levies: Members joked about tax increases for Nomic, with one participant claiming they should be the recipient of these funds.

- A fun reference to this GIF highlighted the playful tone of the conversation.

LAION Discord

- Bud-E Speaks in 13 Tongues: LAION revealed that Bud-E extends beyond English, supporting 13 languages without specifying the complete list, tapping into fish TTS modules for speech capabilities.

- The team temporarily ‘froze’ the existing project roadmap to emphasize audio and video dataset integration, causing a slight development delay.

- Suno Music’s Sonic Power: The Suno Music feature allows users to craft their own songs by recording custom audio inputs, appealing to mobile creators seeking fast experimentation.

- Members expressed excitement over broad accessibility, highlighting the platform’s potential to diversify creative workflows.

- BUD-E & School-BUD-E Take Center Stage: LAION announced BUD-E version 1.0 as a 100% open-source voice assistant for both general and educational use, including School Bud-E for classrooms.

- This milestone promotes universal access and encourages AI-driven ed-tech, showcased in a tutorial video illustrating BUD-E’s capabilities.

- BUD-E’s Multi-Platform Flexibility: Engineers praised BUD-E for offering compatibility with self-hosted APIs and local data storage, ensuring privacy and easy deployment.

- According to LAION’s blog post, desktop and web variants cater to broad user needs, amplifying free educational reach worldwide.

LLM Agents (Berkeley MOOC) Discord

- Declaration Form Confusion: A member asked if they must fill the Declaration Form again after submitting in December, clarifying that only new folks must submit now.

- Staff reopened the form for those who missed the initial deadline, ensuring no extra steps for prior filers.

- Sponsors Offer Hackathon-Style Projects: A participant asked if corporate sponsors would provide intern-like tasks in the next MOOC, referencing last term’s hackathon as inspiration.

- Organizers indicated that sponsor-led talks may hint at internship leads, though no formal arrangement was revealed.

- MOOC Syllabus Teased for January 27: A member wondered when the new MOOC syllabus will drop, prompting staff to note January 27 as the likely date.

- They are locking in guest speakers first, but promise a rough outline by that day.

tinygrad (George Hotz) Discord

- BEAM Bogs Down YoloV8: One user reported that running YoloV8 with python examples/webgpu/yolov8/compile.py under BEAM slashed throughput from 40fps to 8fps, prompting concerns about a bug.

- George Hotz noted that BEAM should not degrade performance and suggested investigating potential anomalies in the code path.

- WebGPU-WGSL Hurdles Slow BEAM: Another user suspected that WGSL conversion to SPIR-V might increase overhead, crippling real-time inference speeds.

- They also emphasized that BEAM requires exact backend support, raising questions about hardware-specific optimizations for WebGPU.

Torchtune Discord

- Torchtune's 'Tune Cat' Gains Momentum: A member praised the Torchtune package and referenced a GitHub Issue proposing a tune cat command to streamline usage.

- They described the source code as an absolute pleasure to read, signaling a strongly positive user experience.

- TRL's Command Bloats Terminal: A member joked that the TRL help command extends across three terminal windows, overshadowing typical help outputs.

- They suggested the verbose nature might still be crucial for users who want all technical details.

- LLMs Explore Uncertainty & Internal Reasoning: Discussion centered on the idea that models should quantify uncertainty to bolster reliability, while LLMs appear to conduct their own chain of thought before responding.

- Both points underscore a move toward better interpretability, with signs of covert CoT steps for deeper reasoning.

- Advancing RL with LLM Step-Prompts & Distillation: A suggestion emerged for RL-LLM thinking-step prompts, adding structure to standard goal-based instructions.

- Another member proposed applying RL techniques on top of model distillation, expecting further gains even for smaller models.

DSPy Discord

- Dynamic DSPy: RAG's Rendezvous with Real-Time Data: A user asked how DSPy-based RAG manages changing information, hinting at the importance of real-time updates for knowledge retrieval pipelines with minimal overhead.

- They suggested future work could focus on caching mechanisms and incremental indexing, keeping DSPy agile for dynamic workloads.

- Open Problem Ordeal & Syntax Slip-ups: Another thread raised an open problem in DSPy, underscoring continued interest in a lingering technical question.

- A syntax error ('y=y' should use a number) also emerged, highlighting attention to detail and the community's engagement in squashing small issues.

Mozilla AI Discord

- ArXiv Authors Demand Better Data: The paper titled Towards Best Practices for Open Datasets for LLM Training was published on ArXiv, detailing challenges in open-source AI datasets and providing recommendations for equity and transparency.

- Community members praised the blueprint’s potential to level the playing field, highlighting that a stronger open data ecosystem drives LLM improvements.

- Mozilla & EleutherAI Declare Data Governance Summit: Mozilla and EleutherAI partnered on a Dataset Convening focused on responsible stewardship of open-source data and governance.

- Key stakeholders discussed best curation practices, stressing the shared goal of advancing LLM development through collaborative community engagement.

AI21 Labs (Jamba) Discord

- AI Shifts from Hype to Help in Cybersecurity: One member recalled how AI used to be a mere buzzword in cybersecurity, noting their transition into the field a year ago.

- They expressed excitement about deeper integration of AI in security processes, envisioning real-time threat detection and automated incident response.

- Security Teams Embrace AI Support: The discussion highlighted the growing interest in how AI can bolster security teams’ capabilities, especially in handling complex alerts.

- Enthusiasts anticipate sharper analysis tools that AI offers, allowing analysts to focus on critical tasks and reduce manual overhead.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Axolotl AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The OpenInterpreter Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!