[AINews] PRIME: Process Reinforcement through Implicit Rewards

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Implicit Process Reward Models are all you need.

AI News for 1/3/2025-1/6/2025. We checked 7 subreddits, 433 Twitters and 32 Discords (218 channels, and 5779 messages) for you. Estimated reading time saved (at 200wpm): 687 minutes. You can now tag @smol_ai for AINews discussions!

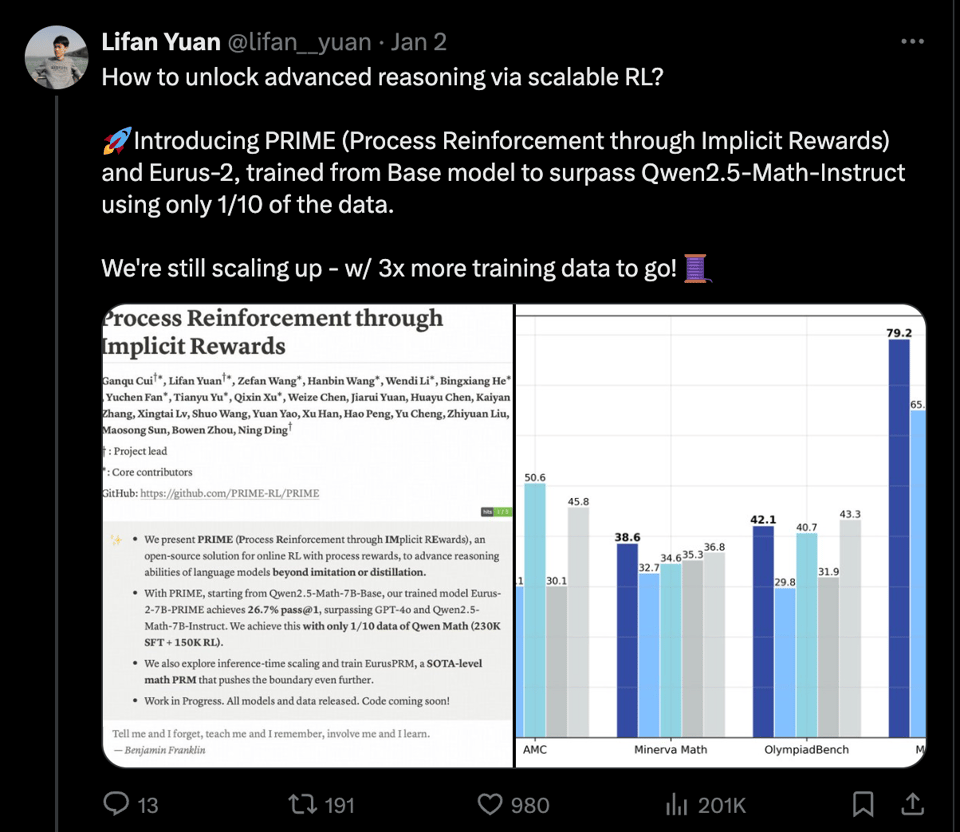

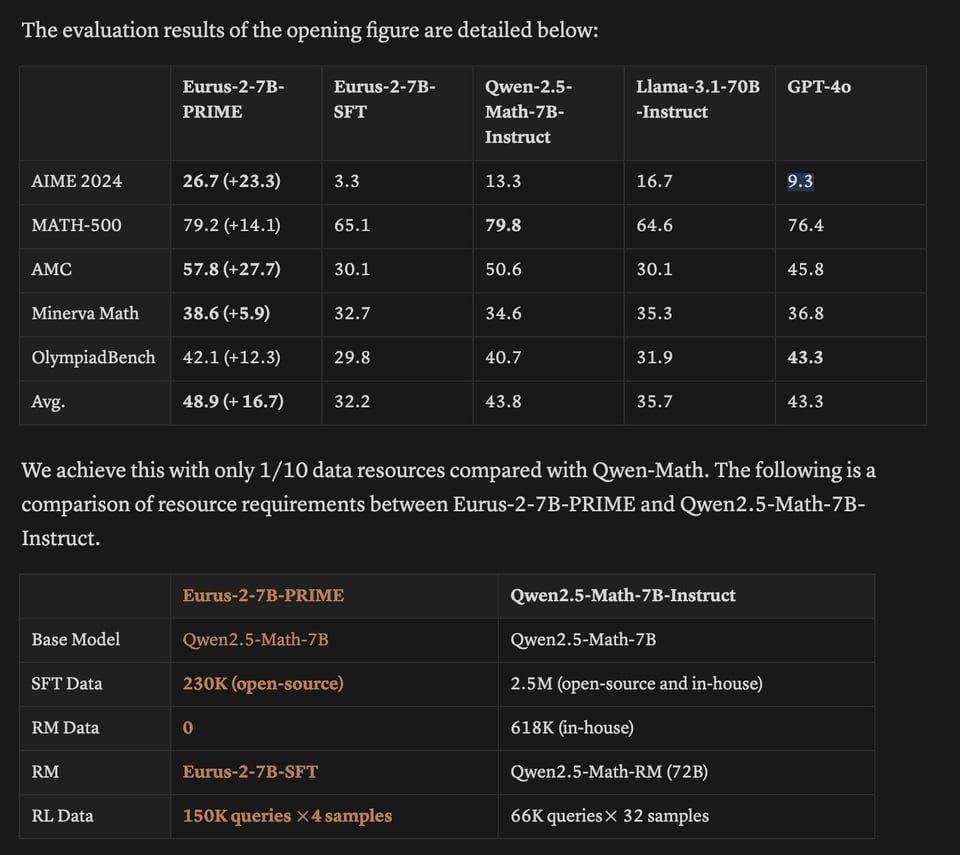

We saw this on Friday but gave it time for peer review, and it is positive enough to give it a headline story (PRIME blogpost):

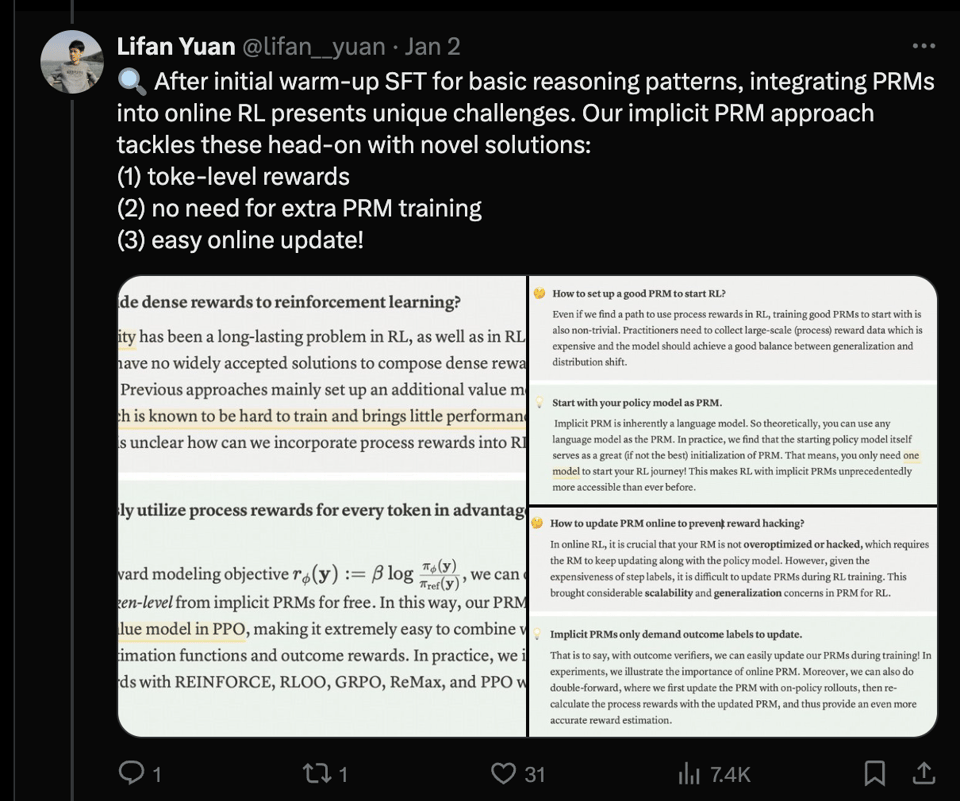

Ever since Let's Verify Step By Step established the importance of process reward models, the hunt has been on for an "open source" version of this. PRIME deals with some of the unique challenges of online RL:

and trains it up on a 7B model for incredibly impressive results vs 4o:

a lucidrains implemenation is in the works.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AGI and Large Language Models (LLMs)

- Proto-AGI and Model Capabilities: @teortaxesTex and @nrehiew_ discuss Sonnet 3.5 as a proto-AGI and the evolving definition of AGI. @scaling01 emphasizes the importance of compute scaling for Artificial Superintelligence (ASI) and the role of test-time compute in future AI development.

- Model Performance and Comparisons: @omarsar0 analyzes Claude 3.5 Sonnet's performance against other models, highlighting reduced performance in math reasoning. @aidan_mclau questions the harness differences affecting model evaluations, emphasizing the need for consistent benchmarking.

AI Tools and Libraries

- Gemini Coder and LangGraph: @_akhaliq showcases Gemini 2.0 coder mode supporting image uploads and AI-Gradio integration. @hwchase17 introduces a local version of LangGraph Studio, enhancing agent architecture development.

- Software Development Utilities: @tom_doerr shares tools like Helix (a Rust-based text editor) and Parse resumes in Python, facilitating code navigation and resume optimization. @lmarena_ai presents the Text-to-Image Arena Leaderboard, ranking models like Recraft V3 and DALL·E 3 based on community votes.

AI Events and Conferences

- LangChain AI Agent Conference: @LangChainAI announces the Interrupt: The AI Agent Conference in San Francisco, featuring technical talks and workshops from industry leaders like Michele Catasta and Adam D’Angelo.

- AI Agent Meetups: @LangChainAI promotes the LangChain Orange County User Group meetup, fostering connections among AI builders, startups, and developers.

Company Updates and Announcements

- OpenAI Financials and Usage: @sama reveals that OpenAI is currently losing money on Pro subscriptions due to higher-than-expected usage.

- DeepSeek and Together AI Partnerships: @togethercompute announces DeepSeek-V3's availability on Together AI APIs, highlighting its efficiency with 671B MoE parameters and ranked #7 in Chatbot Arena.

AI Research and Technical Discussions

- Scaling Laws and Compute Efficiency: @cwolferesearch provides an in-depth analysis of LLM scaling laws, discussing power laws and the impact of data scaling. @RichardMCNgo debates the ASI race and the potential for recursive self-improvement in AI models.

- AI Ethics and Safety: @mmitchell_ai addresses ethical issues for 2025, focusing on data consent and voice cloning as primary concerns.

Technical Tools and Software Development

- Development Frameworks and Utilities: @tom_doerr introduces Browserless, a service for executing headless browser tasks using Docker. @tom_doerr presents Terragrunt, a tool that wraps Terraform for infrastructure management with DRY code principles.

- AI Integration and APIs: @kubtale discusses Gemini coder's image support and AI-Gradio integration, enabling developers to build custom AI applications with ease.

Memes and Humor

- Humorous Takes on AI and Technology: @doomslide humorously remarks on Elon's interactions with the UK state apparatus. @jackburning posts amusing content about AI models and their quirks.

- Light-hearted Conversations: @tejreddy shares a funny anecdote about AI displacement of artists, while @sophiamyang jokes about delayed flights and AI-generated emails.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek V3's Dominance in AI Workflows

- DeepSeek V3 is the shit. (Score: 524, Comments: 207): DeepSeek V3 impresses with its 600 billion parameters, providing reliability and versatility that previous models, including Claude, ChatGPT, and earlier Gemini variants, lacked. The model excels in generating detailed responses and adapting to user prompts, making it a preferred choice for professionals frustrated by inconsistent workflows with state-of-the-art models.

- Users compare DeepSeek V3 to Claude 3.5 Sonnet, noting its coding capabilities but expressing frustration with its slow response times for long contexts. Some users face issues with the API when using tools like Cline or OpenRouter, while others appreciate its stability in the chat web interface.

- Discussions highlight the deployment challenges of DeepSeek V3, particularly the need for GPU server clusters, making it less accessible for individual users. There is a suggestion that investing in Intel GPUs could have been a strategic move to encourage development of more specified code.

- AMD's mi300x and mi355x products are mentioned in relation to AI development, with mi300x being a fast-selling product despite not being initially designed for AI. The upcoming Strix Halo APU is noted as a significant development for high-end consumer markets, indicating AMD's growing presence in the AI hardware space.

- DeepSeek v3 running at 17 tps on 2x M2 Ultra with MLX.distributed! (Score: 105, Comments: 30): DeepSeek v3 reportedly achieves 17 transactions per second (TPS) on a setup with 2x M2 Ultra processors using MLX.distributed technology. The information was shared by a user on Twitter, with a link provided for further details here.

- Discussions highlighted the cost and performance comparison between M2 Ultra processors and used 3090 GPUs, noting that while 3090 GPUs offer higher performance by an order of magnitude, they are significantly less power-efficient. MoffKalast calculated that for the price of $7,499.99, one could power a 3090 GPU for about a decade at full load, considering electricity costs at 20 cents per kWh.

- Context length and token generation were important technical considerations, with users questioning how the number of tokens impacts TPS and whether the 4096-token prompt affects performance. Coder543 referenced a related discussion on Reddit regarding performance differences with various prompt lengths.

- The MOE (Mixture of Experts) model's efficiency was debated, with fallingdowndizzyvr pointing out that all experts need to be loaded since their usage cannot be predicted beforehand, impacting resource allocation and performance.

Theme 2. Dolphin 3.0: Combining Advanced AI Models

- Dolphin 3.0 Released (Llama 3.1 + 3.2 + Qwen 2.5) (Score: 304, Comments: 37): Dolphin 3.0 has been released, incorporating Llama 3.1, Llama 3.2, and Qwen 2.5.

- Discussions around Dolphin 3.0 highlight concerns about model performance and benchmarks, with users noting the absence of comprehensive benchmarks making it difficult to assess the model's quality. A user shared a quick test result showing Dolphin 3.0 scoring 37.80 on the MMLU-Pro dataset, compared to Llama 3.1 scoring 47.56, but cautioned that these results are preliminary.

- The distinction between Dolphin and Abliterated models was clarified, with Abliterated models having their refusal vectors removed, whereas Dolphin models are fine-tuned on new datasets. Some users find Abliterated models more reliable, while Dolphin models are described as "edgy" rather than truly "uncensored."

- There is anticipation for future updates, with Dolphin 3.1 expected to reduce the frequency of disclaimers. Larger models, such as 32b and 72b, are currently in training, as confirmed by Discord announcements, with efforts to improve model behavior by flagging and removing disclaimers.

- I made a (difficult) humour analysis benchmark about understanding the jokes in cult British pop quiz show Never Mind the Buzzcocks (Score: 108, Comments: 34): The post discusses a humor analysis benchmark called "BuzzBench" for evaluating emotional intelligence in language models (LLMs) using the cult British quiz show "Never Mind the Buzzcocks". The benchmark ranks models by their humor understanding scores, with "claude-3.5-sonnet" scoring the highest at 61.94 and "llama-3.2-1b-instruct" the lowest at 9.51.

- Cultural Bias Concerns: Commenters express concerns about potential cultural bias in humor analysis, particularly due to the British context of "Never Mind the Buzzcocks". Questions arise about how such biases are addressed, such as whether British spelling or audience is explicitly stated.

- Benchmark Details: The benchmark, BuzzBench, evaluates models on understanding and predicting humor impact, with a maximum score of 100. The current state-of-the-art score is 61.94, and the dataset is available on Hugging Face.

- Interest in Historical Context: There is curiosity about how models would perform with older episodes of the quiz show, questioning whether familiarity with less popular hosts like Simon Amstell impacts humor understanding.

Theme 3. RTX 5090 Rumors: High Bandwidth Potential

- RTX 5090 rumored to have 1.8 TB/s memory bandwidth (Score: 141, Comments: 161): The RTX 5090 is rumored to have 1.8 TB/s memory bandwidth and a 512-bit memory bus, surpassing all professional cards except the A100/H100 with their 2 TB/s bandwidth and 5120-bit bus. Despite its 32GB GDDR7 VRAM, the RTX 5090 could potentially be the fastest for running any LLM <30B at Q6.

- Discussions highlight the lack of sufficient VRAM in NVIDIA's consumer GPUs, with criticisms that a mere 8GB increase over two generations is inadequate for AI and gaming needs. Users express frustration that NVIDIA intentionally limits VRAM to protect their professional card market, suggesting that a 48GB or 64GB model would cannibalize their high-end offerings.

- Cost concerns are prevalent, with users noting that the price of a single RTX 5090 could equate to multiple RTX 3090s, which still hold significant value due to their VRAM and performance. The price per GB of VRAM for consumer cards is seen as more favorable compared to professional GPUs, but the overall cost remains prohibitive for many.

- Energy efficiency and power draw are critical considerations, as the RTX 5090 is rumored to have a significant power requirement of at least 550 watts, compared to the 3090's 350 watts. Users discuss undervolting as a potential solution, but emphasize that the trade-off between speed, VRAM, and power consumption remains a key factor in GPU selection.

- LLMs which fit into 24Gb (Score: 53, Comments: 32): The author has built a rig with an i7-12700kf CPU, 128Gb RAM, and an RTX 3090 24 GB, highlighting that models fitting into VRAM run significantly faster. They mention achieving good results with Gemma2 27B for generic discussions and Qwen2.5-coder 31B for programming tasks, and inquire about other models suitable for a 24Gb VRAM limit.

- Model Recommendations: Users suggest various models suitable for a 24GB VRAM setup, including Llama-3_1-Nemotron-51B-instruct and Mistral small. Quantized Q4 models like QwQ 32b and Llama 3.1 Nemotron 51b are highlighted for their efficiency and performance in this setup.

- Performance Metrics: Commenters discuss the throughput of different models, with Qwen2.5 32B achieving 40-60 tokens/s on a 3090 and potentially higher on a 4090. 72B Q4 models can run at around 2.5 tokens/s with partial offloading, while 32B Q4 models achieve 20-38 tokens/s.

- Software and Setup: There is interest in the software setups used to achieve these performance metrics, with requests for details on setups using EXL2, tabbyAPI, and context lengths. A link to a Reddit discussion provides further resources for model selection on single GPU setups.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. OpenAI's Financial Struggles Amid O1-Pro Criticism

- OpenAI is losing money (Score: 2550, Comments: 518): OpenAI is reportedly facing financial challenges with its subscription models. Without additional context, the specific details of these financial difficulties are not provided.

- Many users express mixed feelings about the $200 subscription for o1-Pro, with some finding it invaluable for productivity and coding efficiency, while others question its worth due to increased competition among coders and potential for rising costs. Treksis and KeikakuAccelerator highlight its benefits for complex coding tasks, but IAmFitzRoy raises concerns about its impact on the coding job market.

- Discussions reveal skepticism about OpenAI's financial strategy, with comments suggesting that despite high costs, the company continues to lose money, possibly due to the high operational costs of running advanced models like o1-Pro. LarsHaur and Vectoor discuss the company's spending on R&D and the challenges of maintaining a positive cash flow.

- Subscription model criticisms include calls for an API-based model and concerns about the sustainability of current pricing, as noted by MultiMarcus and mikerao10. Users like Fantasy-512 and Unfair-Associate9025 express disbelief at the pricing strategy, questioning the long-term viability and potential for price increases.

- You are not the real customer. (Score: 237, Comments: 34): Anthony Aguirre argues that tech companies prioritize employers over individual consumers in AI investment strategies, aiming for significant financial returns by potentially replacing workers with AI systems. This reflects the broader financial dynamics influencing AI development and infrastructure investment.

- Universal Basic Income (UBI) is criticized as a fantasy that won't solve the economic challenges posed by AI replacing jobs. Commenters argue that the transition will create a new form of serfdom, with companies gaining power by offering technology that is in demand, but ultimately dependent on consumer purchasing power.

- AI Replacement of workers is expected to occur before AI can genuinely perform at human levels, driven by cost reduction rather than quality improvement. This mirrors past trends like offshoring for cheaper labor, reflecting a focus on financial efficiency over service quality.

- Economic Dynamics in AI development are acknowledged by AI practitioners as prioritizing profit and cost-cutting over consumer benefits. The discussion highlights the inevitability of this shift and the potential for a difficult transition for both workers and companies.

Theme 2. AI Level 3 by 2025: OpenAI's Vision and Concerns

- AI level 3 (agents) in 2025, as new Sam Altman's post... (Score: 336, Comments: 162): By 2025, the development of AI Level 3 agents is expected to significantly impact business productivity, marking a shift from basic AI chatbots to more advanced AI systems. The post expresses optimism that these AI advancements will provide effective tools that lead to broadly positive outcomes in the workforce.

- There is skepticism about the impact of AI Level 3 agents on companies, with some arguing that individuals will benefit more than corporations. Immersive-matthew points out that many people have already embraced AI for productivity, while companies have not seen equivalent benefits, suggesting that AI could empower individuals rather than large corporations.

- Concerns about the economic and workforce implications of AI advancements are prevalent. Kiwizoo and Grizzly_Corey discuss potential economic collapse and workforce disruption, suggesting that AI and robotics could eliminate the need for human labor, leading to significant societal changes.

- There is criticism of the hype surrounding AGI and doubts about its near-term realization. Agitated_Marzipan371 and others express skepticism about the feasibility of true AGI by 2025, viewing the term as a marketing strategy rather than a realistic goal, and compare it to other overhyped technological predictions.

Theme 3. Efficiency in AI Models: Claude 3.5 and Google's Advances

- Watched Anthropic CEO interview after reading some comments. I think noone knows why emergent properties occur when LLM complexity and training dataset size increase. In my view these tech moguls are competing in a race where they blindly increase energy needs and not software optimisation. (Score: 130, Comments: 79): The post critiques the approach of tech leaders in the AI field, arguing that they focus on scaling up LLM complexity and training dataset sizes without understanding the emergence of properties like AGI. The author suggests investing in nuclear energy technology as a more effective strategy rather than blindly increasing energy demands without optimizing software.

- Optimization and Scaling: Several comments highlight the focus on optimization in AI development, with RevoDS noting that GPT-4o is 30x cheaper and 8x smaller than GPT-4, suggesting that significant efforts are being made to optimize models alongside scaling. Prescod argues that the industry is not "blindly" scaling but also exploring better learning algorithms, though scaling has been effective so far.

- Nuclear Energy and AI: Rampants mentions that tech leaders like Sam Altman are involved in nuclear startups such as Oklo, and Microsoft is also exploring nuclear energy, indicating a parallel interest in sustainable energy solutions for AI. However, new nuclear projects face significant approval and implementation challenges.

- Emergent Properties and Complexity: Pixel-Piglet discusses the inevitability of emergent properties as neural networks scale, drawing parallels to the human brain. The comment suggests that the complexity of data and models leads to unexpected outcomes, a perspective supported by Ilya Sutskever's observations on model intricacy and emergent faculties.

AI Discord Recap

A summary of Summaries of Summaries by o1-preview-2024-09-12

Theme 1. AI Model Performance and Troubleshooting

- DeepSeek V3 Faces Stability Challenges: Users reported performance issues with DeepSeek V3, including long response times and failures on larger inputs. Despite good benchmarks, concerns arose about its practical precision and reliability in real-world applications.

- Cursor IDE Users Navigate Inconsistent Model Performance: Developers experienced inconsistent behavior from Cursor IDE, especially with Claude Sonnet 3.5, citing context retention issues and confusing outputs. Suggestions included downgrading versions or simplifying prompts to mitigate these problems.

- LM Studio 0.3.6 Model Loading Errors Spark User Frustration: The new release of LM Studio led to exit code 133 errors and increased RAM usage when loading models like QVQ and Qwen2-VL. Some users overcame these setbacks by adjusting context lengths or reverting to older builds.

Theme 2. New AI Models and Tool Releases

- Unsloth Unveils Dynamic 4-bit Quantization Magic: Unsloth introduced dynamic 4-bit quantization, preserving model accuracy while reducing VRAM usage. Testers reported speed boosts without sacrificing fine-tuning fidelity, marking a significant advancement in efficient model training.

- LM Studio 0.3.6 Steps Up with Function Calling and Vision Support: The latest LM Studio release features a new Function Calling API for local model usage and supports Qwen2VL models. Developers praised the extended capabilities and improved Windows installer, enhancing user experience.

- Aider Expands Java Support and Debugging Integration: Contributors highlighted that Aider now supports Java projects through prompt caching and is exploring debugging integration with tools like ChatDBG. These advancements aim to enhance development workflows for programmers.

Theme 3. Hardware Updates and Anticipations

- Nvidia RTX 5090 Leak Excites AI Enthusiasts: A leak revealed that the upcoming Nvidia RTX 5090 will feature 32GB of GDDR7 memory, stirring excitement ahead of its expected CES announcement. The news overshadowed recent RTX 4090 buyers and hinted at accelerated AI training workloads.

- Community Debates AMD vs. NVIDIA for AI Workloads: Users compared AMD CPUs and GPUs with NVIDIA offerings for AI tasks, expressing skepticism about AMD's claims. Many favored NVIDIA for consistent performance in heavy-duty models, though some awaited real-world benchmarks of AMD's new products.

- Anticipation Builds for AMD’s Ryzen AI Max: Enthusiasts showed strong interest in testing AMD's Ryzen AI Max, speculating on its potential to compete with NVIDIA for AI workloads. Questions arose about running it alongside GPUs for combined performance in AI applications.

Theme 4. AI Ethics, Policy, and Industry Movements

- OpenAI's Reflections on AGI Progress Spark Debate: Sam Altman discussed OpenAI's journey towards AGI, prompting debates about corporate motives and transparency in AI development. Critics highlighted concerns over potential impacts on innovation and entrepreneurship due to advanced AI capabilities.

- Anthropic Faces Copyright Challenges Over Claude: Anthropic agreed to maintain guardrails on Claude to prevent sharing copyrighted lyrics amid legal action from publishers. The dispute underscored tensions between AI development and intellectual property rights.

- Alibaba and 01.AI Collaborate on Industrial AI Lab: Alibaba Cloud partnered with 01.AI to establish a joint AI laboratory targeting industries like finance and manufacturing. The collaboration aims to accelerate the adoption of large-model solutions in enterprise settings.

Theme 5. Advances in AI Training Techniques and Research

- PRIME RL Unlocks Advanced Language Reasoning: Researchers examined PRIME (Process Reinforcement through Implicit Rewards), showcasing scalable RL techniques to strengthen language model reasoning. The method demonstrates surpassing existing models with minimal training steps.

- MeCo Method Accelerates Language Model Pre-training: The MeCo technique, introduced by Tianyu Gao, prepends source URLs to training documents, accelerating LM pre-training. Early feedback indicates modest improvements in training outcomes across various corpora.

- Efficient Fine-Tuning with LoRA Techniques Gains Traction: Users discussed using LoRA for efficient fine-tuning of large models with limited GPU capacity. Advice centered on optimizing memory usage without sacrificing model performance, especially for models like DiscoLM on low-VRAM setups.

PART 1: High level Discord summaries

aider (Paul Gauthier) Discord

- Sophia's Soaring Solutions & Aider's Apparent Affinity: The brand-new Sophia platform introduces autonomous agents, robust pull request reviews, and multi-service support, as shown at sophia.dev, aimed at advanced engineering workflows.

- Community members compared these capabilities to Aider's features, praising the overlap and showing interest in testing Sophia for AI-driven software processes.

- Val Town's Turbocharged LLM Tactics: In a blog post, Val Town shared their progress from GitHub Copilot to newer assistants like Bolt and Cursor, trying to stay aligned with rapidly updating LLMs.

- Their approach, described as fast-follows, sparked discussion on adopting proven strategies from other teams to refine code generation systems.

- AI Code Analysis Gains 'Senior Dev' Sight: An experiment outlined in The day I taught AI to read code like a Senior Developer introduced context-aware grouping, enabling the AI to prioritize core changes and architecture first.

- Participants noted this approach overcame confusion in React codebases, calling it a major leap beyond naive, line-by-line AI parsing methods.

- Aider Advances Java and Debugging Moves: Contributors revealed Aider supports Java projects through prompt caching, referencing the installation docs for flexible setups.

- They also explored debugging with frameworks like ChatDBG, highlighting the potential synergy between Aider and interactive troubleshooting solutions.

Unsloth AI (Daniel Han) Discord

- Unsloth’s 4-bit Wizardry: The team introduced dynamic 4-bit quantization, preserving model accuracy while trimming VRAM usage.

- They shared installation tips in the Unsloth docs and reported that testers saw speed boosts without sacrificing fine-tuning fidelity.

- Concurrent Fine-Tuning Feats: A user confirmed it's safe to fine-tune multiple model sizes (0.5B, 1.5B, 3B) simultaneously, as explained in Unsloth Documentation.

- They noted key VRAM limits, advising smaller learning rates and LoRA methods to ensure memory efficiency.

- Rohan's Quantum Twirl & Data Tools: Rohan showcased interactive Streamlit apps for Pandas data tasks, linking his LinkedIn posts and a quantum blog here.

- He combined classical data analysis with complex number exploration, sparking curiosity about integrating AI with emerging quantum methods.

- LLM Leaderboard Showdown: Community members ranked Gemini and Claude at the top, praising Gemini experimental 1207 as a standout free model.

- Discussions suggested Gemini outran other open-source builds, fueling a debate on which LLM truly holds the crown.

Codeium (Windsurf) Discord

- Tidal Tweaks: Codeium Channels & December Changelist: The Codeium server introduced new channels for .windsurfrules strategy, added a separate forum for collaboration, and published the December Changelist.

- Members anticipate a new stage channel and more ways to display community achievements, with many praising the streamlined support portal approach.

- Login Limbo: Authentication Hiccups & Self-Hosting Hopes: Users encountered Codeium login troubles, suggesting token resets, while others questioned on-prem setups for 10–20 devs under an enterprise license.

- Some recommended a single PC for hosting, though concerns arose about possible performance bottlenecks if usage grows.

- Neovim Nudges: Plugin Overhaul & Showcase Channel Dreams: Discussion focused on

codeium.vimandCodeium.nvimfor Neovim, spotlighting verbose comment completions and the prospect of a showcase channel.- Community members expect more refined plugin behavior, hoping soon to exhibit Windsurf- and Cascade-based apps in a dedicated forum.

- Claude Crunch: Windsurf Struggles & Cascade Quirks: Windsurf repeatedly fails to apply code changes with Claude 3.5, producing 'Cascade cannot edit files that do not exist' errors and prompting brand-new sessions.

- Many suspect Claude struggles with multi-file work, nudging users toward Cascade Base for more predictable results.

- Credit Confusion: Premium vs. Flex & Project Structure: Developers debated Premium User Prompt Credits versus Flex Credits, noting that Flex supports continuous prompts and elevated usage with Cascade Base.

- They also suggested consolidating rules into a single .windsurfrules file and shared approaches for backend organization.

Cursor IDE Discord

- Cursor Changelogs & Plans: The newly released Cursor v0.44.11 prompted calls for better changelogs and modular documentation, with a download link.

- Some developers stressed the need for flexible project plan features, wanting simpler steps to track tasks in real time.

- Claude Sonnet 3.5 Surprises: Engineers reported inconsistent performance from Claude Sonnet 3.5, citing context retention issues and confusing outputs in long conversations.

- A few users suggested that reverting versions or using more concise prompts sometimes outperformed comprehensive instructions.

- Cursor's AGI Ambitions: Several users see Cursor as a potential springboard for higher-level intelligence in coding, debating ways to utilize task-oriented features.

- They speculated that refining these features might enhance AI-driven capabilities, closing gaps between manual coding and automated assistance.

- Composer & Context Calamities: Developers encountered trouble with Composer on large files, citing editing issues and poor context handling for expanded code blocks.

- They noticed random context resets when switching tasks, causing unintended changes and confusion across sessions.

LM Studio Discord

- LM Studio 0.3.6 Steps Up with Tool Calls: LM Studio 0.3.6 arrived featuring a new Function Calling / Tool Use API for local model usage, plus Qwen2VL support.

- Model Loads Meet Exit Code 133: Users encountered exit code 133 errors and increased RAM usage when loading QVQ and Qwen2-VL in LM Studio.

- Some overcame these setbacks by adjusting context lengths or reverting to older builds, while others found success using MLX from the command line.

- Function Calling API Draws Praise: The Function Calling API extends model output beyond text, with users applauding both the documentation and example workflows.

- However, a few encountered unanticipated changes in JiT model loading after upgrading to 3.6, calling for additional bug fixes.

- Hardware Tussle Between AMD and NVIDIA: Participants flagged that a 70B model needs more VRAM than most GPUs offer, loitering on CPU inference or smaller quantizations.

- They debated AMD vs NVIDIA claims—some championed AMD CPU performance, though skepticism lingered about real-world gains with massive models.

- Chatter on AMD’s Ryzen AI Max: Enthusiasts expressed strong interest in AMD’s new Ryzen AI Max, questioning its potential to rival NVIDIA for heavy-duty model demands.

- They asked about running it alongside GPUs for combined muscle, reflecting persistent curiosity around multi-device setups.

Stackblitz (Bolt.new) Discord

- Stackblitz Backups Vanish: When reopening Stackblitz, some users found their projects reverted to earlier states, losing code despite frequent saves, as noted in the #prompting channel.

- Community members confirmed varied experiences but offered no universal solution beyond double-checking saves and backups.

- Deployment Workflow Confusion: Users struggled with pushing code to GitHub from different services like Netlify or Bolt, debating whether to rely on Bolt Sync or external tools.

- They agreed that a consistent approach to repository updates is crucial, but no definitive workflow emerged from the discussions.

- Token Tangles & Big Bills: Participants reported hefty token usage, sometimes burning hundreds of thousands for minor modifications, prompting concerns about cost.

- They recommended refining instructions to reduce token waste, emphasizing that careful edits and thorough planning help prevent excessive usage.

- Supabase & Netlify Snags: Developers faced Netlify deployment errors while integrating with Bolt, referencing this GitHub issue for guidance.

- Others encountered Supabase login and account creation problems, often needing new .env setups to get everything working properly.

- Prompting & OAuth Quirks: Community members advised against OAuth in Bolt during development, pointing to repeated authentication failures and recommending email-based logins instead.

- Discussion also highlighted the importance of efficient prompts for Bolt to limit token consumption, with users trading tips on how to shape instructions accurately.

Stability.ai (Stable Diffusion) Discord

- Stable Surprises: Model Mix-Ups: Multiple users discovered that Civit.ai models struggled with basic prompts like 'woman riding a horse', fueling confusion about prompt specificity.

- Some participants shared that certain LoRAs outperformed others, linking to CogVideoX-v1.5-5B I2V workflow and praising the sharper quality outcomes.

- LoRA Logic or Full Checkpoint Craze: Participants debated whether LoRAs or entire checkpoints best addressed style needs, with LoRAs providing targeted enhancements while full checkpoints offered broader capabilities.

- They noted potential model conflicts if multiple LoRAs are stacked, emphasizing a preference for specialized training and referencing GitHub - bmaltais/kohya_ss for streamlined custom LoRA creation.

- ComfyUI Conundrums: Node-Based Mastery: The ComfyUI workflow sparked chatter, as newcomers found its node-based approach both flexible and challenging.

- Resources like Stable Diffusion Webui Civitai Helper were recommended to easily manage LoRA usage and reduce workflow friction.

- Inpainting vs. img2img: The Speed Struggle: Some users reported inpainting took noticeably longer than img2img, despite editing only part of the image.

- They theorized that internal operations can vary widely in complexity, citing references to AnimateDiff for advanced multi-step generation.

- GPU Gossip: 5080 & 5090 Speculations: Rumors swirled around upcoming NVIDIA GPUs, with mention of the 5080 and 5090 possibly priced at $1.4k and $2.6k.

- Concerns also arose over market scalping, prompting some to suggest waiting for future AI-focused cards or more official announcements from NVIDIA.

Latent Space Discord

- GDDR7 Gains with Nvidia's 5090: A last-minute leak from Tom Warren revealed the Nvidia RTX 5090 will include 32GB of GDDR7 memory, just before its expected CES debut.

- Enthusiasts discussed the potential for accelerated training workloads, anticipating official announcements to confirm specs and release timelines.

- Interrupt with LangChain: LangChain unveiled Interrupt: The AI Agent Conference in May at San Francisco, featuring code-centric workshops and deep sessions.

- Some suggested the timing aligns with larger agent-focused events, marking this gathering as a hub for those pushing new agentic solutions.

- PRIME Time: Reinforcement Rewards Revisited: Developers buzzed about PRIME (Process Reinforcement through Implicit Rewards), showing Eurus-2 surpassing Qwen2.5-Math-Instruct with minimal training steps.

- Critics called it a hot-or-cold game between two LLMs, while supporters championed it as a leap for dense step-by-step reward modeling.

- ComfyUI & AI Engineering for Art: A new Latent.Space podcast episode highlighted ComfyUI's origin story, featuring GPU compatibility and video generation for creative work.

- The team discussed how ComfyUI evolved from a personal prototype into a startup innovating the AI-driven art space.

- GPT-O1 Falls Short on SWE-Bench: Multiple tweets, like this one, showed OpenAI’s GPT-O1 hitting 30% on SWE-Bench Verified, contrary to the 48.9% claim.

- Meanwhile, Claude scored 53%, sparking debates on model evaluation and reliability in real-world tasks.

Interconnects (Nathan Lambert) Discord

- Nvidia 5090’s Surprising Appearance: Reports from Tom Warren highlight the Nvidia RTX 5090 surfacing with 32GB GDDR7 memory, overshadowing recent RTX 4090 buyers, just before CES. The leak stirred excitement over its specs and price implications for high-end AI workloads.

- Community reactions ranged from regret over hasty 4090 purchases to curiosity regarding upcoming benchmark results. Some speculated that Nvidia’s next-gen lineup could accelerate compute-intense training pipelines further.

- Alibaba & 01.AI’s Joint Effort: Alibaba Cloud and 01.AI have formed a partnership, as noted in an SCMP article, to establish an industrial AI joint laboratory targeting finance, manufacturing, and more. They plan to merge research resources for large-model solutions, aiming to speed adoption in enterprise settings.

- Questions persist around the scope of resource-sharing and whether overseas expansions will occur. Despite mixed reports, participants spotted potential for boosting next-gen enterprise tech in Asia.

- METAGENE-1 Takes on Pathogens: A 7B parameter metagenomic model named METAGENE-1 has been open-sourced in concert with USC researchers, per Prime Intellect. This tool targets planetary-scale pathogen detection to strengthen pandemic prevention.

- Members highlighted that a domain-specific model of this scale could accelerate epidemiological monitoring. Many anticipate new pipelines for scanning genomic data in large public health initiatives.

- OpenAI’s O1 Hits a Low Score: O1 managed only 30% on SWE-Bench Verified, contradicting the previously claimed 48.9%, while Claude reached 53% in the same test, as reported by Alex_Cuadron. Testers suspect that the difference might reflect prompting details or incomplete validation steps.

- This revelation triggered debates about post-training improvements and real-world performance. Some urged more transparent benchmarks to clarify whether O1 merely needs refined instructions.

- MeCo Method for Quicker Pre-training: The MeCo (metadata conditioning then cooldown) technique, introduced by Tianyu Gao, prepends source URLs to training documents for accelerated LM pre-training. This added metadata provides domain context cues, which may reduce guesswork in text comprehension.

- Skeptics questioned large-scale feasibility, but the method earned praise for clarifying how site-based hints can optimize training. Early feedback indicates a modest improvement in training outcomes for certain corpora.

Nous Research AI Discord

- Hermes 3 Wrestles with Stage Fright: Community members reported Hermes 3 405b producing anxious and timid characters, even for roles meant to convey confidence. They shared tips like adjusting system prompts and providing clarifying examples, but acknowledged the challenge of shaping desired model behaviors.

- Some pointed out that tweaking the prompt baseline is tricky, echoing a broader theme of balancing AI capability with user prompts. Others insisted that thorough manual testing is the only reliable method to verify characterizations.

- ReLU² Rivalry with SwiGLU: A follow-up to Primer: Searching for Efficient Transformers indicated that ReLU² edges out SwiGLU in cost-related metrics, prompting debate over why LLaMA3 didn't adopt it.

- Some participants noted the significance of feed-forward block tweaks, calling the improvement “a new spin on Transformer optimization.” They wondered if lower training overhead might lead to more experiments in upcoming architectures.

- PRIME RL Strengthens Language Reasoning: Users examined the PRIME RL GitHub repository, which claims a scalable RL solution to strengthen advanced language model reasoning. They commented that it could open bigger avenues for structured thinking in large-scale LLMs.

- One user admitted exhaustion when trying to research it but still acknowledged the project’s promising focus, indicating a shared community desire for more robust RL-based approaches. The conversation signaled interest in further exploration once members are re-energized.

- OLMo’s Massive Collaborations: Team OLMo published 2 OLMo 2 Furious (arXiv link), presenting new dense autoregressive models with improved architecture and data mixtures. This effort highlights their push toward open research, with multiple contributors refining training recipes for next-gen LLM development.

- Community members praised the broad collaboration, emphasizing how expansions in architecture and data can spur deeper experimentation. They saw it as a sign that open discourse around advanced language modeling is gaining steam across researchers.

OpenAI Discord

- OmniDefender's Local LLM Leap: In OmniDefender, users integrate a Local LLM to detect malicious URLs and examine file behavior offline. This setup avoids external calls but struggles when malicious sites block outgoing checks, complicating threat detection.

- Community feedback lauded offline scanning potential, referencing GitHub - DustinBrett/daedalOS: Desktop environment in the browser as an example of heavier local applications. One member joked that it showcased a 'glimpse of self-contained defenses' powering malware prevention.

- MCP Spec Gains Momentum: Implementing MCP specifications has simplified plugin integration, sparking a jump in AI development. Some contrasted MCP with older plugin methods, touting it as a new standard for versatile expansions.

- Participants noted that 'multi-compatibility is the logical next step,' fueling widespread interest. They predicted a 'plugin arms race' as providers chase MCP readiness.

- Sky-High GPT Bills: OpenAI caused a stir by revealing $25 per message as the operating cost of its large GPT model. Developers expressed concern about scaling such expenses for routine usage.

- Some participants called the price 'steep' for persistent experiments. Others hoped tiered packages might open advanced GPT-4 features to more users.

- Sora’s Single-Image Snafu: Developers lamented that Sora only supports one image upload per video, trailing platforms with multiple-image workflows. This restriction presents a significant snag for detailed image processing tasks.

- Feedback included comments like 'the image results are decent, but one at a time is a big limitation.' Several pinned hopes on an expansion, calling it 'critical for modern multi-image pipelines.'

- AI Document Analysis Tactics: Members deliberated on scanning vehicle loan documents without exposing PII, recommending redaction before any AI-driven examination. This manual approach aims to safeguard privacy while using advanced parsing techniques.

- One participant dubbed it 'brute-force privacy protection' and urged caution with automated solutions. Another recommended obtaining a sanitized version from official sources to circumvent storing confidential data.

Perplexity AI Discord

- Apple's Siri Snooping Saga Simmers: Apple settled the Siri Snooping lawsuit, raising privacy questions and highlighting user concerns in this article.

- Commenters examined the ramifications of prolonged legal and ethical debates, underscoring the need for robust data protections.

- Swift Gains Steam for Modern Development: A user shared insights on Swift and its latest enhancements, referencing this overview.

- Developers praised Swift’s evolving capabilities, calling it a prime candidate for app creation on Apple platforms.

- Gaming Giant Installs AI CEO: An unnamed gaming company broke ground by appointing an AI CEO, with details in this brief.

- Observers noted this emerging corporate experiment as a sign of fresh approaches to management and strategy.

- Mistral Sparks LLM Curiosity: Members discussed Mistral as a potential LLM option without deep expertise, wondering if it adds new functionality among the many AI tools.

- Users questioned its distinct advantages, seeking concrete performance data before considering broad adoption.

Eleuther Discord

- DeepSeek v3 Dives Deeper: Enthusiasts tested DeepSeek v3 locally with 4x3090 Ti GPUs, targeting 68.9% on MMLU-pro in 3 days, pointing to Eleuther AI’s get-involved page for more resources.

- Their conversation highlighted hardware constraints and suggestions for architectural improvements, referencing a tweet on new Transformer designs.

- MoE Madness and Gated DeltaNet: Members debated high-expert MoE viability and parameter balancing in Gated DeltaNet, linking to the GitHub code and a tweet on DeepSeek’s MoE approach.

- They questioned the practical trade-offs for large-scale labs while praising Mamba2 for reducing parameters, hinting that the million-experts concept may not fully deliver on performance.

- Metadata Conditioning Gains: Researchers proposed Metadata Conditioning (MeCo) as a novel technique for guiding language model learning, citing “Metadata Conditioning Accelerates Language Model Pre-training” (arXiv) and referencing the Cerebras RFP.

- They saw meaningful pre-training efficiency boosts at various scales, and also drew attention to Cerebras AI offering grants to push generative AI research.

- CodeLLMs Under the Lens: Community members shared mechanistic interpretability findings on coding models, pointing to Arjun Guha’s Scholar profile and explored type hint steering via “Understanding How CodeLLMs (Mis)Predict Types with Activation Steering.”

- They discussed Selfcodealign for code generation, teased for 2024, and argued that automated test suite feedback could correct mispredicted types.

- Chat Template Turbulence: Multiple evaluations with chat templates on L3 8B hovered around 70%, referencing lm-evaluation-harness code.

- They uncovered a 73% jump when removing templates, fueling regret for not testing earlier and clarifying request caching nuances for local HF models.

OpenRouter (Alex Atallah) Discord

- llmcord Leaps Forward: The llmcord project has gained over 400 GitHub stars for letting Discord serve as a hub for multiple AI providers, including OpenRouter and Mistral.

- Contributors highlighted its easy setup and potential to unify LLM usage in a single environment, noting its flexible API compatibility.

- Nail Art Gains AI Twist: A new Nail Art Generator uses text prompts and up to 3 images to produce fun designs, powered by OpenRouter and Together AI.

- Members praised its quick outcomes, calling it “a neat fusion of creativity and AI,” and pointing to future expansions in prompts and art styles.

- Gemini Flash 1.5 Steps Up: Community members weighed Gemini Flash 1.5 vs the 8B edition, recommending a smaller model first for better cost control, referencing competitive pricing.

- They noted that Hermes testers see reduced token fees using OpenRouter instead of AI Studio, spurring interest in switching to this model.

- DeepSeek Hits Snags: Multiple users reported DeepSeek V3 downtime and slower outputs for prompts beyond 8k tokens, linking it to scaling issues.

- Some suggested bypassing OpenRouter for a direct DeepSeek connection, expecting that move to cut down latency and avoid temporary limits.

Notebook LM Discord Discord

- Audio Freeze Frenzy at 300 Sources: One user noted that when up to 300 sources are included, the NLM audio overview freezes on one source, disrupting playback.

- They emphasized concerns about reliability for larger projects, with hopes for a fix to improve multi-source handling.

- NotebookLM Plus Packs a Punch: Members dissected the differences between free and paid tiers, referring to NotebookLM Help for specific details on upload limits and features.

- They discussed advanced capabilities like larger file allowances, prompting some to weigh the upgrade for heavier workloads.

- Podcast Breaks and Voice Roulette: Frequent podcast breaks persisted despite custom instructions, prompting suggestions for more forceful system prompts to keep hosts talking.

- One user tested a single male voice successfully, but attempts to opt for only the female expert voice faced unexpected pushback.

- Education Prompts and Memos Made Easy: People explored uploading memos from a Mac to NLM for structured learning, hoping to streamline digital note-taking.

- Another thread pitched a curated list of secondary education prompts, highlighting community-driven sharing of specialized tips.

GPU MODE Discord

- Quantization Quarrels & Tiling Trials: Members noted that quantization overhead can double loading time for float16 weights and that 32x32 tiling in matmul runs 50% slower than 16x16, illustrating tricky impl details on GPU architectures.

- They debated whether register spilling might cause the slowdown and expressed interest in exploring simpler, architecture-aware explanations.

- Triton Tuning & Softmax Subtleties: Users observed 3.5x faster results with

torch.emptyinstead oftorch.empty_like, while caching best block sizes viatriton.heuristicscan cut autotune overhead.- They also reported reshape tricks for softmax kernels, but row-major vs col-major concerns lingered as performance varied.

- WMMA Woes & ROCm Revelations: The wmma.load.a.sync instruction consumes 8 registers for a 16×16 matrix fragment, whereas wmma.store.d.sync only needs 4, sparking debates on data packing intricacies.

- Meanwhile, confusion arose around MI210’s reported 2 thread-block limit vs the A100’s 32, leaving members unsure about hardware design implications.

- SmolLM2 & Bits-and-Bytes Gains: A Hugging Face collaboration on SmolLM2 with 11 trillion tokens launched param sizes at 135M, 360M, and 1.7B, aiming for better efficiency.

- In parallel, a new bitsandbytes maintainer announced their transition from software engineering, underscoring fresh expansions in GPU-oriented optimizations.

- Riddle Rewards & Rejection Tactics: Thousands of completions for 800 riddles revealed huge log-prob variance, prompting negative logprop as a simple reward approach.

- Members explored expert iteration with rejection sampling on top-k completions, eyeing frameworks like PRIME and veRL to bolster LLM performance.

Cohere Discord

- Joint-Training Jitters & Model Mix-ups: A user sought help on joint-training and loss calculation, but others joked they should find compensation instead, while confusion mounted over command-r7b-12-2024 access. LiteLLM issues and n8n errors pointed to an overlooked update for the new Cohere model.

- One member insisted that n8n couldn't find command-r7b-12-2024, advising a pivot to command-r-08-2024, which highlighted the need for immediate support from both LiteLLM and n8n maintainers.

- Hackathon Hype & Mechanistic Buzz: Following a mention of the AI-Plans hackathon on January 25th, a user promoted an alignment-focused event for evaluating advanced systems. They also identified mechanistic interpretation as an area of active exploration, bridging alignment ideas with actual code.

- Quotes from participants expressed excitement in sharing alignment insights, with some citing a synergy between AI-Plans and potential expansions in mech interp research.

- API Key Conundrum & Security Solutions: Multiple reminders emphasized that API keys must remain secure, while users recommended key rotation to avoid accidental exposure. Cohere's support team was also mentioned for professional guidance.

- One user discovered they publicly posted a key by mistake, quickly deleting it and cautioning others to do the same if uncertain.

- Temperature Tinkering & Model Face-Off: A user questioned whether temperature can be set per-item for structured generations, seeking clarity on advanced parameter handling. Another inquired about the best AI model compared to OpenAI's o1, revealing the community's interest in direct performance matches.

- They requested more details on model reliability, prompting further talk about balanced results and how temperature adjustments could shape final outputs.

- Agentic AI Explorations & Paper Chases: A master's student focused on agentic AI pitched bridging advanced system capabilities with human benefits, asking for cutting-edge research angles. They specifically asked for references to Papers with Code to find relevant work.

- Community members suggested more real-world proof points and recommended exploring new publications for progressive agent designs, emphasizing the synergy between concept and execution.

LlamaIndex Discord

- Agentic Invoice Automation: LlamaIndex in Action: A thorough set of notebooks shows how LlamaIndex uses RAG for fully automated invoice processing, with details here.

- They incorporate structured generation for speedier tasks, drawing interest from members exploring agentic workflows.

- Lively UI with LlamaIndex & Streamlit: A new guide showcased a user-friendly Streamlit interface for LlamaIndex with real-time updates, referencing integration with FastAPI for advanced deployments (read here).

- Contributors emphasized immediate user engagement, highlighting the synergy between front-end and LLM data flows.

- MLflow & Qdrant: LlamaIndex's Resourceful Pairing: A step-by-step tutorial demonstrated how to pair MLflow for experiment tracking and Qdrant for vector search with LlamaIndex, found here.

- It outlined Change Data Capture for real-time updates, spurring conversation about scaling storage solutions.

- Document Parsing Mastery with LlamaParse: A new YouTube video showcased specialized document parsing approaches using LlamaParse, designed to refine text ingestion pipelines.

- The video covers essential techniques for optimizing workflows, with participants citing the importance of robust data extraction in large-scale projects.

OpenInterpreter Discord

- Cursor Craves .py Profiles: Developers discovered Cursor now demands profiles in

.pyformat, triggering confusion and half-working setups. They cited “Example py file profile was no help either.”- Several folks tried converting their existing profiles with limited success, lamenting that Cursor’s shift forced them to dig around for stable solutions.

- Claude Engineer Gobbles Tokens: A user found Claude Engineer devouring tokens swiftly, prompting them to strip away add-ons and default to shell plus python access. They said it ballooned usage costs, forcing them to cut back on advanced tools.

- Others echoed difficulties balancing performance and cost, noting that token bloat quickly becomes a burden when the system calls external resources.

- Open Interpreter 1.0 Clashes with Llama: Multiple discussions highlighted Open Interpreter 1.0 JSON issues while running fine-tuned Llama models, causing repeated crashes. This problem reportedly appeared during tasks requiring intricate error handling and tool calling.

- Participants complained that disabling tool calling didn’t always solve the problem, prompting further talk of non-serializable object errors and dependency conflicts.

- Windows 11 Works Like a Charm: An installation guide for Windows 11 24H2 proved crucial, providing a reference here. The guide’s creator reported OpenInterpreter ran consistently on their setup.

- They showcased how the process resolves common pitfalls, reinforcing confidence that Windows 11 remains a viable environment for testing new alpha features.

Nomic.ai (GPT4All) Discord

- GPT4All's Android App Raises Eyebrows: Concerns emerged over the official identity of a GPT4All app on the Google Play Store, prompting community warnings about possible mismatches in the publisher name.

- Some users advised holding off on installs until messaging limits and credibility checks align with known GPT4All offerings.

- Local AI Chatbot Goes Offline with Termux: One user shared successes in creating a local AI chatbot using Termux and Python, highlighting phone-based inference for easy access.

- Others raised concerns about battery usage and storage overhead, confirming that direct model downloads can function entirely on a mobile device.

- C++ Enthusiasts Eye GPT4All for OpenFOAM: Developers weighed whether GPT4All can tackle OpenFOAM code analysis, exploring which model handles advanced C++ queries best.

- Some recommended Workik temporarily, while the group debated GPT4All's readiness for handling intricate library tasks.

- Chat Templates & Python Setup Spark GPT4All Buzz: Fans shared pointers on crafting custom system messages with GPT4All, pointing to the official documentation for step-by-step guidance.

- Others requested tutorials for advanced local memory enhancements via Python, driving interest in integrated offline solutions.

tinygrad (George Hotz) Discord

- Windows CI Wins: The community stressed the need for Continuous Integration (CI) on Windows to enable merges, referencing PR #8492 that fixes missing imports in ops_clang.

- One participant said "without CI, the merging process cannot progress", prompting urgent action to uphold development pace.

- Bounty Bonanza Blooms: Several members submitted Pull Requests to claim bounties, including PR #8517 for working CI on macOS.

- They requested dedicated channels to track these initiatives, citing a desire for streamlined management and status updates.

- Tinychat Browses the Web: A developer showcased tinychat in the browser powered by WebGPU, enabling Llama-3.2-1B and tiktoken to run client-side.

- They proposed adding a progress bar for smoother model weight decompression, which garnered positive feedback from testers.

- CES Catchup & Meeting Mentions: tiny corp announced presence at CES Booth #6475 in LVCC West Hall, displaying a tinybox red device.

- The scheduled meeting covered contract details and multiple technical bounties, setting the stage for upcoming objectives.

- Distributed Plans & Multiview In Action: Architects of Distributed training discussed the use of FSDP, urging code refactors to accommodate parallelization efforts.

- tinygrad notes also emphasized multiview implementation and tutorials, encouraging broad community collaboration.

Modular (Mojo 🔥) Discord

- Mojo Doubles Down on

concat: They introduced read-only and ownership-based versions of theconcatfunction forList[Int], letting developers reuse memory if the language owns the list.- This tactic trims extra copying for large arrays, aiming for stronger speed without burdening users.

- Overloaded and Over It: Discussion focused on how function overloading for custom structs in Mojo hits a snag when two

concatsignatures look identical.- This mismatch reveals the difficulty of code reuse when there's no direct copying mechanism, forcing a compile-time standoff.

- Memory Magic with Owned Args: A key idea is to let overloads differ by read vs. owned inputs so the compiler can streamline final usage and skip unnecessary data moves.

- The plan involves auto-detecting when variables can be freed or reused, closing gaps in memory management logic.

- Buggy Debugger in Issue #3917: A segfault arises when using

--debug-level fullto run certain Mojo scripts, as flagged in Issue #3917.- Users noted regular script execution avoids the crash, but the debugger remains an open question for further fixes.

LAION Discord

- Emotional TTS: Fear & Astonishment: Multiple audio clips showcasing fear and astonishment were shared, inviting feedback on perceived quality differences and expressive tone.

- Participants were asked to vote on their preferred versions, highlighting community-driven refinement of emotional speech models.

- PyCoT's Pythonic Problem-Solving: A user showcased the PyCoT dataset for tackling math word problems using AI-driven Python scripts, referencing the AtlasUnified/PyCoT repository.

- Each problem includes a chain-of-thought approach, demonstrating step-by-step Python logic for more transparent reasoning.

- Hunt for GPT-4o & Gemini 2.0 Audio Data: Members inquired about specialized sets supporting GPT-4o Advanced Voice Mode and Gemini 2.0 Flash Native Speech, aiming to push TTS capabilities further.

- They sought community input on any existing or upcoming audio references, hoping to expand the library of advanced speech data.

DSPy Discord

- Fudan's Foray into Test-Time Tactics: A recent paper from Fudan University examines how test-time compute shapes advanced LLMs, highlighting architecture, multi-step reasoning, and reflection patterns.

- The findings also outline avenues for building intricate reasoning systems using DSPy, making these insights relevant for AI engineers aiming for higher-level model behaviors.

- Few-Shot Fun with System Prompts: Participants questioned how to system prompt the LLM to yield few shot examples, aiming to boost targeted outputs.

- They stressed the importance of concise prompts and direct instructions, citing this as a practical way to elevate models’ responsiveness.

- Docstring Dividends for Mipro: A suggestion arose to embed extra docstrings in the signature or use a custom adapter for improved clarity in classification tasks.

- Mipro leverages such docstrings to refine labels, allowing users to specify examples and instructions that enhance classification accuracy.

- DSPy’s Daring Classification Moves: Contributors showcased how DSPy eases prompt optimization in classification, linking a blog post on pipeline-based workflows.

- They also mentioned success upgrading a weather site via DSPy, praising its direct approach to orchestrating language models without verbose prompts.

- DSPy Show & Tell in 34 Minutes: Someone shared a YouTube video featuring eight DSPy examples boiled down to just 34 minutes.

- They recommended it as a straightforward way to pick up DSPy’s features, noting that it simplifies advanced usage for both new and seasoned users.

LLM Agents (Berkeley MOOC) Discord

- Peer Project Curiosity Continues: Attendees requested a central repository to see others' LLM Agents projects, but no official compilation is available due to participant consent concerns.

- Organizers might reveal top entries, offering a glimpse into the best submissions from the course.

- Quiz 5 Cutoff Conundrum: Participants flagged that Quiz 5 on Compound AI Systems closed prematurely, preventing some from completing it in time.

- One person suggested re-opening missed quizzes for thorough knowledge checks, pointing out confusion around course deadlines.

- Certificate Declarations Dealt a Deadline: A user lamented missing the certificate declaration form after finishing quizzes and projects, losing eligibility for official recognition.

- Course staff clarified that late submissions won't be considered, and forms will reopen only after certificates release in January.

MLOps @Chipro Discord

- Clustering Continues to Command: Despite the rise of LLMs, many data workers confirm that search, time series, and clustering remain widely used, with no major shift to neural solutions so far.

- They cited these core methods as essential for data exploration and predictions, keeping them on par with more advanced ML approaches.

- Search Stays Stalwart: Discussions show that core search methods remain mostly untouched, with minimal LLM influence in RAG or large-scale indexing strategies.

- Members noted that many established services see no reason to upend proven search pipelines, leading to a slow adoption of new language-model-based systems.

- Mini Models Master NLP: A discussion revealed that in certain NLP tasks, simpler approaches like logistic regression sometimes outperform large LLMs.

- Attendees observed that while LLMs can be helpful in many areas, there are cases where old-school classifiers still yield better outcomes.

- LLM Surge Sparks New Solutions: Participants reported an uptick of LLM usage in emerging products, offering different directions for software development.

- Others still rely on well-established methods, highlighting a divide between novelty-driven projects and more stable ML implementations.

Torchtune Discord

- Wandb Profile Buzz: Members discussed using Wandb for profiling but noted one private codebase is unrelated to Torchtune, while a potential branch could still benefit from upcoming benchmarks.

- Observers remarked that this profiling discussion may pave the way for performance insights once Torchtune integrates it more tightly.

- Torch Memory Maneuvers: Users noted that Torch cut memory usage by skipping certain matrix materializations during cross-entropy compilation, emphasizing the role of chunked_nll for performance gains.

- They highlighted that this reduction potentially addresses GPU bottlenecks and enhances efficiency without major code refactoring.

- Differential Attention Mystery: A concept known as differential attention, introduced in an October arXiv paper, has not appeared in recent architectures.

- Attendees suggested that it might be overshadowed by other methods or simply did not deliver expected results in real-world tests.

- Pre-Projection Push in Torchtune: One contributor shared benchmarks showing chunking pre projection plus fusing matmul with loss improved performance in Torchtune, referencing their GitHub code.

- They reported cross-entropy as the most efficient option for memory and time under certain gradient sparsity conditions, underscoring the importance of selective optimization.

Axolotl AI Discord

- No relevant AI topic identified #1: No technical or new AI developments emerged from these messages, focusing instead on Discord scam/spam notifications.

- Hence, there is no content to summarize regarding model releases, benchmarks, or novel tooling.

- No relevant AI topic identified #2: The conversation only addressed a typo correction amid server housekeeping updates.

- No deeper AI or developer-focused details were noted, preventing further technical summary.

Mozilla AI Discord

- Common Voice AMA Leaps Into 2025: The project is hosting an AMA in their newly launched Discord server to reflect on progress and spark conversation on future voice tech.

- They aim to tackle questions about Common Voice and outline next steps after the 2024 review.

- Common Voice Touts Openness in Speech Data: Common Voice gathers extensive public voice data to create open speech recognition tools, championing collaboration for all developers.

- Voice is natural, voice is human, captures the movement’s goal to make speech technology accessible well beyond private labs.

- AMA Panel Packs Expertise: EM Lewis-Jong (Product Director), Dmitrij Feller (Full-Stack Engineer), and Rotimi Babalola (Frontend Engineer) will field questions on the project's achievements.

- A Technology Community Specialist will steer the discussion to highlight the year’s advancements and the vision forward.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!