[AINews] Pixtral 12B: Mistral beats Llama to Multimodality

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Vision Language Models are all you need.

AI News for 9/10/2024-9/11/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (216 channels, and 3870 messages) for you. Estimated reading time saved (at 200wpm): 411 minutes. You can now tag @smol_ai for AINews discussions!

Late last night Mistral was back to its old self - unlike Mistral Large 2 (our coverage here), Pixtral was released as a magnet link with no accompanying paper or blogpost, ahead of the Mistral AI Summit today celebrating the company's triumphant first year.

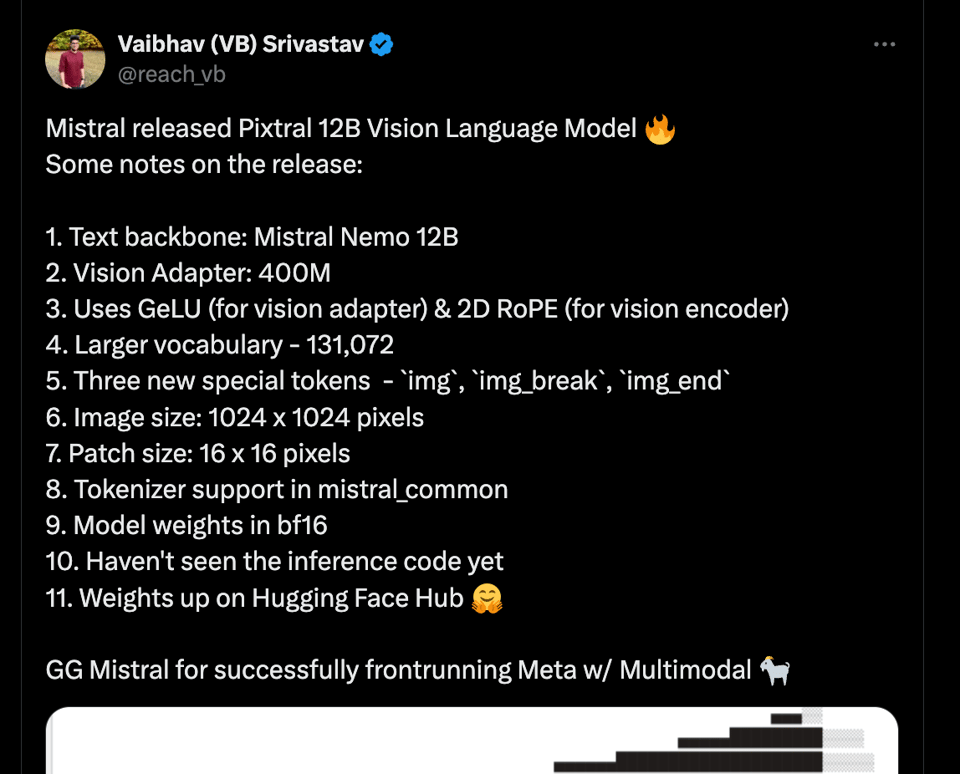

VB of Huggingface had the best breakdown:

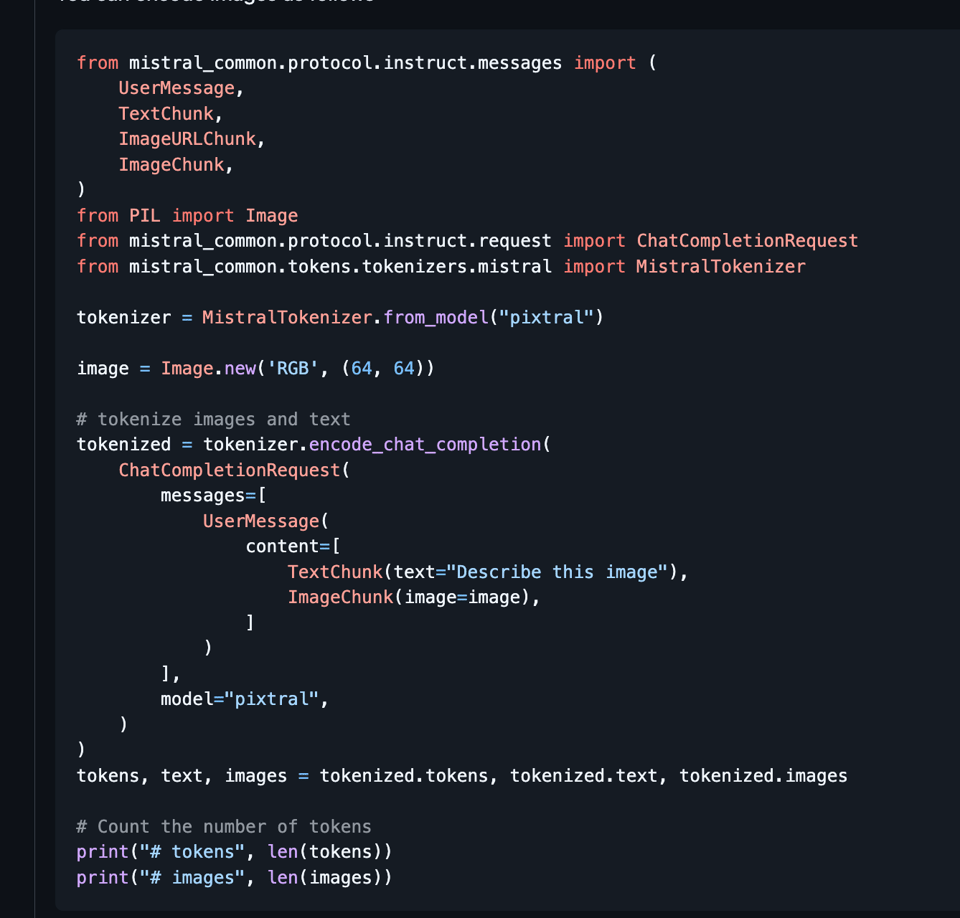

VB rightfully points out that Mistral beat Meta to releasing an open-weights multimodal model. You can see the new ImageChunk API in the mistral-common update:

More hparams are here for those interested in the technical details.

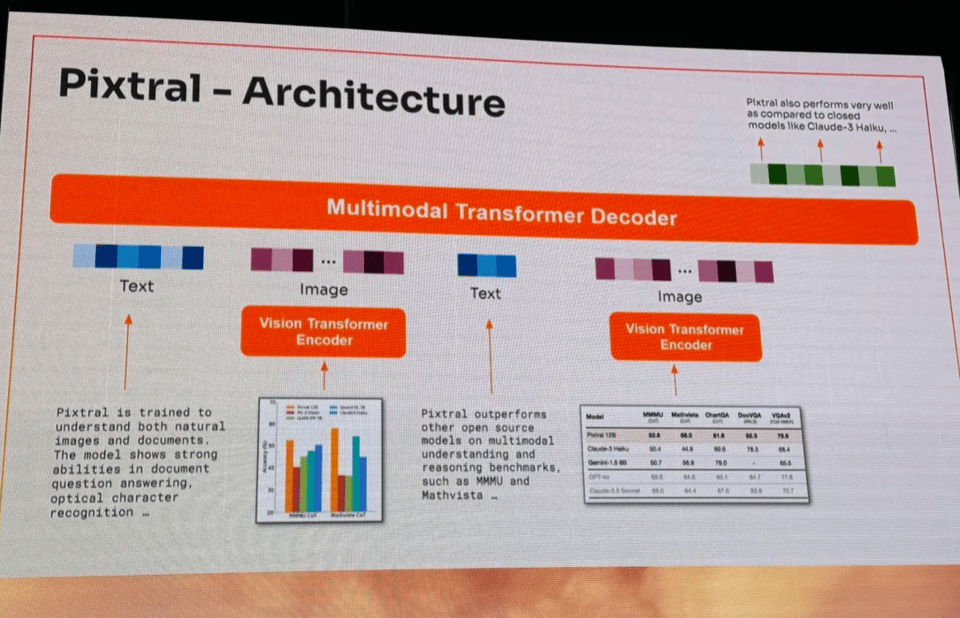

At the Summit, Devendra Chaplot shared more details on the architecture (designed for arbitrary sizes and interleaving)

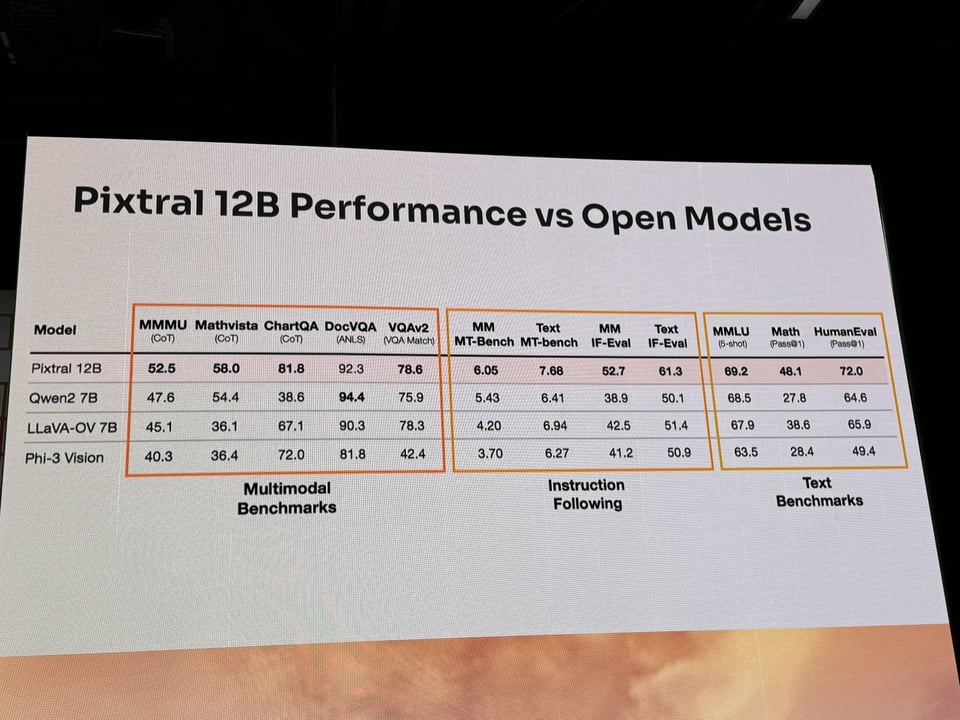

together with impressive OCR and screen understanding examples (with mistakes!) and favorable benchmark performance vs open model alternatives (though some Qwen and Gemini Flash 8B numbers were off):

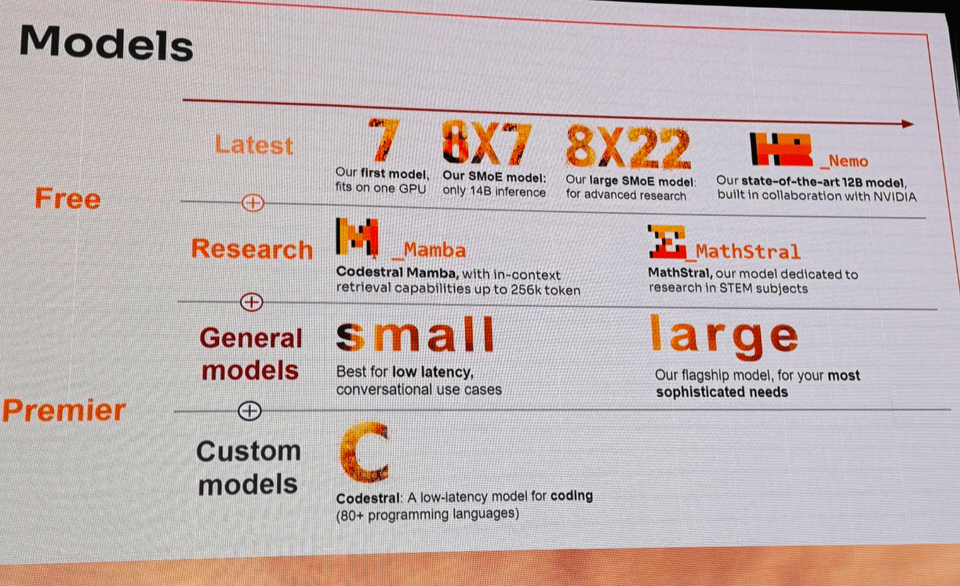

Still an extremely impressive feat and a well-deserved victory lap for Mistral, who also presented their model priorities and portfolio.

The Table of Contents and Channel Summaries have been moved to the web version of this email.

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Updates and Benchmarks

- Arcee AI's SuperNova: @_philschmid announced the release of SuperNova, a distilled reasoning Llama 3.1 70B & 8B model. It outperforms Meta Llama 3.1 70B instruct across benchmarks and is the best open LLM on IFEval, surpassing OpenAI and Anthropic models.

- DeepSeek-V2.5: @rohanpaul_ai reported that the new DeepSeek-V2.5 model scores 89 on HumanEval, surpassing GPT-4-Turbo, Opus, and Llama 3.1 in coding tasks.

- OpenAI's Strawberry: @rohanpaul_ai shared that OpenAI plans to release Strawberry as part of its ChatGPT service in the next two weeks. However, @AIExplainedYT noted conflicting reports about its capabilities, with some claiming it's "a threat to humanity" while early testers suggest "its slightly better answers aren't worth the 10 to 20 second wait".

AI Infrastructure and Deployment

- Anthropic Workspaces: @AnthropicAI introduced Workspaces to the Anthropic Console, allowing users to manage multiple Claude deployments, set custom spend or rate limits, group API keys, and control access with user roles.

- SambaNova Cloud: @AIatMeta highlighted that SambaNova Cloud is setting a new bar for inference on 405B models, available for developers to start building today.

- Groq Performance: @JonathanRoss321 claimed that Groq set a new speed record, with plans to improve further.

AI Development Tools and Frameworks

- LangChain Academy: @LangChainAI launched their first course on Introduction to LangGraph, teaching how to build reliable AI agents with graph-based workflows.

- Chatbot Arena Update: @lmsysorg added a new "Style Control" button to their leaderboard, allowing users to apply it to Overall and Hard Prompts to see how rankings shift.

- Hugging Face Integration: @multimodalart shared that it's now easy to add images to the gallery of LoRA models on Hugging Face.

AI Research and Insights

- Sigmoid Attention: @rohanpaul_ai discussed a paper from Apple proposing Flash-Sigmoid, a hardware-aware and memory-efficient implementation of sigmoid attention, yielding up to a 17% inference kernel speed-up over FlashAttention-2 on H100 GPUs.

- Mixture of Vision Encoders: @rohanpaul_ai shared research on enhancing MLLM performance across diverse visual understanding tasks using a mixture of vision encoders.

- Citation Generation: @rohanpaul_ai reported on a new approach for citation generation with long-context QA, boosting performance and verifiability.

Industry News and Trends

- Klarna's Tech Stack Change: @bindureddy noted that Klarna shut down Salesforce and Workday, replacing them with a simpler tech stack created by AI, potentially 10x cheaper to run than traditional SaaS applications.

- AI Influencer Controversy: @corbtt reported on the Reflection-70B model controversy, stating that after investigation, they do not believe the model that achieved the claimed benchmarks ever existed.

- Mario Draghi's EU Report: @ylecun shared an analysis of Europe's stagnant productivity and ways to fix it by Mario Draghi, highlighting the competitiveness gap between the EU and the US.

AI Reddit Recap

/r/LocalLlama Recap

apologies, our pipeline had issues today. Fixing.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

AI Research and Techniques

- Lipreading with AI: A video demonstrating AI-powered lipreading technology sparked discussions about its potential applications and privacy implications. Some commenters expressed concerns about mass surveillance and deepfake potential, while others saw benefits for accessibility. Source

- China refuses to sign AI nuclear weapons ban: China declined to sign an agreement banning AI from controlling nuclear weapons, raising concerns about the future of AI in warfare. The article notes China wants to maintain a "human element" in such decisions. Source

- Driverless Waymo vehicles show improved safety: A study found that driverless Waymo vehicles get into far fewer serious crashes than human-driven ones, with most crashes being the fault of human drivers in other vehicles. This highlights the potential safety benefits of autonomous driving technology. Source

AI Model Developments and Releases

- OpenAI's GPT-4.5 "Strawberry": Reports suggest OpenAI may release a new text-only AI model called "Strawberry" within two weeks. The model allegedly takes 10-20 seconds to "think" before responding, aiming to reduce errors. However, some testers found the improvements underwhelming compared to GPT-4. Source

- OpenAI research lead departure: A key OpenAI researcher involved in GPT-4 and GPT-5 development has left to start their own company, sparking discussions about talent retention and competition in the AI industry. Source

- Flux fine-tuning improvements: Developers have made progress in fine-tuning the Flux AI model by targeting specific layers, potentially improving training speed and inference quality. This demonstrates ongoing efforts to optimize AI model performance. Source

AI in Entertainment and Media

- James Earl Jones signs over Darth Vader voice rights: Actor James Earl Jones has signed over rights for AI to recreate his iconic Darth Vader voice, highlighting the growing use of AI in entertainment and raising questions about the future of voice acting. Source

- Domo AI video upscaler launch: Domo AI has launched a fast video upscaling tool that can enhance videos up to 4K resolution, showcasing advancements in AI-powered video processing. Source

AI Industry and Research Trends

- Sergey Brin's AI focus: Google co-founder Sergey Brin stated he is working at Google daily due to excitement about recent AI progress, indicating the high level of interest and investment in AI from tech industry leaders. Source

- Public perception of AI job displacement: A meme post sparked discussion about public attitudes towards AI potentially replacing jobs, highlighting the complex emotions and concerns surrounding AI's impact on employment. Source

AI Discord Recap

A summary of Summaries of Summaries by GPT4O-Aug (gpt-4o-2024-08-06)

1. Model Performance and Benchmarking

- Pixtral 12B Outshines Competitors: Pixtral 12B from Mistral demonstrated superior performance over models like Phi 3 and Claude Haiku in OCR tasks, showcased at the Mistral summit.

- Live demos highlighted Pixtral's flexibility in image size handling, sparking discussions on its accuracy compared to rivals.

- Llama-3.1-SuperNova-Lite Excels in Math: Llama-3.1-SuperNova-Lite outperformed Hermes-3-Llama-3.1-8B in mathematical tasks, maintaining accuracy in calculations like Vedic multiplication.

- The model's superior handling of numbers was noted, although both models faced struggles, with SuperNova-Lite showing better numeric integrity.

2. AI and Multimodal Innovations

- Mistral's Pixtral 12B Vision Model: Pixtral 12B, a vision multimodal model, was launched by Mistral, optimized for single GPU usage with 22 billion parameters.

- Though limited to a 4K context size, expectations are high for a long context model by November, enhancing multimodal processing capabilities.

- Hume AI's Empathic Voice Interface 2: Hume AI unveiled the Empathic Voice Interface 2 (EVI 2), merging language and voice to enhance emotional intelligence applications.

- The model is now available, inviting users to create applications requiring deeper emotional engagement, marking advancements in voice AI.

3. Software Engineering and AI Collaboration

- SWE-bench Highlights GPT-4's Efficiency: SWE-bench results show GPT-4 outperforming GPT-3.5 in sub-15 minute tasks, demonstrating enhanced efficiency without a human baseline for comparison.

- Despite improvements, both models falter on tasks exceeding four hours, suggesting limits in problem-solving capabilities.

- Challenges in AI and Software Engineering Integration: Discussions on AI's integration with software engineering reflect growing interest, with AI models showing promise but lacking nuanced human insights.

- AI's role in software engineering tasks is burgeoning, yet it struggles to match seasoned engineers in effectiveness and insight.

4. Open-Source AI Tools and Frameworks

- Modular's Mojo 24.5 Release Anticipation: Anticipation builds for the Mojo 24.5 release, expected within a week, as community meetings discuss resolving clarity issues in interfaces.

- Users eagerly await improved communication on product timelines to prevent misunderstandings and ensure readiness for changes.

- OpenRouter Enhances Programming Tool Integration: OpenRouter offers cost-effective alternatives to Claude API, emphasizing centralized experiments with multiple models.

- Discussions highlight the bypassing of initial rate limits and lower costs, making it a preferred choice for developers.

PART 1: High level Discord summaries

Modular (Mojo 🔥) Discord

- Mojo User Feedback Opportunities: The team is actively seeking users who haven't interacted with Magic to provide feedback during a 30-minute call, offering exclusive swag incentives; interested parties can book a slot here. Inquiries about future swag availability were positively received, indicating potential for broader access.

- Members expressed interest in a possible merch store for more swag options, reflecting the community's enthusiasm for additional engagement opportunities.

- Countdown to Mojo 24.5 Release: Anticipation is building for the upcoming Mojo 24.5 release, which is expected within a week, as discussed in recent community meetings regarding conditional trait conformance that led to user confusion. Members are particularly eager about resolving clarity and visibility issues related to interfaces in complex systems.

- Discussions highlighted the need for better communication on product timelines to prevent misunderstandings and ensure users are well-prepared for the changes.

- Concerns Over Mojo's Copy Behavior: Members voiced worries over Mojo's implicit copy behavior, especially with the `owned` argument convention, which can lead to unplanned copies of large data structures. Suggestions to establish explicit copying as the default option are under consideration for users transitioning from languages like Python.

- This led to further debate on how different programming languages manage copying, with users advocating for more transparency in how data is handled.

- Ownership Semantics Create Confusion: The ownership semantics in Mojo sparked a discussion around their potential for creating unpredictable changes in function behavior due to implicit copies, described as 'spooky action at a distance'. There's a call for better clarity in API changes and stricter regulations regarding the `ExplicitlyCopyable` trait to prevent unintended issues such as double frees.

- Several members underscored the importance of documentation and community guidelines to help developers navigate these complexities more effectively.

- Mojodojo.dev Gains Attention: The community highlighted the open-source Mojodojo.dev, initially created by Jack Clayton, as a crucial educational resource for Mojo. Members expressed a desire to enhance the platform and were invited to contribute content centered on projects built using Mojo.

- Caroline Frasca emphasized the importance of expanding the blog and YouTube channel content to better showcase projects and resources available for Mojo developers.

Unsloth AI (Daniel Han) Discord

- Mistral's Pixtral Model Emerges: Mistral launched Pixtral 12B, a vision multimodal model featuring 22 billion parameters optimized to run on a single GPU, though it has a limited 4K context size.

- A full long context model is expected by November, raising expectations for upcoming features in multimodal processing.

- Gemma 2 Outshines Llama 3.1: Gemma 2 consistently outperforms Llama 3.1 in multilingual tasks, particularly excelling in languages like Swedish and Korean.

- Despite the focus on Llama 3, users have acknowledged Gemma 2’s strengths in advanced language tasks.

- Training Efficiency with Smaller Datasets: Users find that smaller, diverse datasets significantly cut down training loss during model optimization.

- Emphasizing quality over quantity, they noted improvements in outcomes when datasets are well-curated and less homogeneous.

- Unsloth Support for Flash Attention 2: Members are integrating Flash Attention 2 with Gemma 2 but have run into compatibility issues.

- Despite challenges, there is optimism that final adjustments will resolve conflicts and enhance performance.

- Tuning Challenges with LoRA on phi-3.5: A user reported that loss stopped improving when applying LoRA to a phi-3.5 model, after initially dropping from 1 to 0.4.

- Recommendations included experimenting with different alpha values to optimize performance further, given the complexities in tuning phi models.
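For context on why alpha came up in the LoRA item above: in the standard LoRA formulation, the low-rank update is scaled by alpha / r before it is added to the frozen weight, so changing alpha changes how strongly the adapter affects the model. The toy below is a pure-Python sketch of that scaling only. The matrices and helper names are invented for illustration and are not taken from the Discord thread or from Unsloth.

```python
def lora_scale(alpha: float, rank: int) -> float:
    """Scaling factor applied to the low-rank update in standard LoRA."""
    return alpha / rank


def matmul(a, b):
    """Plain nested-list matrix multiply (illustration only)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, b, a, alpha, rank):
    """Return W + (alpha / rank) * (B @ A) for toy nested-list matrices."""
    scale = lora_scale(alpha, rank)
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Hypothetical 2x2 frozen weight with a rank-1 adapter.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]  # shape (2, 1)
A = [[0.5, 0.5]]    # shape (1, 2)

for alpha in (1, 2, 4):
    # Larger alpha means a larger effective update for the same A and B.
    print(alpha, lora_effective_weight(W, B, A, alpha=alpha, rank=1))
```

Because the scale is alpha / r, raising the rank without raising alpha shrinks the effective update, which is one reason alpha is usually tuned together with the rank.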

OpenAI Discord

- SWE-bench shows GPT-4's prowess over GPT-3.5: The SWE-bench performance indicates that GPT-4 significantly outperforms GPT-3.5, especially in sub-15 minute tasks, marking enhanced efficiency.

- However, the absence of a human baseline complicates the evaluation of these outcomes against human engineers.

- GameNGEN pushes boundaries with real-time simulations: GameNGEN impressively simulates the game DOOM in real-time, opening avenues for world modeling applications.

- Despite advancements, it still relies on existing game mechanics, raising questions about the originality of 3D environments.

- GPT-4o trumps GPT-3.5 in benchmarks: GPT-4o boasts an 11x improvement over GPT-3.5 when tackling simpler tasks in the SWE-bench framework.

- Nonetheless, both models falter on tasks exceeding four hours, revealing limits to their problem-solving capabilities.

- AI faces challenges in software engineering collaboration: Increasing discussions revolve around AI's integration with software engineers for benchmark tasks, reflecting a burgeoning interest.

- While AI holds promise, it lacks the nuanced insight and effectiveness of seasoned human engineers.

- GAIA benchmark redefines AI difficulty standards: The GAIA benchmark tests AI systems rigorously, while allowing humans to score 80-90% on challenging tasks, a notable distinction from conventional benchmarks.

- This suggests a need for re-evaluation as many existing benchmarks grow increasingly unmanageable even for skilled practitioners.

HuggingFace Discord

- DeepSeek 2.5 merges strengths with 238B MoE: The release of DeepSeek 2.5 integrates features from DeepSeek 2 Chat and Coder 2, featuring a 238B MoE model with a 128k context length and new coding functionalities.

- Function calling and FIM completion offer groundbreaking new standards for chat and coding tasks.

- AI Revolutionizes Healthcare: AI has transformed healthcare by enhancing diagnostics, enabling personalized medicine, and speeding up drug discovery.

- Integrating wearable devices and IoT health monitoring facilitates early disease detection.

- Korean Lemmatizer seeks AI Boost: A member developed a Korean lemmatizer and is seeking ways to utilize AI to resolve word ambiguities.

- They expressed hope for advancements in the ecosystem for better solutions in 2024.

- CSV only provides IDs for image loading: In discussions on image loading, it was noted that CSV files merely contain image IDs, necessitating fetching images or pre-splitting them into directories.

- This method might slightly increase latency compared to creating a DataLoader object from organized folders.

- Multi-agent systems enhance performance: Transformers now support Multi-agent systems, allowing agents to collaborate on tasks, improving overall efficacy in benchmarks.

- This collaborative approach enables specialization on sub-tasks, increasing efficiency.
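On the CSV image-loading item above: when a CSV holds only image IDs, one common pattern is to resolve each ID to a file path up front and open the image lazily when a sample is requested. The sketch below shows just the ID-to-path step using the standard library. The column names (`image_id`, `label`), the `.jpg` extension, and the `images` directory are assumptions for illustration; the discussion did not specify them.

```python
import csv
import io
from pathlib import Path


def load_image_index(csv_text, image_dir, id_column="image_id",
                     label_column="label", ext=".jpg"):
    """Map each CSV row to an (image_path, label) pair without opening any image."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return [
        (Path(image_dir) / f"{row[id_column]}{ext}", row[label_column])
        for row in rows
    ]


# Hypothetical CSV contents; in practice this would be read from a file.
SAMPLE = "image_id,label\n0001,cat\n0002,dog\n"

index = load_image_index(SAMPLE, "images")
for path, label in index:
    print(path, label)
```

A dataset class would typically keep `index` in memory and open `path` inside its item accessor, so the per-sample cost is one extra file lookup rather than a full pre-split into folders.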

aider (Paul Gauthier) Discord

- Optimizing Aider's Workflow: Users shared how the "ask first, code later" workflow with Aider enhances clarity in code implementation, particularly using a plan model.

- This approach improves context and reduces reliance on the `/undo` command.

- The Benefits of Prompt Caching: Aider's prompt caching feature has been shown to cut token usage by 40% through strategic caching of key files.

- This system retains elements such as system prompts, helping to minimize costs during interactions.

- Comparing Aider with Other Tools: Users contrasted Aider with other tools like Cursor and OpenRouter, highlighting Aider's unique features that boost productivity.

- Smart functionalities, like auto-generating aliases and cheat sheets from zsh history, underscore Aider's capabilities.

- Exploring OpenRouter Benefits: Members pointed out the advantages of using OpenRouter over the Claude API, emphasizing cost reductions and the bypassing of initial rate limits.

- OpenRouter facilitates centralized experiments with multiple models, making it a preferred choice.

- Mistral Launches Pixtral Model Torrent: Mistral released the Pixtral (12B) multimodal model as a torrent, suitable for image classification and text generation.

- The download is available via the magnet link `magnet:?xt=urn:btih:7278e625de2b1da598b23954c13933047126238a` and supports frameworks like PyTorch and TensorFlow.
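For anyone scripting the Pixtral download mentioned above, a magnet link is just a URI whose `xt` parameter carries the torrent's info hash. Below is a minimal standard-library sketch that extracts that hash from the link quoted in this section. The `info_hash` helper is a name made up for this example, and it only parses the URI; it does not download anything.

```python
from urllib.parse import urlparse, parse_qs

# Magnet link as quoted in the recap above.
MAGNET = "magnet:?xt=urn:btih:7278e625de2b1da598b23954c13933047126238a"


def info_hash(magnet_uri: str) -> str:
    """Return the BitTorrent info hash from a magnet URI's xt parameter."""
    parsed = urlparse(magnet_uri)
    if parsed.scheme != "magnet":
        raise ValueError("not a magnet URI")
    prefix = "urn:btih:"
    for xt in parse_qs(parsed.query).get("xt", []):
        if xt.startswith(prefix):
            return xt[len(prefix):].lower()
    raise ValueError("no BitTorrent info hash found")


print(info_hash(MAGNET))
```

The 40 hexadecimal characters correspond to a 160-bit SHA-1 info hash, which a torrent client uses to locate peers and verify the download.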

LM Studio Discord

- Consistency is Key in AI Images: Users are exploring techniques to maintain character consistency across AI-generated images, even as outfits or backgrounds change.

- The aim is to ensure that the character's facial features and body remain recognizable across different panels.

- Dueling GPUs in Token Processing: Discussions on token processing revealed a user achieving 45 tokens/s on a 6900XT, highlighting discrepancies across GPU models.

- Several members suggested flashing the BIOS to enhance performance while expressing frustration over unexpected results.

- Meet-Up for LM Studio Enthusiasts: LM Studio users are organizing a meet-up in London, focusing on prompt engineering with discussions open for all users.

- Participants are encouraged to find non-students with laptops for a productive exchange.

- Spotlight on RTX 4090D: Discussion centered around the RTX 4090D, a China-exclusive GPU noted for having more VRAM but fewer CUDA cores compared to its counterparts.

- Despite lower gaming performance, it might be a strategic choice for AI workloads due to its memory capacity.

- Surface Studio Pro: Upgrade Frustrations: Users expressed frustration over the Surface Studio Pro's limited upgrade options, debating enhancements like eGPU or SSD.

- Suggestions included investing in a dedicated AI rig rather than upgrading the laptop.

Stability.ai (Stable Diffusion) Discord

- Stable Diffusion Models Battle it Out: Users showcased the performance differences between older models like '1.5 ema only' and newer options, emphasizing advancements in image generation quality.

- The community noted that the RTX 4060 Ti outperforms the 7600 and Quadro P620 for AI tasks, highlighting the importance of GPU selection.

- Resolutions Matter in Image Generation: Optimal generation resolutions, such as 512x512 for earlier models, were recommended to minimize artifacts when upscaling.

- Users shared effective workflows suggesting that starting with lower resolutions enhances final output quality.

- AI Models and Their Familiarity: Concerns emerged regarding the similarity of various LLMs due to shared training data and techniques impacting originality.

- However, some noted that newer models have significantly improved aspects like generating realistic hands, indicating promising advancements.

- GPU Showdown for AI Training: Community members debated NVIDIA's GPUs being the preferred choice for AI model training, mainly due to CUDA compatibility.

- The consensus leaned towards favoring higher-end GPUs with 20GB of VRAM for superior performance, even if lower VRAM options could work for specific models.

- Reflection LLM Under Scrutiny: The Reflection LLM, touted for its capabilities of 'thinking' and 'reflecting,' faced criticism regarding its actual performance compared to claims.

- Concerns about disparities between the API and open-source versions fueled skepticism among users about its effectiveness.

OpenRouter (Alex Atallah) Discord

- Novita Endpoints Encounter Outage: All Novita endpoints faced an outage, leading to a 403 status error for filtering requests without fallbacks.

- Once the issue was resolved, normal functionality resumed for all users.

- Programming Tool Suggestions Ignite Discussion: A user explored using AWS Bedrock with LiteLLM for rate management, prompting additional suggestions like Aider and Cursor among users.

- Opinions varied on the effectiveness of the tools, stirring a lively debate about user experience and functionality.

- Speculations on Hermes Model Pricing: Users expressed uncertainty if Hermes 3 would remain free, with projections of a potential $5/M charge for updated endpoints.

- This led to discussions about expected performance improvements, alongside mention of ongoing free alternatives possibly remaining available.

- Insights into Pixtral Model's Capabilities: Pixtral 12B may primarily accept image inputs to produce text outputs, suggesting limited text processing capabilities.

- The model is expected to perform similarly to LLaVA, with a focus on specialized image tasks.

- Challenges Integrating OpenRouter with Cursor: Some users faced hurdles when using OpenRouter with Cursor, addressing configuration adjustments needed to activate model functionalities.

- Contributors highlighted existing issues on the Cursor repository, particularly relevant to hardcoded routing within specific models.

CUDA MODE Discord

- Optimizing Matmul with cuDNN: Members discussed resources for various matmul algorithms like Grouped GEMM and Split K, with a recommendation to check out Cutlass examples.

- The focus remains on leveraging available optimization techniques for efficient matrix operations in machine learning.

- Neural Network Quantization Challenges: A member is re-implementing Post-Training Quantization and facing an accuracy drop during activation quantization, sharing insights on the torch forum.

- The community provided suggestions, emphasizing the importance of debugging for accuracy retention in quantized models.

- Exciting Developments in Multi-GPU Usage: Innovative ideas for Multi-GPU enhancements were shared, aiming to elongate context lengths and improve memory efficiency, with linked details.

- Participants are encouraged to pursue projects that optimize their use of resources while minimizing overhead.

- OpenAI RSUs and Market Insights: OpenAI employees discussed RSUs appreciating to 6-7x if not sold, sharing the complexities of secondary transactions that allow cashing out, with implications on future IPOs.

- Speculation on the impact of these secondary transactions on share pricing and valuation revealed insights into venture capital negotiations.

- FP6 Added to Main API: The addition of fp6 to the main README of the project was announced, leading to discussions about the integration challenges with BF16 and FP16.

- There is a recognized need for clarity among users to ensure efficient performance management across different precision types.
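As a quick illustration of the Split K idea from the matmul item above: the reduction dimension K is partitioned into slices, each slice produces a partial output matrix, and the partials are summed at the end. On a GPU the slices would be handled by separate thread blocks, which helps when M and N are small but K is large. The pure-Python sketch below only demonstrates the arithmetic; it is not CUTLASS code, and the function name is hypothetical.

```python
def matmul_split_k(a, b, splits):
    """Compute A @ B by splitting the K dimension into `splits` slices."""
    m, k, n = len(a), len(b), len(b[0])
    chunk = -(-k // splits)  # ceiling division: slice length along K

    partials = []
    for s in range(splits):
        lo, hi = s * chunk, min((s + 1) * chunk, k)
        # Each slice yields a full M x N partial result over its share of K.
        partials.append([
            [sum(a[i][p] * b[p][j] for p in range(lo, hi)) for j in range(n)]
            for i in range(m)
        ])

    # Final reduction: sum the partial results elementwise.
    return [[sum(p[i][j] for p in partials) for j in range(n)] for i in range(m)]


A = [[1, 2, 3, 4], [5, 6, 7, 8]]
B = [[1, 0], [0, 1], [1, 0], [0, 1]]

print(matmul_split_k(A, B, splits=2))
```

The result is the same for any number of splits; the trade-off is extra memory and a reduction pass for the partials in exchange for more parallel work.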

Interconnects (Nathan Lambert) Discord

- OpenAI Experiences Major Departures: Significant talent departures hit OpenAI as Alex Conneau announces his exit to start a new company, while Arvind shares excitement about joining Meta.

- Discussions hint that references to GPT-5 might indicate upcoming models, but skepticism lingers regarding these speculations.

- Meta's Massive AI Supercomputing Cluster: Meta approaches completion of a 100,000 GPU Nvidia H100 AI supercomputing cluster to train Llama 4, opting against proprietary Nvidia networking gear.

- This bold move underlines Meta's commitment to AI, particularly as competition escalates in the industry.

- Adobe's Generative Video Move: Adobe is set to launch its Firefly Video Model, marking substantial advancements since its rollout in March 2023, with integration into Creative Cloud features on the horizon.

- The beta availability later this year showcases Adobe's focus on generative AI-driven video production.

- Pixtral Model Surpasses Competitors: At the Mistral summit, it was reported that Pixtral 12B outperforms models like Phi 3 and Claude Haiku, noted for flexibility in image size and task performance.

- Live demos during the event revealed Pixtral's strong OCR capabilities, igniting debates on its accuracy compared to rivals.

- Surge AI's Contractual Challenges: Surge AI reportedly failed to deliver data to HF and Ai2 until faced with potential legal action, raising alarm about its reliability on smaller contracts.

- Concerns revolve around their lack of communication amidst delays, casting doubt on their prioritization.

Perplexity AI Discord

- Perplexity Pro Signup Campaign enters final stage: There are only 5 days left for campuses to secure 500 signups to unlock a free year of Perplexity Pro. Sign up at perplexity.ai/backtoschool to participate!

- The updated countdown timer, now at 05:12:11:10, amplifies this call to action—it's the final lap!

- Students face disparities with Perplexity offers: The student offer for a free month of Perplexity Pro is available, but it's limited to US students or specific campuses with enough signups.

- Concerns were voiced about the inequities faced by students from other countries, such as Germany, who also seek promotions.

- Excitement builds for new API features: Anticipation is high for new API functionalities during the upcoming dev day, particularly for 4o voice and image generation features.

- There's also a discussion on creating a hobby tier for users who need less than full pro access.

- Neuralink shares patient updates and SpaceX ambitions: Perplexity AI promoted a YouTube video detailing Neuralink's First Patient Update and SpaceX's target for Mars in 2026.

- The video offers insights into both projects and their ambitious goals for the future.

- Urgent support request from Bounce.ai for API issues: Aki Yu, CTO of Bounce.ai, reported an urgent issue with the Perplexity API impacting over 3,000 active users, stressing the need for immediate assistance.

- Despite reaching out for 4 months, Bounce.ai has yet to receive a response from the Perplexity team, highlighting potential limitations in support channels.

Nous Research AI Discord

- Llama-3.1-SuperNova-Lite excels in math: Members noted that Llama-3.1-SuperNova-Lite showcases superior handling of calculations like Vedic multiplication compared to Hermes-3-Llama-3.1-8B, maintaining accuracy.

- Despite both models struggling, SuperNova-Lite performed notably better in preserving numeric integrity.

- Model comparisons reveal performance gaps: Testing revealed that LLaMa-3.1-8B-Instruct struggled with mathematical tasks, while Llama-3.1-SuperNova-Lite achieved better results.

- A preference emerged for Hermes-3-Llama-3.1-8B, highlighting the discrepancies in their arithmetic capabilities.

- Quality Data enhances performance: Feedback across discussions emphasized that higher quality data significantly boosts model performance as parameters are scaled.

- This underscores the importance of using high-quality datasets for achieving optimal results with LLMs.

- Greener Pastures: Smaller Models for Simple Tasks: A member queried about models smaller than Llama 3.1 8B for basic tasks, mentioning Mistral 7B and Qwen2 7B as potential options.

- Discussions prompted requests for an updated list on models under 3B parameters, indicating community interest in efficiency.

- Desire for Updates on Spatial Reasoning Innovations: Curiosity arose about whether any revolutionary developments have been made in Spatial Reasoning and its allied areas.

- Members eagerly sought insights into the latest innovations that might reshape understanding in AI reasoning capabilities.

Latent Space Discord

- Mistral Showcases Pixtral 12B Model: At an invite-only conference, Mistral launched the Pixtral 12B model, outperforming competitors like Phi 3 and Claude Haiku, as noted by Mistral AI.

- This model supports arbitrary image sizes and interleaving, achieving notable benchmarks that were highlighted during the event featuring Jensen Huang.

- Klarna Cuts Ties with SaaS Providers: Klarna's CEO announced the company is firing its SaaS providers, including those once deemed irreplaceable, provoking discussions about potential operational risks, as detailed by Tyler Hogge.

- Alongside this, Klarna reportedly downsized its workforce by 50%, a decision likely driven by financial challenges.

- Jina AI Launches HTML to Markdown Models: Jina AI introduced two language models, reader-lm-0.5b and reader-lm-1.5b, optimized for converting HTML to markdown efficiently, offering multilingual support and robust performance (read more here).

- These models stand out by outperforming larger models while maintaining a significantly smaller size, streamlining accessible content conversion.

- Trieve Secures Funding Boost: Trieve AI successfully secured a $3.5M funding round led by Root Ventures, aimed at simplifying AI application deployment across various industries, as shared by Vaibhav Srivastav here.

- With the new funding, Trieve's existing systems now serve tens of thousands of users daily, indicating strong market interest.

- Hume Launches Empathic Voice Interface 2: Hume AI introduced the Empathic Voice Interface 2 (EVI 2), merging language and voice to enhance emotional intelligence applications (check it out).

- This model is now available for users eager to create applications that require deeper emotional engagement.

OpenInterpreter Discord

- Custom Python Code with Open Interpreter: A user inquired about utilizing specific Python code for sentiment analysis tasks in Open Interpreter, sparking interest in broader custom queries over databases.

- The community is eager for confirmation on the feasibility of involving various Python libraries like rich for formatting in terminal applications.

- Documentation Improvement Stirs Engagement: Feedback pointed out that while users find Open Interpreter appealing, the documentation lacks organization, hindering navigation.

- An offer was made to enhance documentation through collaborative efforts, encouraging pull requests for improvements.

- Early Access to Desktop App Approaches: Users are keen for details on the timeline for early access to the upcoming desktop app, which aims to simplify installation processes.

- The community anticipates additional beta testers within the next couple of weeks, aiming to enhance the user experience.

- Refunds and Transition from 01 Light: Discussions erupted around refunds for the discontinued 01 light, leading to a leaked tweet confirming the shift to a new free 01 app.

- Open-sourcing of manufacturing materials is also on the table, coinciding with the 01.1 update for further development.

- Highlighting RAG Context from JSONL Data: A preliminary test run shows promise in offering context from JSONL data designed for RAG, primarily focused on news RSS feeds.

- The tutorial creation will follow the completion of NER processes and data loading into Neo4j, enhancing usability for AI applications.
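As a rough illustration of the JSONL idea above, the sketch below parses one JSON object per line and keeps the items that mention a query term, which is the simplest possible way to hand context to a RAG prompt. The field names `title` and `summary`, the sample records, and both helper functions are hypothetical; the member's actual schema and pipeline were not shared.

```python
import json

# Hypothetical sample: one JSON object per line, as in a JSONL export of RSS items.
SAMPLE_JSONL = """\
{"title": "Mistral releases Pixtral 12B", "summary": "An open-weights vision language model."}
{"title": "Klarna drops SaaS vendors", "summary": "The CEO described the plan publicly."}
"""


def load_jsonl(text):
    """Parse JSONL text into a list of dicts, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def build_context(records, query, limit=3):
    """Return up to `limit` records whose title or summary mentions the query."""
    needle = query.lower()
    hits = [
        r for r in records
        if needle in r["title"].lower() or needle in r["summary"].lower()
    ]
    return "\n".join(f"{r['title']}: {r['summary']}" for r in hits[:limit])


records = load_jsonl(SAMPLE_JSONL)
context = build_context(records, "pixtral")
print(context)
```

A real setup would likely replace the substring match with embedding search or, as the discussion suggests, entity lookups in Neo4j once the NER step is done.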

Cohere Discord

- Cohere's Ticket Support Integration: A member plans to integrate Cohere with Link Safe for ticket support and text processing, expressing excitement about the collaboration.

- "I can’t wait to see how this enhances our current workflow!"

- Mistral launches Vision Model: Mistral introduced a new vision model, igniting interest about its capabilities and upcoming projects.

- Members speculated on the possibility of a vision model from C4AI, linking it to developments with Maya that need more time.

- Long-Term Need for Human Oversight: Members concurred that human oversight will remain crucial in the advancement of AI, advocating for a reliable approach over the pursuit of machine intelligence.

- "Let’s focus on making what we have reliable instead of chasing theoretical capabilities."

- Discord FAQ Bot Takes Shape: Efforts are underway to create a Discord FAQ bot for Cohere, streamlining communication within the community.

- The discussion also opened up possibilities for a virtual hack event, pushing for innovative ideas.

- Inquiry into Aya-101's Status: The question "Is Aya-101 end-of-life?" raised speculation about a transition to a new model that could outperform rivals.

- A member referred to it as a potential Phi-killer, stirring curiosity.

Eleuther Discord

- lm-evaluation-harness Guidance Request: A user is seeking help using lm-evaluation-harness to evaluate OpenAI's GPT-4o model against the SWE-bench dataset.

- They appreciate any guidance, indicating that practical advice could significantly aid their evaluation process.

- Pixtral Model Announcement: The community shared the newly released Pixtral-12b-240910 model checkpoint, hinting it is partially aligned with Mistral AI’s recent updates.

- Users can find download details and a magnet URI included in the release note along with a link to Mistral's Twitter.

- RWKV-7 Shows Promise: RWKV-7 is presented as a potential Transformer killer, featuring an identity-plus-low-rank transition matrix derived from DeltaNet.

- A related study on optimizing for sequence length parallelization is showcased on arXiv, enhancing the model's appeal.

- Multinode Training Pitfalls: A user expresses concerns during multinode training over slow Ethernet links, particularly regarding DDP performance between 8xH100 machines.

- Discussion suggests training may suffer from speed limitations, and utilizing DDP across nodes could be less efficient than anticipated.

- Dataset Chunking Practices: A member inquires if splitting datasets into 128-token chunks is standard, implying the decision may often stem from intuition rather than empirical studies.

- Responses indicate many practitioners might overlook the potential effects of chunking on model performance, highlighting a gap in understanding.
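For readers unfamiliar with the chunking practice raised above, here is a minimal sketch of splitting a token stream into fixed-size chunks. The 128 default mirrors the number in the question; the function name and the `drop_last` option are illustrative assumptions, not taken from any specific library.

```python
def chunk_tokens(token_ids, chunk_size=128, drop_last=False):
    """Split a flat list of token ids into consecutive chunks of `chunk_size`.

    The final chunk may be shorter; set `drop_last=True` to discard it.
    """
    chunks = [
        token_ids[i:i + chunk_size]
        for i in range(0, len(token_ids), chunk_size)
    ]
    if drop_last and chunks and len(chunks[-1]) < chunk_size:
        chunks.pop()
    return chunks


token_ids = list(range(300))  # stand-in for real tokenizer output
chunks = chunk_tokens(token_ids)
print([len(c) for c in chunks])  # [128, 128, 44]
```

Whether to drop, pad, or keep the short final chunk is exactly the kind of choice that tends to be made by intuition, which is the gap the thread points at.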

LlamaIndex Discord

- Maven Course on RAG in the LLM Era: Check out the Maven course titled Search For RAG in the LLM era, featuring a guest lecture with live coding walkthroughs.

- Participants can engage with code examples alongside industry veterans to enhance their learning experience.

- Quick Tutorial on Building RAG: A straightforward tutorial on building retrieval-augmented generation with LlamaIndex is now available.

- This tutorial focuses on implementing RAG technologies effectively.

- Kotaemon: Build a RAG-Based Document QA System: Learn to build a RAG-based document QA system using Kotaemon, an open-source UI for chatting with documents.

- The session covers setup for a customizable RAG UI and how to organize LLM & embedding models.

- Hands-On AI Scheduler Workshop: Join the workshop at AWS Loft on September 20th to build an AI Scheduler for smart meetings with Zoom, LlamaIndex, and Qdrant.

- Participants will create a RAG recommendation engine focused on meeting productivity using Zoom's transcription SDK.

- Exploring Task Queue Setup for Indexing: A discussion initiated about creating a task queue for building indexes using FastAPI and a Celery backend, focusing on database storage for files and indexing info.

- Participants were encouraged to check existing setups that might fulfill these requirements.

LangChain AI Discord

- POC Development for Query Generation: A member is working on a POC for query generation using LangGraph, facing challenges with increasing token sizes as table counts rise.

- They are utilizing RAG to create vector representations of schemas for query formation and hesitate to add more LLM calls.

- Launch of OppyDev's Major Update: The OppyDev team announced a significant update enhancing the AI-assisted coding tool's usability on both Mac and Windows, along with support for GPT-4 and Llama.

- Users can access one million free GPT-4 tokens through limited-time promo codes; details are available on request.

- Insights on Building RAG Applications: A discussion arose regarding the retention of new line characters from texts retrieved via a web loader in RAG applications before storing in a vector database.

- It was confirmed that retaining new line characters is acceptable, ensuring the text formatting remains intact.

- Real-time Code Review Features in OppyDev: The latest OppyDev update includes a color-coded, editable diff feature for real-time code change monitoring.

- This upgrade significantly enhances developers' ability to track and manage their coding modifications effectively.
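The query-generation item above describes retrieving schema representations so that only relevant tables reach the prompt. A minimal sketch of that idea follows, with plain word overlap standing in for vector similarity. The table names, schemas, and helper functions are all hypothetical; a real LangGraph setup would use an embedding model and a vector store instead.

```python
import re

# Hypothetical table schemas; in practice these would come from the database catalog.
SCHEMAS = {
    "orders": "orders(id, customer_id, total_amount, created_at)",
    "customers": "customers(id, name, email, country)",
    "inventory": "inventory(sku, warehouse, quantity)",
}


def words(text):
    """Lowercase alphabetic words in `text` (underscores act as separators)."""
    return set(re.findall(r"[a-z]+", text.lower()))


def top_schemas(question, schemas, k=2):
    """Rank tables by word overlap with the question and return the top k names."""
    q = words(question)
    ranked = sorted(
        schemas,
        key=lambda name: len(q & words(schemas[name])),
        reverse=True,
    )
    return ranked[:k]


question = "total amount of orders per customer country"
selected = top_schemas(question, SCHEMAS)
prompt_schema = "\n".join(SCHEMAS[name] for name in selected)
print(selected)
print(prompt_schema)
```

Because only the top-k schemas are placed in the prompt, token usage stays roughly constant as the number of tables grows, without adding another LLM call.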

Torchtune Discord

- Torchtune lacks FP16 support: A member highlighted that Torchtune does not support FP16, requiring extra work to maintain compatibility with mixed precision modules, while bf16 is seen as the superior alternative.

- This lack of support may pose problems for users operating with older GPUs.

- Qwen2 interface tokenization quirks: The Qwen2 interface allows `None` for `eos_id`, which leads to a check before adding it in the `encode` method, raising questions about its intentionality.

- A potential bug arises as another part of the code does not perform this check, indicating an oversight.

- Issues with None EOS ID handling: Concerns were raised about allowing `add_eos=True` with `eos_id` set to `None`, implying inconsistent behavior in the tokenization process within the Qwen2 model.

- This inconsistency could confuse users and disrupt expected functionality.

- Questions on padded_collate's efficacy: A member questioned the utility of padded_collate, noting that it isn't used anywhere while calling out a missing logic issue regarding input_ids and labels sequence lengths.

- This prompted follow-up inquiries about whether the padded_collate logic had been correctly incorporated into the ppo recipe.

- Clarifications needed on the PPO recipe: Discussion emerged around whether the `padded_collate` logic within the ppo recipe was complete, as a member indicated they had integrated some of it.

- This raised further points about the typical matching of lengths between input_ids and labels.
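To make the input_ids/labels length question concrete, here is a simplified sketch of what a padding collate step generally does. It is not Torchtune's `padded_collate`; it uses plain Python lists instead of tensors, and the function name and the padding id of 0 are assumptions. The -100 label fill matches the default `ignore_index` of PyTorch's cross-entropy loss.

```python
def padded_collate_sketch(batch, padding_idx=0, ignore_idx=-100):
    """Pad every sample's input_ids and labels to one shared length.

    input_ids are padded with `padding_idx`; labels are padded with
    `ignore_idx` so that padded positions do not contribute to the loss.
    """
    # Use the longest sequence across BOTH fields so they always end up aligned.
    max_len = max(
        max(len(s["input_ids"]), len(s["labels"])) for s in batch
    )
    input_ids = [
        s["input_ids"] + [padding_idx] * (max_len - len(s["input_ids"]))
        for s in batch
    ]
    labels = [
        s["labels"] + [ignore_idx] * (max_len - len(s["labels"]))
        for s in batch
    ]
    return {"input_ids": input_ids, "labels": labels}


batch = [
    {"input_ids": [1, 2, 3], "labels": [1, 2, 3]},
    {"input_ids": [4], "labels": [4]},
]
collated = padded_collate_sketch(batch)
print(collated)
```

Padding to the maximum over both fields is one way to guarantee matching shapes; a stricter variant could instead raise an error when a sample's input_ids and labels differ in length.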

DSPy Discord

- Sci Scope Launches for arXiv Insights: Sci Scope is a new tool that categorizes and summarizes the latest arXiv papers using LLMs, available for free at Sci Scope. Users can subscribe for a weekly summary of AI research, enhancing their literature awareness.

- A discussion arose about ensuring output veracity and reducing hallucinations in summaries, reflecting concerns regarding the reliability of AI-generated content.

- Customizing DSPy for Client Needs: A member queried about integrating client-specific customizations into DSPy-generated prompts for a chatbot, looking to avoid hard-coding client data. They considered a post-processing step for dynamic adaptations and solicited feedback on better implementation strategies.

- This exchange underscores the collaborative spirit within the group, as members actively support one another by sharing insights and solutions.

tinygrad (George Hotz) Discord

- Exploring Audio Models with Tinygrad: A user sought guidance on how to run audio models with tinygrad, specifically looking beyond the existing Whisper example provided in the repo.

- This inquiry spurred suggestions on potential starting points for exploring audio applications in tinygrad.

- Philosophical Approach to Learning: A member quoted, 'The journey of a thousand miles begins with a single step,' emphasizing the importance of intuition in the learning process.

- This sentiment encouraged a reflective exploration of resources within the community.

- Linking to Helpful Resources: Another member shared a link to the smart questions FAQ by Eric S. Raymond, outlining etiquette and strategies for seeking help online.

- This resource serves as a guide for crafting effective queries and maximizing community assistance.

OpenAccess AI Collective (axolotl) Discord

- Mistral's Pixtral Sets Multi-modal Stage: Work is advancing on Mistral's Pixtral to incorporate multi-modal support, echoing recent developments in AI capabilities.

- It's a prescient move considering today's advancements.

- Axolotl Project Gets New Message Structure: A pull request for a new message structure in the Axolotl project aims to enhance how messages are represented, favoring improved functionality.

- For insights, see the details of the New Proposed Message Structure.

- LLM Models Tested for Speed & Performance: A recent YouTube video evaluates the speed and performance of leading LLM models as of September 2024, focusing on tokens per second.

- The testing emphasizes latency and throughput, crucial metrics for any performance evaluation in production.
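For reference, tokens per second is simple arithmetic, though it depends on whether time to first token is included. The sketch below computes both variants; the numbers are made up for illustration and are not taken from the video.

```python
def tokens_per_second(num_tokens, seconds):
    """Throughput as generated tokens divided by elapsed seconds."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return num_tokens / seconds


# Hypothetical measurements for a single request.
output_tokens = 512
time_to_first_token = 0.5  # seconds of latency before streaming starts
total_time = 8.0           # seconds from request to final token

end_to_end_tps = tokens_per_second(output_tokens, total_time)
decode_tps = tokens_per_second(output_tokens, total_time - time_to_first_token)
print(f"end-to-end: {end_to_end_tps:.1f} tok/s, decode only: {decode_tps:.1f} tok/s")
```

Reporting the two figures separately keeps latency (time to first token) from being hidden inside a single throughput number.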

LAION Discord

- AI Developer Seeks Partner for NYX Model: An AI developer announced ongoing work on the NYX model, featuring over 600 billion parameters, and is actively looking for a collaborator.

- "Let’s chat!" was the invitation, aimed at anyone with AI expertise and a compatible timezone for effective collaboration.

- Inquiry on Training Large Models: A developer inquired about the training resources used for a 600B parameter model, pointing to Llama 3.1 405B, which was trained on roughly 15 trillion tokens, as a reference.

- Curiosity revolved around the data sourcing methodologies for such large models, indicating a keen interest in the underlying processes.

LLM Finetuning (Hamel + Dan) Discord

- Literal AI excels at usability: A user praised Literal AI for its intuitive interface, which enhances LLM applications' accessibility and user experience.

- This reflects a growing demand for user-friendly tools in the competitive landscape of LLM technologies.

- Observability boosts LLM lifecycle health: The significance of LLM observability was highlighted, as it empowers developers to rapidly iterate and handle debugging processes effectively.

- Utilizing logs can enhance smaller models' performance while simultaneously reducing expenses, driving efficient model management.

- Monitoring prompts prevents regressions: Continuous tracking of prompt performance is crucial for catching regressions before new prompt versions are deployed.

- This proactive evaluation safeguards LLM applications against potential failures and increases deployment confidence.

- LLM monitoring ensures production reliability: Robust logging and evaluation mechanisms are essential for monitoring LLM performance in production environments.

- Implementing effective analytics provides teams the capacity to maintain oversight and bolster application stability.

- Integrating with Literal AI is a breeze: Literal AI supports easy integrations across applications, allowing users to tap into the full LLM ecosystem.

- A self-hosted option is available, catering to users in the EU and those managing sensitive data.
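The prompt-regression point above can be sketched as a simple gate: score the current and candidate prompt versions on the same evaluation set and block deployment if the average drops by more than a tolerance. This is a generic illustration, not Literal AI's API; the function name, scores, and tolerance are hypothetical.

```python
from statistics import mean


def has_regressed(baseline_scores, candidate_scores, tolerance=0.02):
    """True if the candidate's mean score falls more than `tolerance` below baseline."""
    return mean(candidate_scores) < mean(baseline_scores) - tolerance


# Hypothetical per-example scores (0 to 1) logged for two prompt versions.
baseline = [0.9, 0.8, 1.0, 0.7]
candidate = [0.9, 0.6, 0.9, 0.7]

if has_regressed(baseline, candidate):
    print("Candidate prompt regressed; keep the current version.")
else:
    print("Candidate prompt is safe to deploy.")
```

In practice the scores would come from logged production traces or an offline evaluation run, which is where the observability tooling comes in.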

Mozilla AI Discord

- Ground Truth Data's Critical Role in AI: A new blog post emphasizes the importance of ground truth data in improving model accuracy and reliability in AI applications, and urges readers to join the discussion.

- Ground truth data is touted as essential for driving improvements in AI systems' performance across varying contexts.

- Mozilla Opens Call for Alumni Grant Applications: Mozilla invites past participants of the Mozilla Fellowship to apply for program grants targeting trustworthy AI and healthier internet initiatives, reflecting efforts for structural changes in AI.

- “The internet, and especially artificial intelligence (AI), are at an inflection point,” reads Hailey Froese's call to action for transformative efforts in this space.

Gorilla LLM (Berkeley Function Calling) Discord

- Evaluation Script Errors Trouble Users: Users encountered a 'No Scores' issue while running `openfunctions_evaluation.py` with `--test-category=non_live`, receiving no results in the designated folder.

- Attempting to rerun with new API credentials didn’t yield success, leading to further complications.

- API Credentials Updated but Issues Persist: In their setup, users added four new API addresses into `function_credential_config.json`, hoping for a resolution.

- Despite these changes, errors continued during evaluations, confirming that credential updates were ineffective.

- Timeout Troubles with Urban Dictionary API: During evaluation, a Connection Error arose linked to the Urban Dictionary API regarding the term 'lit', indicating there were timeout issues.

- Network problems are suspected as the source of the connection difficulties that users faced.
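When timeouts like the one above come from a flaky network, a common mitigation is to retry the call with exponential backoff. The sketch below is generic and is not part of the Gorilla evaluation code; `call_with_retries` and `flaky_lookup` are hypothetical names, and the lookup simulates two timeouts instead of hitting a real API.

```python
import time


def call_with_retries(fn, attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call `fn`, retrying on timeout or connection errors with exponential backoff."""
    for attempt in range(attempts):
        try:
            return fn()
        except (TimeoutError, ConnectionError):
            if attempt == attempts - 1:
                raise  # out of attempts: surface the original error
            sleep(base_delay * 2 ** attempt)


calls = {"count": 0}


def flaky_lookup():
    """Simulated API call that times out twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise TimeoutError("simulated timeout")
    return {"term": "lit", "status": "ok"}


# Pass a no-op sleep so the demo finishes instantly.
result = call_with_retries(flaky_lookup, sleep=lambda seconds: None)
print(result, "after", calls["count"], "calls")
```

Retries only help with transient failures; if the API is unreachable from the user's network, the final attempt will still raise.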

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The DiscoResearch Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email.

If you enjoyed AInews, please share with a friend! Thanks in advance!