[AINews] Perplexity starts Shopping for you

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Stripe SDK is all you need?

AI News for 11/18/2024-11/19/2024. We checked 7 subreddits, 433 Twitters and 30 Discords (217 channels, and 1912 messages) for you. Estimated reading time saved (at 200wpm): 253 minutes. You can now tag @smol_ai for AINews discussions!

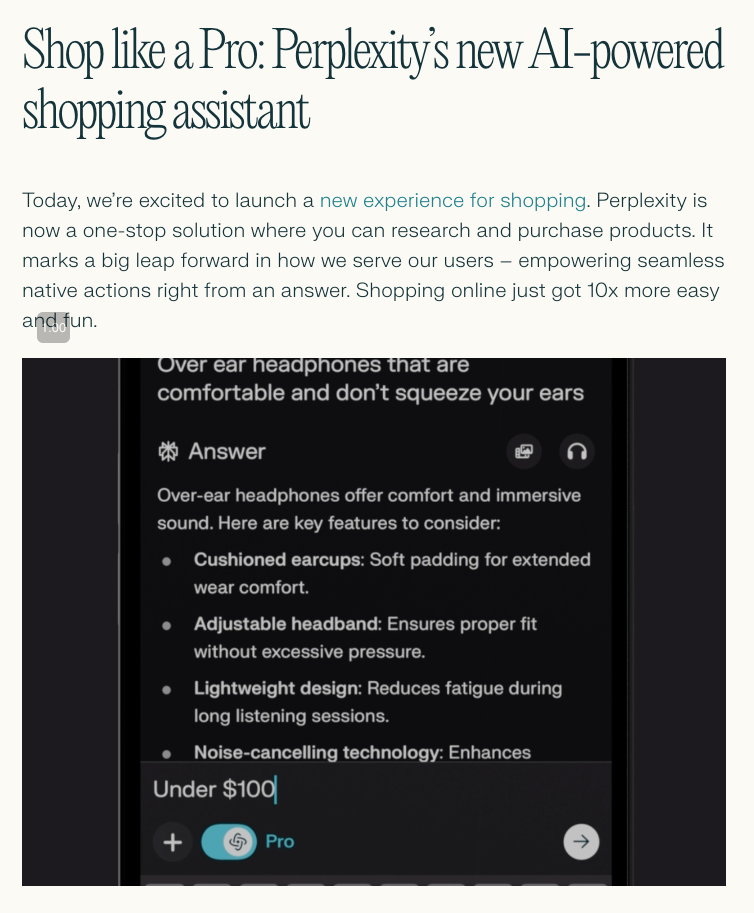

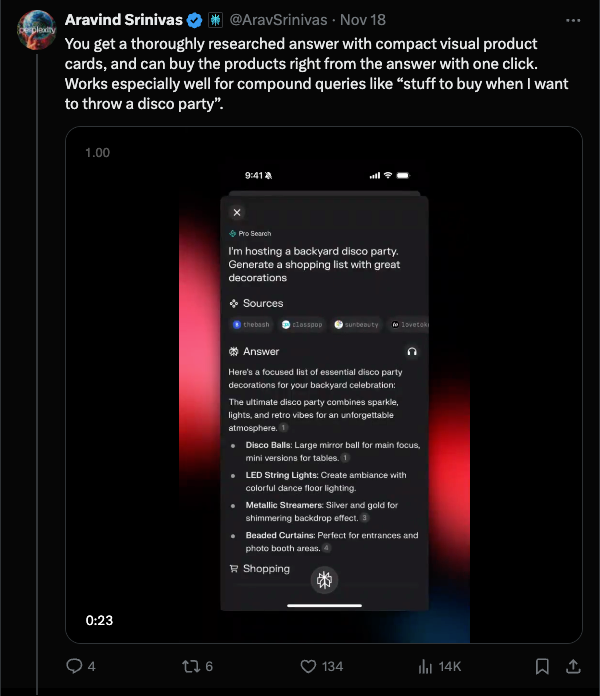

Just 2 days after Stripe launched their Agent SDK (our coverage here), Perplexity is now launching their in-app shopping experience for US-based Pro members. This is the first at-scale AI-native shopping experience, closer to Google Shopping (done well) than Amazon. The examples show the kind of queries you can make with natural language that would be difficult in traditional ecommerce UI:

The new "Buy With Pro" program comes with one-click checkout with "select merchants" (! more on this later) and free shipping.

Snap to Shop is also a great visual ecommerce idea... but it remains to be seen how accurate it really is from people who don't work at Perplexity.

The Buy With Pro program is almost certainly tied to the new Perplexity Merchant Program, which is a standard free data-for-recommendations value exchange.

Both Patrick Collison and Jeff Weinstein were quick to note Stripe's involvement, though both stopped short of directly saying that Perplexity Shopping uses the exact agent SDK that Stripe just shipped.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Model Releases and Performance

- Mistral's Multi-Modal Image Model: @mervenoyann announced the release of Pixtral Large with 124B parameters, now supported in @huggingface. Additionally, @sophiamyang shared that @MistralAI now supports image generation on Le Chat, powered by @bfl_ml, available for free.

- Cerebras Systems' Llama 3.1 405B: @ArtificialAnlys detailed Cerebras' public inference endpoint for Llama 3.1 405B, boasting 969 output tokens/s and a 128k context window. This performance is >10X faster than the median providers. Pricing is set at $6 per 1M input tokens and $12 per 1M output tokens.

- Claude 3.5 and 3.6 Enhancements: @Yuchenj_UW discussed how Claude 3.5 is being outperformed by Claude 3.6, which, despite being more convincing, exhibits more subtle hallucinations. Users like @Tim_Dettmers have started debugging outputs to maintain trust in the model.

- Bi-Mamba Architecture: @omarsar0 introduced Bi-Mamba, a 1-bit Mamba architecture designed for more efficient LLMs, achieving performance comparable to FP16 or BF16 models while significantly reducing memory footprint.

AI Tools, SDKs, and Platforms

- Wandb SDK on Google Colab: @weights_biases announced that the wandb Python SDK is now preinstalled on every Google Colab notebook, allowing users to skip the

!pip installstep and import directly.

- AnyChat Integration: @_akhaliq highlighted that Pixtral Large is now available on AnyChat, enhancing AI flexibility by integrating multiple models like ChatGPT and Google Gemini.

- vLLM Support: @vllm_project introduced support for Pixtral Large with a simple

pip install -U vLLM, enabling users to run the model efficiently.

- Perplexity Shopping Features: @AravSrinivas detailed the launch of Perplexity Shopping, a feature that integrates with @Shopify to provide AI-powered product recommendations and a multimodal shopping experience.

AI Research and Benchmarks

- nGPT Paper and Benchmarks: @jxmnop shared insights on the nGPT paper, highlighting claims of a 4-20x training speedup over GPT. However, the community faced challenges in reproducing results due to a busted baseline.

- VisRAG Framework: @JinaAI_ introduced VisRAG, a framework enhancing retrieval workflows by addressing RAG bottlenecks with multimodal reasoning, outperforming TextRAG.

- Judge Arena for LLM Evaluations: @clefourrier presented Judge Arena, a tool to compare model-judges for nuanced evaluations of complex generations, aiding researchers in selecting the appropriate LLM evaluators.

- Bi-Mamba's Efficiency: @omarsar0 discussed how Bi-Mamba achieves performance comparable to full-precision models, marking an important trend in low-bit representation for LLMs.

AI Company Partnerships and Announcements

- Google Colab and Wandb Partnership: @weights_biases announced the collaboration with @GoogleColab, ensuring that the wandb SDK is readily available for users, streamlining workflow integration.

- Hyatt Partnership with Snowflake: @RamaswmySridhar shared how @Hyatt utilizes @SnowflakeDB to unify data, reduce management time, and innovate quickly, enhancing operational efficiency and customer insights.

- Figure Robotics Hiring and Deployments: @adcock_brett multiple times discussed Figure's commitment to shipping millions of humanoid robots, hiring top engineers, and deploying autonomous fleets, showcasing significant scaling efforts in AI robotics.

- Hugging Face Enhancements: @ClementDelangue highlighted that @huggingface now offers visibility into post engagement, enhancing the platform's role as a hub for AI news and updates.

AI Events and Workshops

- AIMakerspace Agentic RAG Workshop: @llama_index promoted a live event on November 27, hosted by @AIMakerspace, focusing on building local agentic RAG applications using open-source LLMs with hands-on sessions led by Dr. Greg Loughnane and Chris "The Wiz" Alexiuk.

- Open Source AI Night with SambaNova & Hugging Face: @_akhaliq announced an Open Source AI event scheduled for December 10, featuring Silicon Valley’s AI minds, fostering collaboration between @Sambanova and @HuggingFace.

- DevDay in Singapore: @stevenheidel shared excitement about attending the final 2024 DevDay in Singapore, highlighting opportunities to network with other esteemed speakers.

Memes/Humor

- AI Misunderstandings and Frustrations: @transfornix expressed frustrations with zero motivation and brain fog. Similarly, @fabianstelzer shared light-hearted frustrations with AI workflows and unexpected results.

- Humorous Takes on AI and Tech: @jxmnop humorously questioned why a transformer implementation error breaks everything, reflecting common developer frustrations. Additionally, @idrdrdv joked about category theory discouraging newcomers.

- Casual and Fun Interactions: Tweets like @swyx sharing humorous remarks about oauth requirements and @HamelHusain engaging in light-hearted conversations showcase the community's playful side.

- Reactions to AI Developments: @aidan_mclau reacted humorously to seeing others on social networks, and @giffmana shared laughs over AI interactions.

AI Applications and Use Cases

- AI in Document Processing: @omarsar0 introduced Documind, an AI-powered tool for extracting structured data from PDFs, emphasizing its ease of use and AGPL v3.0 License.

- AI in Financial Backtesting: @virattt described backtesting an AI financial agent using @LangChainAI for orchestration, outlining a four-step process to evaluate portfolio returns.

- AI in Shopping and E-commerce: @AravSrinivas showcased Perplexity Shopping, detailing features like multimodal search, Buy with Pro, and integration with @Shopify, aimed at streamlining the shopping experience.

- AI in Healthcare Communication: @krandiash highlighted collaborations with @anothercohen to improve healthcare communication using AI, emphasizing efforts to fix broken systems.

AI Community and General Discussions

- AI Curiosity and Learning: @saranormous emphasized that genuine technical curiosity is a powerful and hard-to-fake trait, encouraging continuous learning and exploration within the AI community.

- Challenges in AI Model Development: @huybery and @karpathy discussed model training challenges, including latency issues, layer normalizations, and the importance of model oversight for trustworthy AI systems.

- AI in Social Sciences and Ethics: @BorisMPower pondered the revolutionary potential of AI in social sciences, advocating for in silico simulations over traditional human interviews for hypothesis testing.

- AI in Software Engineering: @inykcarr and @HellerS engaged in discussions around prompt engineering for LLMs, emphasizing the superpower of 10x productivity gains through effective AI utilization.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. Mistral Large 2411: Anticipation and Release Details

- Mistral Large 2411 and Pixtral Large release 18th november (Score: 336, Comments: 114): Mistral Large 2411 and Pixtral Large are set for release on November 18th.

- Licensing and Usage Concerns: There is significant discussion around the restrictive MRL license for Mistral models, with users expressing frustration over unclear licensing terms and lack of response from Mistral for commercial use inquiries. Some suggest that while the license allows for research use, it complicates commercial applications and sharing of fine-tuned models.

- Benchmark Comparisons: Pixtral Large reportedly outperforms GPT-4o and Claude-3.5-Sonnet in several benchmarks, such as MathVista (69.4) and DocVQA (93.3), but users note a lack of comparison with other leading models like Qwen2-VL and Molmo-72B. There is also speculation about a Llama 3.1 505B model based on a potential typo or leak in the benchmark tables.

- Technical Implementation and Support: Users discuss the potential integration of Pixtral Large with Exllama for VRAM efficiency and tensor parallelism, and confirm that Mistral Large 2411 does not require changes to llama.cpp for support. Additionally, there's mention of a new instruct template potentially enhancing model steerability, drawing parallels to community-suggested prompt formatting.

- mistralai/Mistral-Large-Instruct-2411 · Hugging Face (Score: 303, Comments: 81): The post discusses Mistral Large 2411, a model available on Hugging Face under the repository mistralai/Mistral-Large-Instruct-2411. No additional details or context are provided in the post body.

- Users discuss the performance of Mistral Large 2411, noting mixed results in various tasks. Sabin_Stargem mentions success in NSFW narrative generation but failures in lore comprehension and dice number tasks. ortegaalfredo finds slight improvements overall but prefers qwen-2.5-32B for coding tasks.

- There's a debate on the model's licensing and distribution. TheLocalDrummer and others express concerns about the MRL license, with mikael110 lamenting the end of Apache-2 releases. thereisonlythedance appreciates Mistral's local model access, despite licensing complaints, citing economic necessity.

- Technical discussions involve model deployment and quantization. noneabove1182 shares links to Hugging Face for GGUF quantizations and mentions the absence of evals for comparison with previous versions. segmond expresses skepticism about the lack of evaluation data and notes a slight performance drop in coding tests compared to large-2407.

- Pixtral Large Released - Vision model based on Mistral Large 2 (Score: 123, Comments: 27): Pixtral Large has been released as a vision model derived from Mistral Large 2. Further details about the model's specifications or capabilities were not provided in the post.

- Pixtral Large's Vision Setup: The model is not based on Qwen2-VL; instead, it uses the Qwen2-72B text LLM with a custom vision system. The 7B variant uses the Olmo model as a base, which performs similarly to the Qwen base, indicating the robustness of their dataset.

- Technical Requirements and Capabilities: Running the model may require substantial hardware, such as 4x3090 GPUs or a MacBook Pro with 128GB RAM. The model's vision encoder is larger (1B vs 400M), suggesting it can handle at least 30 high-resolution images, although specifics on "hi-res" remain undefined.

- Performance Benchmarks and Comparisons: Pixtral Large's performance was only compared to Llama-3.2 90B, which is considered suboptimal for its size. Comparisons with Molmo-72B and Qwen2-VL show varied results across datasets like Mathvista, MMMU, and DocVQA, indicating an incomplete picture of its capabilities against the state-of-the-art.

Theme 2. Llama 3.1 405B Inference: Breakthrough with Cerebras

- Llama 3.1 405B now runs at 969 tokens/s on Cerebras Inference - Cerebras (Score: 272, Comments: 49): Cerebras has achieved a performance milestone by running Llama 3.1 405B at 969 tokens per second on their inference platform. This showcases Cerebras' capabilities in handling large-scale models efficiently.

- Users noted that the 405B model is currently available only for enterprise in the paid tier, while Openrouter offers it at a significantly reduced cost, albeit with lower speed. The 128K context length and full 16-bit precision were highlighted as key features of Cerebras' platform.

- Discussions emphasized that Cerebras' performance gains are attributed more to software improvements rather than hardware changes, with some users pointing out the use of WSE-3 clusters and potential alternatives like 8x AMD Instinct MI300X accelerators.

- There was interest in use cases for high-speed inference, such as agentic workflows and high-frequency trading, where rapid processing of large models can offer significant advantages over slower, traditional methods.

Theme 3. AMD GPUs on Raspberry Pi: Llama.cpp Integration

- AMD GPU support for llama.cpp via Vulkan on Raspberry Pi 5 (Score: 144, Comments: 49): The author has been integrating AMD graphics cards on the Raspberry Pi 5 and has successfully implemented the Linux

amdgpudriver on Pi OS. They have compiledllama.cppwith Vulkan support for several AMD GPUs and are gathering benchmark results, which can be found here. They seek community input on additional tests and plan to evaluate lower-end AMD graphics cards for price/performance/efficiency comparisons.- Several users recommended using ROCm instead of Vulkan for better performance with AMD GPUs, but noted that ROCm support on ARM platforms is challenging due to limited compatibility. Alternatives like hipblas were suggested, though they involve complex setup processes Phoronix article.

- There was a discussion regarding quantization optimizations for ARM CPUs, specifically using

4_0_X_Xquantization levels with llama.cpp to leverage ARM-specific instructions likeneon+dotprod. This can improve performance on devices like the Raspberry Pi 5 with BCM2712 using flags such as-march=armv8.2-a+fp16+dotprod. - Benchmarking results on the RX 6700 XT using llama.cpp showed promising performance metrics with Vulkan, but highlighted power consumption concerns, averaging around 195W during tests. The discussion also touched on the efficiency of using GPUs for AI tasks compared to CPUs, with the Raspberry Pi setup consuming only 11.4W at idle.

Theme 4. txtai 8.0: Streamlined Agent Framework Launched

- txtai 8.0 released: an agent framework for minimalists (Score: 60, Comments: 9): txtai 8.0 has been launched as an agent framework designed for minimalists. This release focuses on simplifying the development and deployment of AI applications.

- txtai 8.0 introduces a new agent framework that integrates with Transformers Agents and supports all LLMs, providing a streamlined approach to deploying real-world agents without unnecessary complexity. More details and resources are available on GitHub, PyPI, and Docker Hub.

- The agent framework in txtai 8.0 demonstrates decision-making capabilities through tool usage and planning, as illustrated in a detailed example on Colab. This example showcases how the agent uses tools like 'web_search' and 'wikipedia' to answer complex questions.

- Users inquired about the framework's capabilities, including whether it supports function calling by agents and vision models. These questions highlight the interest in extending txtai's functionality to incorporate more advanced features like visual analysis.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. Flux vs SD3.5: Community Prefers Flux Despite Technical Tradeoffs

- What is the Current State of Flux vs SD3.5? (Score: 40, Comments: 99): Stable Diffusion 3.5 and Flux are being compared by the community, with initial enthusiasm for SD3.5 reportedly declining since its release a month ago. The post seeks clarification on potential technical issues with SD3.5 that may have caused users to return to Flux, though no specific technical comparisons are provided in the query.

- SD3.5 faces significant adoption challenges due to being unavailable on Forge and having limited finetune capabilities compared to Flux. Users report that SD3.5 excels at artistic styles and higher resolutions but struggles with anatomy, particularly hands.

- Community testing reveals Flux is superior for img2img tasks and realistic human generation, while SD3.5 offers better negative prompt support and faster processing. The lack of quality finetunes and LoRAs for SD3.5 has limited its widespread adoption.

- Advanced users suggest combining both models' strengths through workflows like using SD3.5 for initial creative generation followed by Flux for anatomy refinement. Flux was released in August and has maintained stronger community support with extensive finetunes available.

- Ways to minimize Flux "same face" with prompting (TLDR and long-form) (Score: 33, Comments: 5): The post provides technical advice for reducing the "same face" problem in Flux image generation, recommending key strategies like reducing CFG/Guidance to 1.6-2.6, avoiding generic terms like "man"/"woman", and including specific descriptors for ethnicity, body type, and age. The author shares a set of example images demonstrating these techniques, along with an example prompt describing a "sharp faced Lebanese woman" in a kitchen scene, while explaining how model training biases and common prompting patterns contribute to the same-face issue.

- Lower guidance settings in Flux (1.6-2.6) trade prompt adherence for photorealism and variety, while the default 3.5 CFG is maintained by some users for better prompt compliance with complex descriptions.

- The community has previously documented similar solutions for the "sameface" issue, including an auto1111 extension that randomizes prompts by nationality, name, and hair characteristics.

- Users advise against downloading random .zip files for security reasons, suggesting alternative image hosting platforms like Imgur for sharing example generations.

Theme 2. O2 Robotics Breakthrough: 400% Speed Increase at BMW Factory

- Figure 02 is now an autonomous fleet working at a BMW factory, 400% faster in the last few months (Score: 180, Comments: 63): Figure 02 robots now operate as an autonomous fleet at a BMW factory, achieving a 400% speed increase in their operations over recent months.

- Figure 02 robots currently operate for 5 hours before requiring recharge, with each unit costing $130,000 according to BMW's press release. The robots have achieved a 7x reliability increase alongside their 400% speed improvement.

- Multiple users point out the rapid improvement rate of these robots surpasses human capability, with continuous 24/7 operation potential and no need for breaks or benefits. Critics note current limitations in efficiency compared to specialized robotic arms.

- Discussion centers on economic viability, with some arguing for complete plant redesign for automation rather than human-accommodating spaces. The robots require factory lighting and maintenance costs, but don't need heating or insurance.

Theme 3. Claude vs ChatGPT: Enterprise User Experience Discussion

- Should I Upgrade to ChatGPT Plus or Claude AI? Help Me Decide! (Score: 33, Comments: 69): Digital marketing professionals comparing ChatGPT Plus and Claude AI for content creation and technical assistance, with specific focus on handling content ideation (75% of use) and Linux technical support (25% of use). Recent concerns about Claude's reliability and model downgrades have emerged, with users reporting outages and transparency issues in model changes, prompting questions about Claude's viability as a paid service option.

- OpenRouter and third-party apps like TypingMind emerge as popular alternatives to direct subscriptions, offering flexibility to switch between models and potentially lower costs than $20/month services. Users highlight the ability to maintain context and integrate with multiple APIs in one place.

- Claude's recent changes have sparked criticism regarding increased censorship and usage limitations (5x free tier), particularly affecting Spanish-language users and technical tasks. Users report Claude refusing tasks for "ethical reasons" and experiencing significant model behavior changes.

- o1-preview model receives strong praise for its integrated chain of thought capabilities and complex mathematics handling, while Google Gemini 1.5 Pro is highlighted for its 1,000,000 token context window and integration with Google Workspace.

- Claude's servers are DYING! (Score: 86, Comments: 24): Claude users report persistent server capacity issues causing workflow disruptions through frequent high-demand notifications. Users express frustration with service interruptions and request infrastructure upgrades from the Anthropic team.

- Users report optimal Claude usage times are when both India and California are inactive, with multiple users confirming they plan their work around these time zones to avoid overload issues.

- Several users suggest abandoning the Claude web interface in favor of API-based solutions, with one user detailing their journey from using web interfaces to managing 100+ AI models through custom implementations and Open WebUI.

- Users express frustration with concise responses and "Error sending messages. Overloaded" notifications, with some recommending OpenRouter API as an alternative despite higher costs.

Theme 4. CogVideo Wrapper Updated: Major Refactoring and 1.5 Support

- Kijai has updated the CogVideoXWrapper: Support for 1.5! Refactored with simplified pipelines, and extra optimizations. (but breaks old workflows) (Score: 69, Comments: 23): CogVideoXWrapper received a major update with support for CogVideoX 1.5 models, featuring code cleanup, merged Fun-model functionality into the main pipeline, and added torch.compile optimizations along with torchao quantizations. The update introduces breaking changes to old workflows, including removal of width/height from sampler widgets, separation of VAE from the model, support for fp32 VAE, and replacement of PAB with FasterCache, while maintaining a legacy branch for previous versions at ComfyUI-CogVideoXWrapper.

- Testing on an RTX 4090 shows CogVideoX 1.5 processing 49 frames at 720x480 resolution with 20 steps takes approximately 30-40 seconds, with the model being notably faster than previous versions at equivalent frame counts.

- The 2B models require approximately 3GB VRAM for storage plus additional memory for inference, with testing at 512x512 resolution showing peak VRAM usage of about 6GB including VAE decode.

- Alibaba released an updated version of CogVideoXFun with new control model support for Canny, Depth, Pose, and MLSD conditions as of 2024.11.16.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1: Cutting-Edge AI Models Claim Superiority

- Cerebras Boasts Record-Breaking Speed with Llama 3.1: Cerebras claims their Llama 3.1 405B model achieves an astonishing 969 tokens/s, over 10x faster than average providers. Critics argue this is an "apples to oranges" comparison, noting Cerebras excels at batch size 1 but lags with larger batches.

- Runner H Charges Toward ASI, Outperforming Competitors: H Company announced the beta release of Runner H, claiming to break through scaling laws limitations and step toward artificial super intelligence. Runner H reportedly outperforms Qwen on the WebVoyager benchmarks, showcasing superior navigation and reasoning skills.

- Mistral Unleashes Pixtral Large with 128K Context Window: Mistral introduced Pixtral Large, a 124B multimodal model built on Mistral Large 2, handling over 30 high-res images with a 128K context window. It achieves state-of-the-art performance on benchmarks like MathVista and VQAv2.

Theme 2: AI Models Grapple with Limitations and Bugs

- Qwen 2.5 Model Throws Tantrums in Training Rooms: Users report inconsistent results when training Qwen 2.5, with errors vanishing when switching to Llama 3.1. The model seems sensitive to specific configurations, leading to frustration among developers.

- AI Fumbles at Tic Tac Toe and Forgets the Rules: Members observed that AI models like GPT-4 struggle with simple games like Tic Tac Toe, failing to block moves and losing track mid-game. The limitations of LLMs as state machines spark discussions on the need for better frameworks for game logic.

- Mistral's Models Get Stuck in Infinite Loops: Users experienced issues with Mistral models like Mistral Nemo causing infinite loops and repeated outputs via OpenRouter. Adjusting temperature settings didn't fully resolve the problem, pointing to deeper issues with model outputs.

Theme 3: Innovative Research Lights Up the AI Horizon

- Neural Metamorphosis Morphs Networks on the Fly: The Neural Metamorphosis paper introduces self-morphable neural networks by learning a continuous weight manifold, allowing models to adapt sizes and configurations without retraining.

- LLM2CLIP Supercharges CLIP with LLMs: Microsoft unveiled LLM2CLIP, leveraging large language models to enhance CLIP's handling of long and complex captions, boosting its cross-modal performance significantly.

- AgentInstruct Generates Mountains of Synthetic Data: The AgentInstruct framework automates the creation of 25 million diverse prompt-response pairs, propelling the Orca-3 model to a 40% improvement on AGIEval, outperforming models like GPT-3.5-turbo.

Theme 4: AI Tools Evolve and Optimize Workflows

- Augment Turbocharges LLM Inference for Developers: Augment detailed their approach in optimizing LLM inference by providing full codebase context, crucial for developer AI, and overcoming latency challenges to ensure speedy and quality outputs.

- DSPy Dives into Vision with VLM Support: DSPy announced beta support for Vision-Language Models, showcasing in a tutorial how to extract attributes from images, like website screenshots, marking a significant expansion of their capabilities.

- Hugging Face Simplifies Vision Models with Pipelines: Hugging Face's pipeline abstraction now supports vision language models, making it easier than ever to handle both text and images in a unified way.

Theme 5: Community Buzzes with Events and Big Moves

- Roboflow Bags $40 Million to Sharpen AI Vision: Roboflow raised an additional $40 million in Series B funding to enhance developer tools for visual AI, aiming to deploy applications across fields like medical and environmental industries.

- Google AI Workshop to Unleash Gemini at Hackathon: A special Google AI workshop on November 26 will introduce developers to building with Gemini during the LLM Agents MOOC Hackathon, including live demos and direct Q&A with Google AI specialists.

- LAION Releases 12 Million YouTube Samples for ML: LAION announced LAION-DISCO-12M, a dataset of 12 million YouTube links with metadata to support research in foundation models for audio and music.

PART 1: High level Discord summaries

Eleuther Discord

-

Muon Optimizer Underperforms AdamW: Discussions highlighted that the Muon optimizer significantly underperformed AdamW due to inappropriate learning rates and scheduling techniques, leading to skepticism about its claims of superiority.

- Some members pointed out that using better hyperparameters could improve comparisons, yet criticism regarding untuned baselines remains.

- Neural Metamorphosis Introduces Self-Morphable Networks: The paper on Neural Metamorphosis (NeuMeta) proposes a new approach to creating self-morphable neural networks by learning the continuous weight manifold directly.

- This potentially allows for on-the-fly sampling for any network size and configurations, raising questions about faster training by utilizing small model updates.

- SAE Feature Steering Advances AI Safety: Collaborators at Microsoft released a report on SAE feature steering, demonstrating its applications for AI safety.

- The study suggests that steering Phi-3 Mini can enhance refusal behavior while highlighting the need to explore its strengths and limitations.

- Cerebras Acquisition Speculations: Discussions centered around why major companies like Microsoft haven't acquired Cerebras yet, speculating it may be due to their potential to compete with NVIDIA.

- Some members recalled OpenAI's past interest in acquiring Cerebras during the 2017 era, hinting at their enduring relevance in the AI landscape.

- Scaling Laws Remain Relevant Amid Economic Feasibility Concerns: Scaling laws are still considered a fundamental property of models, but economically it's become unfeasible to push further scaling.

- A member humorously noted that if you're not spending GPT-4 or Claude 3.5 budgets, you might not need to worry about diminishing returns yet.

OpenAI Discord

-

GPT-4-turbo Update Sparks Performance Scrutiny: Members are inquiring about the gpt-4-turbo-2024-04-09 update, noting its previously excellent performance.

- One user expressed frustration over inconsistencies in the model’s thinking capabilities post-update.

- NVIDIA's Add-it Elevates AI Image Editing: Discussions highlighted top AI image editing tools like 'magnific' and NVIDIA's _Add-it _, which allows adding objects based on text prompts.

- Members expressed skepticism regarding the reliability and practical accessibility of these emerging tools.

- Temperature Settings Influence AI Creativity: In Tic Tac Toe discussions, higher temperature settings lead to increased creativity in AI responses, which can impede performance in rule-based games.

- Participants noted that at temperature 0, AI responses are consistent but not exactly the same due to other influencing factors.

- LLMs Face Challenges as Game State Machines: Users pointed out that LLMs exhibit inconsistencies when used to represent state machines in games like Tic Tac Toe.

- There’s a consensus on the need for frameworks that handle game logic more effectively than relying solely on LLMs.

- Difficulty Parameters Enhance AI Gameplay: Participants discussed introducing difficulty parameters to improve AI gameplay, such as having the AI think several moves ahead.

- Further discussions were paused as users expressed fatigue from prolonged AI conversations.

Unsloth AI (Daniel Han) Discord

-

Qwen 2.5 Model Issues: Users reported inconsistent results when training the Qwen 2.5 model using the ORPO trainer, noting that switching to Llama 3.1 resolved the errors.

- Discussion centered on whether changes in model type typically affect training outcomes, with insights suggesting that such adjustments might not significantly influence results.

- Reinforcement Learning from Human Feedback (RLHF): The community explored integrating PPO (RLHF) techniques, indicating that mapping Hugging Face components could streamline the process.

- Members shared methodologies for developing a reward model, providing a supportive framework for implementing RLHF effectively.

- Multiple Turn Conversation Fine-Tuning: Guidance was provided on formatting datasets for multi-turn conversations, recommending the use of EOS tokens to indicate response termination.

- Emphasis was placed on utilizing data suited for the multi-turn format, such as ShareGPT, to enhance training efficacy.

- Aya Expanse Support: Support for the Aya Expanse model by Cohere was confirmed, addressing member inquiries about its integration.

- The discussion did not delve into further details, focusing primarily on the positive confirmation of Aya Expanse compatibility.

- Synthetic Data in Language Models: A discussion highlighted the importance of synthetic data for accelerating language model development, referencing the paper AgentInstruct: Toward Generative Teaching with Agentic Flows.

- The paper addresses model collapse and emphasizes the need for careful quality and diversity management in the use of synthetic data.

HuggingFace Discord

-

Pipeline Abstraction Simplifies Vision Models: The pipeline abstraction in @huggingface transformers now supports vision language models, streamlining the inference process.

- This update enables developers to efficiently handle both visual and textual data within a unified framework.

- Diffusers Introduce LoRA Adapter Methods: Two new methods,

load_lora_adapter()andsave_lora_adapter(), have been added to Diffusers for models supporting LoRA, facilitating direct interaction with LoRA checkpoints.

- These additions eliminate the need for previous commands when loading weights, enhancing workflow efficiency.

- Exact Unlearning Highlights Privacy Gaps in LLMs: A recent paper on exact unlearning as a privacy mechanism for machine learning models reveals inconsistencies in its application within Large Language Models.

- The authors emphasize that while unlearning can manage data removal during training, models may still retain unauthorized knowledge such as malicious information or inaccuracies.

- RAG Fusion Transforms Generative AI: An article discusses RAG Fusion as a pivotal shift in generative AI, forecasting significant changes in AI generation methodologies.

- It explores the implications of RAG techniques and their prospective integration across various AI applications.

- Augment Optimizes LLM Inference for Developers: Augment published a blog post detailing their strategy to enhance LLM inference by providing full codebase context, crucial for developer AI but introducing latency challenges.

- They outline optimization techniques aimed at improving inference speed and quality, ensuring better performance for their clients.

Stability.ai (Stable Diffusion) Discord

-

Mochi Outperforms CogVideo in Leaderboards: Members discussed that Mochi-1 is currently outperforming other models in leaderboards despite its seemingly inactive Discord community.

- CogVideo is gaining popularity due to its features and faster processing times but is still considered inferior for pure text-to-video tasks compared to Mochi.

- Top Model Picks for Stable Diffusion Beginners: New users are recommended to explore Auto1111 and Forge WebUI as beginner-friendly options for Stable Diffusion.

- While ComfyUI offers more control, its complexity can be confusing for newcomers, making Forge a more appealing choice.

- Choosing Between GGUF and Large Model Formats: The difference between stable-diffusion-3.5-large and stable-diffusion-3.5-large-gguf relates to how data is handled by the GPU, with GGUF allowing for smaller, chunked processing.

- Users with more powerful setups are encouraged to use the base model for speed, while those with limited VRAM can explore the GGUF format.

- AI-Driven News Content Creation Software Introduced: A user introduced software capable of monitoring news topics and generating AI-driven social media posts, emphasizing its utility for platforms like LinkedIn and Twitter.

- The user is seeking potential clients for this service, highlighting its capability in sectors like real estate.

- WebUI Preferences Among Community Members: The community shared experiences regarding different WebUIs, noting ComfyUI's advantages in workflow design, particularly for users familiar with audio software.

- Some expressed dissatisfaction with the form-filling nature of Gradio, calling for more user-friendly interfaces while also acknowledging the robust optimization of Forge.

aider (Paul Gauthier) Discord

-

OpenAI o1 models now support streaming: Streaming is now available for OpenAI's o1-preview and o1-mini models, enabling development across all paid usage tiers. The main branch incorporates this feature by default, enhancing developer capabilities.

- Aider's main branch will prompt for updates when new versions are released, but automatic updates are not guaranteed for developer environments.

- Aider API compatibility and configurations: Concerns were raised about Aider's default output limit being set to 512 tokens, despite supporting up to 4k tokens through the OpenAI API. Members discussed adjusting configurations, including utilizing

extra_paramsfor custom settings.

- Issues were highlighted when connecting Aider with local models and Bedrock, such as Anthropic Claude 3.5, requiring properly formatted metadata JSON files to avoid conflicts and errors.

- Anthropic API rate limit changes introducing tiered limits: Anthropic has removed daily token limits, introducing new minute-based input/output token limits across different tiers. This update may require developers to upgrade to higher tiers for increased rate limits.

- Users expressed skepticism about the tier structure, viewing it as a strategy to incentivize spending for enhanced access.

- Pixtral Large Release enhances Mistral Performance: Mistral has released Pixtral Large, a 124B multimodal model built on Mistral Large 2, achieving state-of-the-art performance on MathVista, DocVQA, and VQAv2. It can handle over 30 high-resolution images with a 128K context window and is available for testing in the API as

pixtral-large-latest.

- Elbie mentioned a desire to see Aider benchmarks for Pixtral Large, noting that while the previous Mistral Large excelled, it didn't fully meet Aider's requirements.

- qwen-2.5-coder struggles and comparison with Sonnet: Users reported that OpenRouter's qwen-2.5-coder sometimes fails to commit changes or enters loops, possibly due to incorrect setup parameters or memory pressures. It performs worse in architect mode compared to regular mode.

- Comparisons with Sonnet suggest that qwen-2.5-coder may not match Sonnet's efficiency based on preliminary experiences, prompting discussions on training considerations affecting performance.

LM Studio Discord

-

7900XTX Graphics Performance: A user reported that the 7900XTX handles text processing efficiently but experiences significant slowdown with graphics-intensive tasks when using amuse software designed for AMD. Another user inquired about which specific models were tested for graphics performance on the 7900XTX.

- Users are actively evaluating the 7900XTX's capability in different workloads, noting its strengths in text processing while highlighting challenges in graphics-heavy applications.

- Roleplay Models for Llama 3.2: A user sought recommendations for good NSFW/Uncensored, Llama 3.2 based models suitable for roleplay. Another member responded that such models could be found with proper searching.

- The discussion highlighted the difficulty of navigating HuggingFace to locate specific roleplay models, suggesting the need for better search strategies.

- Remote Server Usage for LM Studio: A user sought advice on configuring LM Studio to point to a remote server. Suggestions included using RDP or the openweb-ui to enhance the user experience.

- One user expressed interest in utilizing Tailscale to host inference backends remotely, emphasizing the importance of maintaining consistent performance across setups.

- Windows vs Ubuntu Inference Speed: Tests revealed that a 1b model achieved 134 tok/sec on Windows, while Ubuntu outperformed it with 375 tok/sec, indicating a substantial performance disparity. A member suggested that this discrepancy might be due to different power schemes in Windows and recommended switching to high-performance mode.

- The community is examining the factors contributing to the inference speed differences between operating systems, considering power management settings as a potential cause.

- AMD GPU Performance Challenges: The discussion emphasized that while AMD GPUs offer efficient performance, they suffer from limited software support, making them less appealing for certain applications. A member noted that using AMD hardware often feels like an uphill battle due to compatibility issues with various tools.

- Participants are expressing frustrations over AMD GPU software compatibility, highlighting the need for improved support to fully leverage AMD hardware capabilities.

OpenRouter (Alex Atallah) Discord

-

O1 Streaming Now Live: OpenAIDevs announced that OpenAI's o1-preview and o1-mini models now support real streaming capabilities, available to developers across all paid usage tiers.

- This update addresses previous limitations with 'fake' streaming methods, and the community expressed interest in enhanced clarity regarding the latest streaming features.

- Gemini Models Encounter Rate Limits: Users reported frequent 503 errors while utilizing Google’s

Flash 1.5andGemini Experiment 1114, suggesting potential rate limiting issues with these newer experimental models.

- Community discussions highlighted resource exhaustion errors, with members recommending improved communication from OpenRouter to mitigate such technical disruptions.

- Mistral Models Face Infinite Loop Issues: Issues were raised regarding Mistral models like

Mistral NemoandGemini, specifically pertaining to infinite loops and repeated outputs when used with OpenRouter.

- Suggestions included adjusting temperature settings, but users acknowledged the complexities involved in resolving these technical challenges.

- Surge in Demand for Custom Provider Keys: Multiple users requested access to custom provider keys, underscoring a strong interest in leveraging them for diverse applications.

- In the beta-feedback channel, users also expressed interest in beta custom provider keys and bringing their own API keys, indicating a trend towards more customizable platform integrations.

Notebook LM Discord Discord

-

Audio Track Separations in NotebookLM: A member in #use-cases inquired about methods to obtain separate voice audio tracks during recordings.

- This highlights an ongoing interest in audio management tools, with references to Simli_NotebookLM for speaker separation and mp4 recording sharing.

- Innovative Video Creation Solutions: Discussion on Somli video creation tools and using D-ID Avatar studio at competitive prices was shared.

- Members exchanged steps for building videos with avatars, including relevant coding practices for those interested.

- Enhanced Document Organization with NotebookLM: A member expressed interest in leveraging NotebookLM for compiling and organizing world-building documentation.

- The request underscores NotebookLM's potential to streamline creative processes by effectively managing extensive notes.

- Customized Lesson Creation in NotebookLM: An English teacher shared their experience using NotebookLM to develop reading and listening lessons tailored to student interests.

- The approach includes tool tips as mini-lessons, enhancing students' contextual understanding through practical language scenarios.

- Podcast Generation from Code with NotebookLM: A user discussed experimenting with NotebookLM generating podcasts from code snippets.

- This showcases NotebookLM's versatility in creating content from diverse data inputs, as members explore various generation techniques.

Nous Research AI Discord

-

LLM2CLIP Boosts CLIP's Textual Handling: The LLM2CLIP paper leverages large language models to enhance CLIP's multimodal capabilities by efficiently processing longer captions.

- This integration significantly improves CLIP's performance in cross-modal tasks, utilizing a fine-tuned LLM to guide the visual encoder.

- Neural Metamorphosis Enables Self-Morphable Networks: Neural Metamorphosis (NeuMeta) introduces a paradigm for creating self-morphable neural networks by sampling from a continuous weight manifold.

- This method allows dynamic weight generation for various configurations without retraining, emphasizing the manifold's smoothness.

- AgentInstruct Automates Massive Synthetic Data Creation: The AgentInstruct framework generates 25 million diverse prompt-response pairs from raw data sources to facilitate Generative Teaching.

- Post-training with this dataset, the Orca-3 model achieved a 40% improvement on AGIEval compared to previous models like LLAMA-8B-instruct and GPT-3.5-turbo.

- LLaVA-o1 Enhances Reasoning in Vision-Language Models: LLaVA-o1 introduces structured reasoning for Vision-Language Models, enabling autonomous multistage reasoning in complex visual question-answering tasks.

- The development of the LLaVA-o1-100k dataset contributed to significant precision improvements in reasoning-intensive benchmarks.

- Synthetic Data Generation Strategies Discussed: Discussions highlighted the importance of synthetic data generation in training robust AI models, referencing frameworks like AgentInstruct.

- Participants emphasized the role of large-scale synthetic datasets in achieving benchmark performance enhancements.

GPU MODE Discord

-

Integrating Triton CPU Backend in PyTorch: A GitHub Pull Request was shared to add Triton CPU as an Inductor backend in PyTorch, aiming to utilize Inductor-generated kernels for stress testing the new backend.

- This integration is intended to evaluate the performance and robustness of the Triton CPU backend, fostering enhanced computational capabilities within PyTorch.

- Insights into PyTorch FSDP Memory Allocations: Members discussed how FSDP allocations occur in

CUDACachingAllocatoron device memory during saving operations, rather than on CPU.

- Future FSDP versions are expected to improve sharding techniques, reducing memory allocations by eliminating the need for all-gathering of parameters, with releases targeted for late this year or early next year.

- Enhancements in Liger Kernel Distillation Loss: An issue on GitHub was raised regarding the implementation of new distillation loss functions in the Liger Kernel, outlining motivations for supporting various alignment and distillation layers.

- The discussion highlighted the potential for improved model training techniques through the incorporation of diverse distillation layers, aiming to enhance performance and flexibility.

- Optimizing Register Allocation Strategies: Discussions emphasized that spills in register allocation can severely impact performance, advocating for increasing registers utilization to mitigate this issue.

- Members explored strategies such as defining and reusing single register tiles and balancing resource allocation to minimize spills, particularly when adding additional WGMMAs.

- Addressing FP8 Alignment in FP32 MMA: A challenge was identified where FP8 output thread fragment ownership in FP32 MMA doesn't align with expected inputs, as illustrated in this document.

- To resolve this misalignment without degrading performance via warp shuffle, a static layout permutation of the shared memory tensor is employed for efficient data handling.

Interconnects (Nathan Lambert) Discord

-

Runner H Beta Launch Pushes Towards ASI: The beta release of Runner H has been announced by H Company, marking a significant advancement beyond current scaling laws towards artificial super intelligence (ASI). Tweet from H Company highlighted this milestone.

- The company emphasized that with this beta release, they're not just introducing a product but initiating a new chapter in AI development.

- Pixtral Paper Sheds Light on Advanced Techniques: The Pixtral paper was discussed by Sagar Vaze, specifically referencing Sections 4.2, 4.3, and Appendix E. This paper delves into complex methodologies relevant to ongoing research.

- Sagar Vaze provided insights, pointing out that the detailed discussions offer valuable context to the group's current projects.

- Runner H Outperforms Qwen in Benchmarks: Runner H demonstrated superior performance against Qwen using the WebVoyager benchmarks, as detailed in the WebVoyager paper.

- This success underscores Runner H's edge in real-world scenario evaluations through innovative auto evaluation methods.

- Advancements in Tree Search Methods: A recent report highlights significant gains in tree search techniques, thanks to collaborative efforts from researchers like Jinhao Jiang and Zhipeng Chen.

- These improvements enhance the reasoning capabilities of large language models.

- Exploring the Q Algorithm's Foundations: The Q algorithm was revisited, sparking discussions around its foundational role in current AI methodologies.

- Members expressed nostalgia, acknowledging the algorithm's lasting impact on today's AI techniques.

Latent Space Discord

-

Cerebras' Llama 3.1 Inference Speed: Cerebras claims to offer Llama 3.1 405B at 969 tokens/s, significantly faster than the median provider benchmark by more than 10X.

- Critics argue that while Cerebras excels in batch size 1 evaluations, its performance diminishes for larger batch sizes, suggesting that comparisons should consider these differences.

- OpenAI Enhances Voice Capabilities: OpenAI announced an update rolling out on chatgpt.com for voice features, aimed at making presentations easier for paid users.

- This update allows users to learn pronunciation through their presentations, highlighting a continued focus on enhancing user interaction.

- Roboflow Secures $40M Series B Funding: Roboflow raised an additional $40 million to enhance its developer tools for visual AI applications in various fields, including medical and environmental sectors.

- CEO Joseph Nelson emphasized their mission to empower developers to deploy visual AI effectively, underlining the importance of seeing in a digital world.

- Discussions Around Small Language Models: The community debated the definitions of small language models (SLM), with suggestions indicating models ranging from 1B to 3B as small.

- There's consensus that larger models don’t fit this classification, and distinctions based on running capabilities on consumer hardware were noted.

LlamaIndex Discord

-

AIMakerspace Leads Local RAG Workshop: Join AIMakerspace on November 27 to set up an on-premises RAG application using open-source LLMs, focusing on LlamaIndex Workflows and Llama-Deploy.

- The event offers hands-on training and deep insights for building a robust local LLM stack.

- LlamaIndex Integrates with Azure at Microsoft Ignite: LlamaIndex unveiled its end-to-end solution integrated with Azure at #MSIgnite, featuring Azure Open AI, Azure AI Embeddings, and Azure AI Search.

- Attendees are encouraged to connect with @seldo for more details on this comprehensive integration.

- Integrating Chat History into RAG Systems: A user discussed incorporating chat history into a RAG application leveraging Milvus and Ollama's LLMs, utilizing a custom indexing method.

- The community suggested modifying the existing chat engine functionality to enhance compatibility with their tools.

- Implementing Citations with SQLAutoVectorQueryEngine: Inquiry about obtaining inline citations using SQLAutoVectorQueryEngine and its potential integration with CitationQueryEngine was raised.

- Advisors recommended separating citation workflows due to the straightforward nature of implementing citation logic.

- Assessing Retrieval Metrics in RAG Systems: Concerns were voiced regarding the absence of ground truth data for evaluating retrieval metrics in a RAG system.

- Community members were asked to provide methodologies or tutorials to effectively address this testing challenge.

tinygrad (George Hotz) Discord

-

tinygrad 0.10.0 Released with 1200 Commits: The team announced the release of tinygrad 0.10.0, which includes over 1200 commits and focuses on minimizing dependencies.

- tinygrad now supports both inference and training, with aspirations to build hardware and has recently raised funds.

- ARM Test Failures and Resolutions: Users reported test failures on aarch64-linux architectures, specifically encountering an AttributeError during testing.

- Issues are reproducible across architectures, with potential resolutions including integrating

x.realize()intest_interpolate_bilinear. - Kernel Cache Test Fixes: A fix was implemented for

test_kernel_cache_in_actionby addingTensor.manual_seed(123), ensuring the test suite passes.

- Only one remaining issue persists on ARM architecture, with ongoing discussions on resolutions.

- Debugging Jitted Functions in tinygrad: Setting DEBUG=2 causes the process to continuously output at the bottom lines, indicating it's operational.

- Jitted functions in tinygrad execute only GPU kernels, resulting in no visible output for internal print statements.

Cohere Discord

-

Tokenized Training Impairs Word Recognition: A member highlighted that the word 'strawberry' is tokenized during training, disrupting its recognition in models like GPT-4o and Google’s Gemma2 27B, revealing similar challenges across different systems.

- This tokenization issue affects the model's ability to accurately recognize certain words, prompting discussions on improving word recognition through better training methods.

- Cohere Beta Program for Research Tool: Sign-ups for the Cohere research prototype beta program are closing tonight at midnight ET, granting early access to a new tool designed for research and writing tasks.

- Participants are encouraged to provide detailed feedback to help shape the tool's features, focusing on creating complex reports and summaries.

- Configuring Command-R Model Language Settings: A user inquired about setting a preamble for the command-r model to ensure responses in Bulgarian and avoid confusion with Russian terminology.

- They mentioned using the API request builder for customization, indicating a need for clearer language differentiation in the model's responses.

OpenInterpreter Discord

-

Development Branch Faces Stability Issues: A member reported that the development branch is currently in a work-in-progress state, with

interpreter --versiondisplaying 1.0.0, indicating a possible regression in UI and features.- Another member volunteered to address the issues, noting the last commit was 9d251648.

- Assistance Sought for Skills Generation: Open Interpreter users requested help with skills generation, mentioning that the expected folder is empty and seeking guidance on proceeding.

- It was recommended to follow the GitHub instructions related to teaching the model, with plans for future versions to incorporate this functionality.

- UI Simplifications Receive Mixed Feedback: Discussions emerged around recent UI simplifications, with some members preferring the previous design, expressing comfort with the older interface.

- The developer acknowledged the feedback and inquired whether users favored the old version more.

- Issues with Claude Model Lead to Concerns: Reports indicated problems with the Claude model breaking; switching models temporarily resolved the issue, raising concerns about the Anthropic service reliability.

- Members questioned if these issues persist across different versions.

- Ray Fernando Explores AI Tools in Latest Podcast: In a YouTube episode, Ray Fernando discusses AI tools that enhance the build process, highlighting 10 AI tools that help build faster.

- The episode titled '10 AI Tools That Actually Deliver Results' offers valuable insights for developers interested in tool utilization.

DSPy Discord

-

DSPy Introduces VLM Support: A new DSPy VLM tutorial is now available, highlighting the addition of VLM support in beta for extracting attributes from images.

- The tutorial utilizes screenshots of websites to demonstrate effective attribute extraction techniques.

- DSPy Integration with Non-Python Backends: Members report reduced accuracy when integrating DSPy's compiled JSON output with Go, raising concerns about prompt handling replication.

- A suggestion was made to use the inspect_history method to create templates tailored for specific applications.

- Cost Optimization Strategies in DSPy: Discussions emerged on how DSPy can aid in lowering prompting costs through prompt optimization and potentially using a small language model as a proxy.

- However, there are concerns about long-context limitations, necessitating strategies like context pruning and RAG implementations.

- Challenges with Long-Context Prompts: The inefficiency of few-shot examples with extensive contexts in long document parsing was highlighted, with criticisms on the reliance of model coherence across large inputs.

- Proposals include breaking processing into smaller steps and maximizing information per token to address context-related issues.

- DSPy Assertions Compatibility with MIRPOv2: A query was raised regarding the compatibility of DSPy assertions with MIRPOv2 in the upcoming version 2.5, referencing past incompatibilities.

- This indicates ongoing interest in how these features will evolve and integrate within the framework.

OpenAccess AI Collective (axolotl) Discord

-

Mistral Large introduces Pixtral models: Community members expressed interest in experimenting with the latest Mistral Large and Pixtral models, seeking expertise from experienced users.

- The discussion reflects ongoing experimentation and a desire for insights on the performance of these AI models.

- MI300X training operational: Training with the MI300X is now operational, with several upstream changes ensuring consistent performance.

- A member highlighted the importance of upstream contributions in maintaining reliability during the training process.

- bitsandbytes integration enhancements: Concerns were raised about the necessity of importing bitsandbytes during training even when not in use, suggesting making it optional.

- A proposal was made to implement a context manager to suppress import errors, aiming to increase the codebase's flexibility.

- Axolotl v0.5.2 release: The new Axolotl v0.5.2 has been launched, featuring numerous fixes, enhanced unit tests, and upgraded dependencies.

- Notably, the release addresses installation issues from version v0.5.1 by resolving the

pip install axolotlproblem, facilitating a smoother update for users. - Phorm Bot deprecation concerns: Questions arose regarding the potential deprecation of the Phorm Bot, with indications it might be malfunctioning.

- Members speculated that the issue stems from the bot referencing the outdated repository URL post-transition to the new organization.

Modular (Mojo 🔥) Discord

-

Max Graph Integration Enhances Knowledge Graphs: An inquiry was raised about whether Max Graphs can enhance traditional Knowledge Graphs for unifying LLM inference as one of the agentic RAG tools.

- Darkmatter noted that while Knowledge Graphs serve as data structures, Max Graph represents a computational approach.

- MAX Boosts Graph Search Performance: Discussion on utilizing MAX to boost graph search performance revealed that current capabilities require copying the entire graph into MAX.

- A potential workaround was proposed involving encoding the graph as 1D byte tensors, though memory requirements may pose challenges.

- Distinguishing Graph Types and Their Uses: A user pointed out the distinctions among various graph types, indicating MAX computational graphs relate to computing, while Knowledge Graphs store relationships.

- They further explained that Graph RAG enhances retrieval using knowledge graphs and that an Agent Graph describes data flow between agents.

- Max Graph's Tensor Dependency Under Scrutiny: Msaelices questioned whether Max Graph is fundamentally tied to tensors, noting the constraints of its API parameters restricted to TensorTypes.

- This prompted a suggestion for reviewing the API documentation before proceeding with implementation inquiries.

LLM Agents (Berkeley MOOC) Discord

-

Google AI Workshop on Gemini: Join the Google AI workshop on 11/26 at 3pm PT, focusing on building with Gemini during the LLM Agents MOOC Hackathon. The event features live demos of Gemini and an interactive Q&A with Google AI specialists for direct support.

- Participants will gain insights into Gemini and Google's AI models and platforms, enhancing hackathon projects with the latest technologies.

- Lecture 10 Announcement: Lecture 10 is scheduled for today at 3:00pm PST, with a livestream available for real-time participation. This session will present significant updates in the development of foundation models.

- All course materials, including livestream URLs and assignments, are accessible on the course website, ensuring centralized access to essential resources.

- Percy Liang's Presentation: Percy Liang, an Associate Professor at Stanford, will present on 'Open-Source and Science in the Era of Foundation Models'. He emphasizes the importance of open-source in advancing AI innovation despite current accessibility limitations.

- Liang highlights the necessity for community resources to develop robust open-source models, fostering collective progress in the field.

- Achieving State of the Art for Non-English Models: Tejasmic inquired about strategies to attain state of the art performance for non-English models, particularly in languages with low data points.

- A suggestion was made to direct the question to a dedicated channel where staff are actively reviewing similar inquiries.

Torchtune Discord

-

Flex Attention Blocks Score Copying: A member reported an error when trying to copy attention scores in the flex attention's

score_modfunction, resulting in an Unsupported: HigherOrderOperator mutation error.- Another member confirmed this limitation and referenced the issue for further details.

- Attention Score Extraction Hacks: Members discussed the challenges of copying attention scores with vanilla attention due to inaccessible SDPA internals and suggested that modifying the Gemma 2 attention class could provide a workaround.

- A GitHub Gist was shared detailing a hack to extract attention scores without using triton kernels, though it diverges from the standard Torchtune implementation.

- Vanilla Attention Workarounds: It was revealed that copying attention scores with vanilla attention is not feasible due to the lack of access to SDPA internals, leading to exploration of alternatives.

- A member suggested that modifying the Gemma 2 attention class might offer a solution, as it is more amenable to hacking.

LAION Discord

-

LAION-DISCO-12M Launches with 12 Million Links: LAION announced LAION-DISCO-12M, a collection of 12 million links to publicly available YouTube samples paired with metadata, aimed at supporting basic machine learning research for generic audio and music. This initiative is detailed further in their blog post.

- The release has been highlighted in a tweet from LAION, emphasizing the dataset's potential to enhance audio-related foundation models.

- Metadata Enhancements for Audio Research: The metadata included in the LAION-DISCO-12M collection is designed to facilitate research in foundation models for audio analysis.

- Several developers expressed excitement over the potential use cases highlighted in the announcement, emphasizing the need for better data in the audio machine learning space.

Mozilla AI Discord

-

Transformer Lab Demo kicks off today: Today's Transformer Lab Demo showcases the latest developments in transformer technology.

- Members are encouraged to join and engage in the discussions to explore these advancements.

- Metadata Filtering Session Reminder: A session on metadata filtering is scheduled for tomorrow, led by an expert in channel #1262961960157450330.

- Participants will gain insights into effective data handling practices in AI.

- Refact AI Discusses Autonomous AI Agents: Refact AI will present on building Autonomous AI Agents to perform engineering tasks end-to-end this Thursday.

- They will also answer attendees' questions, offering a chance for interactive learning.

The Alignment Lab AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LLM Finetuning (Hamel + Dan) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!