[AINews] OpenAI launches Operator, its first Agent

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

A virtual browser is all you need.

AI News for 1/22/2025-1/23/2025. We checked 7 subreddits, 433 Twitters and 34 Discords (225 channels, and 4386 messages) for you. Estimated reading time saved (at 200wpm): 483 minutes. You can now tag @smol_ai for AINews discussions!

As widely rumored, OpenAI launched their computer use agent, 3 months after Anthropic's equivalent:

- live today for Pro users in the US

- notably NOT just an open source demo like Anthropic, but a hosted, premium product with an API promised

- Some folks had early access

- Has video export

- Lots of misclicks, but does self correct

- Long horizon, remote VMs up to 20 minutes

- Many clones/alternatives from LangChain, smooth operator

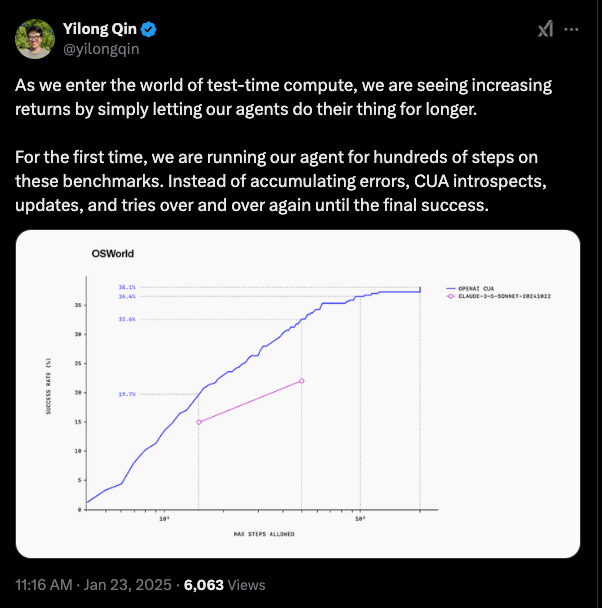

- the separate evals notes show how Operator is a SOTA agent, but still not quite at human level. Exhibits a test time scaling curve:

- As Sam says, there are more agents to launch in the coming weeks and months

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

AI Models and Releases

- OpenAI's Operator Launch: @OpenAI introduced Operator, a computer-using agent capable of interacting with web browsers to perform tasks like booking reservations and ordering groceries. @sama praised the release, while @swyx highlighted its ability to handle repetitive browser tasks efficiently.

- DeepSeek R1 and Models: @deepseek_ai unveiled DeepSeek R1, an open-source reasoning model that outperforms many competitors on Humanity’s Last Exam. @francoisfleuret commended its transformer architecture and performance benchmarks.

- Google DeepMind's VideoLLaMA 3: @arankomatsuzaki announced VideoLLaMA 3, a multimodal foundation model designed for image and video understanding, now open-sourced for broader research and application.

- Perplexity Assistant Release: @perplexity_ai launched Perplexity Assistant for Android, integrating reasoning and search capabilities to enhance daily productivity. Users can now activate the assistant and utilize features like multimodal interactions.

AI Benchmarks and Evaluation

- Humanity's Last Exam: @DanHendrycks introduced Humanity’s Last Exam, a 3,000-question dataset designed to evaluate AI's reasoning abilities across various subjects. Current models score below 10% accuracy, indicating significant room for improvement.

- CUA Performance on OSWorld and WebArena: @omarsar0 shared results of Computer-Using Agent (CUA) on OSWorld and WebArena benchmarks, showcasing improved performance over previous state-of-the-art models, though still trailing behind human performance.

- DeepSeek R1's Dominance: Multiple tweets from @teortaxesTex highlight DeepSeek R1’s superior performance on text-based benchmarks, surpassing models like LLaMA 4 and OpenAI's o1 in various evaluation metrics.

AI Safety and Ethics

- Citations and Safe AI Responses: @AnthropicAI launched Citations, a feature enabling AI models like Claude to provide grounded answers with precise source references, enhancing output reliability and user trust.

- Overhype and Hallucinations in AI: @kylebrussell criticized the overhyping of AI technologies, emphasizing that hallucinations and errors should not lead to an outright dismissal of AI advancements.

- AI as Creative Collaborators: @c_valenzuelab advocated for viewing AI as creative collaborators rather than mere tools, highlighting the importance of subjective and emotional evaluation in artistic endeavors.

AI Research and Development

- Program Synthesis and AGI: @TheTuringPost explored program synthesis as a pathway to Artificial General Intelligence (AGI), combining pattern recognition with abstract reasoning to overcome current deep learning limitations.

- Diffusion Feature Extractors: @ostrisai reported progress on training Diffusion Feature Extractors using LPIPS outputs, resulting in cleaner image features and enhanced text understanding within generated images.

- X-Sample Contrastive Loss (X-CLR): @DeepLearningAI introduced X-Sample Contrastive Loss (X-CLR), a self-supervised loss function that assigns continuous similarity scores to improve contrastive learning performance over traditional methods like SimCLR and CLIP.

AI Industry and Companies

- Stargate Project Investment: @saranormous discussed the $500B Stargate investment, which aims to boost compute power and AI token usage, questioning its long-term impact on intelligence acquisition and industry competition.

- Google Colab’s Impact: @osanseviero highlighted the significant role of Google Colab in democratizing GPU access, fostering advancements in open-source projects, education, and AI research.

- OpenAI and Together Compute Partnership: @togethercompute announced a partnership with Cartesia AI, providing access to Sonic, a low-latency voice AI model, via the Together API. This collaboration aims to create seamless multi-modal experiences by combining chat, image, audio, and code functionalities.

Memes/Humor

- AI Replacing Rule Lawyers: @NickEMoran joked about the inclusion of Magic and D&D in Humanity’s Last Exam, humorously suggesting that LLMs might soon take over the roles of Rules Lawyers.

- AI's Impact on Pop Culture: @saranormous shared a humorous quote reflecting on AI's capabilities, integrating memes to highlight the lighter side of AI advancements.

- Elon and Sam Trustworthiness Debate: @draecomino humorously questioned the trustworthiness of Elon Musk compared to Sam Altman, sparking a light-hearted debate on AI leadership.

- Funny AI Interactions: @nearcyan shared a tweet about the humorous side of AI-generated content, emphasizing the quirky and unexpected outcomes when AI models interact with user prompts.

This summary categorizes the provided tweets into AI Models and Releases, AI Benchmarks and Evaluation, AI Safety and Ethics, AI Research and Development, AI Industry and Companies, and Memes/Humor, ensuring thematic coherence and grouping similar discussion points. Each summary references direct tweets with inline markdown links to maintain factual grounding.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. DeepSeek's Competitiveness Shakes Tech Giants

- deepseek is a side project (Score: 1406, Comments: 165): Deepseek is described as a side project of a quantitative firm with a strong mathematical foundation and ownership of numerous GPUs used for trading and mining. The project aims to optimize the utilization of these GPUs, highlighting the firm's technical capabilities.

- Deepseek's Origin and Intent: Many users highlight that Deepseek is funded by a hedge fund, specifically High-Flyer, and emphasize that it's a side project utilizing idle GPUs. While the project isn't seen as a direct competitor to giants like OpenAI or xAI, it demonstrates significant efficiency and low-cost operations with only 2000 H100 GPUs compared to others using 100K.

- Quantitative Background and GPU Utilization: Comments discuss the hedge fund's quantitative expertise, which allows them to optimize resource usage and create efficient models despite limited hardware. Users note that the skills overlap between high-frequency trading (HFT) and AI development, with quants often working on models that require precise and fast execution, similar to trading algorithms.

- Comparisons and Market Impact: There's skepticism about the need for massive hardware investments by larger companies, questioning the ROI when smaller projects like Deepseek can achieve competitive results. Users humorously note the irony of a hedge fund's side project posing a potential threat to major AI players, highlighting the strategic advantage of leveraging existing resources effectively.

- Meta panicked by Deepseek (Score: 535, Comments: 114): Meta is reportedly alarmed by DeepSeek v3 outperforming Llama 4 in benchmarks, prompting engineers to urgently analyze and replicate DeepSeek's capabilities. Concerns include the high costs of the generative AI organization and difficulties in justifying expenses alongside leadership salaries, indicating organizational challenges and urgency at Meta regarding AI advancements.

- Skepticism on DeepSeek v3's Impact: Many commenters express doubt about the legitimacy of the claim that DeepSeek v3 is causing panic at Meta, citing the significant size difference between DeepSeek's models and Llama's models. ResidentPositive4122 highlights that DeepSeek has been known in the AI space for its strong models, which contradicts the notion of them being an "unknown" threat.

- Meta's Strategic Position: Commenters like FrostyContribution35 and ZestyData argue that Meta still holds a strong position in AI research, with ongoing innovations in architecture improvements like BLT and LCM. They suggest that Meta's extensive data resources and talented research team provide a significant advantage, despite the competitive landscape.

- Organizational and Resource Challenges: Discussions touch on the organizational dynamics at Meta, such as the pressure on engineers due to leadership decisions and the cost of energy in the USA compared to China. The_GSingh points out that despite Meta's extensive research, they lack in implementing new models, while Swagonflyyyy mentions that DeepSeek's cost-effective approach highlights inefficiencies in Meta's spending on AI leadership salaries.

- Open-source Deepseek beat not so OpenAI in 'humanity's last exam' ! (Score: 238, Comments: 36): Deepseek's open-source model, DeepSeek-R1, outperforms other models like GPT-4O and Claude 3.5 Sonnet on the "HLE" test, achieving an accuracy of 9.4% with a calibration error of 81.8%. Despite being not multi-modal, DeepSeek-R1 surpasses its competitors, with detailed results available in Appendix C.2.

- DeepSeek-R1's Performance: DeepSeek-R1, as a side project, impressively outperforms established models like OpenAI's O1 in text-only datasets, with a notable accuracy of 9.4% compared to O1's 8.9%. This achievement highlights the potential of non-mainstream projects to challenge industry leaders.

- Humanity's Last Exam (HLE): This benchmark is critical for testing AI's expert-level reasoning across disciplines, revealing significant gaps in current AI systems. Leading models score below 10%, showcasing the need for improvement in abstract reasoning and specialized knowledge.

- Open Source and Industry Dynamics: DeepSeek's success has sparked discussions about the state of open-source AI, with users questioning the absence of recent releases from major players like Meta and xAI. The conversation also touches on the unexpected rise of projects like DeepSeek, which lack backing from traditional tech giants, yet achieve state-of-the-art performance.

Theme 2. Advanced LLM Architectures: Byte-Level Models and Reasoning Agents

- ByteDance dropping an Apache 2.0 licensed 2B, 7B & 72B "reasoning" agent for computer use (Score: 541, Comments: 52): ByteDance has released Apache 2.0 licensed large language models (LLMs) with parameters of 2 billion, 7 billion, and 72 billion, focusing on enhancing reasoning tasks for computer use. These models are intended to improve computational reasoning capabilities, demonstrating ByteDance's commitment to open-source AI development.

- Discussion highlights the potential and limitations of ByteDance's new models, with users expressing curiosity about the practical use cases for the 2 billion and 7 billion parameter models beyond basic functionalities like "shortcut keys". Some users also reported initial difficulties in getting meaningful outputs from the smaller models, suggesting deployment and usage guides might be needed.

- There is interest in the Gnome Desktop demo, indicating excitement about the models' capabilities in operating systems environments. Users are also discussing the need for LLM-based approaches for non-web-based software, comparing them to tools like AutoHotkey.

- Links to resources such as GitHub repositories and Hugging Face were shared, with some users expressing gratitude for the ease of access. Additionally, there was discussion about using specific prompts from the repository to ensure the models function correctly, highlighting the importance of understanding the training methodologies.

- The first performant open-source byte-level model without tokenization has been released. EvaByte is a 6.5B param model that also has multibyte prediction for faster inference (vs similar sized tokenized models) (Score: 249, Comments: 65): EvaByte, a 6.5 billion parameter open-source byte-level model, has been released, offering multibyte prediction for faster inference without tokenization. The model achieves approximately 60% performance across 14 tasks, with a training token count just above 0.3 on a logarithmic scale, as shown in a scatter plot comparing it to other models.

- Discussions highlight the performance and speed of EvaByte compared to other models, with some users noting its architecture allows for faster decoding—up to 5-10x faster than vanilla byte models and 2x faster than tokenizer-based LMs. The model's ability to handle multimodal tasks more efficiently than BLTs is also emphasized, as it requires fewer training bytes.

- The model's byte-sized tokens are debated, with concerns about slower output speed and fast context fill-up. However, some argue that the improved architecture offsets these drawbacks by enhancing prediction speed, while others note the potential for reduced hardware expenses due to smaller dictionaries and easier computations.

- Users question training data inconsistencies and the model's scaling capabilities, with references to Hugging Face and a blog for further exploration. There is interest in how EvaByte compares with other models like GPT-J and OLMo, and discussions about its training on chatbot outputs, which may lead to errors in responses.

Theme 3. Tooling for Better Reasoning in AI Models: Enhancements in Open WebUI

- Open WebUI adds reasoning-focused features in two new releases OUT TODAY!!! 0.5.5 adds "Thinking" tag support to streamline reasoning model chats (works with R1) . 0.5.6 brings new "reasoning_effort" parameter to control cognitive effort. (Score: 104, Comments: 18): Open WebUI has released two updates, 0.5.5 and 0.5.6, enhancing reasoning model interactions. Version 0.5.5 introduces a 'think' tag that visually indicates the model's thinking duration, while version 0.5.6 adds a reasoning_effort parameter, allowing users to adjust the cognitive effort exerted by OpenAI models, thus improving customization for complex queries. More details can be found on their GitHub releases page.

- The reasoning_effort parameter does not currently impact R1 distilled models, as tested by a user who found no difference in "thinking" time across different settings. The parameter appears to be applicable only to OpenAI reasoning models at this time.

- Inference engines need to implement the "reasoning_effort" themselves, as it's not a model parameter. One suggested method is adjusting the sampling scaling coefficient for the "end of thinking" token, which can effectively modify the perceived cognitive effort.

- Users are anticipating fixes for rendering artifacts and the addition of MCP support to standardize tool usage, which is expected to enhance the utility of the platform.

- Deepseek R1's Open Source Version Differs from the Official API Version (Score: 80, Comments: 57): Deepseek R1's open-source model shows more censorship on CCP-related issues compared to the official API, contradicting expectations. This discrepancy raises concerns about the accuracy of benchmarks and the potential for biased responses, as the open model may perform worse and spread biased viewpoints, affecting third-party providers and human-ranked leaderboards like LM Arena. Tests reveal the open model interrupts its thinking process on sensitive topics, suggesting the models might not be identical, and researchers should specify which version they use in studies.

- There's a clear discrepancy between the open-source model and the official API, with the open model showing more censorship on CCP-related issues. Users, including TempWanderer101 and rnosov, discuss how benchmarks might be inaccurately measuring the open model, and that the models might not be identical, impacting performance and third-party provider quality.

- The censorship issue might be related to differences in prompt handling, with rnosov noting that using a

- There's a discussion on the cost and performance implications, with TempWanderer101 noting pricing differences between TogetherAI and OpenRouter. The potential for confusion between model versions raises concerns about the fairness of benchmarks and the reproducibility of research results, emphasizing the need for clarity in model version identification.

Theme 4. NVIDIA's GPU Innovations for Enhanced AI: Blackwell and Long Context Libraries

- NVIDIA RTX Blackwell GPU with 96GB GDDR7 memory and 512-bit bus spotted (Score: 209, Comments: 92): NVIDIA's RTX Blackwell GPU has been spotted with 96GB of GDDR7 memory and a 512-bit bus, indicating a significant update in memory capacity and bandwidth. This development suggests potential advancements in processing capabilities for high-performance computing and AI applications.

- Discussions highlight the potential pricing of the RTX Blackwell GPU, with estimates ranging from $6,000 to $18,000. Some users compare it to other cards like the MI300X/325X and the H100, suggesting they may offer better performance or value at similar price points.

- There is speculation that the RTX Blackwell could be a successor to the RTX 6000 Ada, which maxed out at 48GB. This new card's 96GB GDDR7 memory is seen as a substantial upgrade, possibly positioning it within the workstation card family.

- Users humorously express concerns about affordability, joking about selling kidneys or working extra shifts to afford the new card. This reflects a broader sentiment that while the card's specs are impressive, its price may be a barrier for many potential buyers.

- First 5090 LLM results, compared to 4090 and 6000 ada (Score: 70, Comments: 44): The NVIDIA GeForce RTX 5090 has been previewed for LLM benchmarks, showing significant improvements over the RTX 4090 and 6000 Ada models. Detailed results and comparisons can be found in the linked Storage Review article.

- Performance Expectations and Bottlenecks: Users expected the RTX 5090 to show a 60-80% increase in tokens per second due to higher memory bandwidth, but suspect a bottleneck or benchmarking issue since these gains weren't observed. FP8 is entering the mainstream, offering better performance over integer quantization, while FP4 is still years from adoption.

- Hardware Features and Comparisons: Discussions highlighted the interest in multi-GPU training capabilities and potential p2p unlocking with custom drivers for the 5090, similar to the 4090. Comparisons between RTX 6000 and GeForce series noted the 6000's higher VRAM and efficiency focus, despite its lower performance relative to the GeForce series.

- Performance Metrics: The RTX 5090 shows a 25-30% improvement in LLMs and a 40% improvement in image generation compared to the 4090, aligning with spec expectations. Users also noted the importance of FP8 and FP4 optimizations in the new generation for enhanced performance.

Other AI Subreddit Recap

/r/Singularity, /r/Oobabooga, /r/MachineLearning, /r/OpenAI, /r/ClaudeAI, /r/StableDiffusion, /r/ChatGPT

Theme 1. OpenAI launches Operator Tool for Computers

- OpenAI launches Operator—an agent that can use a computer for you (Score: 107, Comments: 70): OpenAI has introduced Operator, an agent designed to autonomously use a computer on behalf of users. This development represents a significant advancement in AI capabilities, enabling more complex task automation and interaction with digital environments.

- Users express skepticism about Operator's capabilities, questioning its ability to control operating systems beyond browsers and its effectiveness with complex tasks like handling CAPTCHAs or doing taxes. Concerns about privacy and the high cost of $200-a-month for the service were also prominent discussion points.

- Some comments highlight Operator's potential, noting its use in programming and compatibility with tools like Google Sheets, although its current limitations make it less appealing for non-programmers. The conversation also touched on the rapid pace of AI advancements and the potential for significant improvements, referencing the quick development of video models in 2024.

- Several comments discuss the broader implications of AI on privacy, suggesting that future tech developments may inherently reduce privacy, likening it to the necessity of having a mobile phone today. The EU's stringent data privacy laws were noted as a factor causing delays in AI technology rollouts in the region.

- Is anyone's chat gpt also not working? Internal server error? (Score: 260, Comments: 320): ChatGPT users are experiencing issues, specifically internal server errors, preventing access.

- Many users from various locations, including New Zealand, Spain, and Australia, report ChatGPT being down, experiencing 503 Service Temporarily Unavailable errors, and suggest subscribing to the OpenAI status page for updates. Some users humorously speculate about potential AGI advancements causing the issue.

- Several users mention DeepSeek as an alternative, highlighting its effectiveness in solving complex issues, such as Docker configuration bugs, and considering canceling their OpenAI subscriptions in favor of this free tool.

- There is a suggestion for incorporating a status indicator within the app itself, with users recommending Downdetector as a reliable alternative for monitoring service availability.

- Sam Altman says he’s changed his perspective on Trump as ‘first buddy’ Elon Musk slams him online over the $500 billion Stargate Project (Score: 474, Comments: 104): Sam Altman has reportedly shifted his view on Donald Trump, while Elon Musk criticizes him online regarding the $500 billion Stargate Project. No additional details are provided in the post.

- Discussions highlight concerns about AI and potential dystopian futures, with users expressing fears of AI-driven surveillance states and autonomous drones used for control or warfare. WloveW and lepobz discuss scenarios involving AI's role in surveillance and enforcement, emphasizing the risks of mass deployment by state actors.

- Sam Altman's shift in stance is criticized, with some commenters expressing distrust towards billionaires and their influence on AI and politics. RealPhakeEyez and Sharp_Iodine reflect on the broader implications of billionaires' decisions and their impact on society, linking it to fascism and corporate power.

- The $500 billion Stargate Project and its political associations are discussed, with a comment by -Posthuman- noting the project's history with OpenAI, Microsoft, and the Biden administration, while questioning Trump's involvement and credit claims.

Theme 2. OpenAI's Vision for AI Agents by 2025

- Open Ai set to release agents that aim to replace Senior staff software engineers by end of 2025 (Score: 148, Comments: 147): OpenAI plans to release AI agents designed to assist and potentially replace senior software engineers by the end of 2025. The initiative includes testing an AI coding assistant, aiming for ChatGPT to reach 1 billion daily active users, and replicating the capabilities of experienced programmers, as stated by Sam Altman.

- Many commenters express skepticism about AI's ability to replace senior software engineers by 2025, noting the current limitations of AI, such as the inability to handle complex tasks and context that require human judgment and creativity. Mistakes_Were_Made73 highlights that AI can enhance productivity but not fully replace engineers, while LordDaut points out the limitations of current AI models in tasks like debugging.

- The discussion reflects a concern about the broader implications of AI on white-collar jobs, with rom_ok suggesting that the focus on software engineers might be a strategy to drive down salaries. Crafty_Fault_2238 predicts a significant impact on various white-collar jobs, describing it as an "existential" threat over the next decade.

- Some users, like tQkSushi, share examples of AI improving efficiency in specific tasks but emphasize the challenges in providing AI with sufficient context for complex software tasks. This sentiment is echoed by willieb3, who argues that while AI can assist, it still requires knowledgeable human oversight to function effectively.

AI Discord Recap

A summary of Summaries of Summaries by o1-preview-2024-09-12

Theme 1. DeepSeek R1 vs Existing Models: Capabilities and Controversies

- DeepSeek R1 Outsmarts O1 in Coding Smackdown: Users reported DeepSeek R1 surpassing OpenAI's O1 in coding tasks, even acing bizarre prompts like "POTATO THUNDERSTORM!". Side-by-side tests showed R1 delivering stronger code solutions and swifter reasoning.

- Users Chew Over Slow Performance and Censorship Concerns: While DeepSeek R1 impressed with thorough debugging, some users griped about sluggish responses in Composer mode and overzealous censorship. Sarcastic praise was directed at its safety features, with efforts to find or create an uncensored version.

- DeepSeek R1 Takes on the Big Boys for a Coffee's Cost: Greg Isenberg hailed DeepSeek R1 as making reasoning cheaper than a cup of coffee and open-source, unlike GPT-4, outpacing O1-Pro in some tasks.

Theme 2. OpenAI's Operator and Agents: New Features and User Reactions

- Operator Demos Autonomous Browsing, Users Balk at $200 Price Tag: At 10am PT, Sam Altman unveiled Operator, showcasing its ability to perform tasks within a browser for a hefty $200/month. Some users expressed excitement over its features, while others questioned the steep pricing.

- Browser Control Sparks Security Debates: Operator's capability to control web browsers autonomously raised concerns about CAPTCHA loops and safety. Users compared it with open-source alternatives like Browser Use.

- OpenAI Teases Future of Agents, Leaves Users Eager: The community buzzed about Operator not being the only agent, with hints of more launches in the coming weeks. Users anticipate new ways of automating workflows and integrating AI agents.

Theme 3. AI Assistants and IDEs: Cursor, Codeium Windsurf, Aider, and JetBrains

- Cursor Users Torn Between Chat and Composer Modes: Developers championed Chat mode for friendly code reviews but criticized Composer for unpredictable code changes. Frustrations included models running amok on code without proper context.

- Codeium Windsurf's Flow Credits Wiped Out by Buggy AI Edits: Users reported depleting over 10% of their monthly flow credits in hours due to repeated AI-induced code errors. Fixing these errors consumed credits rapidly, leading to calls for smarter resource use.

- JetBrains Fans Hopeful as They Join AI Waitlist: Despite earlier disappointments, users remain loyal to JetBrains IDEs, joining the JetBrains AI waitlist in hopes it can compete with Cursor and Windsurf. Some joked they'd stick with JetBrains regardless of AI struggles.

Theme 4. AI Model Development and Multi-GPU Support

- Unsloth's Multi-GPU Support on the Horizon: While currently lacking full multi-GPU capabilities, Unsloth AI teased future updates to support large-scale training, reducing single-GPU bottlenecks. Pro users eagerly anticipate smoother training of large models.

- BioML Postdoc Seeks to Adapt Striped Hyena for Eukaryotes: A researcher aims to finetune Striped Hyena, trained on prokaryote genomes, for eukaryotic sequences, referencing Science ado9336. Discussions included challenges with genomic data pretraining.

- Community Cheers Dolphin-R1's Open Release After $6k Sponsorship: Creating Dolphin-R1 cost $6k in API fees, leading the developer to seek a backer for open release. A sponsor stepped up, enabling the dataset to be shared under Apache-2.0 licensing on Hugging Face.

Theme 5. Hardware and Performance Discussions: GPUs, CUDA Updates, and Training Large Models

- NVIDIA's RTX 5090 Brings Speed but Sips More Power: The RTX 5090 boasts 30% faster performance than the 4090 but consumes 30% more power. Users noted the card doesn't fully exploit its 1.7x bandwidth increase for smaller LLMs.

- CUDA 12.8 Release Excites Developers with FP8/FP4 Support: CUDA 12.8 introduced Blackwell architecture support and new FP8 and FP4 TensorCore instructions, generating buzz about potential performance boosts in training.

- DeepSeek R1's Hefty VRAM Demands Stir GPU Debates: Running DeepSeek R1 Distilled Qwen 2.5 32B in float16 format requires at least 64GB VRAM, or 32GB with quantization. Discussions highlighted VRAM constraints and the challenges of training large models on limited hardware.

PART 1: High level Discord summaries

Cursor IDE Discord

-

DeepSeek R1 Races Past O1-Pro: Attendees praised DeepSeek R1 for thorough debugging, referencing Greg Isenberg's tweet that hailed it as cheaper and open source, outpacing O1-pro in some tasks.

- “I just realized DeepSeek R1 JUST made reasoning cheaper than a cup of coffee,” echoed one user, although others noted sluggish responses in Composer mode.

- O1 Subscription Scuffle: Participants discovered OpenAI's O1 Pro version costs $200/month, creating confusion and frustration across the community.

- They contrasted this plan with lower-cost alternatives, suggesting DeepSeek appears more budget-friendly for sustained usage.

- Chat vs. Composer Clash: Developers championed Chat mode as a friendlier tool for code reviews, highlighting its conversational approach.

- They criticized Composer for its unpredictable code modifications, stressing the importance of context-aware editing.

- Usage-Based Pricing Pushback: Users questioned whether they should pay more for AI-related API-call tracking, voicing skepticism about usage-based pricing.

- They demanded transparent fee structures and stronger models that deliver key features without ballooning expenses.

- UI-TARS Ushers Automated GUI Interactions: ByteDance introduced UI-TARS in a paper titled "UI-TARS: Pioneering Automated GUI Interaction with Native Agents", spotlighting advanced GUI automation possibilities.

- Developers explored its codebase in the official GitHub repo, noting potential synergy with agentic LLM processes.

Codeium (Windsurf) Discord

-

Windsurf’s Waves of Web Search: They launched a new web search feature for Codeium (Windsurf), showcased in a short demo video, with developers invited to 'surf' the internet in an integrated environment.

- Community members were urged to support the demo video post, emphasizing that broad engagement helps refine the search functionality for more robust usage.

- Codeium Extensions: A Concern for Updates: Some users expressed worry that Windsurf might overshadow Codeium extensions, citing minimal plugin updates since September.

- A public statement clarified that extension support remains essential for enterprise clients, even though current updates focus on Windsurf’s latest capabilities.

- Devin’s Autonomy Under Fire: Devin was introduced as a fully autonomous AI tool, prompting skepticism about its actual capabilities and whether human-in-the-loop input is still necessary.

- A few discussions compared it to a 'boy who cried wolf' scenario, referencing a blog post describing its performance over multiple tasks.

- Flow Credits and Model Matchups: Users reported rapid depletion of Windsurf flow credits as they repeatedly fixed AI-induced code errors, consuming over 10% of monthly quotas in a matter of hours.

- They also compared deepseek R1 with Sonnet 3.5, highlighting partial successes but calling for more consistent performance and smarter credit usage.

Unsloth AI (Daniel Han) Discord

-

DeepSeek & Qwen's Dynamic Duet: An integrated approach combining DeepSeek R1 with Qwen was applauded, with one user calling it 'damn near perfect' in terms of real-world performance.

- Community members recommended thorough evaluations before any fine-tuning to avoid harming the synergy, pointing to the Qwen 2.5 Coder collection for reference.

- Multi-GPU Hype & VRAM Chatter in Unsloth: Members confirmed Unsloth currently lacks full multi-GPU capabilities but teased future rollout to help large-scale training and reduce single-GPU bottlenecks.

- They noted VRAM usage is tied to model size, with Unsloth's documentation offering insights on memory constraints.

- 'Dolphin-R1' Makes a Splash with Sponsorship: Creating Dolphin-R1 cost $6k in API fees, spurring the developer to seek a backer for open release under Apache-2.0 licensing on Hugging Face.

- A sponsor stepped up, enabling the dataset to be shared with the community, while users praised the transparent approach to costs and data generation.

- Striped Hyena & the Eukaryote Expedition: A bioML postdoc wants to adapt Striped Hyena—trained on prokaryote genomes—for eukaryotic sequences, referencing Science ado9336 and the project repo.

- They highlighted that Unsloth hasn't fully embraced multi-GPU usage for large genomic data, spurring talk of more specialized training approaches for biomolecular tokens.

LM Studio Discord

-

DeepSeek Dilemmas & LM Studio Fixes: Users encountered errors like 'unknown pre-tokenizer type' while loading DeepSeek R1, prompting manual model updates and re-downloads.

- They referenced LM Studio docs for troubleshooting, praising quick solutions for persistent load failures.

- Qwen Quantization Quarrel: The group debated Q5_K_M as a sweet spot between model size and accuracy for Qwen models.

- Larger parameter sets appeared to deliver richer output, leading many to favor bigger footprints despite heavier GPU demands.

- Networking Nuisances in LM Studio: Contributors called for clearer toggles in LM Studio to differentiate localhost-only vs. all-IPs access across devices.

- They shared that ambiguous settings hamper multi-device usage, emphasizing a more direct labeling approach.

- Gemini 2.0 Gains Gusto: Enthusiasts commended Google's Gemini 2.0 Flash for extended context length and highly accurate parsing of legal documents.

- Comparisons with older models like o1 mini spotlighted Gemini's more sustained responses and sharper knowledge retention.

- RTX 5090 & Procyon Performance Chat: NVIDIA's RTX 5090 runs about 30% faster than a 4090 but doesn't fully exploit its 1.7x bandwidth for smaller LLMs, as seen in NVIDIA's official page.

- Procyon AI was suggested for uniform performance testing, highlighting model quantization and VRAM usage in consistent benchmarks.

Perplexity AI Discord

-

Perplexity’s Big Leap on Android: The Perplexity Assistant is now accessible on Android, enabling tasks across apps via this link.

- Voice activation remains a sticking point, though the new multimodal feature to identify real-world objects sparks interest.

- Mistral’s IPO Plan Sparks Speculation: Conversations center on Mistral aiming for an IPO, driving curiosity over potential expansions in its offerings.

- A YouTube video spotlights this move, and community members debate its impact on future model developments.

- DeepSeek R1 Surges in Performance Tests: Some claim DeepSeek R1 might outperform OpenAI in niche tasks, referencing a detailed exploration.

- Engineers see this as a sign of intensifying competition, urging more rigorous comparisons.

- Sonar Models Shift Strategy: The Sonar line dropped Sonar Huge for Sonar Large and hinted at Sonar Pro, fueling questions on performance gains.

- API disruptions, including 524 errors and SOC 2 compliance queries, underscore broader concerns about stability for enterprise use.

- PyCTC Decode & Community Projects: Developers are considering PyCTC Decode for specialized speech applications, directing peers to this link.

- Meanwhile, a music streaming concept and fresh AI prompt ideas reveal diverse experiments among contributors.

OpenRouter (Alex Atallah) Discord

-

Web Search Gains Ground: OpenRouter launched the Web Search API, priced at $4/1k results, enabling a default of 5 fetches per request when appending :online to a model, with docs at OpenRouter.

- They clarified that each query costs around $0.02 and joked about a premature announcement overshadowing the official rollout.

- Reasoning Tokens Exposed: OpenRouter introduced Reasoning Tokens for direct model insights, requiring

include_reasoning: true, as stated in this tweet.

- finish_reason standardization across multiple thinking models aims to unify the explanation style.

- Deepseek R1 Falters Under Load: Deepseek R1 faced responsiveness problems, occasionally hanging and failing to return results from Deepseek and DeepInfra, as described at DeepSeek R1 Distill Llama 70B.

- One user questioned whether the issues stemmed from inherent model flaws or service disruptions.

- Credits & Integration Hiccups: Some users reported API Key priority mix-ups, causing credit usage instead of intended Mistral integrations, while others grumbled about Web Search billing confusion.

- A workaround emerged in the form of Crypto Payments API, letting users buy credits outside standard payment methods.

aider (Paul Gauthier) Discord

-

Aider's Double-LLM Setup: Community members described configuring Aider to run multiple LLMs via

aider.conf.yaml, noting that chat modes default to a single model unless precisely set, as outlined in the installation doc.- They discovered that /chat-mode code can override a separate editor model, fueling confusion among those seeking tight control over each model's role.

- DeepSeek R1's Syntax Snags: Several voices shared that DeepSeek R1 struggles with syntax and context constraints during coding tasks, illustrated in this performance video.

- Some proposed feeding smaller bits of context, quoting 'we had better luck with partial references' as a quick fix.

- Anthropic's Citation Clarification: The new Citations API from Anthropic incorporates source links in Claude's responses, showcased in their announcement.

- Community members praised the simpler path to reliable references, remarking 'this eases the trouble of verifying sources' in generated text.

- Aider Logging for Large Projects: Participants tackled Aider prompts on bigger codebases by offloading output to a file, saving tokens and reducing clutter.

- They cited 'redirecting heavy terminal commands' as a helpful workflow to preserve clarity while capturing detailed logs.

- JetBrains AI Waitlist Buzz: Techies joined the JetBrains AI waitlist, hoping the IDE leader can stand against Cursor and Windsurf after earlier letdowns.

- Some criticized past JetBrains AI attempts but insisted 'JetBrains remains my go-to developer suite regardless of AI' due to robust IDE features.

OpenAI Discord

-

Operator’s Bold Debut & $200 Price Tag: At 10am PT, Sam Altman and team introduced Operator with a $200/month subscription in a YouTube demo.

- The community expressed excitement over its web browser integration, anticipating future expansions to user-driven browser selection.

- DeepSeek R1 Dominates O1 in Coding: Multiple side-by-side tests showed DeepSeek R1 surpassing O1 in coding tasks, even handling random prompts like 'POTATO THUNDERSTORM!' smoothly.

- Community members highlighted stronger code solutions and praised R1’s agility, predicting more intense comparisons to come.

- GPT Outage & Voice Feature Crash: Service disruptions caused GPT to throw 'bad gateway' errors and disabled voice capabilities, as tracked by OpenAI's status page.

- Users jokingly blamed LeBron James and Ronaldo, while official updates indicated ongoing fixes to restore the voice feature.

- Perplexity Assistant Gains Mobile Momentum: Several users praised the Perplexity Assistant as more efficient on mobile than existing OpenAI apps, sparking debate about user satisfaction.

- They criticized OpenAI’s pricing, hinting a shift in loyalty if alternate solutions continue to outperform ChatGPT on portability.

- Spiking Neural Networks Spark Mixed Reactions: Participants considered spiking neural networks for energy efficiency but worried about latency, citing uncertain returns in real implementations.

- Some saw them as both a dead end and a next step, prompting further inquiries into specialized tasks that might benefit from spiking models.

Yannick Kilcher Discord

-

OpenAI Operator Offers Automated Actions: OpenAI is preparing a new ChatGPT feature called Operator that can take actions in a user's browser and allow saving or sharing tasks.

- Community members expect a release this week, noting that it's not yet available in the API but might shape new ways of automating user workflows.

- R1 Qwen 2.5 32B Demands Hefty VRAM: Running R1 Distilled Qwen 2.5 32B in float16 format requires at least 64GB VRAM, or 32GB for q8, according to parameter sizing talks.

- Discussion highlights how 7B parameters at 16-bit need about 14B bytes of memory plus overhead for context windows.

- GSPN Gives Vision a 2D Twist: The new Generalized Spatial Propagation Network (GSPN) promises a 2D-capable attention mechanism optimized for vision tasks, capturing spatial structures more effectively.

- Members praised the Stability-Context Condition, which cuts down effective sequence length to √N and potentially improves context awareness in image data.

- MONA Method Minimizes Multi-Step Mischief: A proposed RL approach, MONA, uses short-sighted optimization plus far-sighted checks to curb multi-step reward hacking.

- Researchers tested MONA in scenarios prone to reward hacking, showing potential for preventing undesired behavior in reinforcement learning setups.

- IntellAgent Evaluates Agents with Simulated Dialogues: The IntellAgent project offers an open-source framework for generating and analyzing agent conversations, capturing fine-grained interaction details.

- Alongside the research paper, early adopters welcomed this approach for robust agent evaluation, focusing on a critique component that highlights conversational flaws.

Nous Research AI Discord

-

Evabyte's Compressed Chunked Attention: The new Evabyte architecture relies on a fully chunked linear attention design with multi-byte prediction, highlighted in this code snippet.

- Engineers pointed out its compressed memory footprint and improved throughput potential, shown through an internal attention sketch that underscores its large-scale efficiency.

- Tensorgrad Twists Tensors: The tensorgrad library from GitHub introduces named edges for user-friendly tensor operations, enabling commands like

kernel @ h_conv @ w_convwithout complicated indexing.

- It provides symbolic reasoning and matrix simplification, leveraging common subexpression elimination in forward and backward passes to boost performance.

- R1 Datasets Appear, Access Puzzle Persists: Participants confirmed that R1 datasets for distilled models are partially accessible, though details on precise download locations remain unclear.

- Curious researchers requested a direct repo link, hoping official clarification from Nous Research will resolve the confusion.

- Brains and Bits: MIT's Representation Convergence: MIT researchers observed that artificial neural networks trained on naturalistic input converge with biological systems, as indicated by this study.

- They found that model-to-brain alignment correlates with inter-model agreement across vision and language stimuli, suggesting a universal basis for certain neural computations.

- Diffusion Gains via TREAD Token Routing: A recent paper, TREAD: Token Routing for Efficient Architecture-agnostic Diffusion Training, tackles sample inefficiency by retaining token information instead of discarding it.

- Its authors claim increased integration across deeper layers, applicable to both transformer and state-space architectures, yielding lower compute costs for diffusion models.

Stackblitz (Bolt.new) Discord

-

Stripe-Supabase Saga: A user faced a 401 error hooking a Stripe webhook to a Supabase edge function, eventually isolating a verify_jwt misconfiguration.

- They corrected the JWT config and are checking the official docs to solidify the integration.

- Token Tangle: After switching from free to paid plans, users noted token allocation dropping from 300k to 150k, creating confusion about daily quotas.

- Some speculate paid plans remove automatic token renewals, prompting them to revisit StackBlitz registration for clarity.

- Bolt Chat Woes: Community members reported chat logs disappearing and needing a full StackBlitz reset to attempt fixes.

- They discussed persistent session strategies, citing bolt.new for possible solutions.

- 3D Display Dilemma: A user’s 3D model viewer attempt with a GLB file produced a white screen, indicating missing or incomplete setup steps.

- Guides recommended Google Model Viewer code, with further references suggested in Cursor Directory.

- Discord Payment Proposals: A user pitched a Discord login feature and new webhook acceptance system for simpler payments across Bolt.new.

- They also mentioned a token draw reward for friend invitations, aiming to boost community engagement with extra flair.

Latent Space Discord

-

Operator Takes the Digital Wheel: OpenAI introduced Operator, an agent that autonomously navigates a browser to perform tasks, as described in their blog post.

- Observers tested it for research tasks and raised concerns about CAPTCHA loops, referencing open-source analogs like Browser Use.

- Imagen 3 Soars Past Recraft-v3: Google’s Imagen 3 claimed the top text-to-image spot, surpassing Recraft-v3 by a 70-point margin on the Text-to-Image Arena leaderboard.

- Community members highlighted refined prompt handling, including a jellyfish on the beach scenario that impressed onlookers with fine details.

- DeepSeek RAG Minimizes Complexity: DeepSeek reroutes retrieval-augmented generation by permitting direct ingestion of extensive documents, as noted in discussion.

- KV caching boosts throughput, prompting some to declare classic RAG an anti pattern for large-scale use cases.

- Fireworks AI Underprices Transcription: Fireworks AI introduced a streaming transcription tool at $0.0032 per minute after a free period, detailed in their announcement.

- They claim near Whisper-v3-large quality with 300ms latency, placing them as a cost-effective alternative in live captioning.

- API Revenue Sharing Sparks Curiosity: Participants noted that OpenAI does not credit API usage toward ChatGPT subscriptions, raising questions on revenue distribution.

- They wondered if any provider pays users for API activity, but found no evidence of such arrangements.

GPU MODE Discord

-

R1’s Real Risk & DDoS Dilemma: Community members raised alarms about R1 due to its ease of jailbreak and the possibility of generating DDoS code, referencing how simple it is to manipulate AI systems in general.

- Some shared a link to the TDE Podcast #11 explaining end-to-end LLM solutions, wondering if these vulnerabilities might be mitigated with more robust code checks.

- Triton’s Tricky Step Sequencing: A contributor discovered step2 must run before step3 to avoid data overwriting issues, noting that step3 indirectly changes x_c in ways affecting the final outcome.

- They proposed testing changes directly on x instead of x_c for clarity, highlighting the subtle effect of variable manipulation in multipass kernels.

- CUDA 12.8 & Blackwell Gains: NVIDIA released CUDA 12.8 featuring Blackwell architecture support and new FP8/FP4 TensorCore instructions.

- Developers also mentioned Accel-Sim for GPU simulation and a tweet about 5th Generation TensorCore instructions, sparking debate on improved performance metrics.

- ComfyUI Calls for ML Engineers: ComfyUI is hiring machine learning engineers for its open source ecosystem, boasting a VC-backed model and big vision from the Bay Area.

- Interested parties can read more about the role in the job listing, as the team highlighted day-one model support from several top companies.

- Tiny GRPO & Reasoning Gym Roll Out: Developers unveiled the Tiny GRPO repository for minimal, hackable implementations at GitHub, encouraging contributions.

- They also kicked off the Reasoning Gym, focused on procedural reasoning tasks, inviting the community to propose new dataset ideas and expansions.

MCP (Glama) Discord

-

Timeout Tussle Tamed & Windows Woes: A user overcame the 60-second MCP server timeout, though they didn't share how they did it, which caught others' interest.

- Another user overcame hidden PATH settings on Windows and created a test.db file in Access, referencing the MCP Inspector tool to confirm stability.

- Container Clash: Podman vs Docker: Members debated the merits of Podman versus Docker, referencing Podman Installation steps for a simpler setup.

- While Podman is daemonless and more lightweight, many devs keep using Docker because of familiarity and broader tool integration.

- Code Lines for Crisp Edits: A user showcased a method for tracking line numbers in code to apply targeted changes, describing it as more efficient than older diff-based approaches.

- By highlighting improved reliability in bigger refactor tasks, the community found it an easier approach for complex code merges.

- Anthropic TS Client Stumbles & SSE Example Fix: A known bug with Anthropic TS client led some devs to switch to Python, as hinted in issue #118.

- One user admitted a copy-paste mistake in the SSE sample and linked a corrected clientSse.ts example to clarify custom header usage, also fielding questions on Node's EventSource reliability.

- Puppeteer Powers Web Interactions: A new mcp-puppeteer-linux package brings browser automation to LLMs, enabling navigation, screenshots, and element clicks.

- Community members praised its JavaScript execution features, calling it a potential game-changer for web-based testing workflows.

Nomic.ai (GPT4All) Discord

-

GPT4All Grows Gracefully: The new GPT4All v3.7.0 release includes Windows ARM support for Qualcomm and Microsoft SQ devices, though users must note CPU-only operation for now.

- The conversation spotlighted macOS crash fixes and advised uninstalling any GitHub-based workaround to revert to the official version.

- Code Interpreter Closes Cracks: The Code Interpreter saw upgrades with improved timeout handling and more flexible console.log usage for multiple arguments.

- Engineers praised this alignment with JavaScript expectations, highlighting easier debugging and smoother developer workflows.

- Chat Template Tangles Tamed: Two crashes and a compatibility glitch in the chat template parser were eliminated, delivering stability for EM German Mistral and five new models.

- Several members referenced GPT4All Docs on Chat Templates for deeper configuration and troubleshooting tips.

- Prompt Engineering Politesse Pays: Enthusiasts argued that refined requests, including polite words like 'Please,' can boost GPT4All responsiveness.

- They also joked about pay-to-play reality for unlimited ChatGPT, nudging colleagues to explore alternatives.

- NSFW and Jinja Jitters: Community members mentioned NSFW content hurdles, pointing to moral filters and zensors blocking explicit outputs.

- Others noted Jinja template complexities with C++-based GPT4All integrations, complicating custom syntax adoption.

Stability.ai (Stable Diffusion) Discord

-

CitiVAI's Quick Shutdowns: Members stated CitiVAI goes down a few times daily, referencing user experiences on r/StableDiffusion, which triggers sporadic restrictions on image creation.

- They explained that these intervals are planned as part of maintenance, with some suggesting an announcement schedule for better planning around downtime.

- Icy Mask Magic: A user shared how they combine black-and-white mask layers with Inkscape to craft ice-themed text, then color it with canny controlnet or direct prompts.

- Others discussed references like SwarmUI's Model Support docs for better integration of customized mask-based generation approaches.

- 5090 GPU Gains at a Cost: A discussion revealed that the 5090 GPU reportedly delivers 20-40% faster rendering but draws 30% more power, with deeper benefits appearing in the B100/B200 line.

- Participants pointed to data like finetuning results on an RTX 2070 consumer-tier GPU indicating consistent improvements for stable diffusion tasks.

- Training Cartoon Characters: Enthusiasts examined fine-tuning to replicate movie characters, referencing Alvin from Alvin and the Chipmunks LoRA model.

- They noted this method merely needs a mid-tier GPU and a bit of time, echoing examples with SwarmUI's prompt syntax tips.

- Clip Skip Clip-Out: A user asked if 'clip skip' is still relevant, discovering it's a leftover from SD1 evolution and rarely used now.

- The group concluded it’s generally unnecessary for modern stable diffusion setups, emphasizing that advanced prompting workflows supplant that old configuration.

Eleuther Discord

-

Google's Titans Flex Next-Gen Memory: Google introduced Titans, promising stronger inference-time memory, as shown in this YouTube video.

- The group noted the paper is hard to replicate, with lingering questions about the exact attention strategy.

- Egomotion Steps Up for Feature Learning: Researchers tested egomotion as a self-directed method, replacing labels with mobility data in this paper.

- They observed strong outcomes for scene recognition and object detection, sparking interest in motion-based training.

- Distributional Dynamic Programming Gains Momentum: A new approach called distributional dynamic programming tackles statistical functionals of return distributions, outlined in this paper.

- It features stock augmentation to broaden solutions once tricky to handle with standard reinforcement learning methods.

- Ruler Tasks Expand Long Context Possibilities: All Ruler tasks were finalized with minor formatting fixes, encouraging more extended context applications for the #lm-thunderdome channel.

- Contributors requested additional long context tasks, emphasizing efforts to push real-world testing boundaries.

LlamaIndex Discord

-

Open-Source RAG Gains Steam: Developers explored a detailed guide to build an open-source RAG system using LlamaIndex, Meta Llama 3, and TruLens, comparing a basic approach with Neo4j to a more agentic setup.

- They pitted OpenAI against Llama 3.2 to gauge performance, fueling excitement on self-hosted and flexible solutions.

- AI Chrome Extensions for Social Platforms: Members discussed a pair of Chrome extensions that harness LlamaIndex to boost the impact of X and LinkedIn posts.

- They praised these AI tools for improving engagement while expanding content creation possibilities.

- AgentWorkflow’s Big Boost: Enthusiasts praised the AgentWorkflow upgrades, highlighting improved speed and output quality over older builds.

- Several projects pivoted to these new features, crediting the revamp with eliminating previous bottlenecks.

- Multi-Agent Mayhem vs Tools: Discussions clarified how multiple agents can be active sequentially, leveraging async tool calls without clobbering one another's context.

- They also clarified that agents rely on tools but can serve as tools themselves in specialized roles.

- Memory Management and Link Glitches: Participants called for better memory modules, noting ChatMemoryBuffer may not optimize context usage, and summaries can add latency.

- A broken Agent tutorial led to 500 errors, prompting them to refer to run-llama GitHub repos for core documentation.

Cohere Discord

-

Cohere's Comical LCoT Conjecture: A member pushed Cohere to release LCoT meme model weights that handle logic and thinking, receiving a reminder of Cohere's enterprise focus.

- They shared a playful GIF to underscore community eagerness for more open experimentation.

- Pydantic's Perfect Pairing with Cohere: A user announced Pydantic now supports Cohere models, prompting excitement over simpler integration for developers.

- This update could streamline workflows and coding practices for anyone building with Cohere, although further release specifics were not detailed.

- Chain of Thought Chatter: Participants proposed cues like 'think before you act' and

- They noted that regular models lacking explicit trace training still gain partial reasoning advantages with well-structured prompts.

- Reranker Riddle: On-Prem Dreams: A user in Chile asked about on-prem hosting for Cohere Reranker to offset high latency from South America.

- No direct solutions surfaced, and they were encouraged to contact the sales team at support@cohere.com for alternatives.

- ASI Ambitions and Anxieties: Discussion covered Artificial Superintelligence (ASI) possibly exceeding human intellect, highlighting potential breakthroughs in healthcare and education.

- Members aired ethical concerns over misuse and noted no official Cohere documentation currently addresses ASI development.

Notebook LM Discord Discord

-

NotebookLM Nudges Study Gains: A member showcased their excitement about integrating NotebookLM into study workflows, including a YouTube video highlighting helpful note-organization features.

- They also discovered how Obsidian plugins can merge markdown notes effectively, fueling conversation on refined knowledge-sharing practices.

- DeepSeek-R1 Dissected in Podcast: A user posted a podcast episode dissecting DeepSeek-R1 Paper Analysis that explores the model's reasoning and RL-based improvements.

- They emphasized how reinforcement learning shapes smaller model development, sparking others to explore scale strategies.

- NotebookLM Language Frustrations: Users faced interruptions when trying to switch from Romanian to English in NotebookLM, with a URL parameter attempt leading to an error.

- Community members sought official methods for language updates, but the confusion persisted.

- High-Caliber Test Questions: One participant introduced a consistent pattern for generating multiple-choice test items from designated chapters in NotebookLM.

- They credited the approach for enabling repetitive success, thus streamlining exam preparation.

- Audio Glitches & Document Cross-Checks: Members encountered audio generation hiccups, including a tendency to draw from entire PDFs when prompts lacked specifics, and some reported playback issues for downloaded files.

- They also debated whether NotebookLM surpasses ChatGPT in analyzing legal documents, noting how cross-references could unveil atypical clauses.

Modular (Mojo 🔥) Discord

-

Mojo Goes Async: In #general, a new forum thread about asynchronous code popped up, highlighting the community's interest in coroutines despite limited official wrappers.

- Members cheered the direct link share, encouraging further discussion on code patterns and usage examples.

- MAX Builds Page Revs Up: The MAX Builds page now showcases community-built packages, shining a spotlight on expansions for Mojo-based projects.

- Contributors are recognized on launch, and anyone can submit a recipe.yaml to the Modular community repo for inclusion.

- No Override? No Worries!: A #mojo conversation confirmed there's no @override decorator in Mojo, with one member clarifying that structs don't enable inheritance anyway.

- This approach means function redefinitions proceed without special syntax, prompting detail-oriented code reviews.

- Generators Spark Discussion: A question arose about Python-style generators, noting the gap in many compiled languages.

- Participants suggested an async proposal requiring explicit yield exposure, pushing for future enhancements in coroutines.

- Reassignments & iadd Debates: Developers tackled read-only references in function definitions, distinguishing mut from owned usage.

- They also explored how iadd underpins +=, clarifying compositional behaviors in Mojo.

LAION Discord

-

Bud-E’s Emotional TTS Debut: Emotional Open Source TTS is heading to Bud-E soon, featuring a shared audio clip that demonstrates the approach’s progress.

- Members praised the expressive vocal range, calling it “an exciting step in audio-based projects” and anticipating further expansions in Bud-E.

- Distortion Dissection with pydub: A researcher is comparing waveforms of an original audio file against a noise-heavy variant using pydub, focusing on small vs. extreme distortion levels.

- They shared images highlighting slight vs. strong noise differences, illustrating improvements in audio exploration.

- Collaborative Colab Notebook: Members proposed notebook sharing with a Google Colab notebook to collectively refine code around audio transformations.

- Participants expressed interest in replicating the approach and offered suggestions for further refinements.

- Widgets for Waveform Comparisons: A request for IPython audio widgets in Colab aims to streamline before-and-after distortion evaluations.

- Members brainstormed potential code snippets, emphasizing simpler playback controls and side-by-side comparisons in the shared notebook.

DSPy Discord

-

Repo Spam Sparks Commotion: Concerns about a repo being spammed surfaced, with speculation tying it to a coin problem and describing it as 'super lame.'

- Some participants dismissed the correlation, shifting focus toward stronger content management efforts.

- Framework Inspiration Over Imitation: A user urged avoiding strict replication of existing frameworks, highlighting use-case alignment for targeted solutions.

- They advocated shaping toolkits around practical objectives rather than relying on others’ approaches.

- Email-Triggered REACT Agent in DSPy: A developer wanted to run a REACT agent via an email trigger and eventually succeeded by using a webhook.

- They cited DSPy’s readiness for external libraries, underscoring flexible trigger-to-agent workflows.

- OpenAI Model Gains Favor, Groq Stays in Play: A contributor praised the OpenAI model for its broad coverage and practicality across tasks.

- Another contributor mentioned Groq compatibility, signaling interest in multiple hardware backends.

tinygrad (George Hotz) Discord

-

Clipping the Bounty: llvm_bf16_cast Gains Traction: A contributor confirmed the llvm_bf16_cast bounty status and raised a PR a few hours earlier, effectively resolving the rewrite request.

- Attention now turns to new tasks, ensuring a stream of tinygrad bounty hunts for further GPU optimizations.

- ILP Takes the Stage with Shapetracker: A member unveiled an ILP-based approach for the shapetracker add problem, though it struggles with speed and requires an external solver.

- Still, the structured handling of shapes could pave the way for more precise rewrite operations in tinygrad.

- George Hotz Backs ILP-based Rewrite Simplifications: George Hotz took interest in the ILP approach, asking if there’s a PR and hinting at possible integration in tinygrad rewrite rules.

- This move could push tinygrad to adopt linear programming for more productive transformations.

- Masks and Views Collide: Merging Tactics Emerge: Participants discussed merging masks and views, suggesting a bounded representation could boost mask capabilities.

- They acknowledged increased complexity yet remain open to the idea of fusing masks for extended shape flexibility.

LLM Agents (Berkeley MOOC) Discord

-

Certificates Timetable Uncertain, MOOC Still Open: A participant asked about course certificates and ways to track distribution, but no official timeline was provided, prompting curiosity.

- Another participant was unsure about LLM MOOC enrollment, and discovered that simply filling out the form confirms participation.

- Agents Anticipate Course Mastery: A participant noted that being an LLM agent automatically grants access to the course, highlighting a high bar for success.

- They suggested that any agent who passes gains major credibility, reflecting the advanced nature of the LLM training.

Gorilla LLM (Berkeley Function Calling) Discord

-

BFCLV3: The Great Tool Mystery: A question arose about whether BFCLV3 provides a system message that outlines how tools like get_flight_cost and get_creditcard_balance interconnect before book_flight is called.

- Members observed no metadata on tool dependencies in tasks labeled simple, parallel, multiple, and parallel_multiple, linking to GitHub source for further detail.

- LLM Testing Methodology Under the Microscope: Participants debated whether BFCLV3 LLMs are tested purely on tool descriptions or if underlying dependency relationships are considered.

- They noted that understanding these relationships is critical for research, as citing details from the BFCLV3 dataset can shed light on real-world function call usage.

Axolotl AI Discord

-

KTO-Liger Lock Up: The KTO loss was merged in the Liger-Kernel repository, promising a boost for model performance and new capabilities.

- Community members expressed excitement about KTO loss and its immediate benefits, anticipating stronger training stability and improved generalization.

- Office Hours Countdown: A reminder went out that Office Hours begin in 4 hours, aimed at providing an interactive forum for questions and design reviews.

- Attendees can join via this Discord event link, expecting a lively exchange around ongoing LLM projects.

MLOps @Chipro Discord

-

Toronto MLOps Meetup on Feb 18: A MLOps event is scheduled in Toronto on February 18 for senior engineers and data scientists, providing a space to exchange field insights.

- Organizers mentioned attendees should direct message for more details, emphasizing the focus on professional networking and knowledge sharing.

- Networking Buzz for Senior Tech Pros: This gathering centers on strengthening connections among senior engineers and data scientists, encouraging peer support and resource sharing.

- Participants see it as a beneficial way to deepen community ties, fostering collaboration across the local AI ecosystem.

Mozilla AI Discord

-

Local-First Hackathon Lands in SF: A Local-First X AI Hackathon is set to take place in San Francisco on Feb. 22, featuring projects that blend local computing with generative AI.

- Organizers emphasized practical collaboration among participants, referring them to an event thread for idea exchange and resource sharing.

- Community Brainstorm Gathers Steam: A dedicated discussion thread encourages participants to share experimental strategies and privacy-preserving machine learning frameworks.

- Planners hope to foster real-world results by inviting local computing enthusiasts to showcase prototypes and code jam sessions during the hackathon.

OpenInterpreter Discord

-

Deepspeek Ties for OpenInterpreter: A user asked about integrating Deepspeek into

>interpreter --os mode, hoping to benefit OpenInterpreter with voice-focused functions.- They mentioned the potential synergy between Deepspeek and OS-level interpreter capabilities but provided no further technical details or links.

- OS Mode May Expand for Voice Features: Participants speculated future OS mode enhancements to accommodate speech-based operations in OpenInterpreter.

- Though plans remain unclear, the integration of Deepspeek might unlock advanced voice support and some level of system interaction.

The Torchtune Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!