[AINews] Olympus has dropped (aka, Amazon Nova Micro|Lite|Pro|Premier|Canvas|Reel)

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Amazon Bedrock is all you need?

AI News for 12/2/2024-12/3/2024. We checked 7 subreddits, 433 Twitters and 29 Discords (198 channels, and 2914 messages) for you. Estimated reading time saved (at 200wpm): 340 minutes. You can now tag @smol_ai for AINews discussions!

we apologize for the repeated emails yesterday. It was a platform bug we had no control over but we will watch closely as obviously we have zero desire to spam you/harm our own deliverability. fortunately ainews is also founded on the idea that email length and quantity is near (but not quite) free.

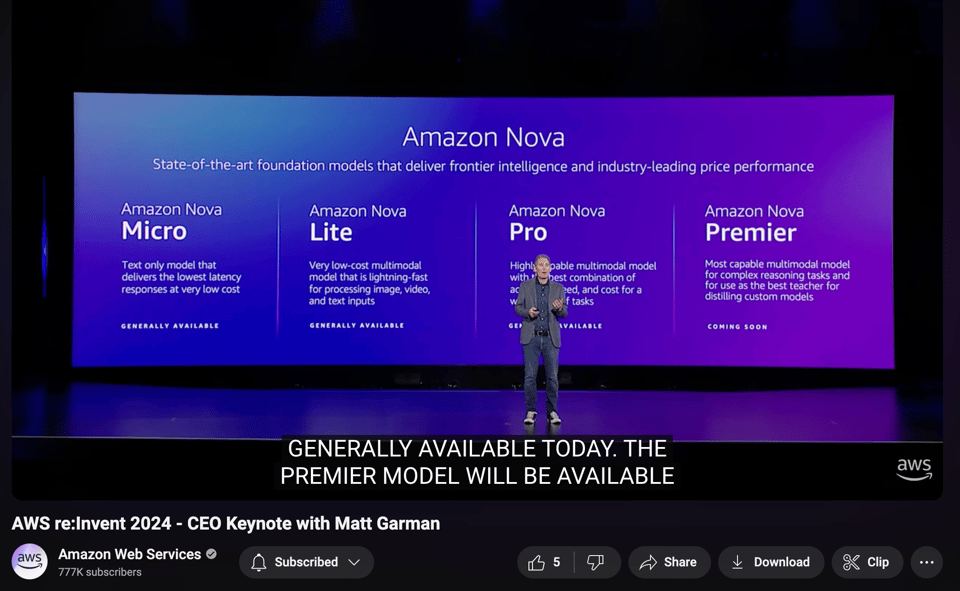

As widely rumored (as Olympus) in the past year, AWS Re:invent (full stream here) kicked off, ex-AWS and now Amazon CEO Andy Jassy had quite a bombshell to drop: their own, for real, actually competitive, not screwing around, set of multimodal foundation models, Amazon Nova (report, blog):

As an incredible (for a large tech player keynote) bonus, there is NO WAITLIST - Micro/Lite/Pro/Canvas/Reel are immediately Generally Available, with Premier and Speech-to-Speech and "Any-to-Any" coming next year.

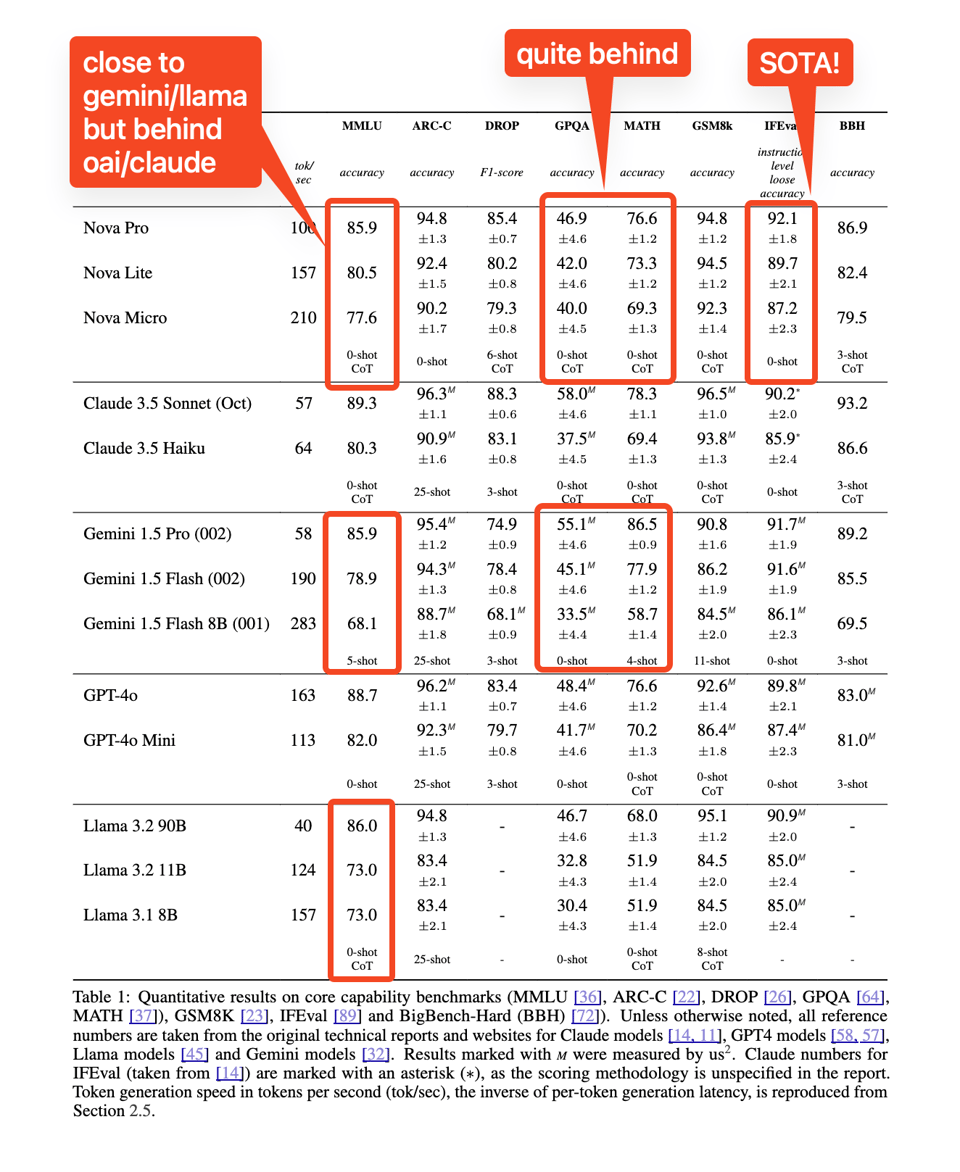

The LMArena elo is running now, but already this is a much more serious contender for real AI Engineer than the previous Titan generation. Not stressed in the keynote, but of high importance are both the high speed (2-4x faster tok/s vs Anthropic/OpenAI):

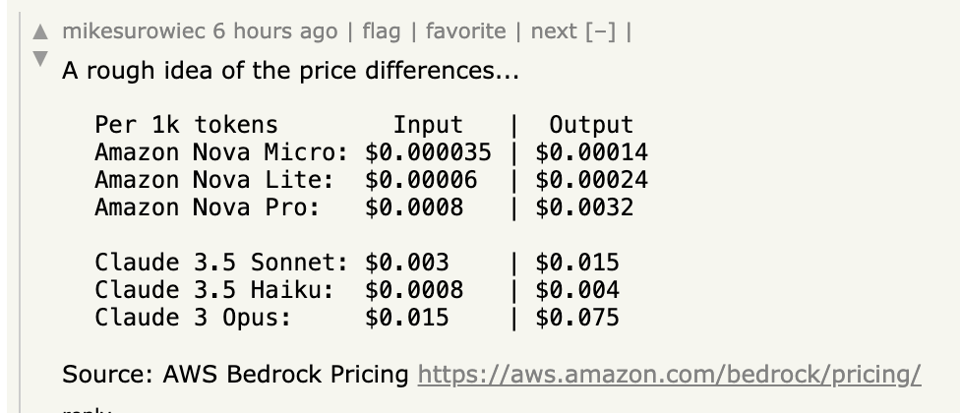

and low cost (25% - 400% cheaper than Claude equivalent):

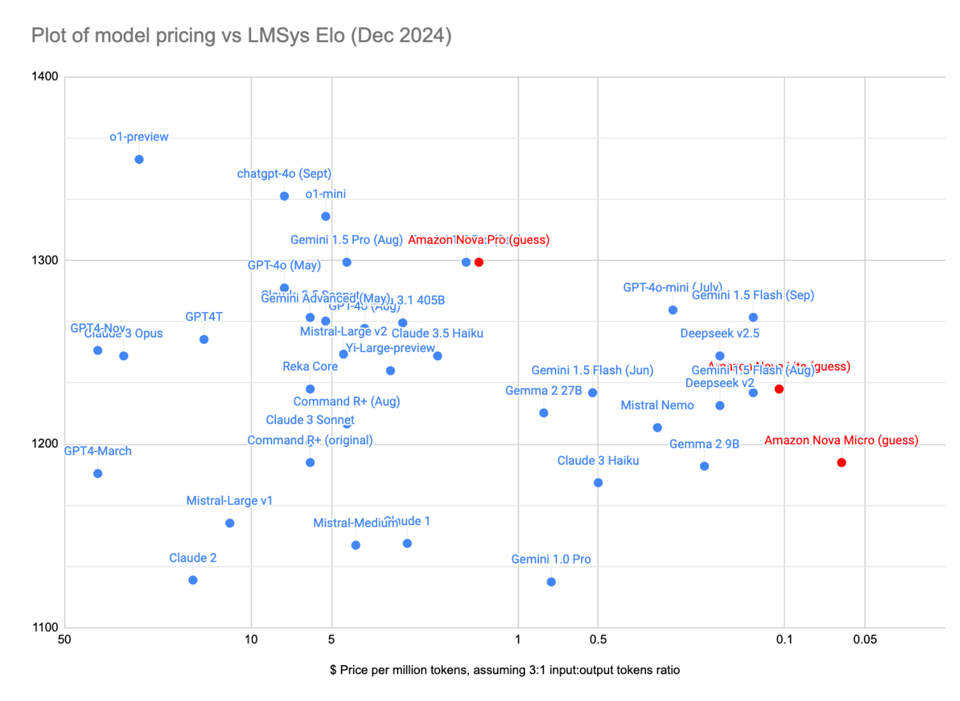

Imputing their Arena scores with their nearest neighbor equivalents, this offers near-frontier price-intelligence performance:

Of course, everyone is making comments about how this lines up with Amazon also investing $4bn in Anthropic, to which, the Everything Store CEO has one answer:

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

Theme 1. Amazon Nova Foundation Models: Release, Pricing, and Evaluation

- Amazon Nova Release Overview: @_philschmid provided a comprehensive overview of the new Amazon Nova models, highlighting their competitive pricing and benchmarks. Nova models are available via Amazon Bedrock, with multiple configurations including Micro, Lite, Pro, and Premier, and extend the context length up to 300k tokens for certain models.

- Pricing Strategy: Nova models undercut the prices of competitors like Google DeepMind Gemini Flash 8B, as noted by @_philschmid, with competitive input/output token pricing.

- Performance and Usage: According to @ArtificialAnlys, the Nova family models, particularly the Pro, outperform models like GPT-4o on specific benchmarks.

- Controversy over Evaluation and Benchmarking: A critical perspective came from @bindureddy, where despite the promising parameters, Nova was found to score below Llama-70B in LiveBench AI metrics. This reiterates the dynamic and competitive nature of model benchmarking.

Theme 2. CycleQD: Evolutionary Approach in Language Models

- CycleQD Methodology and Launch: The most significant discussion came from @SakanaAILabs, introducing CycleQD, a population-based model merging via Quality Diversity. The approach uses evolutionary computation to develop LLM agents with niche capabilities, aimed at lifelong learning. Another tweet by HARDMARU praised the ecological niche analogy as a compelling strategy for skill acquisition in AI systems.

Theme 3. AI Humor and Memes

- Funny Anecdotes and Humor: @arohan humorously shared a moment about forgetting to inform their partner about a six-month-old promotion. Meanwhile, @tom_doerr shared a meme about an "impossible" question, emphasizing the lighter sides of AI interaction.

- Social Media Humor: @teortaxesTex mentioned a tongue-in-cheek strategy regarding NFTs related to an "impossible" question.

Theme 4. Hugging Face Concerns and Community Response

- Storage Quotas and Open Models Controversy: @far__el and others aired grievances about Hugging Face storage limits, viewing it as a potential barrier for the AI open-source community. @mervenoyann clarified that Hugging Face remains generous with storage but emphasized their adaptability towards community-driven repositories.

- Emerging Competitors: @far__el announced OpenFace, an initiative for self-hosting AI models independent of Hugging Face, as a response to recent policy changes.

Theme 5. New and Noteworthy Model Innovations

- HunyuanVideo and Emoationally Attuned Models: @andrew_n_carr highlighted Tencent's HunyuanVideo, noting its open weights and contributions to the video-generation model landscape. Meanwhile, @reach_vb announced Indic-Parler TTS, an emotionally attuned text-to-speech model.

- Model Performance Updates: Discussions around GPT-4o performance updates, like a noticeable uptick in intelligence, were noted by @cognitivecompai.

Theme 6. AI Winter and Industry Outlook

- Concerns about AI's Future: @iScienceLuvr expressed concern about an impending AI winter, suggesting a slowdown or regression in AI advancement or investment enthusiasm, showcasing the fluctuating optimism in the AI industry.

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. HuggingFace Imposes 500GB Limit, Prioritizes Community Contributors

- Huggingface is not an unlimited model storage anymore: new limit is 500 Gb per free account (Score: 249, Comments: 76): HuggingFace introduced a 500GB storage limit for free-tier accounts, marking a shift from their previous unlimited storage policy. This change affects model storage capabilities for free users on the platform.

- Huggingface employee (VB) clarified this is a UI update for existing limits, not a new policy. The platform continues to offer storage and GPU grants for valuable community contributions like model quantization, datasets, and fine-tuning, while targeting misuse and spam.

- Community members reported significant storage usage, with one user at 8.61 TB/500 GB, and expressed concerns about the future availability of large models like LLaMA 65B (requiring ~130GB). Discussion centered around potential solutions including local storage and torrents.

- Users debated the business implications, noting that contributors already invest significant time and effort in creating quantized models for the community. The change prompted comparisons to YouTube's model where users pay to consume rather than upload content.

- Hugging Face added Text to SQL on all 250K+ Public Datasets - powered by Qwen 2.5 Coder 32B 🔥 (Score: 119, Comments: 11): Hugging Face has integrated Text-to-SQL capabilities across their 250,000+ public datasets using Qwen 2.5 Coder 32B model. This integration enables direct SQL query generation from natural language inputs across their entire public dataset collection.

- VB, GPU Poor at Hugging Face, confirmed the implementation uses DuckDB WASM for in-browser SQL query execution. The feature combines Qwen 2.5 32B Coder for query generation with browser-based execution capabilities.

- Users expressed enthusiasm about reducing the need to write SQL manually, particularly highlighting how this helps those less experienced with query writing.

- The announcement garnered positive reception with commenters appreciating the celebratory tone, including the use of confetti animations in the demonstration.

Theme 2. DeepSeek and Qwen Surpass Expectations, Challenge OpenAI's Position

- Open-weights AI models are BAD says OpenAI CEO Sam Altman. Because DeepSeek and Qwen 2.5? did what OpenAi supposed to do! (Score: 541, Comments: 216): DeepSeek and Qwen 2.5 open-source AI models from China demonstrate capabilities that rival closed-source alternatives, prompting Sam Altman to express concerns about open-weights models in a Fox News interview with Shannon Bream. The OpenAI CEO emphasizes the strategic importance of maintaining US leadership in AI development over China, while simultaneously facing criticism as Chinese open-source models achieve competitive performance levels.

- Community sentiment strongly criticizes Sam Altman and OpenAI's perceived hypocrisy, with users pointing out that their $157 billion valuation seems unjustified given the rising competition from open-source models. Many note that previous safety concerns about open-weights models appear unfounded.

- Users highlight that OpenAI's technological advantage or "moat" is rapidly diminishing, with Chinese models like DeepSeek and Qwen achieving competitive performance. Several comments suggest that OpenAI's main strength has been marketing rather than technological superiority.

- Multiple users reference OpenAI's deviation from its original open-source mission, citing early communications with Elon Musk and the company's current stance against open-weights models. The discussion suggests that OpenAI's business strategy relies heavily on maintaining closed-source advantages.

- Opensource is the way (Score: 60, Comments: 14): In a comparison of reasoning capabilities, open-source models (Deepseek R1 and QwQ) outperformed closed APIs (Claude Haiku and OpenAI) on complex reasoning questions, with R1 achieving the fastest correct solution in 25 seconds using Chain of Thought (CoT). A non-coding user found R1 and QwQ particularly helpful for coding tasks, while noting that Claude Sonnet's utility was limited by access restrictions and context length constraints in its free version.

- QwQ and GPT-4o usage comparison reveals that 4o has strict limits of 40 messages for regular users and 80 messages per 3 hours for Plus users, as detailed in OpenAI's FAQ.

- Users anticipate 2025 as the breakthrough year for open-source models, noting that QwQ is currently 5x less expensive than GPT-4o while delivering superior reasoning performance. Both QwQ and R1 are currently in preview/lite versions.

- The free version of GPT-4o is likely the 4o-mini variant, with users noting its performance limitations compared to QwQ based on benchmark results.

Theme 3. National Security Concerns Used to Push AI Regulation

- Open-Source AI = National Security: The Cry for Regulation Intensifies (Score: 114, Comments: 87): Media outlets and policymakers continue to link open-source AI development with national security threats, pushing for increased regulation and oversight. The narrative equates unrestricted AI development with potential security risks, though specific policy proposals remain undefined.

- Chinese AI models like Yi and Qwen are reportedly ahead of Western open-source efforts, with users noting they're not based on Llama. Multiple commenters point out that regulation of US open-source models would primarily benefit Chinese AI development.

- The discussion draws parallels between current AI regulation fears and historical resistance to open-source software in the early 2000s, particularly referencing the Microsoft/SCO situation. Users argue that like Linux, open-source AI will likely accelerate industry innovation.

- Users criticize the media narrative as fear-mongering aimed at establishing AI monopolies through regulation. Many reference Fox News' credibility on technology issues and suggest this is driven by corporate interests rather than legitimate security concerns.

Theme 4. New Tools: Open-WebUI Enhanced with Advanced Features

- 🧙♂️ Supercharged Open-WebUI: My Magical Toolkit for ArXiv, ImageGen, and AI Planning! 🔮 (Score: 97, Comments: 11): The author developed several tools for Open-WebUI, including an arXiv Search tool, Hugging Face Image Generator, and various function pipes like a Planner Agent using Monte Carlo Tree Search and Multi Model Conversations supporting up to 5 different AI models. Running on a setup with R7 5800X, 16GB DDR4, and RX6900XT, the author's AI stack includes Ollama, Open-webUI, OpenedAI-tts, ComfyUI, n8n, quadrant, and AnythingLLM, primarily using 8B Q6 or 14B Q4 models with 16k context, with code available at open-webui-tools and open-webui.

- Users suggested using Python 3.12 to enhance performance, though the developer indicated time constraints for implementation.

- Interest was expressed in the Monte Carlo Tree Search (MCTS) implementation for research summarization, though no specific details or papers were provided in the discussion.

- I built this tool to compare LLMs (Score: 297, Comments: 55): Model comparison tool mentioned without any specific details or functionality, making it impossible to provide a meaningful technical summary of the benchmarking capabilities or implementation details. No additional context provided about the actual tool or its features.

- Users suggested adding smaller language models to the comparison tool, specifically mentioning models like Gemma 2 2B, Llama 3.2 1B/3B, Qwen 2.5 1.5B/3B, and others for on-device applications like PocketPal.

- A significant discussion arose about token count normalization, with detailed analysis showing that Claude-3.5-Sonnet uses approximately twice the tokens compared to GPT-4o for the same input, affecting both cost calculations and context length comparisons.

- An important distinction was made between "Open Source" and "Open Weight" models, noting that the listed self-hostable models are technically Open Weight since their training data isn't publicly available.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. ChatGPT Used to Win $1180 Small Claims Court Case Against Landlord

- UPDATE: ChatGPT allowed me to sue my landlord without needing legal representation - AND I WON! (Score: 1028, Comments: 38): A tenant won a court case against Dr. Joe Prisinzano, co-principal of Jericho High School, who illegally charged a $2,175 security deposit (exceeding one month's $1,450 rent) and failed to repair a broken window during winter, with ChatGPT helping identify a 2019 law violation and prepare legal defense against a retaliatory $5,000 counterclaim. The court awarded the tenant $1,180 and dismissed the counterclaim, though Prisinzano threatened to appeal and pursue defamation charges, while the case gained viral attention through a TikTok video with over 1 million views by Sabrina Ramonov viral TikTok.

- The tenant argues this is a matter of public interest since Joe Prisinzano is an educational leader making $250,000 annually at one of the top US public high schools, with his unethical landlord behavior contradicting his leadership position and warranting public awareness.

- Multiple users shared similar experiences using ChatGPT for legal assistance, though cautioning against sole reliance on AI and recommending 1-hour legal consultations. One user noted that in New York, tenants can claim double damages for illegal security deposits and triple damages for undocumented withholding.

- The original judge ruled conservatively on the tenant's $4,000 punitive damages request, granting only the illegal deposit return and a 7% rent abatement for the broken window period, despite the landlord's written admission of knowingly breaking the law.

Theme 2. HunyuanVideo Claims State-of-Art Video Generation, Beats Gen3 & Luma

- SANA, NVidia Image Generation model is finally out (Score: 136, Comments: 78): SANA, NVIDIA's image generation model, has been released publicly. No additional details were provided in the post body about model capabilities, architecture, or source code location.

- License restrictions are significant - model can only be used non-commercially, must run on NVIDIA processors, requires NSFW filtering, and gives NVIDIA commercial rights to derivative works. The model is available on HuggingFace.

- Technical requirements include 32GB VRAM for training both 0.6B and 1.6B models, with inference requiring 9GB and 12GB VRAM respectively. A future quantized version promises to require less than 8GB for inference.

- The model uses a decoder-only LLM (possibly Gemma 2B) as text encoder instead of T5, and while it's noted to be extremely fast, users report image quality issues and text generation capabilities inferior to Flux. A demo is available at nv-sana.mit.edu.

- Tencent Hunyuan-Video : Beats Gen3 & Luma for text-video Generation. (Score: 37, Comments: 15): Tencent released Hunyuan-video, an open-source text-to-video model that claims to outperform closed-source competitors Gen3 and Luma1.6 in testing. The model includes audio generation capabilities and can be previewed in their demo video.

- The model is available on GitHub and Hugging Face, with an official project page at Tencent Hunyuan.

- System requirements include 60GB GPU memory for 720x1280 resolution with 129 frames, or 45GB for 544x960 resolution with 129 frames, prompting humorous comments about running it on consumer GPUs.

- ComfyUI integration is listed as a future development item in the project's roadmap, suggesting expanded accessibility is planned.

Theme 3. ChatGPT Parent OpenAI Considers Adding Advertisements

- We definitely should be concerned that 2025 starts with W T F (Score: 190, Comments: 157): OpenAI's plans to implement advertising in ChatGPT by 2025 sparked concerns about the future direction of AI monetization. The community expressed skepticism about this development, questioning its implications for user experience and the broader impact on AI business models.

- Data monetization concerns dominate discussions, with users predicting evolution from "promoted suggestions" for free users to eventual sponsored content across all tiers. Community expects integration of targeted advertising based on conversation data, similar to Prime Video's advertising model.

- The concept of "enshittification" emerged as a key theme, with users anticipating a shift from user-focused service to revenue maximization. Multiple commenters pointed to Claude and local LLMs (like Llama, QWQ, and Qwen) as potential alternatives.

- Users expressed concern about AI-generated advertising's potential for subtle manipulation, noting that traditional ad blockers may be ineffective against AI-integrated promotional content. Discussion highlighted how ChatGPT's conversational nature could make sponsored content particularly difficult to identify or regulate.

- Ads might be coming to ChatGPT — despite Sam Altman not being a fan (Score: 70, Comments: 108): OpenAI may introduce advertisements into ChatGPT, despite CEO Sam Altman's previously stated aversion to ad-based revenue models. The title alone suggests a potential shift in OpenAI's monetization strategy, though no specific timeline or implementation details are provided.

- User reactions are overwhelmingly negative, with many stating they would cancel subscriptions immediately if ads are implemented. Multiple users draw parallels to streaming services like Prime Video and Disney+ that introduced ads even to paid tiers.

- The original article appears to be clickbait, as noted by users pointing out that OpenAI has "no active plans" to add ads, with some suggesting this is merely testing public reaction. The top comment clarifying this received 177 upvotes.

- Users express concerns about ad-based incentives compromising ChatGPT's integrity, comparing it to the difference between trusted friend recommendations versus commissioned salespeople. Several comments highlight how ads typically expand from free tiers to paid services over time, citing cable TV and streaming platforms as examples.

Theme 4. Vodafone's AI Commercial Shows New Benchmark in AI Video Production

- Absolutely incredible AI ad by Vodafone. Much much better than Coca-cola's attempt. (Score: 163, Comments: 63): Vodafone created an AI-generated commercial that received positive reception from viewers, with commenters specifically comparing it favorably against Coca-Cola's previous AI advertising attempt. No additional details about the commercial's content or creation process were provided in the post.

- Viewer reactions were largely negative, criticizing the ad's lack of coherence and overuse of stereotypical shots. Multiple users pointed out the commercial's poor watchability without sound and overwhelming number of disconnected scenes.

- The commercial's cost efficiency was highlighted, estimated at "one tenth of an ordinary commercial." Users debated whether the technical achievement outweighed its artistic merit, with the video editor receiving more praise than the AI itself.

- Discussion centered on the industry implications, particularly regarding the potential displacement of actors and traditional production crews. A Campaign Live article about the commercial's creation was referenced but remained paywalled.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1: New Optimizers and Training Techniques Revolutionize AI

- DeMo Decentralizes Model Training: Nous Research unveils the DeMo optimizer, enabling decentralized pre-training of 15B models via Nous DisTrO with performance matching centralized methods. The live run showcases its efficiency and can be watched here.

- Axolotl Integrates ADOPT Optimizer: Axolotl AI incorporates the latest ADOPT optimizer, offering optimal convergence with any beta value and enhancing model training efficiency. Engineers are invited to experiment with these enhancements in the updated codebase.

- Pydantic AI Bridges LLM Integration: The launch of Pydantic AI provides seamless integration with LLMs, enhancing AI applications. It also integrates with DSPy's DSLModel, streamlining development workflows for AI engineers.

Theme 2: New AI Models Stir Excitement and Debate

- Amazon's Nova Takes Aim at GPT-4o: Amazon releases Nova foundation models via Bedrock, boasting competitive capabilities and cost-effective pricing. Nova supports day 0 integration and expands the AI model landscape with affordable options.

- Hunyuan Video Sets Text-to-Video Bar High: Tencent's Hunyuan Video launches as a leading open-source text-to-video model, impressing users despite high resource demands. Initial feedback is positive, with anticipation for future efficiency optimizations.

- Sana Model's Efficiency Under Scrutiny: The Stability.ai community debates the new Sana model, questioning its practical advantages over existing models like Flux. Some suggest that using prior models might yield similar or better results.

Theme 3: AI Tools Face Performance and Update Challenges

- Cursor IDE's Lag Drives Users to Windsurf: Frustrated with Cursor IDE's lag in Next.js projects, users return to Windsurf due to Cursor's persistent performance issues. Cursor's syntax highlighting and chat functionality also receive criticism for causing "visual discomfort" and hindering usability.

- OpenInterpreter Revamps for Speed and Smarts: A complete rewrite of OpenInterpreter's development branch results in a "lighter, faster, and smarter" tool. The new

--serveoption introduces an OpenAI-compatible REST server, enhancing accessibility and usability. - Unsloth AI Fine-Tuning Hits Snags: Users struggle with LoRA fine-tuning on Llama 3.2 and face xformers compatibility issues, leading to shared community fixes. Challenges include OOM errors and discrepancies in sequence length configurations during training.

Theme 4: Community Explores AI Methods and Frameworks

- Function Calling vs MCP: Clash of AI State Managers: Nous Research debates the merits of function calling versus the Model Context Protocol (MCP) for managing AI model state and actions, highlighting confusion and the need for clearer guidelines on their respective applications.

- ReAct Paradigm's Effectiveness Depends on Implementation: LLM Agents course participants emphasize that ReAct's success depends on implementation specifics like prompt design and state management. Benchmarks should reflect these details due to "fuzzy definitions" in the AI field.

- DSPy and Pydantic AI Enhance Developer Workflows: DSPy integrates Pydantic AI, allowing efficient development with DSLModel. Live demos showcase advanced AI development techniques, sparking excitement for implementing Pydantic features in projects.

Theme 5: AI Community Engages in Opportunities and Events

- Ex-Googlers Launch New Venture, Invite Collaborators: Raiza departs Google after 5.5 years to start a new company with former NotebookLM team members, inviting others to join via hello@raiza.ai. They celebrate significant achievements and plan to build innovative products with the community.

- Sierra AI Scouts Talent at Info Session: Sierra AI hosts an exclusive info session unveiling their Agent OS and Agent SDK, while seeking talented developers to join their team. Participants can RSVP here to secure their spot for this opportunity.

- Multi-Agent Meetup Highlights Collaborative Innovation: The upcoming Multi-Agent Meetup at GitHub HQ features experts discussing automating tasks with CrewAI and evaluating agents with Arize AI, fostering collaboration in agentic retrieval applications.

PART 1: High level Discord summaries

Nous Research AI Discord

- Decentralized Pre-training with DisTrO: Nous has initiated a decentralized pre-training of a 15B parameter language model using Nous DisTrO and hardware from partners like Oracle and Lambda Labs, showcasing a loss curve that matches or exceeds traditional centralized training with AdamW.

- The live run can be watched here, and the accompanying DeMo paper and code will be announced soon.

- DeMo Optimizer Release: The DeMo optimizer enables training neural networks in parallel by synchronizing only minimal model states during each optimization step, enhancing convergence while reducing inter-accelerator communication.

- Details about this approach are available in the DeMo paper, and the source code can be accessed on GitHub.

- DisTrO Training Update: The ongoing DisTrO training run is nearing completion, with specific details on hardware and user contributions expected by the end of the week.

- This run serves primarily as a test, and there may not be immediate public registries or tutorials available for users.

- Function Calling vs MCP in AI Models: Function calling is utilized to manage state and actions within AI models, while MCP offers alternative advantages for implementing complex functionalities.

- There is some confusion distinguishing MCP from function calling, underscoring the need for clearer guidelines on their respective applications.

- Using Smaller Models for Specific Tasks: Smaller AI models can outperform larger ones in certain creative tasks, offering benefits such as faster processing and reduced resource usage.

- A balanced approach is suggested where smaller models handle state management while larger models are reserved for more intensive tasks like storytelling.

Eleuther Discord

- JAX Adoption Accelerates in Major AI Labs: Members revealed that Anthropic, DeepMind, and other leading AI labs are increasingly utilizing JAX for their models, though the extent of its primary usage varies across organizations.

- There is ongoing debate about JAX's dominance over PyTorch, with calls for greater transparency regarding industry adoption rates and practices.

- Vendor Lock-in Raises Concerns in Academic Curricula: Discussions highlighted the vendor lock-in issue in academia, where tech companies influence university programs by supplying resources for specific frameworks like PyTorch and JAX.

- Opinions are split; some see benefits in established partnerships, while others worry about limiting students' exposure to a broader range of tools and frameworks.

- DeMo Optimizer Enhances Large-Scale Model Training: The DeMo optimizer introduces a technique to minimize inter-accelerator communication by decoupling momentum updates, which leads to better convergence without full synchronization on high-speed networks.

- Its minimalist design reduces the optimizer state size by 4 bytes per parameter, making it advantageous for training extensive models.

- Externalizing Evals via Hugging Face Proposed: A proposal was made to allow evals to be externally loadable through Hugging Face, similar to how datasets and models are integrated.

- This approach could simplify the loading process for datasets and associated eval YAML files, though concerns about visibility and versioning need to be addressed to ensure reproducibility.

- wall_clock_breakdown Configures Detailed Logging: Members identified that the wall_clock_breakdown configuration option enables detailed logging messages, including optimizer timing metrics like optimizer_allgather and fwd_microstep.

- Clarifications confirmed that enabling this option is essential for generating in-depth performance logs, aiding in performance diagnostics and optimization.

Modular (Mojo 🔥) Discord

- Mojo Socket Communication Delays: The implementation of socket communication in Mojo is postponed due to pending language features, with plans for a standard library that supports swappable network backends like POSIX sockets.

- A major rewrite is scheduled to ensure proper integration once these language features are available.

- Mojo's SIMD Support Simplifies Programming: Discussion highlighted Mojo's SIMD support as simplifying SIMD programming compared to C/C++ intrinsics, which are often chaotic.

- The goal is to map more intrinsics to the standard library in future updates to minimize direct usage.

- High-Performance File Server Project in Mojo: A project aiming to develop a high-performance file server for a game is targeting a 30% higher packets per second rate than Nginx.

- Currently, the project utilizes external calls for networking until the delayed socket communication features become available.

- Reference Trait Proposal for Mojo: A proposal for a

Referencetrait in Mojo aims to enhance the management of mutable and readable references within Mojo code.- This approach is expected to improve borrow-checking and reduce confusion regarding mutability in function arguments.

- Magic Package Distribution Launch: Magic Package Distribution is in development with an early access preview rolling out soon, enabling community members to distribute packages through Magic.

- The team is seeking testers to refine the feature, inviting members to commit by reacting with 🔍 for package reviewing or 🧪 for installation.

aider (Paul Gauthier) Discord

- OpenRouter Performance Lags Behind Direct API: Benchmark analysis revealed that models accessed via OpenRouter deliver inferior performance compared to those accessed directly through the API, sparking discussions on optimization strategies.

- Users are collaboratively exploring solutions to enhance OpenRouter's efficiency, indicating a community-driven effort to resolve the discrepancies.

- Aider Rolls Out Enhanced Features for Developers: Aider's latest

--watch-filesfeature streamlines AI instruction integration into coding workflows, alongside functionalities like/save,/add, and context modification, as detailed in their options reference.- These updates have been well-received, with users noting improved transparency and a more informed programming experience.

- Amazon Unveils Six New Foundation Models at re:Invent: During re:Invent, Amazon announced six new foundation models, including Micro, Lite, and Canvas, emphasizing their multimodal capabilities and competitive pricing.

- These models will be exclusively available via Amazon Bedrock and are positioned as cost-effective alternatives to other US frontier models.

- Enhancing Aider's Context with Model Context Protocol: Users have been integrating the Model Context Protocol (MCP) to improve Aider's context management capabilities, particularly in code-related scenarios, as discussed in this video.

- Tools like IndyDevDan's agent and Crawl4AI are being utilized to create optimized documentation for seamless LLM integration.

- Resolving Aider Update Challenges with Python 3.12: Updating Aider to version 0.66.0 encountered issues, including command failures during package installation, which were resolved by explicitly invoking the Python 3.12 interpreter as outlined in the pipx installation guide.

- This approach has enabled users to successfully upgrade and leverage the latest features without recurring issues.

Cursor IDE Discord

- Cursor Lags in Next.js Projects: Users reported that Cursor experiences significant lag when developing with Next.js on medium to large projects, necessitating frequent 'Reload Window' commands.

- Performance varies based on system RAM, with those on 16GB experiencing more lag compared to 32GB setups, raising concerns about Cursor's performance consistency.

- Windsurf Outperforms Cursor Reliability: Some users reverted to Windsurf due to the ineffective repeat of fixes in the latest Cursor updates.

- They highlighted that Windsurf's agent successfully edits multiple files without losing comments, a functionality currently lacking in Cursor.

- Feature Requests for Cursor Agent: Members are requesting the addition of the @web feature in the Cursor Agent to enhance real-time information access.

- Issues with the agent not recognizing file changes were cited, leading to frustrations regarding its reliability.

- Cursor's Syntax Highlighting Drawbacks: First-time users reported that Cursor's syntax highlighting causes visual discomfort and hinders usability.

- Complaints include the malfunctioning of various VS Code addons within Cursor, detracting from the overall user experience.

- Post-Update Chat Issues in Cursor: After the recent update, users experienced problems with Cursor's chat functionality, including model hallucinations and inconsistent performance.

- Feedback indicates a decline in model quality, making coding tasks more challenging and frustrating.

Perplexity AI Discord

- Perplexity AI Faces Performance Slowdowns: Several users are encountering persistent slowdowns and infinite loading issues while using Perplexity AI's features, indicating potential scaling issues.

- These performance problems have also been observed on other platforms, leading users to consider transitioning to API services for a more stable experience.

- Users Explore Image Generation Capabilities: Discussions on image generation tools involved sharing prompts that yield unexpected, often creative results.

- Users experimented with quantum-themed prompts to generate unique visual outputs, demonstrating the versatile applications of image generation models.

- Amazon Nova Compared to ChatGPT and Claude: There were insightful comparisons of AI models, particularly between Amazon Nova and platforms like ChatGPT and Claude.

- Users evaluated the effectiveness of various foundational models based on specific tasks and their integration with tools like Perplexity.

- Issues with Google Gemini and Drive Integration: A user highlighted inconsistent access to Google Drive documents via Google Gemini, questioning its reliability.

- Concerns were raised about whether advanced features are restricted to paid versions, prompting users to seek practical demonstrations.

- API Error Responses and User Workarounds: Users reported intermittent API errors such as

unable to complete request, leading to confusion.- A temporary workaround involves adding a prefix to user prompts, mitigating error occurrences while awaiting a resolution.

Unsloth AI (Daniel Han) Discord

- LoRA Finetuning with Llama 3.2: Users reported challenges in fine-tuning Llama 3.2 using LoRA, particularly in transitioning from tokenization to processor management, with suggestions to modify Colab notebooks for successful execution.

- Troubleshooting steps included addressing xformers installation issues and ensuring compatibility with current PyTorch and CUDA versions.

- Model Compatibility and xformers Issues: Several users encountered compatibility problems with xformers related to their existing PyTorch and CUDA environments, resulting in runtime errors.

- Recommendations involved reinstalling xformers with matching versions, as well as verifying dependencies to resolve these issues.

- QWen2 VL 7B Fine-Tuning with LLaVA-CoT: A member fine-tuned QWen2 VL 7B using the LLaVA-CoT dataset and released the training script and dataset for community use.

- The resultant model features 8.29B parameters and utilizes BF16 tensor type, with the training script accessible here.

- GGUF Conversion Challenges in Unsloth Models: Users faced issues saving models to GGUF, encountering runtime errors about missing files like 'llama.cpp/llama-quantize' during the conversion process.

- Attempts to resolve these issues by restarting Colab were unsuccessful, suggesting potential recent changes in the underlying library.

- Partially Trainable Embeddings in Training Models: A user discussed creating partially trainable embeddings but faced challenges with the forward function not being called during training.

- Community feedback indicated that the model might be directly accessing weights instead of the modified head, necessitating deeper integration.

Notebook LM Discord Discord

- Raiza Exits Google to Launch New Venture: Raiza announced their departure from Google after 5.5 years, highlighting significant achievements with the NotebookLM team and the development of a product used by millions.

- Raiza is initiating a new company with two former NotebookLM members, inviting collaborators to join via werebuilding.ai and contact at hello@raiza.ai.

- Creative Uses of NotebookLM for Scripts and Podcasts: Users detailed leveraging NotebookLM for scriptwriting, developing detailed camera and lighting setups, and integrating scripts into video projects.

- Another user successfully generates long podcast episodes by outlining content chapter by chapter, utilizing Eleven Labs for audio and visuals in documentary-style projects.

- Enhancing Document Management with PDF OCR Tools: Discussions highlighted the use of PDF24 for applying OCR to scanned documents, transforming them into searchable PDFs with robust security protocols.

- PDF24 is recommended for converting images and photos into searchable formats, streamlining document usability without requiring installation or registration.

- Feature Requests and Integration Challenges in NotebookLM: Users expressed a need for unlimited audio generations in NotebookLM, suggesting potential subscription models to increase daily limits beyond the current 20.

- Challenges with processing long PDFs were noted, with speculation that Gemini 1.5 Pro may offer better capabilities, alongside frustrations about inconsistent Google Drive integration.

- Advancements and Issues in Multilingual AI Support: There were inquiries about changing language settings in NotebookLM to support outputs in languages other than English, with current guidance pointing to altering Google account settings.

- Users reported varying success rates in AI-generated language outputs, particularly with accents like Scottish or Polish, indicating areas for improvement in multilingual capabilities.

OpenAI Discord

- Italy's AI Regulation Act Enforces Data Removal: Italy announced plans to ban AI platforms like OpenAI unless users can request the removal of their data, sparking debates on regulatory effectiveness.

- Concerns were raised about the ineffectiveness of geolocation bans, with discussions on potential user workarounds to bypass these restrictions.

- ChatGPT Plus Plan Suffers Feature Malfunctions: Users reported that after subscribing to the ChatGPT Plus plan for $20, features such as image generation and file reading are not functioning correctly.

- Additionally, several members noted that the responses they receive appear outdated, with the issue persisting for over a week.

- GPT Faces Functionality Issues in Billing Compilation: A user highlighted problems with a GPT designed to compile billing hours, mentioning that it forgets entries and struggles to produce an XLS-compatible list.

- Humorous speculation arose questioning if the GPT is bored with the work, reflecting user frustration with the tool's reliability.

- Leveraging Custom Instructions to Tailor ChatGPT: Members are utilizing custom instructions to adjust ChatGPT's writing style, distinguishing this method from creating new GPTs.

- Providing example texts was recommended to help ChatGPT adapt its output, enhancing alignment with user-specific storytelling preferences.

- Enhancing Prompt Engineering Skills Among AI Engineers: AI engineers expressed interest in accessing free or low-cost resources to improve their prompt engineering for developing custom GPTs with OpenAI ChatGPT.

- Discussions emphasized the importance of refining interaction techniques to maximize the effectiveness and capabilities of ChatGPT.

OpenRouter (Alex Atallah) Discord

- Model removals and price reductions: Two models,

nousresearch/hermes-3-llama-3.1-405bandliquid/lfm-40b, have been removed from availability, prompting users to add credits to maintain their API requests.- Significant price reductions have been made: nousresearch/hermes-3-llama-3.1-405b decreased from 4.5 to 0.9 per million tokens and liquid/lfm-40b from 1 to 0.15, offering more affordable alternatives post-removal.

- Hermes 405B Model Removal: Hermes 405B is no longer available, signaling the model's phase-out, with users debating the cost of alternatives and favoring existing free models.

- The removal raised concerns over model availability, as some users consider purchasing increasingly priced models, while others stick with free options.

- OpenRouter API Key Management: OpenRouter now supports creation and management of API keys, allowing users to set and adjust credit limits per key without automatic resets.

- Users maintain control over their application access by managing key usage manually, ensuring secure and regulated API access.

- Gemini Flash Errors: Users encountered transient 525 Cloudflare errors while accessing Gemini Flash, which quickly resolved themselves.

- The model's instability was noted, with recommendations to verify its functionality via OpenRouter's chat interface.

- BYOK Access Update: The team announced that BYOK (Bring Your Own Key) access will soon be available to all users, though the private beta phase is currently paused.

- Ongoing adjustments are being made to address existing issues before rolling out the feature widely.

Stability.ai (Stable Diffusion) Discord

- Mastering LORA Creation: Users shared strategies for creating effective LORAs, such as using a background LORA made from images and refining outputs with software like Photoshop or Krita.

- One member advised refining generated images before training to ensure higher quality outcomes.

- Stable Diffusion Setup Tips: Multiple users sought guidance on setting up Stable Diffusion, with recommendations including using ComfyUI - Getting Started and various cloud-based options.

- Members emphasized the importance of deciding between running locally or utilizing cloud GPUs, recommending Vast.ai for GPU rentals.

- Scammer Alert Strategies: Concerns about scammers in the server led users to share warnings and advised reporting suspicious accounts to Discord.

- Users discussed recognizing phishing attempts and how certain accounts impersonate support to deceive members.

- Comparing GPU Performance: The conversation highlighted differences in GPU performance, with users comparing experiences across different models and emphasizing the importance of memory and speed.

- A user noted that cheaper cloud GPU options may offer better overall performance compared to local setups due to electricity costs.

- Evaluating the Sana Model: Members discussed a new model called Sana, noting its efficiency and quality compared to prior versions, with some skepticism about its commercial usage.

- It was suggested that for everyday purposes, using Flux or previous models might yield similar or better results.

Latent Space Discord

- Pydantic AI Integrates with LLMs: The new Agent Framework from Pydantic is now live, aiming to seamlessly integrate with LLMs to enable innovative AI applications.

- However, some users express skepticism about its differentiation from existing frameworks like LangChain, suggesting it closely resembles current solutions.

- Bolt Rockets to $8M ARR in 2 Months: Bolt has surpassed $8M ARR within just 2 months as a Claude Wrapper, featuring guests like @ericsimons40 and @itamar_mar.

- The podcast episode delves into Bolt's growth strategies and includes discussions on code agent engineering, highlighting collaborations with @QodoAI and the debut of StackBlitz.

- Tencent Launches Hunyuan Video as Open-Source Leader: Tencent has released Hunyuan Video, establishing it as a premier open-source text-to-video technology known for its high quality.

- Initial user feedback points out the high resource demands for rendering, though there's optimism about forthcoming efficiency enhancements.

- Amazon Unveils Nova Foundation Model: Amazon announced its new foundation model, Nova, positioned to rival advanced models like GPT-4o.

- Early evaluations indicate promise, but user experiences remain mixed, with some not finding it as impressive as Amazon's previous model releases.

- ChatGPT Faces Name Filtering Glitch: ChatGPT is experiencing an issue where specific names, such as David Mayer, trigger response abortions due to a system glitch.

- This problem does not impact the OpenAI API and has sparked discussions on how name associations may affect AI behavior.

Cohere Discord

- Rerank 3.5 Enhances Multilingual Search: Cohere has launched Rerank 3.5, offering enhanced reasoning capabilities and support for over 100 languages such as Arabic, French, Japanese, and Korean via the

rerank-v3.5API.- Users have expressed enthusiasm about the improved performance and its compatibility with various data formats, including multimedia content.

- Cohere Announces API Deprecations: Cohere has announced the deprecation of older models, providing details on deprecated endpoints and recommended replacements as part of their model lifecycle management.

- This move affects applications reliant on legacy models, prompting developers to update their integrations accordingly.

- Harmony Project Launches NLP Harmonisation Tools: The Harmony project introduces NLP tools for harmonizing questionnaire items and metadata, enabling researchers to compare questionnaire items across studies.

- Based at UCL, the project is collaborating with multiple universities and professionals to refine its doc retrieval capabilities.

- API Key Delays Trigger TooManyRequestsError: Users have reported encountering TooManyRequestsError despite upgrading to production API keys, attributing the issue to potential API key setup delays.

- Support has been advised to contact support@cohere.com for assistance, with indications that setup delays are typically minimal.

- Stripe Integration Causes Payment Issues: Some users have experienced credit card payment issues with Cohere's platform, despite previous successful transactions.

- Members suggest the problem may lie with the user's bank and recommend reaching out to support@cohere.com, as payments are processed via Stripe.

GPU MODE Discord

- Xmma and Nvjet Outperform Cutlass in Select Benchmarks: Members evaluated Xmma kernels, noting nvjet catching up on smaller sizes, with a custom kernel running 1.5% faster for N=8192 compared to cutlass.

- nvjet generally competes well against cutlass, with some specific instances where cutlass may slightly outperform.

- Triton MLIR Dialects Documentation Critique: A member inquired about documentation for Triton MLIR Dialects, noting much of the TritonOps documentation is minimal and lacks comprehensive examples.

- Another pointed out the programming guide on GitHub is minimal and unfinished, aiming to aid developers working with the Triton language.

- CUDARC Crate Enables Manual CUDA Bindings: The CUDARC crate offers bindings for the CUDA API, currently supporting only matrix multiplication due to manual implementation.

- Testing revealed that optimizing the matmul function consumes most development time.

- GPU Warp Scheduler and FP32 Core Distribution Insights: A member explained that a warp comprises 32 threads utilizing 32 FP32 cores in parallel, resulting in 128 FP32 cores per SM.

- Discrepancies were noted between A100's 64 FP32 cores for its 4 warp schedulers, versus RTX 30xx and 40xx series with 128 FP32 cores.

- KernelBench Launch and Leaderboard Integrity Issues: KernelBench (Preview) was introduced by @anneouyang to evaluate LLM-generated GPU kernels for neural network optimization.

- Users expressed concerns about incomplete fastest kernels on the leaderboard, referencing an incomplete kernel solution.

LlamaIndex Discord

- NVIDIA Financial Insights Unveiled: @pyquantnews showcases how NVIDIA's financial statements can be utilized for both simple revenue lookups and complex business risk analyses, using practical code examples of setting up LlamaIndex.

- This method enhances business intelligence by leveraging structured financial data.

- Streamlining LlamaCloud with Google Drive: @ravithejads outlines a step-by-step process for configuring a LlamaCloud pipeline using Google Drive as a data source, including chunking and embedding parameters. Full setup instructions are available here.

- This guide assists developers in integrating document indexing seamlessly with LlamaIndex.

- Amazon Launches Nova Foundation Models: Amazon introduced Nova, a set of foundation models offering more affordable pricing compared to competitors and providing day 0 support. Install Nova with

pip install llama-index-llms-bedrock-converseand view examples here.- The release of Nova expands the AI model landscape with cost-effective and high-performance options.

- Effective RAG Implementations: A member shared a repository containing over 10 RAG implementations, including methods like Naive RAG and Hyde RAG, aiding others in customizing RAG for their datasets. Check the repository here.

- These implementations facilitate experimentation with RAG applications tailored to specific AI development needs.

- Embedding Model Token Size Limitations: Discussion highlighted that the HuggingFaceEmbedding class truncates input text longer than 512 tokens, posing challenges for embedding larger texts.

- Members advised selecting appropriate

embed_modelclasses to bypass these constraints effectively.

- Members advised selecting appropriate

LM Studio Discord

- Qwen LV 7B Vision Functionalities: A query was raised about whether the Qwen LV 7B model works with vision functionalities, opening discussions on integrating vision capabilities with various AI models.

- Community members are exploring the potential for combining vision with Qwen LV 7B, discussing possible use cases and technical requirements.

- FP8 Quantization Enhances Model Efficiency: FP8 quantization allows for a 2x reduction in model memory and a 1.6x throughput improvement with little impact on accuracy, as per VLLM docs.

- This optimization is particularly relevant for optimizing performance on machines with limited resources.

- HF Spaces Now Support Docker Containers: A member confirmed that any HF space can run as a docker container, offering flexibility for local deployments and testing.

- This enhancement facilitates easier integration and scalability for AI engineers working on HF spaces.

- Intel Arc Battlemage Cards Face AI Task Skepticism: A member expressed doubt about the new Arc Battlemage cards, suggesting they are not suitable for AI tasks.

- Another member argued that despite being cost-effective for building local inference servers, reliance on Intel for such applications remains questionable.

- LM Studio Performance Issues on Windows: Users reported slow performance and abnormal output when running LM Studio on Windows compared to Mac, noting issues with the 3.2 model.

- Solutions suggested include toggling the

Flash Attentionswitch and checking system specs for compatibility.

- Solutions suggested include toggling the

LLM Agents (Berkeley MOOC) Discord

- Sierra AI's Info Session & Talent Search: Sierra AI is hosting an exclusive info session for developers on December 3 at 9am PT, accessible via a YouTube livestream, where participants will explore Sierra’s Agent OS and Agent SDK capabilities.

- During the session, Sierra AI will discuss their search for talented developers and encourage interested individuals to RSVP to secure their spot for exciting career opportunities.

- AI Safety Final Lecture by Dawn Song: Professor Dawn Song will deliver the final lecture on Towards building safe and trustworthy AI Agents and a Path for Science- and Evidence-based AI Policy at 3:00 PM PST today, streaming live on YouTube.

- She will address the significant risks associated with LLM agents and propose a science-based AI policy to mitigate these threats effectively.

- LLM Agents Course Assignments & Mastery Tier: Participants can still register for the LLM Agents Learning Course by completing the signup form and access all materials on the course website.

- While lab assignments are not mandatory for all certifications, completing all three is required to achieve the Mastery tier, allowing late joiners to catch up as needed.

- Concerns Over GPT-4 PII Leaks: A member raised concerns that GPT-4 may leak personally identifiable information (PII), drawing parallels to the AOL search log release incident in 2006.

- They highlighted that despite AOL's claims of anonymization, the release contained twenty million search queries from over 650,000 users, with the data still accessible online.

- ReAct Paradigm's Implementation Impact: The effectiveness of the ReAct paradigm is highly dependent on implementation specifics such as prompt design and state management, with members noting that benchmarks should reflect these details.

- Comparisons were made to foundational models in traditional ML, sparking discussions on how varying implementations lead to significant differences in benchmark performance due to the fuzzy definitions within the AI field.

OpenInterpreter Discord

- Development Branch Rewritten for Enhanced Performance: The latest development branch has been completely rewritten, making it lighter, faster, and smarter, which has impressed users.

- Members are excited to test this active branch and have been encouraged to provide feedback on missing features from the old implementation.

- New

--serveOption Enables OpenAI-Compatible Server: The new--serveoption introduces an OpenAI-compatible REST server, with version 1.0 excluding the old LMC/web socket protocol.- This setup allows users to connect through any OpenAI-compatible client, enabling actions directly on the server's device.

- TypeError Encountered with Anthropic Integration: Users reported a TypeError when integrating the development branch with Anthropic, specifically an unexpected keyword argument ‘proxies’.

- Users were advised to share the full traceback for debugging and were provided with examples of correct installation commands.

- Community Testing Requested to Enhance Development Branch: Members have requested community participation in testing to improve the development branch's functionality, which receives frequent updates.

- One member expressed reliance on the LMC for communication and finds transitioning to the new setup both terrifying and exciting.

- LiveKit Enables Remote Device Connectivity: O1 utilizes LiveKit to connect devices like iPhones and laptops or a Raspberry Pi running the server.

- This setup facilitates remote access to control the machine via the local OpenInterpreter (OI) instance running on it.

DSPy Discord

- Pydantic AI Integrates with DSLModel: The introduction of Pydantic AI enhances integration with DSLModel, creating a seamless framework for developers.

- This integration leverages Pydantic, widely used across various Agent Frameworks and LLM libraries in Python.

- DSPy Optimization Challenges on AWS Lambda: A member is contemplating running DSPy optimizations on AWS Lambda for LangWatch customers, but the 15-minute limit poses challenges.

- They expressed a need for strategies to work around this time constraint.

- ECS/Fargate Recommended over Lambda: Another member shared their experience, suggesting that running DSPy on Lambda may not be feasible due to storage constraints.

- They recommended exploring ECS/Fargate as a potentially more reliable solution.

- Program Of Thought Deprecation Concerns: A member inquired whether Program Of Thought is on the path to deprecation/no active support post v2.5.

- This suggests ongoing concerns regarding the future of this program within the community.

- Agentic and RAG Examples in DSPy: A member inquired about agentic examples in DSPy where the output from one signature is utilized as input for another, specifically for an email composing program.

- Another member suggested looking at the RAG example but later clarified the location of relevant examples may be on the dspy.ai website.

Torchtune Discord

- Image Generation Feature in Torchtune: A user expressed excitement about adding an image generation feature to Torchtune, referencing Pull Request #2098.

- The pull request aims to incorporate new functionalities that enhance the platform's capabilities.

- T5 Integration in Torchtune: Discussions suggest that T5 might be integrated into Torchtune, based on insights from Pull Request #2069.

- This integration is expected to align T5 features with the upcoming image generation enhancements.

- Fine-tuning ImageGen Models in Torchtune: A member highlighted the potential for fine-tuning image generation models within Torchtune, describing it as an enjoyable project.

- This comment generated light-hearted responses, indicating varying levels of familiarity among members.

- CycleQD Recipe Sharing: A member shared a link to a CycleQD recipe, describing it as a fun project.

MLOps @Chipro Discord

- Members Excited for Upcoming Event: Members expressed their excitement and interest in attending an upcoming event, with one member noting a scheduled visit to India around that time.

- Ooh nice. Yes. I’ll be there. Hope to meet! was shared, highlighting the enthusiasm among participants.

- Clarification on Event Registration Process: A user inquired about the registration process for attending the event, prompting a discussion on effective navigation of the registration system.

- Participants shared strategies to streamline the attendee onboarding experience, ensuring a smooth registration flow.

Axolotl AI Discord

- ADOPT Optimizer Accelerates Axolotl: The team has integrated the latest ADOPT optimizer updates into the Axolotl codebase, encouraging engineers to experiment with the enhancements.

- A member inquired about the advantages of using the ADOPT optimizer within Axolotl, prompting discussions on performance improvements.

- ADOPT Optimizer's Beta Boost: The ADOPT optimizer now supports optimal convergence with any beta value, enhancing performance across diverse scenarios.

- Members explored this capability during discussions, highlighting its potential to optimize performance in various deployment scenarios.

tinygrad (George Hotz) Discord

- PR#7987 Triumphs with Stable Benchmarks: jewnex noted that PR#7987 is worth tweeting after running benchmarks, showing no GPU hang with beam this time 🚀.

- Tweaking Thread Groups in uopgraph.py: A member in learn-tinygrad asked if thread group/grid sizes can be altered during graph rewrite optimizations in

uopgraph.py.- The discussion focused on whether sizes are fixed based on earlier searches in pm_lowerer or can be adjusted post-optimization.

LAION Discord

- Bio-ML Revolution in 2024: The year 2024 marks a surge in machine learning for biology (bio-ML), with notable achievements like Nobel prizes awarded for structural biology prediction and significant investments in protein sequence models.

- Excitement buzzes around the field, although concerns loom about compute-optimal protein sequencing modeling curves that need to be addressed. Through a Glass Darkly | Markov Bio discusses the path toward end-to-end biology and the role of human understanding.

- Introducing Gene Diffusion for Single-Cell Biology: A new model called Gene Diffusion is described, utilizing a continuous diffusion transformer trained on single-cell gene count data to explore cell functional states.

- It employs a self-supervised learning method, predicting clean, un-noised embeddings from gene token vectors, akin to techniques used in text-to-image models.

- Seeking Clarity on Training Regime of Gene Diffusion: Curiosity arises regarding the training regime of the Gene Diffusion model, specifically its input/output relationship and what it aims to predict.

- Members express a desire for clarification on the intricacies of the model, highlighting the need for community assistance in understanding these complex concepts.

Mozilla AI Discord

- December Schedule Events: Three new member events have been added to the December schedule to increase community engagement.

- These events aim to showcase members' projects and boost community involvement.

- Next Gen Llamafile Hackathon Presentations: Students will present their projects using Llamafile for personalized AI tomorrow, emphasizing social good.

- Community members are encouraged to support the students' innovative initiatives.

- Introduction to Web Applets: <@823757327756427295> will be Introducing Web Applets, explaining an open standard & SDK for advanced client-side applications.

- Participants can customize their roles within the community to receive updates.

- Theia IDE Hands-On Demo: <@1131955800601002095> will demonstrate Theia IDE, an open AI-driven development environment.

- The demo will illustrate how Theia enhances development practices.

- Llamafile Release & Security Bounties: A new release for Llamafile was announced with several software improvements.

- <@&1245781246550999141> awarded 42 bounties in the first month to identify vulnerabilities in generative AI.

The HuggingFace Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!