[AINews] OLMo 2 - new SOTA Fully Open LLM

This is AI News! an MVP of a service that goes thru all AI discords/Twitters/reddits and summarizes what people are talking about, so that you can keep up without the fatigue. Signing up here opts you in to the real thing when we launch it 🔜

Reinforcement Learning with Verifiable Rewards is all you need.

AI News for 11/26/2024-11/27/2024. We checked 7 subreddits, 433 Twitters and 29 Discords (197 channels, and 2528 messages) for you. Estimated reading time saved (at 200wpm): 318 minutes. You can now tag @smol_ai for AINews discussions!

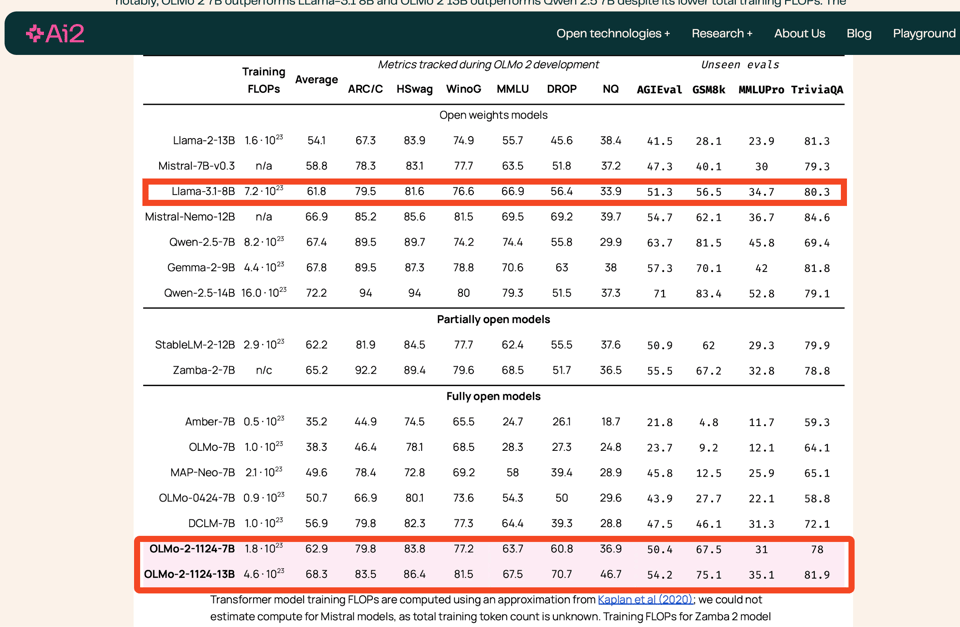

AI2 is notable for having fully open models - not just open weights, but open data, code, and everything else. We last covered OLMo 1 in Feb and OpenELM in April. Now it would see that AI2 have updated OLMo-2 to roughly Llama 3.1 8B equivalent.

They have trained with 5T tokens, particularly using learning rate annealing and introducing new, high-quality data (Dolmino) at the end of pretraining. A full technical report is pending soon so we don't know much else, but the post-training gives credit to Tülu 3, using "Reinforcement Learning with Verifiable Rewards" (paper here, tweet here)) which they just announced last week (with open datasets of course.

The Table of Contents and Channel Summaries have been moved to the web version of this email: !

AI Twitter Recap

all recaps done by Claude 3.5 Sonnet, best of 4 runs.

TO BE COMPLETED

AI Reddit Recap

/r/LocalLlama Recap

Theme 1. AutoRound 4-bit Quantization: Lossless Performance with Qwen2.5-72B

- Lossless 4-bit quantization for large models, are we there? (Score: 118, Comments: 66): Experiments with 4-bit quantization using AutoRound on Qwen2.5-72B instruct model demonstrated performance parity with the original model, even without optimizing quantization hyperparameters. The quantized models are available on HuggingFace in both 4-bit and 2-bit versions.

- MMLU benchmark testing methodology was discussed, with the original poster confirming 0-shot settings and referencing Intel's similar findings. Critics noted that MMLU might be too "easy" for large models and suggested trying MMLU Pro.

- Qwen2.5 models show unique quantization resilience compared to other models like Llama3.1 or Gemma2, with users speculating it was trained with quantization-aware techniques. This is particularly evident in Qwen Coder performance results.

- Discussion focused on the terminology of "lossless," with users explaining that quantization is inherently lossy (like 128kbps AAC compression), though performance impact varies by task - minimal for simple queries but potentially significant for complex tasks like code refactoring.

Theme 2. SmolVLM: 2B Parameter Vision Model Running on Consumer Hardware

- Introducing Hugging Face's SmolVLM! (Score: 115, Comments: 12): HuggingFace released SmolVLM, a 2B parameter vision language model that generates tokens 7.5-16x faster than Qwen2-VL and achieves 17 tokens/sec on a Macbook. The model can be fine-tuned on Google Colab, processes millions of documents on consumer GPUs, and outperforms larger models in video benchmarks despite no video training, with resources available at HuggingFace's blog and model page.

- SmolVLM requires a minimum of 5.02GB GPU RAM, but users can adjust image resolution using

size={"longest_edge": N*384}parameter and utilize 4/8-bit quantization with bitsandbytes, torchao, or Quanto to reduce memory requirements. - The model demonstrates strong OCR capabilities when focused on specific paragraphs but struggles with full screen text recognition, likely due to default resolution limitations of 1536×1536 pixels (N=4) which can be increased to 1920×1920 (N=5) for better document processing.

- Users compare SmolVLM favorably to mini-cpm-V-2.6, noting its accurate image captioning abilities and potential for broader applications.

- SmolVLM requires a minimum of 5.02GB GPU RAM, but users can adjust image resolution using

Theme 3. MLX LM 0.20.1 Matches llama.cpp Flash Attention Speed

- MLX LM 0.20.1 finally has the comparable speed as llama.cpp with flash attention! (Score: 84, Comments: 22): MLX LM 0.20.1 demonstrates significant performance improvements, increasing generation speed from 22.569 to 33.269 tokens-per-second for 4-bit models, reaching comparable speeds to llama.cpp with flash attention. The update shows similar improvements for 8-bit models, with generation speeds increasing from 18.505 to 25.236 tokens-per-second, while maintaining prompt processing speeds around 425-433 tokens-per-second.

- Users discuss quantization differences between MLX and GGUF formats, noting potential quality disparities in the Qwen 2.5 32B model and high RAM usage (70+ GB) for 8-bit MLX versions compared to Q8_0 GGUF.

- llama.cpp released their speculative decoding server implementation, which may outperform MLX when sufficient RAM is available. A discussion thread provides more details.

- Performance optimization tips include increasing GPU memory limits on Apple Silicon using the command

sudo sysctl iogpu.wired_limit_mb=40960to allow up to 40GB of GPU memory usage.

Theme 4. MoDEM: Routing Between Domain-Specialized Models Outperforms Generalists

- MoDEM: Mixture of Domain Expert Models (Score: 76, Comments: 47): MoDEM research demonstrates that routing between domain-specific fine-tuned models outperforms general-purpose models, showing success through a system that directs queries to specialized models based on their expertise domains. The paper proposes an alternative to large general models by using fine-tuned smaller models combined with a lightweight router, making it particularly relevant for open-source AI development with limited compute resources, with findings available at arXiv.

- Industry professionals indicate this architecture is already common in production, particularly in data mesh systems, with some implementations running thousands of ML models in areas like logistics digital twins. The approach includes additional components like deciders, ranking systems, and QA checks.

- WilmerAI demonstrates a practical implementation using multiple base models: Llama3.1 70b for conversation, Qwen2.5b 72b for coding/reasoning/math, and Command-R with offline wikipedia API for factual responses.

- Technical limitations were discussed, including VRAM constraints when loading multiple expert models and the challenges of model merging. Users suggested using LoRAs with a shared base model as a potential solution, referencing Apple's Intelligence system as an example.

Other AI Subreddit Recap

r/machinelearning, r/openai, r/stablediffusion, r/ArtificialInteligence, /r/LLMDevs, /r/Singularity

Theme 1. Anthropic Launches Model Context Protocol for Claude

- Introducing the Model Context Protocol (Score: 106, Comments: 37): Model Context Protocol (MCP) appears to be a protocol for file and data access, but no additional context or details were provided in the post body to create a meaningful summary.

- MCP enables Claude Desktop to interact with local filesystems, SQL servers, and GitHub through APIs, facilitating mini-agent/tool usage. Implementation requires a quickstart guide and running

pip install uvto set up the MCP server. - Users report mixed success with the file server functionality, particularly noting issues on Windows systems. Several users experienced connection problems despite logs showing successful server connections.

- The protocol works with regular Claude Pro accounts through the desktop app, requiring no additional API access. Users express interest in using it for code testing, bug fixing, and project directory access.

- MCP enables Claude Desktop to interact with local filesystems, SQL servers, and GitHub through APIs, facilitating mini-agent/tool usage. Implementation requires a quickstart guide and running

- Model Context Protocol (MCP) Quickstart (Score: 64, Comments: 2): Model Context Protocol (MCP) post appears to have no content or documentation in the body. No technical details or quickstart information was provided to summarize.

- With MCP, Claude can now work directly with local files—create, read, and edit seamlessly. (Score: 23, Comments: 11): Claude gains direct local file manipulation capabilities through MCP, enabling file creation, reading, and editing functionalities. No additional context or details were provided in the post body.

- Users expressed excitement about Claude's new file manipulation capabilities through MCP, though minimal substantive discussion was provided.

- A Mac-compatible version of the functionality was shared via a Twitter link.

Theme 2. Major ChatGPT & Claude Service Disruptions

- Is gpt down? (Score: 38, Comments: 30): ChatGPT users reported service disruptions affecting both the web interface and mobile app. The outage prevented users from accessing the platform through any means.

- Multiple users across different regions including Mexico confirmed the ChatGPT outage, with users receiving error messages mid-conversation.

- Users were unable to get responses from ChatGPT, with one user sharing a screenshot of the error message they received during their conversation.

- The widespread nature of reports suggests this was a global service disruption rather than a localized issue.

- NOOOO!! 😿 (Score: 135, Comments: 45): Claude's parent company Anthropic is limiting access to their Sonnet 3.5 model due to capacity constraints. The post author expresses disappointment and wishes for financial means to maintain access to the model.

- Multiple users report that Pro tier access to Sonnet 3.5 is unreliable with random caps and access denials, leading some to switch back to ChatGPT. A thread discussing Opus limits was shared to document these issues.

- The API pay-as-you-go system emerges as a more reliable alternative, with users reporting costs of $0.01-0.02 per prompt and $10 lasting over a month. Users can implement this through tools like LibreChat for a better interface.

- Access to Sonnet appears to be account-dependent for free users, with inconsistent availability across accounts. Some users suggest there may be an undisclosed triage metric determining access patterns.

Theme 3. MIT PhD's Open-Source LLM Training Series

- [D] Graduated from MIT with a PhD in ML | Teaching you how to build an entire LLM from scratch (Score: 301, Comments: 72): An MIT PhD graduate created a 15-part video series teaching how to build Large Language Models from scratch without libraries, covering topics from basic concepts through implementation details including tokenization, embeddings, and attention mechanisms. The series provides both theoretical whiteboard explanations and practical Python code implementations, progressing from fundamentals in Lecture 1 through advanced concepts like self-attention with key, query, and value matrices in Lecture 15.

- Multiple users questioned the creator's credibility, noting their PhD was in Computational Science and Engineering rather than ML, and pointing to a lack of LLM research publications. Several recommended Andrej Karpathy's lectures as a more established alternative via his YouTube channel.

- Discussion revealed concerns about academic misrepresentation, with users pointing out that the creator's NeurIPS paper was actually a workshop paper rather than a main conference paper, and questioning recent posts about basic concepts like the Adam optimizer.

- Users debated the value of an MIT affiliation in the LLM field specifically, with some noting that institutional prestige doesn't necessarily correlate with expertise in every subfield. The conversation highlighted how academic credentials can be misused for marketing purposes.

Theme 4. Qwen2VL-Flux: New Open-Source Image Model

- Open Sourcing Qwen2VL-Flux: Replacing Flux's Text Encoder with Qwen2VL-7B (Score: 96, Comments: 34): Qwen2vl-Flux, a new open-source image generation model, replaces Stable Diffusion's t5 text encoder with Qwen2VL-7B to enable multimodal generation capabilities including direct image variation without text prompts, vision-language fusion, and GridDot control panel for precise style modifications. The model, available on Hugging Face and GitHub, integrates ControlNet for structural guidance and offers features like intelligent style transfer, text-guided generation, and grid-based attention control.

- VRAM requirements of 48GB+ were highlighted as a significant limitation for many users, making the model inaccessible for consumer-grade hardware.

- Users inquired about ComfyUI compatibility and the ability to use custom fine-tuned Flux or LoRA models, indicating strong interest in integration with existing workflows.

- Community response showed enthusiasm mixed with overwhelm at the pace of new model releases, particularly referencing Flux Redux and the challenge of keeping up with SOTA developments.

AI Discord Recap

A summary of Summaries of Summaries by O1-preview

Theme 1: AI Model Updates and Releases

- Cursor Packs Bugs with New Features in 0.43 Update: Cursor IDE's latest update introduces a revamped Composer UI and early agent functionality, but users report missing features like 'Add to chat' and encounter bugs that hamper productivity.

- Allen AI Crowns OLMo 2 as Open-Model Champion: Unveiling OLMo 2, Allen AI touts it as the best fully open language model yet, boasting 7B and 13B variants trained on up to 5 trillion tokens.

- Stable Diffusion 3.5 Gets ControlNets, Artists Rejoice: Stability.ai enhances Stable Diffusion 3.5 Large with new ControlNets—Blur, Canny, and Depth—available for download on HuggingFace and supported in ComfyUI.

Theme 2: Technical Issues and Performance Enhancements

- Unsloth Squashes Qwen2.5 Tokenizer Bugs, Developers Cheer: Unsloth fixes multiple issues in the Qwen2.5 models, including tokenizer problems, enhancing compatibility and performance as explained in Daniel Han's video.

- PyTorch Boosts Training Speed with FP8 and FSDP2, GPUs Sigh in Relief: PyTorch's FP8 training update reveals a 50% throughput speedup using FSDP2, DTensor, and

torch.compile, enabling efficient training of models up to 405B parameters.

- AMD GPUs Lag Behind, ROCm Leaves Users Fuming: Despite multi-GPU support in LM Studio, users report that AMD GPUs underperform due to ROCm's performance limitations, making AI tasks sluggish and frustrating.

Theme 3: Community Concerns and Feedback

- Cursor Users Demand Better Communication, Support Channels Wanted: Frustrated with bugs and missing features, Cursor IDE users call for improved communication about updates and issues, suggesting a dedicated support channel to address concerns.

- Stability.ai Support Goes Silent, Users Left in the Dark: Users express frustration over unanswered emails and lack of communication from Stability.ai regarding support and invoicing issues, casting doubt on the company's engagement.

- Cohere's API Limits Stall Student Projects, Support Sought: A student developing a Portuguese text classifier hits Cohere's API key limit with no upgrade option, prompting community advice to contact support or explore open-source alternatives.

Theme 4: Advancements in AI Applications

- AI Hits the Right Notes: MusicGen Continues the Tune: Members discuss AI models capable of continuing music compositions, sharing tools like MusicGen-Continuation on Hugging Face to enhance creative workflows.

- NotebookLM Turns Text into Podcasts, Content Creators Celebrate: Users harness NotebookLM to generate AI-driven podcasts from source materials, exemplified by The Business Opportunity of AI, expanding content reach and engagement.

- Companion Gets Emotional, Talks with Feeling: The latest Companion update introduces an emotional scoring system that adapts responses based on conversation tones, enhancing realism and personalization.

Theme 5: Ethical Discussions and AI Safety

- Sora API Leak Raises Concerns Over Artist Compensation: The reported leak of the Sora API on Hugging Face prompts discussions about fair compensation for artists involved in testing, with community members calling for open-source alternatives.

- Anthropic's MCP Debated, Solution Seeking a Problem?: The introduction of the Model Context Protocol (MCP) by Anthropic sparks debate over its necessity, with some questioning whether it's overcomplicating existing solutions.

- Stability.ai Reaffirms Commitment to Safe AI, Users Skeptical: Amid new releases, Stability.ai emphasizes responsible AI practices and safety measures, but some users question the effectiveness and express concerns over potential misuse.

PART 1: High level Discord summaries

Cursor IDE Discord

-

Cursor Composer's 0.43: Feature Frenzy: The recent Cursor update (0.43) introduces a new Composer UI and early agent functionality, though users have reported bugs like missing 'Add to chat'.

- Users face issues with indexing and needing to click 'Accept' multiple times for changes in the composer.

-

Agent Adventures: Cursor's New Feature Debated: The new agent feature in Cursor aims to assist with code editing but has stability and utility issues as per user feedback.

- Some users find the agent helpful for task completion, while others are frustrated by its limitations and bugs.

-

IDE Showdown: Cursor Outperforms Windsurf: Users comparing Cursor and Windsurf IDE report that Cursor's latest version is more efficient and stable, whereas Windsurf faces numerous UI/UX bugs.

- Mixed feelings exist among users switching between the two IDEs, particularly regarding Windsurf's autocomplete capabilities.

-

AI Performance in Cursor Under Scrutiny: User consensus indicates Cursor has improved significantly in AI interactions, though issues like slow responses and lack of contextual awareness persist.

- Reflections on past AI model experiences show how recent Cursor updates impact workflows, with a clear demand for enhanced AI responsiveness.

-

Community Calls for Better Cursor Communication: Members request improved communication regarding Cursor updates and issues, suggesting a dedicated support channel as a solution.

- Despite frustrations, users acknowledge Cursor's development efforts and show strong community engagement around new features.

Eleuther Discord

-

Evaluating Quantization Effects on Models: A member is assessing the impact of quantization on KV Cache using lm_eval, focusing on perplexity metrics on Wikitext.

- They aim to utilize existing evaluation benchmarks to better understand how quantization affects overall model performance without extensive retraining.

-

UltraMem Architecture Enhances Transformer Efficiency: The UltraMem architecture has been proposed to improve inference speed in Transformers by implementing an ultra-sparse memory layer, significantly reducing memory costs and latency.

- Members debated the practical scalability of UltraMem, noting performance boosts while expressing concerns about architectural complexity.

-

Advancements in Gradient Estimation Techniques: A member suggested estimating the gradient of loss with respect to hidden states in ML models, aiming to enhance performance similarly to temporal difference learning.

- The discussion revolves around using amortized value functions and comparing their effectiveness to traditional backpropagation through time.

-

Comprehensive Optimizer Evaluation Suite Needed: There is a growing demand for a robust optimizer evaluation suite that assesses hyperparameter sensitivity across diverse ML benchmarks.

- Members referred to existing tools like Algoperf but highlighted their limitations in testing methodology and problem diversity.

-

Optimizing KV Cache for Model Deployments: Discussions highlighted the relevance of KV Cache for genuine model deployments, noting that many mainstream evaluation practices may not adequately measure its effects.

- One member suggested simulating deployment environments to better understand performance impacts instead of relying solely on standard benchmarks.

HuggingFace Discord

-

Language Models for Code Generation Limitations: Members discussed the limitations of language models in accurately referring to specific line numbers in code snippets, highlighting tokenization challenges. They proposed enhancing interactions by focusing on function names instead of line numbers for improved context understanding.

- A member suggested that targeting function names could mitigate tokenization issues, fostering more effective code generation capabilities within language models.

-

Quantum Consciousness Theories Influencing AI: A user proposed a connection between quantum processes and consciousness, suggesting that complex systems like AI could mimic these mechanisms. This sparked philosophical discussions, though some felt these ideas detracted from the technical conversation.

- Participants debated the relevance of quantum-based consciousness theories to AI development, with some questioning their practicality in current AI frameworks.

-

Neural Networks Integration with Hypergraphs: The conversation explored the potential of advanced neural networks utilizing hypergraphs to extend AI capabilities. However, there was skepticism about the practical application and relevance of these approaches to established machine learning practices.

- Debates centered on whether hypergraph-based neural networks could bridge existing gaps in AI performance, with concerns about implementation complexity.

-

AI Tools for Music Composition Continuation: Members inquired about AI models capable of continuing or extending music compositions, mentioning tools like Suno and Jukebox AI. A user provided a link to MusicGen Continuation on Hugging Face as a solution for generating music continuations.

- The discussion highlighted the growing interest in AI-driven music tools, emphasizing the potential of MusicGen-Continuation for seamless music creation workflows.

-

Challenges in Balancing Technical and Philosophical AI Discussions: A participant expressed frustration with being stuck in discussions that were unproductive or overly abstract regarding AI and consciousness. This led to a mutual recognition of the challenges faced when blending technical and philosophical aspects in AI discussions.

- Members acknowledged the difficulty in maintaining technical focus while engaging with philosophical debates, aiming to foster more productive conversations.

Unsloth AI (Daniel Han) Discord

-

Qwen2.5 Tokenizer Fixes: Unsloth has resolved multiple issues for the Qwen2.5 models, including tokenizer problems and other minor fixes. Details can be found in Daniel Han's YouTube video.

- This update ensures better compatibility and performance for developers using the Qwen2.5 series.

-

GPU Pricing Concerns: Discussions emerged around the pricing of the Asus ROG Strix 3090 GPU, with current market rates noted at $550. Members advised against purchasing used GPUs at inflated prices due to upcoming releases.

- Alternative options and timing for GPU purchases were considered to optimize cost-efficiency.

-

Inference Performance with Unsloth Models: Members discussed performance issues when using the unsloth/Qwen-2.5-7B-bnb-4bit model with vllm, questioning its optimization. Alternatives for inference engines better suited for bitwise optimizations were sought.

- Suggestions included exploring other inference proxies such as codelion/optillm and llama.cpp.

-

Model Loading Strategies: Users inquired about downloading model weights without using RAM, seeking clarity on file management with Hugging Face. Recommended methods include using Hugging Face's caching and storing weights on an NFS mount for better efficiency.

- These strategies aim to optimize memory usage during model loading and deployment.

-

P100 vs T4 Performance: P100 GPUs were discussed in comparison to T4s, with users noting that P100s are 4x slower than T4s based on their experiences. Discrepancies in past performance comparisons were attributed to outdated scripts.

- This highlights the importance of using updated benchmarking scripts for accurate performance assessments.

aider (Paul Gauthier) Discord

-

Aider v0.65.0 Launches with Enhanced Features: The Aider v0.65.0 update introduces a new

--aliasconfiguration for custom model aliases and supports Dart language through RepoMap.- Ollama models now default to an 8k context window, improving interaction capabilities as part of the release's error handling and file management enhancements.

-

Hyperbolic Model Context Size Impact: In discussions, members highlighted that using 128K of context with Hyperbolic significantly affects results, while an 8K output remains adequate for benchmarking purposes.

- Participants acknowledged the crucial role of context sizes in practical applications, emphasizing optimal configurations for performance.

-

Introduction of Model Context Protocol: Anthropic has released the Model Context Protocol, aiming to improve integrations between AI assistants and various data systems by addressing fragmentation.

- This standard seeks to unify connections across content repositories, business tools, and development environments, facilitating smoother interactions.

-

Integration of Aider with Git: The new MCP server for Git enables Aider to map tools directly to git commands, enhancing version control workflows.

- Members debated deeper Git integration within Aider, suggesting that MCP support could standardize additional capabilities without relying on external server access.

-

Cost Structure of Aider's Voice Function: Aider's voice function operates exclusively with OpenAI keys, incurring a cost of approximately $0.006 per minute, rounded to the nearest second.

- This pricing model allows users to estimate usage expenses accurately, ensuring cost-effectiveness for voice-based interactions.

Modular (Mojo 🔥) Discord

-

Fixes Proposed for Segmentation Faults in Mojo: Members discussed potential fixes in the nightly builds for segmentation faults in the def function environment, highlighting that the def syntax remains unstable. Transitioning to fn syntax was suggested for improved stability.

- One member proposed that switching to fn might offer more stability in the presence of persistent segmentation faults.

-

Mojo QA Bot's Memory Usage Drops Dramatically: A member reported that porting their QA bot from Python to Mojo reduced memory usage from 16GB to 300MB, showcasing enhanced performance. This improvement allows for more efficient operations.

- Despite encountering segmentation faults during the porting process, the overall responsiveness of the bot improved, enabling quicker research iterations.

-

Thread Safety Concerns in Mojo Collections: Discussions highlighted the lack of interior mutability in collections and that List operations aren't thread-safe unless explicitly stated. Mojo Team Answers provide further details.

- The community noted that existing mutable aliases lead to safety violations and emphasized the need for developing more concurrent data structures.

-

Challenges with Function Parameter Mutability in Mojo: The community explored issues with ref parameters, particularly why the min function faces type errors when returning references with incompatible origins. Relevant GitHub link.

- Suggestions included using Pointer and UnsafePointer to address mutability concerns, indicating that handling of ref types might need refinement.

-

Destructor Behavior Issues in Mojo: Members inquired about writing destructors in Mojo, with the

__del__method not being called for stack objects or causing errors with copyability. 2023 LLVM Dev Mtg covered related topics.- Discussions highlighted challenges in managing Pointer references and mutable accesses, proposing specific casting methods to ensure correct behavior.

OpenRouter (Alex Atallah) Discord

-

Companion Introduces Emotional Scoring System: The latest Companion update introduces an emotional scoring system that assesses conversation tones, starting neutral and adapting over time.

- This system ensures Companion maintains emotional connections across channels, enhancing interaction realism and user engagement.

-

OpenRouter API Key Error Troubleshooting: Users reported receiving 401 errors when using the OpenRouter API despite valid keys, with suggestions to check for inadvertent quotation marks.

- Ensuring correct API key formatting is crucial to avoid authentication issues, as highlighted by community troubleshooting discussions.

-

Performance Issues with Gemini Experimental Models: Gemini Experimental 1121 free model users encountered resource exhaustion errors (code 429) during chat operations.

- Community members recommended switching to production models to mitigate rate limit errors associated with experimental releases.

-

Access Requests for Integrations and Provider Keys: Members requested access to Integrations and custom provider keys, citing email edu.pontes@gmail.com for integration access.

- Delays in access approvals led to user frustrations, prompting calls for more transparent information regarding request statuses.

Perplexity AI Discord

-

Creating Discord Bots with Perplexity API: A member expressed interest in building a Discord bot using the Perplexity API, seeking assurance about legal concerns as a student.

- Another user encouraged the project, suggesting that utilizing the API for non-commercial purposes would mitigate legal risks.

-

Perplexity AI Lacks Dedicated Student Plan: Members discussed Perplexity AI's pricing structure, noting the absence of a student-specific plan despite the availability of a Black Friday offer.

- It was highlighted that competitors like You.com provide student plans, potentially offering a more affordable alternative.

-

Community Feedback on DeepSeek R1: Users shared their experiences with DeepSeek R1, praising its human-like interactions and utility in logical reasoning classes.

- The discussion emphasized finding a balance between verbosity and usefulness, especially for handling complex tasks.

-

Recent Breakthrough in Representation Theory: A YouTube link was posted regarding a breakthrough in representation theory within algebra, highlighting new research findings.

- This advancement holds significant implications for future studies in mathematical frameworks.

OpenAI Discord

-

AI's Impact on Employment: Discussions compared the impact of AI on jobs to historical shifts like the printing press, highlighting both job displacement and creation.

- Participants raised concerns about AI potentially replacing junior software engineering roles, questioning future job structures.

-

Human-AI Collaboration: Contributors advocated for treating AI as collaborators, recognizing mutual strengths and weaknesses to enhance human potential.

- The dialogue emphasized the necessity of ongoing collaboration between humans and AI to support diverse human experiences.

-

Advancements in Real-time API: The real-time API was highlighted for its low latency advantages in voice interactions, with references to the openai/openai-realtime-console.

- Participants speculated on the API's capability to interpret user nuances like accents and intonations, though specifics remain unclear.

-

AI Applications in Gaming: Skepticism was expressed about the influence of the gaming community on AI technology decisions, citing potential immaturity in some gaming products.

- Concerns were voiced regarding the risks gamers might introduce into AI setups, indicating a trust divide among tech enthusiasts.

-

Challenges with Research Papers: Crafting longer and more researched papers poses significant challenges, especially in writing-intensive courses that rely on peer reviews.

- It's difficult to work with longer, more researched papers due to their complexity, with suggestions to combine peer reviews for improving quality.

Notebook LM Discord Discord

-

Advancements in AI Podcasting with NotebookLM: Users have leveraged NotebookLM to generate AI-driven podcasts, highlighting its capability to transform source materials into engaging audio formats, as showcased in The Business Opportunity of AI: a NotebookLM Podcast.

- Challenges were noted in customizing podcast themes and specifying input sources, with users suggesting enhancements to improve NotebookLM's prompt-following capabilities for more tailored content.

-

Enhancing Customer Support Analysis through NotebookLM: NotebookLM is being utilized to analyze customer support emails by converting

.mboxfiles into.mdformat, which significantly enhances the customer experience.- Users proposed integrating direct Gmail support to streamline the process, making NotebookLM more accessible for organizational use.

-

Transforming Educational Content Marketing via Podcasting: A user repurposed educational content from a natural history museum into podcasts and subsequently created blog posts using ChatGPT to improve SEO and accessibility, resulting in increased content reach.

- This initiative was successfully launched by an intern within a short timeframe, demonstrating the efficiency of combining NotebookLM with other AI tools.

-

Addressing Language and Translation Challenges in NotebookLM: Several users reported that NotebookLM generates summaries in Italian instead of English, expressing frustrations with the language settings.

- Inquiries were made regarding the tool's ability to produce content in other languages and whether the voice generator supports Spanish.

-

Privacy and Data Usage Concerns with NotebookLM's Free Model: Discussions have arisen about NotebookLM's free model, with users questioning the long-term implications and potential usage of data for training purposes.

- Clarifications were provided emphasizing that sources are not used for training AI, alleviating some user concerns about data handling.

Stability.ai (Stable Diffusion) Discord

-

ControlNets Enhance Stable Diffusion 3.5: New capabilities have been added to Stable Diffusion 3.5 Large with the release of three ControlNets: Blur, Canny, and Depth. Users can download the model weights from HuggingFace and access code from GitHub, with support in Comfy UI.

- Check out the detailed announcement on the Stable.ai blog for more information on these new features.

-

Flexible Licensing Options for Stability.ai Models: The new models are available for both commercial and non-commercial use under the Stability AI Community License, allowing free use for non-commercial purposes and for businesses with under $1M in annual revenue. Organizations exceeding this revenue threshold can inquire about an Enterprise License.

- This model ensures users retain ownership of outputs, allowing them to use generated media without restrictive licensing implications.

-

Stability.ai's Commitment to Safe AI Practices: The team expressed a strong commitment to safe and responsible AI practices, emphasizing the importance of safety in their developments. They aim to follow deliberate and careful guidelines as they enhance their technology.

- The company highlighted their ongoing efforts to integrate safety measures into their AI models to prevent misuse.

-

User Support Communication Issues: Many users expressed frustration over lack of communication from Stability.ai regarding support, especially concerning invoicing issues.

- One user noted they sent multiple emails without a reply, leading to doubts about the company's engagement.

-

Utilizing Wildcards for Prompts: A discussion arose around the use of wildcards in prompt generation, with members sharing ideas on how to create varied background prompts.

- Examples included elaborate wildcard sets for Halloween backgrounds, showcasing community creativity and collaboration.

GPU MODE Discord

-

FP8 Training Boosts Performance with FSDP2: PyTorch's FP8 training blogpost highlights a 50% throughput speedup achieved by integrating FSDP2, DTensor, and torch.compile with float8, enabling training of Meta LLaMa models ranging from 1.8B to 405B parameters.

- The post also explores batch sizes and activation checkpointing schemes, reporting tokens/sec/GPU metrics that demonstrate performance gains for both float8 and bf16 training, while noting that larger matrix dimensions can impact multiplication speeds.

-

Resolving Multi-GPU Training Issues with LORA and FSDP: Members reported inference loading failures after fine-tuning large language models with multi-GPU setups using LORA and FSDP, whereas models trained on a single GPU loaded successfully.

- This discrepancy has led to questions about the underlying causes, prompting discussions on memory allocation practices and potential configuration mismatches in multi-GPU environments.

-

Triton's PTX Escape Hatch Demystified: The Triton documentation explains Triton's inline PTX escape hatch, which allows users to write elementwise operations in PTX that pass through MLIR during LLVM IR generation, effectively acting as a passthrough.

- This feature provides flexibility for customizing low-level operations while maintaining integration with Triton's high-level abstractions, as confirmed by the generation of inline PTX in the compilation process.

-

CUDA Optimization Strategies for ML Applications: Discussions in the CUDA channel focused on advanced CUDA optimizations for machine learning, including dynamic batching and kernel fusion techniques aimed at enhancing performance and efficiency in ML workloads.

- Members are seeking detailed methods for hand-deriving kernel fusions as opposed to relying on compiler automatic fusion, highlighting a preference for manual optimization to achieve tailored performance improvements.

LM Studio Discord

-

LM Studio Beta Builds Missing Key Features: Members raised concerns about the current beta builds of LM Studio, highlighting missing functionalities such as DRY and XTC that impact usability.

- One member mentioned that 'The project seems to be kind of dead,' seeking clarification on ongoing development efforts.

-

AMD Multi-GPU Support Hindered by ROCM Performance: AMD multi-GPU setups are confirmed to work with LM Studio, but efficiency issues persist due to ROCM's performance limitations.

- A member noted, 'ROCM support for AI is not that great,' emphasizing the challenges with recent driver updates.

-

LM Studio Runs 70b Model on 16GB RAM: Several members shared positive experiences with LM Studio running a 70b model on their 16GB RAM systems.

- 'I’m... kind of stunned at that,' highlighted the unexpected performance achievements.

-

LM Studio API Usage and Metal Support: A member inquired about sending prompts and context to LM Studio APIs, and asked for configuration examples with model usage.

- There was a question regarding Metal support on M series silicon, noted as being 'automatically enabled.'

- Dual 3090 GPU Configuration: Motherboards and Cooling: Discussions surfaced about acquiring a second 3090 GPU, noting the need for different motherboards due to space limitations.

- Members suggested solutions like risers or water cooling to address air circulation challenges when fitting two 3090s together. Additionally, they referenced GPU Benchmarks on LLM Inference for performance data.

Interconnects (Nathan Lambert) Discord

-

Tülu 3 8B's Shelf Life and Compression: Concerns emerged regarding Tülu 3 8B possessing a brief shelf life of just one week, as members discussed its model stability.

- A member highlighted noticeable compression in the model's performance, emphasizing its impact on reliability.

-

Olmo vs Llama Models Performance: Olmo base differs significantly from Llama models, particularly when scaling parameters to 13B.

- Members observed that Tülu outperforms Olmo 2 in specific prompt responses, indicating superior adaptability.

-

Impact of SFT Data Removal on Multilingual Capabilities: The removal of multilingual SFT data in Tülu models led to decreased performance, as confirmed by community testing results.

- Support for SFT experiments continues, with members praising efforts to maintain performance integrity despite data pruning.

-

Sora API Leak and OpenAI's Marketing Tactics: An alleged leak of the Sora API on Hugging Face triggered significant user traffic as enthusiasts explored its functionalities.

- Speculation arose that OpenAI might be orchestrating the leak to assess public reaction, reminiscent of previous marketing strategies.

-

OpenAI's Exploitation of the Artist Community: Critics accused OpenAI of exploiting artists for free testing and PR purposes under the guise of early access to Sora.

- Artists drafted an open letter demanding fair compensation and advocating for open-source alternatives to prevent being used as unpaid R&D.

Cohere Discord

-

API Key Caps Challenge High School Projects: A user reported hitting the Cohere API key limit while developing a text classifier for Portuguese, with no upgrade option available, prompting advice to contact support for assistance.

- This limitation affects educational initiatives, encouraging users to seek support or explore alternative solutions to continue their projects.

-

Embeddings Endpoint Grapples with Error 500: Error 500 issues have been frequently reported with the Embeddings Endpoint, signaling an internal server error that disrupts various API requests.

- Users have been advised to reach out via support@cohere.com for urgent assistance as the development team investigates the recurring problem.

-

Companion Enhances Emotional Responsiveness: Companion introduced an emotional scoring system that tailors interactions by adapting to user emotional tones based on in-app classifiers.

- Updates include tracking sentiments like love vs. hatred and justice vs. corruption, alongside enhanced security measures to protect personal information.

-

Command R+ Model Shows Language Drift: Users have encountered unintended Russian words in outputs from the Command R+ Model despite specifying Bulgarian in the preamble, indicating a language consistency issue.

- Attempts to mitigate this by adjusting temperature settings have been unsuccessful, suggesting deeper model-related challenges.

-

Open-Source Models Proposed as API Alternatives: Facing billing issues, members proposed using open-source models like Aya's 8b Q4 to run locally as a cost-effective alternative to Cohere APIs.

- This strategy offers a sustainable path for users unable to afford production keys, fostering community-driven solutions.

Nous Research AI Discord

-

Test Time Inference: A member inquired about ongoing test time inference projects within Nous, with others confirming interest and discussing potential initiatives.

- The conversation highlighted a lack of clear projects in this area, prompting interest in establishing dedicated efforts.

-

Real-Time Video Models: A user sought real-time video processing models for robotics, emphasizing the need for low-latency performance.

- CNNs and sparse mixtures of expert Transformers were discussed as potential solutions.

-

Genomic Bottleneck Algorithm: An article was shared about a new AI algorithm simulating the genomic bottleneck, enabling image recognition without traditional training.

- Members discussed its competitiveness with state-of-the-art models despite being untrained.

-

Coalescence Enhances LLM Inference: The Coalescence blog post details a method to convert character-based FSMs into token-based FSMs, boosting LLM inference speed by five times.

- This optimization leverages a dictionary index to map FSM states to token transitions, enhancing inference efficiency.

-

Token-based FSM Transitions: Utilizing the Outlines library, an example demonstrates transforming FSMs to token-based transitions for optimized inference sampling.

- The provided code initializes a new FSM and constructs a tokenizer index, facilitating more efficient next-token prediction during inference.

Latent Space Discord

-

MCP Mayhem: Anthropic's Protocol Sparks Debate: A member questioned the necessity of Anthropic's new Model Context Protocol (MCP), arguing it might not become a standard despite addressing a legitimate problem.

- Another member expressed skepticism, indicating the issue might be better solved through existing frameworks or cloud provider SDKs.

-

Sora Splinter: API Leak Sends Shockwaves: Sora API has reportedly been leaked, offering video generation from 360p to 1080p with an OpenAI watermark.

- Members expressed shock and excitement, discussing the implications of the leak and OpenAI's alleged response to it.

-

OLMo Overload: AI Release Outshines Competitors: Allen AI announced the release of OLMo 2, claiming it to be the best fully open language model to date with 7B and 13B variants trained on up to 5T tokens.

- The release includes data, code, and recipes, promoting OLMo 2's performance against other models like Llama 3.1.

-

PlayAI's $21M Power Play: PlayAI secured $21 Million in funding to develop user-friendly voice AI interfaces for developers and businesses.

- The company aims to enhance human-computer interaction, positioning voice as the most intuitive communication medium in the era of LLMs.

-

Custom Claude: Anthropic Tailors AI Replies: Anthropic introduced preset options for customizing how Claude responds, offering styles like Concise, Explanatory, and Formal.

- This update aims to provide users with more control over interactions with Claude, catering to different communication needs.

LlamaIndex Discord

-

LlamaParse Boosts Dataset Creation: @arcee_ai processed millions of NLP research papers using LlamaParse, creating a high-quality dataset for AI agents with efficient PDF-to-text conversion that preserves complex elements like tables and equations.

- The method includes a flexible prompt system to refine extraction tasks, demonstrating versatility and robustness in data processing.

-

Ragas Optimizes RAG Systems: Using Ragas, developers can evaluate and optimize key metrics such as context precision and recall to enhance RAG system performance before deployment.

- Tools like LlamaIndex and @literalai help analyze answer relevancy, ensuring effective implementation.

-

Fixing Errors in llama_deploy[rabbitmq]: A user reported issues with llama_deploy[rabbitmq] executing

deploy_corein versions above 0.2.0 due to TYPE_CHECKING being False.- Cheesyfishes recommended submitting a PR and opening an issue for further assistance.

-

Customizing OpenAIAgent's QueryEngine: A developer sought advice on passing custom objects like chat_id into CustomQueryEngine within the QueryEngineTool used by OpenAIAgent.

- They expressed concerns about the reliability of passing data through query_str, fearing modifications by the LLM.

-

AI Hosting Startup Launches: Swarmydaniels announced the launch of their startup that enables users to host AI agents with a crypto wallet without coding skills.

- Additional monetization features are planned, with a launch tweet coming soon.

tinygrad (George Hotz) Discord

-

Flash Attention Joins Tinygrad: A member inquired whether flash-attention could be integrated into Tinygrad, exploring potential performance optimizations.

- The conversation highlighted interest in enhancing Tinygrad's efficiency by incorporating advanced features.

-

Tinybox Pro Custom Motherboard Insights: A user questioned if the Tinybox Pro features a custom motherboard, indicating curiosity about the hardware design.

- This inquiry reflects the community's interest in the hardware infrastructure supporting Tinygrad.

-

GENOA2D24G-2L+ CPU and PCIe 5 Compatibility: A member identified the CPU as a GENOA2D24G-2L+ and discussed PCIe 5 cable compatibility in the Tinygrad setup.

- The discussion underscored the importance of specific hardware components in optimizing Tinygrad's performance.

-

Tinygrad CPU Intrinsics Support Enhancement: A member sought documentation on Tinygrad's CPU behavior, particularly support for CPU intrinsics like AVX and NEON.

- There was interest in implementing performance improvements through potential pull requests to enhance Tinygrad.

-

Radix Sort Optimization Techniques and AMD Paper: Discussions explored optimizing the Radix Sort algorithm using

scatterand referenced AMD's GPU Radix Sort paper for insights.- Community members debated methods to ensure correct data ordering while reducing dependency on

.item()andforloops.

- Community members debated methods to ensure correct data ordering while reducing dependency on

LLM Agents (Berkeley MOOC) Discord

-

Hackathon Workshop with Google AI: Join the Hackathon Workshop hosted by Google AI on November 26th at 3 PM PT. Participants can watch live and gain insights directly from Google AI specialists.

- The workshop features a live Q&A session, providing an opportunity to ask questions and receive guidance from Google AI experts.

-

Lecture 11: Measuring Agent Capabilities: Today's Lecture 11, titled 'Measuring Agent Capabilities and Anthropic’s RSP', will be presented by Benjamin Mann at 3:00 pm PST. Access the livestream here.

- Benjamin Mann will discuss evaluating agent capabilities, implementing safety measures, and the practical application of Anthropic’s Responsible Scaling Policy (RSP).

-

Anthropics API Keys Usage: Members discussed the usage of Anthropic API keys within the community. One member confirmed their experience with using Anthropic API keys.

- This confirmation highlights the active integration of Anthropic’s tools among AI engineering projects.

-

In-person Lecture Eligibility: Inquiry about attending lectures in person revealed that in-person access is restricted to enrolled Berkeley students due to lecture hall size constraints.

- This restriction ensures that only officially enrolled students at Berkeley can participate in in-person lectures.

-

GSM8K Inference Pricing and Self-Correction: A member analyzed GSM8K inference costs, estimating approximately $0.66 per run for the 1k test set using the formula [(100 * 2.5/1000000) + (200 * 10/1000000)] * 1000.

- The discussion also covered self-correction in models, recommending adjustments to output calculations based on the number of corrections.

OpenInterpreter Discord

-

OpenInterpreter 1.0 Release: The upcoming OpenInterpreter 1.0 is available on the development branch, with users installing it via

pip install --force-reinstall git+https://github.com/OpenInterpreter/open-interpreter.git@developmentusing the--tools gui --model gpt-4oflags.- OpenInterpreter 1.0 introduces significant updates, including enhanced tool integrations and performance optimizations, as highlighted by user feedback on the installation process.

-

Non-Claude OS Mode Introduction: Non-Claude OS mode is a new feature in OpenInterpreter 1.0, replacing the deprecated

--osflag to provide more versatile operating system interactions.- Users have emphasized the flexibility of Non-Claude OS mode, noting its impact on streamlining development workflows without relying on outdated flags.

-

Speech-to-Text Functionality: Speech-to-text capabilities have been integrated into OpenInterpreter, allowing users to convert spoken input into actionable commands seamlessly.

- This feature has sparked discussions on automation efficiency, with users exploring its potential to enhance interactive development environments.

-

Keyboard Input Simulation: Keyboard input simulation is now supported in OpenInterpreter, enabling the automation of keyboard actions through scripting.

- The community has shown interest in leveraging this feature for testing and workflow automation, highlighting its usefulness in repetitive task management.

-

OpenAIException Troubleshooting: An OpenAIException error was reported, preventing assistant messages due to missing tool responses linked to specific request IDs.

- This issue has raised concerns about tool integration reliability, prompting users to seek solutions for seamless interaction with coding tools.

Torchtune Discord

-

Torchtitan Launches Feature Poll: Torchtitan is conducting a poll to gather user preferences on new features like MoE, multimodal, and context parallelism.

- Participants are encouraged to have their voices heard to influence the direction of the PyTorch distributed team.

-

GitHub Discussions Open for Torchtitan Features: Users are invited to join the GitHub Discussions to talk about potential new features for Torchtitan.

- Engagement in these discussions is expected to help shape future updates and enhance the user experience.

-

DPO Recipe Faces Usage Challenges: Concerns were raised about the low adoption of the DPO recipe, questioning its effectiveness compared to PPO, which has gained more traction among the team.

- This disparity has led to discussions on improving the DPO approach to increase its utilization.

-

Mark's Heavy Contributions to DPO Highlighted: Despite the DPO recipe's low usage, Mark has heavily focused his contributions on DPO.

- This has sparked questions about the differing popularity levels between DPO and PPO within the group.

DSPy Discord

-

DSPy Learning Support: A member expressed a desire to learn more about DSPy and sought community assistance, presenting AI development ideas.

- Despite only a few days of DSPy experience, another member offered their assistance to support their learning.

-

Observers SDK Integration: A member inquired about integrating Observers, referencing the Hugging Face article on AI observability.

- The article outlines key features of this lightweight SDK, indicating community interest in enhancing AI monitoring capabilities.

Axolotl AI Discord

-

Accelerate PR Fix for Deepspeed: A pull request was made to resolve issues with schedule free AdamW when using Deepspeed in the Accelerate library.

- The community raised concerns regarding the implementation and functionality of the optimizer.

-

Hyberbolic Labs Offers H100 GPU for 99 Cents: Hyberbolic Labs announced a Black Friday deal offering H100 GPUs for just 99 cents rental.

- Despite the appealing offer, a member humorously added, good luck finding them.

The MLOps @Chipro Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The LAION Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Mozilla AI Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The Gorilla LLM (Berkeley Function Calling) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

The AI21 Labs (Jamba) Discord has no new messages. If this guild has been quiet for too long, let us know and we will remove it.

PART 2: Detailed by-Channel summaries and links

The full channel by channel breakdowns have been truncated for email.

If you want the full breakdown, please visit the web version of this email: !

If you enjoyed AInews, please share with a friend! Thanks in advance!